Loss Functions and Optimization

Loss Functions

Cross-Entropy Loss

Definition

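Suppose the number of data points is $N$ and the number of classes is $K$. Then the cross-entropy loss is defined as

$$L = -\frac{1}{N}\sum_{i=1}^{N}\sum_{k=1}^{K} y_{ik}\log \hat{y}_{ik}$$

where $y_{ik}$ is $1$ if the $i$-th data point belongs to class $k$ and $0$ otherwise, and $\hat{y}_{ik}$ is the predicted probability of class $k$ for the $i$-th data point.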


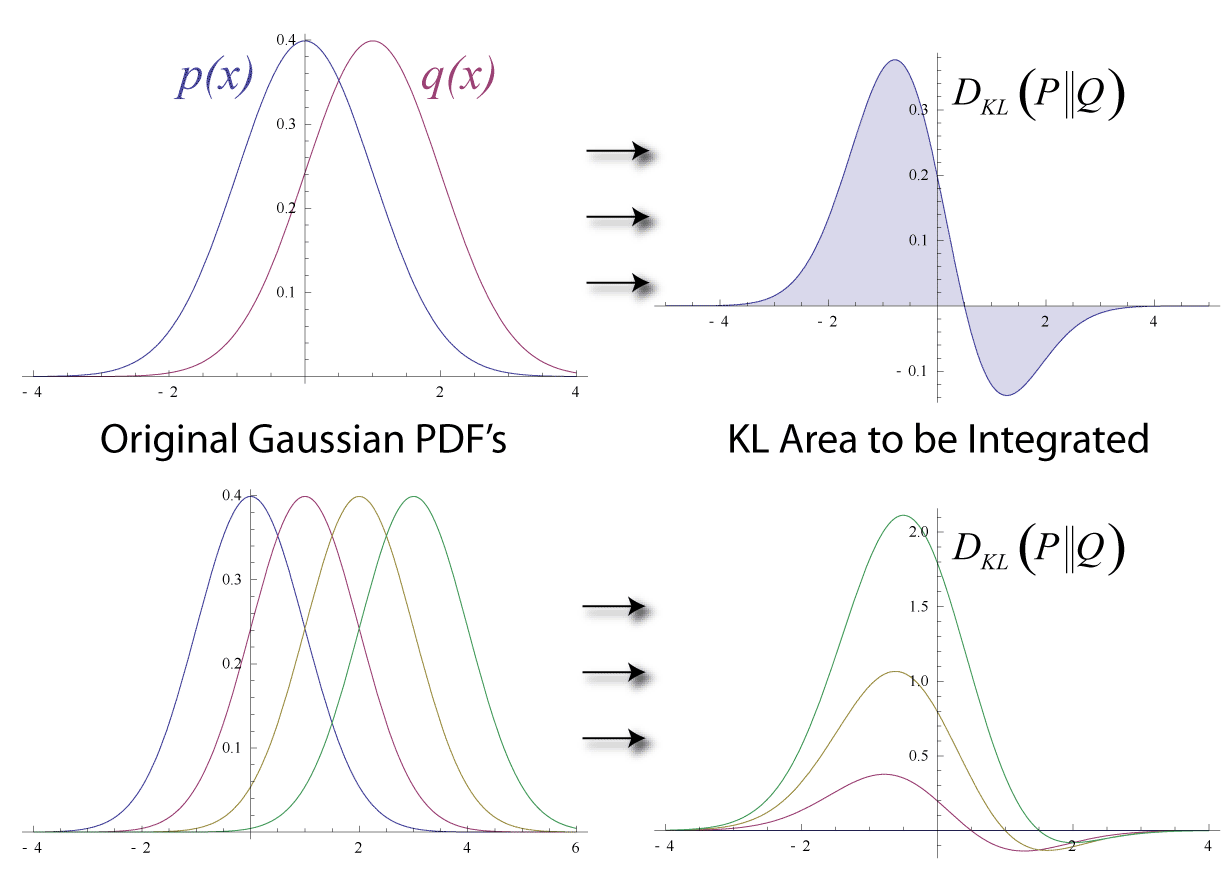

Kullback-Leibler Divergence

Definition

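Assume that two probability distributions $P$ and $Q$ are given. Then the Kullback-Leibler divergence of $P$ from $Q$ is defined as

$$D_{KL}(P\,\|\,Q) = \sum_{x} P(x)\log\frac{P(x)}{Q(x)}$$

Kullback-Leibler divergence measures how different two distributions are. It is non-negative and equals zero if and only if $P = Q$, but it is not symmetric, so it is not a distance metric.

It can also be expressed as the difference between the cross-entropy $H(P, Q)$ and the entropy $H(P)$ (the inherent uncertainty of $P$):

$$D_{KL}(P\,\|\,Q) = H(P, Q) - H(P) = -\sum_x P(x)\log Q(x) + \sum_x P(x)\log P(x)$$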
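A minimal sketch of these definitions for discrete distributions stored as Python lists (the function names are illustrative, not from a library). It checks numerically that the KL divergence equals cross-entropy minus entropy, shows that swapping the arguments changes the value, and computes the averaged cross-entropy loss for class-index labels.

```python
import math

def entropy(p):
    """H(P) = -sum_x P(x) log P(x); terms with P(x) = 0 contribute 0."""
    return -sum(px * math.log(px) for px in p if px > 0)

def cross_entropy(p, q):
    """H(P, Q) = -sum_x P(x) log Q(x)."""
    return -sum(px * math.log(qx) for px, qx in zip(p, q) if px > 0)

def kl_divergence(p, q):
    """D_KL(P || Q) = sum_x P(x) log(P(x) / Q(x))."""
    return sum(px * math.log(px / qx) for px, qx in zip(p, q) if px > 0)

def cross_entropy_loss(labels, probs):
    """Mean cross-entropy over N data points; labels are class indices, probs are rows of K probabilities."""
    return -sum(math.log(row[y]) for y, row in zip(labels, probs)) / len(labels)

p = [0.5, 0.3, 0.2]
q = [0.4, 0.4, 0.2]
print(kl_divergence(p, q), cross_entropy(p, q) - entropy(p))  # equal up to rounding
print(kl_divergence(q, p))  # a different value: KL is not symmetric
print(cross_entropy_loss([0, 2], [[0.7, 0.2, 0.1], [0.1, 0.1, 0.8]]))
```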

Facts

Let $(P_n)$ be a sequence of distributions and $P$ a distribution on a compact metric space. Then, as $n \to \infty$:

- The convergence of the KL-Divergence $D_{KL}(P_n\|P)$ (or $D_{KL}(P\|P_n)$) to zero implies that the JS-Divergence also converges to zero.

- The convergence of the JS-Divergence to zero is equivalent to the convergence of the Total Variation Distance to zero.

- The convergence of the Total Variation Distance to zero implies that the Wasserstein Distance also converges to zero.

- The convergence of the Wasserstein Distance to zero is equivalent to the Convergence in Distribution of the sequence.

Optimization

Gradient Descent

Definition

An iterative optimization algorithm for finding a local minimum of a differentiable function $f$:

$$\theta_{t+1} = \theta_t - \eta\,\nabla f(\theta_t)$$

where $\eta$ is the learning rate.

Examples

Solution of a linear system

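Solve $Ax = b$ with an MSE loss.

The cost function is $J(x) = \|Ax - b\|_2^2$ and its gradient is $\nabla J(x) = 2A^\top(Ax - b)$.

Then, the solution is obtained by iterating

$$x_{t+1} = x_t - 2\eta\,A^\top(Ax_t - b)$$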
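The iteration can be sketched in plain Python for a small 2×2 system (helper names are illustrative). The learning rate and step count are assumptions chosen so the iteration converges for this particular $A$; in general, $\eta$ must be small relative to the largest eigenvalue of $2A^\top A$.

```python
def matvec(A, x):
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

def transpose(A):
    return [list(col) for col in zip(*A)]

def solve_by_gradient_descent(A, b, lr=0.05, steps=2000):
    """Minimize J(x) = ||Ax - b||^2 using x <- x - lr * 2 A^T (Ax - b)."""
    x = [0.0] * len(A[0])
    At = transpose(A)
    for _ in range(steps):
        residual = [ax - bi for ax, bi in zip(matvec(A, x), b)]
        grad = [2.0 * g for g in matvec(At, residual)]
        x = [xi - lr * gi for xi, gi in zip(x, grad)]
    return x

A = [[3.0, 1.0], [1.0, 2.0]]
b = [9.0, 8.0]
print(solve_by_gradient_descent(A, b))  # approximately [2.0, 3.0]
```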


Cross Validation

Definition

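Partition a set of data into $K$ sets and denote by $\kappa:\{1,\dots,N\}\to\{1,\dots,K\}$ the function that assigns each observation to its set. For the dataset $\{(x_i, y_i)\}_{i=1}^{N}$, let $\hat f^{-k}$ be the estimator fitted on all observations except those in the $k$-th set. Then the cross-validation estimate of the prediction error is defined as

$$\mathrm{CV}(\hat f) = \frac{1}{N}\sum_{i=1}^{N} L\big(y_i, \hat f^{-\kappa(i)}(x_i)\big)$$

where $L$ is a loss function.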

Facts

If the data is partitioned into $K$ groups of equal size, it is called $K$-fold cross-validation.
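A minimal sketch of the cross-validation estimate, assuming a generic `fit(xs, ys)` that returns a predictor and a pointwise `loss`; both names and the mean-only model in the example are hypothetical. The list `kappa` assigns each observation to a fold, and setting the number of folds equal to $N$ gives leave-one-out cross-validation.

```python
import random

def cross_validation_error(xs, ys, k, fit, loss, seed=0):
    """CV estimate (1/N) sum_i L(y_i, f^{-kappa(i)}(x_i)) with k roughly equal folds."""
    n = len(xs)
    order = list(range(n))
    random.Random(seed).shuffle(order)
    kappa = [0] * n
    for position, i in enumerate(order):
        kappa[i] = position % k  # fold index of observation i
    total = 0.0
    for fold in range(k):
        train = [i for i in range(n) if kappa[i] != fold]
        model = fit([xs[i] for i in train], [ys[i] for i in train])
        total += sum(loss(ys[i], model(xs[i])) for i in range(n) if kappa[i] == fold)
    return total / n

def fit_mean(xs, ys):
    """Hypothetical toy estimator: always predicts the training mean."""
    m = sum(ys) / len(ys)
    return lambda x: m

def squared_error(y, y_hat):
    return (y - y_hat) ** 2

xs = [0, 1, 2, 3, 4]
ys = [1.0, 2.0, 3.0, 4.0, 5.0]
print(cross_validation_error(xs, ys, k=5, fit=fit_mean, loss=squared_error))  # 3.125 (leave-one-out)
```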

Neural Networks

Neural Networks and Backpropagation

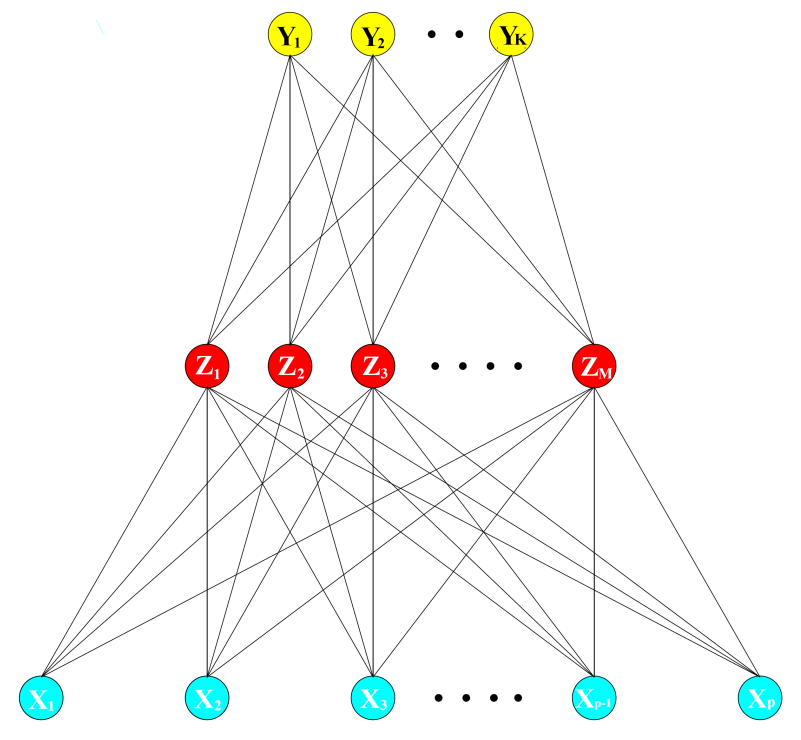

Neural Network

Definition

A neural network can be thought of as a non-linear generalization of the linear model.

The derived features $Z_m$ are constructed from linear combinations of the inputs passed through an Activation Function:

$$Z_m = \sigma(\alpha_{0m} + \alpha_m^\top X),\quad m = 1,\dots,M$$

where $\sigma$ is an Activation Function.

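The output nodes $T_k$ are linear combinations of the $Z_m$, and the output $f_k(X)$ is modeled as a function of these linear combinations:

$$T_k = \beta_{0k} + \beta_k^\top Z,\quad k = 1,\dots,K$$

$$f_k(X) = g_k(T)$$

where $g_k$ is called the output function.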

Facts

The output function varies by problem. For regression, the Identity Function $g_k(T) = T_k$ is used, and for $K$-class classification the Softmax Function $g_k(T) = \dfrac{e^{T_k}}{\sum_{l=1}^{K} e^{T_l}}$ is used as $g_k$.

For regression problems, the Sum of Squared Errors Loss is used as the Loss Function. For classification problems, the Cross-Entropy Loss is used.

With the softmax activation function and the Cross-Entropy Loss, the neural network model is exactly a linear Logistic Regression model in the hidden units.
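A minimal forward pass for the single-hidden-layer model $Z_m = \sigma(\alpha_{0m} + \alpha_m^\top X)$, $T_k = \beta_{0k} + \beta_k^\top Z$, $f_k(X) = g_k(T)$, written with plain lists. It assumes a sigmoid activation; the parameter names `alpha0`, `alpha`, `beta0`, `beta` mirror $\alpha_{0m}, \alpha_m, \beta_{0k}, \beta_k$, and the weights in the example are arbitrary illustrative values, not trained parameters.

```python
import math

def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))

def softmax(t):
    m = max(t)  # subtract the max for numerical stability
    e = [math.exp(ti - m) for ti in t]
    s = sum(e)
    return [ei / s for ei in e]

def forward(x, alpha0, alpha, beta0, beta, output="softmax"):
    # Derived features: Z_m = sigma(alpha_0m + alpha_m^T x)
    z = [sigmoid(a0 + sum(a * xi for a, xi in zip(am, x))) for a0, am in zip(alpha0, alpha)]
    # Output nodes: T_k = beta_0k + beta_k^T Z
    t = [b0 + sum(b * zm for b, zm in zip(bk, z)) for b0, bk in zip(beta0, beta)]
    # Output function g_k: identity for regression, softmax for K-class classification
    return t if output == "identity" else softmax(t)

x = [1.0, -2.0]                                                            # p = 2 inputs
alpha0, alpha = [0.1, -0.3, 0.0], [[0.5, 0.2], [-0.4, 0.1], [0.3, 0.3]]  # M = 3 hidden units
beta0, beta = [0.0, 0.2], [[1.0, -1.0, 0.5], [-0.5, 0.8, 0.1]]           # K = 2 outputs
probs = forward(x, alpha0, alpha, beta0, beta)
print(probs, sum(probs))
```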

The parameters of a neural network are estimated by Backpropagation.

A neural network is especially effective in problems with a high signal-to-noise ratio.

Backpropagation

Definition

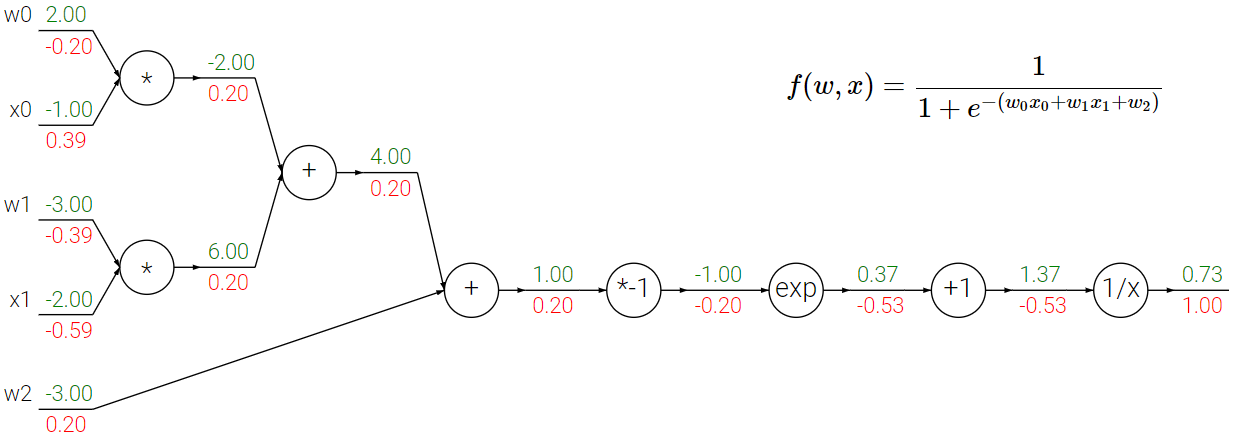

Backpropagation is a gradient estimation method used for training neural networks. The gradient of a loss function with respect to the weights is computed one layer at a time, iterating backward from the last layer to the input.

Without backpropagation, we would need to calculate the gradient with respect to the weights of each layer independently. With backpropagation, the gradients already computed for the later layers are reused, which avoids redundant calculations. Computationally, the gradient calculations within the same layer can also be parallelized.

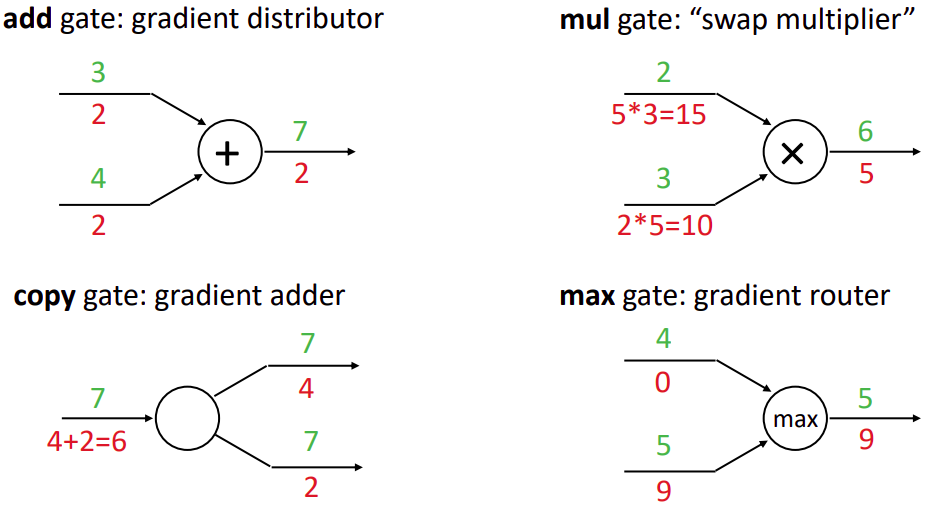

Patterns in Gradient Flow

Algorithm

- Make a prediction and calculate the loss using the data (feedforward step).

- Compute the gradients using the chain rule and the intermediate results obtained in the feedforward step (backpropagation), then update the weights.

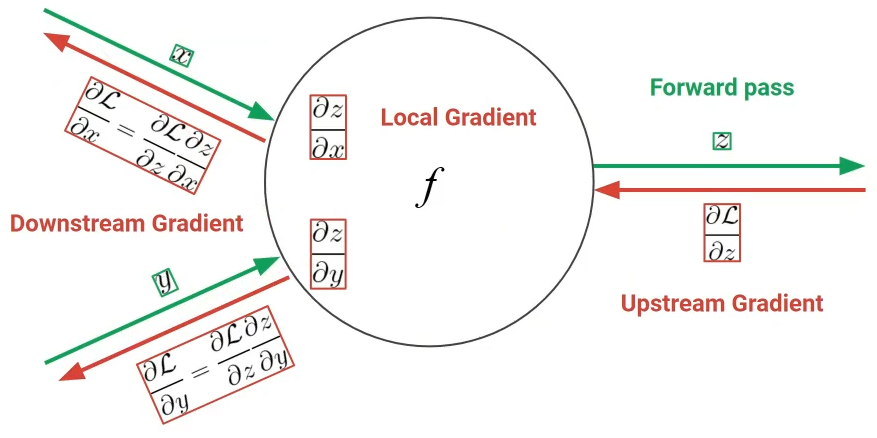

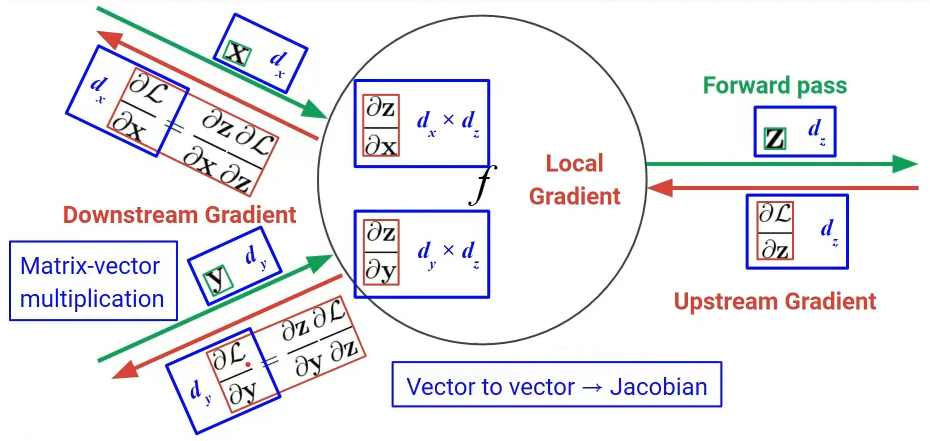

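The downstream gradient of a node is the product of the upstream gradient and the local gradient.

Scalar Case

For a node $z = f(x)$ and a loss $L$:

$$\frac{\partial L}{\partial x} = \frac{\partial L}{\partial z}\,\frac{\partial z}{\partial x}$$

Vector Case

For a node $\mathbf{z} = f(\mathbf{x})$ with $\mathbf{x}\in\mathbb{R}^n$ and $\mathbf{z}\in\mathbb{R}^m$:

$$\frac{\partial L}{\partial \mathbf{x}} = \left(\frac{\partial \mathbf{z}}{\partial \mathbf{x}}\right)^{\top}\frac{\partial L}{\partial \mathbf{z}}$$

where $\frac{\partial \mathbf{z}}{\partial \mathbf{x}}$ is the $m\times n$ Jacobian matrix.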
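A hand-worked sketch of the upstream-times-local rule on a tiny graph, $L = (wx + b - y)^2$, assumed here for illustration. Each backward line multiplies the upstream gradient by the node's local gradient, and a central finite difference is used to check the result.

```python
def forward_backward(w, x, b, y):
    # Feedforward step: keep the intermediate values
    u = w * x        # multiply node
    p = u + b        # add node
    r = p - y        # subtract node
    loss = r * r     # square node
    # Backpropagation: downstream gradient = upstream gradient * local gradient
    d_loss = 1.0
    d_r = d_loss * 2 * r   # local gradient of r^2 is 2r
    d_p = d_r * 1.0        # r = p - y, so dr/dp = 1
    d_u = d_p * 1.0        # the add node passes the gradient to both inputs
    d_b = d_p * 1.0
    d_w = d_u * x          # multiply node: du/dw = x
    d_x = d_u * w          # multiply node: du/dx = w
    return loss, {"w": d_w, "x": d_x, "b": d_b}

def numerical_grad(name, args, h=1e-6):
    plus, minus = dict(args), dict(args)
    plus[name] += h
    minus[name] -= h
    return (forward_backward(**plus)[0] - forward_backward(**minus)[0]) / (2 * h)

args = {"w": 2.0, "x": 3.0, "b": 1.0, "y": 4.0}
loss, grads = forward_backward(**args)
print(loss, grads)  # 9.0 {'w': 18.0, 'x': 12.0, 'b': 6.0}
for name in grads:
    print(name, grads[name], numerical_grad(name, args))
```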

Examples


Activation Function

Definition

The activation function of a node in an artificial neural network is a function that calculates the output of the node based on the linear combination of its inputs. It is used to add a non-linearity to the model.

Examples

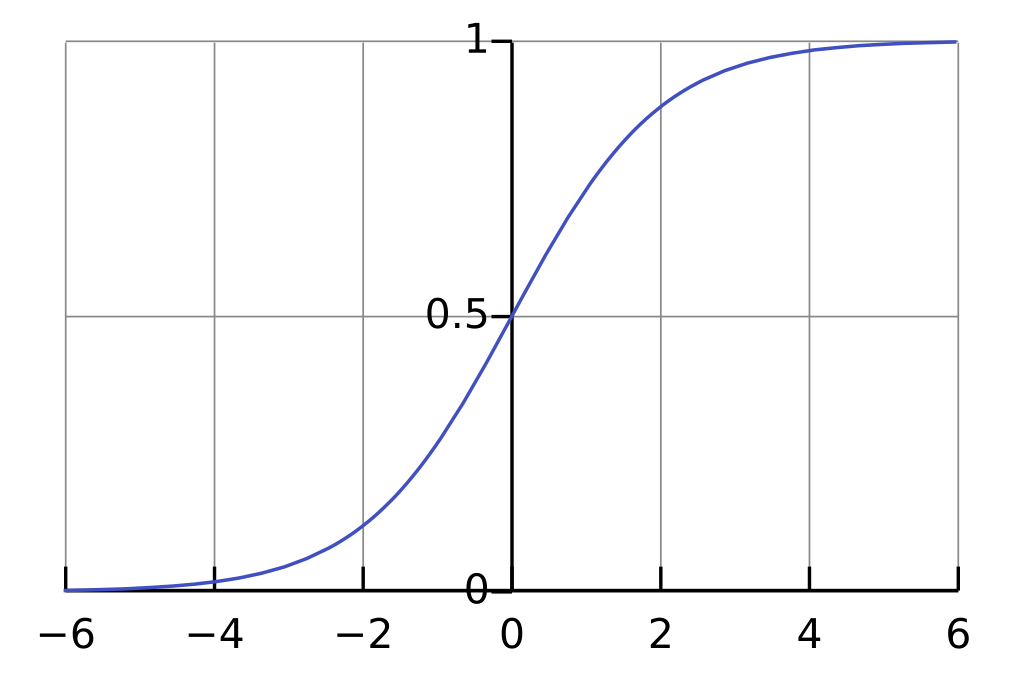

Logistic Function

Definition

The logistic function $\sigma(x) = \dfrac{1}{1 + e^{-x}}$ is the inverse function of the Logit.

Facts

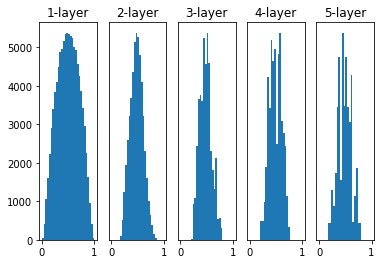

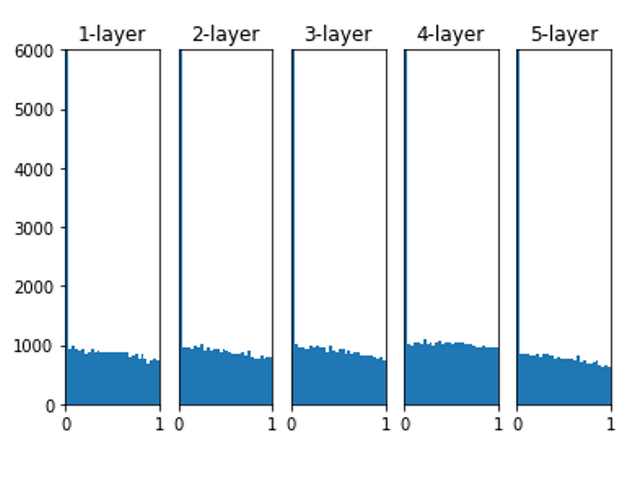

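The sigmoid activation function is vulnerable to the vanishing gradient problem. Its derivative is $\sigma'(x) = \sigma(x)\big(1 - \sigma(x)\big)$, whose range is $(0, 1/4]$. For this reason, each time the gradient passes through a node with a sigmoid Activation Function, it is multiplied by at most $1/4$ and decreases.

Also, since the outputs of the sigmoid Activation Function are always positive, the inputs to the next layer are all positive. In that case, the gradients with respect to a node's weights all share the same sign (all positive or all negative), which makes the weight updates zig-zag.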
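A small numerical check of the derivative bound (plain Python, illustrative only): the sigmoid derivative peaks at $1/4$ at $x = 0$, so a chain of sigmoid nodes multiplies the gradient by at most $(1/4)^n$ when the weights are ignored.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def sigmoid_grad(x):
    s = sigmoid(x)
    return s * (1.0 - s)

grid = [i / 100 for i in range(-1000, 1001)]
print(max(sigmoid_grad(x) for x in grid))  # 0.25, reached at x = 0

# Gradient factor after passing through 10 sigmoid nodes, each at its best-case point x = 0
print(sigmoid_grad(0.0) ** 10)             # about 9.5e-07
```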

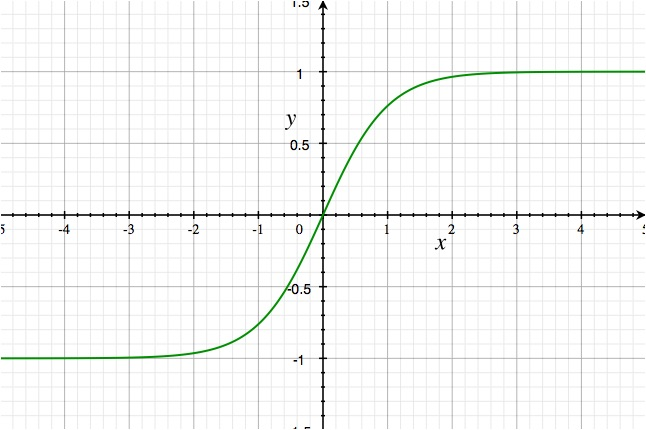

Hyperbolic Tangent Function

Definition

$$\tanh(x) = \frac{e^{x} - e^{-x}}{e^{x} + e^{-x}} = 2\sigma(2x) - 1$$

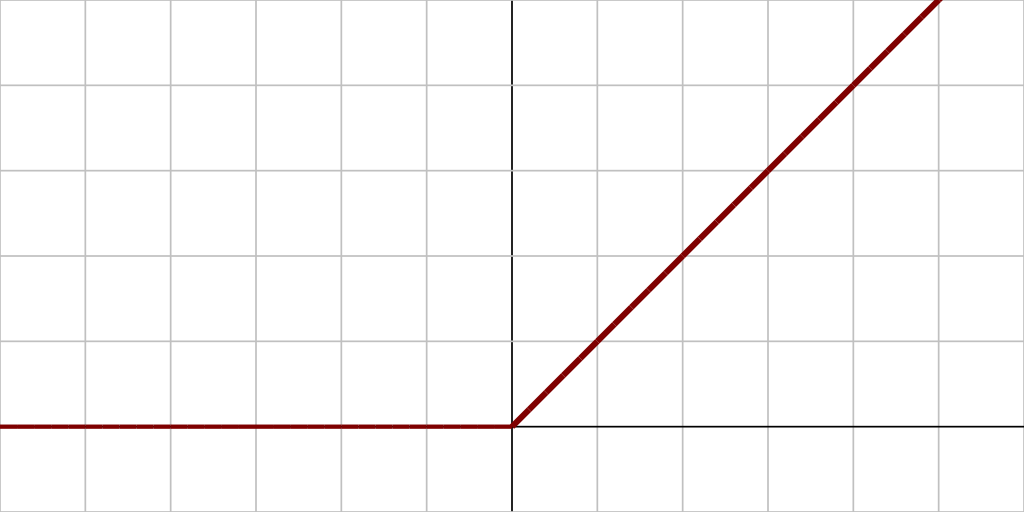

Rectified Linear Unit Function

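Definition

$$\mathrm{ReLU}(x) = \max(0, x)$$

Facts

If a node's input is negative, its gradient is zero, so the weights feeding it are not updated. A node whose input is negative for every data point is never updated again (the dying ReLU problem).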

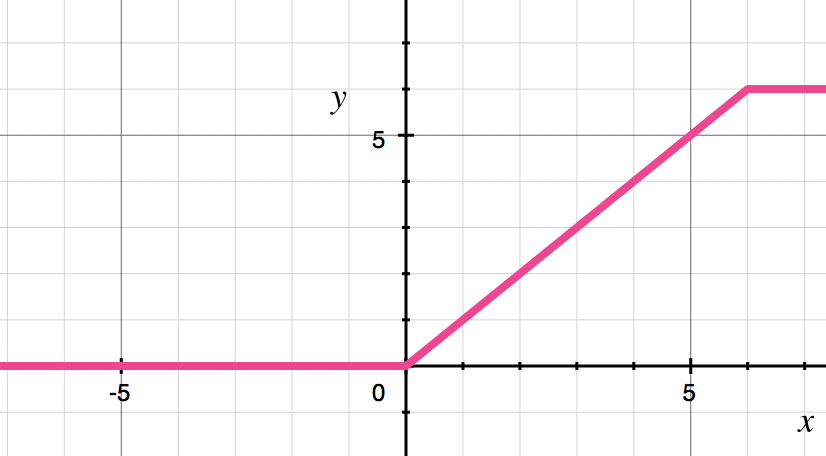

ReLU6

Definition

$$\mathrm{ReLU6}(x) = \min\big(\max(0, x), 6\big)$$

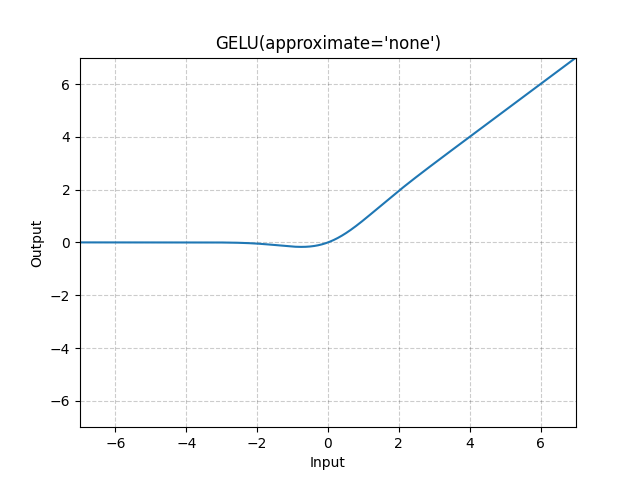

Gaussian-Error Linear Unit

Definition

GELU is a smooth approximation of ReLU.

$$\mathrm{GELU}(x) = x\,\Phi(x)$$

where $\Phi$ is the CDF of the standard normal distribution.

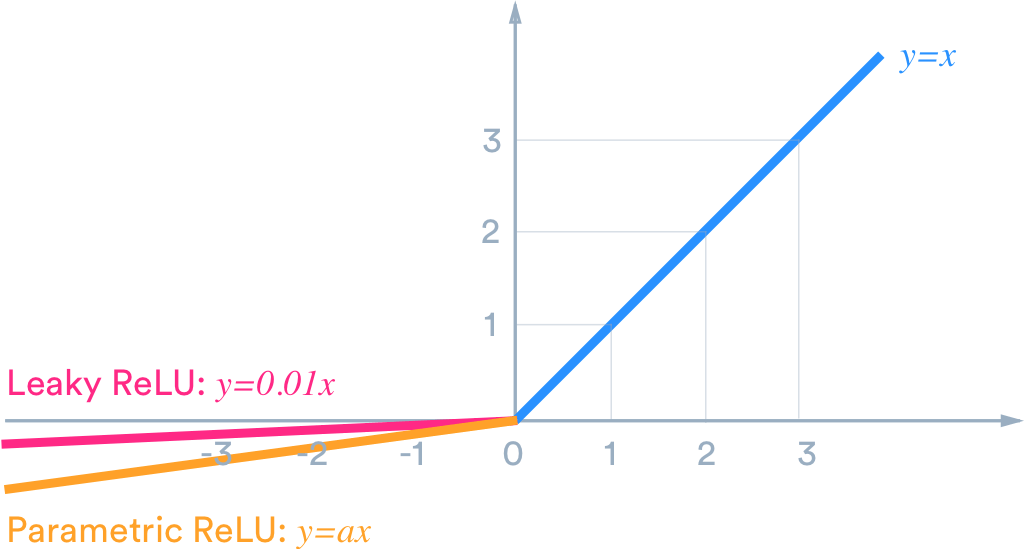

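Parametric ReLU

Definition

$$\mathrm{PReLU}(x) = \begin{cases} x & x > 0 \\ a x & x \le 0 \end{cases}$$

where $a$ is a coefficient learned during training.

Facts

If $a$ is fixed to a small constant such as $0.01$, it is called a Leaky ReLU.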

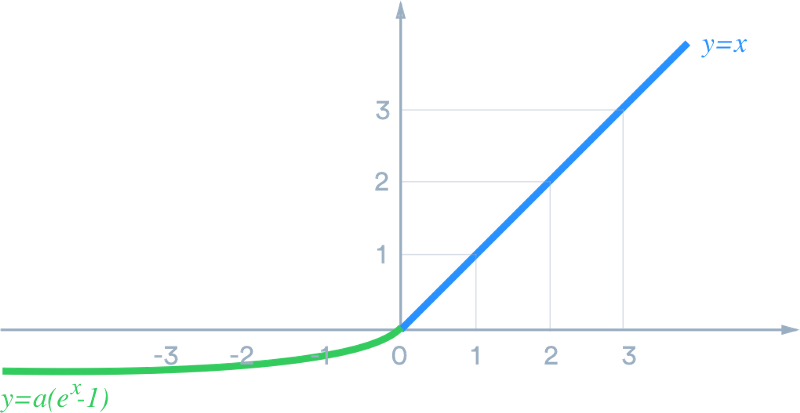

Exponential Linear Unit

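Definition

$$\mathrm{ELU}(x) = \begin{cases} x & x > 0 \\ \alpha\,(e^{x} - 1) & x \le 0 \end{cases}$$

where $\alpha$ is a hyperparameter.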

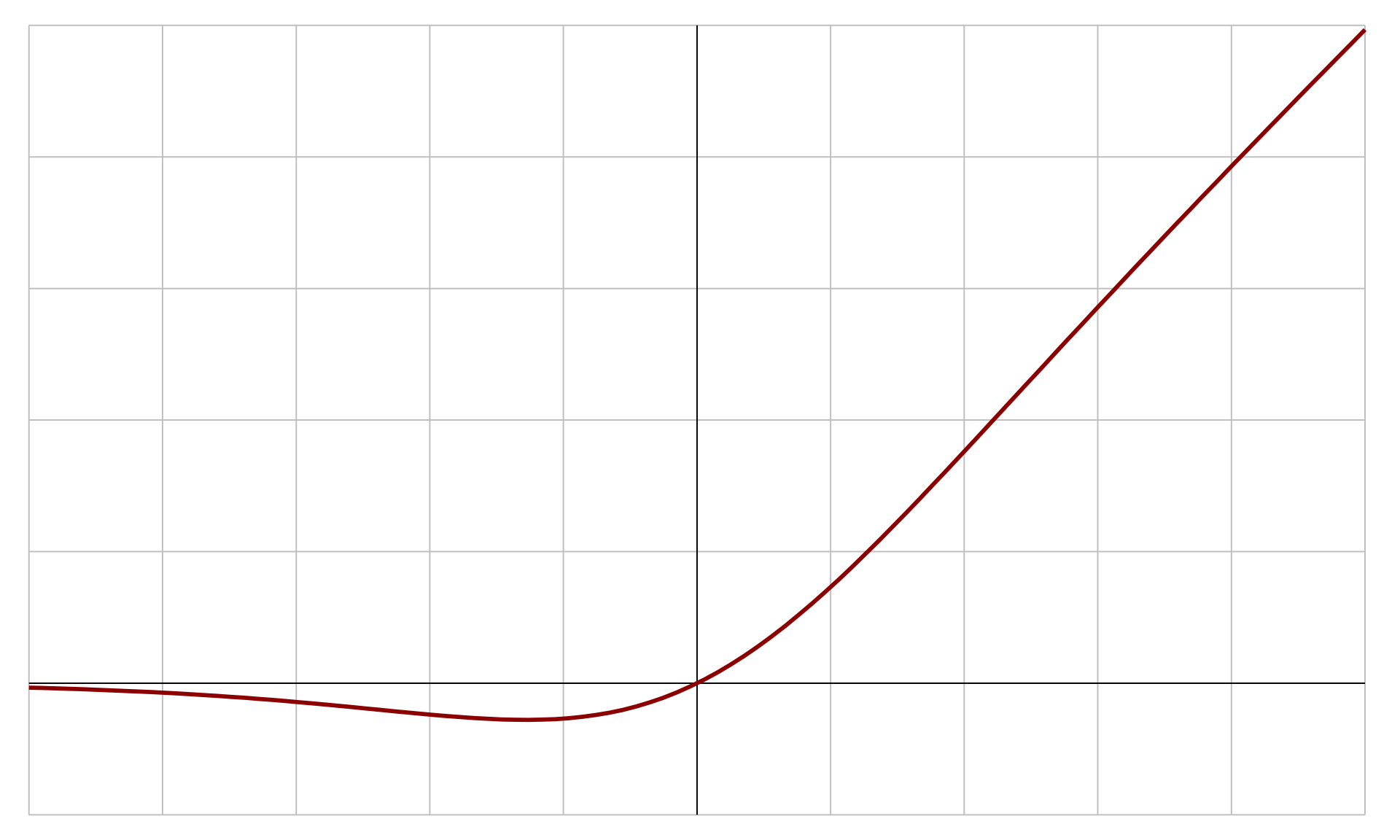

Swish Function

Definition

$$\mathrm{swish}(x) = x\,\sigma(\beta x)$$

where $\sigma$ is the Sigmoid Function, and $\beta$ is a hyperparameter.

When $\beta = 1$, the function is called a sigmoid linear unit (SiLU).
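Scalar versions of the activation functions in this section, as a sketch in plain Python. The defaults `a=0.25` for PReLU and `alpha=1.0` for ELU are illustrative choices, not values fixed by the definitions; GELU is computed exactly through `math.erf`, using $\Phi(x) = \tfrac{1}{2}\big(1 + \mathrm{erf}(x/\sqrt{2})\big)$.

```python
import math

def relu(x):
    return max(0.0, x)

def relu6(x):
    return min(max(0.0, x), 6.0)

def gelu(x):
    # x * Phi(x), with the standard normal CDF written via the error function
    return x * 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def prelu(x, a=0.25):
    return x if x > 0 else a * x

def leaky_relu(x):
    return prelu(x, a=0.01)

def elu(x, alpha=1.0):
    return x if x > 0 else alpha * (math.exp(x) - 1.0)

def swish(x, beta=1.0):
    # x * sigmoid(beta * x); fine for moderate |x| (math.exp overflows for very negative beta * x)
    return x / (1.0 + math.exp(-beta * x))

def silu(x):
    return swish(x, beta=1.0)

for f in (relu, relu6, gelu, leaky_relu, elu, silu):
    print(f.__name__, [round(f(v), 4) for v in (-3.0, -0.5, 0.0, 0.5, 3.0, 8.0)])
```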

Weight Initialization

LeCun Initialization

Definition

LeCun initialization is designed for Neural Network using the Tanh activation function.

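For Normal Distribution: $W \sim \mathcal{N}\!\left(0, \dfrac{1}{n_{in}}\right)$. For Uniform Distribution: $W \sim U\!\left(-\sqrt{\dfrac{3}{n_{in}}}, \sqrt{\dfrac{3}{n_{in}}}\right)$, where $n_{in}$ is the number of input nodes.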

Xavier Initialization

Definition

Xavier initialization is designed for Neural Network using the sigmoid or Tanh activation function.

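For Normal Distribution: $W \sim \mathcal{N}\!\left(0, \dfrac{2}{n_{in} + n_{out}}\right)$. For Uniform Distribution: $W \sim U\!\left(-\sqrt{\dfrac{6}{n_{in} + n_{out}}}, \sqrt{\dfrac{6}{n_{in} + n_{out}}}\right)$, where $n_{in}$ is the number of input nodes, and $n_{out}$ is the number of output nodes.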

He Initialization

Definition

He initialization is designed for Neural Network using the ReLU activation function.

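For Normal Distribution: $W \sim \mathcal{N}\!\left(0, \dfrac{2}{n_{in}}\right)$. For Uniform Distribution: $W \sim U\!\left(-\sqrt{\dfrac{6}{n_{in}}}, \sqrt{\dfrac{6}{n_{in}}}\right)$, where $n_{in}$ is the number of input nodes (using the number of output nodes $n_{out}$ in place of $n_{in}$ gives the fan-out variant).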
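A sketch of the three schemes in terms of their target weight variance (LeCun $1/n_{in}$, Xavier $2/(n_{in}+n_{out})$, He $2/n_{in}$). The uniform limit follows from the fact that $U(-a, a)$ has variance $a^2/3$. Function names, the seed, and the layer sizes are illustrative assumptions.

```python
import math
import random

def init_scale(kind, n_in, n_out):
    """Return (std of the normal version, limit a of the uniform version U(-a, a))."""
    if kind == "lecun":
        var = 1.0 / n_in
    elif kind == "xavier":
        var = 2.0 / (n_in + n_out)
    elif kind == "he":
        var = 2.0 / n_in
    else:
        raise ValueError(f"unknown scheme: {kind}")
    # U(-a, a) has variance a^2 / 3, so a = sqrt(3 * var) matches the normal variance
    return math.sqrt(var), math.sqrt(3.0 * var)

def init_weights(kind, n_in, n_out, dist="normal", seed=0):
    """Weight matrix with n_out rows and n_in columns."""
    rng = random.Random(seed)
    std, limit = init_scale(kind, n_in, n_out)
    if dist == "normal":
        return [[rng.gauss(0.0, std) for _ in range(n_in)] for _ in range(n_out)]
    return [[rng.uniform(-limit, limit) for _ in range(n_in)] for _ in range(n_out)]

W = init_weights("he", n_in=256, n_out=512)
flat = [w for row in W for w in row]
print(sum(w * w for w in flat) / len(flat), 2 / 256)  # sample variance vs. target 2 / n_in
```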

Convolutional Neural Networks

Convolutional Neural Network

Definition

A convolutional neural network (CNN) is a regularized type of feed-forward Neural Network that learns features by itself via filter optimization. It is built mainly from convolutional layers.

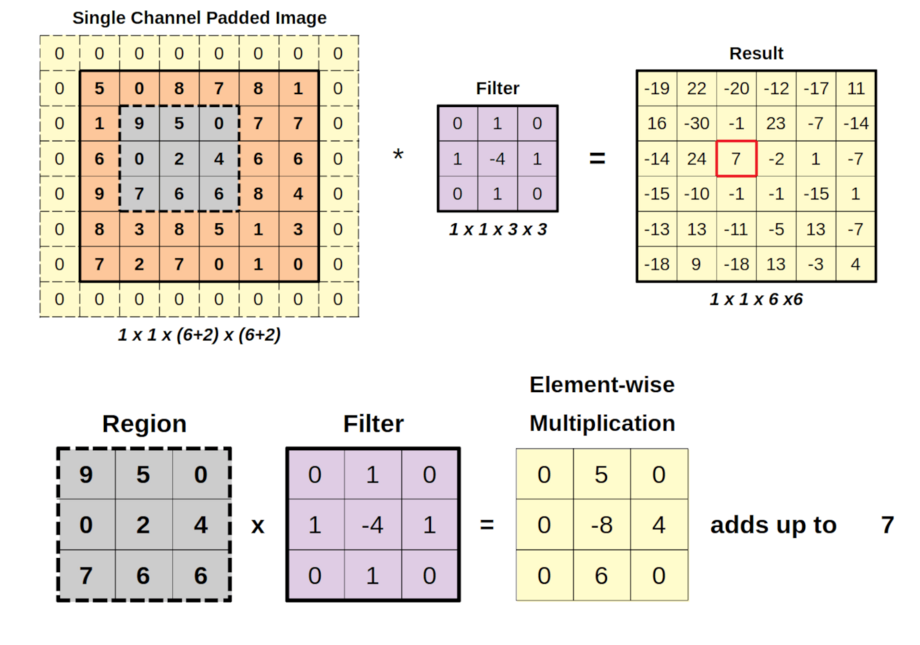

Convolutional Layer

The layer’s parameters consist of a set of learnable filters that slide over the input image. Each filter performs a Convolution operation, computing the Dot Product between the filter values and the input values at each position. The output of the convolution operation is a feature map.

The output size is determined by the input size, filter size, padding, and stride:

$$O = \left\lfloor \frac{W - F + 2P}{S} \right\rfloor + 1$$

where $W$ is the input size, $F$ is the filter size, $P$ is the padding, and $S$ is the stride.

Stride

Stride refers to the step size the convolution filter moves each time it slides over the input.

Padding

Padding adds extra border pixels (usually zeros) around the input image. It can preserve the spatial dimensions of the feature map and retains information at the borders.
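A naive single-channel sketch of a convolutional layer with zero padding and a stride. Like most deep-learning libraries, it computes cross-correlation (the kernel is not flipped); the helper names are illustrative. The output size follows $\lfloor (W - F + 2P)/S \rfloor + 1$.

```python
def conv_output_size(w, f, p, s):
    """O = floor((W - F + 2P) / S) + 1."""
    return (w - f + 2 * p) // s + 1

def conv2d(image, kernel, stride=1, padding=0):
    """Single-channel 2D convolution (cross-correlation) with zero padding."""
    h, w = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    padded = [[0.0] * (w + 2 * padding) for _ in range(h + 2 * padding)]
    for i in range(h):
        for j in range(w):
            padded[i + padding][j + padding] = image[i][j]
    out_h = conv_output_size(h, kh, padding, stride)
    out_w = conv_output_size(w, kw, padding, stride)
    out = [[0.0] * out_w for _ in range(out_h)]
    for i in range(out_h):
        for j in range(out_w):
            r, c = i * stride, j * stride  # top-left corner of the current window
            # dot product between the filter and the current window
            out[i][j] = sum(kernel[a][b] * padded[r + a][c + b] for a in range(kh) for b in range(kw))
    return out

image = [[1.0] * 5 for _ in range(5)]
kernel = [[1.0] * 3 for _ in range(3)]
same = conv2d(image, kernel, stride=1, padding=1)     # 5 x 5: padding preserves the size
strided = conv2d(image, kernel, stride=2, padding=0)  # 2 x 2
print(len(same), len(same[0]), len(strided), len(strided[0]))
print(same[0][0], same[2][2])  # 4.0 at a corner (zeros padded in), 9.0 in the interior
```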

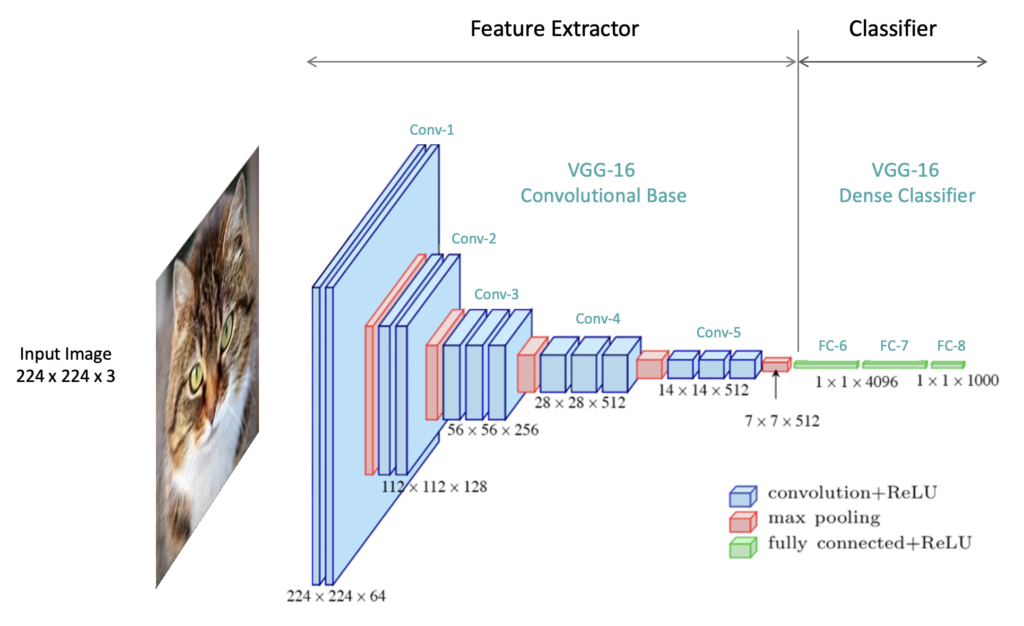

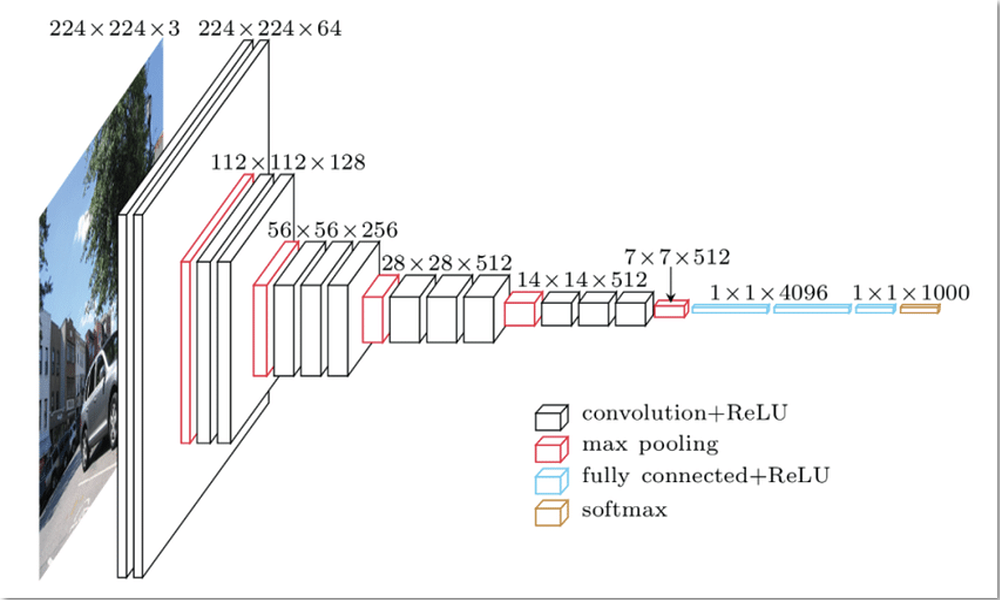

VGG Net

Definition

VGG model is a deep Convolutional Neural Network architecture. The VGG model is characterized by its depth and uniformity. It consists of a series of convolutional layers followed by fully connected layers.


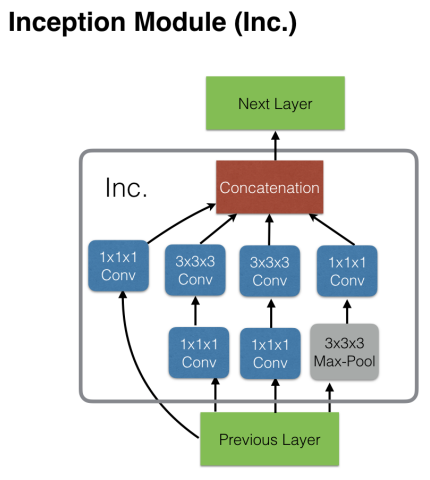

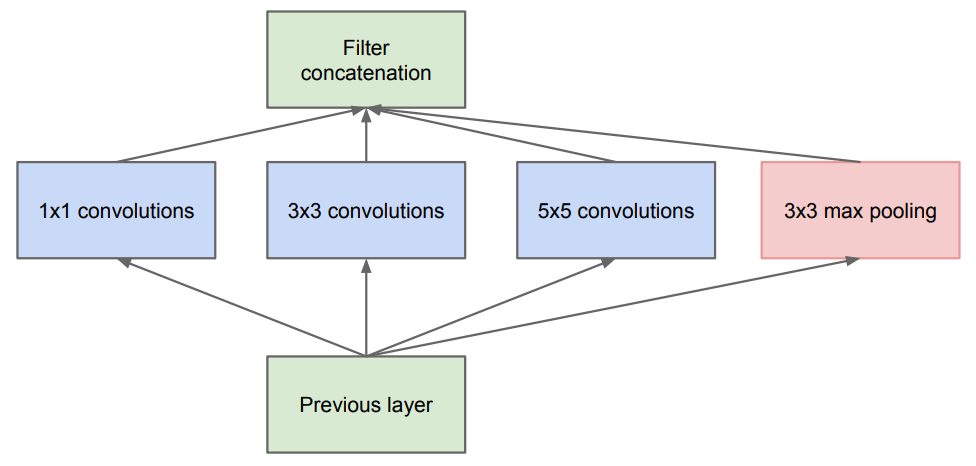

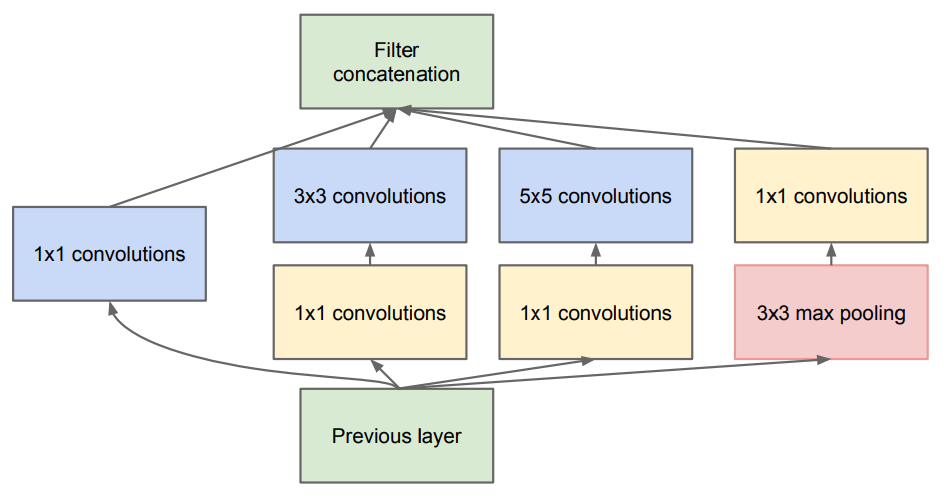

Inception Net

Definition

Inception Net model is a deep Convolutional Neural Network architecture using the inception module.

Architecture

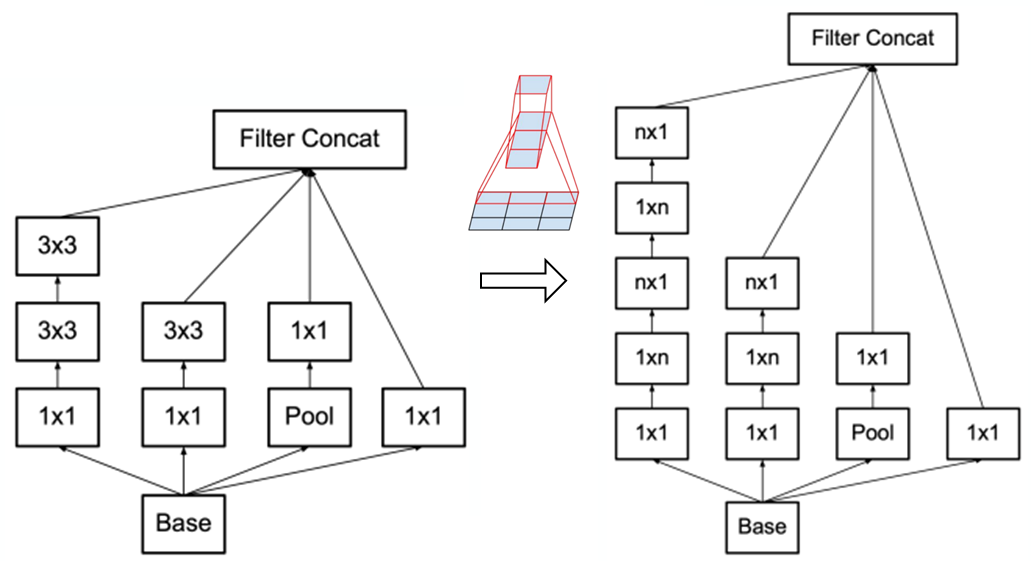

Inception Net V1

Inception Module

Inception module is a building block of the inception net. It uses multiple filter sizes ($1\times1$, $3\times3$, and $5\times5$) and pooling operations in parallel, allowing the network to capture features at different scales simultaneously.

The $1\times1$ convolutions are used for dimensionality reduction (reducing the number of channels), helping to reduce computational complexity.

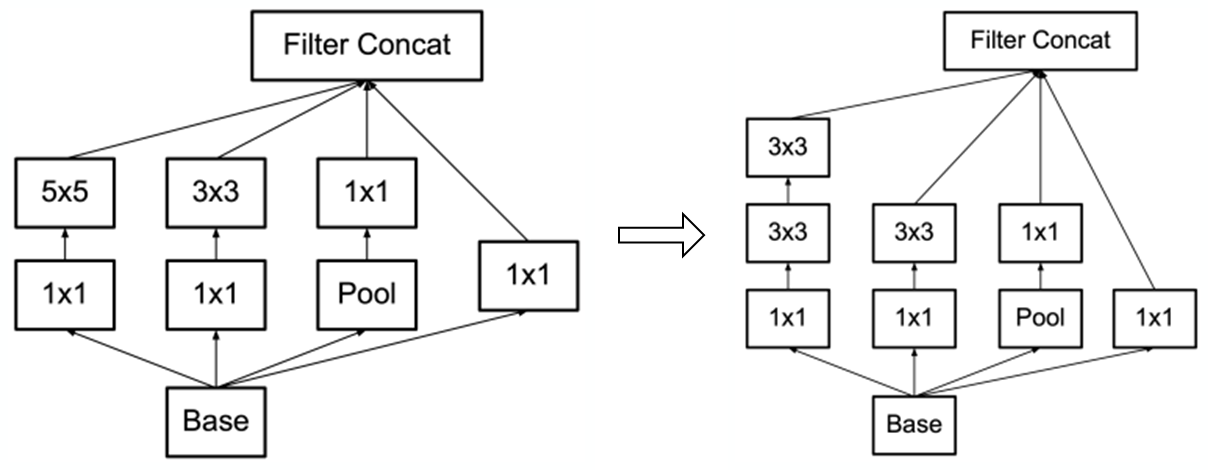

Inception Net V2, V3

Factorized Convolution

The large convolutions in the inception module (e.g., $5\times5$) were replaced with multiple smaller convolutions (e.g., two stacked $3\times3$), reducing parameters and computational cost.

Asymmetric Convolution

$n\times n$ convolutions are decomposed into a $1\times n$ and an $n\times1$ convolution.
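A quick parameter count (weights only, biases ignored) illustrating both factorizations; the channel count `C = 64` is an arbitrary assumption, with input and output channels taken to be equal.

```python
def conv_params(kh, kw, c_in, c_out):
    """Number of weights in a kh x kw convolution from c_in to c_out channels."""
    return kh * kw * c_in * c_out

C = 64
five = conv_params(5, 5, C, C)
two_threes = 2 * conv_params(3, 3, C, C)   # two stacked 3x3 convolutions
print(five, two_threes, two_threes / five)  # 102400 73728 0.72

n = 3
square = conv_params(n, n, C, C)
asymmetric = conv_params(1, n, C, C) + conv_params(n, 1, C, C)  # 1 x n followed by n x 1
print(square, asymmetric, asymmetric / square)  # 36864 24576 0.666...
```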

Label Smoothing

To prevent the model from becoming overconfident, label smoothing is applied to the labels:

$$y^{LS}_k = y_k(1 - \alpha) + \frac{\alpha}{K}$$

where $y^{LS}$ is the smoothed label, $y$ is the original one-hot encoded label, $\alpha$ is the smoothing parameter, and $K$ is the number of classes.
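A one-function sketch of label smoothing, $y^{LS}_k = y_k(1-\alpha) + \alpha/K$; the function name and the value $\alpha = 0.1$ are illustrative.

```python
def smooth_labels(one_hot, alpha):
    """y_LS = y * (1 - alpha) + alpha / K for a one-hot label y with K classes."""
    k = len(one_hot)
    return [y * (1.0 - alpha) + alpha / k for y in one_hot]

smoothed = smooth_labels([0, 0, 1, 0], alpha=0.1)
print(smoothed)  # approximately [0.025, 0.025, 0.925, 0.025]
```

The smoothed vector still sums to 1, so it can be used directly as the target in the Cross-Entropy Loss.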

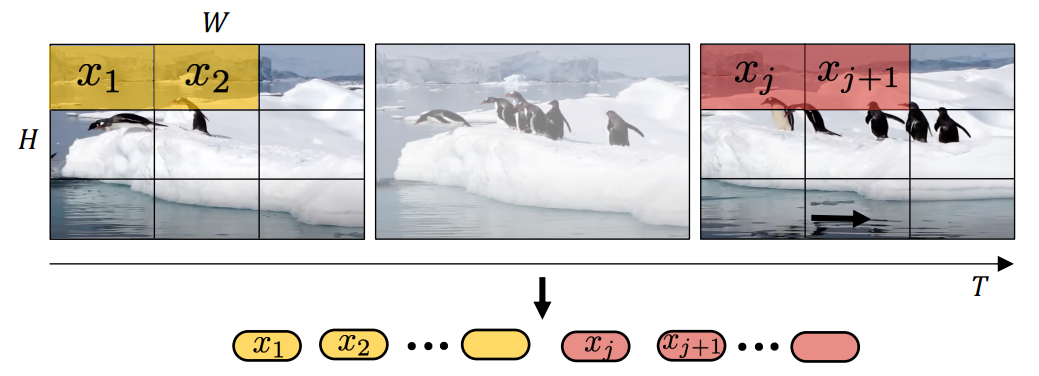

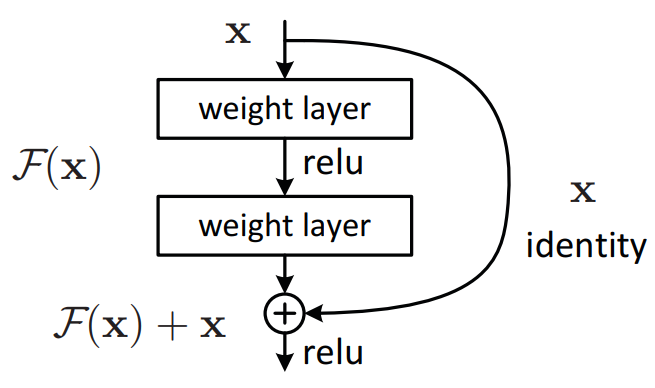

ResNet

Definition

ResNet is a deep Convolutional Neural Network architecture. It was designed to address the degradation problem in very deep neural networks.

Architecture

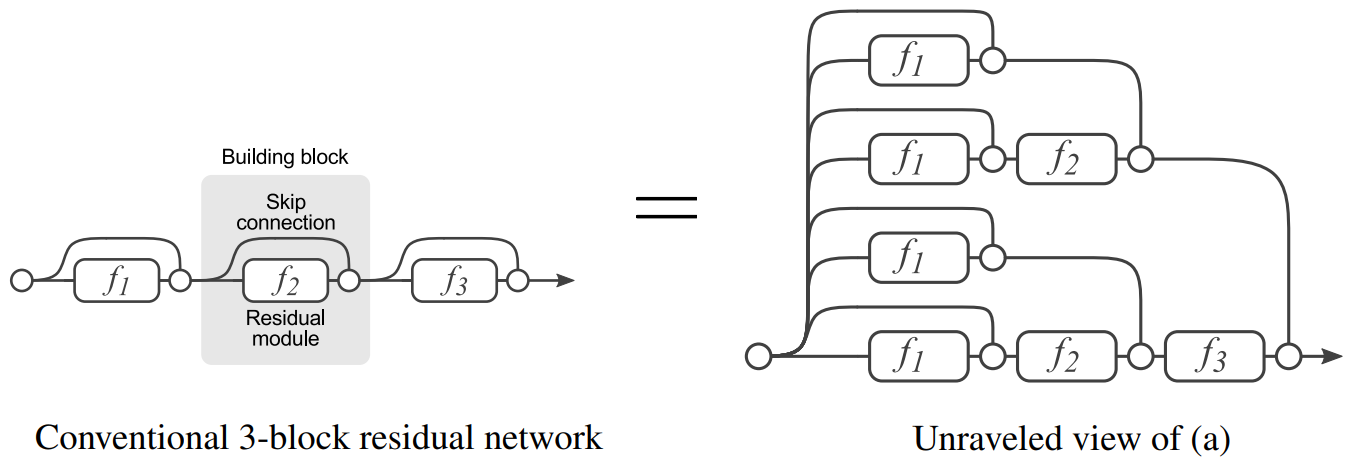

Skip Connection

The core innovation of ResNet is the introduction of skip connections (shortcut connections or residual connections). These connections allow the network to bypass one or more layers, creating a direct path for information flow. It performs identity mapping, allowing the network to easily learn the identity function if needed.

The residual block is represented as

$$y = \mathcal{F}(x) + x$$

where $x$ is the input to the block, $\mathcal{F}(x)$ is the learnable residual mapping (typically several layers), and $y$ is the output of the block.

Skip connections create a mixture of deep and shallow models. With $n$ skip connections, there are $2^n$ possible paths, where each path can pass through up to $n$ modules.
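A small enumeration that makes the path count concrete, under the unraveled view in which each residual block is either passed through or skipped; the helper name is illustrative.

```python
from itertools import product
from math import comb

def path_lengths(n_blocks):
    """Number of residual modules traversed by every path through n_blocks residual blocks."""
    # 1 = go through the block's residual branch, 0 = take the skip connection
    return [sum(choice) for choice in product((0, 1), repeat=n_blocks)]

lengths = path_lengths(3)
print(len(lengths))                             # 8 = 2 ** 3
print({k: lengths.count(k) for k in range(4)})  # {0: 1, 1: 3, 2: 3, 3: 1}, i.e. comb(3, k)
```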

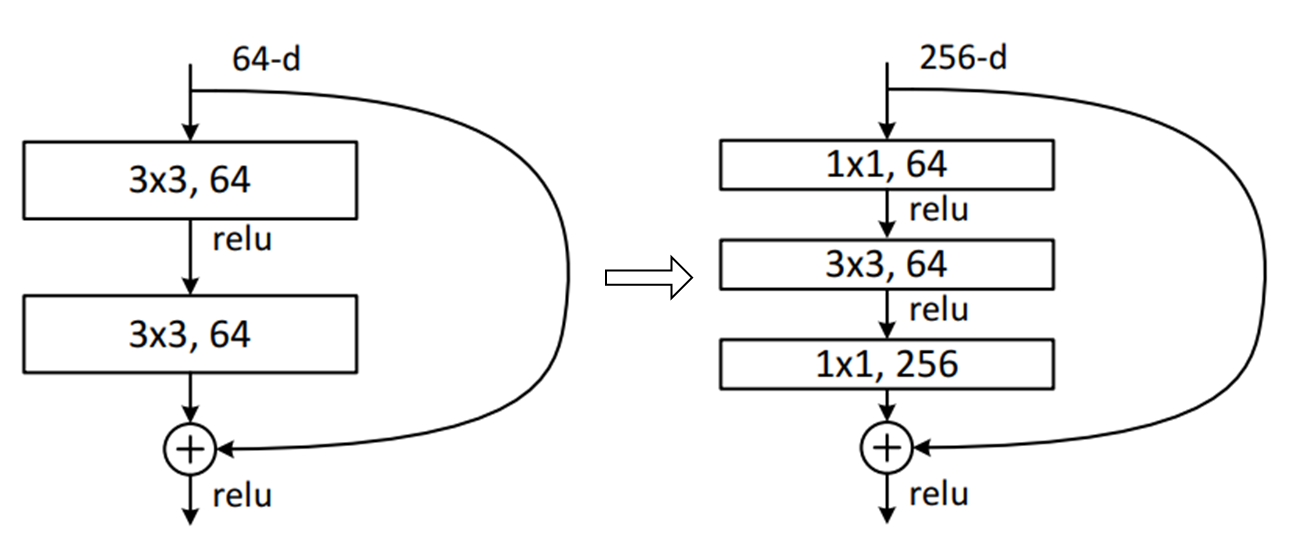

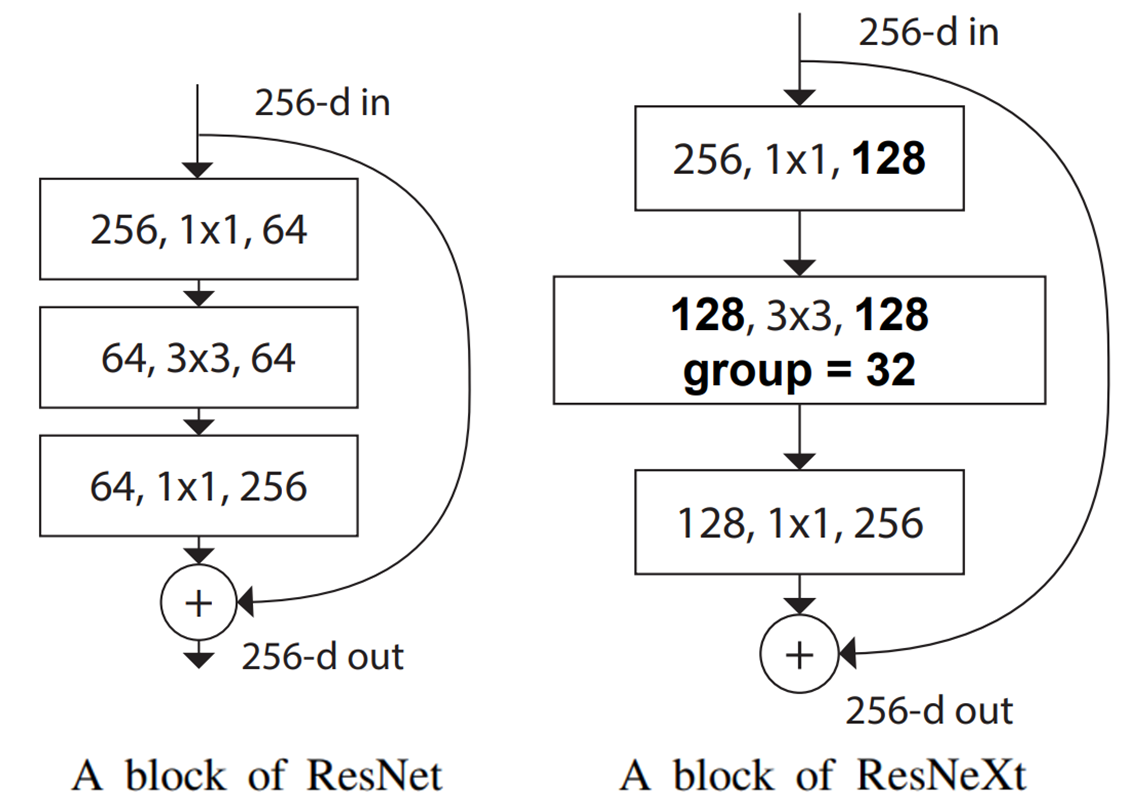

Bottleneck Block

The bottleneck architecture is used in deeper versions of ResNet to improve computational efficiency while maintaining or increasing the network's representational power. The bottleneck block consists of three layers in sequence: $1\times1$, $3\times3$, and $1\times1$ convolutions. The first $1\times1$ convolution reduces the number of channels, the $3\times3$ convolution operates on the reduced representation, and the second $1\times1$ convolution increases the number of channels back to the original.

Architecture Variants

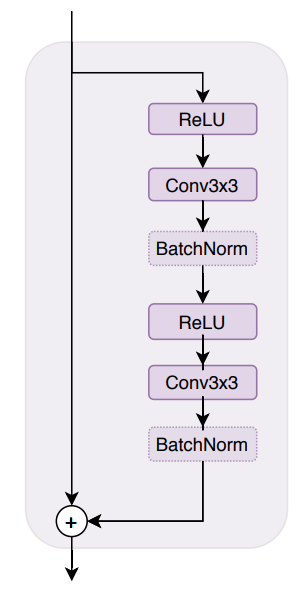

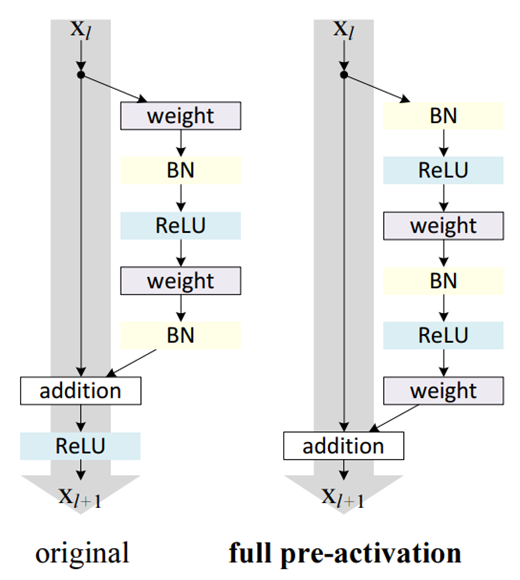

Full Pre-Activation

Full pre-activation is an improvement to the original ResNet architecture. This modification aims to improve the flow of information through the network and make training easier. In full pre-activation, the order of operations in each residual block is changed to move the batch normalization and activation functions before the convolutions.

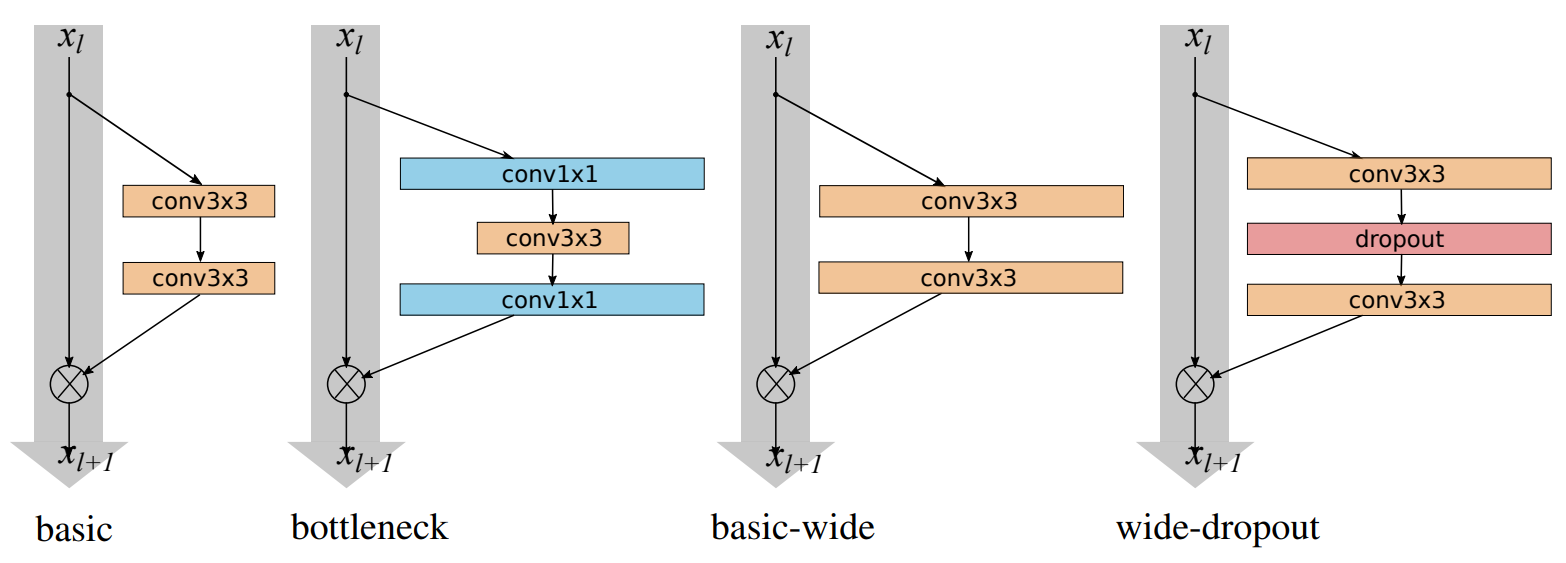

WideResNet

WideResNet increases the number of channels in the residual blocks rather than increasing the network's depth.

ResNeXt

The ResNeXt model substitutes the $3\times3$ convolution in the bottleneck block of ResNet with a Grouped Convolution. ResNeXt achieves better performance than ResNet with the same number of parameters, thanks to its more efficient use of model capacity through the grouped convolution.

Regularization for Neural Networks

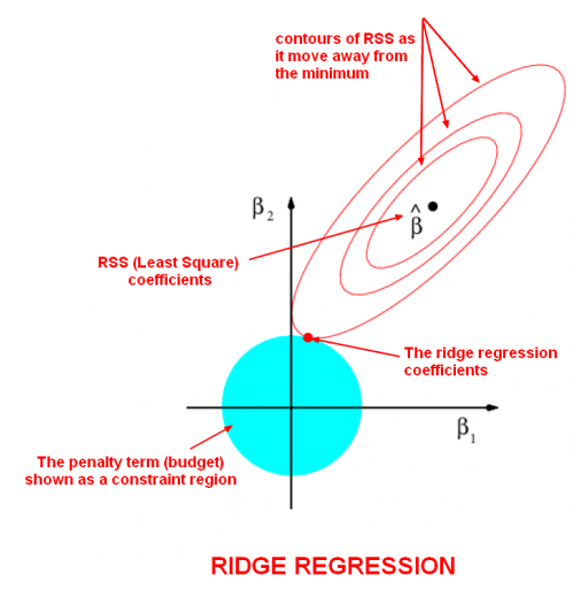

Ridge Regression

Definition

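$$\hat\beta^{\text{ridge}} = \underset{\beta}{\arg\min}\left\{\sum_{i=1}^{N}\Big(y_i - \beta_0 - \sum_{j=1}^{p} x_{ij}\beta_j\Big)^2 + \lambda\sum_{j=1}^{p}\beta_j^2\right\}$$

where $\lambda \ge 0$ is a complexity parameter that controls the amount of shrinkage.

Ridge regression is particularly useful to mitigate the problem of Multicollinearity in linear regression.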

Facts

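With centered inputs,

$$\hat\beta^{\text{ridge}} = (X^\top X + \lambda I)^{-1}X^\top y, \qquad X\hat\beta^{\text{ridge}} = \sum_{j=1}^{p} u_j\,\frac{d_j^2}{d_j^2 + \lambda}\,u_j^\top y$$

where $X = UDV^\top$ by Singular Value Decomposition, $u_j$ are the columns of $U$, and $d_j$ are the singular values.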

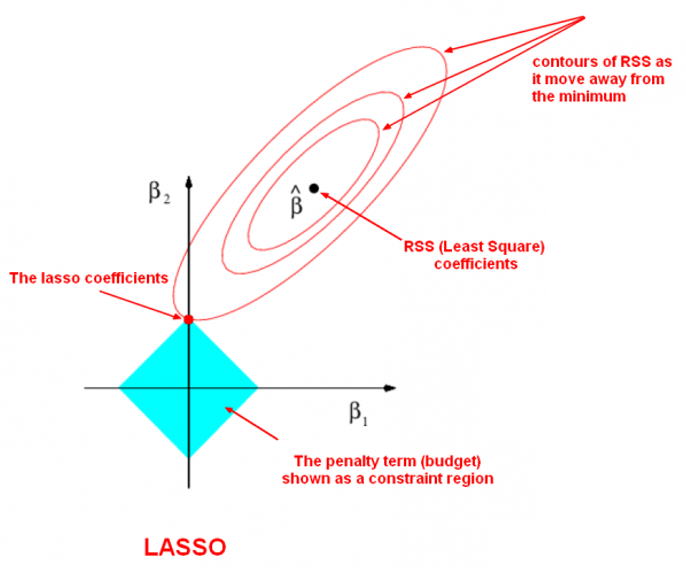

Lasso Regression

Definition

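The Lasso model assumes that the coefficients of the model are sparse.

$$\hat\beta^{\text{lasso}} = \underset{\beta}{\arg\min}\left\{\sum_{i=1}^{N}\Big(y_i - \beta_0 - \sum_{j=1}^{p} x_{ij}\beta_j\Big)^2 + \lambda\sum_{j=1}^{p}|\beta_j|\right\}$$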
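For a single feature and a model through the origin (no intercept, an assumption that keeps the algebra short), both penalized problems have closed forms. Minimizing $\sum_i (y_i - \beta x_i)^2 + \lambda\beta^2$ gives $\hat\beta = S_{xy}/(S_{xx} + \lambda)$, while the $\ell_1$ version gives a soft-thresholded estimate that becomes exactly zero once $\lambda \ge 2|S_{xy}|$, where $S_{xy} = \sum_i x_i y_i$ and $S_{xx} = \sum_i x_i^2$. The sketch contrasts ridge shrinkage with lasso sparsity on made-up data.

```python
def ridge_1d(xs, ys, lam):
    """argmin_b sum_i (y_i - b x_i)^2 + lam * b^2."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)

def lasso_1d(xs, ys, lam):
    """argmin_b sum_i (y_i - b x_i)^2 + lam * |b| (soft thresholding)."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    shrunk = max(abs(sxy) - lam / 2.0, 0.0)
    return (shrunk if sxy >= 0 else -shrunk) / sxx

xs, ys = [1.0, 2.0, 3.0], [2.0, 4.0, 6.0]  # least-squares slope is 2
for lam in (0.0, 14.0, 28.0, 60.0):
    print(lam, ridge_1d(xs, ys, lam), lasso_1d(xs, ys, lam))
```

Ridge only shrinks the coefficient toward zero, whereas lasso sets it exactly to zero for large enough $\lambda$, which is why lasso suits sparse models.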

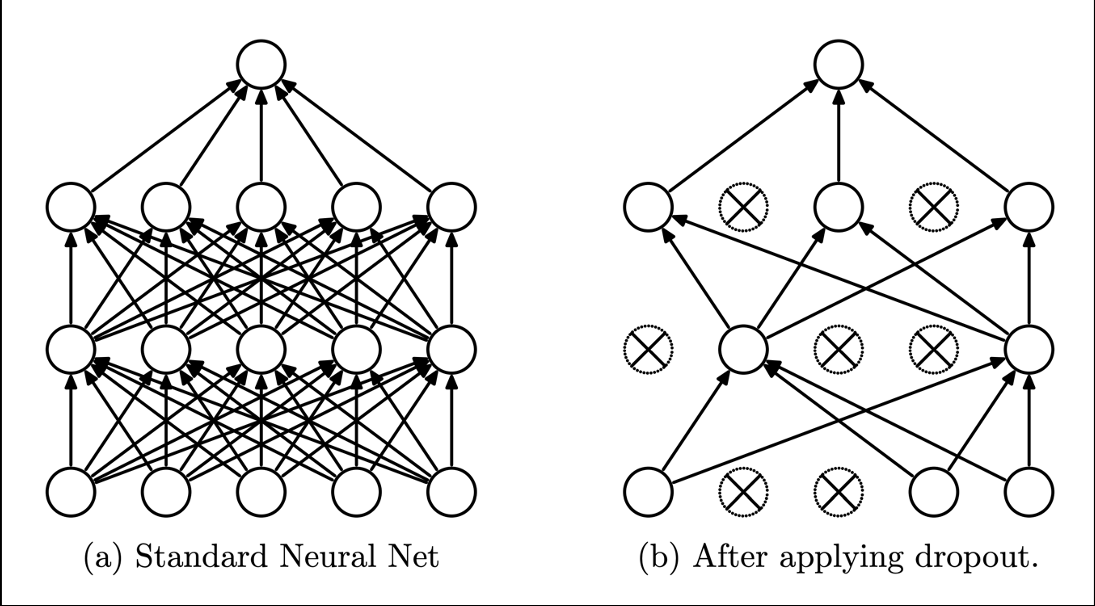

Dropout

Definition

Dropout is a regularization technique used for Neural Networks. Dropout randomly drops out (omits) units during the training process of a Neural Network.
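A sketch of dropout on a list of activations. It uses the common "inverted" variant, an assumption not stated in the definition: surviving units are scaled by $1/(1-p)$ during training so that nothing needs to change at test time.

```python
import random

def dropout(activations, p, training=True, rng=None):
    """Inverted dropout: zero each unit with probability p and scale survivors by 1 / (1 - p)."""
    if not training or p == 0.0:
        return list(activations)  # test time: use all units, no rescaling needed
    rng = rng or random.Random()
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

rng = random.Random(0)
out = dropout([1.0] * 10000, p=0.5, rng=rng)
print(sorted(set(out)))                            # [0.0, 2.0]
print(sum(out) / len(out))                         # close to 1.0: the expected activation is preserved
print(dropout([1.0, 2.0], p=0.5, training=False))  # [1.0, 2.0]
```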

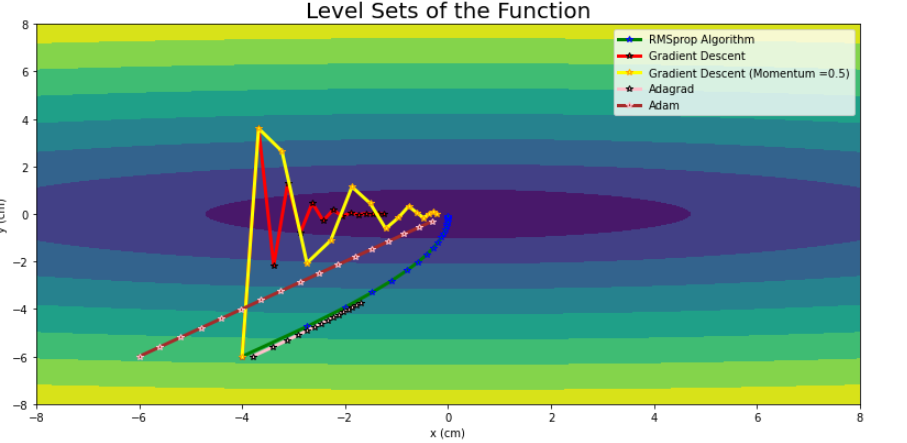

Optimization

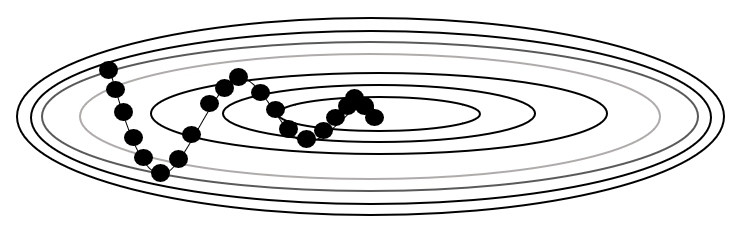

Stochastic Gradient Descent

Definition

Stochastic gradient descent (SGD) is a stochastic approximation of Gradient Descent. It replaces the actual gradient (calculated from the entire dataset) with an estimation of it by randomly selecting a subset of the data.

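$$\theta_{t+1} = \theta_t - \eta\,\frac{1}{|B_t|}\sum_{i\in B_t}\nabla_\theta L_i(\theta_t)$$

where $B_t$ is a randomly selected mini-batch, $L_i$ is the loss on the $i$-th data point, and $\eta$ is the learning rate.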
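A minimal mini-batch SGD sketch for a one-feature linear model on noise-free synthetic data ($y = 2x - 1$, an illustrative assumption, as are the learning rate, batch size, and epoch count). Each update uses the gradient of the mean squared error on a shuffled mini-batch rather than on the whole dataset.

```python
import random

def sgd_linear_regression(xs, ys, lr=0.1, batch_size=8, epochs=200, seed=0):
    """Fit y = w x + b by mini-batch SGD on the mean squared error."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    order = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(order)  # a new random partition into mini-batches every epoch
        for start in range(0, len(order), batch_size):
            batch = order[start:start + batch_size]
            gw = gb = 0.0
            for i in batch:
                err = w * xs[i] + b - ys[i]
                gw += 2.0 * err * xs[i]
                gb += 2.0 * err
            # gradient estimated from the mini-batch only
            w -= lr * gw / len(batch)
            b -= lr * gb / len(batch)
    return w, b

xs = [i / 50 - 1 for i in range(100)]
ys = [2.0 * x - 1.0 for x in xs]
print(sgd_linear_regression(xs, ys))  # close to (2.0, -1.0)
```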

Optimizers

Definition

Optimizers are algorithms used to adjust the parameters of a model to minimize the loss function. The optimizers aim to improve the convergence speed and stability of the training process compared to standard Stochastic Gradient Descent.

Examples

Momentum Optimizer

Definition

Momentum optimizer remembers the update at each iteration and determines the next update as a linear combination of the gradient and the previous update:

$$v_{t+1} = \mu v_t - \eta\,\nabla L(\theta_t), \qquad \theta_{t+1} = \theta_t + v_{t+1}$$

where $\mu$ is the momentum coefficient.

AdaGrad Optimizer

Definition

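Adaptive gradient descent (AdaGrad) is a Gradient Descent with a parameter-wise learning rate.

$$G_t = G_{t-1} + g_t \odot g_t, \qquad \theta_{t+1} = \theta_t - \frac{\eta}{\sqrt{G_t} + \epsilon}\odot g_t$$

where $g_t = \nabla L(\theta_t)$, $G_t$ is the sum of squares of past gradients, and $\epsilon$ is a small constant to prevent division by zero.

RMSProp Optimizer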

Definition

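RMSProp optimizer resolves the rapidly diminishing learning rates of the AdaGrad Optimizer by replacing the sum of squared past gradients with an exponential moving average, so that old gradients gradually lose their influence.

$$s_t = \rho\, s_{t-1} + (1 - \rho)\, g_t \odot g_t, \qquad \theta_{t+1} = \theta_t - \frac{\eta}{\sqrt{s_t} + \epsilon}\odot g_t$$

where $\rho$ is the decay rate.

Adam Optimizer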

Definition

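Adaptive moment estimation (Adam) combines the ideas of momentum and RMSProp optimizers.

$$\begin{aligned} m_t &= \beta_1 m_{t-1} + (1 - \beta_1)\, g_t \\ v_t &= \beta_2 v_{t-1} + (1 - \beta_2)\, g_t \odot g_t \\ \hat m_t &= \frac{m_t}{1 - \beta_1^t}, \qquad \hat v_t = \frac{v_t}{1 - \beta_2^t} \\ \theta_{t+1} &= \theta_t - \frac{\eta\, \hat m_t}{\sqrt{\hat v_t} + \epsilon} \end{aligned}$$

Where:

- $m_t$ is the estimate of the first moment (mean) of the gradients

- $v_t$ is the estimate of the second moment (un-centered variance) of the gradients

- $\beta_1$ and $\beta_2$ are decay rates for the moment estimates

- $\hat m_t$ and $\hat v_t$ are bias-corrected estimates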
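The momentum, AdaGrad, RMSProp, and Adam update rules can be compared on a simple ill-conditioned quadratic $f(\theta) = \theta_1^2 + 10\,\theta_2^2$ (an illustrative choice, as are the learning rates and default hyperparameters). This plain-Python sketch keeps each optimizer's running statistics in a `state` dict; it is not any library's implementation.

```python
import math

def f(theta):
    """Ill-conditioned quadratic used as a toy loss."""
    return theta[0] ** 2 + 10.0 * theta[1] ** 2

def grad(theta):
    return [2.0 * theta[0], 20.0 * theta[1]]

def momentum(theta, g, state, t, lr, mu=0.9):
    v = [mu * vi - lr * gi for vi, gi in zip(state.get("v", [0.0] * len(theta)), g)]
    state["v"] = v
    return [th + vi for th, vi in zip(theta, v)]

def adagrad(theta, g, state, t, lr, eps=1e-8):
    G = [Gi + gi * gi for Gi, gi in zip(state.get("G", [0.0] * len(theta)), g)]
    state["G"] = G
    return [th - lr / (math.sqrt(Gi) + eps) * gi for th, Gi, gi in zip(theta, G, g)]

def rmsprop(theta, g, state, t, lr, rho=0.9, eps=1e-8):
    s = [rho * si + (1 - rho) * gi * gi for si, gi in zip(state.get("s", [0.0] * len(theta)), g)]
    state["s"] = s
    return [th - lr / (math.sqrt(si) + eps) * gi for th, si, gi in zip(theta, s, g)]

def adam(theta, g, state, t, lr, beta1=0.9, beta2=0.999, eps=1e-8):
    m = [beta1 * mi + (1 - beta1) * gi for mi, gi in zip(state.get("m", [0.0] * len(theta)), g)]
    v = [beta2 * vi + (1 - beta2) * gi * gi for vi, gi in zip(state.get("v", [0.0] * len(theta)), g)]
    state["m"], state["v"] = m, v
    m_hat = [mi / (1 - beta1 ** t) for mi in m]  # bias correction
    v_hat = [vi / (1 - beta2 ** t) for vi in v]
    return [th - lr * mh / (math.sqrt(vh) + eps) for th, mh, vh in zip(theta, m_hat, v_hat)]

def run(step, lr, steps=500, start=(3.0, 2.0)):
    theta, state = list(start), {}
    for t in range(1, steps + 1):  # t starts at 1 so Adam's bias correction is defined
        theta = step(theta, grad(theta), state, t, lr)
    return theta

for step, lr in [(momentum, 0.01), (adagrad, 0.5), (rmsprop, 0.02), (adam, 0.05)]:
    print(step.__name__, f(run(step, lr)))
```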

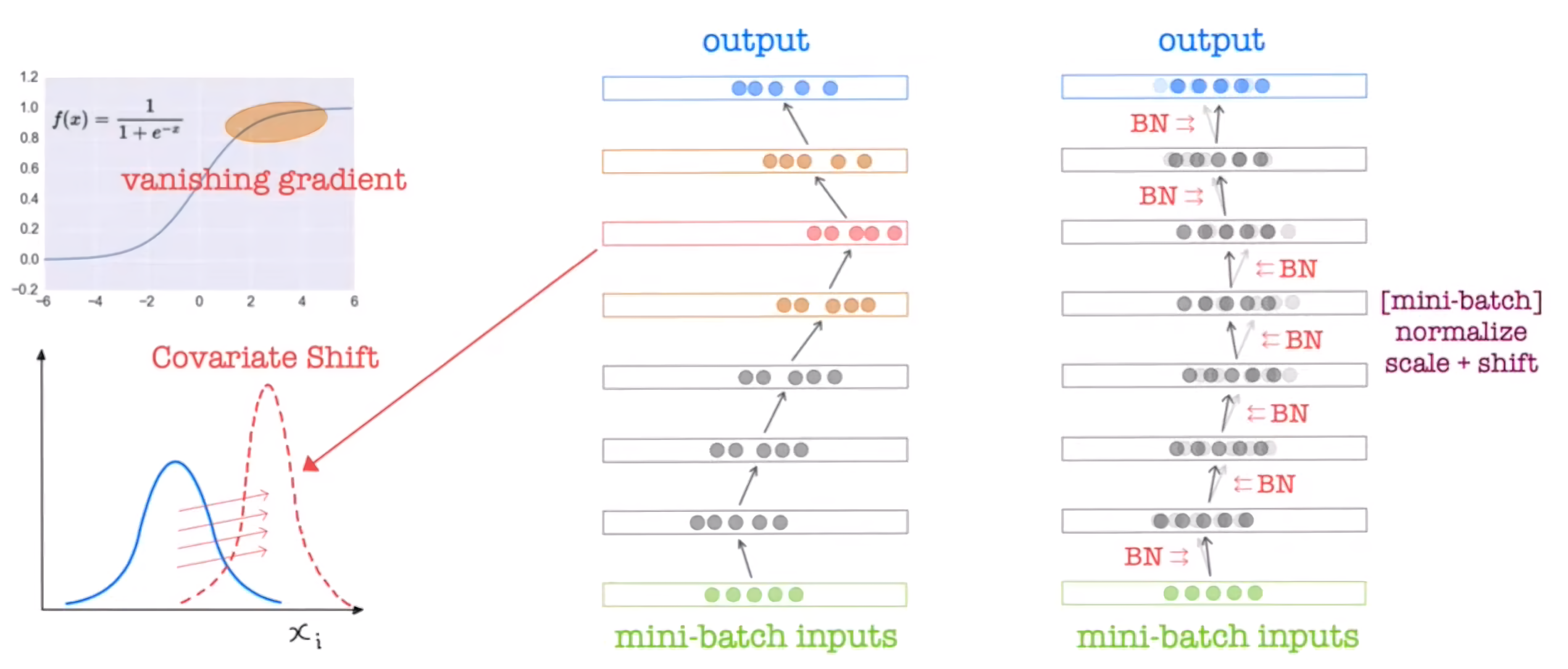

Normalization

Batch Normalization

Definition

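Batch normalization (batch norm) makes training of neural networks faster by normalizing each layer's input through re-centering and re-scaling:

$$\mu_B = \frac{1}{m}\sum_{i=1}^{m} x_i, \qquad \sigma_B^2 = \frac{1}{m}\sum_{i=1}^{m}(x_i - \mu_B)^2$$

$$\hat x_i = \frac{x_i - \mu_B}{\sqrt{\sigma_B^2 + \epsilon}}, \qquad y_i = \gamma \hat x_i + \beta$$

where:

- $\mu_B$ is the mean vector of the mini-batch

- $\sigma_B^2$ is the variance vector of the mini-batch

- $m$ is the batch size.

- $\gamma$, $\beta$ are the learnable scale and shift parameters.

- $\epsilon$ is a small constant to prevent division by zero.

At test time, moving averages of $\mu_B$ and $\sigma_B^2$ computed during training are used.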

Layer Normalization

Definition

Layer normalization (layer norm) normalizes the inputs across the features for each sample. In a fully connected layer, layer norm is applied across all the neurons in that layer for each input. In a convolutional layer, layer norm is applied across the channel dimension for each spatial position.
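A sketch contrasting the two normalizations for a fully connected layer, with the mini-batch stored as a list of samples, each a list of features (the shapes and the `eps` default are assumptions). Batch norm computes statistics per feature across the batch; layer norm computes them per sample across its features. The running averages that batch norm uses at test time are omitted.

```python
import math

def batch_norm(batch, gamma, beta, eps=1e-5):
    """Normalize each feature j over the m samples of the mini-batch, then scale and shift."""
    m, d = len(batch), len(batch[0])
    mu = [sum(x[j] for x in batch) / m for j in range(d)]
    var = [sum((x[j] - mu[j]) ** 2 for x in batch) / m for j in range(d)]
    return [[gamma[j] * (x[j] - mu[j]) / math.sqrt(var[j] + eps) + beta[j] for j in range(d)]
            for x in batch]

def layer_norm(batch, gamma, beta, eps=1e-5):
    """Normalize each sample over its own d features, then scale and shift."""
    out = []
    for x in batch:
        d = len(x)
        mu = sum(x) / d
        var = sum((xi - mu) ** 2 for xi in x) / d
        out.append([g * (xi - mu) / math.sqrt(var + eps) + b for xi, g, b in zip(x, gamma, beta)])
    return out

batch = [[1.0, 2.0, 3.0], [4.0, 6.0, 8.0], [0.0, 1.0, -1.0], [2.0, 2.0, 2.0]]  # m = 4, d = 3
ones, zeros = [1.0] * 3, [0.0] * 3
bn = batch_norm(batch, ones, zeros)
ln = layer_norm(batch, ones, zeros)
print([sum(row[j] for row in bn) / 4 for j in range(3)])  # per-feature means, all about 0
print([sum(row) / 3 for row in ln])                       # per-sample means, all about 0
```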


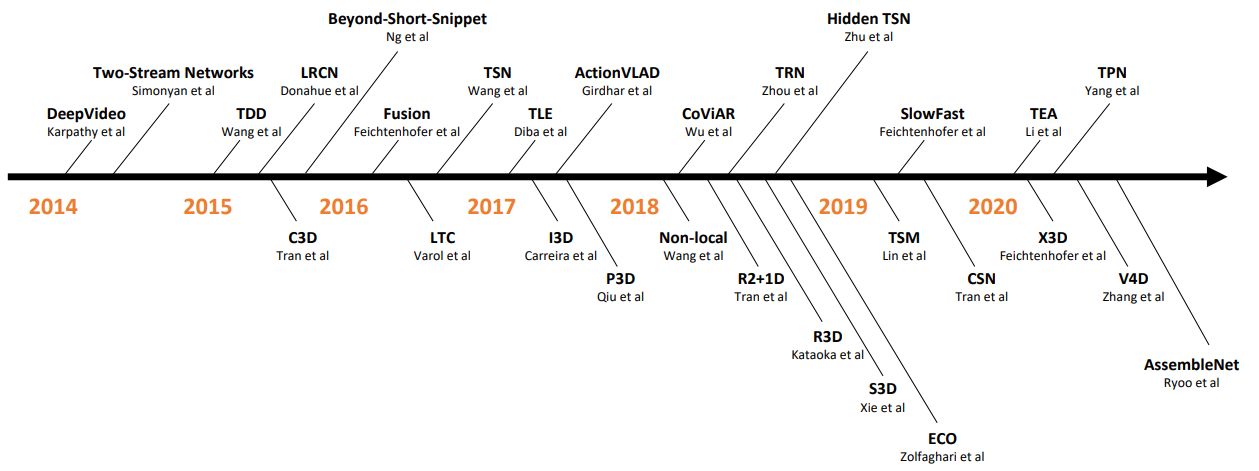

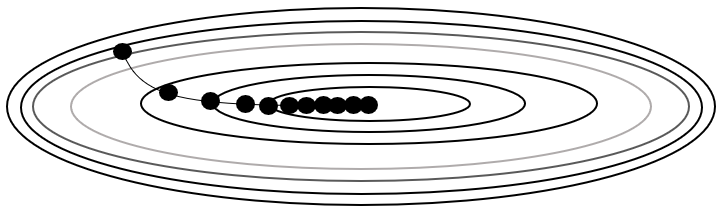

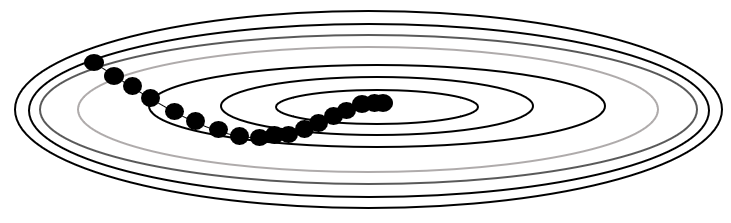

Action Recognition Models


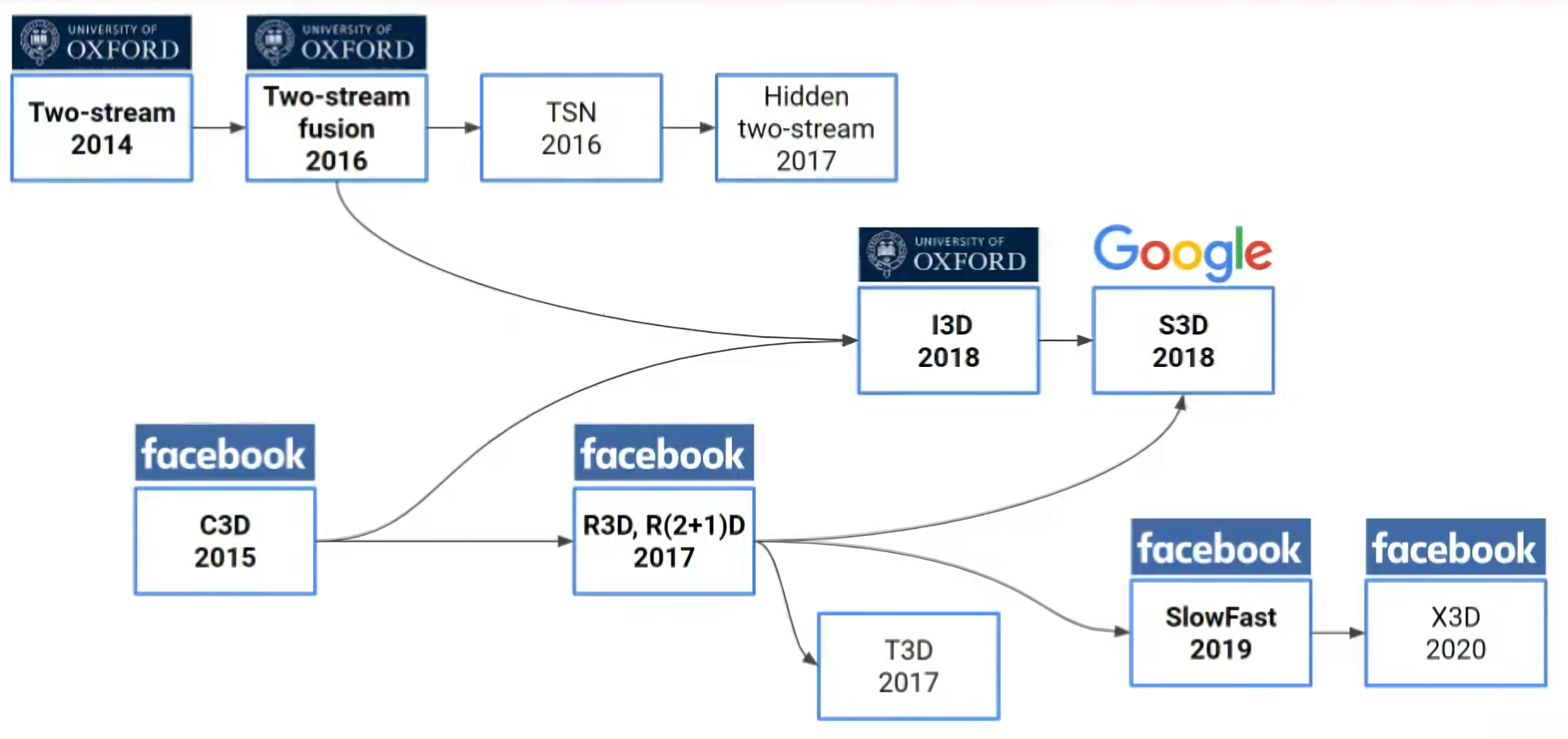

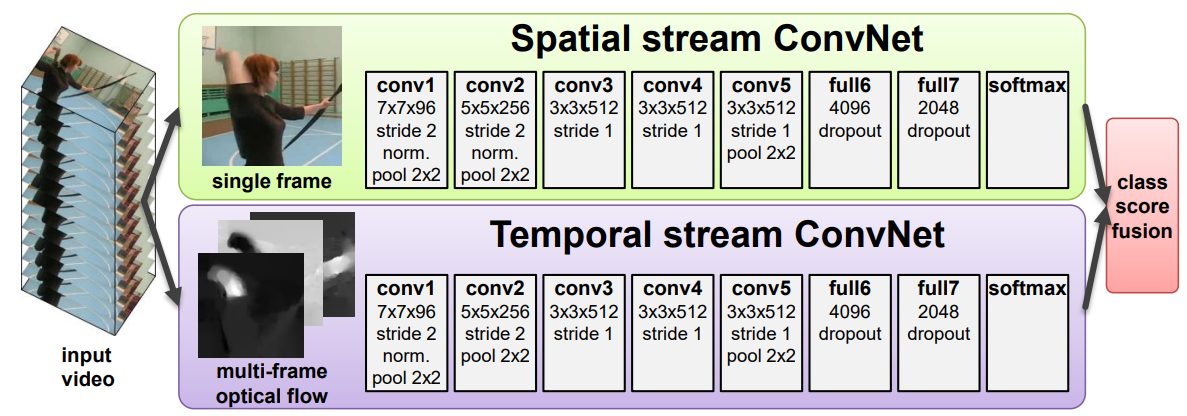

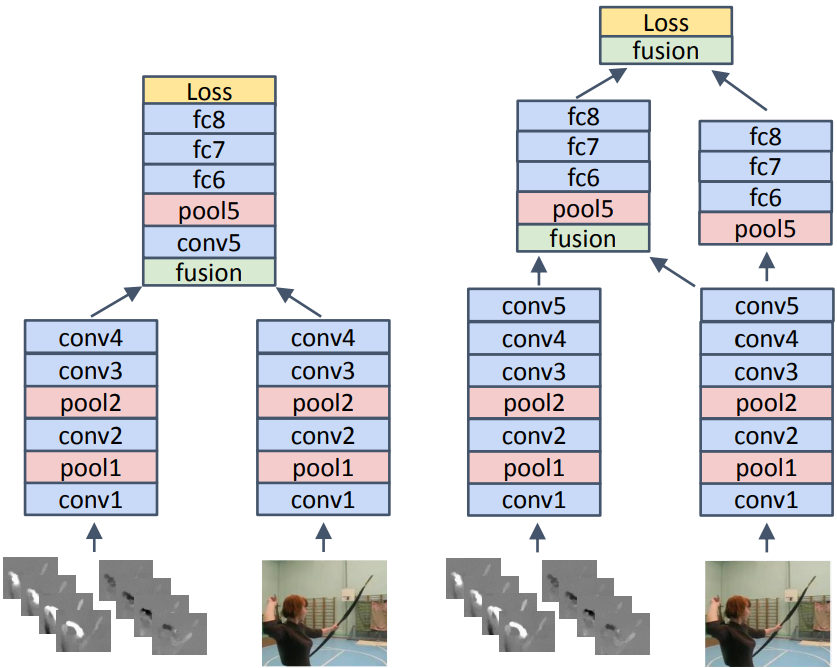

Two-Stream Models

Two-Stream Network

Definition

The two-stream network explicitly separates appearance (spatial) and motion (temporal) during training, and the features of the two streams are combined at the scoring stage. From the input video, the model takes a single frame and feeds it to the spatial stream, which has a CNN structure. The temporal (motion) stream is fed pre-computed optical flows, stacked along the channel dimension, to learn temporal dynamics independently of appearance.

Two-Stream Network Fusion

Definition

An improved version of the Two-Stream Network. Instead of fusing at the scoring step, the two streams are fused at an intermediate layer.

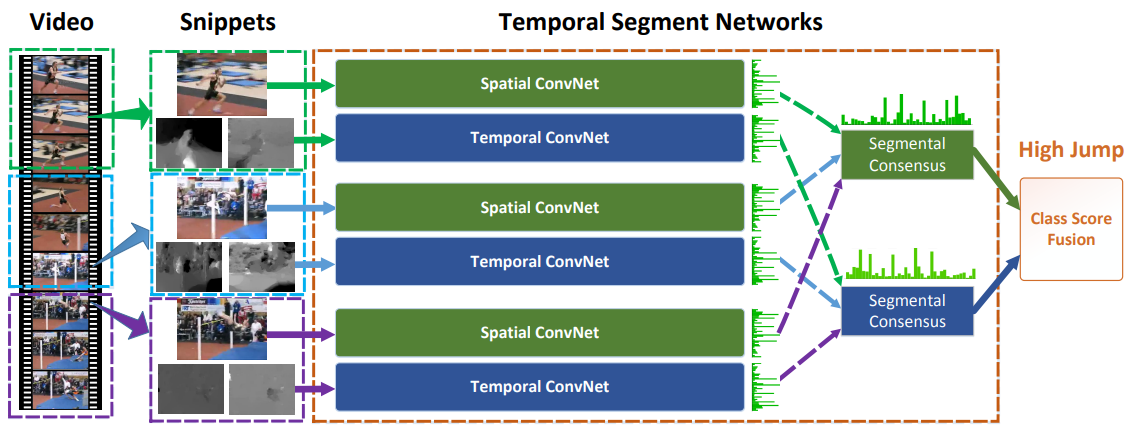

Temporal Segment Network

Definition

Temporal Segment Network (TSN) samples multiple frames across the video, rather than a single one, for better long-range temporal modeling. Batch Norm and Dropout are also utilized.

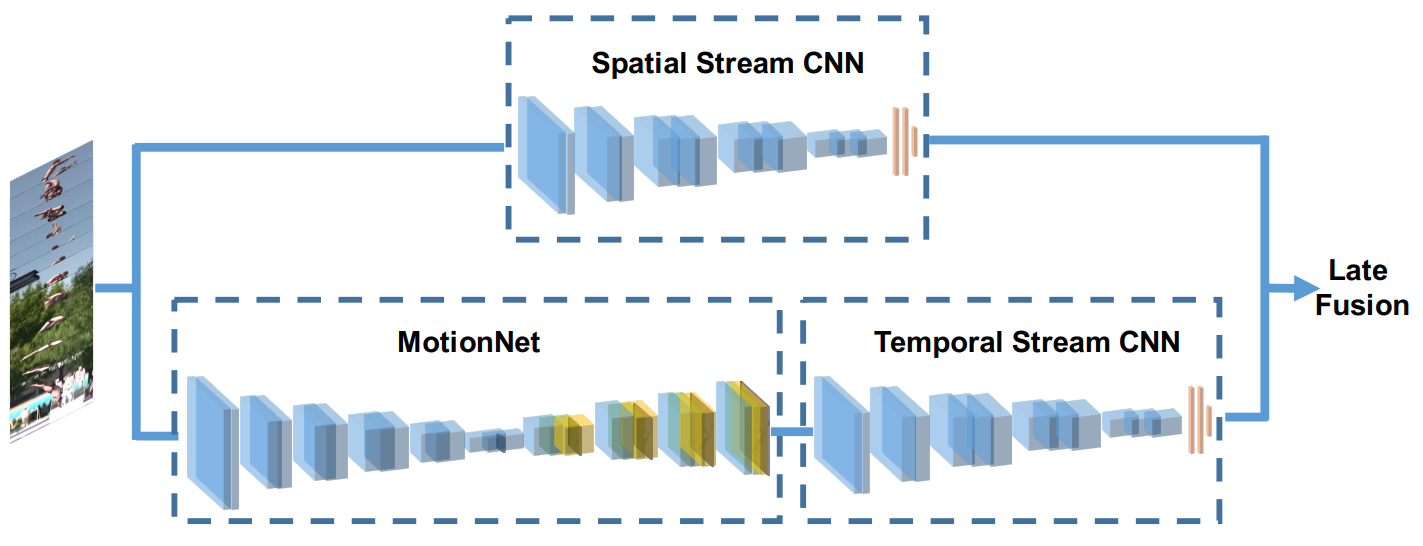

Hidden Two-Stream Network

Definition

The hidden two-stream network replaces the pre-computed optical flow used by the Two-Stream Network with a motion net that estimates optical flow.

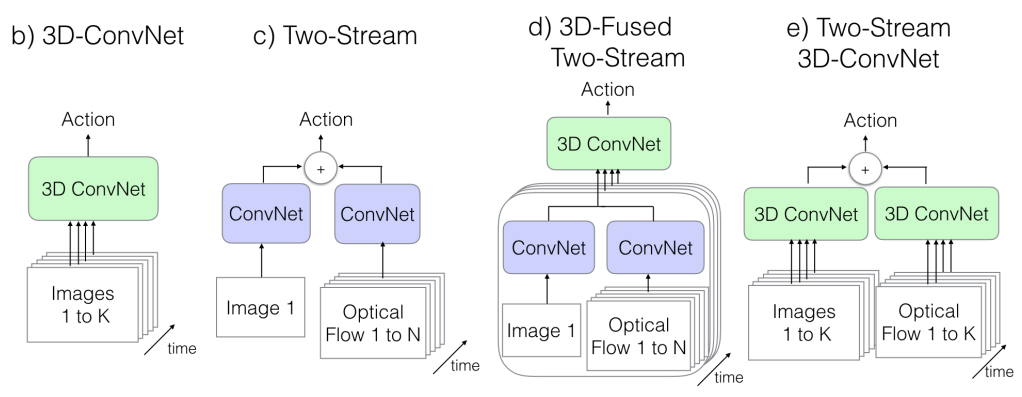

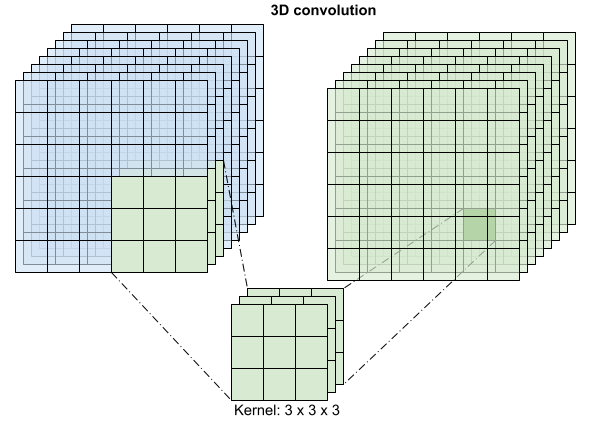

3D Convolutional Models

3D Convolution

Definition

3D convolution applies a 3D convolutional filter to a 3D input (depth, height, width). Similar to 2D convolution, the kernel slides across the input volume in all three dimensions, computing the Dot Product between the filter values and the input values at each position. The output of the convolution operation is a 3D feature map.

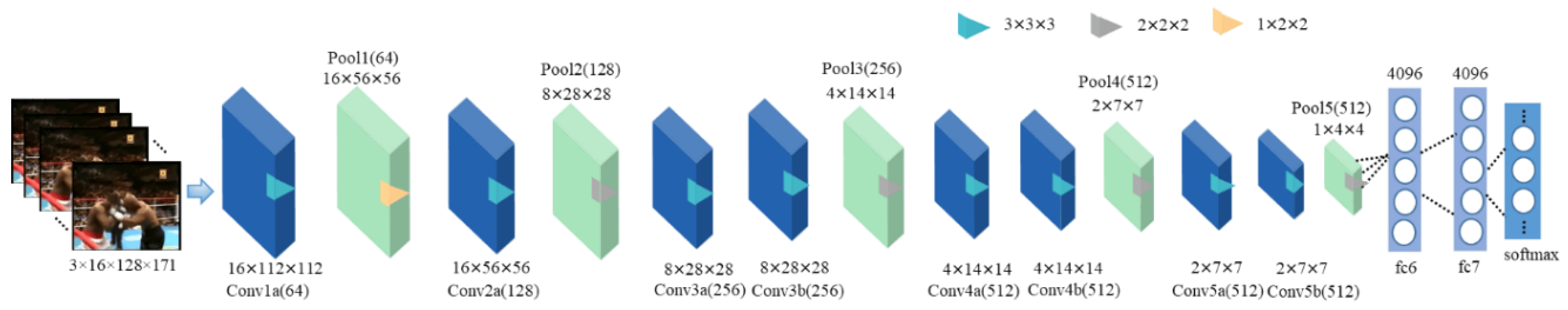

3D Convolutional Network

Definition

The 3D Convolutional Network (C3D) model applies 3D Convolution to the video volume.

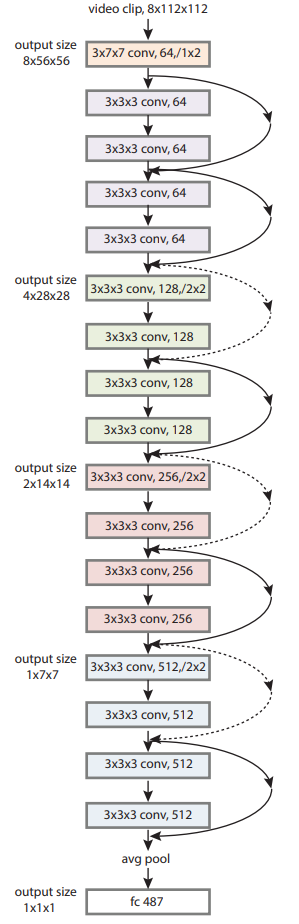

3D Residual Networks

Definition

The 3D Residual Networks (R3D) model applies the ResNet structure to the 3D Convolutional Network model.

(2+1)D Residual Networks

Definition

The (2+1)D Residual Networks (R(2+1)D) model decomposes each 3D convolution used in R3D into a 2D spatial convolution followed by a 1D temporal convolution.
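A parameter count (weights only) comparing a $t\times d\times d$ 3D convolution with a (2+1)D factorization: a $1\times d\times d$ spatial convolution into $M$ intermediate channels followed by a $t\times1\times1$ temporal convolution. Here $M = \lfloor t d^2 N_{in} N_{out} / (d^2 N_{in} + t N_{out}) \rfloor$ is an assumption of this sketch, chosen so that the two counts approximately match; the channel numbers are illustrative.

```python
def params_3d(t, d, n_in, n_out):
    """Weights of a t x d x d 3D convolution from n_in to n_out channels."""
    return t * d * d * n_in * n_out

def mid_channels(t, d, n_in, n_out):
    """Intermediate channel count M that keeps the (2+1)D block at about the same size."""
    return (t * d * d * n_in * n_out) // (d * d * n_in + t * n_out)

def params_2plus1d(t, d, n_in, n_out):
    m = mid_channels(t, d, n_in, n_out)
    spatial = d * d * n_in * m   # 1 x d x d convolution: n_in -> M channels
    temporal = t * m * n_out     # t x 1 x 1 convolution: M -> n_out channels
    return spatial + temporal

print(params_3d(3, 3, 64, 64), mid_channels(3, 3, 64, 64), params_2plus1d(3, 3, 64, 64))
print(params_3d(3, 3, 64, 128), params_2plus1d(3, 3, 64, 128))
```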

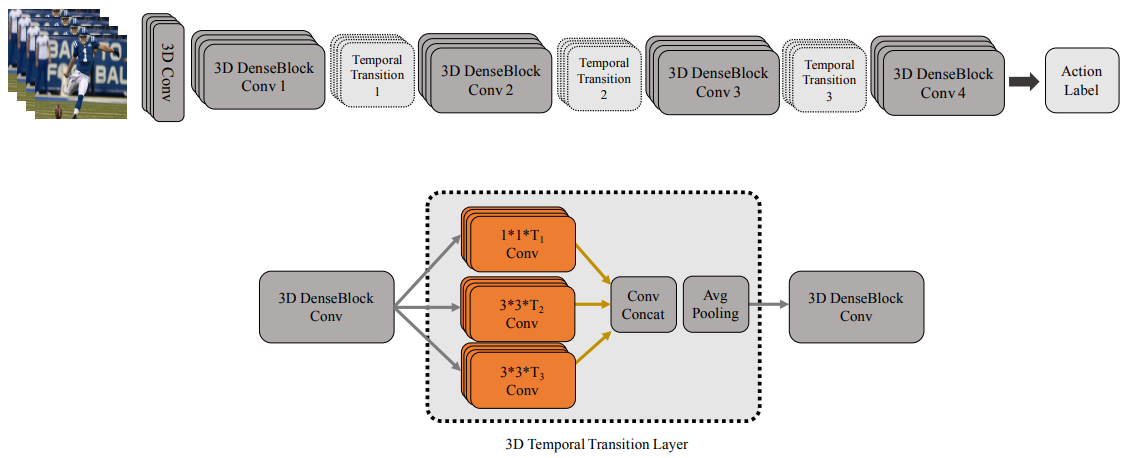

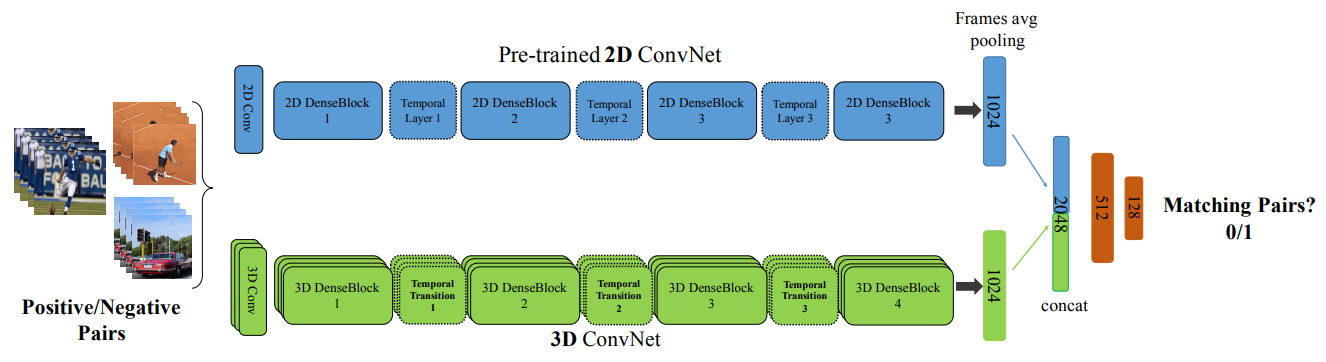

Temporal 3D ConvNet

Definition

The Temporal 3D ConvNet (T3D) applies the DenseNet structure to the 3D Convolutional Network model. The 3D Temporal Transition Layer, similar to the inception module of GoogLeNet, is stacked after a DenseBlock to capture different temporal lengths.

The model also uses a pre-trained 2D ConvNet as a teacher so that the 3D ConvNet learns mid-level feature representations through an image-video correspondence task. During this training, the parameters of the 2D ConvNet are frozen.

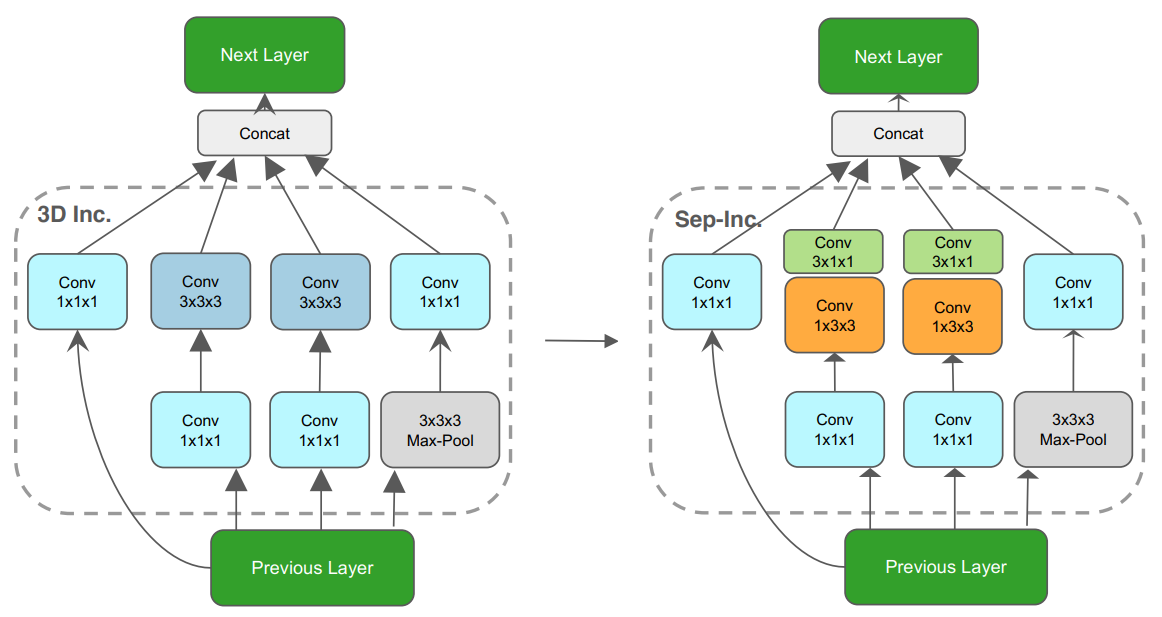

Two-Stream Inflated 3D ConvNet

Definition

The Two-Stream Inflated 3D ConvNet (I3D) combines the ideas of the Two-Stream Network and the 3D Convolutional Network. Both the spatial and temporal streams have a 3D Convolutional Network structure with an InceptionNet backbone.

Separable 3D CNN

Definition

The Separable 3D CNN (S3D) model decomposes the 3D inception block used in the Two-Stream Inflated 3D ConvNet into spatial and temporal convolutions.

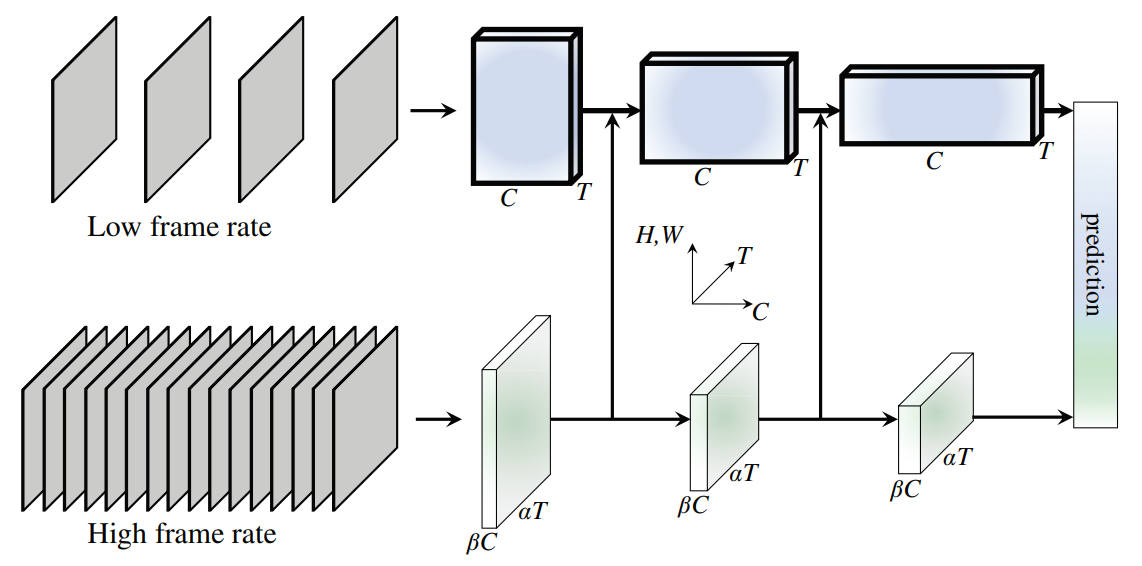

SlowFast Network

Definition

SlowFast Network has a two-stream structure, a slow pathway and a fast pathway, where both streams have a 3D Residual Networks structure. The slow pathway has a low frame rate and a large number of channels, and the fast pathway has a high frame rate and a small number of channels. Lateral connections across pathways allow each pathway to be aware of the representation learned by the other pathway.

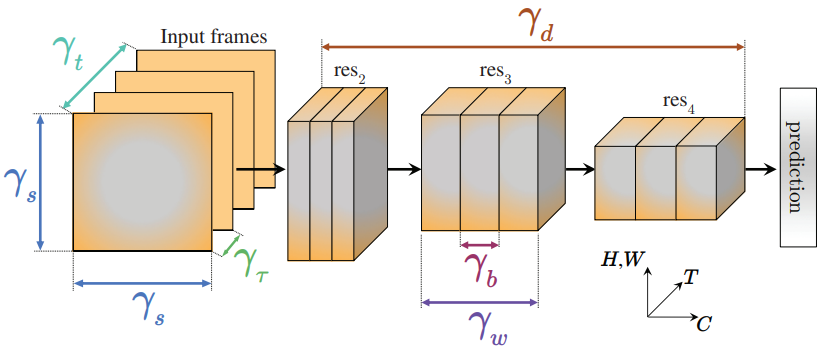

Expand 3D CNN

Definition

The Expand 3D CNN (X3D) model searches for the optimal parameters of 3D Residual Networks by progressively expanding a small base network along one axis at a time. It tunes six parameters:

- X-Fast ($\gamma_\tau$): the input frame rate (temporal resolution)

- X-Temporal ($\gamma_t$): the number of frames in the input

- X-Spatial ($\gamma_s$): the spatial resolution

- X-Depth ($\gamma_d$): the depth of the network

- X-Width ($\gamma_w$): the number of channels for all layers

- X-Bottleneck ($\gamma_b$): the inner channel width of the center convolutional filter in each residual block

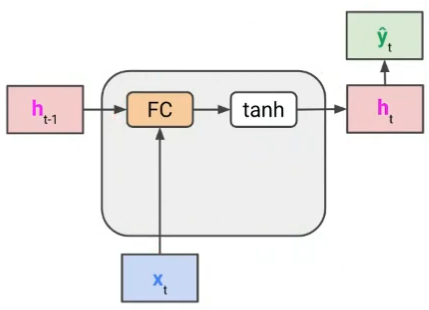

Recurrent Neural Networks

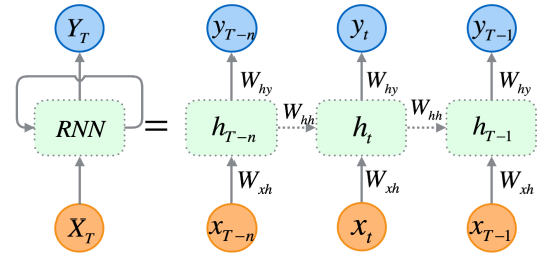

Recurrent Neural Network

Definition

Recurrent neural network (RNN) is a class of Neural Network for sequential data processing. RNNs maintain an internal (hidden) state, representing the semantics of the input sequence processed so far, which is updated at each step based on the current input and the previous hidden state. RNNs can process input sequences of any length, and the model size does not increase for longer inputs. However, because of the sequential structure, the computation cannot be parallelized across time steps and is slow, and RNNs suffer from the vanishing gradient problem in training.
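A minimal vanilla RNN sketch with a scalar input and a scalar hidden state (a simplification for readability; real RNNs use weight matrices). The update $h_t = \tanh(w_{xh}x_t + w_{hh}h_{t-1} + b)$ is the common Elman form, assumed here since the text does not fix one. The same three parameters process sequences of any length.

```python
import math

def rnn_forward(xs, w_xh, w_hh, b, h0=0.0):
    """Run h_t = tanh(w_xh * x_t + w_hh * h_{t-1} + b) over a sequence; return all hidden states."""
    h, states = h0, []
    for x in xs:
        h = math.tanh(w_xh * x + w_hh * h + b)  # same parameters at every time step
        states.append(h)
    return states

params = dict(w_xh=0.8, w_hh=0.5, b=0.1)
short_seq = rnn_forward([1.0, 0.5, -1.0], **params)
long_seq = rnn_forward([0.1] * 50, **params)
print(len(short_seq), len(long_seq), short_seq[-1])
```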

Applications

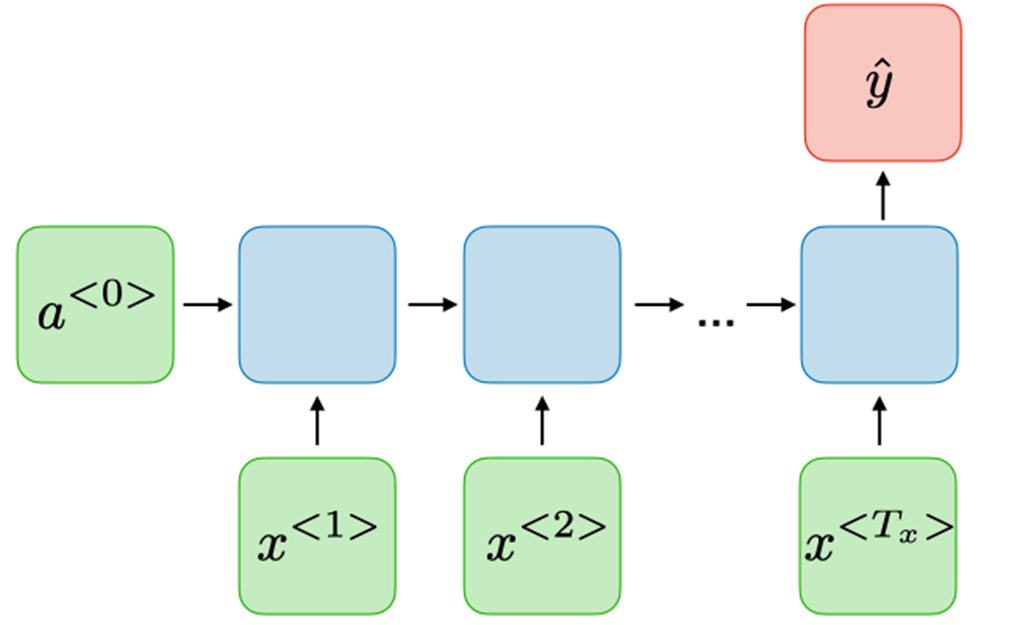

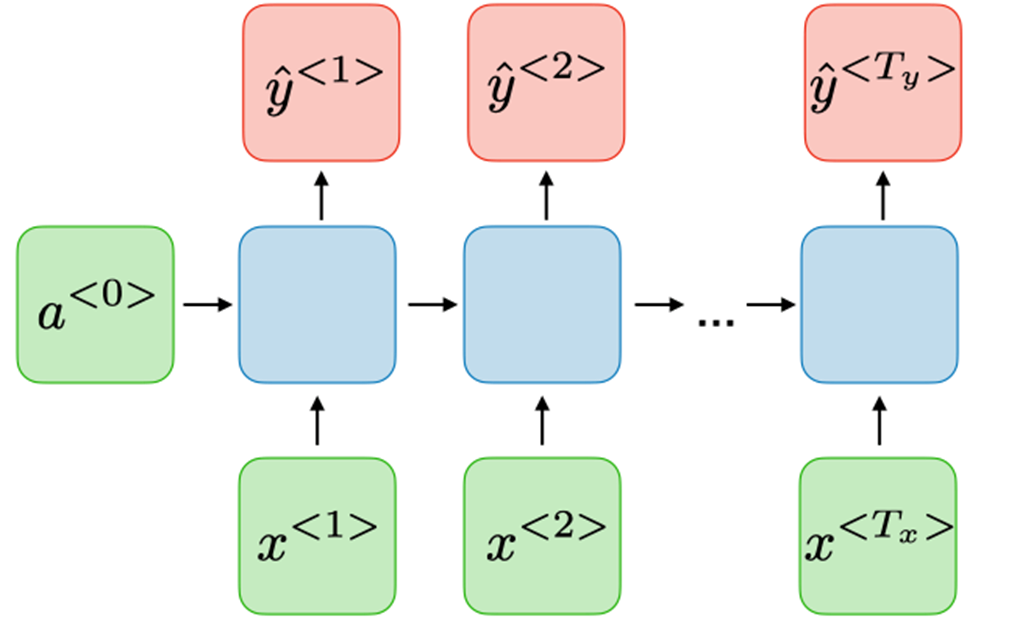

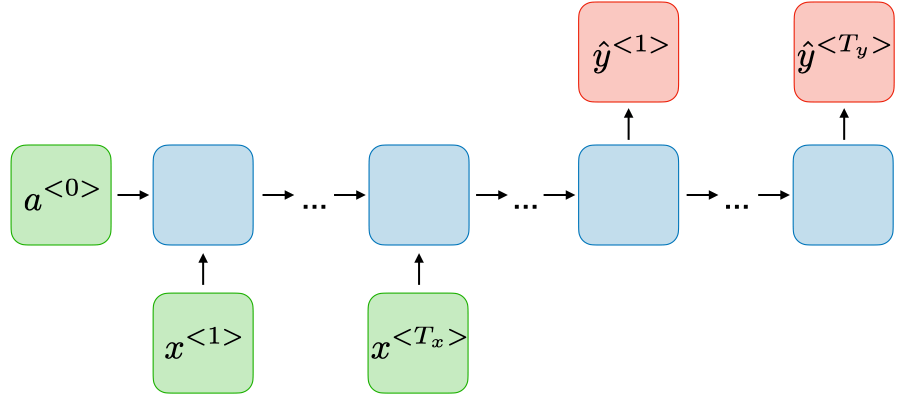

One-to-Many

Many-to-One

Many-to-Many

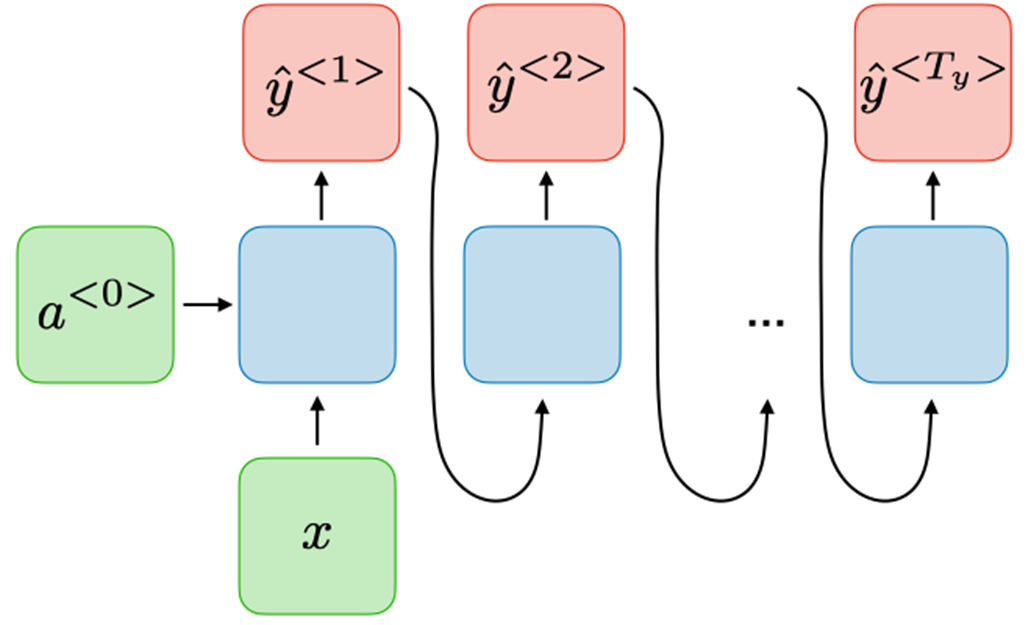

Sequence-to-Sequence


Long Short-Term Memory

Definition

Long short-term memory (LSTM) is a type of RNN aimed at dealing with the vanishing gradient problem. The model is composed of a cell, an input gate, an output gate, and a forget gate. The three gates regulate the flow of information into and out of the cell.

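Architecture

$$\begin{aligned} f_t &= \sigma(W_f x_t + U_f h_{t-1} + b_f) \\ i_t &= \sigma(W_i x_t + U_i h_{t-1} + b_i) \\ o_t &= \sigma(W_o x_t + U_o h_{t-1} + b_o) \\ \tilde c_t &= \tanh(W_c x_t + U_c h_{t-1} + b_c) \\ c_t &= f_t \odot c_{t-1} + i_t \odot \tilde c_t \\ h_t &= o_t \odot \tanh(c_t) \end{aligned}$$

where:

- $x_t$ is the input vector, $h_t$ is the hidden state (output) vector, and $c_t$ is the cell state vector

- $f_t$, $i_t$, $o_t$ are the forget, input, and output gate activations, and $\tilde c_t$ is the candidate cell state

- $W$, $U$, $b$ are learnable weight matrices and bias vectors, and $\sigma$ is the Sigmoid Function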
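A one-step sketch of the standard LSTM update (forget, input, and output gates, candidate $\tilde c_t$, then $c_t = f_t c_{t-1} + i_t \tilde c_t$ and $h_t = o_t \tanh c_t$), with scalar inputs and states so that every product is an ordinary multiplication. The parameter dictionary keys such as `"W_f"` are hypothetical names for $W$, $U$, $b$. The example saturates the forget gate open and the input gate closed to show the cell state being carried through unchanged.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, p):
    """One LSTM step with scalar x, h, c; p maps names like 'W_f' to scalar weights."""
    f = sigmoid(p["W_f"] * x + p["U_f"] * h_prev + p["b_f"])          # forget gate
    i = sigmoid(p["W_i"] * x + p["U_i"] * h_prev + p["b_i"])          # input gate
    o = sigmoid(p["W_o"] * x + p["U_o"] * h_prev + p["b_o"])          # output gate
    c_tilde = math.tanh(p["W_c"] * x + p["U_c"] * h_prev + p["b_c"])  # candidate cell state
    c = f * c_prev + i * c_tilde
    h = o * math.tanh(c)
    return h, c

params = {f"{kind}_{gate}": 0.0 for kind in ("W", "U", "b") for gate in "fioc"}
params["b_f"], params["b_i"] = 100.0, -100.0  # forget gate open, input gate closed
h, c = lstm_step(x=5.0, h_prev=0.3, c_prev=0.7, p=params)
print(h, c)  # c stays at 0.7
```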

Gated Recurrent Unit

Definition

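Gated recurrent unit (GRU) is like a Long Short-Term Memory with a gating mechanism to input or forget certain features, but it lacks a context vector (cell state) and an output gate, resulting in fewer parameters than LSTM.

Architecture

$$\begin{aligned} z_t &= \sigma(W_z x_t + U_z h_{t-1} + b_z) \\ r_t &= \sigma(W_r x_t + U_r h_{t-1} + b_r) \\ \hat h_t &= \tanh\big(W_h x_t + U_h (r_t \odot h_{t-1}) + b_h\big) \\ h_t &= (1 - z_t)\odot h_{t-1} + z_t \odot \hat h_t \end{aligned}$$

where:

- $z_t$ is the update gate and $r_t$ is the reset gate

- $\hat h_t$ is the candidate hidden state

- $W$, $U$, $b$ are learnable weight matrices and bias vectors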
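A matching one-step GRU sketch with scalar states, using the update $h_t = (1 - z_t)h_{t-1} + z_t\hat h_t$ with update gate $z_t$, reset gate $r_t$, and candidate $\hat h_t = \tanh(W_h x_t + U_h(r_t h_{t-1}) + b_h)$. The dictionary keys are hypothetical names, and the all-0.5 weights are arbitrary. With three gate blocks instead of four, it has 9 scalar parameters versus 12 for the scalar LSTM step.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def gru_step(x, h_prev, p):
    """One GRU step with scalar x and h; p maps names like 'W_z' to scalar weights."""
    z = sigmoid(p["W_z"] * x + p["U_z"] * h_prev + p["b_z"])              # update gate
    r = sigmoid(p["W_r"] * x + p["U_r"] * h_prev + p["b_r"])              # reset gate
    h_hat = math.tanh(p["W_h"] * x + p["U_h"] * (r * h_prev) + p["b_h"])  # candidate state
    return (1.0 - z) * h_prev + z * h_hat

params = {f"{kind}_{gate}": 0.5 for kind in ("W", "U", "b") for gate in "zrh"}
print(len(params))  # 9
h = 0.0
for x in [1.0, -1.0, 0.5]:
    h = gru_step(x, h, params)
print(h)
```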

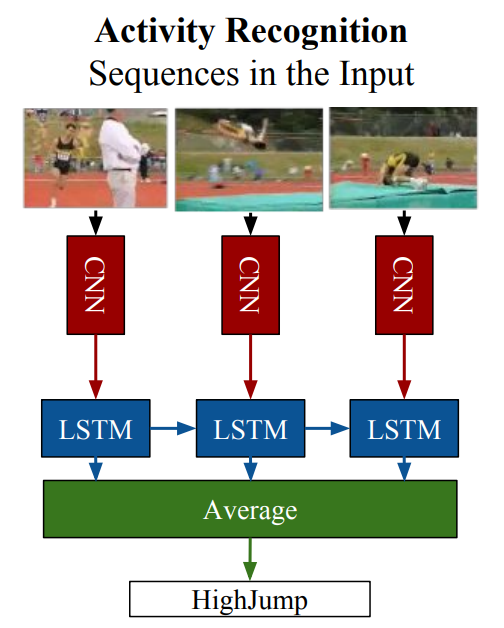

RNN-based Video Models

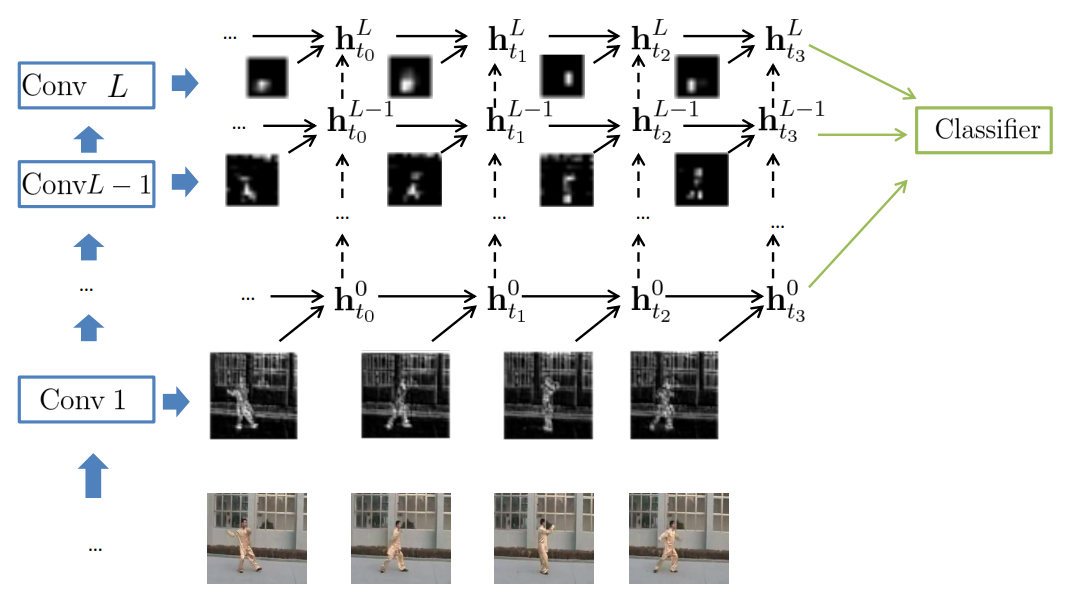

Long-Term Recurrent Convolutional Network

Definition

Long-term recurrent convolutional network (LRCN) is a direct application of the LSTM to action recognition: the CNN features of each frame are fed into the LSTM cell as input.

Beyond Short Snippets

Definition

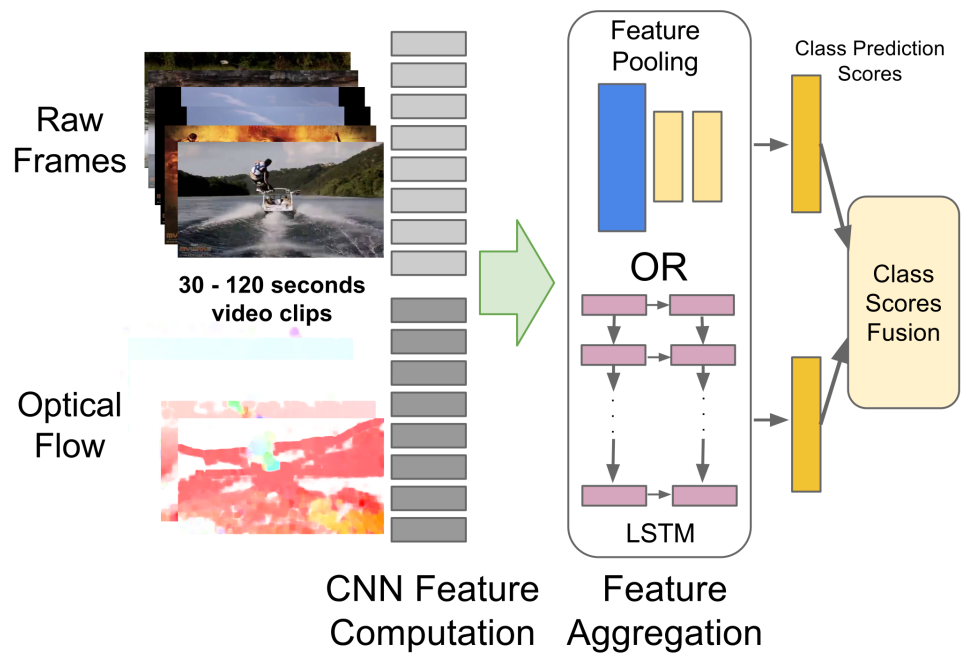

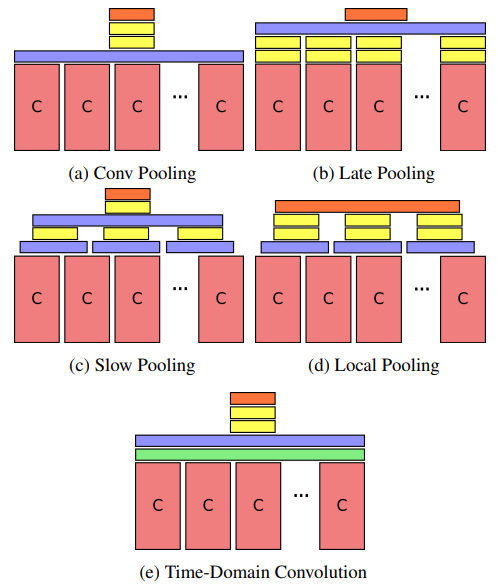

Beyond Short Snippets (BSS) uses optical flow in addition to the CNN features to better understand longer videos. For frame aggregation, both pooling and LSTM are considered.

Poolings


Fully-Connected LSTM

Definition

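Fully-connected LSTM introduces additional (peephole) connections from the cell state to the FC layers of each gate in the LSTM cell.

Architecture

$$\begin{aligned} i_t &= \sigma(W_{xi} x_t + W_{hi} h_{t-1} + W_{ci}\circ c_{t-1} + b_i) \\ f_t &= \sigma(W_{xf} x_t + W_{hf} h_{t-1} + W_{cf}\circ c_{t-1} + b_f) \\ c_t &= f_t \circ c_{t-1} + i_t \circ \tanh(W_{xc} x_t + W_{hc} h_{t-1} + b_c) \\ o_t &= \sigma(W_{xo} x_t + W_{ho} h_{t-1} + W_{co}\circ c_t + b_o) \\ h_t &= o_t \circ \tanh(c_t) \end{aligned}$$

where:

- $\circ$ denotes the Hadamard (element-wise) product

- $W_{ci}$, $W_{cf}$, $W_{co}$ are the weights of the connections from the cell state to the gates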

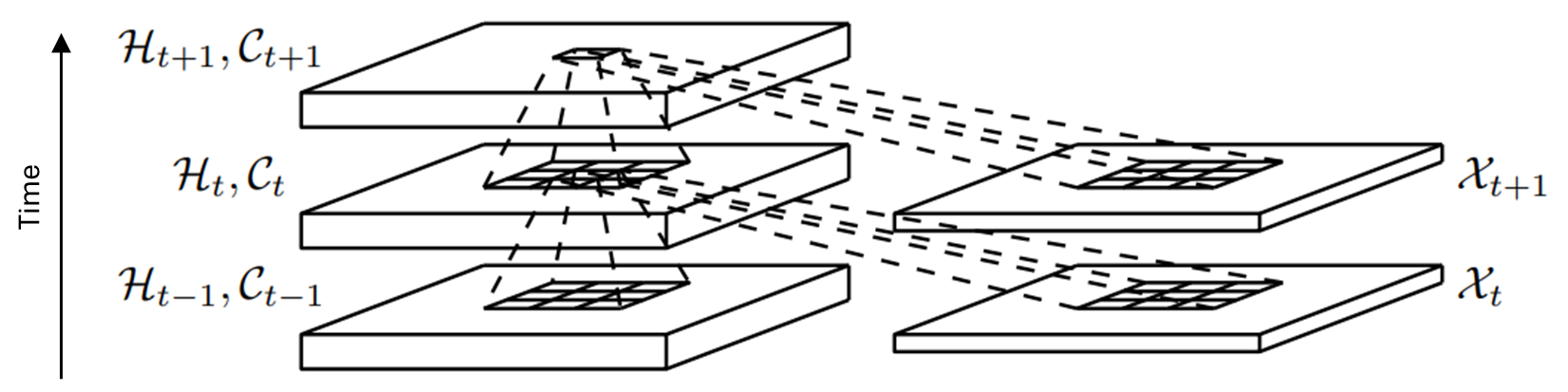

Convolutional LSTM

Definition

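The input and hidden state of convolutional LSTM have a matrix form (3D tensors with spatial dimensions), and the fully connected layers in the LSTM cell are substituted with convolutional layers.

$$\begin{aligned} i_t &= \sigma(W_{xi} * \mathcal{X}_t + W_{hi} * \mathcal{H}_{t-1} + W_{ci}\circ \mathcal{C}_{t-1} + b_i) \\ f_t &= \sigma(W_{xf} * \mathcal{X}_t + W_{hf} * \mathcal{H}_{t-1} + W_{cf}\circ \mathcal{C}_{t-1} + b_f) \\ \mathcal{C}_t &= f_t \circ \mathcal{C}_{t-1} + i_t \circ \tanh(W_{xc} * \mathcal{X}_t + W_{hc} * \mathcal{H}_{t-1} + b_c) \\ o_t &= \sigma(W_{xo} * \mathcal{X}_t + W_{ho} * \mathcal{H}_{t-1} + W_{co}\circ \mathcal{C}_t + b_o) \\ \mathcal{H}_t &= o_t \circ \tanh(\mathcal{C}_t) \end{aligned}$$

- where $*$ denotes convolution and $W_{x\cdot}$, $W_{h\cdot}$ are convolution filters.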

Convolutional GRU

Definition

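Convolutional GRU applies the idea of Convolutional LSTM to the Gated Recurrent Unit. When stacking multiple ConvGRU layers, each layer takes the previous layer's hidden state as an extra input.

$$\begin{aligned} z_t &= \sigma(W_z * x_t + U_z * h_{t-1}) \\ r_t &= \sigma(W_r * x_t + U_r * h_{t-1}) \\ \hat h_t &= \tanh\big(W * x_t + U * (r_t \odot h_{t-1})\big) \\ h_t &= (1 - z_t)\odot h_{t-1} + z_t \odot \hat h_t \end{aligned}$$

- where $W_z$, $W_r$, $W$, $U_z$, $U_r$, $U$ are convolution filters.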

Transformers

Attention

Attention

Definition

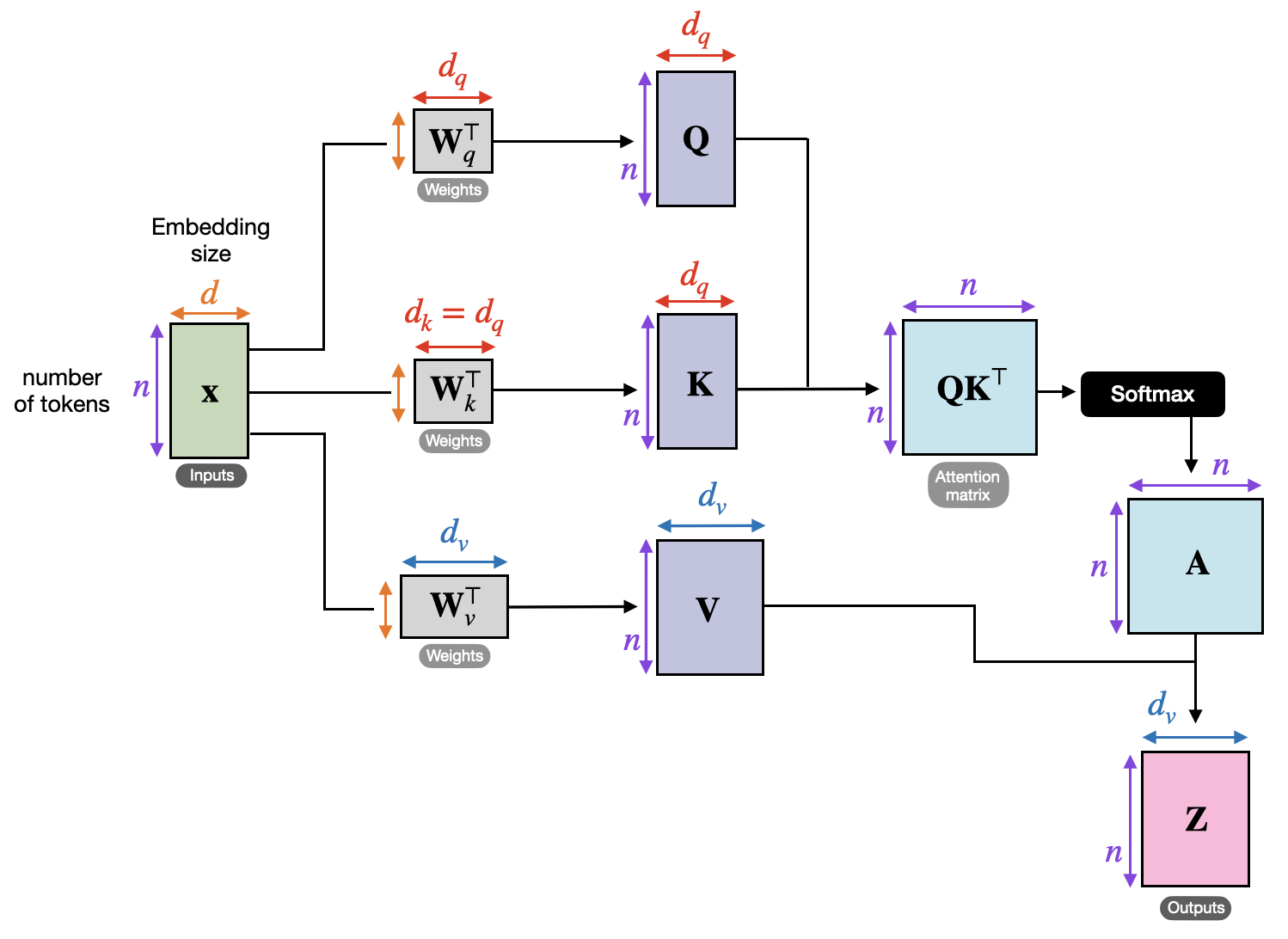

Attention is a method that determines the relative importance of each component in a sequence relative to the other components in that sequence.

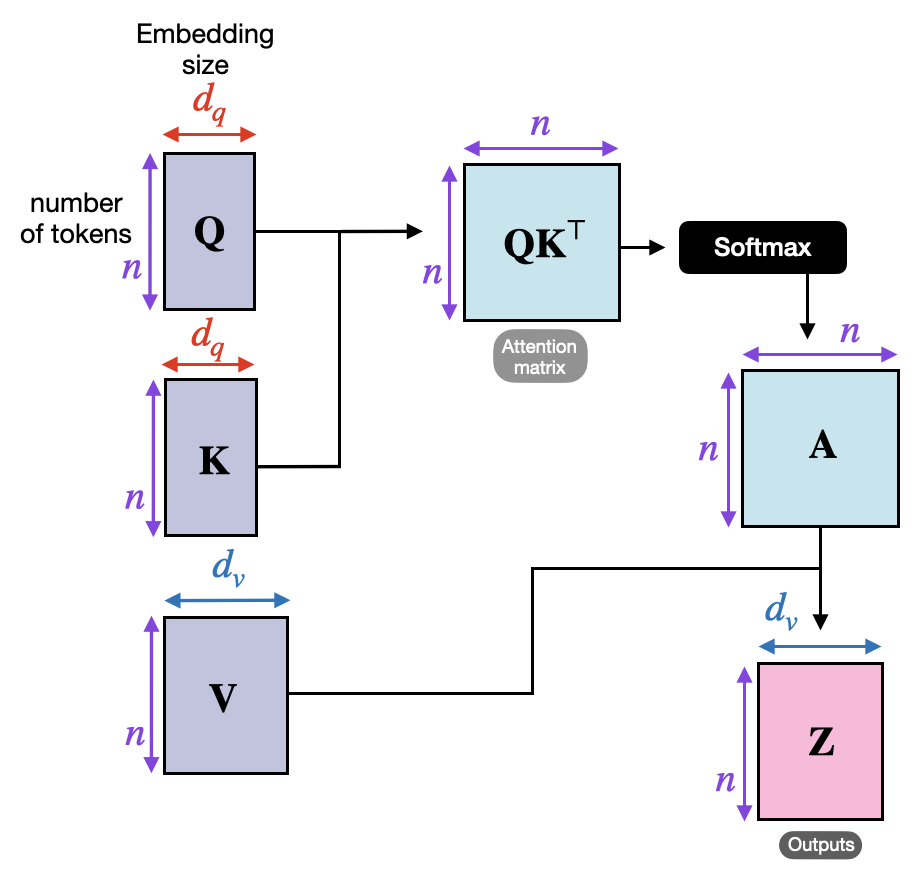

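The attention function is formulated as

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{QK^\top}{\sqrt{d_k}}\right)V$$

where Q (query) represents the current context, K (key) and V (value) represent the references, and $d_k$ is the dimension of the keys.

The attention value is a convex combination of the values, where each weight is obtained by applying softmax to the relevance (scaled dot product) between the query and the corresponding key, so more relevant keys receive larger weights.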
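A plain-Python sketch of scaled dot-product attention, $\mathrm{softmax}(QK^\top/\sqrt{d_k})V$, with matrices stored as lists of row vectors; the toy query, keys, and values are illustrative. Each weight row sums to 1, so each output is a convex combination of the value rows.

```python
import math

def softmax(row):
    m = max(row)
    e = [math.exp(v - m) for v in row]
    total = sum(e)
    return [v / total for v in e]

def attention(Q, K, V):
    """Scaled dot-product attention on lists of row vectors; returns (outputs, weights)."""
    d_k = len(K[0])
    scores = [[sum(q * k for q, k in zip(q_row, k_row)) / math.sqrt(d_k) for k_row in K] for q_row in Q]
    weights = [softmax(row) for row in scores]  # each row sums to 1
    # convex combination of the value rows
    outputs = [[sum(w * v_row[j] for w, v_row in zip(w_row, V)) for j in range(len(V[0]))]
               for w_row in weights]
    return outputs, weights

Q = [[1.0, 0.0]]                              # one query
K = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]     # three keys
V = [[1.0, 10.0], [2.0, 20.0], [3.0, 30.0]]   # their values
out, w = attention(Q, K, V)
print(w[0])    # the key aligned with the query gets the largest weight
print(out[0])
```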

Examples

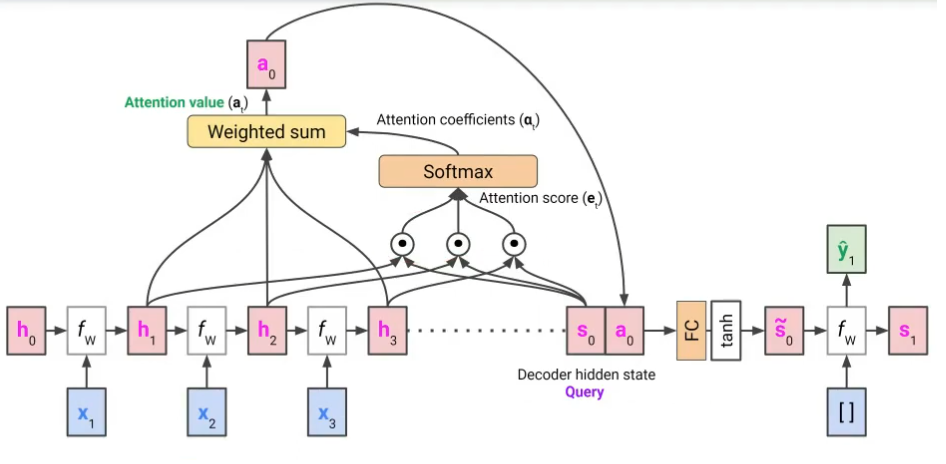

Seq-to-Seq with RNN


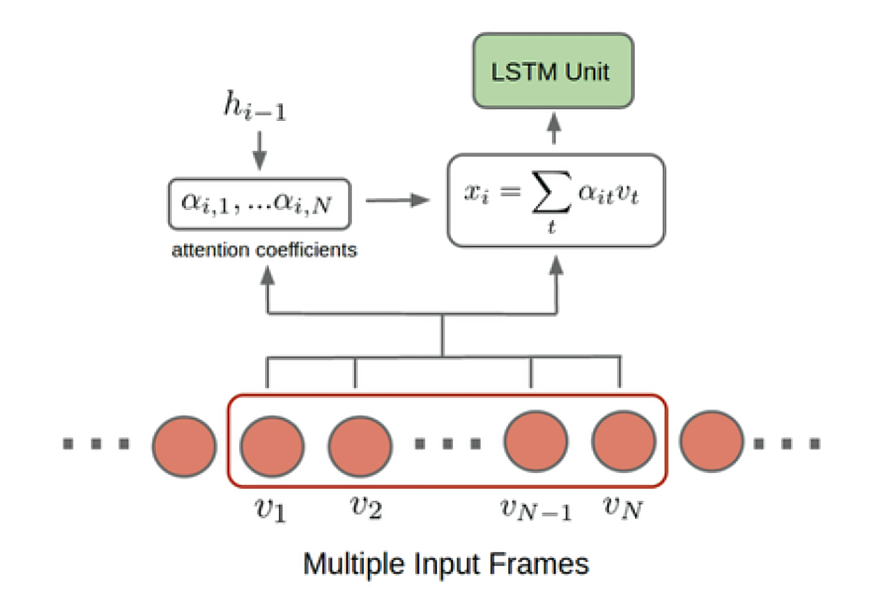

MultiLSTM

Definition

MultiLSTM is similar to the LRCN. Instead of taking a single input feature, the model applies Attention to the $N$ most recent input features. The previous hidden state of the LSTM is used as the query, and the $N$ most recent input features are used as the key and value.

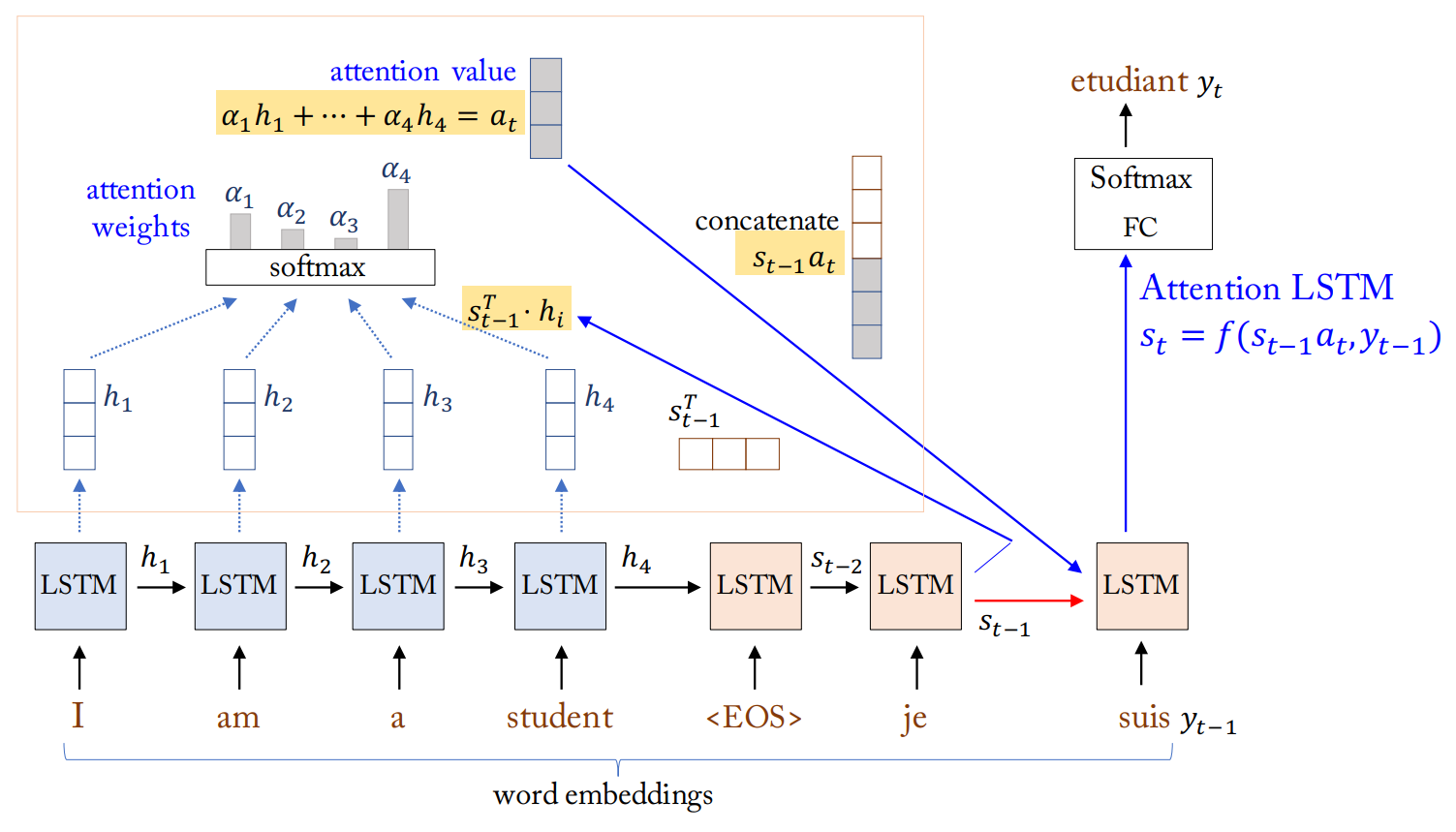

Attention LSTM

Definition

Attention LSTM is a variant of the LSTM architecture incorporating the Attention mechanism. In a sequence-to-sequence setting, the model uses Attention in the decoding stage: the previous hidden state of the decoder LSTM cell is used as the query, and the hidden states of the encoder LSTM cell are used as the key and value.

Visual Attention

Definition

The Visual Attention model applies Attention spatially: the hidden state of the LSTM (shape: 1, 1, channels) is used as the query, and the CNN feature map (shape: height, width, channels) is used as the key and value. The result of the attention (shape: 1, 1, channels) is used to compute the hidden state of the next step.

Word Embeddings

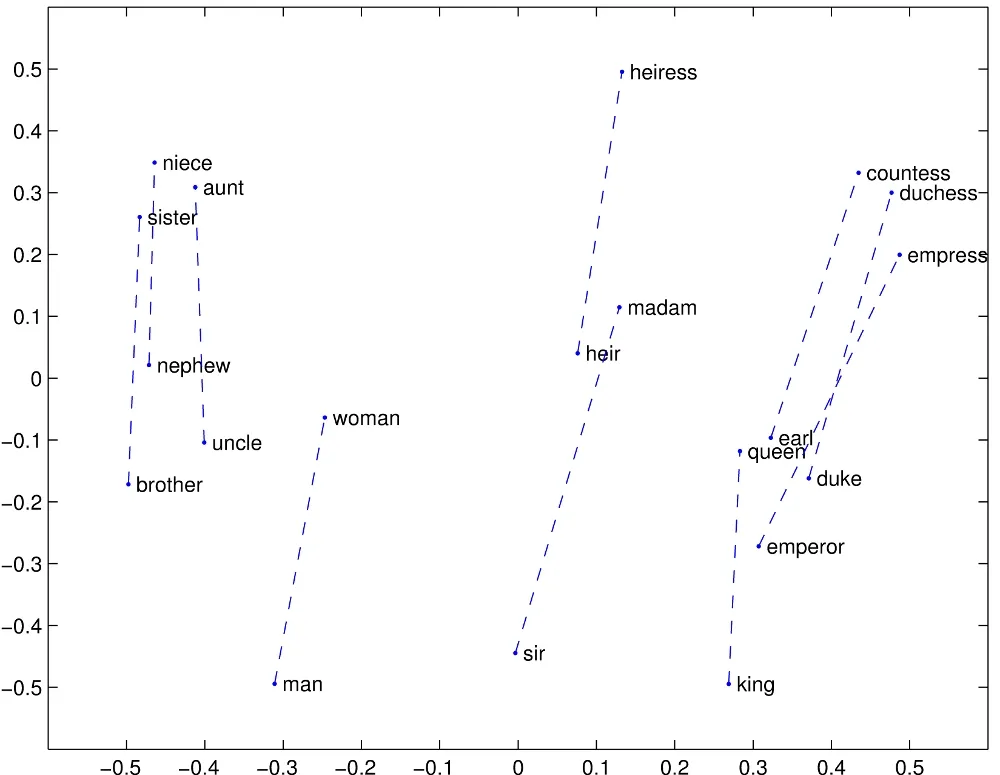

Word2Vec

Definition

The Word2Vec model learns vector representations of words that effectively capture the semantic relationships between them, using a large corpus of text.

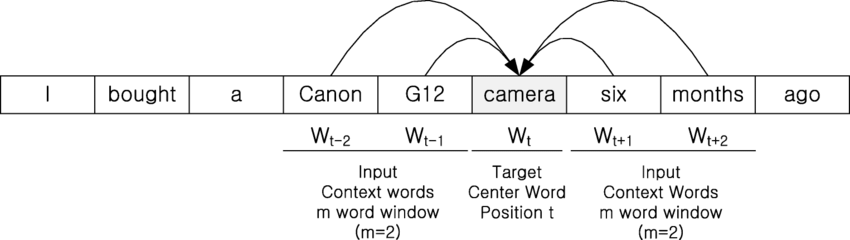

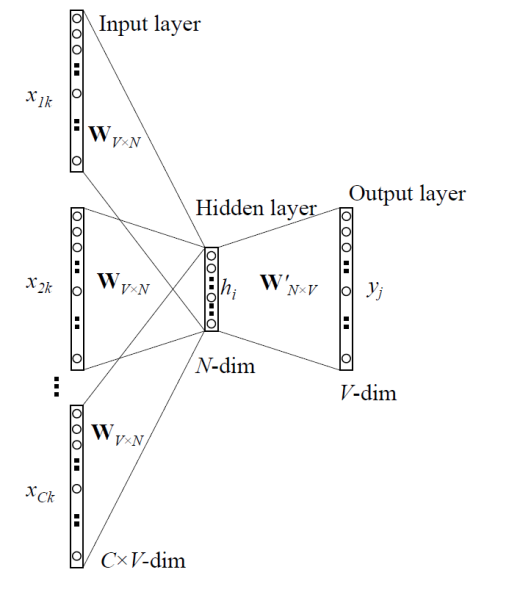

Continuous Bag-Of-Words

Continuous bag-of-words (CBOW) predicts a target word given its context words. The model takes a window of context words around a target word. The context words are fed into the network, and it tries to predict the target word. The model is trained to maximize the probability of the target word given the context words.

Algorithm

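- Generate one-hot encodings $x^{(c-m)}, \dots, x^{(c-1)}, x^{(c+1)}, \dots, x^{(c+m)} \in \mathbb{R}^{|V|}$ of the context words within a window of size $m$.

- Get the embedded word vectors $v_{c+j} = \mathcal{V}x^{(c+j)}$ using the linear transformation $\mathcal{V}\in\mathbb{R}^{n\times|V|}$, where the weight $\mathcal{V}$ is shared across the context positions, and take their average $\hat v = \frac{1}{2m}\sum_{-m\le j\le m,\ j\ne 0} v_{c+j}$.

- Generate a score vector $z = \mathcal{U}\hat v \in \mathbb{R}^{|V|}$ with $\mathcal{U}\in\mathbb{R}^{|V|\times n}$ and turn the scores into probabilities $\hat y = \mathrm{softmax}(z)$ with the Softmax Function.

- Adjust the weights $\mathcal{V}$ and $\mathcal{U}$ to match $\hat y$ to the one-hot encoding $y$ of the actual output word by minimizing the loss $H(\hat y, y) = -\sum_{j=1}^{|V|} y_j\log \hat y_j$, where $|V|$ is the dimension of the vectors, and $y_j$ is the $j$-th element of the one-hot encoded output word vector.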
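A forward-pass and loss sketch for one CBOW example, assuming the input embedding matrix is stored with one row per word (so multiplying by a one-hot vector becomes a row lookup) and the output matrix also has one row per word. The tiny vocabulary size, embedding dimension, and random initial weights are illustrative; training would follow by gradient descent on this loss.

```python
import math
import random

def cbow_forward(context_ids, target_id, V_in, U_out):
    """Return (cross-entropy loss, predicted distribution y_hat) for one CBOW example."""
    n = len(V_in[0])
    # one-hot x times the embedding matrix is a row lookup; average the context embeddings
    v_hat = [sum(V_in[c][j] for c in context_ids) / len(context_ids) for j in range(n)]
    # score vector z = U v_hat, one score per vocabulary word
    z = [sum(u[j] * v_hat[j] for j in range(n)) for u in U_out]
    m = max(z)
    log_norm = m + math.log(sum(math.exp(zi - m) for zi in z))  # log sum_j exp(z_j)
    y_hat = [math.exp(zi - log_norm) for zi in z]
    loss = log_norm - z[target_id]  # -sum_j y_j log y_hat_j with a one-hot y
    return loss, y_hat

rng = random.Random(0)
vocab_size, dim = 6, 4
V_in = [[rng.gauss(0, 0.1) for _ in range(dim)] for _ in range(vocab_size)]
U_out = [[rng.gauss(0, 0.1) for _ in range(dim)] for _ in range(vocab_size)]
loss, y_hat = cbow_forward(context_ids=[0, 1, 3, 4], target_id=2, V_in=V_in, U_out=U_out)
print(loss, sum(y_hat))
```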

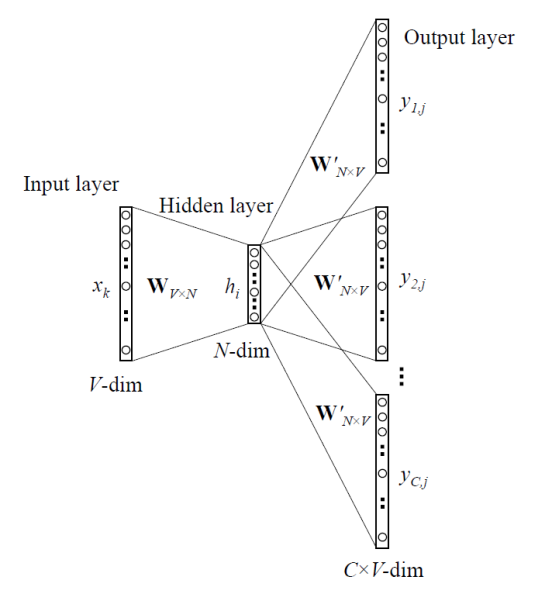

Skip-Gram

The Skip-gram model does the opposite of CBOW: it predicts the context words given a target word. The model takes a single target word as input. The target word is fed into the network, and it tries to predict the surrounding context words. The model is trained to maximize the probability of each context word given the target word.

Algorithm

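- Generate the one-hot encoding $x\in\mathbb{R}^{|V|}$ of the input word

- Get an embedded word vector $v_c = \mathcal{V}x$ using the linear transformation $\mathcal{V}$

- Generate a score vector $z = \mathcal{U}v_c$ and turn the scores into probabilities $\hat y = \mathrm{softmax}(z)$ with the Softmax Function

- Adjust the weights $\mathcal{V}$ and $\mathcal{U}$ to match $\hat y$ to the one-hot encodings $y^{(c-m)}, \dots, y^{(c+m)}$ of the actual context words by minimizing the loss $-\sum_{-m\le j\le m,\ j\ne 0}\ \sum_{k=1}^{|V|} y^{(c+j)}_k \log \hat y_k$, where $|V|$ is the dimension of the vectors, and $y^{(c+j)}_k$ is the $k$-th element of the one-hot encoded vector of the $j$-th context word.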

Noise Contrastive Estimation

Definition

Noise contrastive estimation (NCE) transforms the problem of density estimation into binary classification between data samples and noise samples. Suppose a sample of data points follows an unknown probability distribution $p_d$, which is modeled by a parametric family $p_m(\cdot\,;\theta)$. NCE is used to find an estimator $\hat\theta$ that best approximates the true parameter $\theta^*$. Although MLE has good properties, it requires the model $p_m$ to be normalized during the calculation. NCE also finds the estimator by maximizing an objective function (as in MLE), but without the need for normalization: it treats the normalization constant as another parameter to estimate. This is a desirable property, since the normalization constant may be difficult or expensive to calculate.

The idea is to pollute the sample with noise, i.e., data points that come from a known distribution $p_n$, and to perform nonlinear logistic regression to discriminate between data and noise.

Consider a data sample $X = (x_1, \dots, x_{T_d})$, a noise sample $Y = (y_1, \dots, y_{T_n})$, and the union of the two samples $U = X \cup Y = (u_1, \dots, u_{T_d + T_n})$. A binary class label $C_t$ is assigned to each $u_t$, where $C_t = 1$ if $u_t \in X$ and $C_t = 0$ if $u_t \in Y$.

By the definition of the labels, $p(u \mid C = 1; \theta) = p_m(u;\theta)$ and $p(u \mid C = 0) = p_n(u)$. By the Bayes Theorem,

$$P(C = 1 \mid u;\theta) = \frac{p_m(u;\theta)}{p_m(u;\theta) + \nu\, p_n(u)}, \qquad P(C = 0 \mid u;\theta) = \frac{\nu\, p_n(u)}{p_m(u;\theta) + \nu\, p_n(u)}$$

where $\nu = T_n / T_d$ is the ratio of noise samples to data samples. The log-likelihood function of the binary classification problem is derived as

$$\ell(\theta) = \sum_{t=1}^{T_d} \log P(C = 1 \mid x_t;\theta) + \sum_{t=1}^{T_n} \log P(C = 0 \mid y_t;\theta)$$

The NCE estimator is obtained by maximizing the objective function (log-likelihood) with respect to $\theta$.
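A one-dimensional sketch of the NCE objective, with everything below an illustrative assumption: data from $\mathcal{N}(1, 1)$, noise from a known $\mathcal{N}(0, 2^2)$ with $\nu = 1$, and an unnormalized model $\log p_m(u;\theta) = -\tfrac12(u-\mu)^2 + c$ whose parameter $c$ stands in for the unknown log normalizer. It uses Bayes' theorem in the form $P(C=1\mid u;\theta) = p_m/(p_m + \nu p_n) = \sigma\big(\log p_m(u;\theta) - \log(\nu\,p_n(u))\big)$. Maximizing over $c$ should land near the true log normalizer $-\tfrac12\log 2\pi \approx -0.919$, without ever computing a normalizing integral.

```python
import math
import random

def log_normal_pdf(u, mean, std):
    return -0.5 * ((u - mean) / std) ** 2 - math.log(std * math.sqrt(2.0 * math.pi))

def log_sigmoid(a):
    # numerically stable log(1 / (1 + exp(-a)))
    return -math.log1p(math.exp(-a)) if a >= 0 else a - math.log1p(math.exp(a))

def nce_objective(mu, c, data, noise, noise_mean=0.0, noise_std=2.0):
    nu = len(noise) / len(data)
    def G(u):  # log p_m(u; theta) - log(nu * p_n(u)), so P(C=1|u) = sigmoid(G(u))
        return (-0.5 * (u - mu) ** 2 + c) - math.log(nu) - log_normal_pdf(u, noise_mean, noise_std)
    return sum(log_sigmoid(G(x)) for x in data) + sum(log_sigmoid(-G(y)) for y in noise)

rng = random.Random(0)
data = [rng.gauss(1.0, 1.0) for _ in range(2000)]
noise = [rng.gauss(0.0, 2.0) for _ in range(2000)]

# Crude grid search over the normalization parameter c, with mu fixed at its true value
grid = [i / 20 for i in range(-40, 1)]  # c in [-2.0, 0.0]
best_c = max(grid, key=lambda c: nce_objective(1.0, c, data, noise))
print(best_c)  # close to -0.919
print(nce_objective(1.0, -0.919, data, noise) > nce_objective(3.0, -0.919, data, noise))
```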

Examples

Word Embedding (Skip-Gram Model)

In the context of word embeddings, particularly the Skip-gram model, the unknown distribution becomes $p_\theta(c \mid w)$, the probability of a context word $c$ given an input word $w$. Here $\theta$ represents the parameters of the word embedding model.

The noise distribution $p_n(c) = \dfrac{\#(c)^{3/4}}{\sum_{c'} \#(c')^{3/4}}$, where $\#(c)$ is the count of word $c$ in the corpus, is the unigram distribution of contexts raised to the power of $3/4$ to balance frequent and rare words.

For each word-context pair $(w, c)$ from the true data, we sample $k$ negative examples $\tilde c_1, \dots, \tilde c_k$ from $p_n$. The NCE objective function is derived as:

$$J(\theta) = \sum_{(w,c)\in D}\left[\log\frac{p_\theta(c\mid w)}{p_\theta(c\mid w) + k\,p_n(c)} + \sum_{i=1}^{k}\log\frac{k\,p_n(\tilde c_i)}{p_\theta(\tilde c_i\mid w) + k\,p_n(\tilde c_i)}\right]$$

where $D$ is the set of observed word-context pairs, and $k$ is the number of noise samples per data sample.

In practice, $p_\theta(c \mid w)$ is often modeled as $\exp(u_c^\top v_w)$, without explicit normalization. By maximizing this objective, we obtain word embeddings (vectors $v_w$ and $u_c$) that can discriminate between true context words and random noise words, effectively capturing semantic relationships in the vector space.

Negative Sampling

Definition

Negative sampling (NS) is a simplified version of NCE. It shares the core idea of transforming the problem of density estimation into binary classification between true data samples and noise samples.

In the context of word embeddings, particularly the Skip-gram model, NS aims to learn good word representations without the need to estimate a full probability distribution. The goal is to find parameters $\theta$ that best represent the relationship between words and their contexts. Given a word-context pair $(w, c)$ from the true data distribution, NS samples $k$ negative examples (noise) from a noise distribution $p_n$. The objective is to maximize the probability of the true pair while minimizing the probability of the noise pairs.

NS models the probability that a pair comes from the true data with the Sigmoid Function:

$$P(C = 1 \mid w, c) = \sigma(u_c^\top v_w)$$

The NS objective function is derived as:

$$J(\theta) = \sum_{(w,c)\in D}\left[\log\sigma(u_c^\top v_w) + \sum_{\tilde c\in N(w)}\log\sigma(-u_{\tilde c}^\top v_w)\right]$$

where $N(w)$ is the set of $k$ negative samples drawn from the noise distribution.

The NS objective function is similar to the Skip-gram objective but replaces the expensive Softmax Function with a simpler binary classification task between true and noise samples.
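A sketch of the per-pair negative-sampling objective $\log\sigma(u_c^\top v_w) + \sum_{\tilde c}\log\sigma(-u_{\tilde c}^\top v_w)$ and of a unigram noise distribution raised to the $3/4$ power, $p_n(c) \propto \#(c)^{3/4}$. The vectors and word counts are made-up illustrative values, and the function names are hypothetical.

```python
import math

def log_sigmoid(a):
    return -math.log1p(math.exp(-a)) if a >= 0 else a - math.log1p(math.exp(a))

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def ns_objective(v_w, u_c, u_negatives):
    """log sigma(u_c . v_w) + sum over negatives of log sigma(-u_neg . v_w); to be maximized."""
    return log_sigmoid(dot(u_c, v_w)) + sum(log_sigmoid(-dot(u, v_w)) for u in u_negatives)

def noise_distribution(counts, power=0.75):
    """Unigram distribution raised to `power` and renormalized."""
    weights = {word: n ** power for word, n in counts.items()}
    total = sum(weights.values())
    return {word: w / total for word, w in weights.items()}

v_w = [1.0, 0.5]
good = ns_objective(v_w, u_c=[1.0, 0.5], u_negatives=[[-1.0, 0.0], [0.0, -1.0]])
bad = ns_objective(v_w, u_c=[-1.0, 0.0], u_negatives=[[1.0, 0.5], [0.0, 1.0]])
print(good, bad)  # the aligned pair scores higher (closer to 0)

p_n = noise_distribution({"the": 1000, "cat": 10})
print(p_n["cat"], 10 / 1010)  # the rare word gets more mass than its raw frequency
```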

Global Vectors for Word Representation

Definition

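The global vectors for word representation (GloVe) model learns word embedding vectors such that their Dot Product (plus bias terms) approximates the logarithm of the words' co-occurrence:

$$J = \sum_{i,j=1}^{|V|} f(X_{ij})\left(w_i^\top \tilde w_j + b_i + \tilde b_j - \log X_{ij}\right)^2$$

where $w_i$ and $\tilde w_j$ are word vectors, $b_i$ and $\tilde b_j$ are biases, $X_{ij}$ is the co-occurrence count between words $i$ and $j$, and $f$ is a weighting function that limits the influence of very frequent pairs.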
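A sketch of the GloVe objective $\sum f(X_{ij})(w_i^\top\tilde w_j + b_i + \tilde b_j - \log X_{ij})^2$ with the weighting $f(x) = (x/x_{\max})^{3/4}$ for $x < x_{\max}$ and $1$ otherwise, with $x_{\max} = 100$ (the setting used in the GloVe paper; treat both as tunable). The tiny co-occurrence table and vectors are invented for illustration.

```python
import math

def glove_weight(x, x_max=100.0, alpha=0.75):
    """f(x) = (x / x_max)^alpha for x < x_max, else 1."""
    return (x / x_max) ** alpha if x < x_max else 1.0

def glove_loss(W, W_ctx, b, b_ctx, X):
    """X maps (i, j) to a co-occurrence count; zero counts are skipped."""
    loss = 0.0
    for (i, j), x in X.items():
        if x <= 0:
            continue
        pred = sum(a * c for a, c in zip(W[i], W_ctx[j])) + b[i] + b_ctx[j]
        loss += glove_weight(x) * (pred - math.log(x)) ** 2
    return loss

X = {(0, 1): 50.0, (1, 0): 50.0, (0, 0): 200.0}
W = [[0.5, 0.1], [0.2, -0.3]]
W_ctx = [[0.4, 0.0], [0.1, 0.2]]
print(glove_loss(W, W_ctx, [0.0, 0.0], [0.0, 0.0], X))
```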

Transformer Models

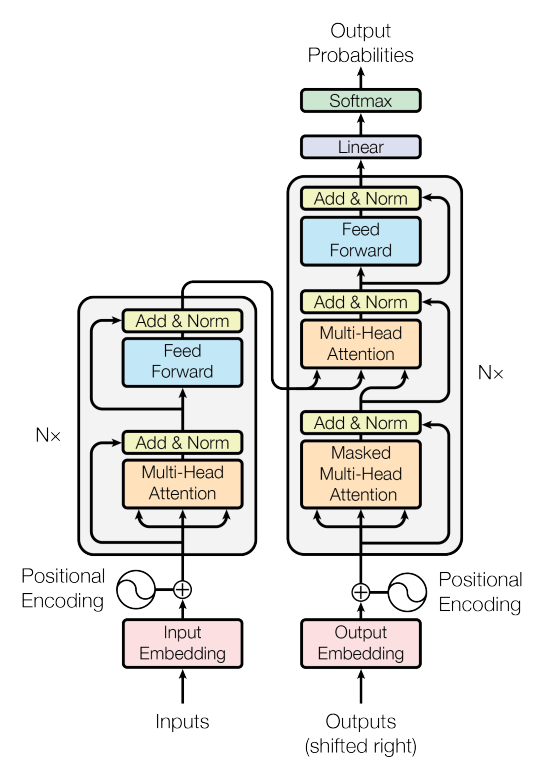

Transformer

Definition

The Transformer model uses self-attention, an Attention in which Q, K, and V are derived from the same source (e.g., the same sentence). The result vector of the self-attention for each token reflects its context. Usually, self-attention is repeated multiple times (in stacked layers) to further contextualize the representations.

Architecture

Self-Attention

The initial query (Q), key (K), and value (V) matrices are the results of linear transformations of the input sequence $X$: $Q = XW^Q$, $K = XW^K$, $V = XW^V$.

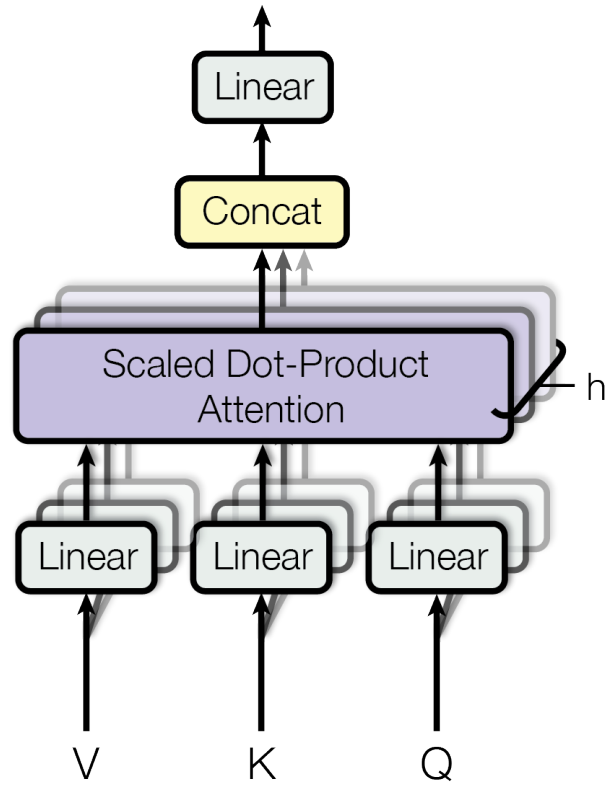

Multi-head Self-Attention

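The Transformer uses multiple attention heads in parallel, like the channels in a CNN, allowing it to focus on different aspects of the input simultaneously. The output of multi-head attention is a concatenation of the outputs from individual attention heads, followed by a linear transformation.

$$\mathrm{MultiHead}(Q, K, V) = \mathrm{Concat}(\mathrm{head}_1, \dots, \mathrm{head}_h)\,W^O$$

where $\mathrm{head}_i = \mathrm{Attention}(QW_i^Q, KW_i^K, VW_i^V)$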

Feed-Forward Layer

Each layer in the Transformer also contains a feed-forward layer applied to each position separately, i.e., there is no cross-token dependency:

$$\mathrm{FFN}(x) = \max(0,\, xW_1 + b_1)\,W_2 + b_2$$

The linear transformations are the same across different positions in the same layer, but differ from layer to layer.

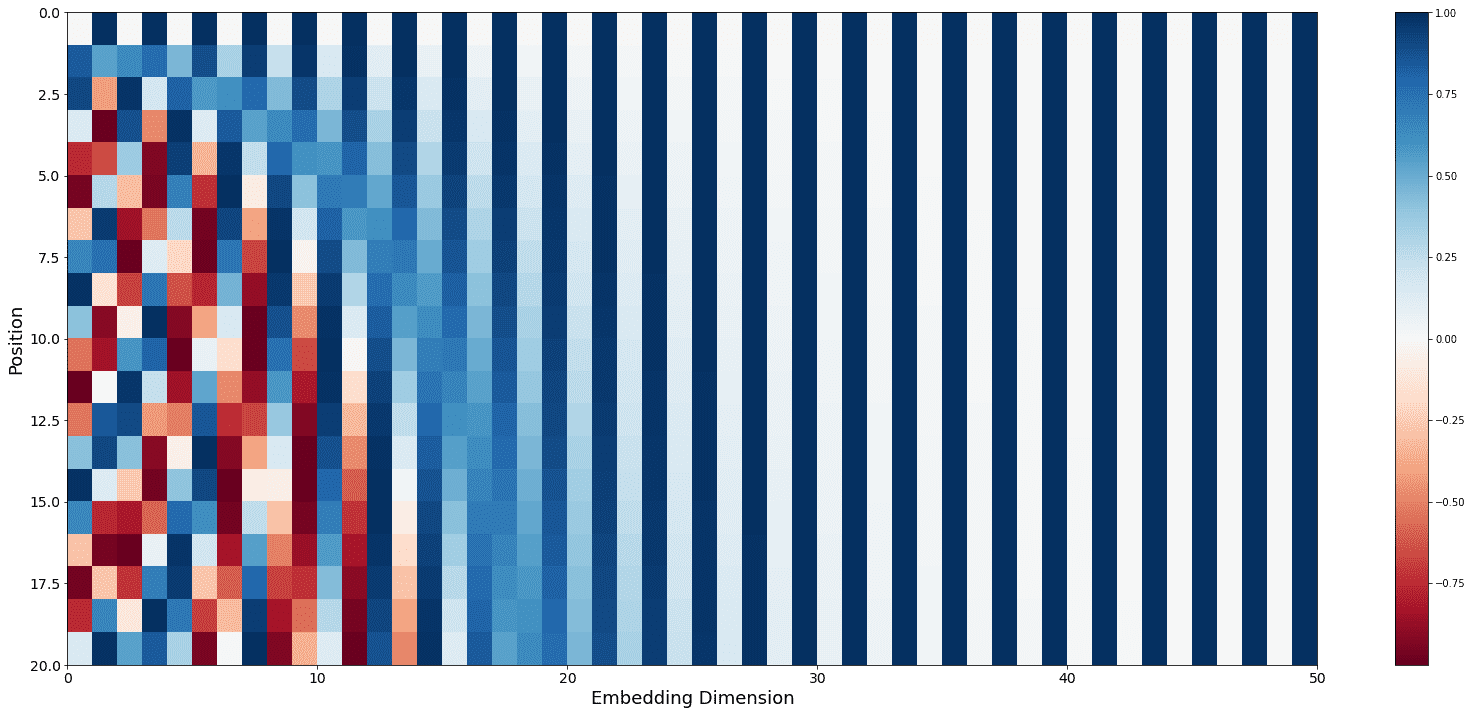

Positional Encoding

Since the Transformer doesn’t inherently capture sequence order, positional encodings are added to the input embeddings. These are typically sine and cosine functions of different frequencies:

$$\begin{aligned} PE_{(\text{pos},2i)} &= \sin\!\left(\text{pos} / 10000^{2i/d_{\text{model}}}\right)\\ PE_{(\text{pos},2i+1)} &= \cos\!\left(\text{pos} / 10000^{2i/d_{\text{model}}}\right) \end{aligned}$$

Masked Multi-Head Self-Attention

In the decoder, the self-attention layer is modified to prevent attending to later positions. This is achieved by setting the scores of future positions to negative infinity before the softmax step.

Encoder-Decoder Attention

The decoder has an additional attention layer that performs multi-head attention over the output of the encoder, where the query (Q) comes from the previous layer in the decoder, and the key (K) and value (V) come from the output of the encoder.
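A plain-Python sketch of the sinusoidal positional encoding and of the decoder's causal mask; `max_len`, `d_model`, and the toy score matrix are illustrative assumptions. Masked entries become $-\infty$, so their softmax weights are exactly zero.

```python
import math

def positional_encoding(max_len, d_model):
    pe = [[0.0] * d_model for _ in range(max_len)]
    for pos in range(max_len):
        for i in range(0, d_model, 2):  # i plays the role of the even index 2i in the formula
            angle = pos / (10000 ** (i / d_model))
            pe[pos][i] = math.sin(angle)
            if i + 1 < d_model:
                pe[pos][i + 1] = math.cos(angle)
    return pe

def causal_mask(scores):
    """Replace scores of future positions (column j > row i) with -inf."""
    return [[s if j <= i else float("-inf") for j, s in enumerate(row)] for i, row in enumerate(scores)]

def softmax(row):
    m = max(row)
    e = [math.exp(v - m) for v in row]  # exp(-inf) is 0.0
    total = sum(e)
    return [v / total for v in e]

pe = positional_encoding(max_len=50, d_model=16)
print(pe[0][:4])  # [0.0, 1.0, 0.0, 1.0] at position 0
scores = [[0.5, 1.0, 2.0], [0.1, 0.2, 3.0], [1.0, 1.0, 1.0]]
weights = [softmax(row) for row in causal_mask(scores)]
print(weights[0])  # [1.0, 0.0, 0.0]: the first token attends only to itself
```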

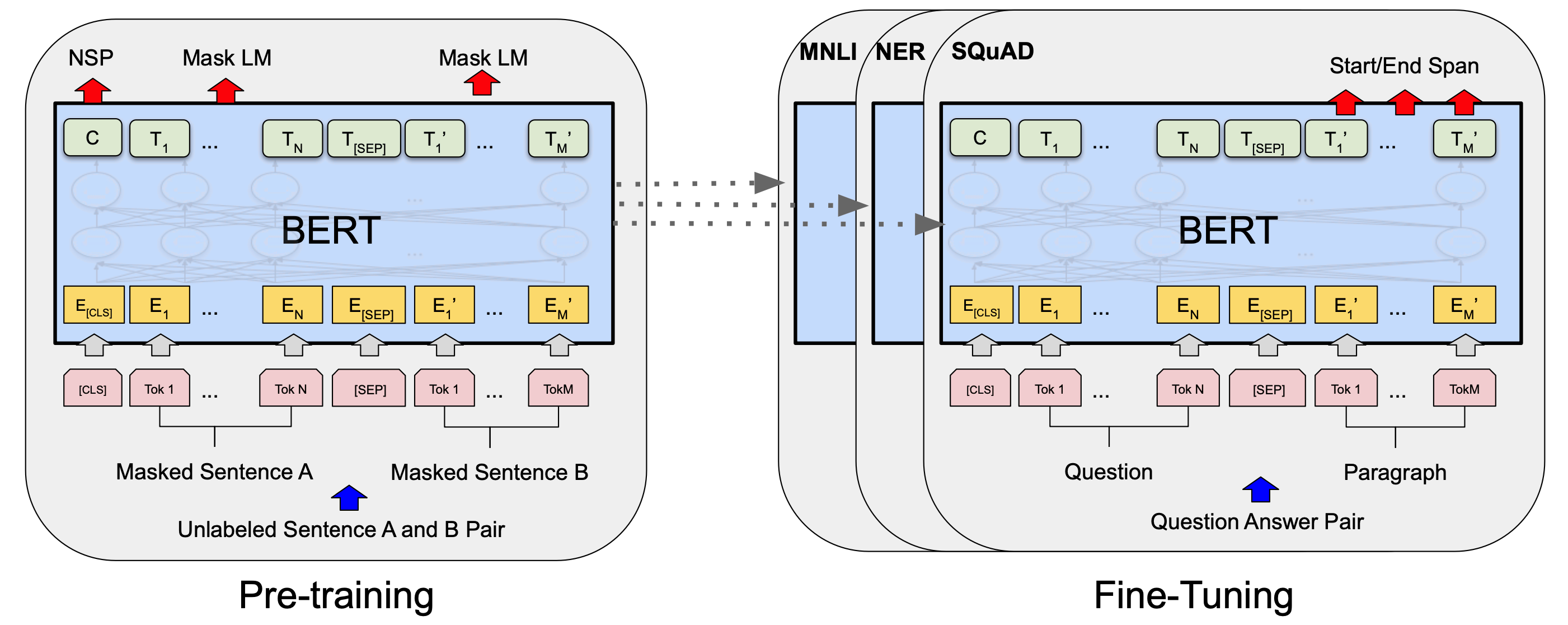

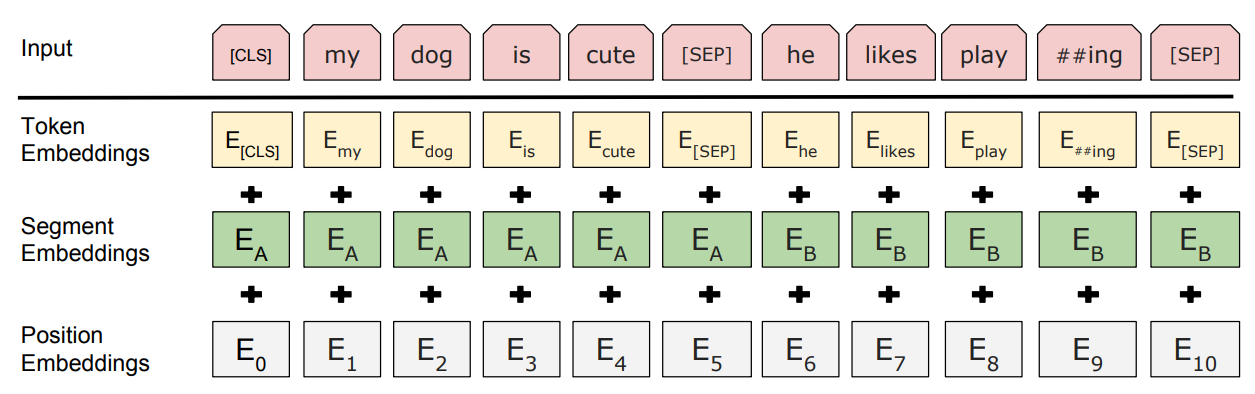

BERT

Definition

The BERT model prepends a CLS token to the input and uses its final representation as the aggregated embedding of the sequence. The model learns contextual word embeddings by solving the masked token prediction and next sentence prediction problems.

Tasks

Masked Language Modeling

Figuring out the hidden words using the context.
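A sketch of how masked-language-model inputs can be built. The 15% selection rate and the 80/10/10 split between `[MASK]`, a random token, and the unchanged token follow the original BERT recipe; the whitespace tokens, tiny vocabulary, and function name are illustrative.

```python
import random

def mask_tokens(tokens, vocab, mask_prob=0.15, seed=0):
    """Return (model inputs, labels); labels are None where no prediction is required."""
    rng = random.Random(seed)
    inputs, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            labels.append(tok)                    # the model must recover the original token here
            r = rng.random()
            if r < 0.8:
                inputs.append("[MASK]")           # 80%: replace with the mask token
            elif r < 0.9:
                inputs.append(rng.choice(vocab))  # 10%: replace with a random token
            else:
                inputs.append(tok)                # 10%: keep the token unchanged
        else:
            labels.append(None)
            inputs.append(tok)
    return inputs, labels

vocab = ["the", "cat", "sat", "on", "mat", "dog"]
tokens = ["the", "cat", "sat", "on", "the", "mat"] * 2000
inputs, labels = mask_tokens(tokens, vocab)
selected = sum(label is not None for label in labels)
print(selected / len(tokens))  # roughly 0.15
```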

Next Sentence Prediction

A binary classification problem, predicting if the two sentences in the input are consecutive or not.


Image Transformer Models

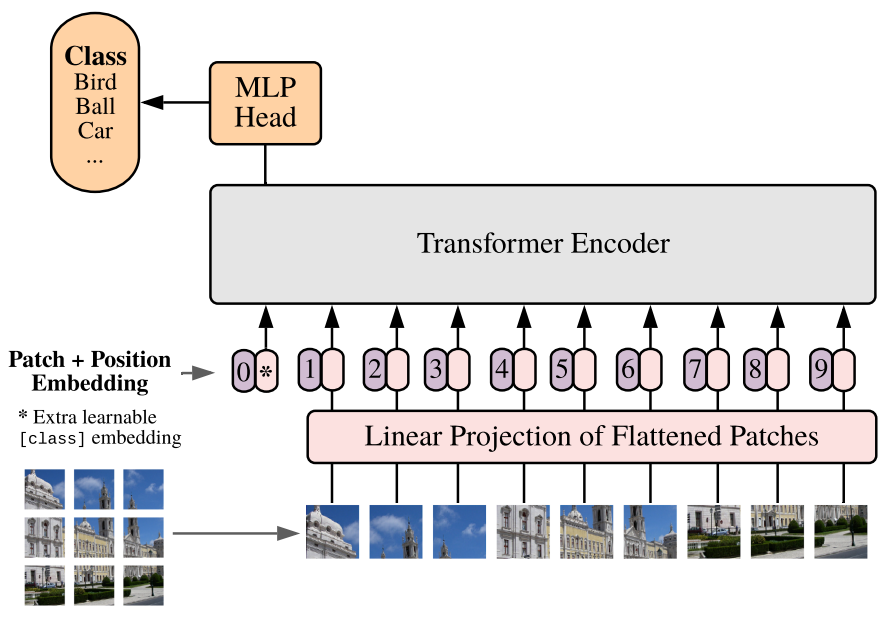

Vision Transformer

Definition

Vision transformer (ViT) applies Transformer architecture to the vision tasks. The model considers an image as a sequence of patches.
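A sketch of turning an image into the patch sequence that ViT consumes, with the image stored as nested lists of shape height × width × channels (an assumption for readability). Each patch is flattened before the linear projection (not shown); a 224 × 224 image with 16 × 16 patches gives $(224/16)^2 = 196$ tokens.

```python
def num_patches(height, width, patch):
    return (height // patch) * (width // patch)

def patchify(image, patch):
    """Split an H x W x C image (nested lists) into flattened patches in row-major order."""
    H, W = len(image), len(image[0])
    if H % patch or W % patch:
        raise ValueError("image size must be divisible by the patch size")
    patches = []
    for r in range(0, H, patch):
        for c in range(0, W, patch):
            patches.append([v for i in range(r, r + patch) for j in range(c, c + patch) for v in image[i][j]])
    return patches

image = [[[float(i), float(j), 0.0] for j in range(32)] for i in range(32)]  # 32 x 32 x 3 toy image
patches = patchify(image, 16)
print(len(patches), len(patches[0]))  # 4 patches, each 16 * 16 * 3 = 768 values
print(num_patches(224, 224, 16))      # 196
```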

Architecture

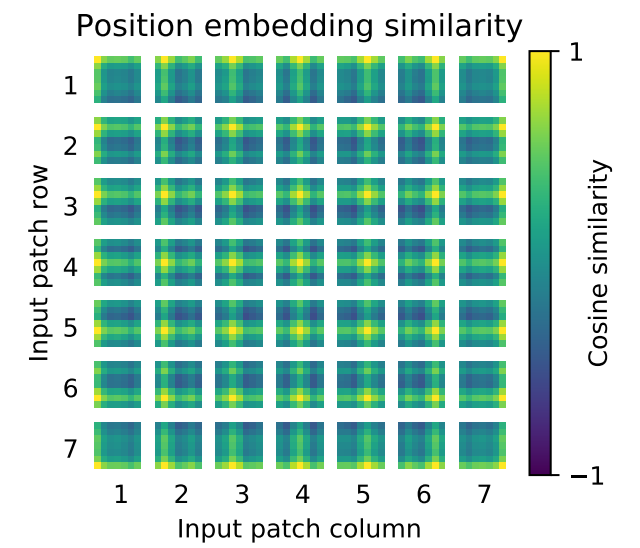

Positional Enbedding

ViT does not use pre-designed positional encoding, it leaves it as a learnable parameter. By doing so, ViT does not imply any inductive bias unlike to CNN


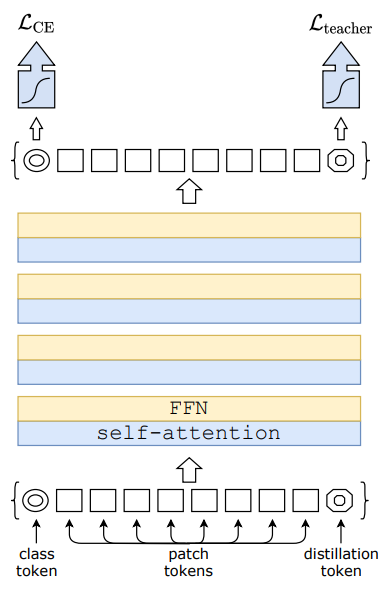

Data-Efficient Image Transformer

Definition

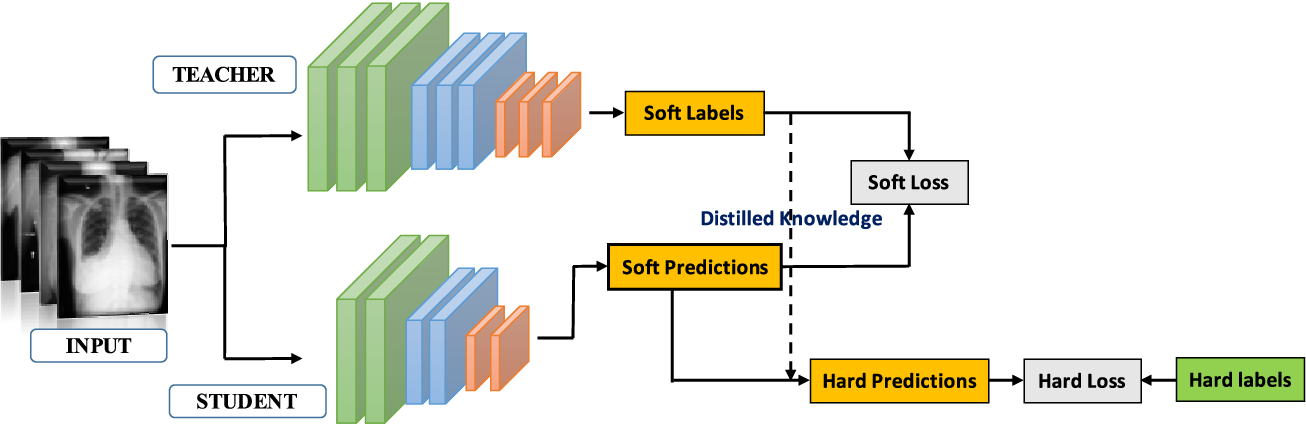

Data-efficient image transformer (DeiT) appends a distillation token to a ViT model and trains the output of this token to match the prediction of a pre-trained teacher model (CNN).

The teacher model and the distillation process in DeiT help the student model (ViT) to generalize better by providing additional supervision from the teacher model. The teacher model’s predictions implicitly contain information about the model’s uncertainties and the relationships between classes.

Architecture

Soft-Label Distillation

Minimizes the KL-Divergence between the softmax output of the teacher model and that of the student.

Hard-Label Distillation

Takes the hard decision (argmax) of the teacher as the true label.
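Both distillation variants can be sketched on raw logits. This is a minimal sketch under stated assumptions: the temperature `tau = 3.0` and the `tau ** 2` scaling follow the common knowledge-distillation recipe and are not given in the text, the function names are hypothetical, and DeiT's full objective (which also trains the class token with the ground-truth label) is omitted.

```python
import math

def softmax(logits, tau=1.0):
    scaled = [l / tau for l in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def kl_div(p, q):
    # KL(p || q) = sum_i p_i log(p_i / q_i), skipping terms with p_i = 0
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def soft_distillation_loss(student_logits, teacher_logits, tau=3.0):
    # KL between temperature-softened teacher and student distributions, scaled by tau^2
    return tau ** 2 * kl_div(softmax(teacher_logits, tau), softmax(student_logits, tau))

def hard_distillation_loss(student_logits, teacher_logits):
    # the teacher's argmax is treated as the true label for a cross-entropy loss
    y_teacher = max(range(len(teacher_logits)), key=lambda i: teacher_logits[i])
    return -math.log(softmax(student_logits)[y_teacher])

teacher = [0.0, 5.0, 1.0]
student = [0.0, 0.0, 0.0]
print(hard_distillation_loss(student, teacher))  # log(3), about 1.0986
print(soft_distillation_loss(student, teacher))
```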


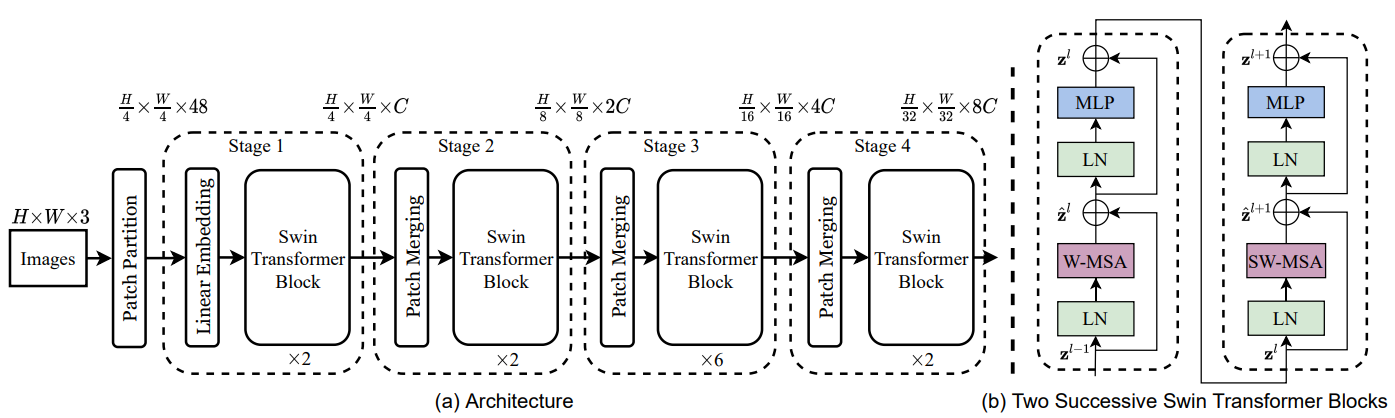

Swin Transformer

Definition

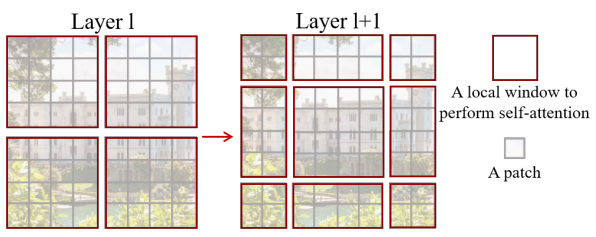

Swin transformer reintroduced the locality inductive bias by using local self-attention. The limitations of local self-attention are addressed with the hierarchical structure, shifted window partitioning, and relative position bias.

Architecture

Local Self-Attention

The self-attention is computed within local windows instead of globally, reducing computational cost.

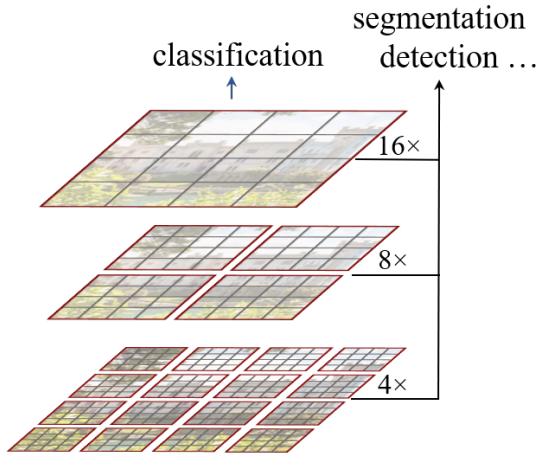

Hierarchical Structure

Swin Transformer starts with small-sized patches and gradually merges neighboring patches in deeper layers, similar to a CNN. This allows the model to capture both fine and coarse features effectively.

Patch Merging:

To reduce the spatial resolution and increase the channel dimension, Swin Transformer uses a patch merging layer. The layer concatenates the features of each group of $2 \times 2$ neighboring patches ($4C$ channels) and applies a linear transformation that reduces them to $2C$ channels. The output feature represents the merged patch, which covers twice the original patch size in each spatial dimension.

Shifted Window Partitioning

The feature map is divided into non-overlapping windows, and the window partition is shifted in alternate layers to allow information exchange across windows.
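The regular and shifted window partitions can be illustrated on a 4 × 4 grid of token indices with 2 × 2 windows. This sketch assumes the cyclic-shift formulation (rolling the feature map by half a window before partitioning), which is how the Swin paper implements shifted windows efficiently; the attention mask that stops tokens which were not adjacent in the original map from attending to each other is omitted, and the function names are hypothetical.

```python
def cyclic_shift(grid, shift):
    # roll an H x W grid up and to the left by `shift` positions (wrapping around)
    H, W = len(grid), len(grid[0])
    return [[grid[(r + shift) % H][(c + shift) % W] for c in range(W)] for r in range(H)]

def window_partition(grid, M):
    # split an H x W grid into non-overlapping M x M windows, each flattened in raster order
    H, W = len(grid), len(grid[0])
    return [[grid[r][c] for r in range(top, top + M) for c in range(left, left + M)]
            for top in range(0, H, M) for left in range(0, W, M)]

grid = [[4 * r + c for c in range(4)] for r in range(4)]  # token ids 0..15
M = 2
regular = window_partition(grid, M)
shifted = window_partition(cyclic_shift(grid, M // 2), M)
print(regular[0])  # [0, 1, 4, 5]: tokens from one original window
print(shifted[0])  # [5, 6, 9, 10]: tokens from four different original windows
```

Attention computed inside `shifted[0]` therefore mixes tokens that were split across four windows in the previous layer, which is the cross-window exchange described above.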

Relative Position Bias

Instead of using absolute position embeddings like in the original Transformer, Swin Transformer uses a relative position bias. This is a learnable parameter added to the attention scores before the softmax operation.


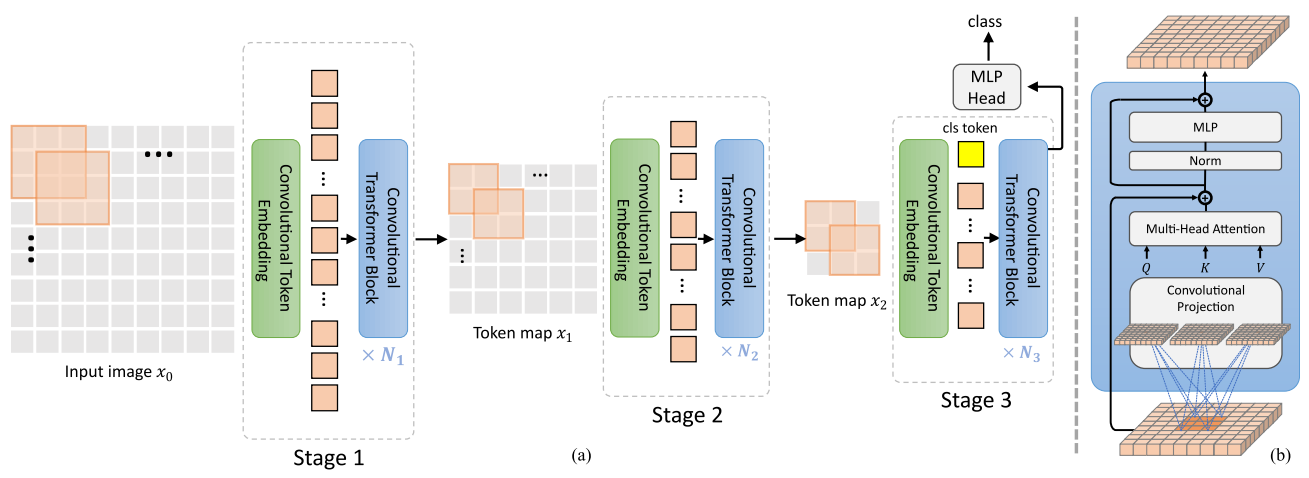

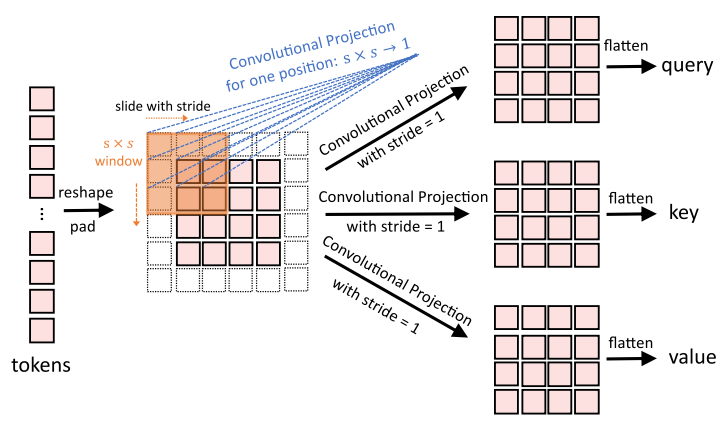

Convolutional Vision Transformer

Definition

Convolutional vision transformer (CvT) uses convolutional layers instead of the fully connected layers to improve its performance and efficiency.

Architecture

Convolutional Token Embedding

CvT replaced the patch embedding used in ViT with a convolutional layer.

Convolutional Projection

Instead of using linear transformations to project the input into query (Q), key (K), and value (V), convolutional layers are used.

Squeezed Convolutional Projection

The squeezed convolutional projection is utilized to reduce computational complexity or to downsample the feature map.


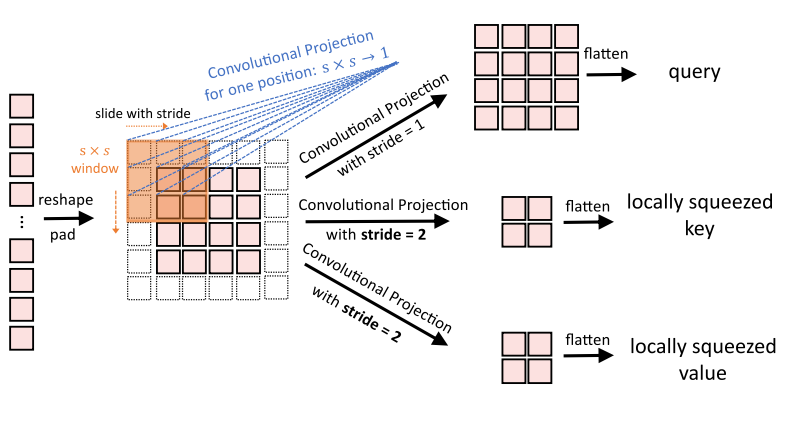

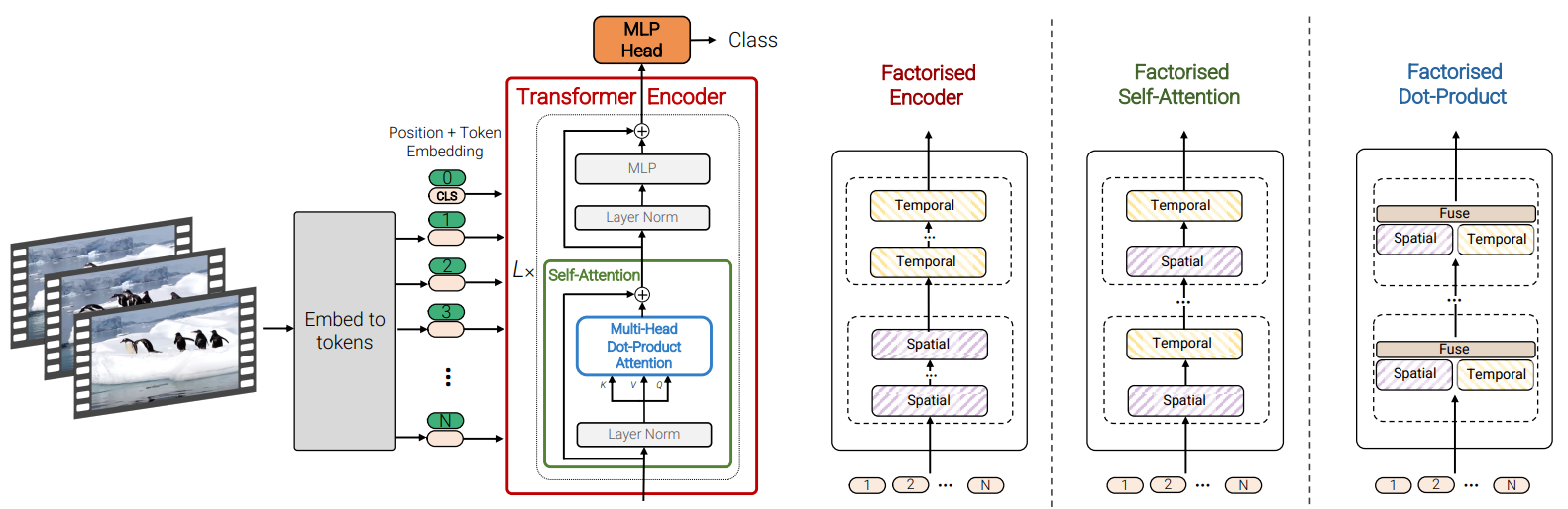

Video Transformer Models

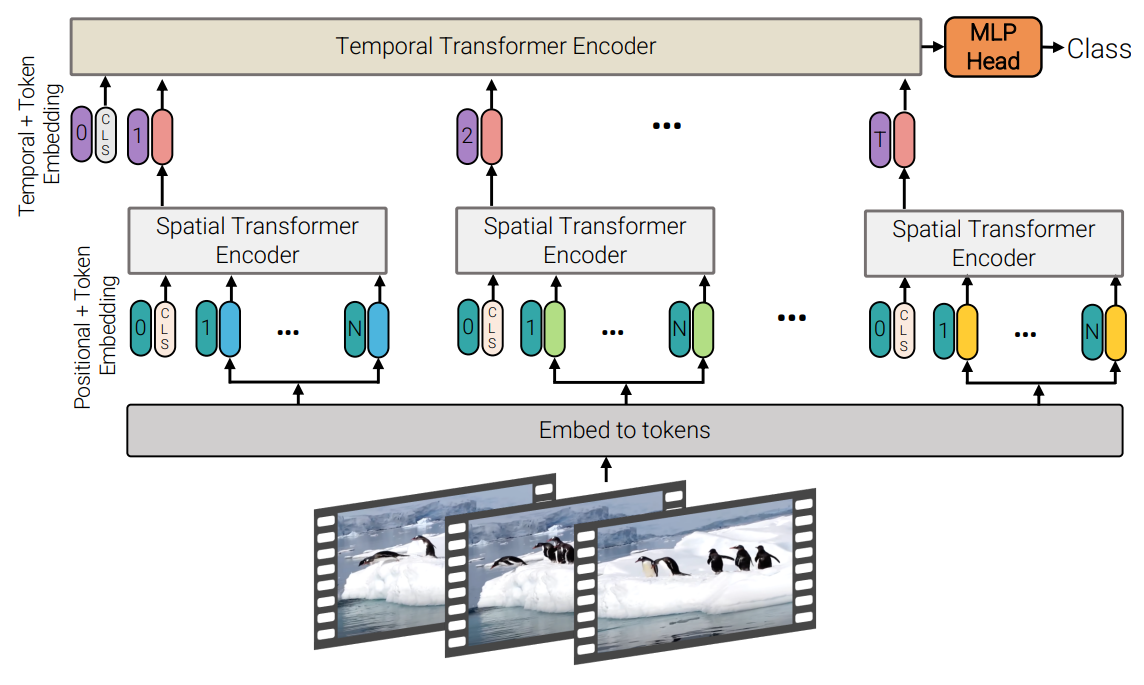

Video Vision Transformer

Definition

Video vision transformer (ViViT) extended the idea of ViT to the video classification task.

Architecture

Uniform Frame Sampling

A fixed number of frames is sampled uniformly from the input video, which handles videos of varying lengths and reduces computational complexity.

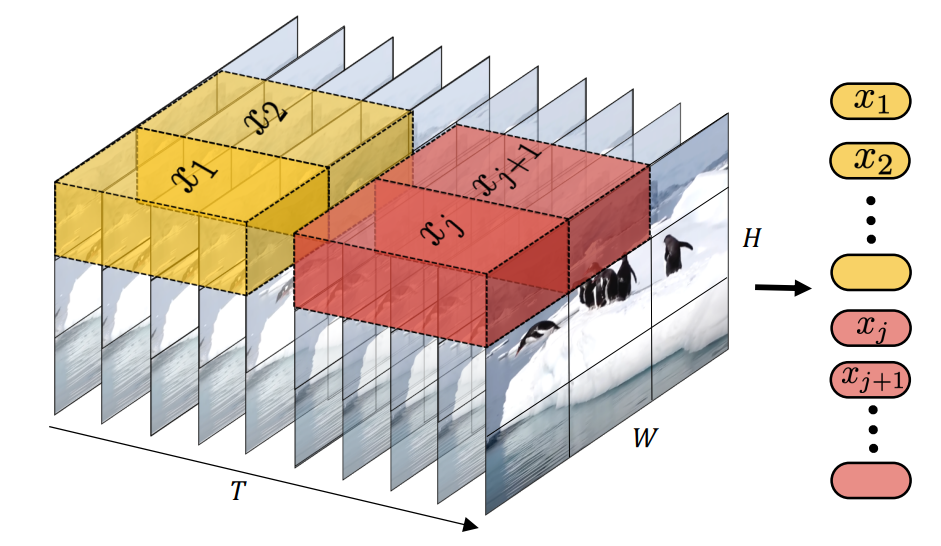

Tubelet Embedding

The input video is divided into a sequence of non-overlapping tubelet tokens. Each tubelet is a 3D patch of the video with dimensions (frames, height, width). The tubelet tokens are linearly projected to obtain embedding vectors. Positional embeddings are added to provide spatial and temporal information.
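Uniform frame sampling and tubelet tokenization both reduce to simple index arithmetic. In this sketch, taking the floor of evenly spaced positions is one simple sampling choice (an assumption), the function names are hypothetical, and the 32-frame 224 × 224 clip with 2 × 16 × 16 tubelets is an illustrative configuration. That configuration gives half as many tokens as 16 × 16 patches taken frame by frame (32 × 196 = 6272).

```python
def uniform_frame_indices(num_frames, num_samples):
    # evenly spaced frame indices over the whole clip, independent of its length
    step = num_frames / num_samples
    return [int(i * step) for i in range(num_samples)]

def num_tubelet_tokens(T, H, W, t, h, w):
    # number of non-overlapping t x h x w tubelets in a T x H x W video
    return (T // t) * (H // h) * (W // w)

print(uniform_frame_indices(100, 4))                # [0, 25, 50, 75]
print(uniform_frame_indices(10, 4))                 # [0, 2, 5, 7]
print(num_tubelet_tokens(32, 224, 224, 2, 16, 16))  # 16 * 14 * 14 = 3136
```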

Model Variants

The ViViT paper proposed four model variants.

Spatio-Temporal Attention

This is the most direct extension of the ViT to video. It treats the entire video as a single stream of tokens, applying self-attention across both spatial and temporal dimensions simultaneously.

Factorized Encoder

This variant uses two separate transformer encoders: one for spatial and another for temporal modeling. Each frame is fed into the spatial encoder (ViT), and the sequence of output CLS tokens is used as the input of the temporal encoder (Transformer).

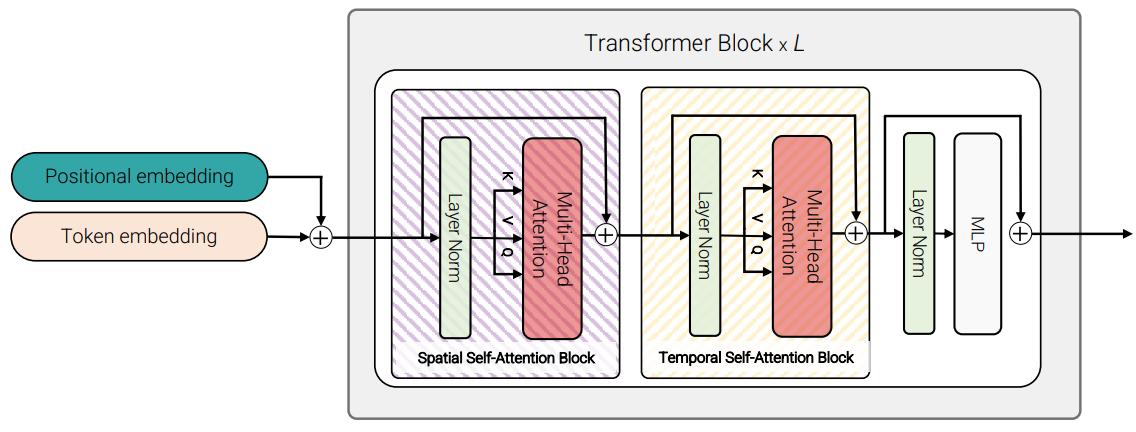

Factorized Self-Attention

This variant factorizes the self-attention operation within each transformer layer into spatial and temporal attention operations. The spatial attention is applied to tokens within the same frame, and the temporal attention is applied to tokens at the same spatial location across frames.

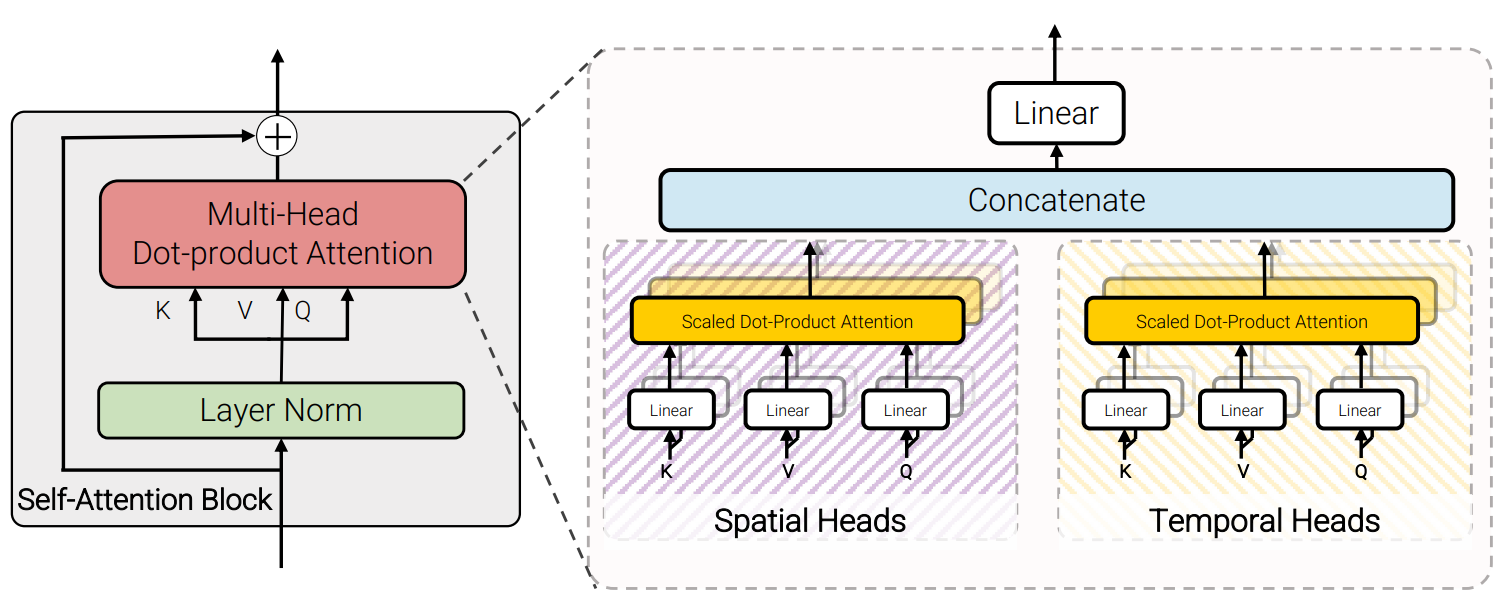

Factorized Dot-Product Attention

This variant factorizes the multi-head dot-product attention: half of the heads compute only spatial attention, and the other half compute only temporal attention.


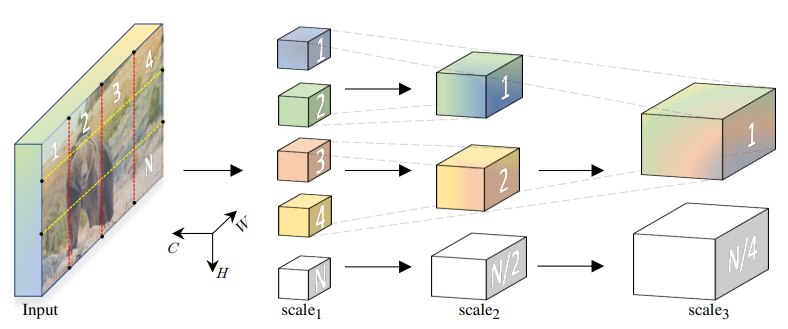

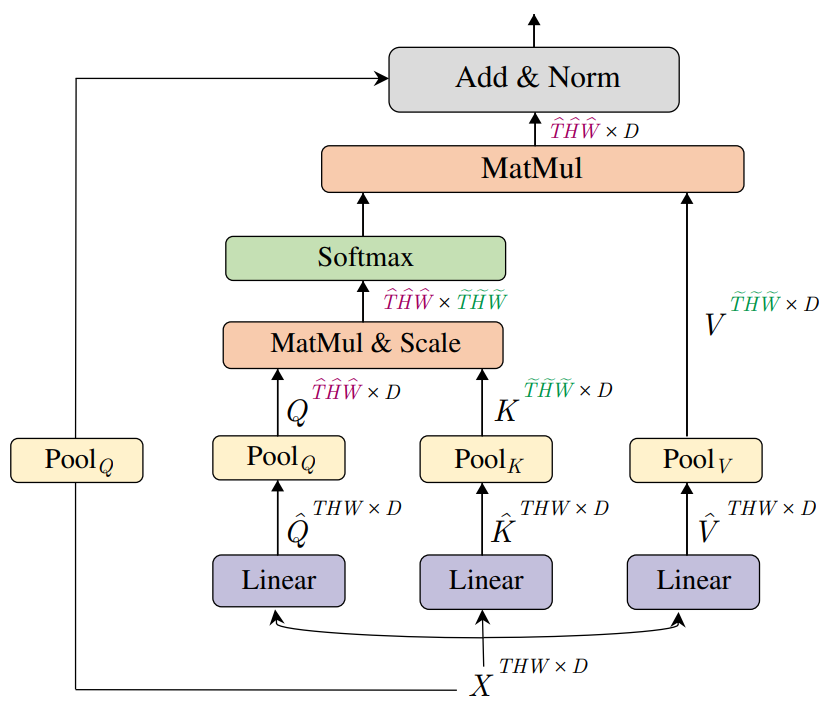

Multiscale Vision Transformer

Definition

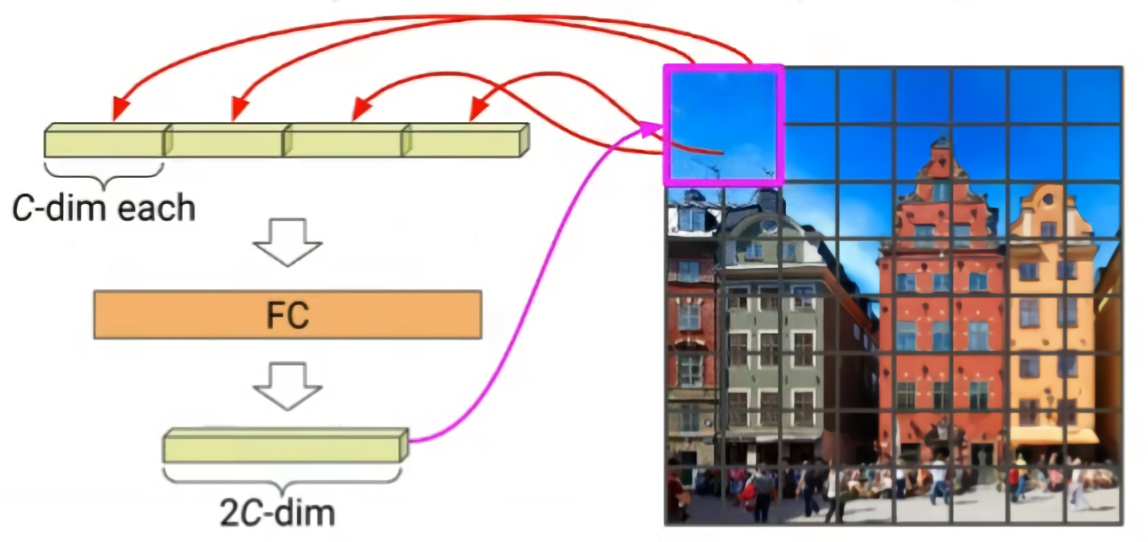

Multiscale vision transformer (MViT) applied the idea of CvT to video inputs. Additionally, multi-head pooling attention is used to build a hierarchical structure.

Architecture

Pooling Attention

Pooling attention decreases the spatial dimension and increases the channel dimension of Q, K, and V before applying self-attention. The pooling is implemented by a convolutional layer with a stride greater than 1.


Object Detection

Proposal-Based Approaches

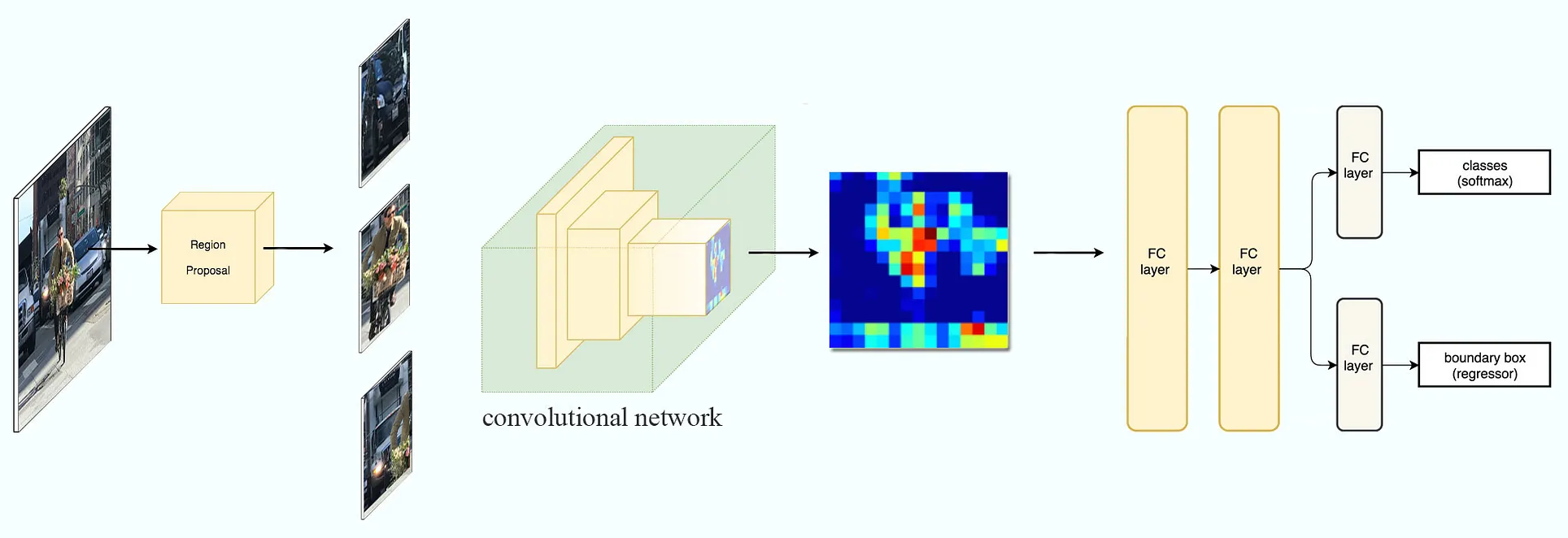

R-CNN

Definition

Regions with CNN features (R-CNN) performs object detection in two stages: region proposal and object recognition.

Region Proposal

Region proposal is performed by an off-the-shelf method (selective search), which proposes around 2,000 regions per image.

Object Recognition

The CNN features of each proposed image patch are extracted and mapped to labels by a classifier (SVM).

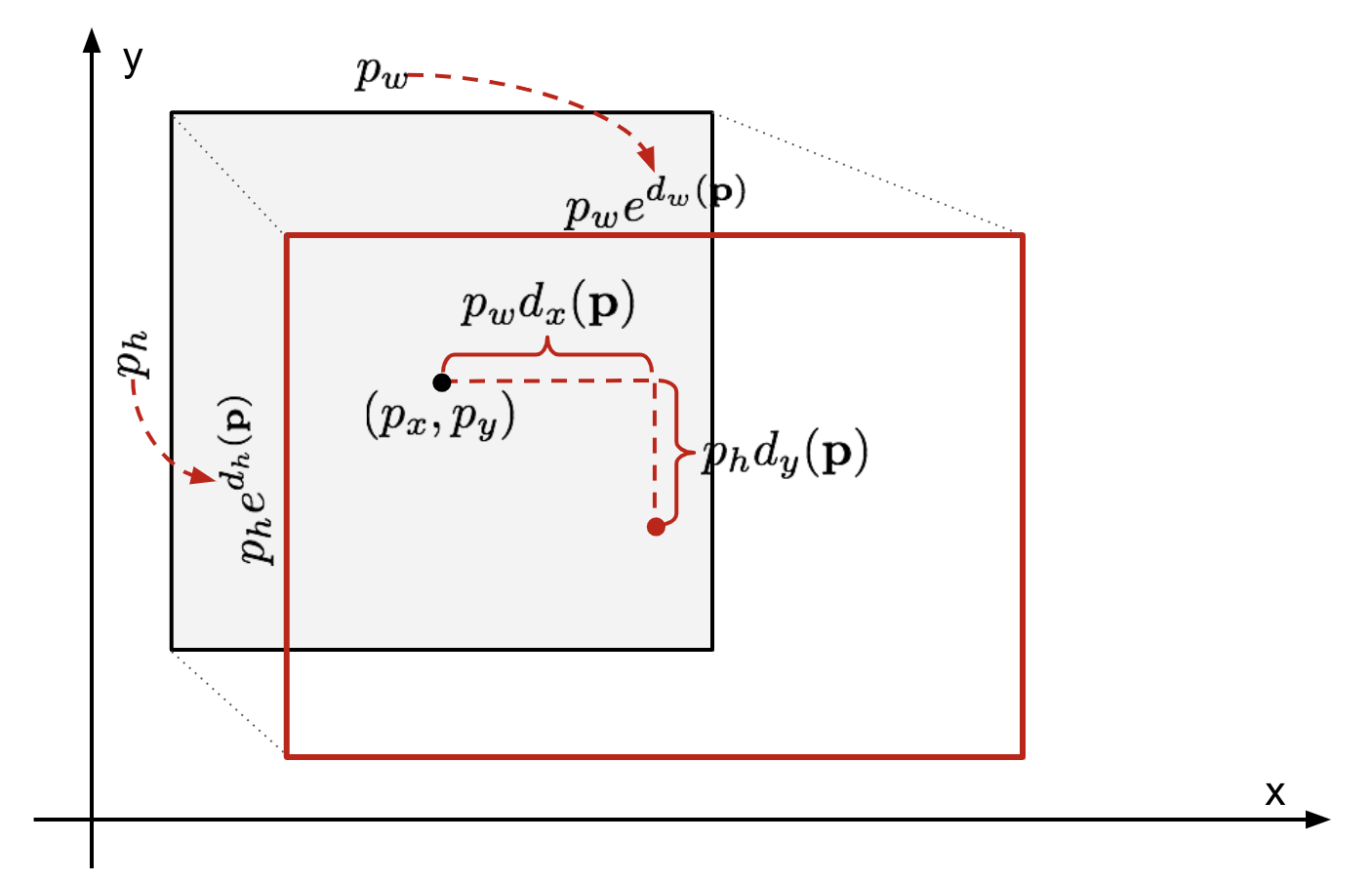

Bounding-Box Regression

After the classifier, the bounding-box regressor is applied. The regressor takes the CNN features of the proposed region and predicts a refined bounding box. The refinement aims to adjust the original region proposal to better fit the actual object boundaries. A separate bounding-box regressor is trained for each object class.


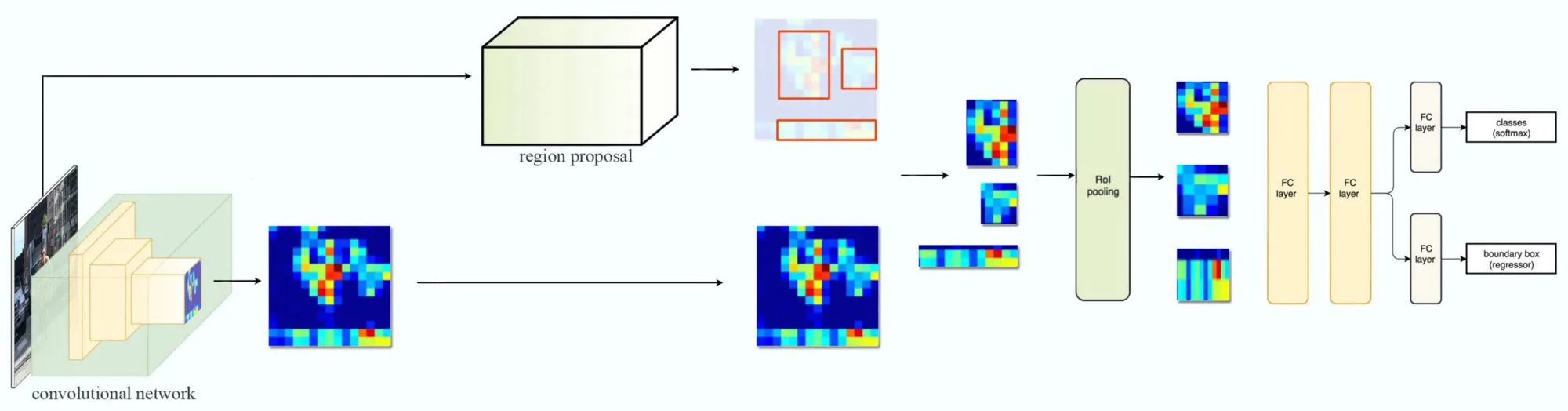

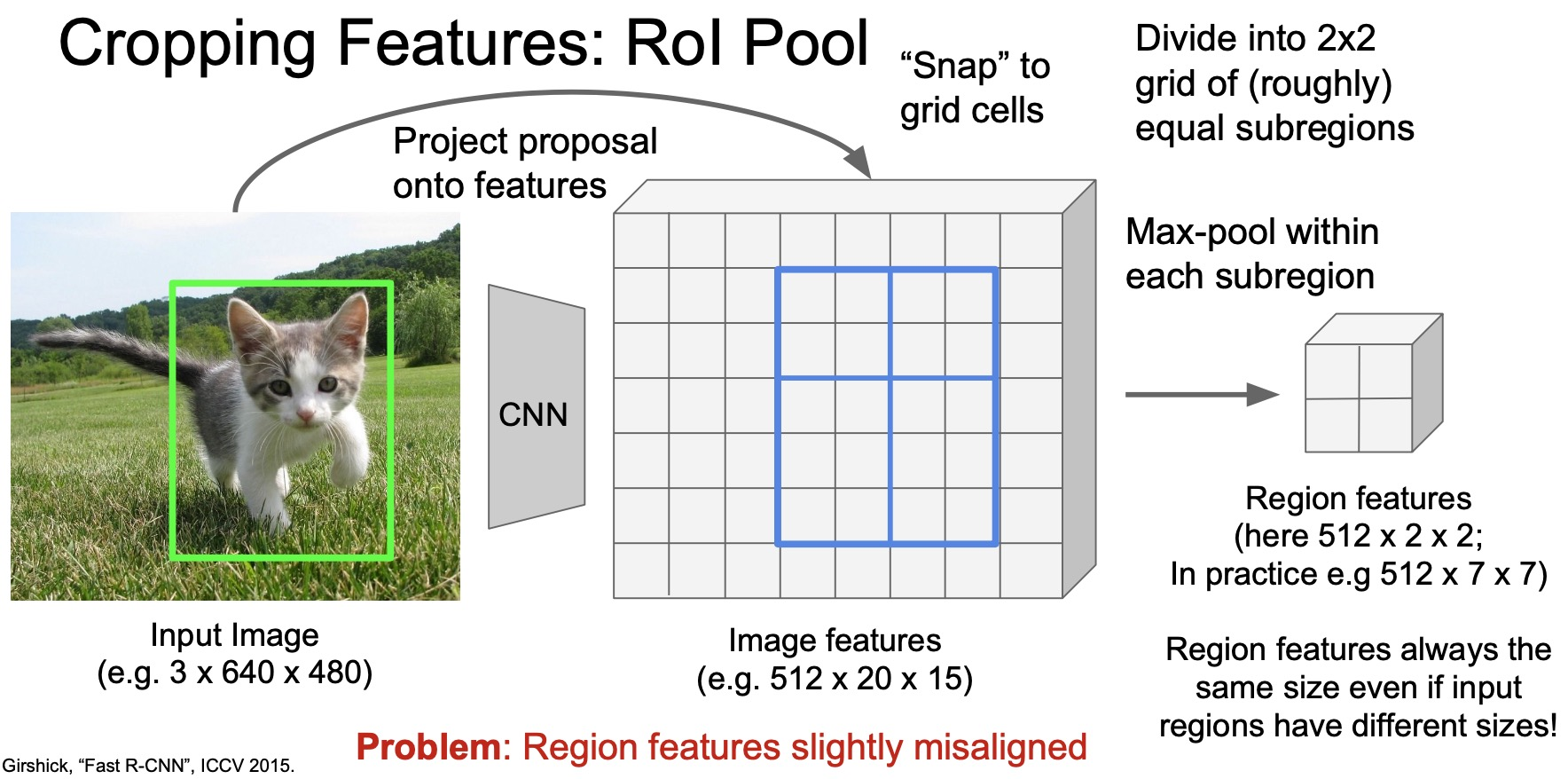

Fast R-CNN

Definition

Fast R-CNN improved the speed of object detection by extracting the CNN features of the entire image only once and using the portion of the feature map corresponding to each proposed bounding box in the object recognition stage. These portions of the feature map are resized to the same size using RoI pooling.

Architecture

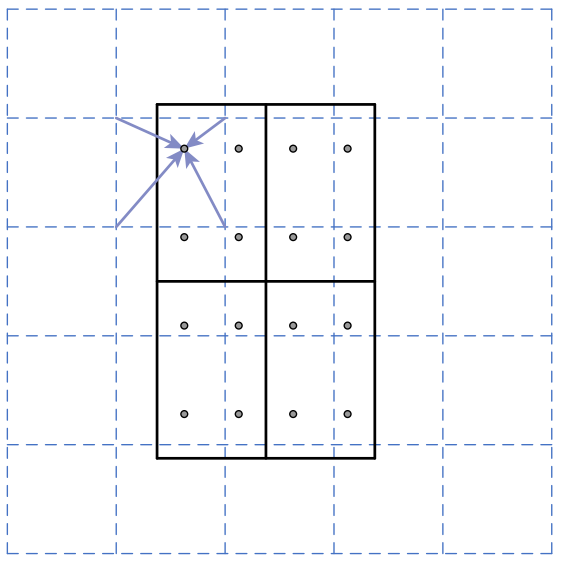

RoI Pooling

RoI pooling is a type of max pooling that transforms the features inside a region of interest into a small feature map of fixed size $H \times W$. The RoI is divided into an $H \times W$ grid of sub-windows, and max pooling is performed within each sub-window.
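A minimal single-channel sketch of RoI max pooling. It assumes the RoI is already given in integer feature-map coordinates `(y0, x0, y1, x1)` with exclusive end points (this rounding to the grid is exactly the quantization that RoI Align later removes); the function name and the floor/ceil rule for the sub-window boundaries are assumptions.

```python
import math

def roi_max_pool(feature, roi, out_h, out_w):
    # feature: 2D list (one channel); roi = (y0, x0, y1, x1), end points exclusive
    y0, x0, y1, x1 = roi
    h, w = y1 - y0, x1 - x0
    pooled = []
    for i in range(out_h):
        # floor for the start and ceil for the end, so every sub-window is non-empty
        ys = y0 + (i * h) // out_h
        ye = y0 + math.ceil((i + 1) * h / out_h)
        row = []
        for j in range(out_w):
            xs = x0 + (j * w) // out_w
            xe = x0 + math.ceil((j + 1) * w / out_w)
            row.append(max(feature[y][x] for y in range(ys, ye) for x in range(xs, xe)))
        pooled.append(row)
    return pooled

feat = [[4 * r + c for c in range(4)] for r in range(4)]
print(roi_max_pool(feat, (0, 0, 4, 4), 2, 2))  # [[5, 7], [13, 15]]
print(roi_max_pool(feat, (1, 1, 4, 4), 2, 2))  # [[10, 11], [14, 15]]
```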


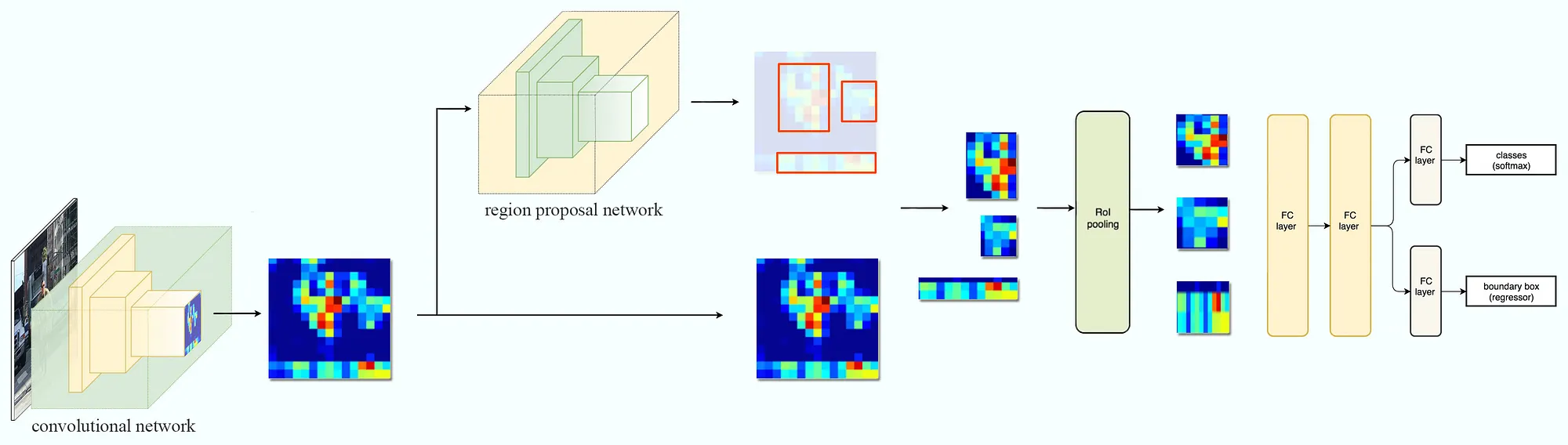

Faster R-CNN

Definition

Faster R-CNN substituted the off-the-shelf region proposal method of R-CNN with a region proposal network (RPN).

Architecture

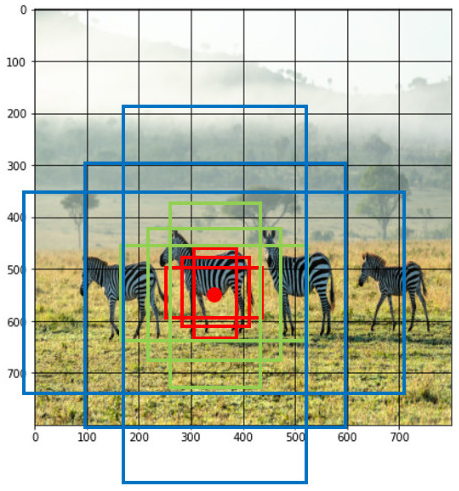

Region Proposal Network

Region proposal network (RPN) is a CNN that operates on the feature maps produced by the backbone CNN. The RPN slides over the CNN feature map, and at each location it makes multiple region proposals of various sizes and aspect ratios. For each proposal, the RPN predicts an objectness score and a bounding box refinement.
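The "multiple region proposals of various sizes and aspect ratios" at each location are usually called anchors. The sketch below generates them; the three scales (128, 256, 512) and three aspect ratios (1:2, 1:1, 2:1) giving 9 anchors per location are the values reported in the Faster R-CNN paper, while the cell-center convention `(x + 0.5) * stride` and the function names are assumptions. The objectness and box-refinement predictions of the RPN are not shown.

```python
import math

def anchors_at(cx, cy, scales, ratios):
    # one box (x1, y1, x2, y2) per (scale, ratio) pair; ratio = height / width, area = scale^2
    boxes = []
    for s in scales:
        for r in ratios:
            w = s / math.sqrt(r)
            h = s * math.sqrt(r)
            boxes.append((cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2))
    return boxes

def all_anchors(feat_h, feat_w, stride, scales, ratios):
    # slide over every feature-map location, mapped back to image coordinates
    return [box
            for y in range(feat_h)
            for x in range(feat_w)
            for box in anchors_at((x + 0.5) * stride, (y + 0.5) * stride, scales, ratios)]

anchors = all_anchors(2, 3, 16, [128, 256, 512], [0.5, 1.0, 2.0])
print(len(anchors))  # 2 * 3 locations * 9 anchors = 54
print(anchors_at(8.0, 8.0, [128], [1.0])[0])  # (-56.0, -56.0, 72.0, 72.0)
```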


Proposal-Free Approaches

You Only Look Once

Definition

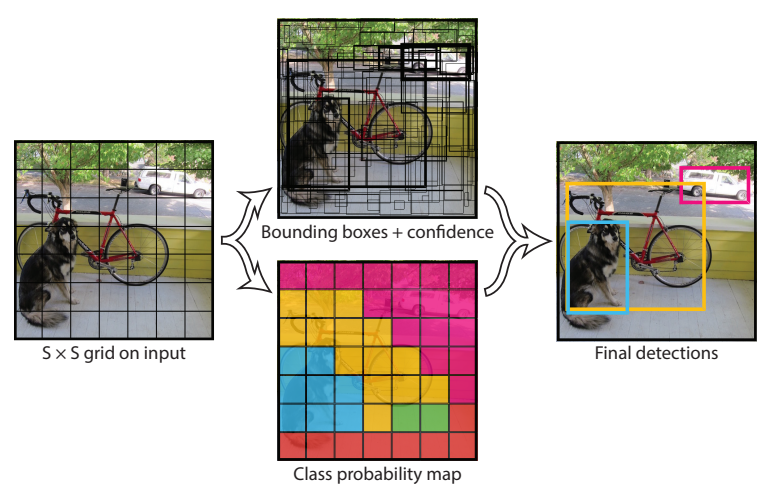

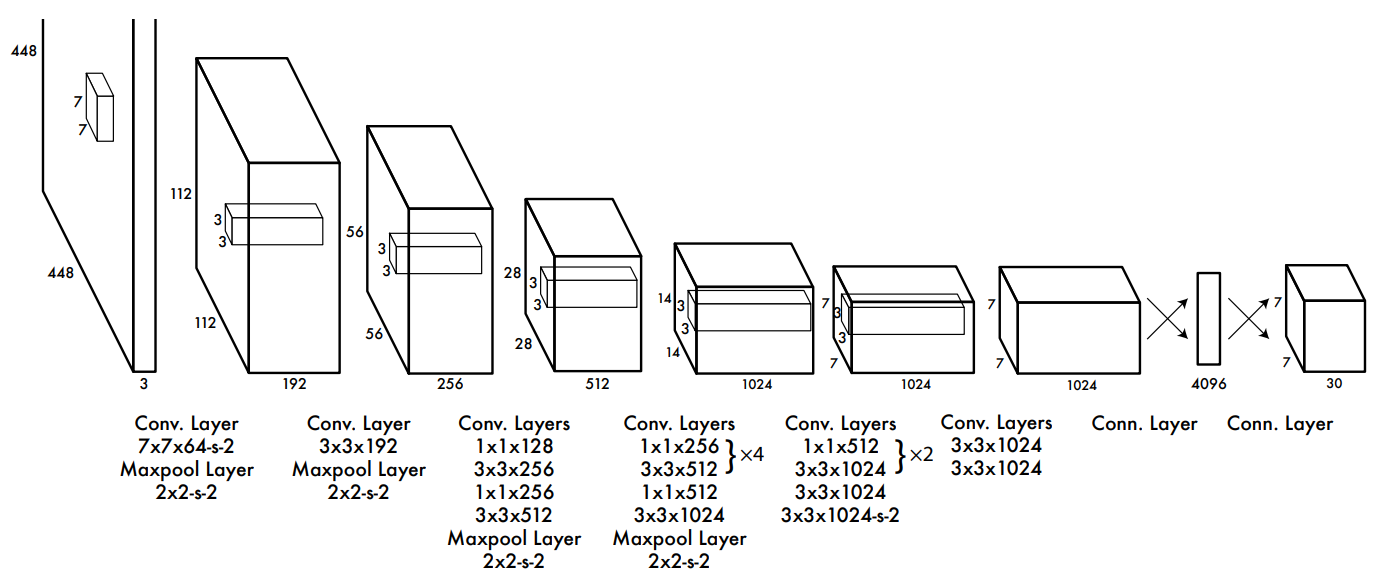

You Only Look Once (YOLO) is a proposal-free object detection model that predicts bounding boxes and class probabilities from an image in a single evaluation.

Architecture

The input image is divided into an $S \times S$ grid. Each grid cell predicts $B$ bounding boxes centered within it, together with class probabilities. Low-probability bounding boxes are then dropped to produce the final result.

The final output is a tensor of shape $S \times S \times (B \cdot 5 + C)$, where $5$ represents (x, y, w, h, confidence) for each bounding box and $C$ is the number of classes.
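The output shape and the "drop low-probability boxes" step can be sketched as follows. The YOLO paper uses S = 7, B = 2, C = 20 on PASCAL VOC, giving a 7 × 7 × 30 tensor. The post-processing shown (a score threshold followed by greedy non-maximum suppression) is a commonly used choice; the threshold values and function names are assumptions for illustration.

```python
def yolo_output_shape(S, B, C):
    # each of the S x S cells predicts B boxes x (x, y, w, h, confidence) plus C class probabilities
    return (S, S, B * 5 + C)

def iou(a, b):
    # intersection over union for boxes given as (x1, y1, x2, y2)
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def postprocess(detections, score_thresh=0.2, iou_thresh=0.5):
    # detections: list of (score, box); drop low scores, then greedy non-maximum suppression
    kept = []
    for score, box in sorted(detections, key=lambda d: d[0], reverse=True):
        if score < score_thresh:
            continue
        if all(iou(box, k) < iou_thresh for _, k in kept):
            kept.append((score, box))
    return kept

dets = [(0.9, (0, 0, 10, 10)), (0.8, (1, 1, 10, 10)),
        (0.1, (20, 20, 30, 30)), (0.7, (20, 20, 30, 30))]
print(yolo_output_shape(7, 2, 20))  # (7, 7, 30)
print(postprocess(dets))            # [(0.9, (0, 0, 10, 10)), (0.7, (20, 20, 30, 30))]
```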


Single Shot MultiBox Detector

Definition

Single shot multi-box detector (SSD) model is a proposal-free object detection model that uses multiple feature maps from different layers to detect various-sized objects.

Architecture

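The number of detections per class is $\sum_{l} m_l \times n_l \times k_l$, where the $l$-th feature map has size $m_l \times n_l$ and $k_l$ default boxes per location.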

SSD uses a set of default boxes with different scales and aspect ratios at each location in the feature maps. Small convolutional filters (convolutional predictors) are applied to the feature maps to produce class scores and box offsets for each default box.

Convolutional predictors

The convolutional predictors in SSD are designed to predict class scores and bounding box offsets. The classification filter has the shape $3 \times 3 \times p \times (k \cdot c)$, where $p$ is the number of input channels, $c$ is the number of classes, and $k$ is the number of default boxes per location.
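The detection count and the predictor shape are simple arithmetic. The feature-map sizes and boxes-per-location below are the SSD300 configuration reported in the SSD paper (8732 default boxes). The 3 × 3 kernel also follows the paper, and c = 21 corresponds to PASCAL VOC (20 classes plus background). The function names are hypothetical.

```python
def ssd_num_detections(feature_map_sizes, boxes_per_location):
    # sum over feature maps of (m x n locations) x (k default boxes per location)
    return sum(m * n * k for (m, n), k in zip(feature_map_sizes, boxes_per_location))

def classifier_filter_shape(p, c, k):
    # 3 x 3 kernels over p input channels, one output channel per (default box, class) pair
    return (3, 3, p, k * c)

sizes = [(38, 38), (19, 19), (10, 10), (5, 5), (3, 3), (1, 1)]
ks = [4, 6, 6, 6, 4, 4]
print(ssd_num_detections(sizes, ks))        # 8732
print(classifier_filter_shape(512, 21, 4))  # (3, 3, 512, 84)
```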


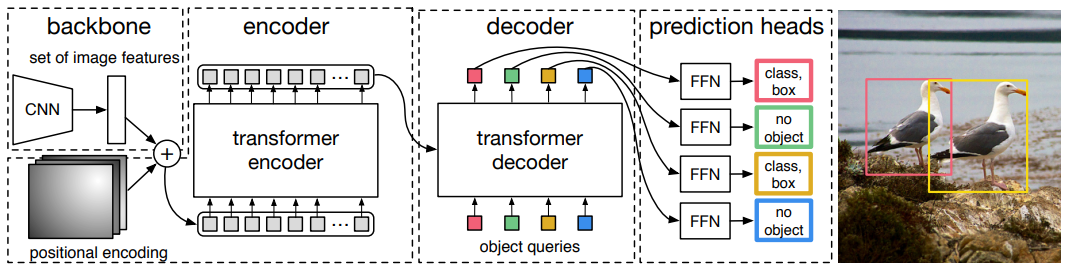

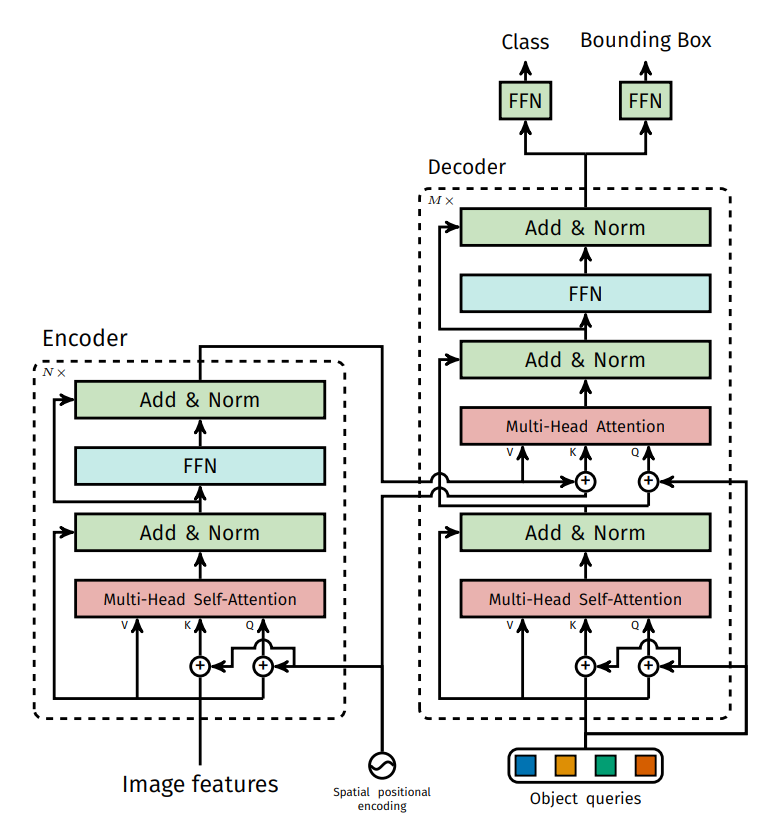

Detection Transformer

Definition

Detection Transformer (DETR) uses Transformer encoder-decoder architecture for the object detection problem.

Architecture

DETR extracts CNN features from the input image using a backbone CNN network. The feature map is flattened and fed into the encoder after adding positional encodings. The decoder takes the encoded image features and a set of learnable object queries as inputs. The decoder output embeddings correspond to the objects to be detected in the image. The output of the decoder is passed through two prediction heads: a classification head and a box head.


Segmentation

Semantic Segmentation

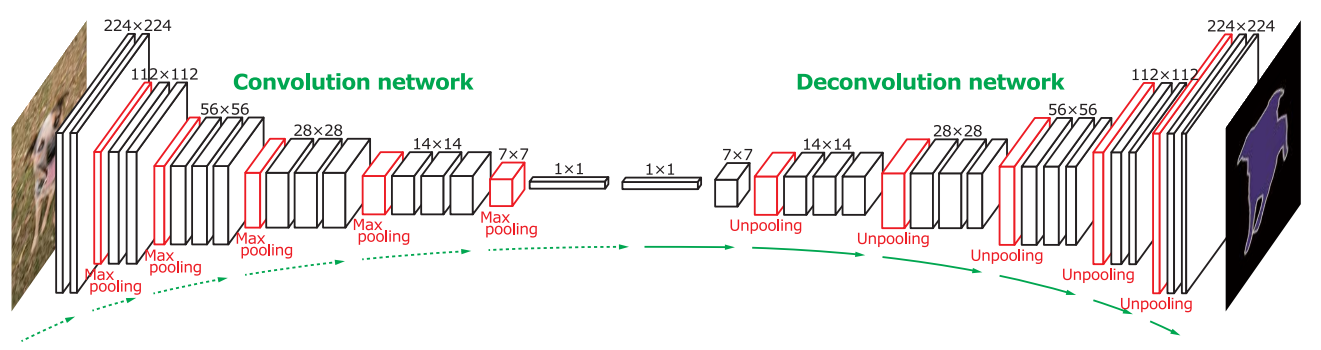

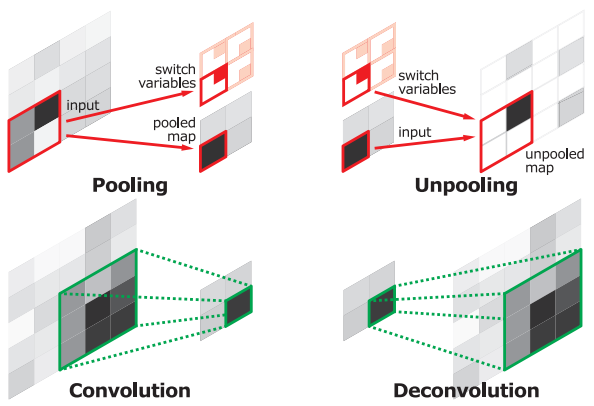

Deconvolutional Network

Definition

Deconvolutional Network uses an autoencoder-like CNN structure. In the upsampling stage, the model uses deconvolution layers.

Deconvolutional Layer

Deconvolutional layers are used to increase the spatial dimensions of input features. Each value of the input feature map is used as a scalar that multiplies the learnable deconvolutional filter, and the scaled filters are placed in the output and summed where they overlap. With strides greater than 1, the output of the deconvolutional layer is spatially enlarged.
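A one-dimensional, single-channel sketch of a deconvolutional (transposed convolution) layer, assuming no padding. Each input value scales a copy of the kernel, the copies are placed `stride` positions apart, and overlapping contributions are summed, so an input of length n produces an output of length (n - 1) · stride + k. The function name is hypothetical.

```python
def transposed_conv1d(x, kernel, stride):
    # each input value scales the kernel; copies are placed `stride` apart and summed on overlap
    k = len(kernel)
    out = [0.0] * ((len(x) - 1) * stride + k)
    for i, v in enumerate(x):
        for j, w in enumerate(kernel):
            out[i * stride + j] += v * w
    return out

print(transposed_conv1d([1, 2, 3], [1, 1, 1], 2))  # [1.0, 1.0, 3.0, 2.0, 5.0, 3.0, 3.0]
```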


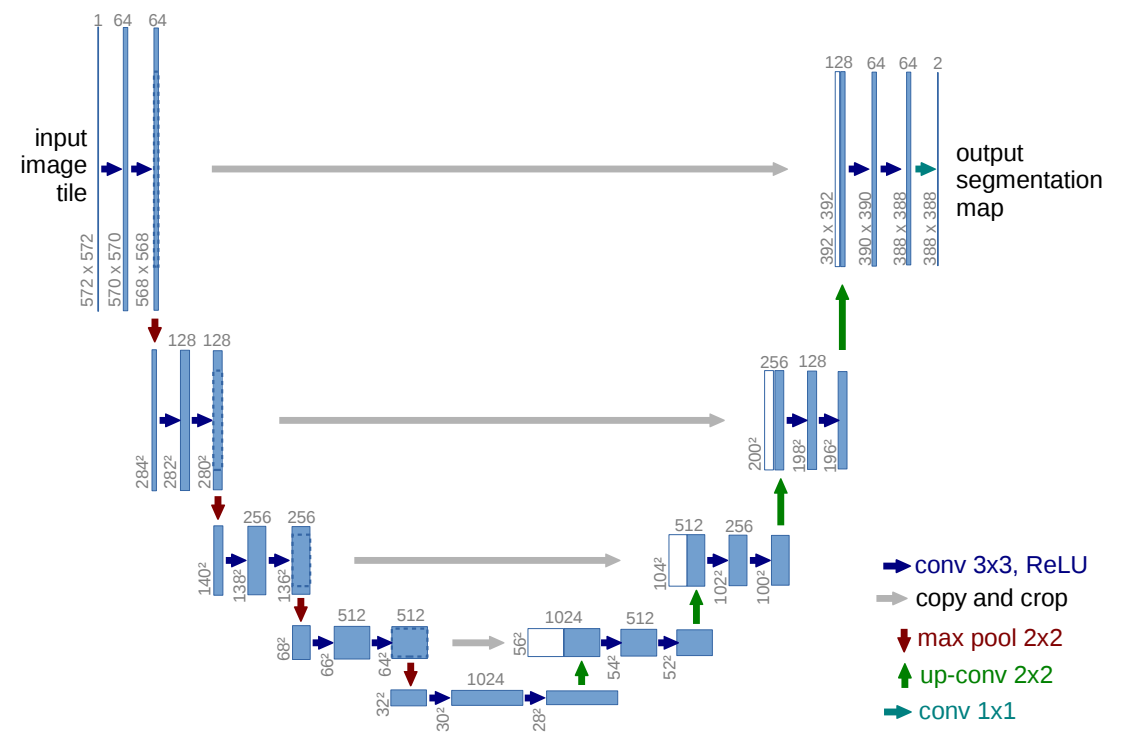

U-Net

Definition

U-Net is an encoder-decoder structured CNN used for image segmentation.

Architecture

Encoder-Decoder

The encoder extracts spatial patterns using regular convolutional layers. Each downsampling step doubles the number of channels. The decoder upsamples the input feature map with deconvolution that halves the number of channels.

Skip Connections

The feature maps from the layers of the encoder are cropped and concatenated with the upsampled feature maps in the layers of the decoder. This allows the network to combine low-level features with high-level features, preserving detail.


Instance Segmentation

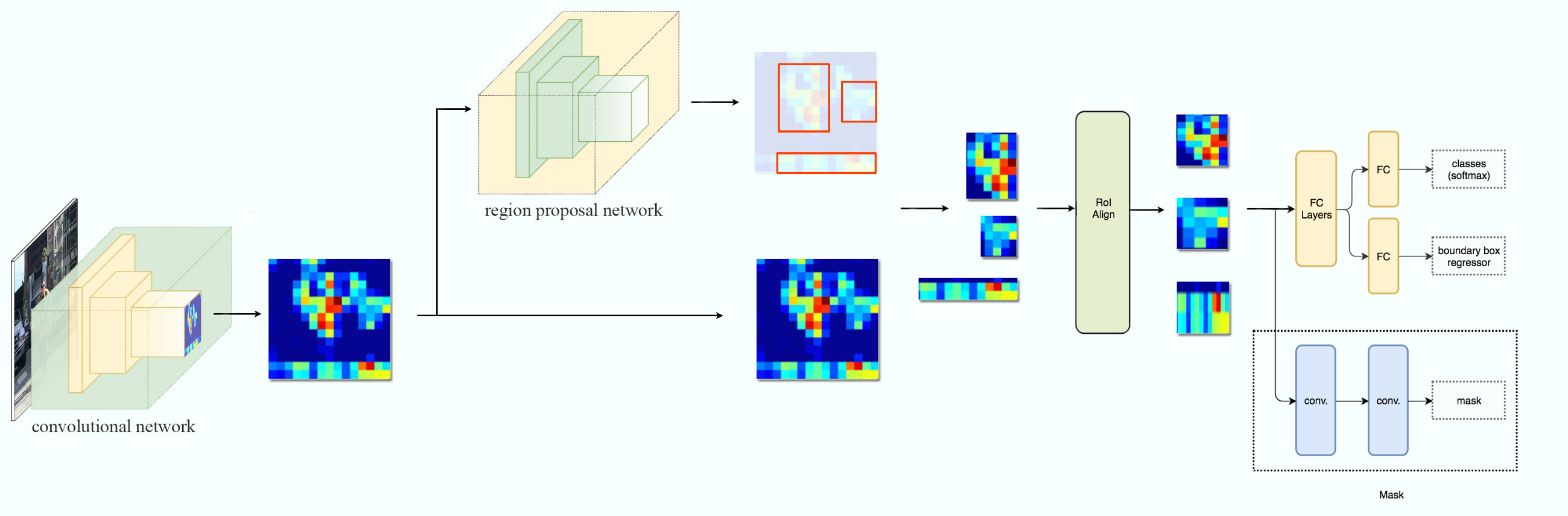

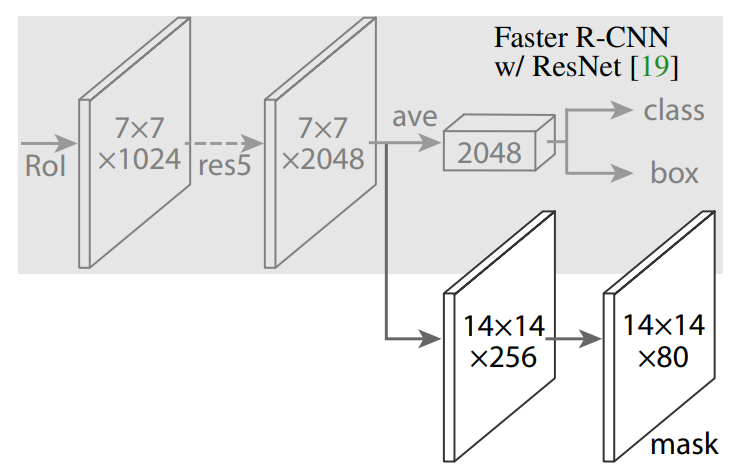

Mask R-CNN

Definition

Mask R-CNN extends Faster R-CNN by adding a mask prediction branch for instance segmentation problems.

Architecture

RoI Align

Mask R-CNN replaces the RoI pooling used in Faster R-CNN with RoI Align. RoI Align avoids quantizing the RoI coordinates so that the extracted features stay accurately aligned with the input image. It computes the value of each sampling point by bilinear interpolation from the nearby grid points on the feature map.
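The bilinear sampling at the heart of RoI Align can be sketched for one bin of one channel. The Mask R-CNN paper samples four regular points per bin and aggregates them by max or average; this sketch uses the average. It assumes the sampling points lie inside the feature map, and the function names are hypothetical.

```python
import math

def bilinear(feature, y, x):
    # interpolate a 2D list at continuous (y, x) from the four surrounding grid points
    y0, x0 = math.floor(y), math.floor(x)
    y1 = min(y0 + 1, len(feature) - 1)
    x1 = min(x0 + 1, len(feature[0]) - 1)
    dy, dx = y - y0, x - x0
    top = feature[y0][x0] * (1 - dx) + feature[y0][x1] * dx
    bottom = feature[y1][x0] * (1 - dx) + feature[y1][x1] * dx
    return top * (1 - dy) + bottom * dy

def roi_align_bin(feature, y_start, x_start, bin_h, bin_w, samples=2):
    # average of samples x samples regularly spaced points in one bin; no coordinate rounding
    vals = []
    for a in range(samples):
        for b in range(samples):
            y = y_start + (a + 0.5) * bin_h / samples
            x = x_start + (b + 0.5) * bin_w / samples
            vals.append(bilinear(feature, y, x))
    return sum(vals) / len(vals)

f = [[0.0, 1.0], [2.0, 3.0]]  # value = 2 * y + x
print(bilinear(f, 0.5, 0.5))                   # 1.5
print(roi_align_bin(f, 0.0, 0.0, 1.0, 1.0))    # 1.5
```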

Mask Head

Mask prediction head generates a binary mask for each RoI using the aligned feature.

The mask head is composed of convolutional layers and deconvolutional layers.


Transformer-Based Segmentation Models

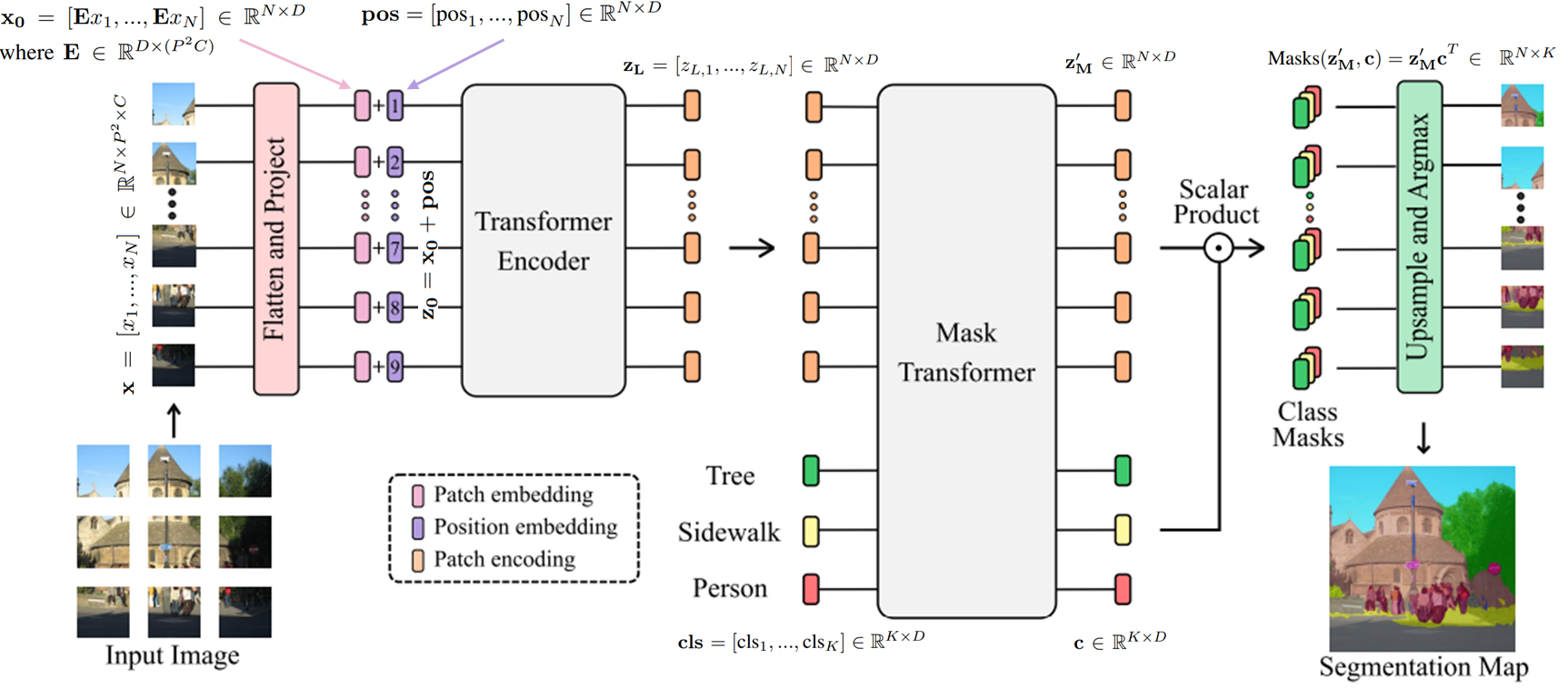

Segmenter

Definition

Segmenter is a Transformer-based semantic segmentation model.

Architecture

The encoder of Segmenter has a ViT architecture.

The decoder takes the output of the encoder and the class tokens as inputs. The embedded patch token matrix ($N \times D$) and the transposed class embedding matrix ($D \times K$) are multiplied, and the Softmax Function is applied over the class dimension to obtain pixel-wise class scores, where $N$ is the number of patches, $D$ is the channel dimension, and $K$ is the number of classes.
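The core of the Segmenter decoder output is a product of patch embeddings (N × D) with class embeddings (K × D, transposed) followed by a softmax over the K classes. This sketch shows only that step on toy vectors; the normalization and upsampling the paper applies around it are omitted, and the function name is hypothetical.

```python
import math

def segmenter_masks(patch_emb, class_emb):
    # patch_emb: N x D, class_emb: K x D -> N x K scores, softmax over the class dimension
    out = []
    for z in patch_emb:
        scores = [sum(a * b for a, b in zip(z, c)) for c in class_emb]
        m = max(scores)
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out

patches = [[1.0, 0.0], [0.0, 1.0]]                 # N = 2, D = 2
classes = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]   # K = 3
masks = segmenter_masks(patches, classes)
print([max(range(3), key=lambda k: row[k]) for row in masks])  # [0, 1]
```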


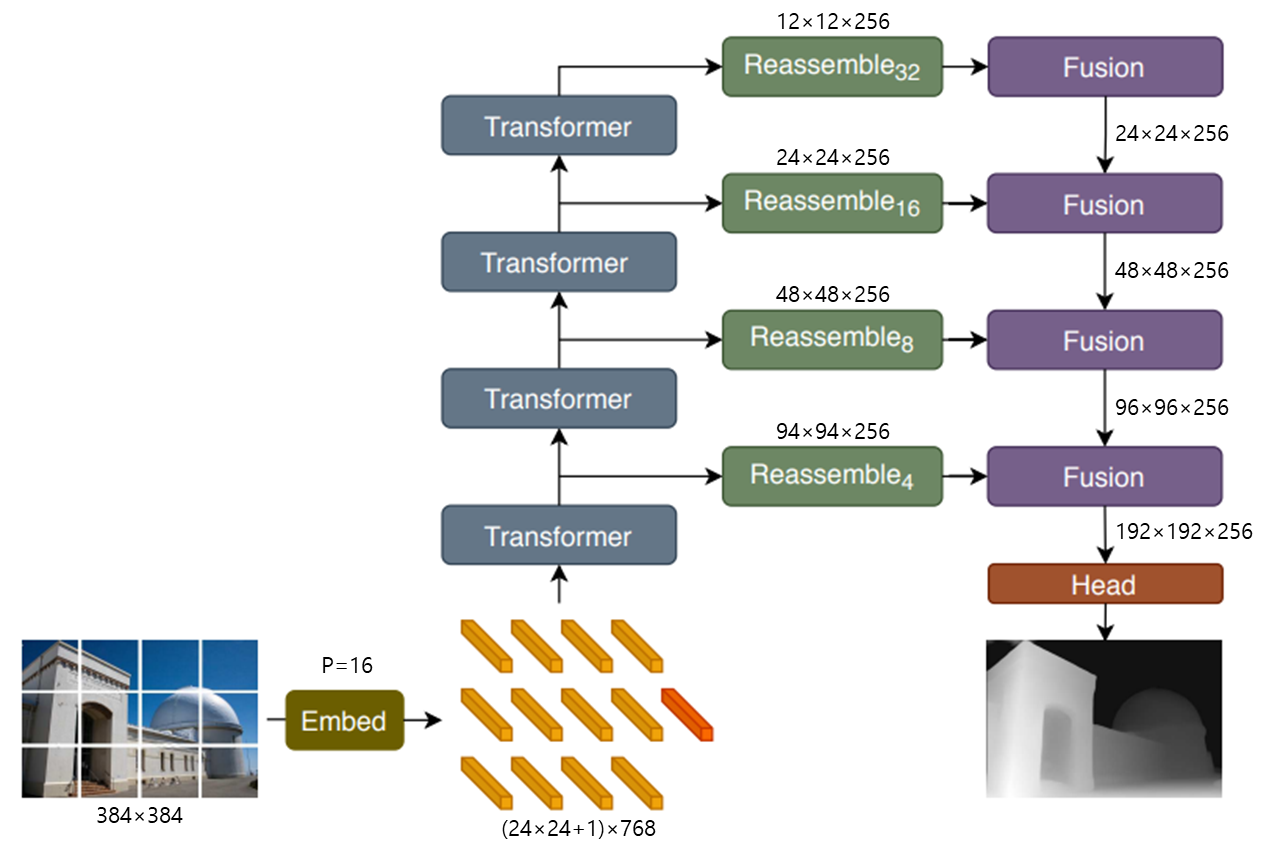

Dense Prediction Transformer

Definition

Dense prediction transformer (DPT) reassembles features at multiple resolutions, unlike Segmenter, which only uses fixed-size patches.

Architecture

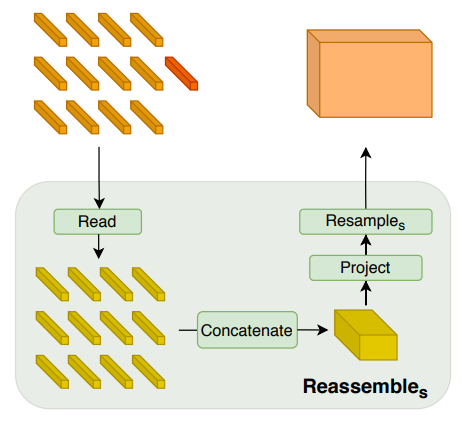

Reassemble

The reassemble operation reshapes the flattened feature vectors back into image-like dimensions, then changes the spatial and feature sizes using a $1 \times 1$ convolutional layer followed by a (strided) $3 \times 3$ convolution or deconvolutional layer. In the process, the CLS token is handled specially (ignored, added, or concatenated and projected).

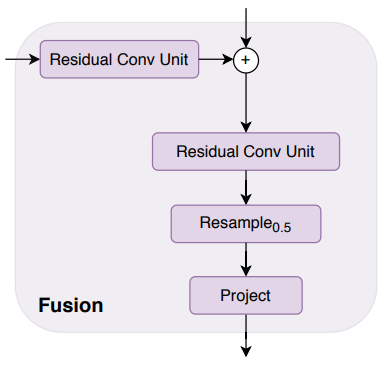

Residual Convolutional Unit

The output of the transformer passes through the residual convolutional unit before it is combined in the fusion module.

Fusion

The fusion module concatenates the feature of the previous layer with the output of the residual convolutional unit, doubles the size of the feature map, and brings the channel size back to the input size.


Metric Learning

Discounted Cumulative Gain

Definition

Discounted cumulative gain (DCG) is a measure of ranking quality in information retrieval.

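$$\mathrm{DCG}_p = \sum_{i=1}^{p} \frac{rel_i}{\log_2(i+1)}$$

where $rel_i$ is the graded relevance of the result at position $i$, and $p$ is the number of ranked results considered.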

Normalized DCG

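DCG is often normalized so that it is comparable across queries, giving Normalized DCG (NDCG): $\mathrm{NDCG}_p = \mathrm{DCG}_p / \mathrm{IDCG}_p$, where the ideal DCG $\mathrm{IDCG}_p$ is the maximum possible DCG of the result set, obtained by sorting the results by relevance.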
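A sketch of DCG with linear gain, $\mathrm{DCG}_p = \sum_{i=1}^{p} rel_i / \log_2(i+1)$, and of NDCG as DCG divided by the DCG of the relevance-sorted (ideal) ordering. Some libraries use the gain $2^{rel_i} - 1$ instead; the linear gain here is a choice, and the function names are hypothetical. The relevance list `[3, 2, 3, 0, 1, 2]` is a standard textbook example with DCG ≈ 6.861.

```python
import math

def dcg(relevances, p=None):
    # DCG_p = sum_{i=1}^{p} rel_i / log2(i + 1); positions are 1-indexed
    rels = relevances[:p] if p is not None else relevances
    return sum(rel / math.log2(i + 1) for i, rel in enumerate(rels, start=1))

def ndcg(relevances, p=None):
    # normalize by the DCG of the ideal (relevance-sorted) ordering
    ideal = dcg(sorted(relevances, reverse=True), p)
    return dcg(relevances, p) / ideal if ideal > 0 else 0.0

print(round(dcg([3, 2, 3, 0, 1, 2]), 3))  # 6.861
print(ndcg([3, 3, 2, 2, 1, 0]))           # 1.0, already ideally ordered
```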


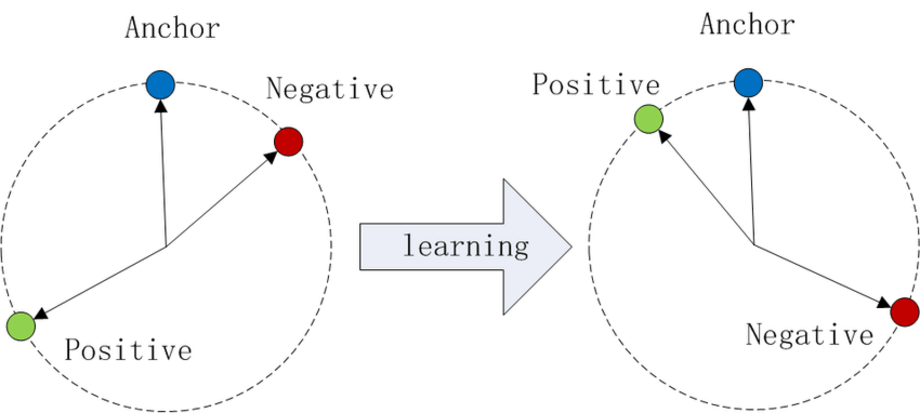

Triplet Loss

Definition

Triplet loss is a Loss Function where a reference input (anchor) is compared to a matching input (positive) and a non-matching input (negative). The distance from the anchor to the positive is minimized, and the distance from the anchor to the negative input is maximized.

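$$\mathcal{L}(a, p, n) = \max\left(\lVert f(a) - f(p) \rVert^2 - \lVert f(a) - f(n) \rVert^2 + \alpha,\ 0\right)$$

where $f$ is the embedding function, $a$, $p$, and $n$ are the anchor, positive, and negative inputs, and $\alpha$ is a margin between positive and negative pairs.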
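A direct sketch of the triplet loss on precomputed embeddings, $\max(\lVert f(a) - f(p) \rVert^2 - \lVert f(a) - f(n) \rVert^2 + \alpha, 0)$. The embeddings are assumed to be already produced by the network, and the margin `alpha = 0.2` is an illustrative value; the function names are hypothetical.

```python
def sq_dist(a, b):
    # squared Euclidean distance between two embeddings
    return sum((x - y) ** 2 for x, y in zip(a, b))

def triplet_loss(anchor, positive, negative, alpha=0.2):
    # max(||f(a) - f(p)||^2 - ||f(a) - f(n)||^2 + alpha, 0)
    return max(sq_dist(anchor, positive) - sq_dist(anchor, negative) + alpha, 0.0)

a, p = [0.0, 0.0], [1.0, 0.0]
print(triplet_loss(a, p, [3.0, 0.0]))  # 0.0: the negative is already far enough away
print(triplet_loss(a, p, [0.5, 0.0]))  # about 0.95: the negative is closer than the positive
```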


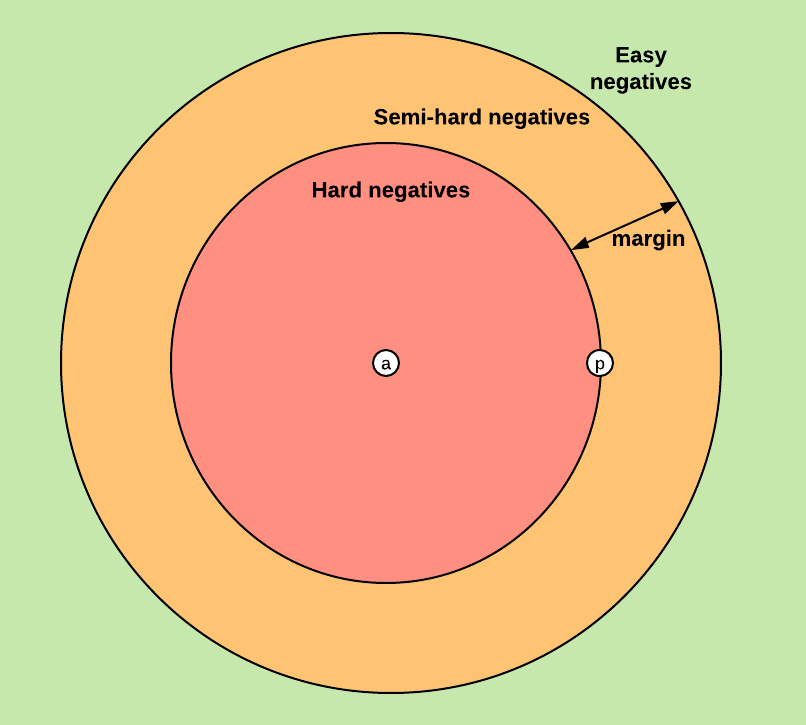

Negative Mining

Definition

In the context of metric learning, negative mining involves selecting the most informative negative examples to train the model well. Instead of randomly sampling negative examples, we focus on hard negatives, those that are closest to the anchor in the embedding space but belong to a different class. For each anchor, compute distances to all or a subset of negative examples, and select the negative examples that are closest to the anchor.

Online Negative Mining

Online negative mining selects negatives from the current batch during training, instead of using negatives assigned in advance.

Semi-Hard Negative Mining

Semi-hard negative mining selects negative examples that are farther from the anchor than the positive example, but still within a certain margin.

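If $\lVert f(a) - f(p) \rVert^2 < \lVert f(a) - f(n) \rVert^2 < \lVert f(a) - f(p) \rVert^2 + \alpha$, then $n$ is considered a semi-hard negative.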
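Hard and semi-hard negative selection can be sketched for a single anchor. This assumes squared Euclidean distances on precomputed embeddings and a margin `alpha = 0.2` chosen for illustration; the function names are hypothetical. The three negatives below are constructed to be hard, semi-hard, and easy, respectively.

```python
def sq_dist(a, b):
    # squared Euclidean distance between two embeddings
    return sum((x - y) ** 2 for x, y in zip(a, b))

def hardest_negative(anchor, negatives):
    # the negative closest to the anchor in the embedding space
    return min(negatives, key=lambda n: sq_dist(anchor, n))

def semi_hard_negatives(anchor, positive, negatives, alpha=0.2):
    # negatives farther than the positive, but still inside the margin alpha
    d_ap = sq_dist(anchor, positive)
    return [n for n in negatives if d_ap < sq_dist(anchor, n) < d_ap + alpha]

anchor, positive = [0.0, 0.0], [1.0, 0.0]
negatives = [[0.5, 0.0], [1.05, 0.0], [2.0, 0.0]]  # hard, semi-hard, easy
print(hardest_negative(anchor, negatives))               # [0.5, 0.0]
print(semi_hard_negatives(anchor, positive, negatives))  # [[1.05, 0.0]]
```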


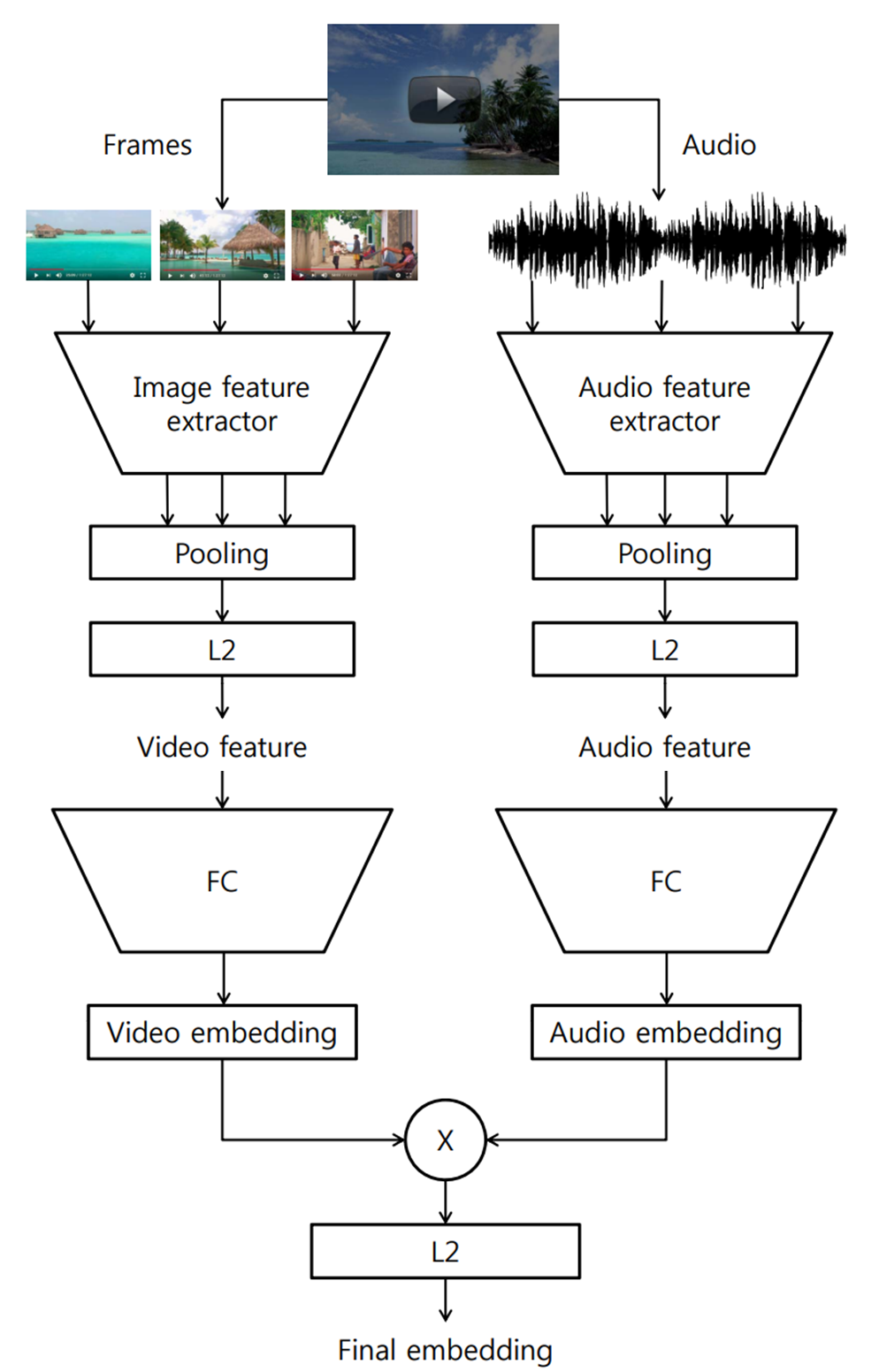

Collaborative Deep Metric Learning

Definition

Collaborative Deep Metric Learning (CDML) constructs a simple graph whose nodes are content items and whose edges indicate the existence of a relationship (e.g. co-watched videos). The model trains the embedding by minimizing the Triplet Loss, where the negative samples are randomly selected within the batch.


Pairwise Loss

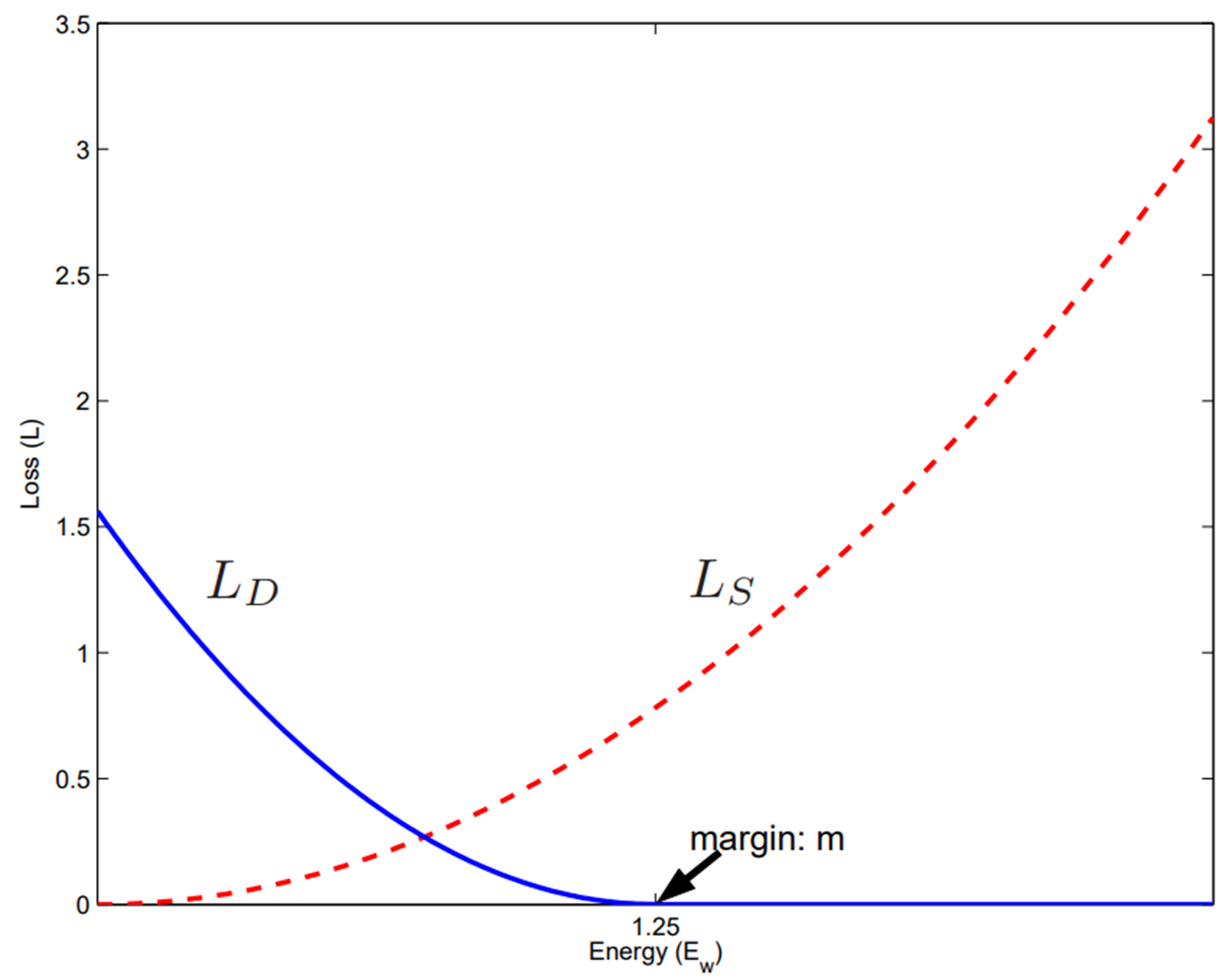

Definition

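The pairwise loss compares pairs of items and determines their relative order, instead of predicting absolute errors for individual items.

$$\mathcal{L}(W) = y \cdot \frac{1}{2} D_W^2 + (1 - y) \cdot \frac{1}{2} \max(0, m - D_W)^2$$

where $y$ is a binary label ($y = 1$ if the pair is similar, $y = 0$ if it is dissimilar), $D_W$ is a distance metric between the pair parametrized by $W$, and $m > 0$ is a margin.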
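A sketch of this margin-based pairwise loss on two precomputed embeddings, using Euclidean distance for $D_W$, the label convention y = 1 for similar and y = 0 for dissimilar pairs, and an illustrative margin of 1.0. The function name is hypothetical. Similar pairs are pulled together, and dissimilar pairs are pushed apart only while they are closer than the margin.

```python
import math

def pairwise_loss(emb1, emb2, y, margin=1.0):
    # y = 1 for a similar pair, y = 0 for a dissimilar pair; D is the Euclidean distance
    d = math.sqrt(sum((a - b) ** 2 for a, b in zip(emb1, emb2)))
    return y * 0.5 * d ** 2 + (1 - y) * 0.5 * max(0.0, margin - d) ** 2

print(pairwise_loss([0.0, 0.0], [3.0, 4.0], y=1))  # 12.5: similar pair far apart
print(pairwise_loss([0.0, 0.0], [3.0, 4.0], y=0))  # 0.0: dissimilar pair beyond the margin
print(pairwise_loss([0.0, 0.0], [0.5, 0.0], y=0))  # 0.125: dissimilar pair inside the margin
```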


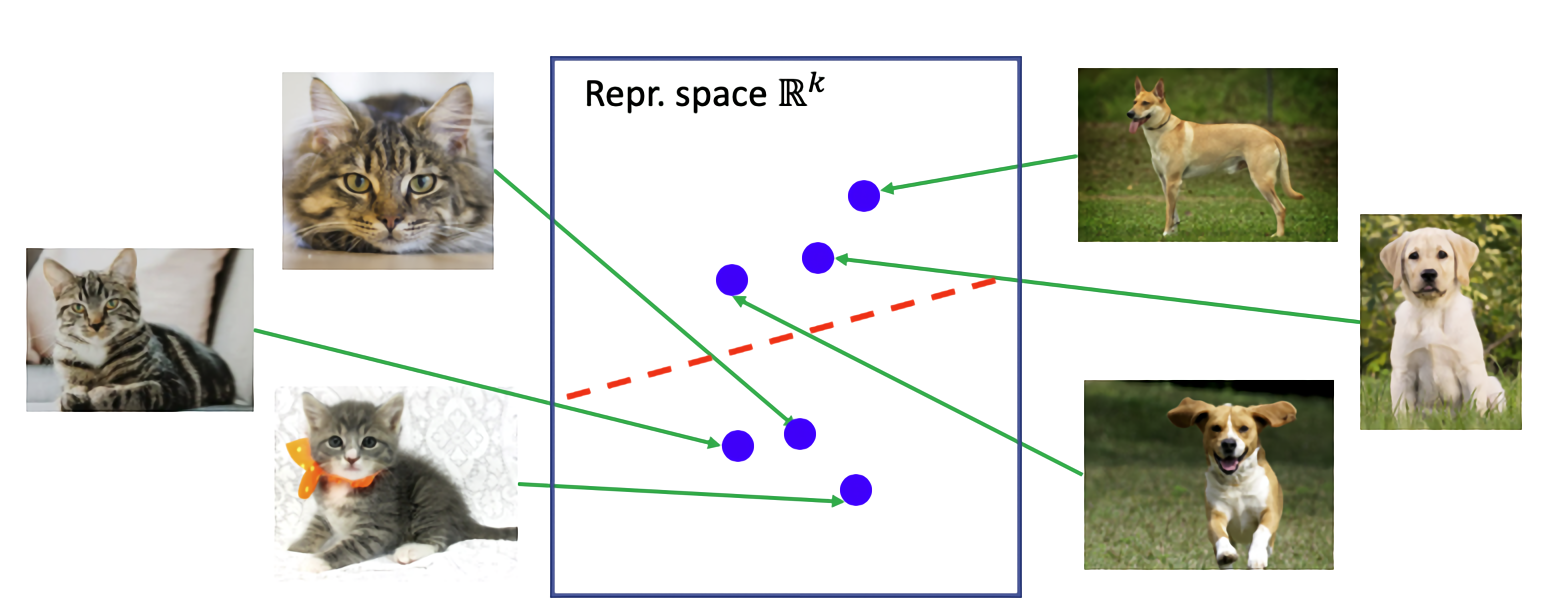

Contrastive Learning

Definition

Contrastive learning is a kind of metric learning that learns representations of data by comparing similar and dissimilar samples. The model is trained to recognize that certain data points are related (positive pairs) or not (negative pairs). It helps the model learn meaningful features and representations without requiring explicit labels for every data point.


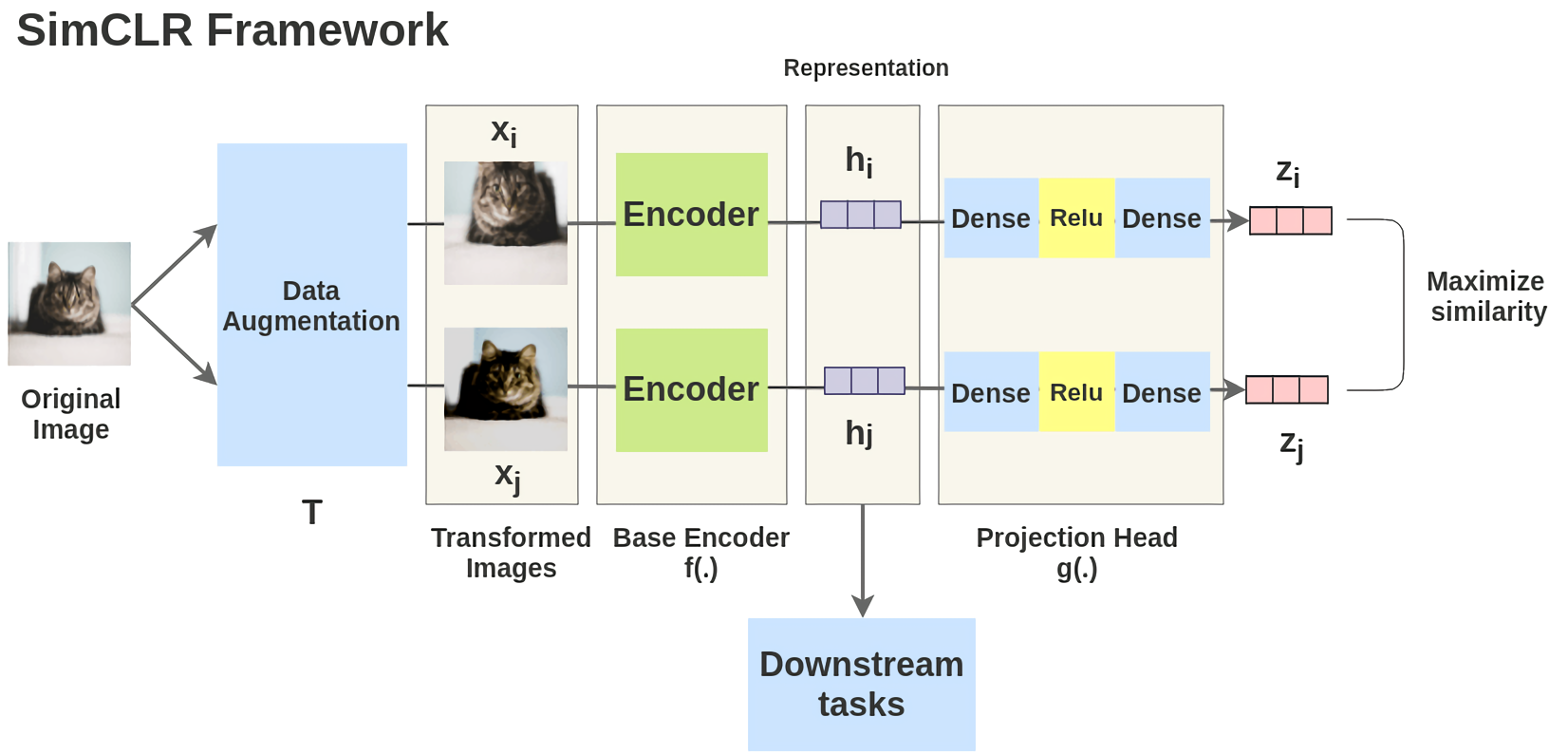

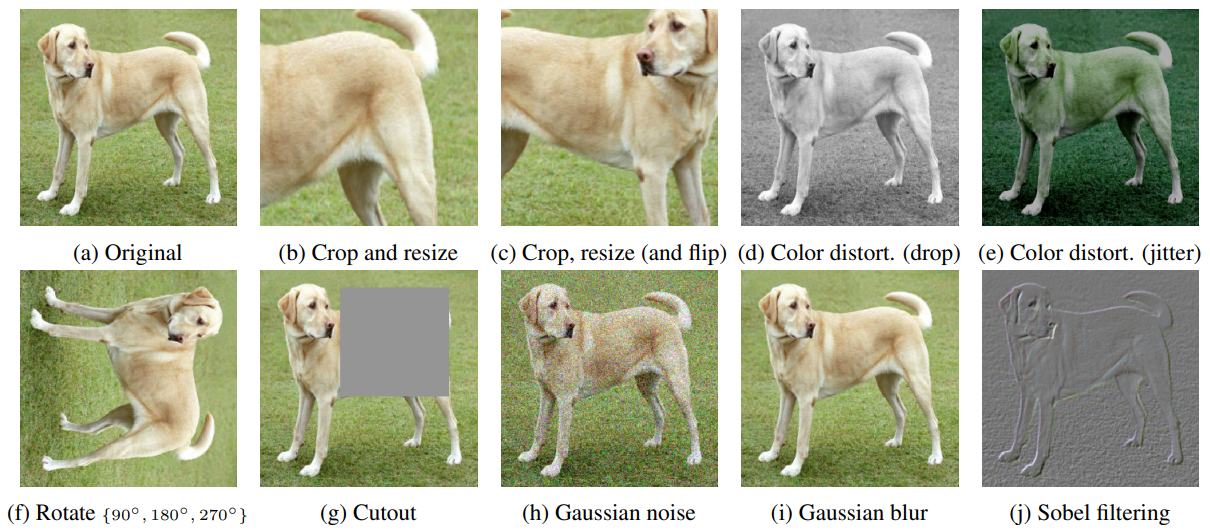

SimCLR

Definition

SimCLR is a self-supervised visual embedding model trained with a contrastive loss. Data augmentation is applied twice to each image, creating two augmented views. The two views of the same image form a positive pair, and the other pairs in the minibatch are used as negative pairs.

Architecture

Data Augmentation Operators

Contrastive Loss
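The contrastive loss used by SimCLR is the normalized temperature-scaled cross-entropy (NT-Xent): for a positive pair (i, j) among 2N augmented views, ℓ(i, j) = -log[exp(sim(z_i, z_j)/τ) / Σ_{k≠i} exp(sim(z_i, z_k)/τ)], with cosine similarity as sim. The sketch below assumes the embeddings were already produced by the encoder and projection head, stores the two views of image k at indices 2k and 2k+1, and uses τ = 0.5 as an illustrative value; the function names are hypothetical.

```python
import math

def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def nt_xent(z, tau=0.5):
    # z holds 2N embeddings; z[2k] and z[2k + 1] are the two views of image k
    n = len(z)
    total = 0.0
    for i in range(n):
        j = i ^ 1  # index of the positive partner (0 <-> 1, 2 <-> 3, ...)
        denom = sum(math.exp(cosine(z[i], z[k]) / tau) for k in range(n) if k != i)
        total += -math.log(math.exp(cosine(z[i], z[j]) / tau) / denom)
    return total / n  # average over all 2N anchors

aligned = [[1.0, 0.0], [1.0, 0.1], [0.0, 1.0], [0.1, 1.0]]     # views of the same image agree
misaligned = [[1.0, 0.0], [0.0, 1.0], [0.0, 1.0], [1.0, 0.0]]  # views of the same image disagree
print(nt_xent(aligned) < nt_xent(misaligned))  # True
```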


Multimodal Learning

Image Captioning Task

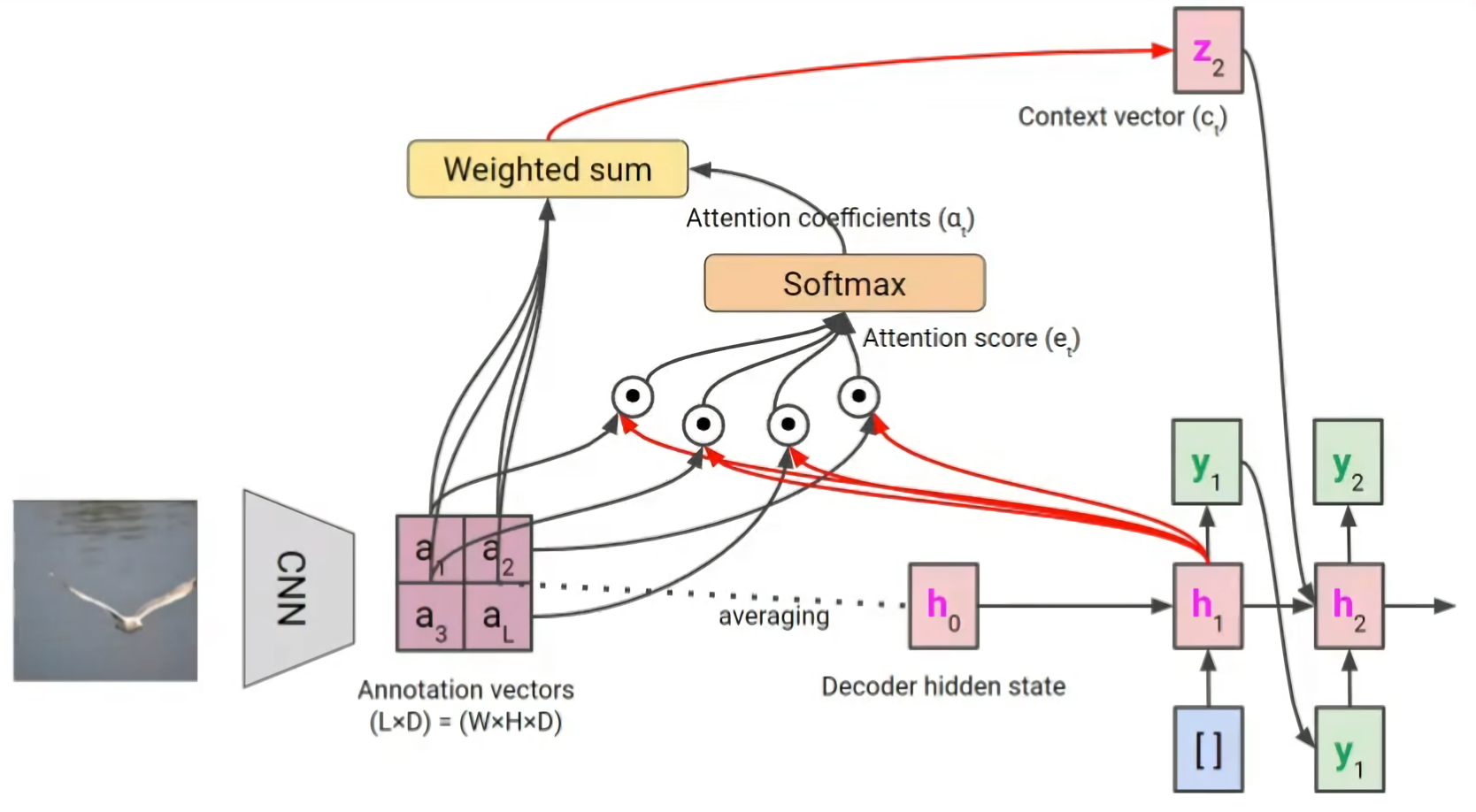

Show, Attend, and Tell

Definition

Show, attend, and tell (SAT) is an Attention-based image captioning model.

Architecture

The model has an LSTM structure. A feature map is extracted from the input image using a CNN and fed into the attention LSTM, where the query (Q) is the previous hidden state, and the key (K) and value (V) are the vectors of the feature map.


Video Captioning Task

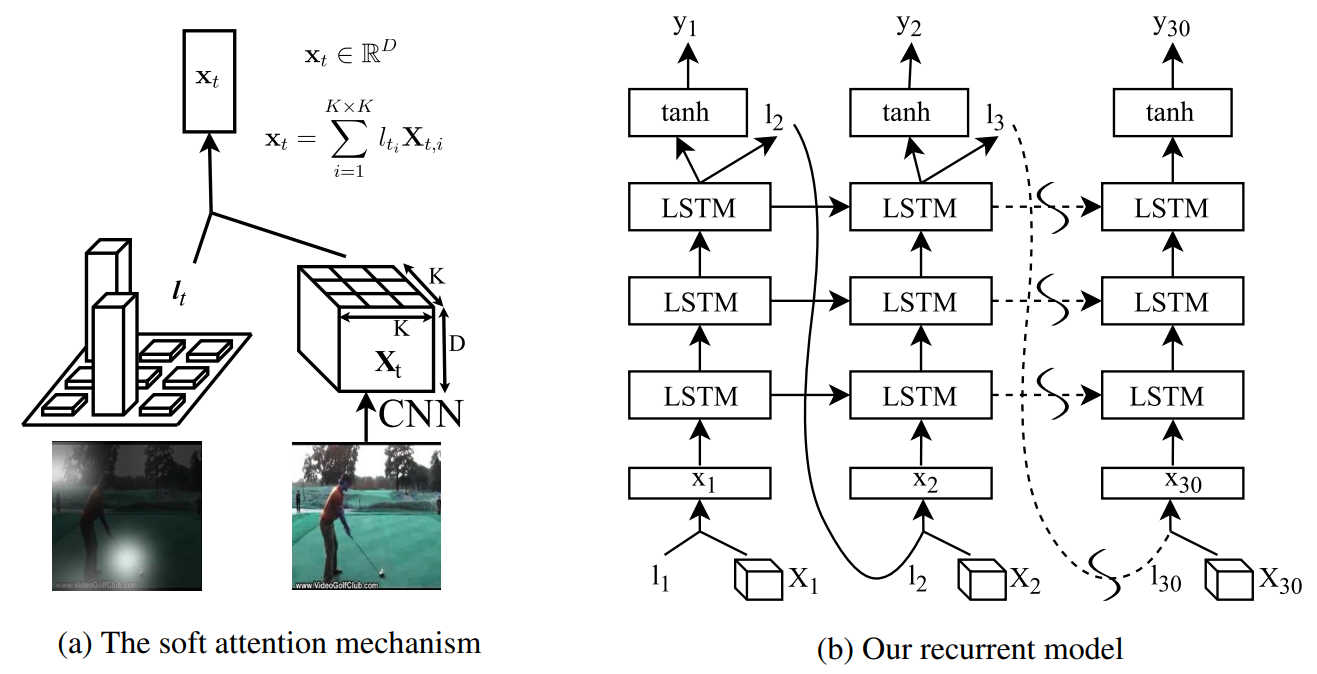

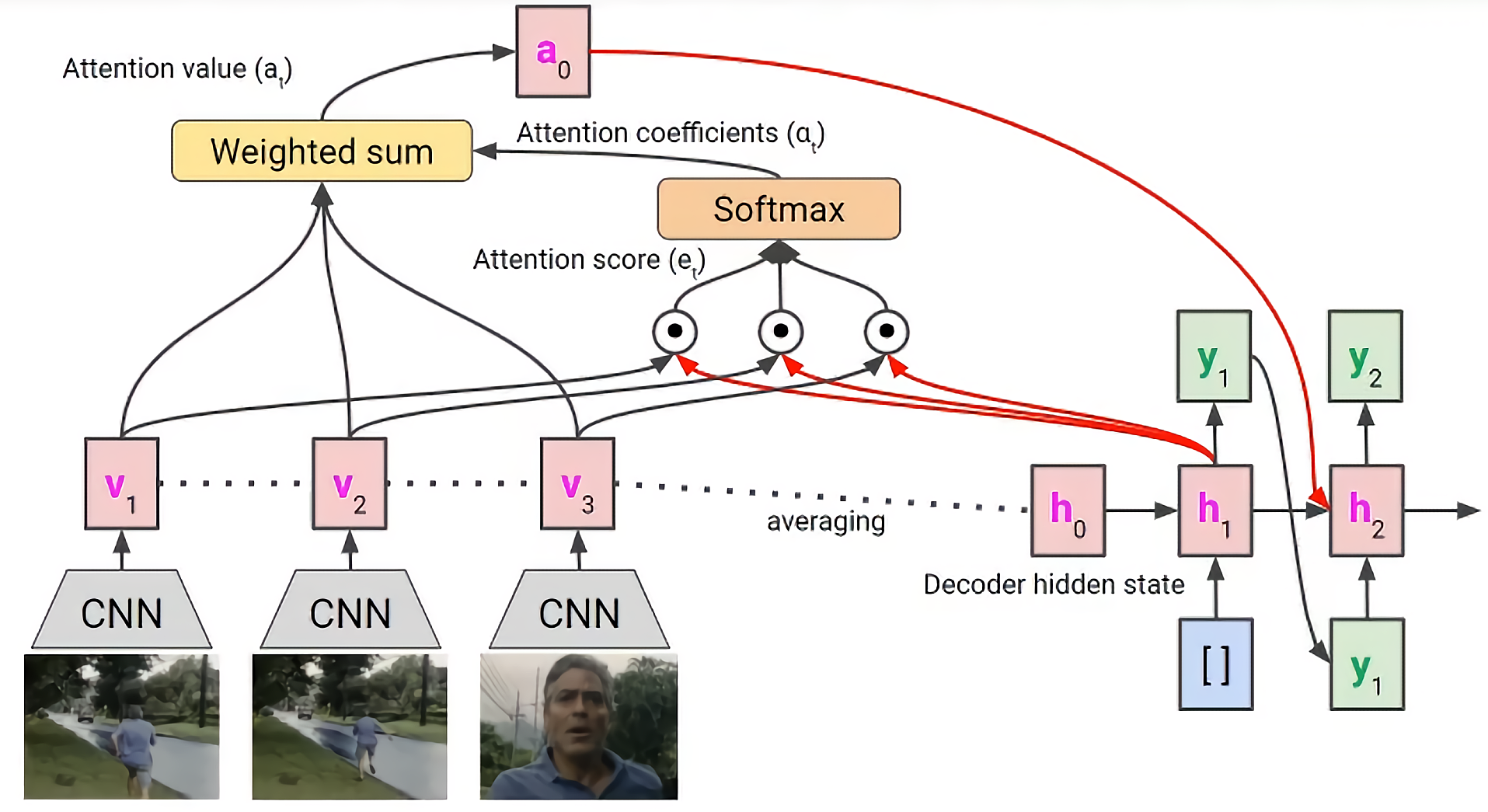

Describing Videos by Exploiting Temporal Structure

Definition

The model uses an LSTM architecture with Attention for the video captioning task.

Architecture

The model has an LSTM structure. Feature maps are extracted from the input frames using a CNN and fed into the attention LSTM, where the query (Q) is the previous hidden state, and the key (K) and value (V) are the extracted feature maps.


Transformer-Based Image-Text Models

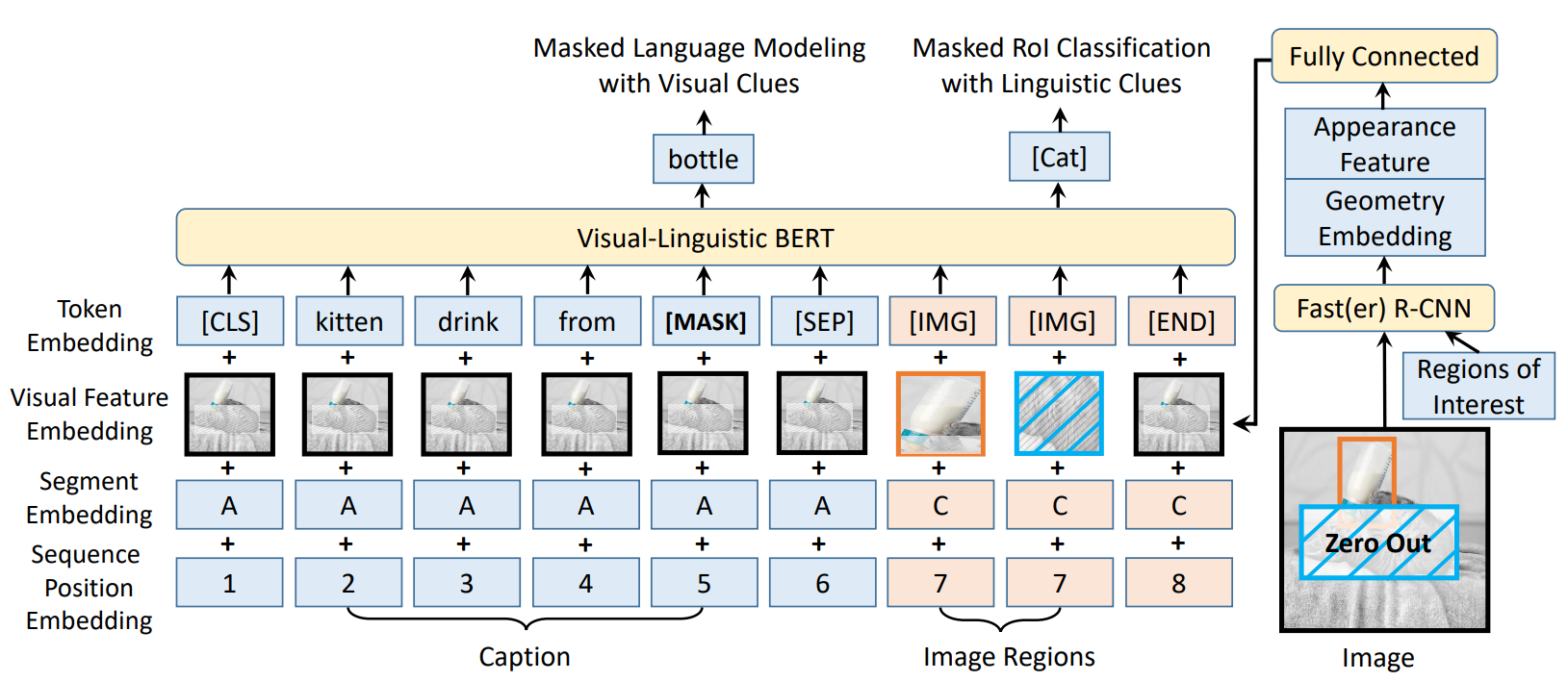

Visual-Linguistic-BERT

Definition

Visual-Linguistic-BERT (VL-BERT) extends the BERT architecture to visual-linguistic tasks that take both images and text as input.

Architecture

The model appended visual feature embedding to the BERT architecture. The text part is trained in the same way as the BERT’s masked language modeling, and the image part is trained to estimate the label of the masked visual feature.

Visual Feature Embedding

The visual feature embeddings of the text tokens are obtained from the entire input image, and those of the image tokens are obtained from the objects detected by an object detection model (Faster R-CNN).


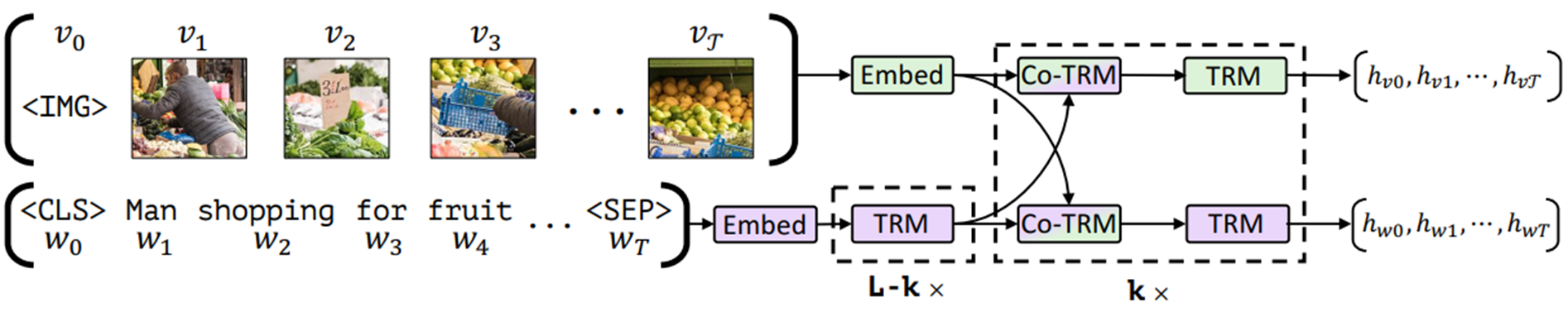

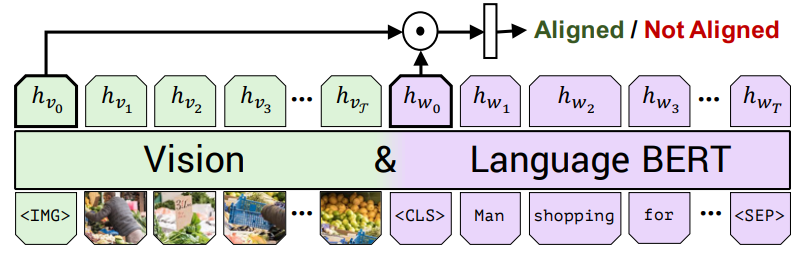

Vision-and-Language BERT

Definition

Vision-and-Language BERT (ViLBERT) extends the BERT architecture to handle both visual and textual inputs.

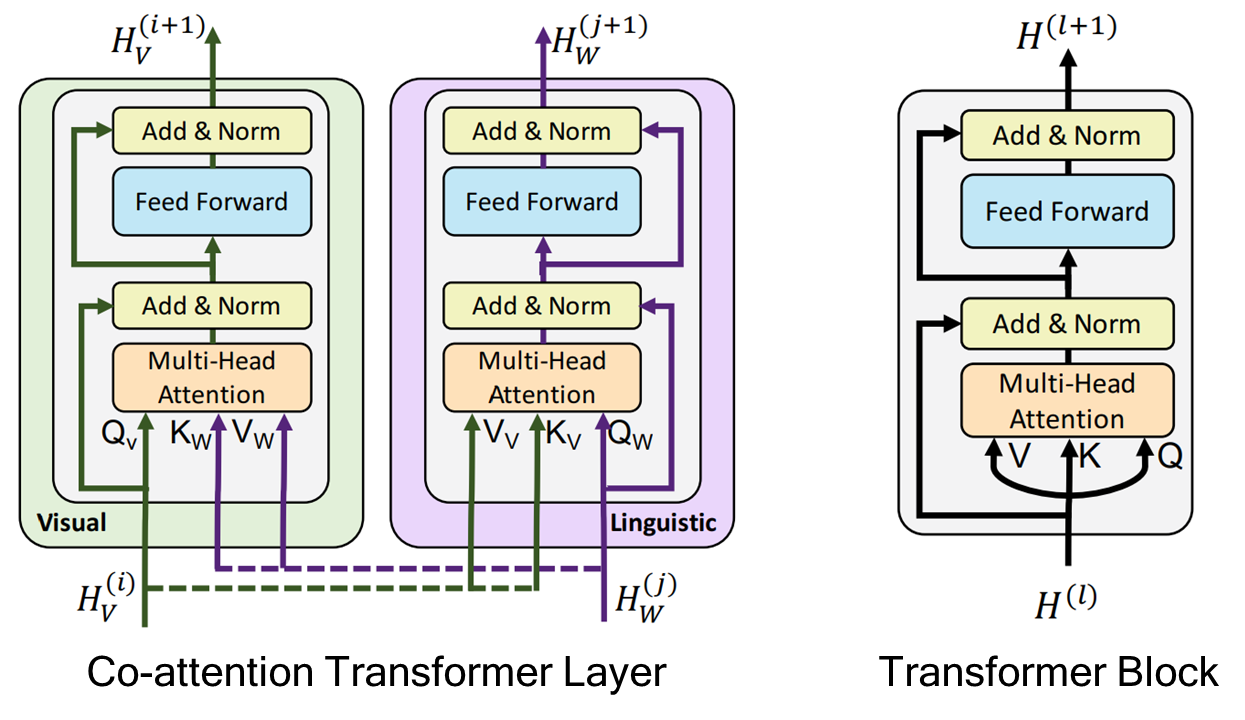

Architecture

ViLBERT consists of two parallel BERT-like streams: a visual stream and a textual stream. The two streams are connected through co-attention transformer layers. The tokens of the visual stream are objects detected by an object detection model (Faster R-CNN). ViLBERT is pre-trained on image-caption pairs using two main tasks: masked multimodal learning and multimodal alignment prediction.

Co-Attention Transformer Layer

The co-attention transformer layer allows for bidirectional interaction between the visual and textual streams. In the layer, each stream uses the feature of another stream as key (K) and value (V).

Masked Multimodal Learning

The text stream is trained in the same way as the BERT’s masked language modeling, and the image part is trained to estimate the label of the masked visual feature.

Multimodal Alignment Prediction

The model takes image-text pairs as input and determines if the image and text pair match.


Transformer-based Video-Text Models

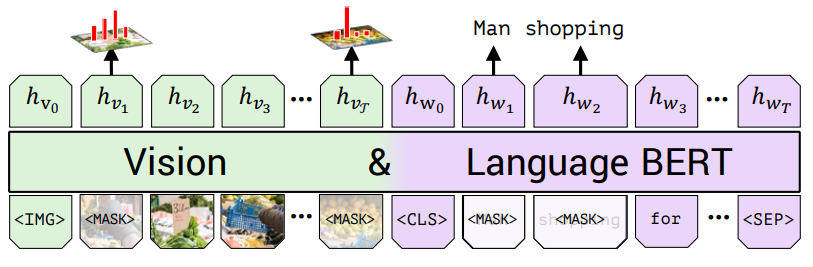

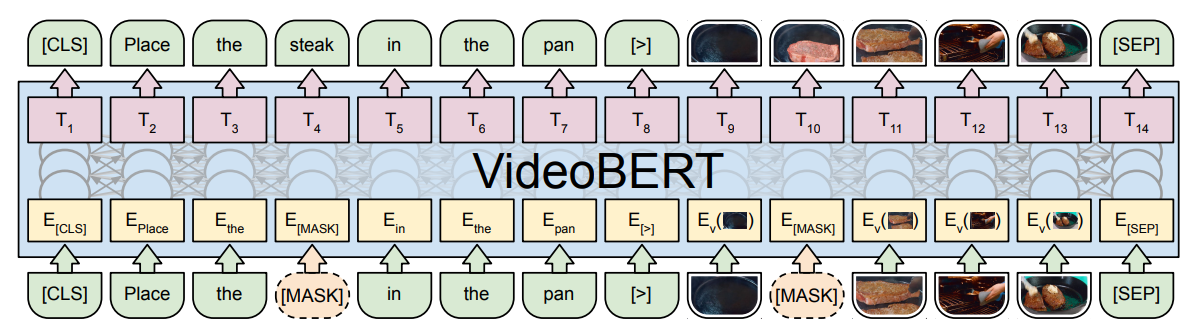

VideoBERT

Definition

VideoBERT extends the BERT architecture to handle both video and text data.

Architecture

The frames are sampled from an input video and the CNN (S3D) features of them are extracted and used as the visual tokens.

Linguistic-Visual Alignment Task

The model takes video segment-text pairs as input and determines if the pairs match.

Masked Language Modeling (MLM)

The textual part is trained in the same way as BERT's masked language modeling.

Masked Frame Modeling (MFM)

The visual part is trained to predict the cluster of the masked visual tokens, where the clusters are assigned by K-Means Clustering.


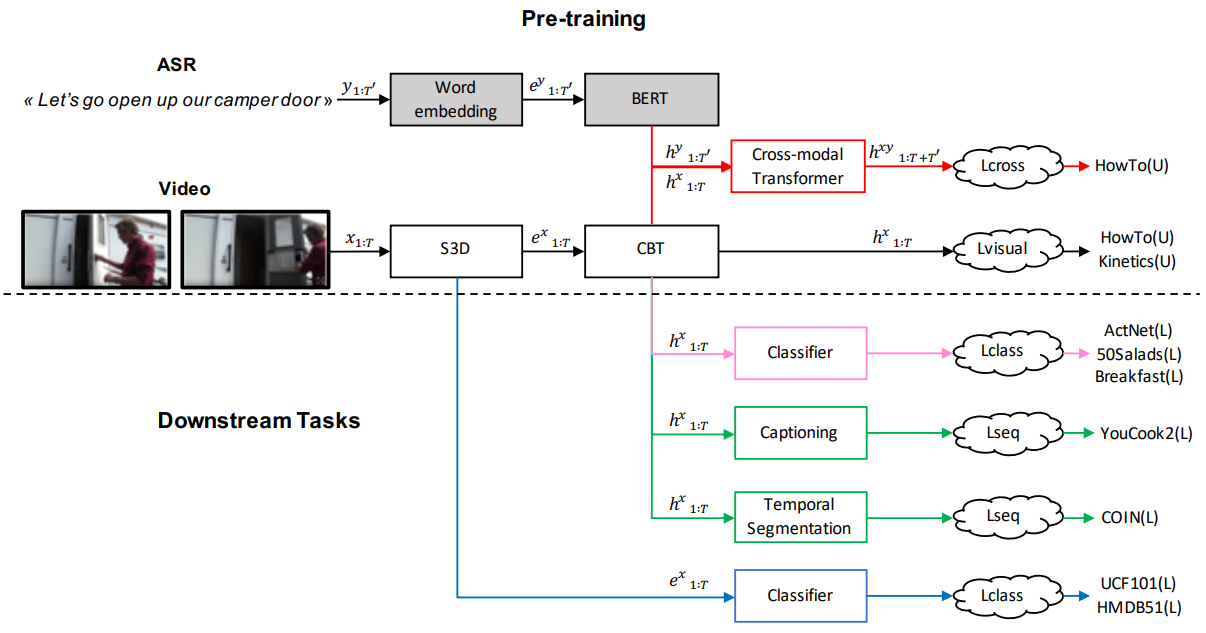

Contrastive Bidirectional Transformer

Definition

Contrastive Bidirectional Transformer (CBT) applied Contrastive Learning to the training of BERT for the video captioning task.

Architecture

CBT consists of two streams: a video stream and a textual stream. The two streams extract frame and word features, respectively, and those features are concatenated and passed through the cross-modal transformer to make an estimation. The text stream uses a pre-trained BERT, and the video stream is trained through Contrastive Learning: two frame features from the same video form a positive pair, otherwise a negative pair.

Cross-Modal Transformer

The cross-modal transformer takes video segment-text pairs as input and determines whether the pairs match.


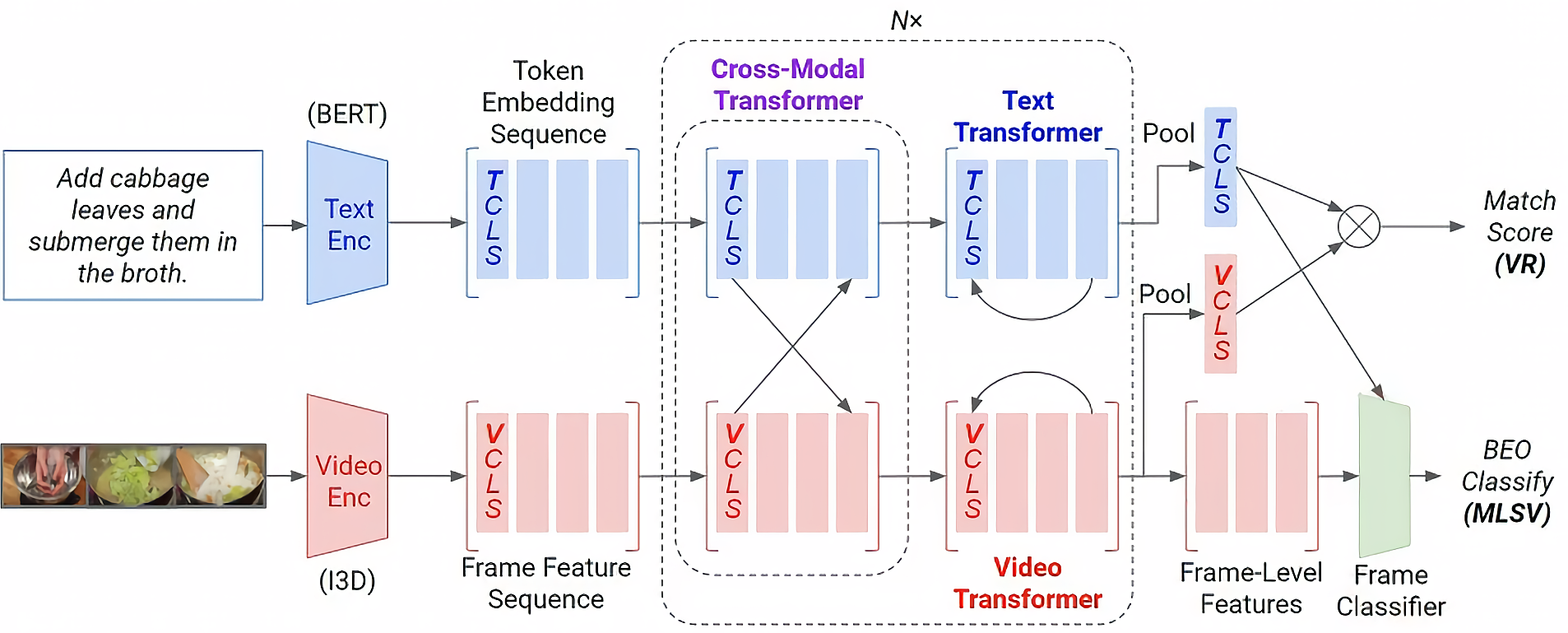

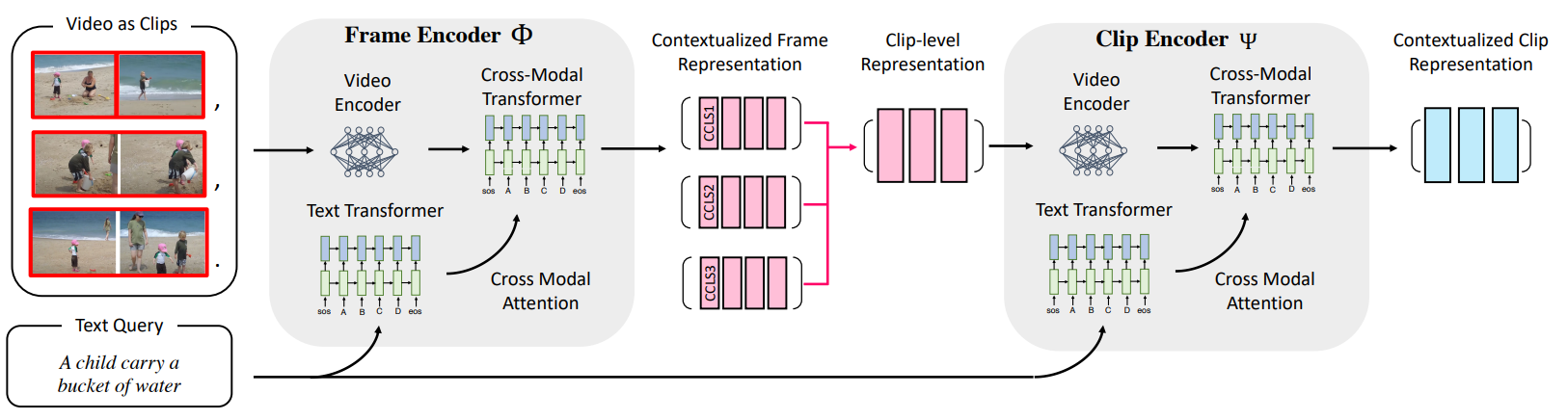

Hierarchical Multi-Modal Encoder

Definition

Hierarchical multi-modal encoder (HAMMER) is a model for moment localization tasks (identifying a short segment in a long video corpus that semantically matches a given text query). It simplifies the task using Conditional Probability.

Architecture

Two-Stage MLVC (Moment Localization in Video Corpus)

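The original problem is split into two simpler problems, video retrieval and moment localization within a single video, using the definition of Conditional Probability: $P(m, v \mid q) = P(v \mid q)\, P(m \mid v, q)$, where $q$ is the text query, $v$ is a video, and $m$ is a moment in that video.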

Video Retrieval Task

The video retrieval task is trained through Contrastive Learning by promoting the correct video-query pairs.

Moment Localization in Single Video

The temporal localization task is trained as a classification problem with the three labels: begin, end, and other.

Hierarchical Visual Encoders

The frame encoder takes the frame sequence of a video clip and the query as input, and outputs contextualized visual frame features. The CLS tokens of the frame encoder are fed into the clip encoder, yielding a video representation.


Audio Modeling

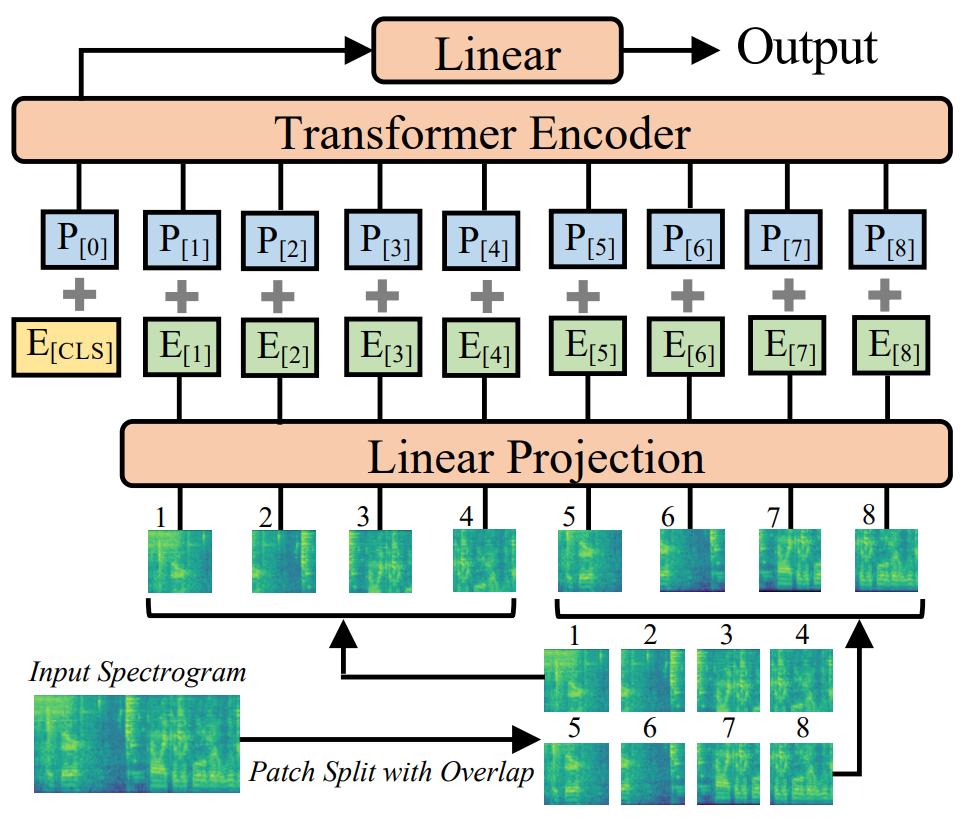

Audio Spectrogram Transformer

Definition

Audio spectrogram transformer (AST) applied the ViT architecture to audio inputs represented as spectrograms.


Visual-Audio-Text Model

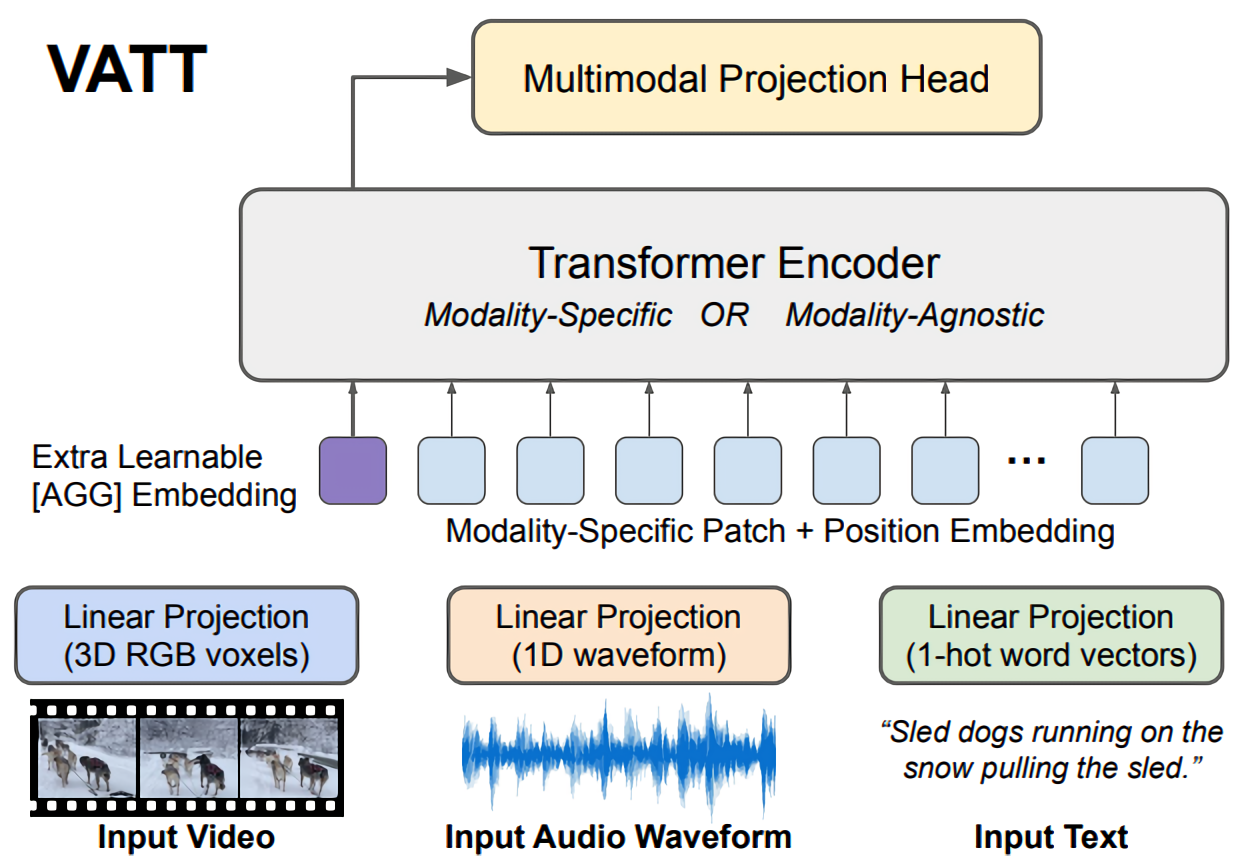

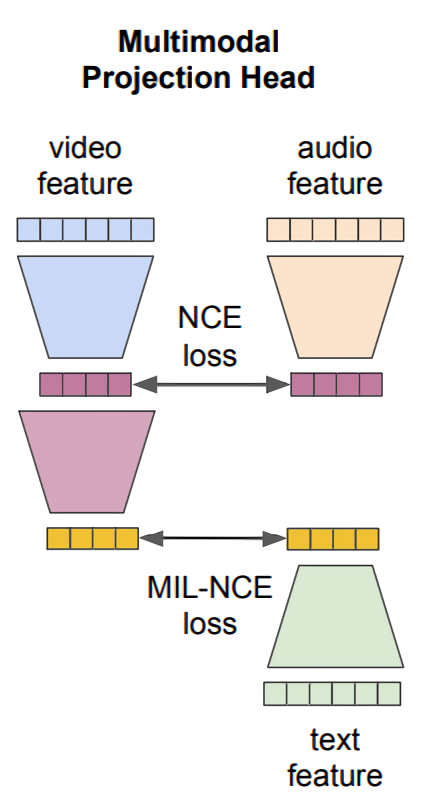

Video-Audio-Text Transformer

Definition

Video-audio-text transformer (VATT) is designed to process and understand information from video, audio, and text simultaneously.

Architecture

VATT is based on the Transformer architecture. It consists of three separate encoders for video, audio, and text inputs, followed by a joint multimodal encoder. Each modality feature is embedded to a same-sized vector, and fed into a transformer encoder. The model is trained through multimodal Contrastive Learning.

Multimodal Contrastive Learning

The loss of the model is the sum of the video-audio contrastive loss and the text-video contrastive loss. For the text modality, all word embeddings in the sentence are summed before the comparison.


Multimodal Metric Learning

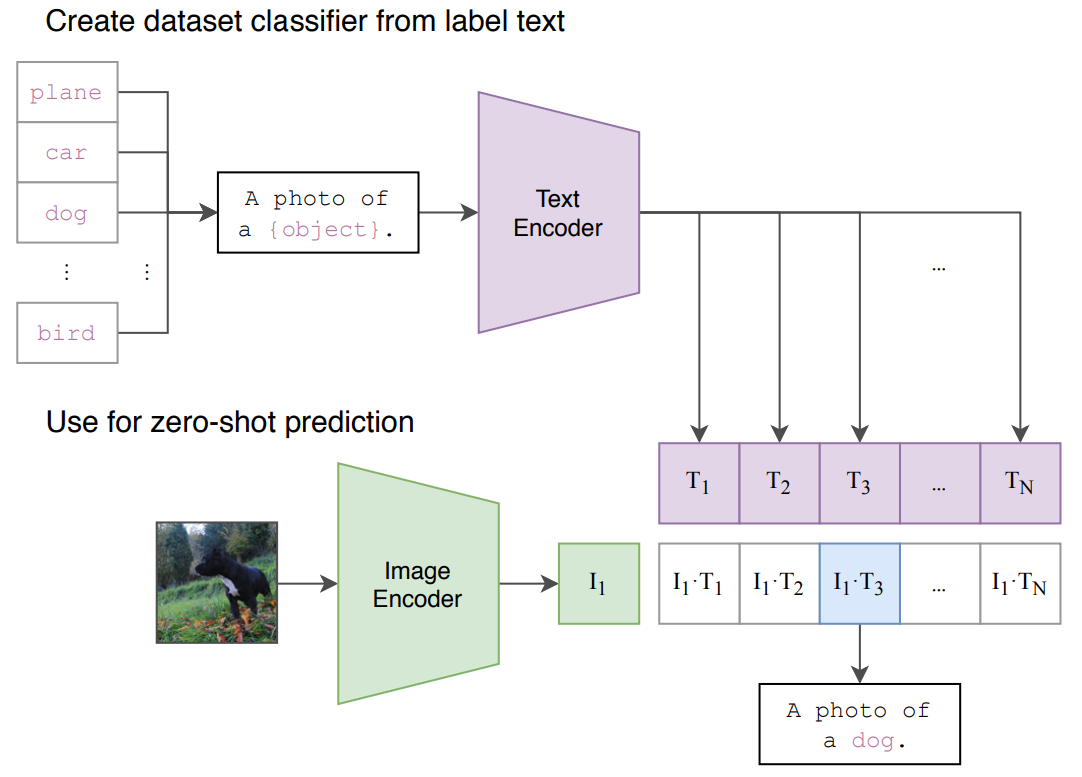

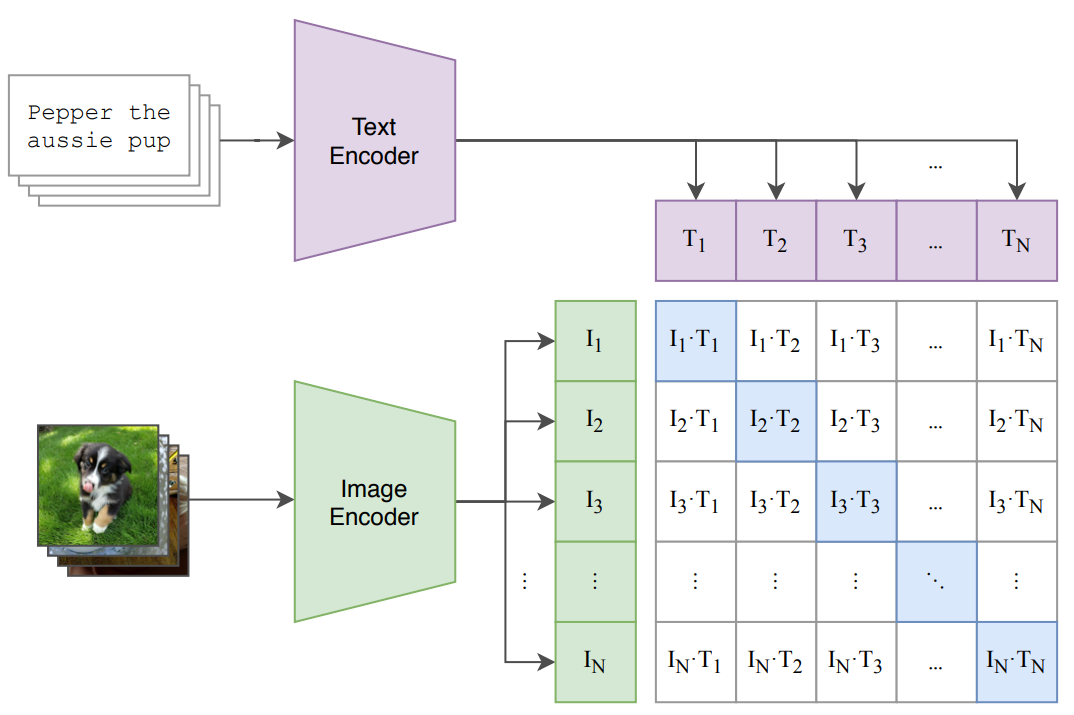

CLIP

Definition

Contrastive language-image pre-training (CLIP) model is designed to learn visual concepts from natural language supervision through Contrastive Learning.

Zero Shot Prediction

CLIP can classify images into categories it has not explicitly been trained on, simply by comparing image embeddings with text embeddings of the category names.

Architecture

CLIP consists of two main components: a vision encoder (ViT or CNN) and a text encoder (Transformer-based model).

Contrastive Pre-Training

The input image-text pairs are encoded by the corresponding encoders, and the encoders are updated to maximize the similarity between matching pairs and minimize the similarity between non-matching pairs. The similarity is measured by the cosine similarity of the two encoded vectors.
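A sketch of the contrastive pre-training objective and of zero-shot prediction on precomputed embeddings. It assumes a batch where image i matches text i, scores every image-text pair by cosine similarity divided by a temperature, and averages the cross-entropy over rows (image to text) and columns (text to image). CLIP learns the temperature; here it is fixed at 0.07, the initial value used in the paper. The function names are hypothetical.

```python
import math

def normalize(v):
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]

def clip_logits(image_embs, text_embs, temperature=0.07):
    # entry [i][j] = cosine(image i, text j) / temperature
    imgs = [normalize(v) for v in image_embs]
    txts = [normalize(v) for v in text_embs]
    return [[sum(a * b for a, b in zip(i, t)) / temperature for t in txts] for i in imgs]

def cross_entropy(logits, target):
    m = max(logits)
    log_z = m + math.log(sum(math.exp(l - m) for l in logits))
    return log_z - logits[target]

def clip_loss(image_embs, text_embs, temperature=0.07):
    # symmetric loss: matching pairs lie on the diagonal of the similarity matrix
    L = clip_logits(image_embs, text_embs, temperature)
    n = len(L)
    loss_img = sum(cross_entropy(L[i], i) for i in range(n)) / n
    loss_txt = sum(cross_entropy([L[i][j] for i in range(n)], j) for j in range(n)) / n
    return (loss_img + loss_txt) / 2

def zero_shot_predict(image_emb, class_text_embs):
    # choose the class whose text embedding is most similar to the image embedding
    sims = clip_logits([image_emb], class_text_embs)[0]
    return max(range(len(sims)), key=lambda j: sims[j])

imgs = [[1.0, 0.0], [0.0, 1.0]]
txts = [[1.0, 0.1], [0.1, 1.0]]
print(clip_loss(imgs, txts) < clip_loss(imgs, txts[::-1]))  # True: correct pairing scores better
print(zero_shot_predict([0.9, 0.1], [[1.0, 0.0], [0.0, 1.0]]))  # 0
```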


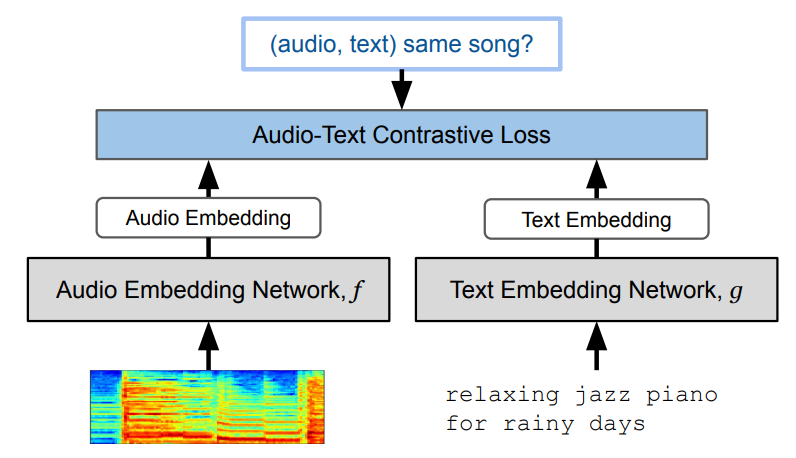

MuLan

Definition

MuLan applied the CLIP architecture to pairs of audio clips (represented as spectrograms) and text captions.


Generative Models

Likelihood-Based Sequential Approaches

PixelRNN

Definition

PixelRNN assumes that images are sampled from an unknown distribution. The model generates images pixel by pixel, treating the image as a sequence, and each pixel is conditioned on all previously generated pixels. The model is trained to maximize the log-likelihood of the training data.

Architecture

The model uses a multi-layer ConvLSTM.


PixelCNN

Definition

PixelCNN replaces the LSTM used in PixelRNN with masked convolutional layers. The model generates the target pixel using the previously generated nearby pixels.
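"Previously generated nearby pixels" is enforced by masking the convolution kernel in raster-scan order. The sketch below builds the two masks described in the PixelRNN/PixelCNN paper: mask A (first layer) also hides the center pixel, while mask B (later layers) lets a position see its own features from the previous layer. The kernel weights would be multiplied elementwise by this mask. The per-color-channel masking is omitted, and the function name is hypothetical.

```python
def pixelcnn_mask(k, mask_type="A"):
    # k x k mask in raster-scan order: 1 = weight allowed, 0 = weight zeroed out
    c = k // 2
    mask = [[0] * k for _ in range(k)]
    for r in range(k):
        for col in range(k):
            if r < c or (r == c and col < c):
                mask[r][col] = 1  # rows above, and pixels to the left in the center row
    if mask_type == "B":
        mask[c][c] = 1  # type B may also use the center position
    return mask

print(pixelcnn_mask(3, "A"))  # [[1, 1, 1], [1, 0, 0], [0, 0, 0]]
print(pixelcnn_mask(3, "B"))  # [[1, 1, 1], [1, 1, 0], [0, 0, 0]]
```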


Pixel Recursive Super Resolution

Definition

The distribution of each pixel is estimated sequentially given previous pixels with PixelCNN and globally given a low-resolution image, and the two estimations are aggregated for the final distribution.


Autoencoder-Based Models

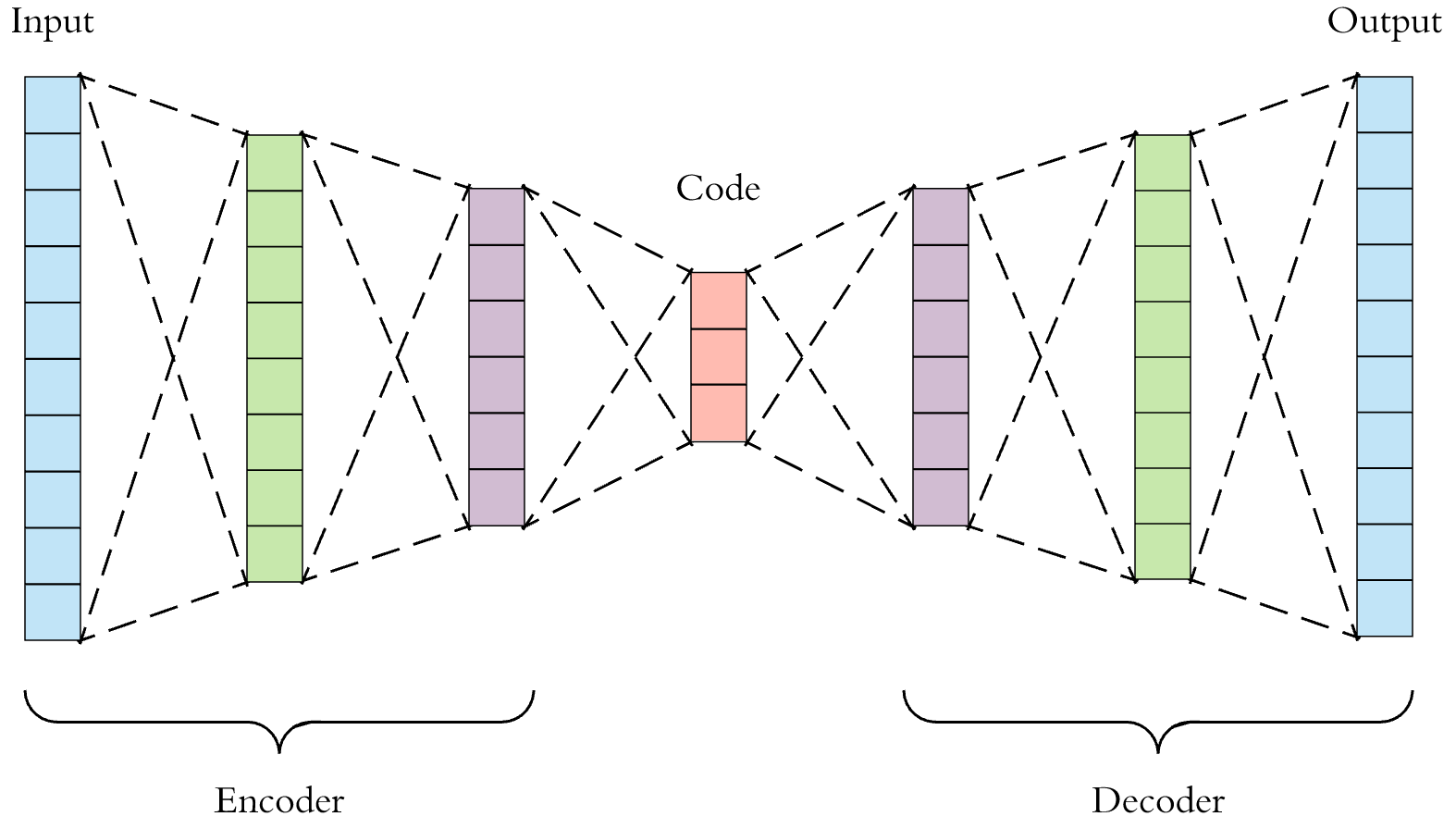

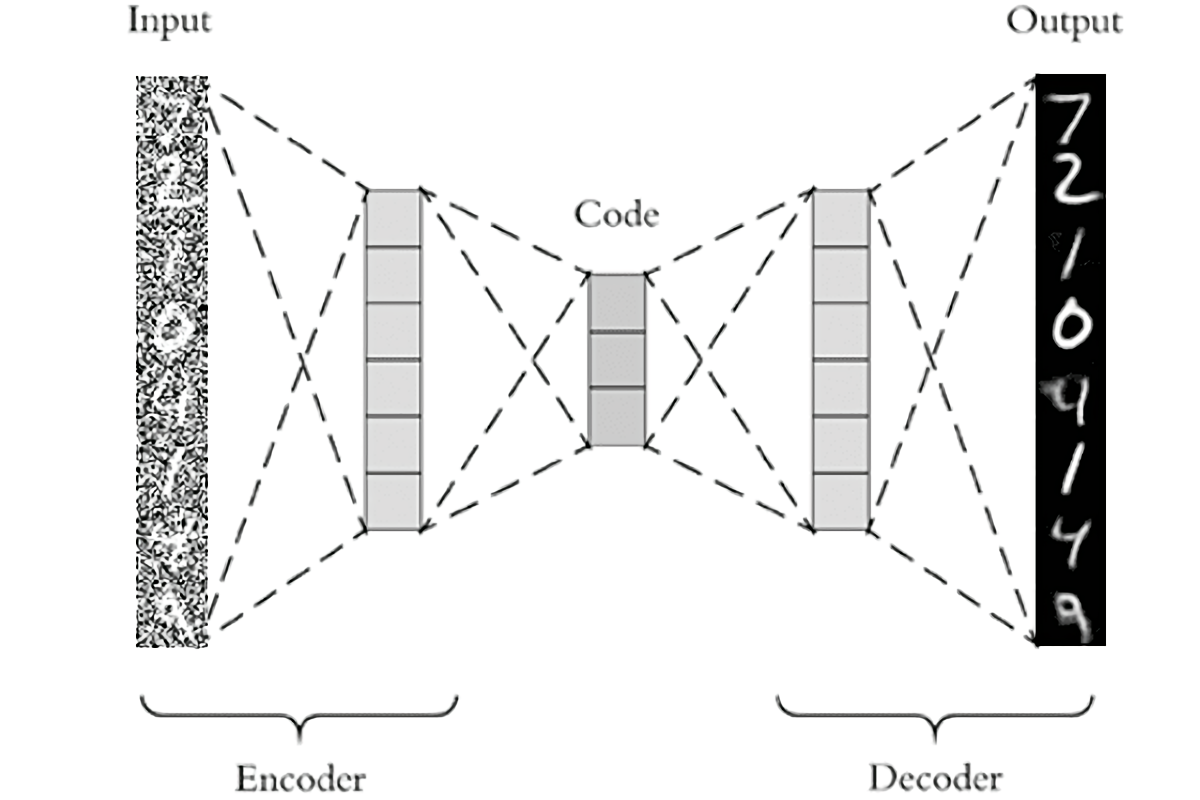

Autoencoder

Definition

Autoencoder is a type of Neural Network used for unsupervised dimensionality reduction or feature extraction. It consists of two main parts: an encoder and a decoder. The encoder compresses the input data into a lower-dimensional representation, and the decoder attempts to reconstruct the original input from the compressed representation. When the autoencoder is used for dimensionality reduction or feature extraction, only the encoder is kept after training and the decoder is no longer used.

Architecture

The model is trained by minimizing the reconstruction error, typically the mean squared error between the input and its reconstruction.

Facts

The extracted features may be used to train other supervised models.

An autoencoder can be used for the anomaly detection task: typical examples have low reconstruction error, whereas outliers tend to have high reconstruction error.

Denoising Autoencoder

Definition

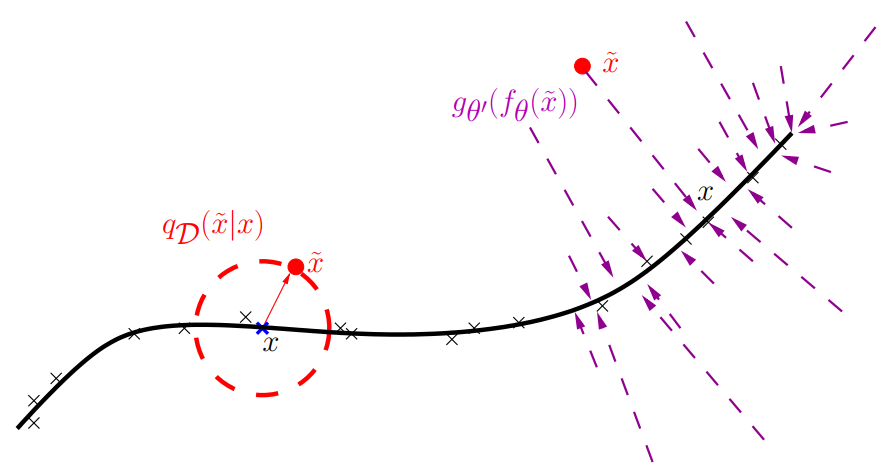

$$\mathcal{L} = \lVert x - g(f(\tilde{x})) \rVert^2$$

where $\tilde{x} = x + \epsilon$, here $\epsilon$ is random noise, $f$ is an encoder, and $g$ is a decoder.

Denoising autoencoder is a type of Autoencoder designed to learn robust representations of data by reconstructing clean inputs from noisy versions.

Architecture

The model takes a clean input $x$ and adds noise to create a corrupted input $\tilde{x}$. The noisy input is fed into the model, and the model attempts to reconstruct the original clean input by minimizing MSE.

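The estimator minimizing MSE is the mean of the posterior distribution $p(x \mid \tilde{x})$, so the DAE learns a function $g(f(\tilde{x}))$ that approximates the posterior mean $\mathbb{E}[x \mid \tilde{x}]$. With Gaussian noise $\epsilon \sim \mathcal{N}(0, \sigma^2 I)$, Tweedie's Formula gives $\mathbb{E}[x \mid \tilde{x}] = \tilde{x} + \sigma^2 \nabla_{\tilde{x}} \log p(\tilde{x})$. Therefore, a trained DAE provides an estimate of the Score Function of the noisy data distribution: $\nabla_{\tilde{x}} \log p(\tilde{x}) \approx \left(g(f(\tilde{x})) - \tilde{x}\right) / \sigma^2$.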
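The DAE-Tweedie link can be checked on a one-dimensional Gaussian example where everything has a closed form. Assuming a prior $x \sim \mathcal{N}(\mu, s^2)$ and noise variance $\sigma^2$, the ideal MSE denoiser outputs the exact posterior mean, the noisy marginal is $\mathcal{N}(\mu, s^2 + \sigma^2)$, and Tweedie's formula recovers one from the other. The numbers and function names are illustrative only; no network is trained here.

```python
def posterior_mean(x_noisy, mu, s2, sigma2):
    # exact E[x | x_noisy] for prior x ~ N(mu, s2) and x_noisy = x + noise, noise ~ N(0, sigma2)
    return mu + s2 / (s2 + sigma2) * (x_noisy - mu)

def noisy_score(x_noisy, mu, s2, sigma2):
    # d/dx log p(x_noisy), where the noisy marginal is N(mu, s2 + sigma2)
    return -(x_noisy - mu) / (s2 + sigma2)

def tweedie(x_noisy, score, sigma2):
    # Tweedie's formula: E[x | x_noisy] = x_noisy + sigma^2 * score(x_noisy)
    return x_noisy + sigma2 * score

def score_from_denoiser(x_noisy, denoised, sigma2):
    # inverting Tweedie: score is approximately (denoiser output - x_noisy) / sigma^2
    return (denoised - x_noisy) / sigma2

mu, s2, sigma2, x_noisy = 1.0, 2.0, 0.5, 3.0
ideal_denoiser_output = posterior_mean(x_noisy, mu, s2, sigma2)  # what a perfect DAE would output
print(ideal_denoiser_output)                                      # approximately 2.6
print(tweedie(x_noisy, noisy_score(x_noisy, mu, s2, sigma2), sigma2))  # same value
print(score_from_denoiser(x_noisy, ideal_denoiser_output, sigma2))     # approximately -0.8
```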


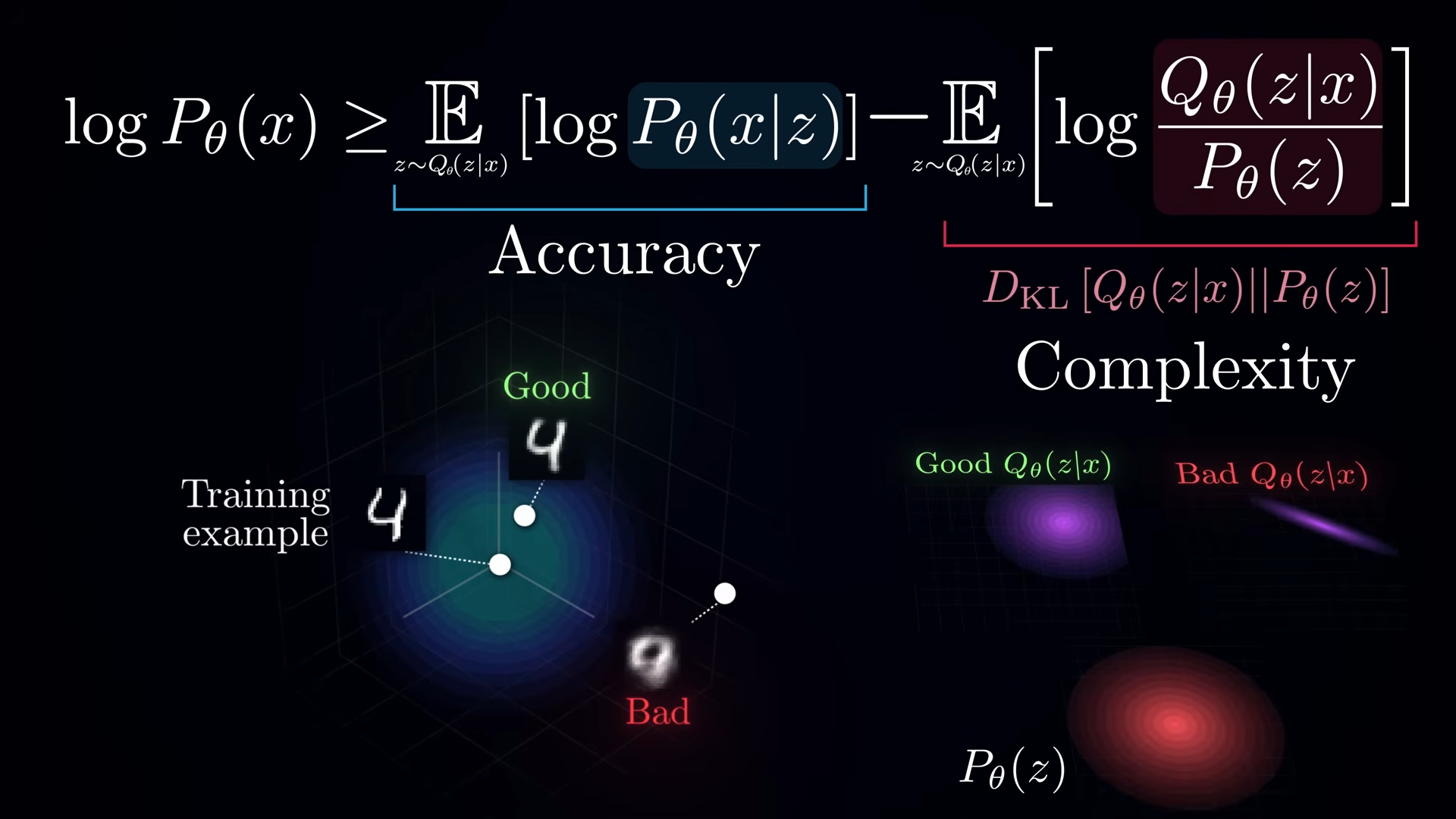

Variational Autoencoder

Definition

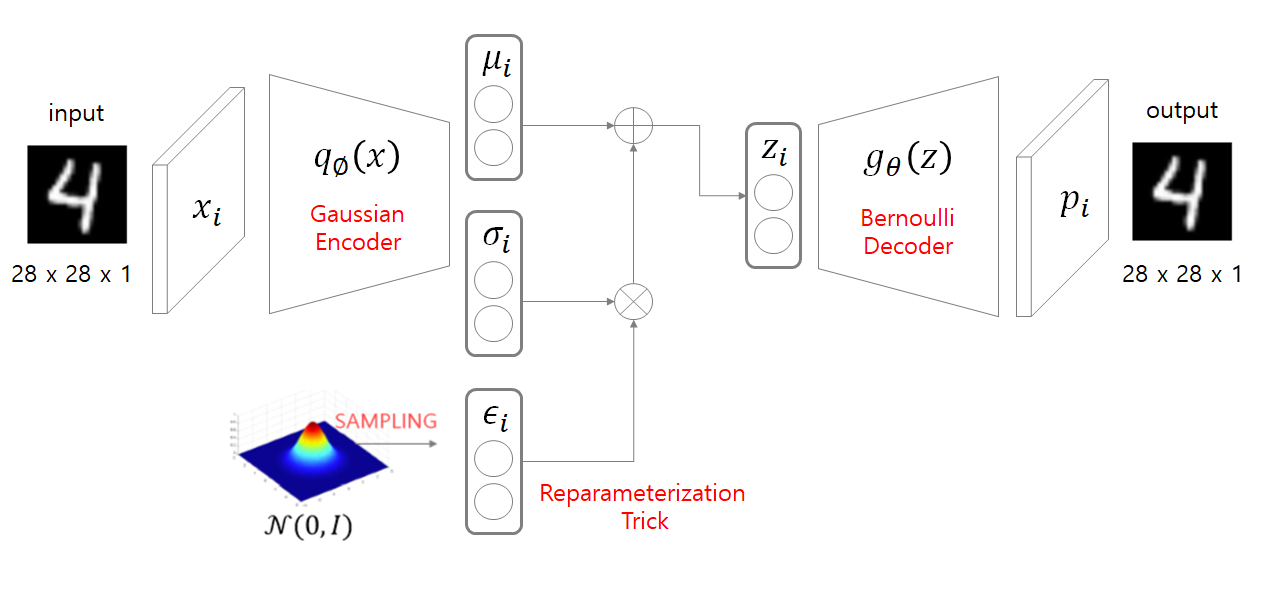

Variational autoencoder (VAE) utilizes the decoder of an Autoencoder as generator. Unlike traditional autoencoders, VAE models the latent space as a probability distribution, typically a Multivariate Normal Distribution.

Architecture

Instead of outputting a single point in latent space, the encoder of VAE produces parameters of a probability distribution on the latent space. The latent vector is sampled from the distribution and is reconstructed by the decoder.

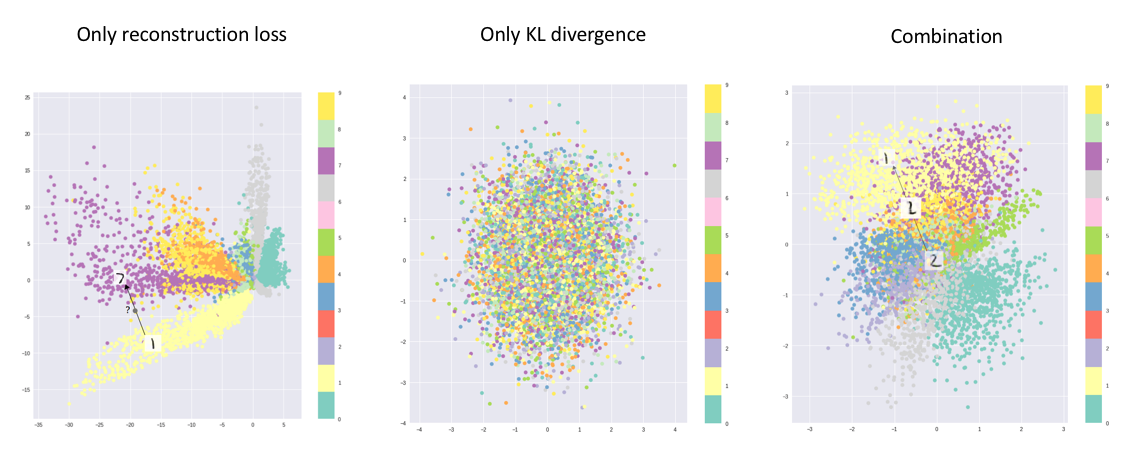

Loss Function (ELBO)

VAE is trained by maximizing the evidence lower bound (ELBO), i.e. its loss is the negative ELBO. The ELBO consists of a reconstruction term and a KL-Divergence term which ensures the latent distribution stays close to a normal distribution.

$$\begin{aligned} \ln p_{\theta}(x) &= \ln\sum\limits_{z}p_{\theta}(x|z)p_{\theta}(z) \\ &= \ln\sum\limits_{z}p_{\theta}(x|z)\frac{p_{\theta}(z)}{q_{\theta}(z|x)}q_{\theta}(z|x) \\ &= \ln\mathbb{E}_{z \sim q_{\theta}(z|x)}\left[ p_{\theta}(x|z)\frac{p_{\theta}(z)}{q_{\theta}(z|x)} \right]\\ &\geq \mathbb{E}_{z \sim q_{\theta}(z|x)}\ln\left[ p_{\theta}(x|z)\frac{p_{\theta}(z)}{q_{\theta}(z|x)} \right] =: \text{ELBO} \quad (\text{Jensen's inequality})\\ &= \underbrace{\mathbb{E}_{z\sim q_{\theta}(z|x)}[\ln p_\theta(x|z)]}_{\text{reconstruction term}} - \underbrace{D_{KL}(q_\theta(z|x) \| p_\theta(z))}_{\text{regularization term}} \end{aligned}$$

where $q_\theta(z|x)$ is the encoder distribution, $p_\theta(x|z)$ is the decoder distribution, and $p_\theta(z)$ is the prior distribution (usually $\mathcal{N}(0, I)$). The regularization term ensures the continuity and completeness of the latent space.

In terms of variational inference, $p_{\theta}(z)$ can be seen as the latent (prior) distribution and $q_{\theta}(z|x)$ as a proposal distribution used for Importance Sampling, i.e. $q_{\theta}(z|x)$ predicts the region of the latent space that is likely to have generated the observation $x$.

Reparameterization Trick

Gradients cannot be propagated through a sampling operation. So, instead of directly sampling $z$ from $\mathcal{N}(\mu, \sigma^2)$, we sample $\epsilon \sim \mathcal{N}(0, 1)$ and form the latent vector $z = \mu + \sigma \epsilon$. When calculating the gradient in backpropagation, the sampled $\epsilon$ is treated as a constant ($\frac{dz}{d\mu} = 1$ and $\frac{dz}{d\sigma} = \epsilon$).

Facts

The data encoded by VAE is semantically well separated in a low-dimensional latent space.
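The reparameterization trick and the loss (negative ELBO) can be sketched for a diagonal Gaussian encoder with a standard normal prior. This is plain Python without automatic differentiation: it only shows how $z$ is formed from $\mu$, $\sigma$, and an external $\epsilon$, and how the closed-form KL term $\frac{1}{2}(\mu^2 + \sigma^2 - 1 - \ln \sigma^2)$ per latent dimension enters the loss. The reconstruction log-likelihood is passed in as a number (e.g. estimated from one sample of $z$), and the function names are hypothetical.

```python
import math
import random

def reparameterize(mu, sigma, rng=random):
    # z = mu + sigma * eps with eps ~ N(0, 1); eps is a constant during backprop,
    # so dz/dmu = 1 and dz/dsigma = eps
    eps = rng.gauss(0.0, 1.0)
    return mu + sigma * eps, eps

def kl_to_standard_normal(mu, sigma):
    # closed form of D_KL(N(mu, sigma^2) || N(0, 1)) for one latent dimension
    return 0.5 * (mu ** 2 + sigma ** 2 - 1.0 - math.log(sigma ** 2))

def negative_elbo(recon_log_likelihood, mus, sigmas):
    # loss = -ELBO = -(reconstruction term) + sum of per-dimension KL terms
    return -recon_log_likelihood + sum(kl_to_standard_normal(m, s) for m, s in zip(mus, sigmas))

rng = random.Random(0)
z, eps = reparameterize(2.0, 0.5, rng)
print(z, eps)
print(negative_elbo(-3.0, [0.0, 1.0], [1.0, 1.0]))  # 3.0 + 0.0 + 0.5 = 3.5
```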

GAN-Based Models

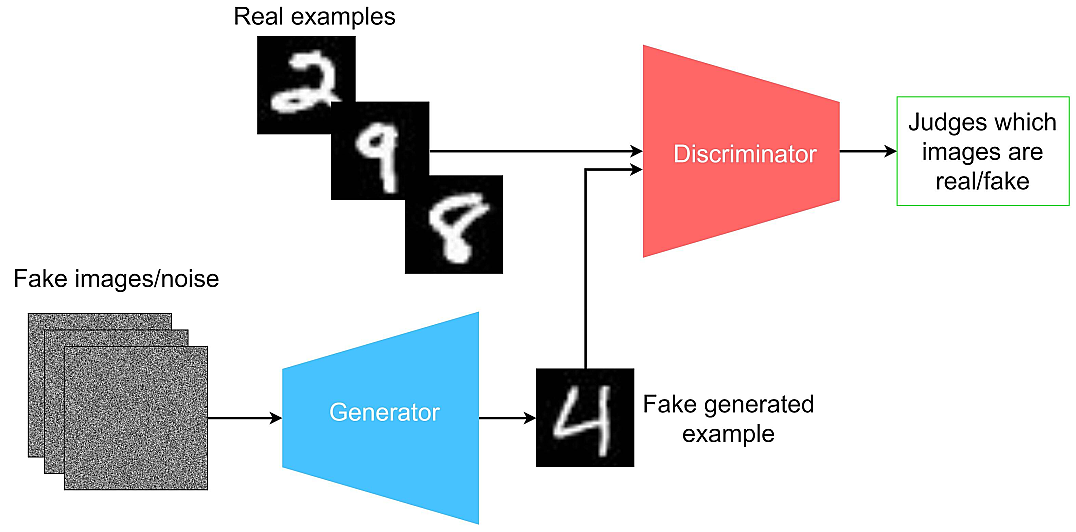

Generative Adversarial Networks

Definition

Generative adversarial network (GAN) is a type of Neural Network model generating data that resembles training data.

Architecture

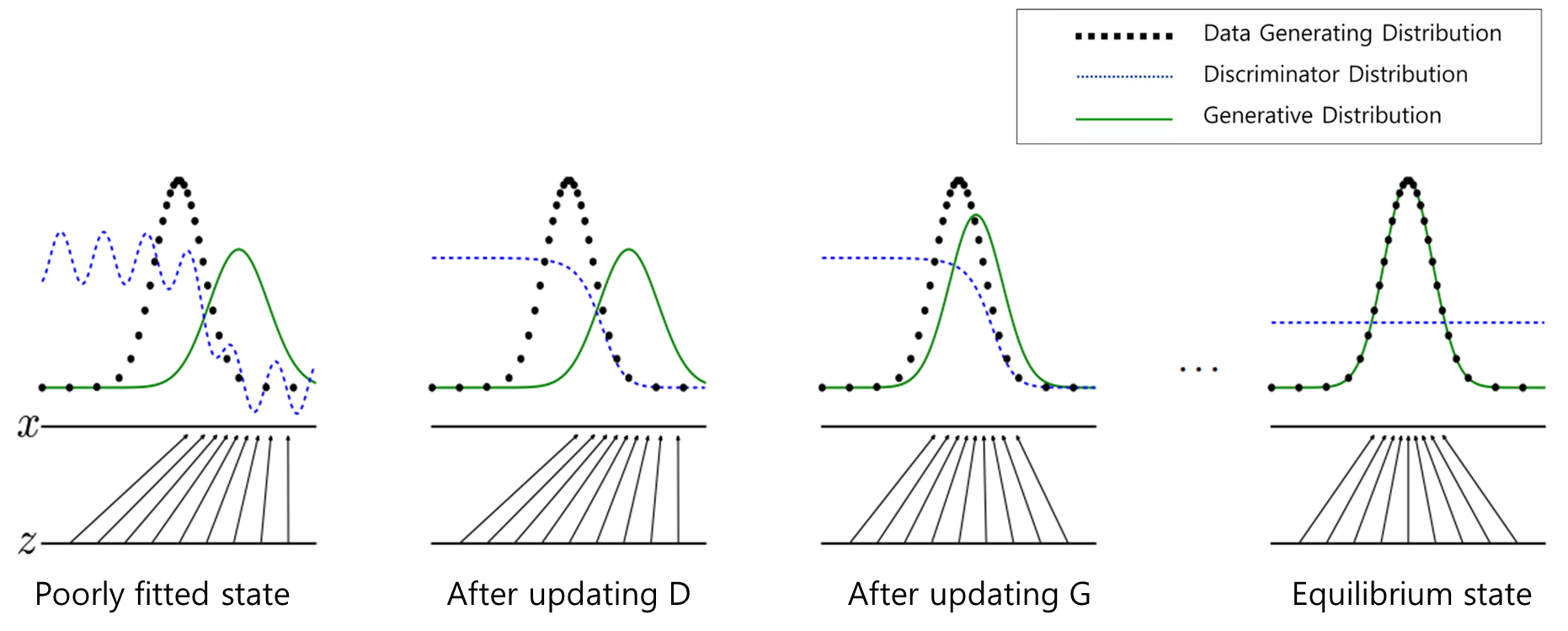

Training

In the training process of GAN, the two neural networks compete against each other. The generator (G) generates fake data and the discriminator (D) tries to distinguish between real and fake data.

- The generator creates fake data from random noise.

- The discriminator is shown both real data from the training set and fake data from the generator. It tries to classify which is which.

- Based on how well the discriminator performs, both networks receive feedback.

- The generator aims to improve its fake data to fool the discriminator.

- The discriminator aims to get better at distinguishing real from fake.

- This process continues iteratively, with both networks improving over time.

Objective Function

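The objective function of GAN is defined as $$\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{data}(x)}[\log D(x)] + \mathbb{E}_{z \sim p_z(z)}[\log(1 - D(G(z)))]$$ where:

- $G$ is the generator

- $D$ is the discriminator

- $p_{data}(x)$ is the distribution of the input data

- $p_z(z)$ is the distribution of noise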

Facts

When the discriminator is optimal, minimizing the objective function with respect to the generator is equivalent to minimizing the JS-Divergence between the real data distribution $p_{data}$ and the generated distribution $p_g$.
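The statement about the optimal discriminator can be checked numerically on a small discrete example. For fixed $p_{data}$ and $p_g$, the optimal discriminator is $D^*(x) = p_{data}(x) / (p_{data}(x) + p_g(x))$, and plugging it into the objective gives $V(D^*, G) = -\log 4 + 2 \cdot \mathrm{JSD}(p_{data} \| p_g)$, so minimizing over $G$ minimizes the JS-Divergence. The distributions below are made up for illustration, and the function names are hypothetical.

```python
import math

def kl(p, q):
    # KL(p || q), skipping terms with p_i = 0
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def jsd(p, q):
    m = [(a + b) / 2 for a, b in zip(p, q)]
    return 0.5 * kl(p, m) + 0.5 * kl(q, m)

def value_at_optimal_d(p_data, p_g):
    # V(D*, G) = E_data[log D*(x)] + E_g[log(1 - D*(x))], D*(x) = p_data / (p_data + p_g)
    v = 0.0
    for pd, pg in zip(p_data, p_g):
        if pd + pg == 0:
            continue  # x has no probability under either distribution
        d = pd / (pd + pg)
        if pd > 0:
            v += pd * math.log(d)
        if pg > 0:
            v += pg * math.log(1 - d)
    return v

p_data = [0.5, 0.5, 0.0]
p_g = [0.1, 0.3, 0.6]
print(value_at_optimal_d(p_data, p_g), -math.log(4) + 2 * jsd(p_data, p_g))  # equal
print(value_at_optimal_d(p_data, p_data))  # -log 4, the minimum, reached when p_g = p_data
```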


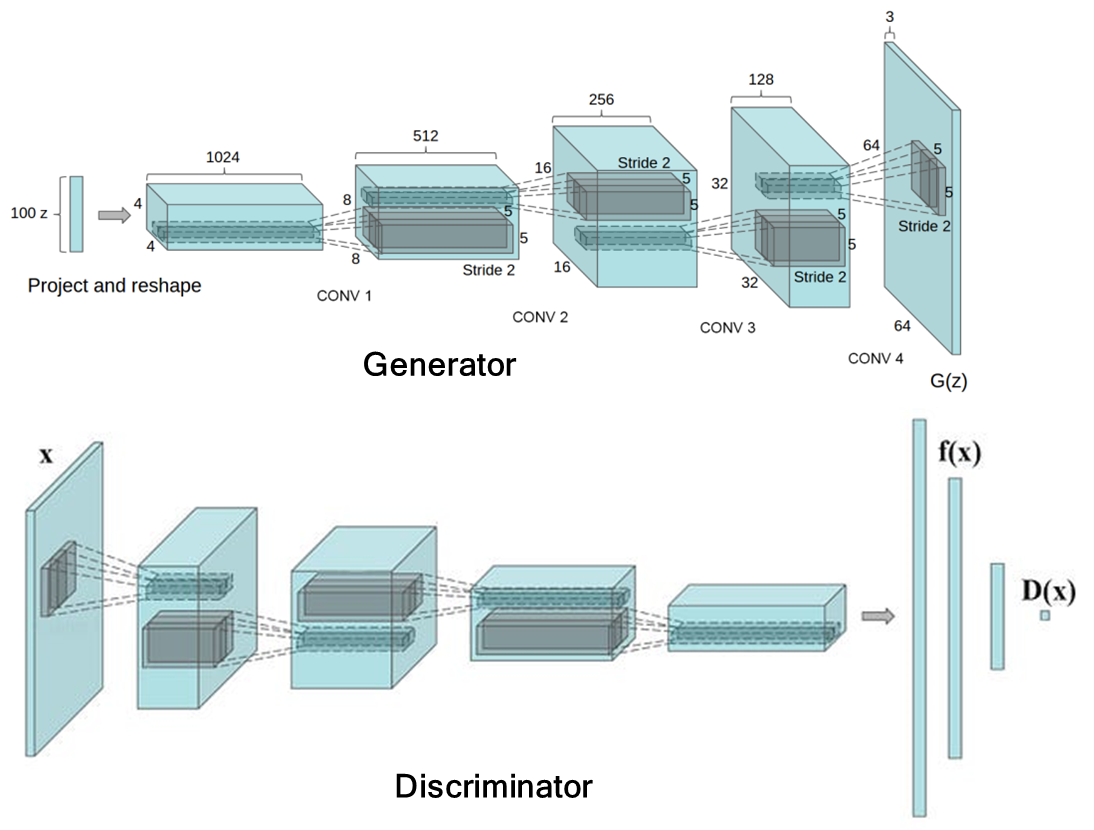

Deep Convolutional GAN

Definition

DCGAN is a specific architecture of GAN. It was designed to improve the stability of GAN training and the quality of generated images. DCGANs use convolutional and deconvolutional layers in the discriminator and generator, respectively.


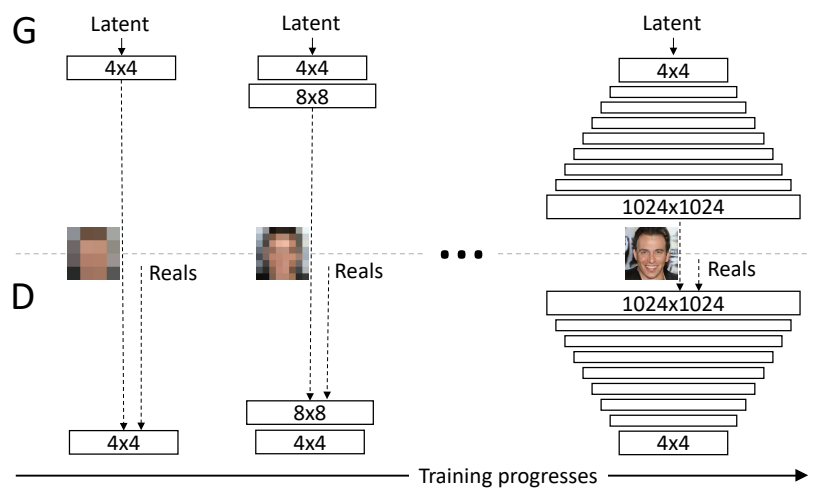

Progressive Growing GAN

Definition

Progressive growing GAN (PGGAN) is a specific architecture of GAN. The model addresses the challenges of traditional GAN by growing both the generator and discriminator, from low-resolution to high-resolution, progressively throughout the training process.

Architecture

The generator and discriminator are symmetric. New layers are added symmetrically to both networks as resolution increases. Convolutional layers are used throughout the network.

Training

Training starts with both D and G having a very low resolution. During training, gradually add new layers to both the D and G to increase the resolution.


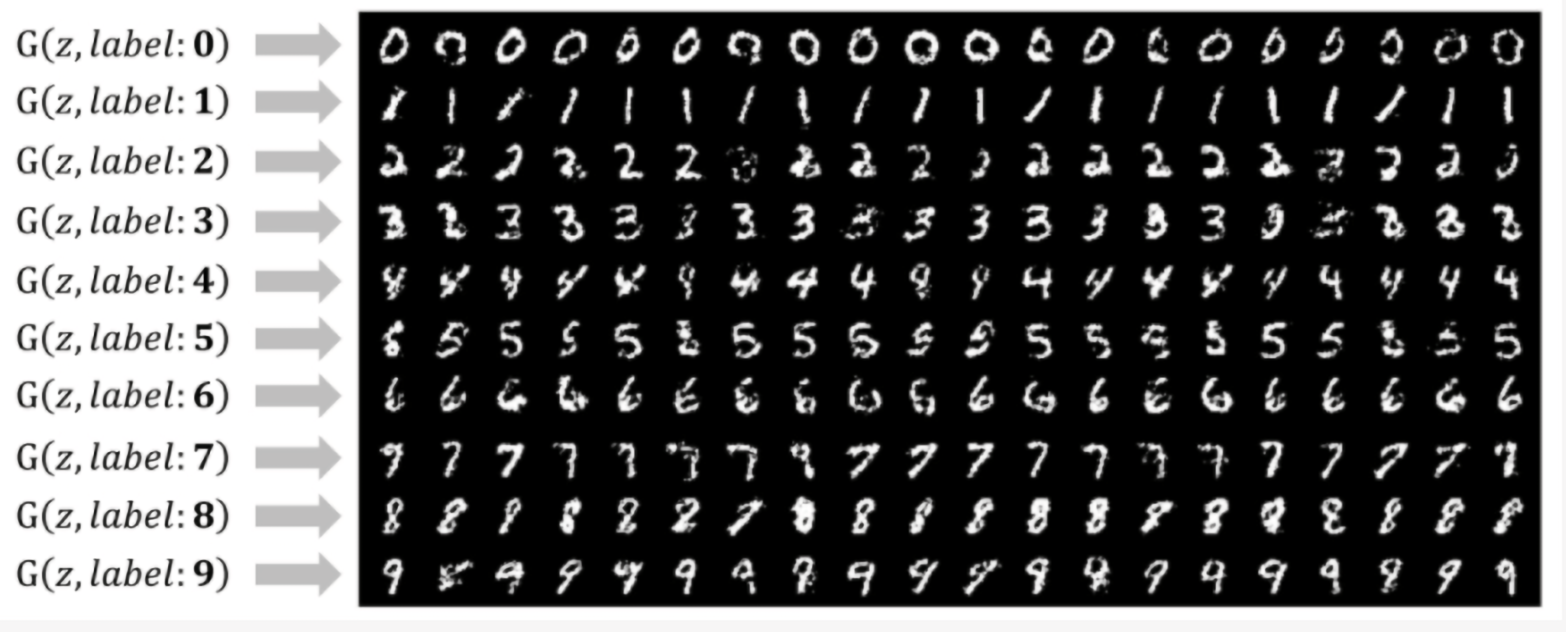

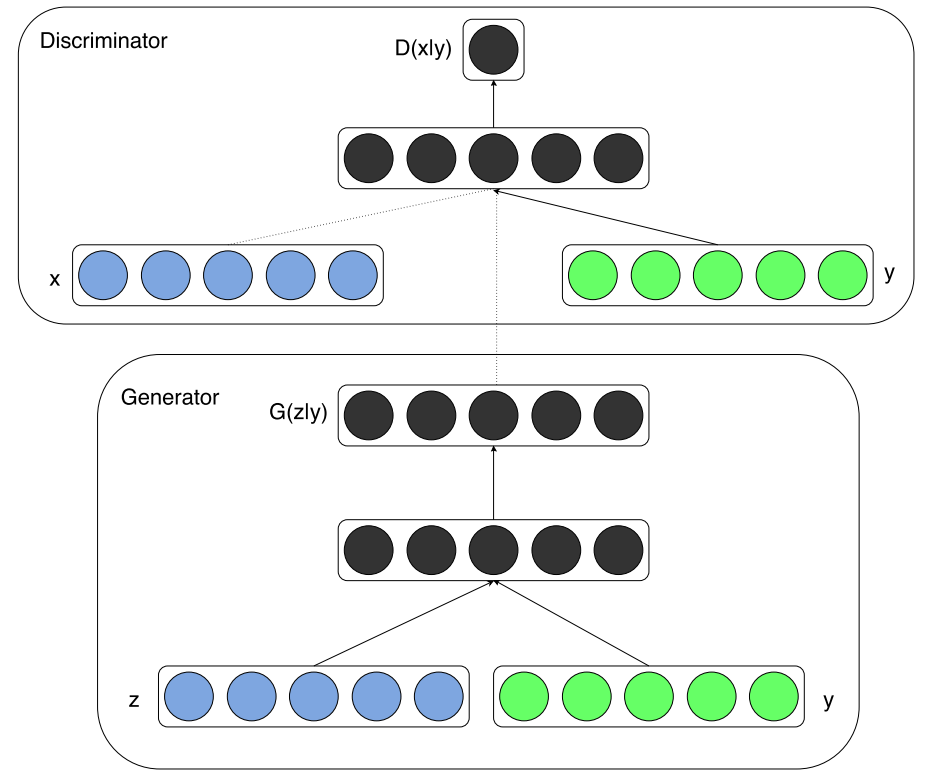

Conditional GAN

Definition

Conditional GAN (cGAN) is an extension of GAN that allows generating data with specific attributes or conditions. In a standard GAN, the generator only takes random noise, while in a cGAN, the generator receives additional information to guide the generation process.

Architecture

The generator learns to create samples that match the given condition, and the discriminator learns to distinguish between real and fake samples, considering the condition.

Objective Function

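The objective function of cGAN is defined as

$$\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{data}(x)}[\log D(x \mid y)] + \mathbb{E}_{z \sim p_z(z)}[\log(1 - D(G(z \mid y) \mid y))]$$

where:

- $G(z \mid y)$ is the generator's output given noise $z$ and condition $y$

- $D(x \mid y)$ is the discriminator's output given input $x$ and condition $y$

- $y$ is the condition

- $p_{data}$ is the distribution of the input data

- $p_z$ is the distribution of noise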
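A simple way to feed the condition $y$ to both networks is to concatenate a one-hot encoding of a class label to their inputs. A minimal sketch, assuming $y$ is a class index and that inputs are flat vectors; the helper names are hypothetical:

```python
def one_hot(label, num_classes):
    vec = [0.0] * num_classes
    vec[label] = 1.0
    return vec

def generator_input(z, label, num_classes):
    # Input for G(z | y): noise vector z concatenated with the one-hot condition y
    return list(z) + one_hot(label, num_classes)

def discriminator_input(x, label, num_classes):
    # Input for D(x | y): flattened sample x concatenated with the same condition y
    return list(x) + one_hot(label, num_classes)

g_in = generator_input([0.3, -1.2], label=2, num_classes=4)
# g_in -> [0.3, -1.2, 0.0, 0.0, 1.0, 0.0]
```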

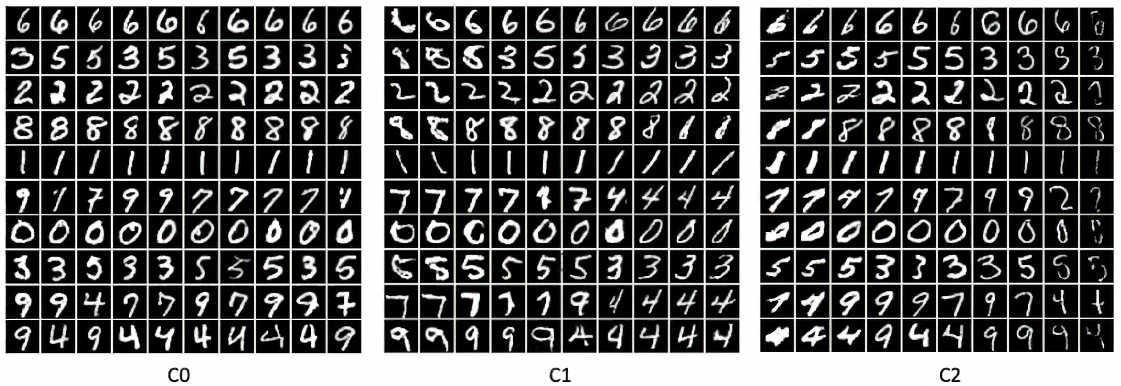

InfoGAN

Definition

Information maximizing GAN (InfoGAN) is an extension of the GAN architecture that aims to learn disentangled representations in an unsupervised manner. InfoGAN introduces a latent code $c$ in addition to the noise vector $z$. This latent code is designed to capture interpretable and meaningful features of the generated data.

Architecture

The core idea of InfoGAN is to maximize the Mutual Information between the latent code $c$ and the generated samples $G(z, c)$. This encourages the model to learn representations that correspond to semantic features of the generated output. InfoGAN introduces an auxiliary network $Q$ that attempts to recover the latent code from the generated samples.

Objective Function

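GAN loss:

$$V(D, G) = \mathbb{E}_{x \sim p_{data}}[\log D(x)] + \mathbb{E}_{z \sim p_z,\, c \sim P(c)}[\log(1 - D(G(z, c)))]$$

Mutual Information and variational lower bound $L_I(G, Q)$:

$$I(c; G(z, c)) = H(c) - H(c \mid G(z, c)) \ge \mathbb{E}_{c \sim P(c),\, x \sim G(z, c)}[\log Q(c \mid x)] + H(c) = L_I(G, Q)$$

where:

- $G$ is the generator

- $D$ is the discriminator

- $Q$ is the auxiliary network

- $p_{data}$ is the distribution of the input data

- $p_z$ is the distribution of noise

- $H(c)$ is the Entropy of the latent code distribution $P(c)$

The full objective function for InfoGAN is defined as:

$$\min_{G, Q} \max_D V_{InfoGAN}(D, G, Q) = V(D, G) - \lambda L_I(G, Q)$$

where:

- $V(D, G)$ is the standard GAN objective

- $L_I(G, Q)$ is the approximation of the mutual information between $c$ and $G(z, c)$

- $\lambda$ is a hyperparameter controlling the importance of the mutual information term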
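The variational bound $L_I = \mathbb{E}[\log Q(c \mid x)] + H(c)$ is easy to estimate for a uniform categorical code with $K$ values: $H(c) = \log K$ is a constant, so maximizing $L_I$ amounts to minimizing the cross-entropy of $Q$'s prediction of the code that was fed to the generator. A minimal sketch, assuming $Q$ returns a probability vector over the $K$ code values; the function name is hypothetical:

```python
import math

def info_lower_bound(q_probs, codes, k):
    """Monte Carlo estimate of L_I(G, Q) for a uniform categorical code.

    q_probs: for each generated sample x = G(z, c), Q's distribution over the k codes
    codes:   the code c that was actually fed to the generator for that sample
    """
    log_q = sum(math.log(p[c]) for p, c in zip(q_probs, codes)) / len(codes)
    entropy_c = math.log(k)  # H(c) for a uniform categorical code
    return log_q + entropy_c

# If Q recovers c perfectly, L_I reaches its maximum H(c) = log k
perfect = info_lower_bound([[1.0, 0.0, 0.0], [0.0, 0.0, 1.0]], [0, 2], k=3)
# If Q ignores the sample and outputs the uniform distribution, L_I = 0
uninformative = info_lower_bound([[1 / 3, 1 / 3, 1 / 3]], [1], k=3)
```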

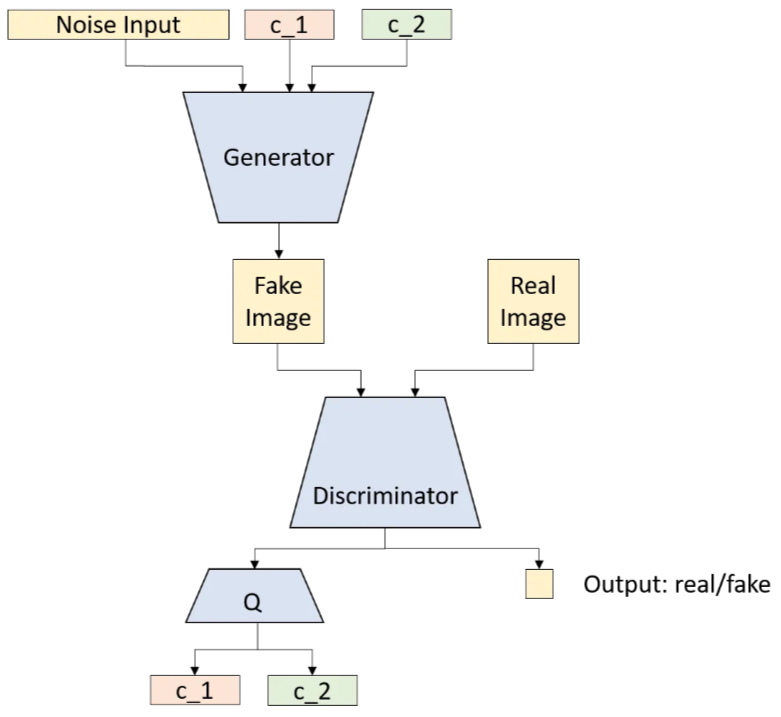

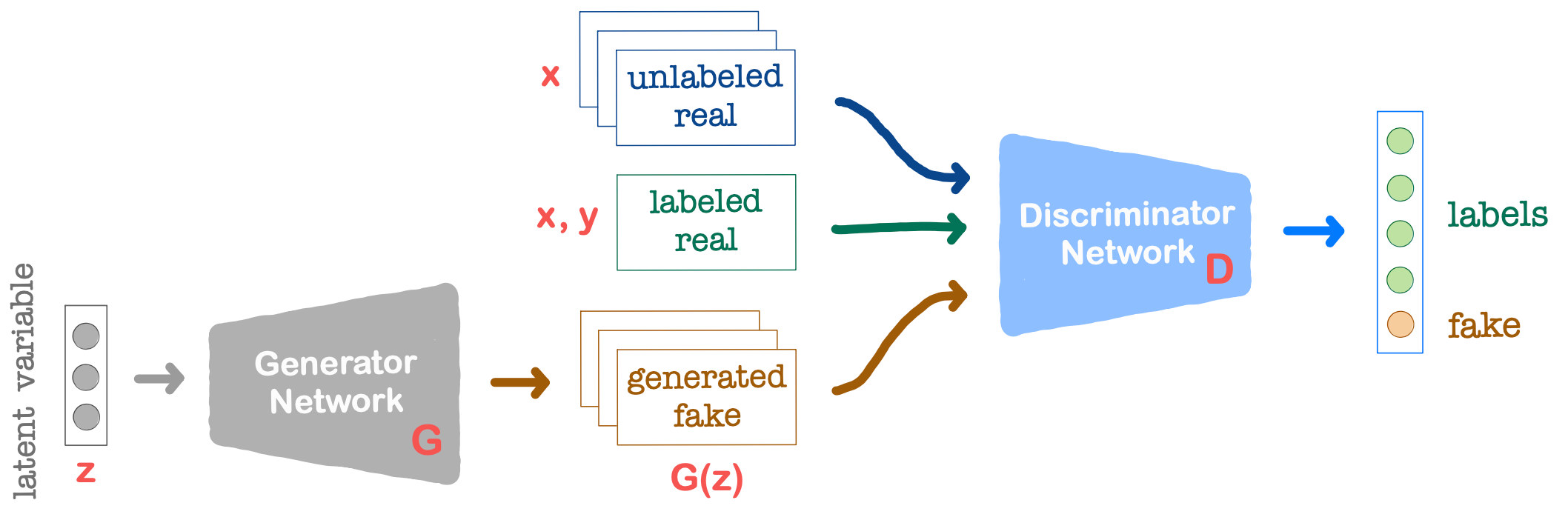

Semi-Supervised GAN

Definition

Semi-supervised GAN (SGAN) is a variation of the GAN that uses both labeled and unlabeled data during training. The model aims to produce a data-efficient classifier and to improve generation quality.

Architecture

Objective Function

The objective function of SGAN consists of four losses: supervised loss, unsupervised loss, generator loss, and feature matching loss. The supervised loss is calculated only for labeled samples and trains the discriminator to classify real samples into their correct classes. The unsupervised loss is calculated for both unlabeled real samples and generated samples, and encourages the discriminator to distinguish between real and fake samples. The generator loss encourages the generated images to be realistic. The feature matching loss encourages the generator to produce samples whose feature representations in an intermediate layer of the discriminator are similar to those of real data.

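Supervised loss:

$$L_{supervised} = -\mathbb{E}_{(x, y) \sim p_{labeled}}[\log p_{model}(y \mid x, y < K + 1)]$$

Unsupervised loss:

$$L_{unsupervised} = -\mathbb{E}_{x \sim p_{data}}[\log(1 - p_{model}(y = K + 1 \mid x))] - \mathbb{E}_{z \sim p_z}[\log p_{model}(y = K + 1 \mid G(z))]$$

Generator loss:

$$L_G = -\mathbb{E}_{z \sim p_z}[\log(1 - p_{model}(y = K + 1 \mid G(z)))]$$

Feature matching loss:

$$L_{FM} = \left\| \mathbb{E}_{x \sim p_{data}}[f(x)] - \mathbb{E}_{z \sim p_z}[f(G(z))] \right\|_2^2$$

where:

- $G$ is the generator

- $D$ is the discriminator, a classifier over $K + 1$ classes ($K$ real classes plus one fake class)

- $p_{model}(y \mid x)$ is the model's predicted probability for the correct class $y$.

- $p_{model}(y = K + 1 \mid x)$ is the model's predicted probability for the fake class.

- $p_{labeled}$ is the distribution of the labeled data

- $p_{data}$ is the distribution of the data (both labeled and unlabeled)

- $p_z$ is the distribution of noise

- $f(\cdot)$ is the intermediate layer of the discriminator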

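The full objective function for SGAN is defined as:

$$\min_{\theta_D}\; L_{supervised} + L_{unsupervised}, \qquad \min_{\theta_G}\; L_G + \lambda L_{FM}$$

where:

- $\theta_G$ and $\theta_D$ are the parameters of the generator and discriminator, respectively

- $\lambda$ is a hyperparameter that controls the weight of the feature matching loss.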
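The four losses can be sketched on explicit probability vectors, with expectations replaced by minibatch averages. This assumes the discriminator's softmax output has $K + 1$ entries with the fake class last; the function names and the input layout are hypothetical:

```python
import math

def sgan_losses(labeled, unlabeled_real, fake):
    """Minibatch estimates of the supervised, unsupervised and generator losses.

    Each probability vector has K + 1 entries; the last one is the fake class.
    labeled:        list of (probs, true_class) pairs for labeled real samples
    unlabeled_real: list of probs for unlabeled real samples
    fake:           list of probs for generated samples G(z)
    """
    # Supervised: -log p(y | x, y < K + 1), i.e. renormalize over the K real classes
    sup = -sum(math.log(p[y] / (1.0 - p[-1])) for p, y in labeled) / len(labeled)
    # Unsupervised: real samples should not be fake, generated samples should be fake
    unsup = -sum(math.log(1.0 - p[-1]) for p in unlabeled_real) / len(unlabeled_real)
    unsup -= sum(math.log(p[-1]) for p in fake) / len(fake)
    # Generator: push generated samples away from the fake class
    gen = -sum(math.log(1.0 - p[-1]) for p in fake) / len(fake)
    return sup, unsup, gen

def feature_matching(real_feats, fake_feats):
    # Squared L2 distance between mean intermediate features f(x) and f(G(z))
    dim = len(real_feats[0])
    mean_r = [sum(f[i] for f in real_feats) / len(real_feats) for i in range(dim)]
    mean_f = [sum(f[i] for f in fake_feats) / len(fake_feats) for i in range(dim)]
    return sum((a - b) ** 2 for a, b in zip(mean_r, mean_f))

sup, unsup, gen = sgan_losses(
    labeled=[([0.6, 0.2, 0.2], 0)],
    unlabeled_real=[[0.4, 0.4, 0.2]],
    fake=[[0.1, 0.1, 0.8]],
)
```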

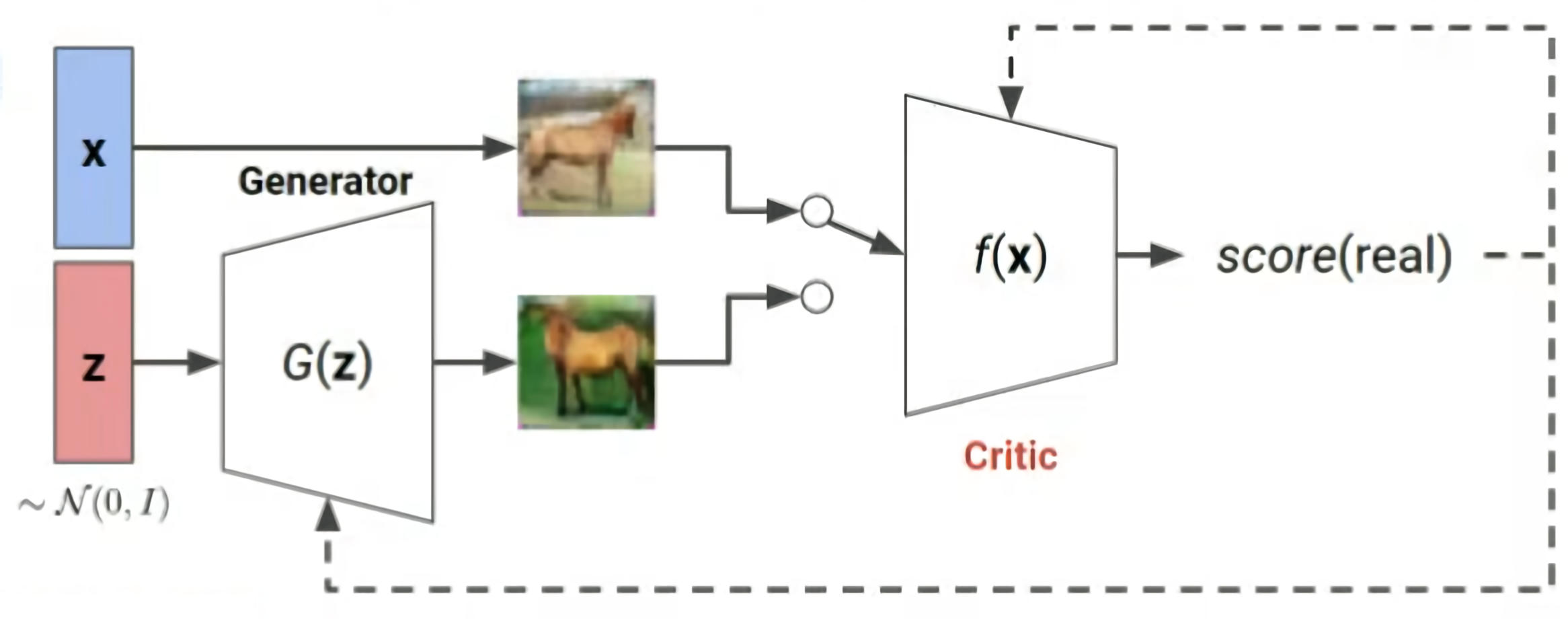

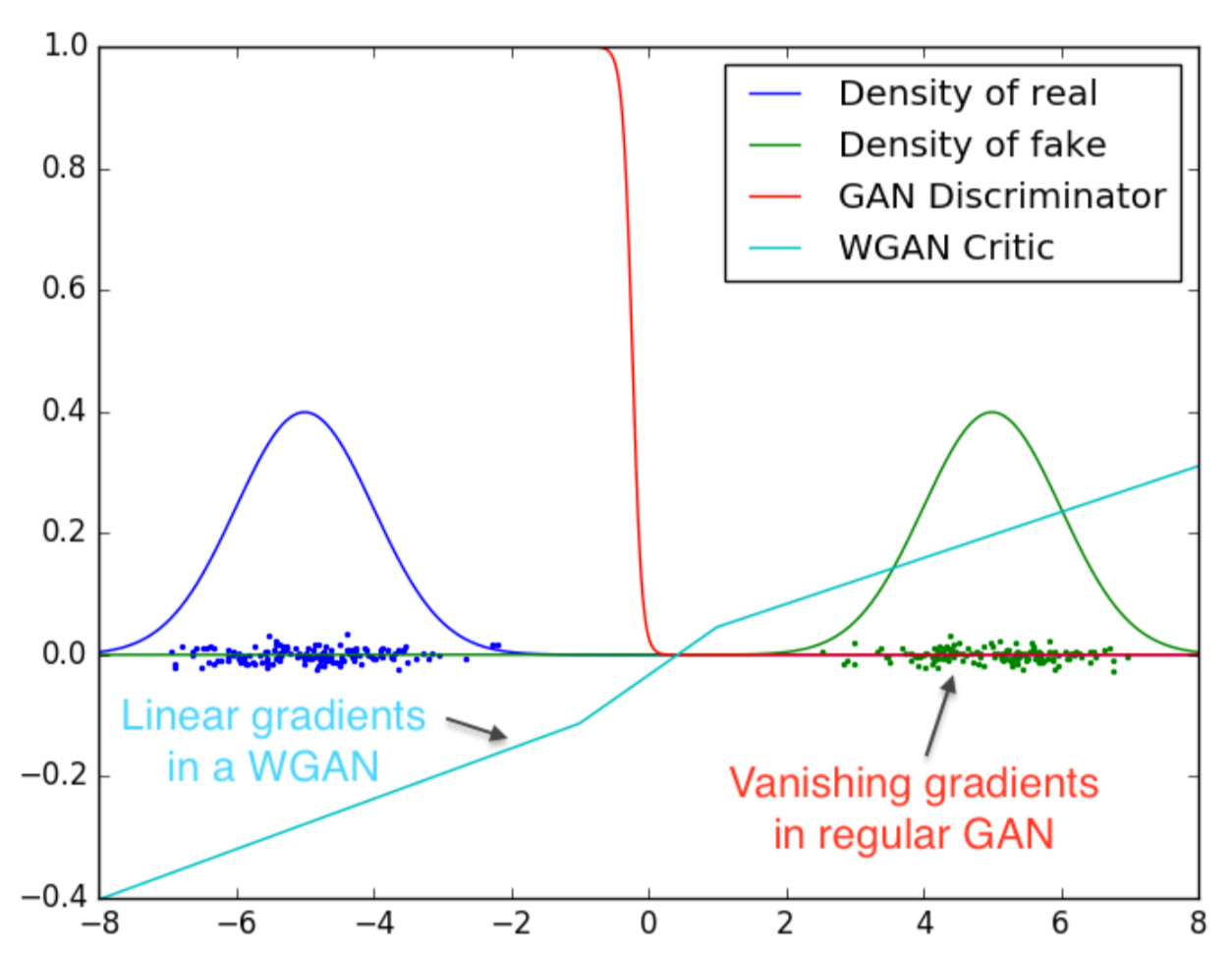

Wasserstein GAN

Definition

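Wasserstein GAN (WGAN) is a variant of GAN that uses the Wasserstein Distance instead of the Jensen-Shannon Divergence used in traditional GANs. Unlike the JS-Divergence, which saturates at $\log 2$ when the real and generated distributions have disjoint supports, the Wasserstein distance keeps changing smoothly as the generated distribution moves, so it provides useful gradients almost everywhere.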
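The difference is easy to see in one dimension, where the Wasserstein-1 distance between two equal-size empirical samples is the mean absolute difference of their sorted values. A minimal sketch (the function name is hypothetical) that moves a "generated" sample away from a "real" one:

```python
def wasserstein_1d(a, b):
    """W1 distance between two equal-size 1D empirical samples.

    In 1D the optimal transport plan pairs the sorted samples in order.
    """
    if len(a) != len(b):
        raise ValueError("samples must have the same size")
    return sum(abs(x - y) for x, y in zip(sorted(a), sorted(b))) / len(a)

real = [0.0, 0.0, 0.0]
# Generated samples placed at increasing distances from the real ones
distances = [wasserstein_1d(real, [shift] * 3) for shift in (1.0, 2.0, 4.0)]
# distances -> [1.0, 2.0, 4.0]
```

Every shifted sample has support disjoint from the real one, so the JS-Divergence would be $\log 2$ in all three cases, while W1 grows with the shift and therefore tells the generator which way to move.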

Architecture

In WGAN, the discriminator of traditional GAN is replaced by a critic that is trained to approximate the Wasserstein Distance. The critic outputs a real number instead of a probability.

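Since the Wasserstein Distance is intractable to compute directly (it is an infimum over all joint distributions of real and generated samples), the cost function is rewritten using the Kantorovich-Rubinstein duality,

$$W(p_{data}, p_g) = \sup_{\|f\|_L \le 1} \mathbb{E}_{x \sim p_{data}}[f(x)] - \mathbb{E}_{x \sim p_g}[f(x)],$$

which requires the critic to be 1-Lipschitz continuous. To enforce this condition approximately, the weights of the critic are clipped to a fixed range $[-c, c]$.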

Objective Function

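The objective function of WGAN is defined as

$$\min_G \max_{D \in \mathcal{D}} \mathbb{E}_{x \sim p_{data}}[D(x)] - \mathbb{E}_{z \sim p_z}[D(G(z))]$$

where:

- $G$ is the generator

- $D$ is the critic, and $\mathcal{D}$ is the set of 1-Lipschitz functions

- $p_{data}$ is the distribution of the input data

- $p_z$ is the distribution of noise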

WGAN-GP

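Instead of clipping the weights, WGAN-GP penalizes the critic when the norm of its gradient with respect to its input moves away from the target value 1.

The critic loss of WGAN-GP adds a gradient penalty term:

$$L = L_{critic} + \lambda\, \mathbb{E}_{\hat{x} \sim p_{\hat{x}}}\left[\left(\|\nabla_{\hat{x}} D(\hat{x})\|_2 - 1\right)^2\right]$$

where $L_{critic} = \mathbb{E}_{z \sim p_z}[D(G(z))] - \mathbb{E}_{x \sim p_{data}}[D(x)]$ is the critic loss, $\hat{x} = \epsilon x + (1 - \epsilon) G(z)$ with $\epsilon \sim U[0, 1]$ is a random point on the line between a real and a generated sample, and $\lambda$ is the penalty coefficient.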

This enforces the Lipschitz constraint more effectively than weight clipping.
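A framework-free sketch of the penalty term, evaluated at points $\hat{x} = \epsilon x + (1 - \epsilon)\tilde{x}$ between real samples $x$ and generated samples $\tilde{x}$. Real implementations use automatic differentiation; here the gradient $\nabla_{\hat{x}} D(\hat{x})$ is estimated by central differences so the block stays self-contained. The critic is any Python function of a vector; the helper names and the finite-difference step are assumptions, and $\lambda = 10$ is the value used in the WGAN-GP paper:

```python
import math

def numerical_grad(f, x, h=1e-5):
    # Central-difference estimate of the gradient of a scalar function f at x
    grad = []
    for i in range(len(x)):
        up = list(x)
        up[i] += h
        down = list(x)
        down[i] -= h
        grad.append((f(up) - f(down)) / (2 * h))
    return grad

def gradient_penalty(critic, real, fake, eps, lam=10.0):
    """lam * batch mean of (||grad critic(x_hat)||_2 - 1)^2.

    eps[i] is the interpolation weight for pair i (normally drawn from U[0, 1]).
    """
    total = 0.0
    for x, x_tilde, e in zip(real, fake, eps):
        x_hat = [e * a + (1 - e) * b for a, b in zip(x, x_tilde)]
        g = numerical_grad(critic, x_hat)
        total += (math.sqrt(sum(v * v for v in g)) - 1.0) ** 2
    return lam * total / len(real)

def linear_critic(v):
    # Hypothetical critic with gradient (3, 4), i.e. gradient norm 5 everywhere
    return 3.0 * v[0] + 4.0 * v[1]

gp = gradient_penalty(linear_critic, [[0.0, 0.0]], [[1.0, 2.0]], eps=[0.3])
# gp is close to 10 * (5 - 1) ** 2 = 160
```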

Algorithm

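$\alpha$: the learning rate, $c$: the clipping parameter, $m$: the batch size, $n_{critic}$: the number of iterations of the critic per generator iteration, $w_0$: the initial critic parameters, $\theta_0$: the initial generator's parameters.

While $\theta$ has not converged:

- for $t = 0, \dots, n_{critic}$:

  - Sample a batch $\{x^{(i)}\}_{i=1}^{m} \sim p_{data}$ from the real data.

  - Sample a batch $\{z^{(i)}\}_{i=1}^{m} \sim p_z$ from the noise distribution.

  - $g_w \leftarrow \nabla_w \left[\frac{1}{m}\sum_{i=1}^{m} D_w(x^{(i)}) - \frac{1}{m}\sum_{i=1}^{m} D_w(G_\theta(z^{(i)}))\right]$

  - $w \leftarrow w + \alpha \cdot \mathrm{RMSProp}(w, g_w)$

  - $w \leftarrow \mathrm{clip}(w, -c, c)$

- Sample a batch $\{z^{(i)}\}_{i=1}^{m} \sim p_z$ from the noise distribution.

- $g_\theta \leftarrow -\nabla_\theta \frac{1}{m}\sum_{i=1}^{m} D_w(G_\theta(z^{(i)}))$

- $\theta \leftarrow \theta - \alpha \cdot \mathrm{RMSProp}(\theta, g_\theta)$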
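To see the weight-clipping training loop run end to end, here is a deliberately tiny 1D instance. Everything about the setup is an assumption chosen so the gradients have closed forms: real data drawn from $N(3, 1)$, a generator $G_\theta(z) = z + \theta$, a linear critic $D_w(x) = w x$ (which is $c$-Lipschitz once $w$ is clipped to $[-c, c]$), and plain SGD steps in place of RMSProp:

```python
import random

def train_toy_wgan(real_mean=3.0, alpha=0.05, c=0.2, m=64, n_critic=5, iters=800, seed=0):
    """Toy 1D WGAN with weight clipping; returns the learned generator shift theta.

    Illustrative assumptions: real data ~ N(real_mean, 1), generator
    G_theta(z) = z + theta with z ~ N(0, 1), linear critic D_w(x) = w * x,
    and plain SGD steps instead of RMSProp.
    """
    rng = random.Random(seed)
    w, theta = 0.0, 0.0
    for _ in range(iters):
        for _ in range(n_critic):
            real = [rng.gauss(real_mean, 1.0) for _ in range(m)]
            fake = [rng.gauss(0.0, 1.0) + theta for _ in range(m)]
            # g_w = grad_w [mean D_w(real) - mean D_w(fake)] = mean(real) - mean(fake)
            g_w = sum(real) / m - sum(fake) / m
            w = w + alpha * g_w       # ascend the critic objective
            w = max(-c, min(c, w))    # clip(w, -c, c)
        # g_theta = -grad_theta mean D_w(z + theta) = -w; with a linear critic this
        # does not depend on the sampled z, so no new noise batch is needed here
        g_theta = -w
        theta = theta - alpha * g_theta
    return theta

theta = train_toy_wgan()
```

Because a linear critic can only compare means, this toy generator merely learns to shift its samples toward the real mean; it illustrates the structure of the updates, not the modelling power of WGAN.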

GAN-Based Image-to-Image Translation Models

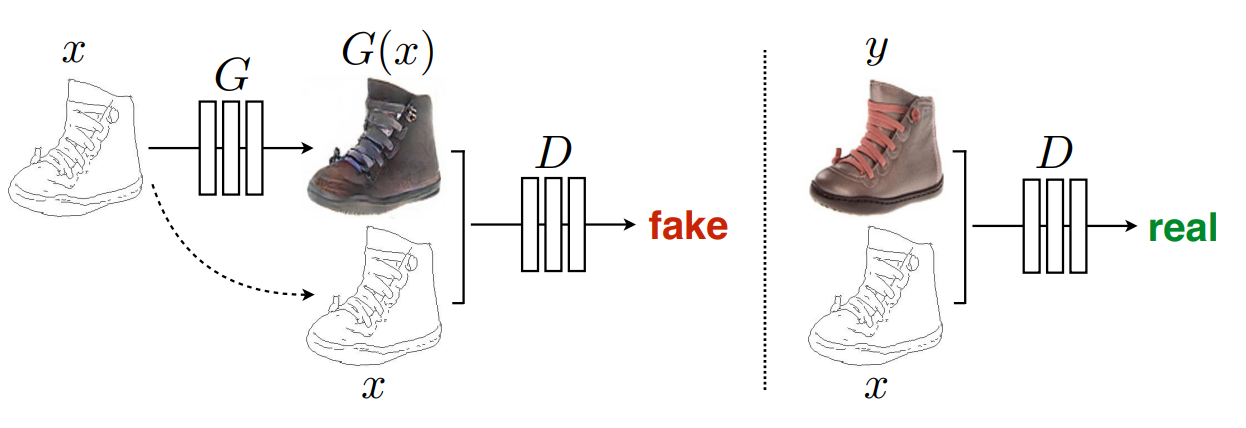

Pix2pix

Definition

Pix2pix is a Conditional GAN model designed for image-to-image translation tasks.

Architecture

The generator of the model has a U-Net architecture. The pix2pix model takes an input image and learns to generate a corresponding output image. The training set consists of pairs of corresponding images $(x, y)$: an input image $x$ and its target output image $y$.

Objective Function

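The objective function of the Pix2pix model is defined as

$$G^* = \arg\min_G \max_D \mathcal{L}_{cGAN}(G, D) + \lambda \mathcal{L}_{L1}(G)$$

where

$$\mathcal{L}_{cGAN}(G, D) = \mathbb{E}_{x, y}[\log D(x, y)] + \mathbb{E}_{x, z}[\log(1 - D(x, G(x, z)))]$$

$$\mathcal{L}_{L1}(G) = \mathbb{E}_{x, y, z}[\|y - G(x, z)\|_1]$$

with $x$ the input image, $y$ the target image, $z$ noise, and $\lambda$ the weight of the L1 term.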
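The generator's side of the pix2pix objective, an adversarial term $\log(1 - D(x, G(x, z)))$ plus $\lambda$ times the L1 distance between the target $y$ and $G(x, z)$, can be sketched per image pair with images flattened to lists of pixel values. $\lambda = 100$ is the weight used in the pix2pix paper's experiments; averaging the L1 term over pixels (instead of summing) and the function names are assumptions:

```python
import math

def l1_distance(y, y_fake):
    # Mean absolute error between target pixels y and generated pixels G(x, z)
    return sum(abs(a - b) for a, b in zip(y, y_fake)) / len(y)

def pix2pix_generator_loss(d_fake, y, y_fake, lam=100.0):
    """Generator loss for one image pair.

    d_fake: discriminator output D(x, G(x, z)) in (0, 1)
    The adversarial part uses log(1 - D), matching the minimax objective.
    """
    adversarial = math.log(1.0 - d_fake)
    return adversarial + lam * l1_distance(y, y_fake)
```

The L1 term ties the output to the specific target image, while the adversarial term pushes it toward realistic texture.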


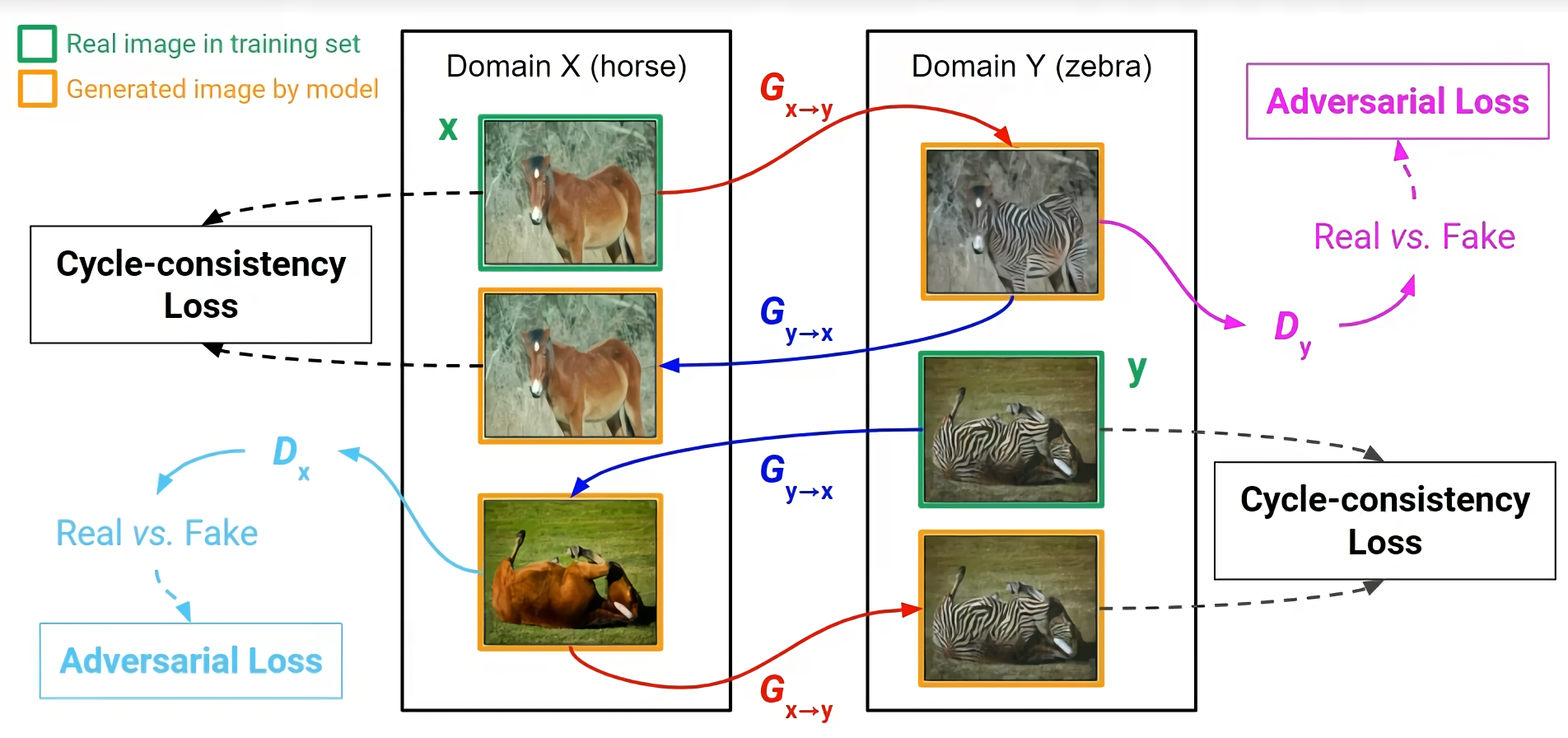

CycleGAN

Definition

CycleGAN is an unsupervised image-to-image translation model. It can be trained without paired training examples.

Architecture

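CycleGAN consists of two GANs, $(G: X \to Y, D_Y)$ and $(F: Y \to X, D_X)$, and is additionally trained by minimizing a cycle-consistency loss, which requires every image from domain $X$ or domain $Y$ to be recovered after being translated to the other domain and back.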

Objective Function

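The objective function of CycleGAN consists of three losses: adversarial loss ($G$, $D_Y$), adversarial loss ($F$, $D_X$), and cycle-consistency loss.

Adversarial loss ($G$, $D_Y$):

$$\mathcal{L}_{GAN}(G, D_Y, X, Y) = \mathbb{E}_{y \sim p_{data}(y)}[\log D_Y(y)] + \mathbb{E}_{x \sim p_{data}(x)}[\log(1 - D_Y(G(x)))]$$

Adversarial loss ($F$, $D_X$):

$$\mathcal{L}_{GAN}(F, D_X, Y, X) = \mathbb{E}_{x \sim p_{data}(x)}[\log D_X(x)] + \mathbb{E}_{y \sim p_{data}(y)}[\log(1 - D_X(F(y)))]$$

Cycle-consistency loss:

$$\mathcal{L}_{cyc}(G, F) = \mathbb{E}_{x \sim p_{data}(x)}[\|F(G(x)) - x\|_1] + \mathbb{E}_{y \sim p_{data}(y)}[\|G(F(y)) - y\|_1]$$

where:

- $G: X \to Y$ and $F: Y \to X$ are the generators

- $D_X$ and $D_Y$ are the discriminators

- $p_{data}(x)$ is the distribution of the data from domain $X$

- $p_{data}(y)$ is the distribution of the data from domain $Y$

The full objective function for CycleGAN can be written as:

$$\mathcal{L}(G, F, D_X, D_Y) = \mathcal{L}_{GAN}(G, D_Y, X, Y) + \mathcal{L}_{GAN}(F, D_X, Y, X) + \lambda \mathcal{L}_{cyc}(G, F)$$

$$G^*, F^* = \arg\min_{G, F} \max_{D_X, D_Y} \mathcal{L}(G, F, D_X, D_Y)$$

where $\lambda$ is the weight for the cycle consistency loss.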
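The cycle-consistency term, $\|F(G(x)) - x\|_1 + \|G(F(y)) - y\|_1$ averaged over samples, can be sketched with plain functions standing in for the two generators. The toy translators below are hypothetical: one pair is exactly inverse (zero loss), the other discards information (positive loss). Summing absolute pixel differences per image is an assumption about normalization:

```python
def l1(a, b):
    # Sum of absolute pixel differences ||a - b||_1
    return sum(abs(u - v) for u, v in zip(a, b))

def cycle_consistency_loss(G, F, xs, ys):
    """Minibatch estimate of L_cyc(G, F) from unpaired samples.

    G maps domain X to Y, F maps domain Y to X.
    """
    forward = sum(l1(F(G(x)), x) for x in xs) / len(xs)   # x -> G(x) -> F(G(x)) should return x
    backward = sum(l1(G(F(y)), y) for y in ys) / len(ys)  # y -> F(y) -> G(F(y)) should return y
    return forward + backward

# Hypothetical toy translators acting on 2-pixel images
def double(img):
    return [2 * v for v in img]

def halve(img):
    return [v / 2 for v in img]

def erase(img):
    return [0.0 for _ in img]

xs, ys = [[1.0, 2.0]], [[4.0, 6.0]]
exact = cycle_consistency_loss(double, halve, xs, ys)  # 0.0
lossy = cycle_consistency_loss(double, erase, xs, ys)  # 3.0 + 10.0 = 13.0
```

The loss only asks $F$ to undo $G$ and vice versa; it is the adversarial terms that push $G(x)$ to look like an image from domain $Y$.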


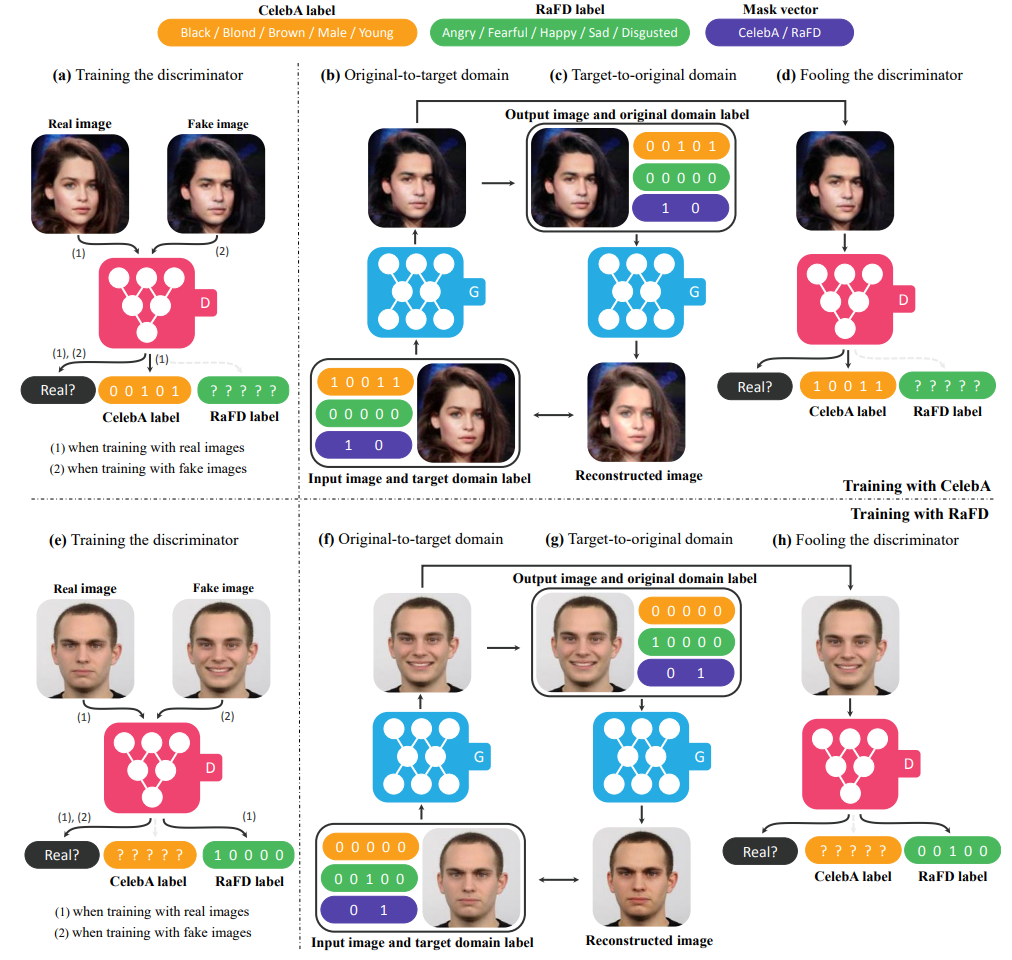

StarGAN

Definition

StarGAN is a GAN model designed for multi-domain image-to-image translation tasks. Unlike previous models that required separate networks for each domain pair, StarGAN can perform image translations across multiple domains using a single generator network.

Architecture

The generator takes an input image and a target domain label and produces the translated image. The discriminator not only distinguishes between real and fake images but also classifies the domain of the input image.

Mask Vector

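StarGAN introduces a mask vector to handle training on multiple datasets whose domain labels are only partially known: the mask indicates which dataset's labels are specified, allowing the model to ignore the unspecified labels during training.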
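In the StarGAN paper the mask is a one-hot vector over datasets, appended to the concatenated labels of all datasets, with the unknown labels set to zero. A sketch of building such a unified label for one example; the function name and the example dataset sizes are hypothetical:

```python
def unified_label(label_sizes, dataset_index, known_label):
    """Build a StarGAN-style label [c_1, ..., c_n, m] for one example.

    label_sizes:   number of label entries for each of the n datasets
    dataset_index: which dataset the example comes from
    known_label:   that dataset's label vector; other datasets' labels are unknown
    """
    if len(known_label) != label_sizes[dataset_index]:
        raise ValueError("label size does not match its dataset")
    parts = []
    for i, size in enumerate(label_sizes):
        # Unknown labels are zero-filled; the mask tells the model to ignore them
        parts.extend(known_label if i == dataset_index else [0.0] * size)
    mask = [1.0 if i == dataset_index else 0.0 for i in range(len(label_sizes))]
    return parts + mask

# Hypothetical setup: dataset 0 has 3 binary attributes, dataset 1 has 2 classes
label = unified_label([3, 2], dataset_index=1, known_label=[0.0, 1.0])
# label -> [0.0, 0.0, 0.0, 0.0, 1.0, 0.0, 1.0]
```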

Objective Function

The objective function of StarGAN consists of three losses: adversarial loss, domain classification loss, and reconstruction loss. The adversarial loss ensures the generated images are realistic, the domain classification loss helps the model learn domain-specific features, and the reconstruction loss ensures the original image can be reconstructed when translating back to the source domain.

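Adversarial loss:

$$\mathcal{L}_{adv} = \mathbb{E}_{x \sim p_{data}}[\log D_{src}(x)] + \mathbb{E}_{x \sim p_{data}, c}[\log(1 - D_{src}(G(x, c)))]$$

Domain classification loss (on real images, used to train $D$, and on fake images, used to train $G$):

$$\mathcal{L}_{cls}^{r} = \mathbb{E}_{x, c'}[-\log D_{cls}(c' \mid x)], \qquad \mathcal{L}_{cls}^{f} = \mathbb{E}_{x, c}[-\log D_{cls}(c \mid G(x, c))]$$

Reconstruction loss:

$$\mathcal{L}_{rec} = \mathbb{E}_{x, c, c'}[\|x - G(G(x, c), c')\|_1]$$

where:

- $G$ is the generator

- $D$ is the discriminator

- $D_{src}$ is the discriminator's source prediction (real or fake)

- $D_{cls}$ is the discriminator's domain classification

- $p_{data}$ is the distribution of the input data

- $c$ is the target domain label.

- $c'$ is the original domain label.

The full objective function for StarGAN can be written as:

$$\min_{\theta_D} \mathcal{L}_D = -\mathcal{L}_{adv} + \lambda_{cls} \mathcal{L}_{cls}^{r}, \qquad \min_{\theta_G} \mathcal{L}_G = \mathcal{L}_{adv} + \lambda_{cls} \mathcal{L}_{cls}^{f} + \lambda_{rec} \mathcal{L}_{rec}$$

where:

- $\theta_G$ and $\theta_D$ are the parameters of the generator and discriminator, respectively

- $\lambda_{cls}$ and $\lambda_{rec}$ are hyperparameters controlling the importance of each loss term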

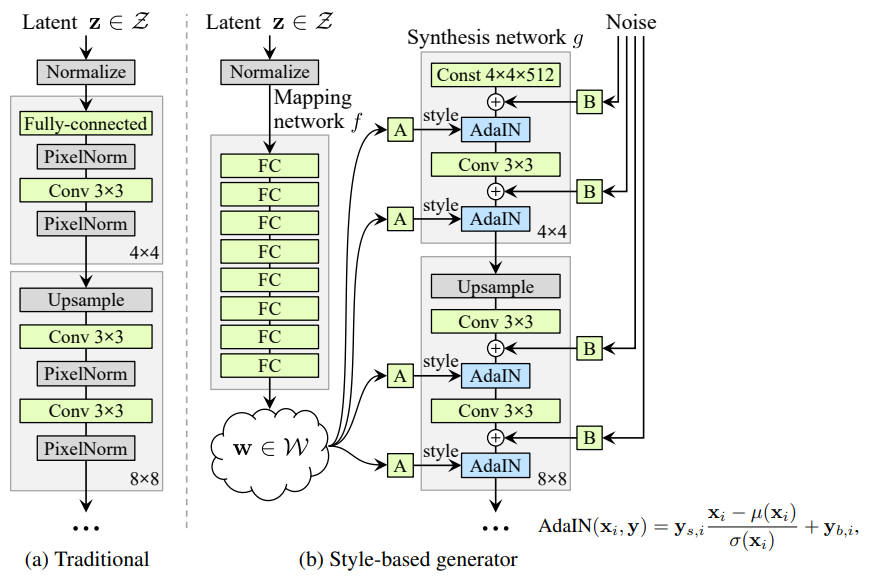

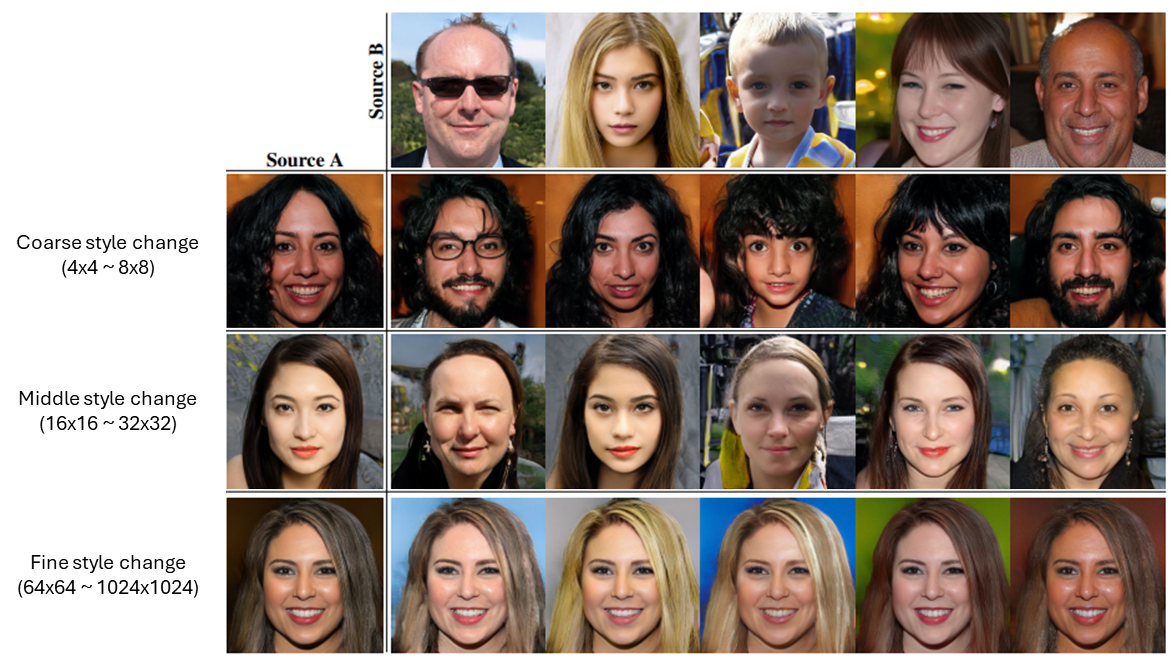

StyleGAN

Definition

StyleGAN is a GAN architecture with a redesigned generator. The generator learns, without supervision, to separate high-level attributes (e.g., pose) from stochastic variation in the generated images.

Architecture

Mapping Network

Instead of feeding the input latent vector $z$ directly into the generator, StyleGAN uses a mapping network $f: \mathcal{Z} \to \mathcal{W}$ (an 8-layer MLP in the original paper) to map $z \in \mathcal{Z}$ into an intermediate latent space $\mathcal{W}$.

Adaptive Instance Normalization (AdaIN)

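The AdaIN operation is defined as

$$\mathrm{AdaIN}(x_i, y) = y_{s,i} \frac{x_i - \mu(x_i)}{\sigma(x_i)} + y_{b,i}$$

where $x_i$ is the $i$-th feature map, $y_{s,i}$ and $y_{b,i}$ are the style scale and bias, and $\mu(x_i)$ and $\sigma(x_i)$ are the mean and standard deviation of $x_i$.

The styles $y = (y_s, y_b)$ are not learned directly; they are generated by a learned affine transformation of the intermediate latent vector $w$: $y = Aw + b$, where $A$ and $b$ are the trained weight and bias, respectively.

AdaIN is used to inject the style information at each layer of the generator.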
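A minimal sketch of AdaIN on one feature map, together with the affine map that produces its style from $w$. Feature maps are flattened to lists, the small $\epsilon$ added to $\sigma$ for numerical safety is an assumption, and the weights are made-up numbers for illustration:

```python
import math

def adain(feature_map, y_s, y_b, eps=1e-8):
    """AdaIN for a single feature map x_i given as a flat list of activations.

    Normalizes x_i to zero mean and unit standard deviation, then applies the
    style scale y_s and bias y_b.
    """
    n = len(feature_map)
    mu = sum(feature_map) / n
    sigma = math.sqrt(sum((v - mu) ** 2 for v in feature_map) / n) + eps  # eps avoids division by zero
    return [y_s * (v - mu) / sigma + y_b for v in feature_map]

def styles_from_w(w, A, b):
    # Learned affine map y = A w + b, with A and b given as plain lists
    return [sum(a_ij * w_j for a_ij, w_j in zip(row, w)) + b_i for row, b_i in zip(A, b)]

# Hypothetical 2-D w producing one (scale, bias) pair for a single feature map
y_s, y_b = styles_from_w([1.0, 0.5], A=[[2.0, 0.0], [0.0, 2.0]], b=[0.0, 3.0])
styled = adain([1.0, 2.0, 3.0, 4.0], y_s, y_b)
# styled has mean y_b = 4.0 and standard deviation close to y_s = 2.0
```

Whatever statistics the incoming feature map had, the output statistics are set by the style, which is why each AdaIN layer overrides the style injected at the previous one.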

Stochastic variation

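A noise image (single-channel, uncorrelated Gaussian noise) is added to each layer of the synthesis network, broadcast to all feature maps with learned per-feature scaling factors. It provides the stochastic variation in the generated images.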

Progressive Growing

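The network starts by generating low-resolution images and progressively increases the resolution. Which attributes a style controls depends on the resolution at which it is injected through AdaIN: styles injected at coarse resolutions affect high-level attributes such as pose, while styles injected at fine resolutions affect finer details such as the color scheme.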


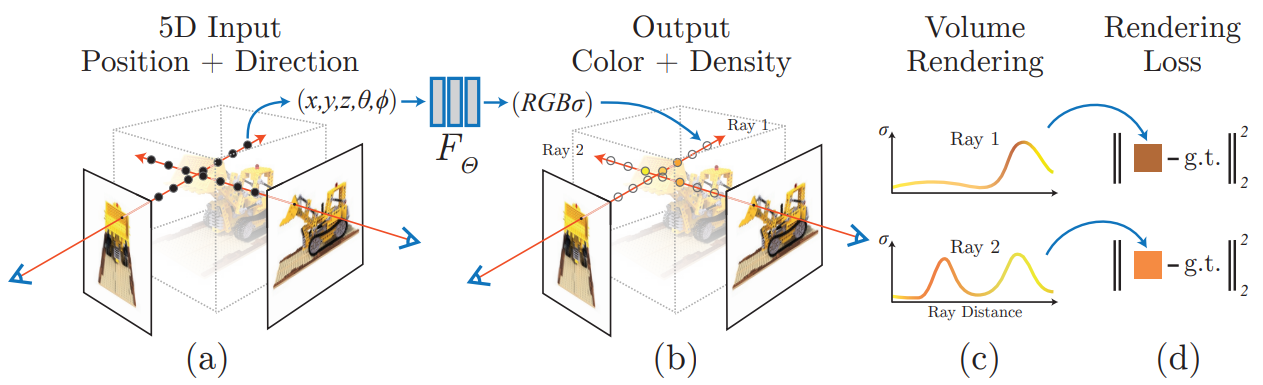

Neural Radiance Fields

Neural Radiance Fields

Definition

Neural Radiance Fields (NeRF) is a 3D scene representation and rendering model.

Architecture

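NeRF represents a 3D scene as a continuous volumetric function learned by a Neural Network. The function maps 3D coordinates and 2D viewing directions to color and density values:

$$F_\Theta: (\mathbf{x}, \mathbf{d}) \mapsto (\mathbf{c}, \sigma)$$

where $\mathbf{x} = (x, y, z)$ is the 3D location, $\mathbf{d}$ is the viewing direction, $\mathbf{c} = (r, g, b)$ is the color, and $\sigma$ is the volume density.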

Volume Rendering

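The expected (predicted) color of a camera ray $\mathbf{r}(t) = \mathbf{o} + t\mathbf{d}$ is

$$\hat{C}(\mathbf{r}) = \int_{t_n}^{t_f} T(t)\, \sigma(\mathbf{r}(t))\, \mathbf{c}(\mathbf{r}(t), \mathbf{d})\, dt, \qquad T(t) = \exp\left(-\int_{t_n}^{t} \sigma(\mathbf{r}(s))\, ds\right)$$

where:

- $t_n$ and $t_f$ are near and far bounds.

- $\mathbf{r}(t)$ is the camera ray through a pixel, with origin $\mathbf{o}$ and direction $\mathbf{d}$.

- $T(t)$ denotes the accumulated transmittance along the ray from $t_n$ to $t$.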
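In practice the rendering integral is approximated by sampling points $t_1 < \dots < t_N$ along the ray, as in the NeRF paper: $\hat{C}(\mathbf{r}) \approx \sum_i T_i \,(1 - e^{-\sigma_i \delta_i})\, \mathbf{c}_i$ with $T_i = \exp(-\sum_{j<i} \sigma_j \delta_j)$ and $\delta_i = t_{i+1} - t_i$. A plain-Python sketch for a single ray; the function name and treating the last interval as effectively infinite are assumptions:

```python
import math

def render_ray(sigmas, colors, t_vals):
    """Quadrature estimate of the color of one ray from N samples.

    sigmas: densities sigma_i at the sample points
    colors: RGB tuples c_i at the sample points
    t_vals: increasing sample positions t_i between t_n and t_f
    """
    # Distances between adjacent samples; the last interval is treated as unbounded
    deltas = [t_vals[i + 1] - t_vals[i] for i in range(len(t_vals) - 1)] + [1e10]
    rgb = [0.0, 0.0, 0.0]
    transmittance = 1.0  # T_i = exp(-sum_{j<i} sigma_j * delta_j)
    for sigma, color, delta in zip(sigmas, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this segment
        weight = transmittance * alpha
        rgb = [acc + weight * ch for acc, ch in zip(rgb, color)]
        transmittance *= 1.0 - alpha  # light surviving past this segment
    return rgb
```

Empty space (zero density) contributes nothing, and an opaque sample hides everything behind it because the transmittance drops to zero.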

Loss Function

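The loss function of the NeRF model is defined as

$$\mathcal{L} = \sum_{\mathbf{r} \in \mathcal{R}} \left\| \hat{C}(\mathbf{r}) - C(\mathbf{r}) \right\|_2^2$$

where $\mathcal{R}$ is the set of rays in the training images, $\hat{C}(\mathbf{r})$ is the color predicted by rendering the points on ray $\mathbf{r}$, and $C(\mathbf{r})$ is the ground truth color for ray $\mathbf{r}$.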

