Definition

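ResNet (Residual Network) is a deep convolutional neural network (CNN) architecture. It was designed to address the degradation problem in very deep networks: as plain networks get deeper, training accuracy saturates and then drops, even though the extra layers could in principle just learn identity mappings.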

Architecture

Skip Connection

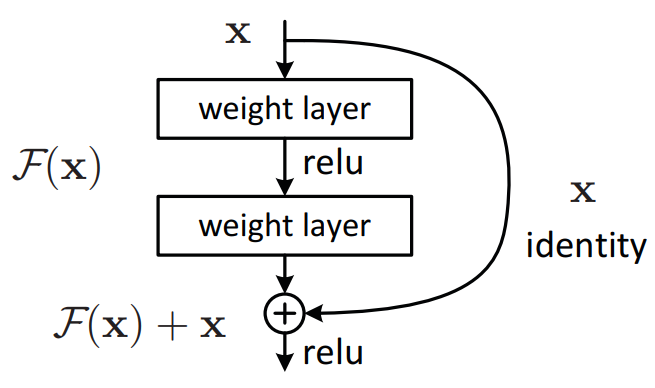

The core innovation of ResNet is the introduction of skip connections (also called shortcut or residual connections). These connections let the signal bypass one or more layers, creating a direct path for information flow. The shortcut performs an identity mapping, so a block can easily learn the identity function when needed by driving its residual branch toward zero.

The residual block is represented as $y = F(x) + x$, where $x$ is the input to the block, $F(x)$ is the learnable residual mapping (typically several layers), and $y$ is the output of the block.
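A minimal NumPy sketch of $y = F(x) + x$, assuming a residual branch made of two fully connected layers with a ReLU in between as a stand-in for the convolutional layers. The function names are hypothetical, and the ReLU that the original ResNet applies after the addition is left out to match the equation. Setting the branch weights to zero shows why the identity is easy to learn.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def residual_mapping(x, w1, w2):
    # F(x): two dense layers with a ReLU in between (stand-in for conv layers)
    return w2 @ relu(w1 @ x)

def residual_block(x, w1, w2):
    # y = F(x) + x: the shortcut adds the unchanged input to the residual branch
    return residual_mapping(x, w1, w2) + x

rng = np.random.default_rng(0)
d = 4
x = rng.standard_normal(d)

# With random weights, the block outputs the input plus a learned correction F(x)
w1 = rng.standard_normal((d, d))
w2 = rng.standard_normal((d, d))
y = residual_block(x, w1, w2)

# If the residual branch is driven to zero, the block reduces to the identity
zeros = np.zeros((d, d))
y_identity = residual_block(x, zeros, zeros)
print(np.allclose(y_identity, x))  # True
```

The layers only have to learn the correction $F(x) = y - x$ rather than the whole mapping, which is the point of the residual formulation.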

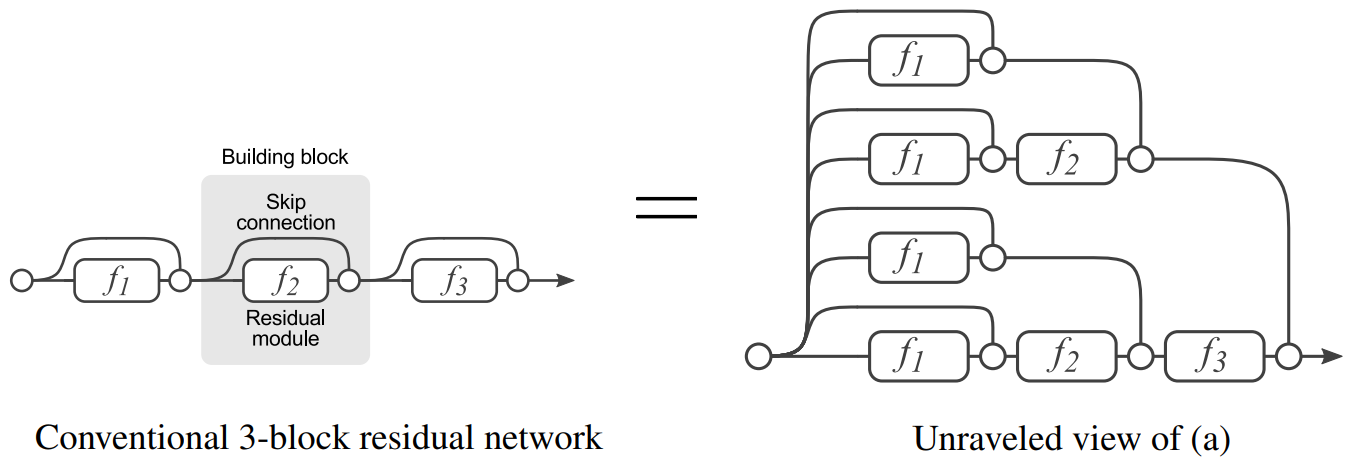

Skip connections create a mixture of deep and shallow models: $n$ skip connections make $2^n$ possible paths, where each path could have up to $n$ modules.
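The path count can be checked by unrolling a small network. This sketch assumes purely linear residual branches $F_i(v) = A_i v$ (a hypothetical simplification), because then the output of $n$ stacked blocks is exactly the sum over all $2^n$ paths; with nonlinear branches the unrolled view is conceptual rather than a literal sum.

```python
import itertools
from math import comb

import numpy as np

rng = np.random.default_rng(0)
n, d = 3, 4
# Hypothetical linear residual branches F_i(v) = A_i @ v; linearity makes the path expansion exact
A = [0.1 * rng.standard_normal((d, d)) for _ in range(n)]
x = rng.standard_normal(d)

# Sequential forward pass through n residual blocks: h <- h + F_i(h)
h = x.copy()
for A_i in A:
    h = h + A_i @ h

# Unrolled view: at each block a path either enters the branch (1) or takes the shortcut (0)
paths = list(itertools.product([0, 1], repeat=n))
unrolled = np.zeros(d)
for choice in paths:
    v = x.copy()
    for use_branch, A_i in zip(choice, A):
        if use_branch:
            v = A_i @ v
    unrolled += v

print(len(paths))                # 8, i.e. 2**n
print(np.allclose(h, unrolled))  # True: the output is the sum over all paths

# How many paths pass through exactly k residual modules (k = 0..n)
path_lengths = [sum(1 for c in paths if sum(c) == k) for k in range(n + 1)]
print(path_lengths)                                          # [1, 3, 3, 1]
print(path_lengths == [comb(n, k) for k in range(n + 1)])    # True
```

Path lengths follow a binomial distribution, so most paths have medium length and only one path goes through all $n$ modules; this is the sense in which the network mixes deep and shallow models.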

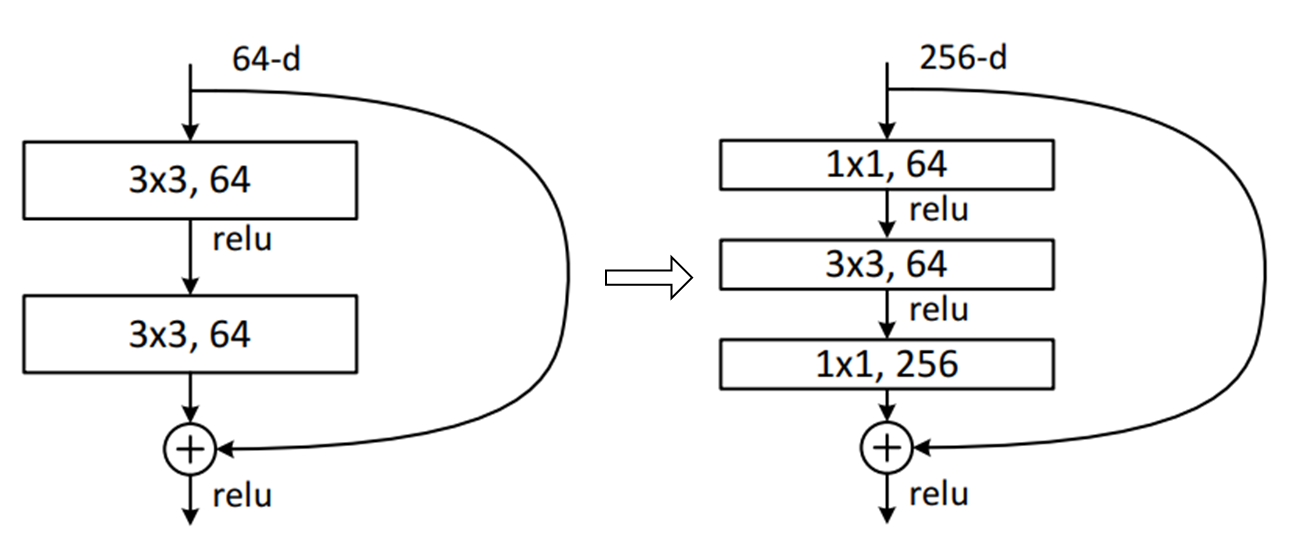

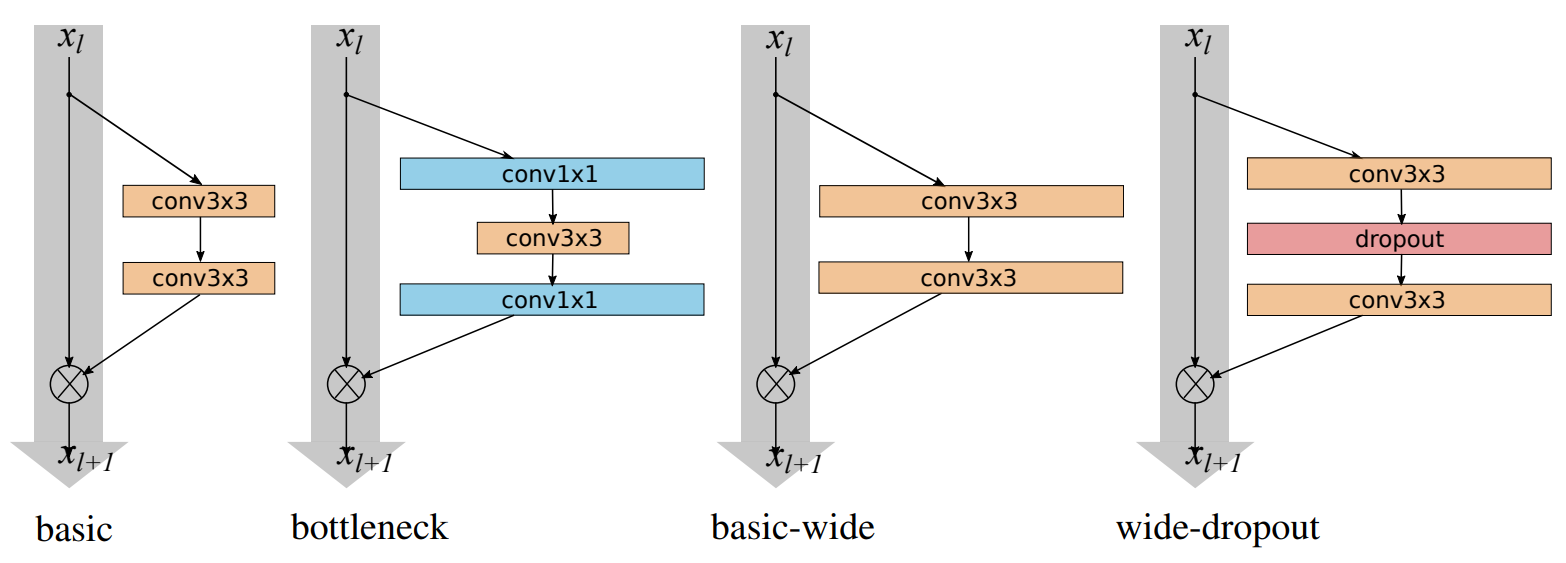

Bottleneck Block

The bottleneck architecture is used in deeper versions of ResNet to improve computational efficiency while maintaining or increasing the network’s representational power. The bottleneck block consists of three layers in sequence: $1\times1$, $3\times3$, and $1\times1$ convolutions. The first $1\times1$ convolution reduces the number of channels, the $3\times3$ convolution operates on the reduced representation, and the second $1\times1$ convolution increases the number of channels back to the original.
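A minimal NumPy sketch of a bottleneck block on a single feature map of shape (channels, height, width), assuming the 256 → 64 → 256 channel layout of the deeper ResNets, stride 1, and no batch normalization. The helper names are hypothetical. It also counts the convolution weights to show where the savings come from.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def conv1x1(x, w):
    # x: (C_in, H, W), w: (C_out, C_in); mixes channels independently at each pixel
    return np.einsum("oc,chw->ohw", w, x)

def conv3x3(x, w):
    # x: (C_in, H, W), w: (C_out, C_in, 3, 3); stride 1 and zero padding 1 keep H and W
    _, h, width = x.shape
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros((w.shape[0], h, width))
    for i in range(3):
        for j in range(3):
            out += np.einsum("oc,chw->ohw", w[:, :, i, j], xp[:, i:i + h, j:j + width])
    return out

def bottleneck(x, w_reduce, w_3x3, w_expand):
    # 1x1 reduce -> 3x3 on the thin representation -> 1x1 expand, then add the identity shortcut
    out = relu(conv1x1(x, w_reduce))
    out = relu(conv3x3(out, w_3x3))
    out = conv1x1(out, w_expand)
    return relu(out + x)

def n_weights(*ws):
    return sum(w.size for w in ws)

rng = np.random.default_rng(0)
c, mid = 256, 64
x = rng.standard_normal((c, 8, 8))
w_reduce = 0.01 * rng.standard_normal((mid, c))
w_3x3 = 0.01 * rng.standard_normal((mid, mid, 3, 3))
w_expand = 0.01 * rng.standard_normal((c, mid))

y = bottleneck(x, w_reduce, w_3x3, w_expand)
print(y.shape)                                # (256, 8, 8)
print(n_weights(w_reduce, w_3x3, w_expand))   # 69632
# Two plain 3x3 convolutions at 256 channels would need 2 * 256 * 256 * 9 weights
print(2 * c * c * 9)                          # 1179648
```

The expensive $3\times3$ convolution only ever sees 64 channels, which is why the bottleneck stays cheap even though the block's input and output are 256 channels wide.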

Architecture Variants

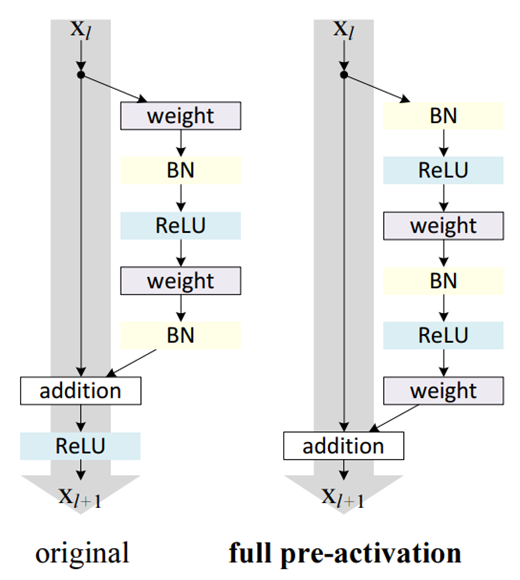

Full Pre-Activation

Full pre-activation is an improvement to the original ResNet architecture, aimed at improving the flow of information through the network and making training easier. In full pre-activation, the order of operations in each residual block is changed so that batch normalization and the ReLU activation come before each convolution (BN, ReLU, convolution, repeated twice), and no activation is applied after the addition. The shortcut path therefore remains a pure identity mapping.
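A sketch contrasting the two operation orders, assuming dense layers (`x @ w`) in place of convolutions and a simplified batch norm without learnable scale and shift. The function names are hypothetical. Switching off the residual branch exposes the difference: the pre-activation block passes its input through unchanged, while the original block still applies a ReLU to the shortcut signal.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def batch_norm(z, eps=1e-5):
    # Per-feature normalization over the batch axis; learnable scale/shift omitted for brevity
    return (z - z.mean(axis=0)) / np.sqrt(z.var(axis=0) + eps)

def original_block(x, w1, w2):
    # Original (post-activation) order: weight -> BN -> ReLU -> weight -> BN, add shortcut, then ReLU
    out = relu(batch_norm(x @ w1))
    out = batch_norm(out @ w2)
    return relu(out + x)

def preact_block(x, w1, w2):
    # Full pre-activation order: BN -> ReLU -> weight -> BN -> ReLU -> weight, add shortcut, nothing after
    out = relu(batch_norm(x)) @ w1
    out = relu(batch_norm(out)) @ w2
    return out + x

rng = np.random.default_rng(0)
batch, d = 8, 4
x = rng.standard_normal((batch, d))
w1 = 0.1 * rng.standard_normal((d, d))
w2 = 0.1 * rng.standard_normal((d, d))
print(original_block(x, w1, w2).shape, preact_block(x, w1, w2).shape)  # (8, 4) (8, 4)

# With the residual branch switched off (zero weights):
zeros = np.zeros((d, d))
print(np.allclose(preact_block(x, zeros, zeros), x))          # True: clean identity
print(np.allclose(original_block(x, zeros, zeros), relu(x)))  # True: shortcut is clipped by ReLU
```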

WideResNet

WideResNet increases the number of channels in the residual blocks (by a widening factor $k$) rather than increasing the network's depth.
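A back-of-the-envelope sketch of what widening costs, assuming a basic block of two $3\times3$ convolutions with equal input and output channels and ignoring biases and batch-norm parameters. The base width of 16 is an illustrative value and the helper name is hypothetical.

```python
def basic_block_weights(channels: int) -> int:
    # Convolution weights in a basic residual block: two 3x3 convs, channels -> channels
    # (biases and batch-norm parameters are ignored)
    return 2 * 3 * 3 * channels * channels

base_width = 16  # illustrative base width, scaled by the widening factor k
for k in (1, 2, 4, 10):
    width = base_width * k
    print(f"k={k:>2}  width={width:>3}  weights per block={basic_block_weights(width)}")

# Doubling the width multiplies the per-block cost by 4,
# while doubling the depth (twice as many blocks) only doubles the total.
print(basic_block_weights(2 * base_width) / basic_block_weights(base_width))  # 4.0
```

Because weights grow with $k^2$, a moderately wide and shallow network can match the parameter budget of a much deeper thin one.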

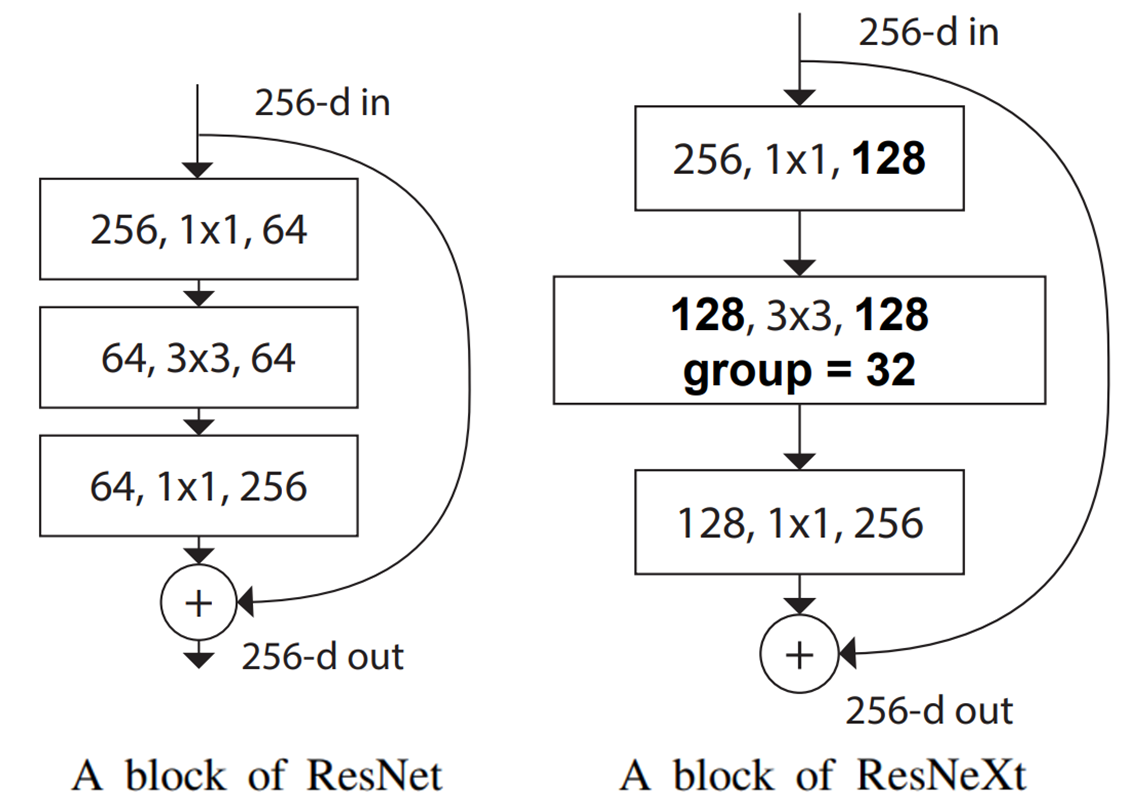

ResNeXt

The ResNeXt model replaces the $3\times3$ convolution in ResNet's bottleneck block with a grouped convolution, in which the channels are split into groups (the number of groups is called the cardinality) that are convolved independently. ResNeXt achieves better accuracy than ResNet at a similar number of parameters, thanks to the more efficient use of model capacity provided by grouped convolution.
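A NumPy sketch of a grouped $3\times3$ convolution, assuming 128 channels split into 32 groups of 4 (the 32x4d layout of ResNeXt-50's first stage), stride 1, and zero padding 1. The helper names are hypothetical. The check confirms that a grouped convolution is the same as a dense convolution whose weight is block-diagonal over the channel groups, which is where the parameter savings come from.

```python
import numpy as np

def conv3x3(x, w):
    # x: (C_in, H, W), w: (C_out, C_in, 3, 3); stride 1 and zero padding 1 keep H and W
    _, h, width = x.shape
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros((w.shape[0], h, width))
    for i in range(3):
        for j in range(3):
            out += np.einsum("oc,chw->ohw", w[:, :, i, j], xp[:, i:i + h, j:j + width])
    return out

def grouped_conv3x3(x, w_groups):
    # Split input channels into len(w_groups) groups, convolve each with its own weights, concatenate
    xs = np.split(x, len(w_groups), axis=0)
    return np.concatenate([conv3x3(xg, wg) for xg, wg in zip(xs, w_groups)], axis=0)

rng = np.random.default_rng(0)
c, groups = 128, 32
cg = c // groups  # 4 channels per group
x = rng.standard_normal((c, 6, 6))
w_groups = [rng.standard_normal((cg, cg, 3, 3)) for _ in range(groups)]
y = grouped_conv3x3(x, w_groups)

# The same layer expressed as a dense conv with a block-diagonal weight
w_dense = np.zeros((c, c, 3, 3))
for g, wg in enumerate(w_groups):
    w_dense[g * cg:(g + 1) * cg, g * cg:(g + 1) * cg] = wg
print(np.allclose(y, conv3x3(x, w_dense)))              # True
print(sum(w.size for w in w_groups), w_dense.size)      # 4608 147456
```

With 32 groups the $3\times3$ layer needs 32 times fewer weights than a dense one of the same width; ResNeXt spends that budget on wider bottlenecks.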