Definition

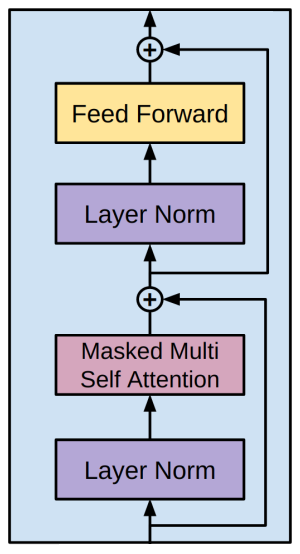

GPT is based on the Transformer architecture, using only the decoder portion of the original Transformer model (without the encoder-decoder cross-attention). Each layer applies masked self-attention to the input sequence, so every token can attend only to itself and to earlier tokens.
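The masking idea can be illustrated with a minimal single-head sketch in NumPy. The weight matrices `w_q`, `w_k`, `w_v` are random placeholders rather than trained parameters. Multiple heads, positional embeddings, and the feed-forward sublayer of a real GPT layer are deliberately omitted.

```python
import numpy as np

def causal_self_attention(x, w_q, w_k, w_v):
    """Single-head masked self-attention over x of shape (T, d_model)."""
    q, k, v = x @ w_q, x @ w_k, x @ w_v          # (T, d_k) each
    d_k = q.shape[-1]
    scores = q @ k.T / np.sqrt(d_k)             # (T, T) scaled similarity scores
    # Causal mask: position i may only attend to positions j <= i.
    t = x.shape[0]
    future = np.triu(np.ones((t, t), dtype=bool), k=1)
    scores = np.where(future, -np.inf, scores)
    # Row-wise softmax; subtracting the row max keeps exp() numerically stable.
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ v, weights

rng = np.random.default_rng(0)
T, d_model, d_k = 4, 8, 8
x = rng.normal(size=(T, d_model))
w_q, w_k, w_v = (rng.normal(size=(d_model, d_k)) for _ in range(3))
out, attn = causal_self_attention(x, w_q, w_k, w_v)
print(out.shape)          # (4, 8)
print(np.round(attn, 2))  # entries above the diagonal are all zero
```

Because future positions receive a score of negative infinity before the softmax, their attention weights become exactly zero. This is what lets the same network be trained on every prefix of a sequence in parallel.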

GPT-1

Next Token Prediction

GPT-1 was pre-trained on the BooksCorpus dataset using a semi-supervised approach: unsupervised pre-training to predict the next token in a sequence, followed by supervised fine-tuning on each target task. This pre-training allowed the model to learn general language patterns and representations (vector representations of tokens) that transfer to downstream tasks.
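Next-token prediction maximizes the log-likelihood $\sum_i \log P(u_i \mid u_{i-k}, \dots, u_{i-1}; \Theta)$ over a corpus of tokens $u$ with context window $k$, which is the same as minimizing the cross-entropy between each position's prediction and the token that actually comes next. The sketch below computes that loss in NumPy; the logits are hand-made placeholders standing in for model outputs.

```python
import numpy as np

def next_token_loss(logits, tokens):
    """Average cross-entropy for predicting tokens[i + 1] from position i.

    logits: (T, V) model outputs, one row per input position.
    tokens: (T,) integer token ids of the same sequence.
    """
    # Shift by one: the output at position i is scored against the token at i + 1.
    pred = logits[:-1]          # (T - 1, V)
    target = tokens[1:]         # (T - 1,)
    # Numerically stable log-softmax over the vocabulary.
    pred = pred - pred.max(axis=-1, keepdims=True)
    log_probs = pred - np.log(np.exp(pred).sum(axis=-1, keepdims=True))
    # Negative log-likelihood of each correct next token, averaged.
    nll = -log_probs[np.arange(len(target)), target]
    return nll.mean()

tokens = np.array([2, 0, 3, 1])
uniform = np.zeros((4, 5))      # vocabulary of 5, every token equally likely
print(next_token_loss(uniform, tokens))  # equals log(5), about 1.609
```

A model that guesses uniformly pays $\log V$ per token; training pushes the loss below that by assigning higher probability to the tokens that actually follow.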

GPT-2

GPT-2 was trained on a much larger dataset (WebText, about 40 GB of text from roughly 8 million web pages). It can perform downstream tasks without task-specific fine-tuning, demonstrating strong zero-shot learning capabilities.

In the GPT-2 model, Layer Normalization is moved to the input of each sub-block, similar to a pre-activation residual network (ResNet), and an additional Layer Normalization is added after the final self-attention block.
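The difference between the two orderings fits in a few lines. In this NumPy sketch the learned gain and bias of Layer Normalization are omitted, and the `sublayer` function is a hypothetical stand-in for either the attention or the feed-forward sub-block.

```python
import numpy as np

def layer_norm(x, eps=1e-5):
    """Normalize each row to zero mean and unit variance (learned gain/bias omitted)."""
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def post_ln_block(x, sublayer):
    # Original Transformer ordering: add the residual, then normalize the sum.
    return layer_norm(x + sublayer(x))

def pre_ln_block(x, sublayer):
    # GPT-2 ordering: normalize the sub-block input; the residual path is left untouched.
    return x + sublayer(layer_norm(x))

rng = np.random.default_rng(0)
w = rng.normal(size=(8, 8)) * 0.1

def sublayer(h):
    # Hypothetical stand-in for an attention or MLP sub-block.
    return np.tanh(h @ w)

x = rng.normal(size=(4, 8))
for _ in range(2):          # stack two pre-LN blocks
    x = pre_ln_block(x, sublayer)
x = layer_norm(x)           # the extra normalization after the final block
```

With the pre-LN ordering, the residual stream passes from block to block without being renormalized, much like the identity path in a pre-activation ResNet.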

GPT-3

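GPT-3 scales up the GPT-2 architecture dramatically, to 175 billion parameters, and trains on a larger dataset. The research shows the effectiveness of few-shot learning: the model performs a new task from a handful of examples given in the prompt, without any gradient updates.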
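Few-shot learning lives entirely in the input text. The sketch below assembles such a prompt; the `=>` separator, the task description, and the example pairs are illustrative assumptions, not a fixed format required by the model.

```python
def few_shot_prompt(task_description, examples, query):
    """Build an in-context prompt: a task description, K solved examples, then the query.

    The model's weights are never updated; the examples appear only in the input.
    """
    lines = [task_description]
    for source, target in examples:
        lines.append(f"{source} => {target}")
    lines.append(f"{query} =>")  # the model is expected to continue from here
    return "\n".join(lines)

prompt = few_shot_prompt(
    "Translate English to French:",
    [("sea otter", "loutre de mer"), ("peppermint", "menthe poivrée")],
    "cheese",
)
print(prompt)
```

Passing an empty `examples` list gives a zero-shot prompt and a single pair gives a one-shot prompt. The model then completes the text after the final `=>`.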

(Figure omitted: attention patterns, where the gray region is masked.)

GPT-3 alternates dense and locally banded sparse attention patterns across the layers of the transformer, similar to the Sparse Transformer.
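The two patterns can be expressed as boolean attention masks, where `True` means a query may attend to a key. This is a sketch under assumptions: the window size, and the choice to make even-numbered layers dense and odd-numbered layers banded, are hypothetical parameters for illustration, not values taken from the GPT-3 paper.

```python
import numpy as np

def dense_causal_mask(t):
    """Query i may attend to every key j <= i."""
    return np.tril(np.ones((t, t), dtype=bool))

def banded_causal_mask(t, window):
    """Locally banded causal mask: query i attends only to keys i - window < j <= i."""
    i = np.arange(t)[:, None]
    j = np.arange(t)[None, :]
    return (j <= i) & (j > i - window)

def layer_masks(n_layers, t, window):
    """Alternate the two patterns layer by layer (even layers dense here, by assumption)."""
    return [
        dense_causal_mask(t) if layer % 2 == 0 else banded_causal_mask(t, window)
        for layer in range(n_layers)
    ]

masks = layer_masks(n_layers=4, t=6, window=3)
print(masks[1].astype(int))  # each row has at most 3 ones, hugging the diagonal
```

A banded layer costs time proportional to `t * window` instead of `t * t`, while the interleaved dense layers still let information flow across the whole context.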