Definition

The Transformer model processes sequences such as sentences with self-attention, a form of attention in which the query (Q), key (K), and value (V) are all derived from the same source. The output vector for each token therefore reflects that token's context. Self-attention is usually repeated over multiple layers to contextualize the representations further.

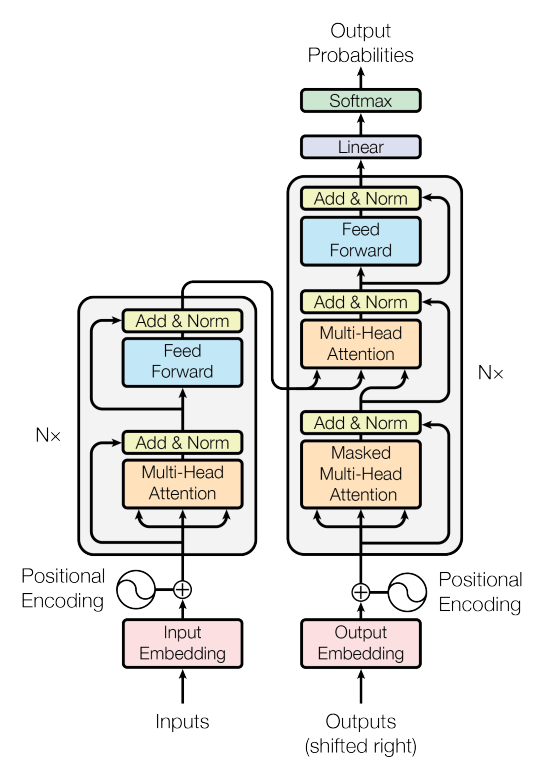

Architecture

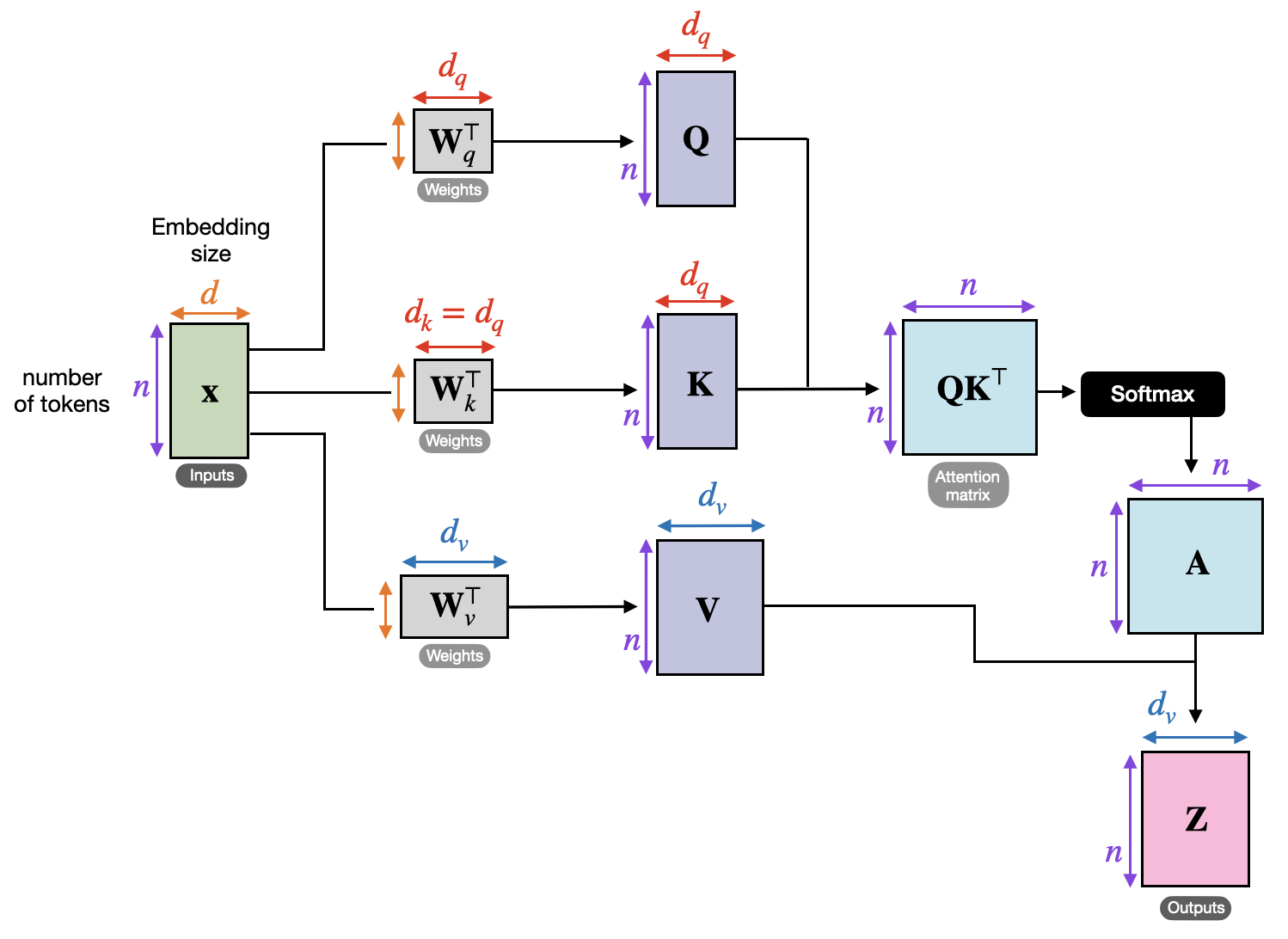

Self-Attention

The initial query (Q), key (K), and value (V) matrices are obtained by applying linear transformations to the input sequence.

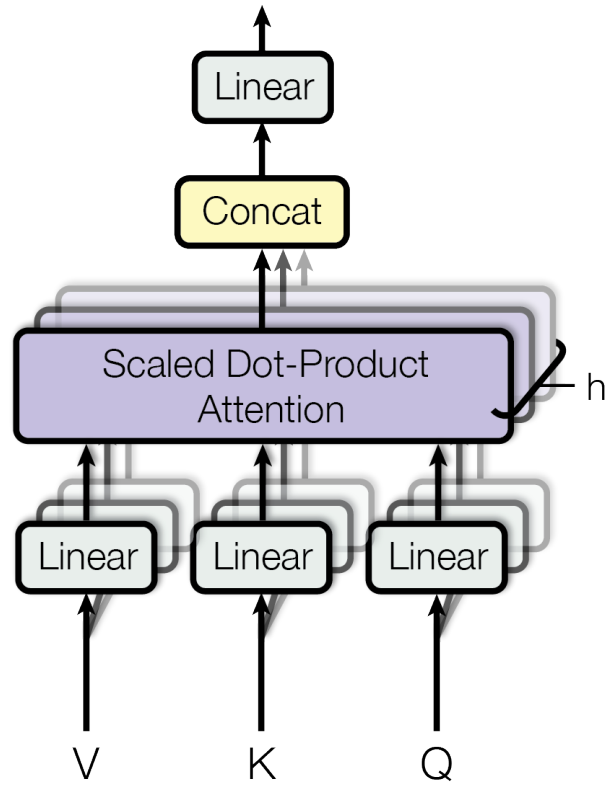
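As a rough illustration, self-attention over a single sequence can be sketched in NumPy. This is a minimal sketch that assumes the standard scaled dot-product form, softmax(QKᵀ / √d_k) V; the names `self_attention`, `W_q`, `W_k`, and `W_v` are illustrative and not taken from the figure above.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the maximum for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(X, W_q, W_k, W_v):
    """X: (seq_len, d_model); W_q, W_k, W_v: (d_model, d_k) projections."""
    # Q, K, and V are all linear transformations of the same input X.
    Q, K, V = X @ W_q, X @ W_k, X @ W_v
    # Similarity of every query with every key, scaled by sqrt(d_k).
    scores = Q @ K.T / np.sqrt(K.shape[-1])   # (seq_len, seq_len)
    weights = softmax(scores, axis=-1)        # each row sums to 1
    # Each output row is a weighted average of the value vectors.
    return weights @ V, weights

rng = np.random.default_rng(0)
X = rng.normal(size=(4, 8))                   # 4 tokens, d_model = 8
W_q, W_k, W_v = (rng.normal(size=(8, 8)) for _ in range(3))
out, weights = self_attention(X, W_q, W_k, W_v)
print(out.shape, weights.shape)
```

Each row of `weights` describes how strongly one token attends to every token in the sequence, which is why the corresponding output row reflects that token's context.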

Multi-head Self-Attention

The Transformer runs multiple attention heads in parallel, analogous to channels in a CNN, which allows it to focus on different aspects of the input simultaneously. The output of multi-head attention is the concatenation of the outputs of the individual attention heads, followed by a linear transformation.

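$$\text{MultiHead}(Q, K, V) = \text{Concat}(\text{head}_1, \dots, \text{head}_h)\, W^O$$

where

$$\text{head}_i = \text{Attention}(Q W_i^Q, K W_i^K, V W_i^V)$$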
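The concatenate-then-project structure of multi-head self-attention can be sketched as follows. This is a minimal NumPy sketch, not a reference implementation: it assumes scaled dot-product attention inside each head and that `d_model` is divisible by `num_heads`. The names `W_q`, `W_k`, `W_v`, `W_o`, and `num_heads` are illustrative.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_self_attention(X, W_q, W_k, W_v, W_o, num_heads):
    """X: (seq_len, d_model); all weight matrices: (d_model, d_model)."""
    seq_len, d_model = X.shape
    d_head = d_model // num_heads  # assumes d_model % num_heads == 0

    def split_heads(M):
        # (seq_len, d_model) -> (num_heads, seq_len, d_head)
        return M.reshape(seq_len, num_heads, d_head).transpose(1, 0, 2)

    Q, K, V = split_heads(X @ W_q), split_heads(X @ W_k), split_heads(X @ W_v)
    # Every head attends independently over the full sequence.
    scores = Q @ K.transpose(0, 2, 1) / np.sqrt(d_head)  # (heads, seq, seq)
    heads = softmax(scores, axis=-1) @ V                 # (heads, seq, d_head)
    # Concatenate the heads along the feature axis, then apply W_o.
    concat = heads.transpose(1, 0, 2).reshape(seq_len, d_model)
    return concat @ W_o

rng = np.random.default_rng(0)
X = rng.normal(size=(5, 16))                  # 5 tokens, d_model = 16
W_q, W_k, W_v, W_o = (rng.normal(size=(16, 16)) for _ in range(4))
out = multi_head_self_attention(X, W_q, W_k, W_v, W_o, num_heads=4)
print(out.shape)
```

Slicing one large projection into `num_heads` column blocks is equivalent to giving each head its own smaller projection matrix, which is the usual way to compute all heads in a single matrix product.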

Feed-Forward Layer

Each layer in the Transformer also contains a feed-forward layer that is applied to each position separately, i.e. there is no cross-token dependency. Within a layer, the same linear transformations are applied at every position; different layers use different parameters.

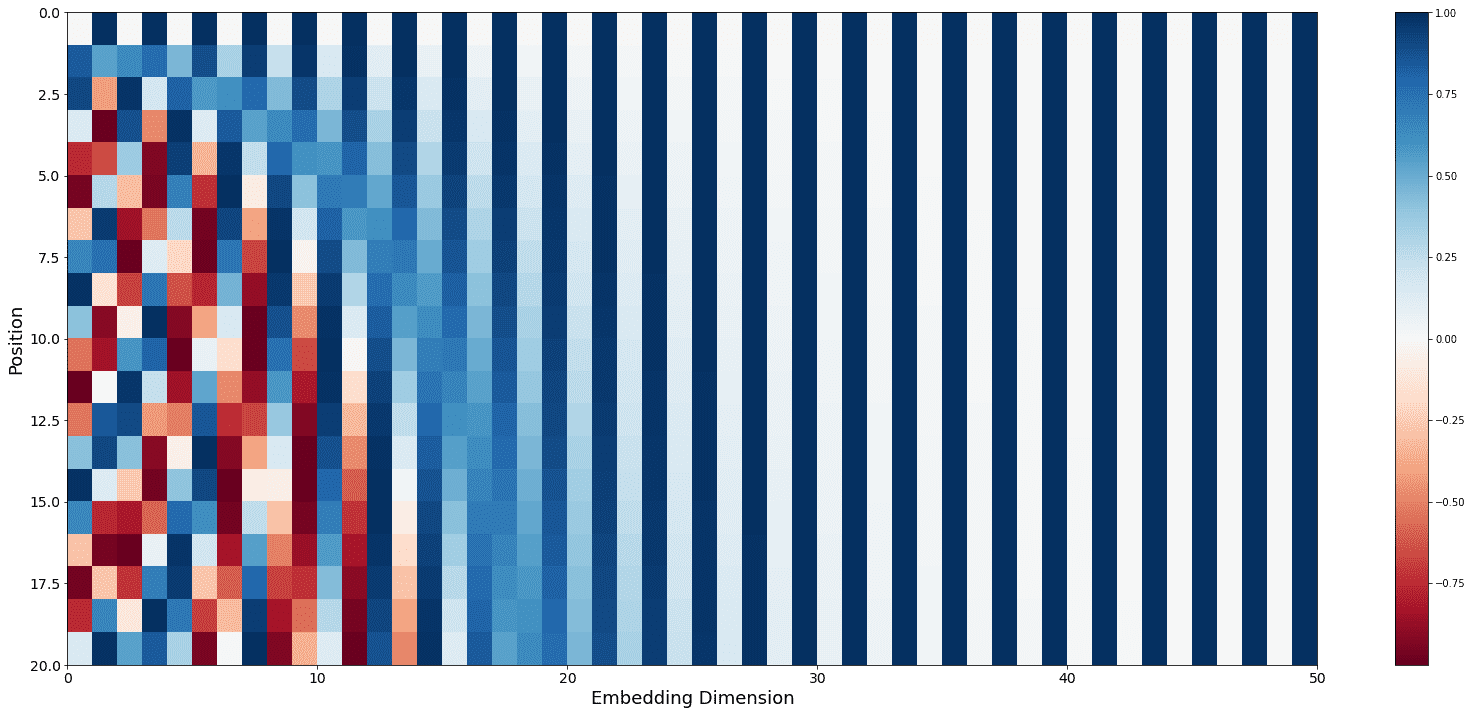
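The position-wise behaviour can be checked with a small NumPy sketch. It assumes the common form of two linear transformations with a ReLU in between; the activation and the names `W1`, `b1`, `W2`, `b2`, and `d_ff` are assumptions made for illustration.

```python
import numpy as np

def feed_forward(X, W1, b1, W2, b2):
    """X: (..., d_model); W1: (d_model, d_ff); W2: (d_ff, d_model)."""
    hidden = np.maximum(0.0, X @ W1 + b1)  # ReLU activation (assumed)
    return hidden @ W2 + b2

rng = np.random.default_rng(0)
seq_len, d_model, d_ff = 5, 8, 32
W1, b1 = rng.normal(size=(d_model, d_ff)), rng.normal(size=d_ff)
W2, b2 = rng.normal(size=(d_ff, d_model)), rng.normal(size=d_model)
X = rng.normal(size=(seq_len, d_model))

# Applying the layer to the whole sequence at once ...
full = feed_forward(X, W1, b1, W2, b2)
# ... gives the same result as applying it to each token on its own,
# because no token's output depends on any other token.
per_token = np.stack([feed_forward(x, W1, b1, W2, b2) for x in X])
print(np.allclose(full, per_token))
```

The same `W1`, `b1`, `W2`, `b2` are reused for every row of `X`, which corresponds to sharing parameters across positions within one layer.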

Positional Encoding

Since the Transformer doesn’t inherently capture sequence order, positional encodings are added to the input embeddings. These are typically sine and cosine functions of different frequencies:

$$\begin{aligned}
PE_{(\text{pos},2i)} &= \sin(\text{pos} / 10000^{2i/d_{\text{model}}})\\
PE_{(\text{pos},2i+1)} &= \cos(\text{pos} / 10000^{2i/d_{\text{model}}})
\end{aligned}$$

## Masked Multi-Head Self-Attention

In the decoder, the self-attention layer is modified to prevent each position from attending to later positions. This is achieved by setting the attention scores of future positions to negative infinity before the [[Softmax Function|softmax]] step, so that their attention weights become zero.

## Encoder-Decoder Attention

The decoder has an additional attention layer that performs multi-head attention over the output of the encoder. Here the query (Q) comes from the previous layer in the decoder, while the key (K) and value (V) come from the output of the encoder.
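The last three ideas (sinusoidal positional encoding, masked self-attention, and encoder-decoder attention) can be sketched together in NumPy. This is a simplified single-head sketch under stated assumptions: the learned Q, K, V projections are omitted for brevity, `d_model` is assumed even, and the helper names `positional_encoding`, `causal_mask`, and `attention` are illustrative.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def positional_encoding(seq_len, d_model):
    # Sine on even feature indices, cosine on odd ones (d_model assumed even).
    pos = np.arange(seq_len)[:, None]          # (seq_len, 1)
    i = np.arange(d_model // 2)[None, :]       # (1, d_model / 2)
    angles = pos / np.power(10000.0, 2 * i / d_model)
    pe = np.zeros((seq_len, d_model))
    pe[:, 0::2] = np.sin(angles)
    pe[:, 1::2] = np.cos(angles)
    return pe

def causal_mask(seq_len):
    # True where attention is allowed: position t may see positions <= t.
    return np.tril(np.ones((seq_len, seq_len), dtype=bool))

def attention(Q, K, V, mask=None):
    scores = Q @ K.T / np.sqrt(K.shape[-1])
    if mask is not None:
        # Disallowed positions get -inf, so softmax gives them weight 0.
        scores = np.where(mask, scores, -np.inf)
    weights = softmax(scores, axis=-1)
    return weights @ V, weights

rng = np.random.default_rng(0)
d_model = 8
enc_out = rng.normal(size=(6, d_model))        # encoder output, 6 source tokens
dec_x = rng.normal(size=(4, d_model)) + positional_encoding(4, d_model)

# Masked self-attention: Q, K, and V all come from the decoder input.
self_out, self_w = attention(dec_x, dec_x, dec_x, mask=causal_mask(4))
# Encoder-decoder attention: Q from the decoder, K and V from the encoder.
cross_out, cross_w = attention(self_out, enc_out, enc_out)
print(self_w.shape, cross_w.shape)
```

The strictly upper triangle of `self_w` is zero, so no decoder position receives information from later positions, while every row of `cross_w` spans all encoder positions.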