Definition

Video Vision Transformer (ViViT) extends the idea of the Vision Transformer (ViT) to the video classification task.

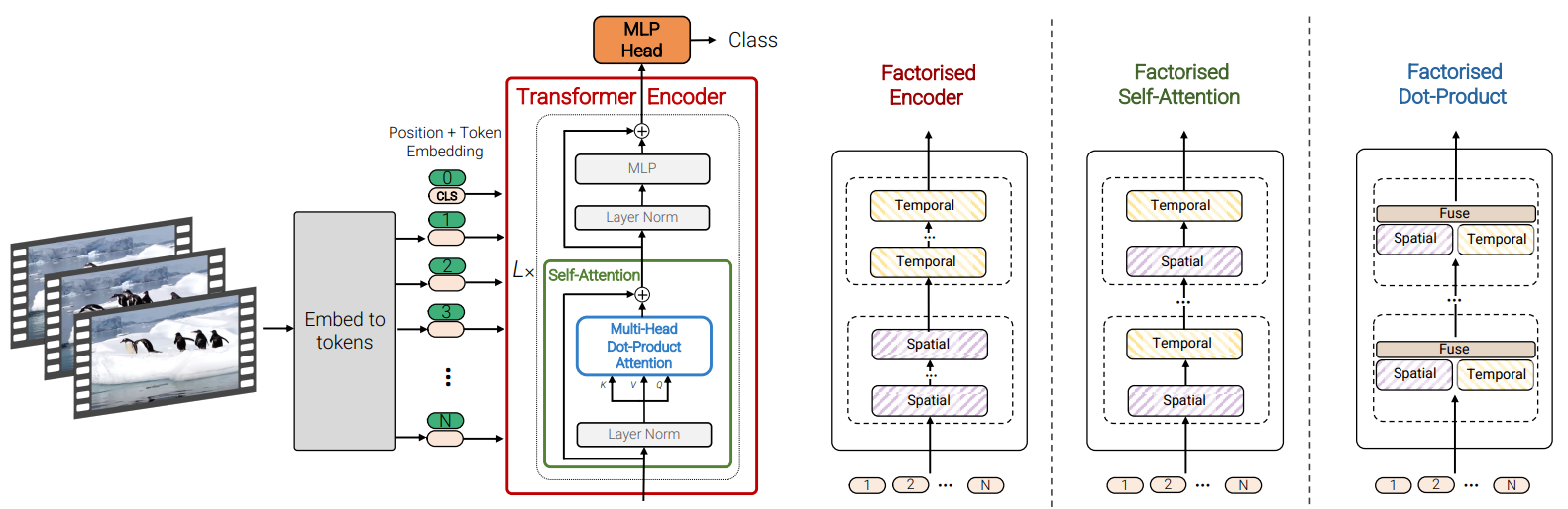

Architecture

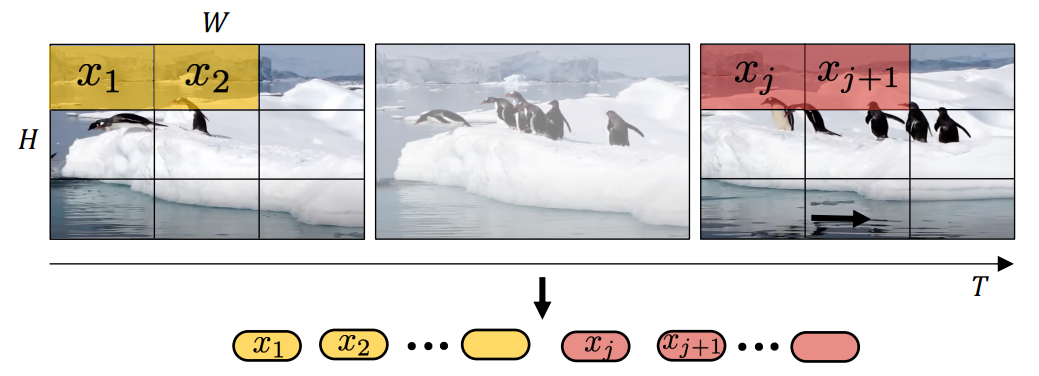

Uniform Frame Sampling

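A fixed number of frames is uniformly sampled from the input video. This handles videos of varying lengths and keeps the computational cost bounded. Each sampled frame is then split into non-overlapping 2D patches and embedded independently, as in ViT, and the tokens from all sampled frames are concatenated into a single sequence.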

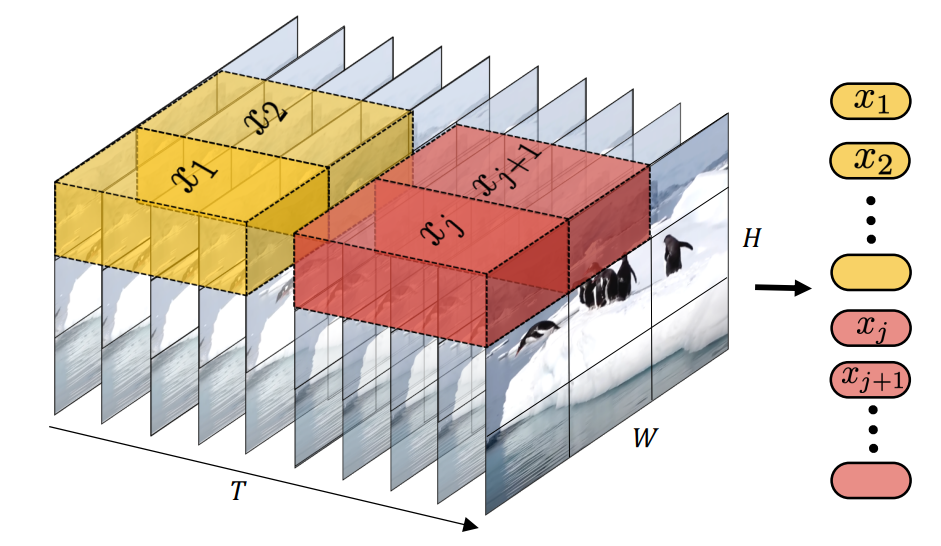
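The notes do not say exactly how "uniformly" is implemented. The sketch below assumes one common choice: split the video into `num_samples` equal segments and take the centre frame of each. It also counts the resulting ViT-style patch tokens. Both function names are hypothetical helpers for illustration.

```python
def uniform_frame_indices(num_video_frames: int, num_samples: int) -> list[int]:
    """Return `num_samples` frame indices spread evenly over the whole video.

    Assumption: index i is the centre of the i-th of `num_samples` equal segments.
    Videos shorter than `num_samples` frames simply repeat some frames.
    """
    if num_video_frames <= 0 or num_samples <= 0:
        raise ValueError("both counts must be positive")
    segment = num_video_frames / num_samples
    # (i + 0.5) * segment is always < num_video_frames, so int() gives a valid index.
    return [int((i + 0.5) * segment) for i in range(num_samples)]


def frame_token_count(num_samples: int, height: int, width: int, patch: int) -> int:
    """Tokens produced when every sampled frame is cut into patch x patch 2D patches."""
    return num_samples * (height // patch) * (width // patch)


print(uniform_frame_indices(300, 8))     # [18, 56, 93, 131, 168, 206, 243, 281]
print(frame_token_count(8, 224, 224, 16))  # 8 frames * 14 * 14 patches = 1568
```

A 300-frame video and a 3,000-frame video both yield exactly 8 frames, so the transformer's sequence length depends only on `num_samples` and the patch size.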

Tubelet Embedding

The input video is divided into a sequence of non-overlapping tubelets. Each tubelet is a 3D patch of the video with dimensions (frames, height, width). Each tubelet is flattened and linearly projected to an embedding vector, giving one token per tubelet, and positional embeddings are added to provide spatial and temporal information. Unlike uniform frame sampling, each token therefore already mixes information from several frames.
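The tubelet split is mostly reshaping. The NumPy sketch below assumes a channels-last clip of shape `(T, H, W, C)`, a tubelet size `(t, h, w)`, a projection matrix `proj`, and a learned positional table `pos_emb`. `tubelet_embed` and these parameter names are hypothetical, chosen for illustration.

```python
import numpy as np


def tubelet_embed(video, t, h, w, proj, pos_emb):
    """video: (T, H, W, C); proj: (t*h*w*C, d); pos_emb: (n_t*n_h*n_w, d)."""
    T, H, W, C = video.shape
    n_t, n_h, n_w = T // t, H // h, W // w
    # Drop any remainder so the tubelets tile the clip exactly without overlap.
    video = video[: n_t * t, : n_h * h, : n_w * w]
    # Split each of the three axes into (number of tubelets, tubelet size) ...
    x = video.reshape(n_t, t, n_h, h, n_w, w, C)
    # ... and move the three tubelet-index axes to the front.
    x = x.transpose(0, 2, 4, 1, 3, 5, 6)              # (n_t, n_h, n_w, t, h, w, C)
    tubelets = x.reshape(n_t * n_h * n_w, t * h * w * C)  # one flattened tubelet per row
    tokens = tubelets @ proj                          # linear projection to d dims
    return tokens + pos_emb                           # add positional embeddings


rng = np.random.default_rng(0)
clip = rng.standard_normal((16, 64, 64, 3))           # 16 frames of 64x64 RGB
d = 32
proj = rng.standard_normal((2 * 16 * 16 * 3, d)) * 0.02
pos_emb = np.zeros((8 * 4 * 4, d))                    # 8 * 4 * 4 = 128 tubelets
print(tubelet_embed(clip, 2, 16, 16, proj, pos_emb).shape)  # (128, 32)
```

The flatten-then-project step is equivalent to a 3D convolution whose kernel size and stride both equal `(t, h, w)`. For example, a 32-frame 224×224 clip with 2×16×16 tubelets gives 16 × 14 × 14 = 3,136 tokens.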

Model Variants

The ViViT paper proposes four model variants.

Spatio-Temporal Attention

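This is the most direct extension of ViT to video. It treats the entire video as a single stream of tokens and applies self-attention across the spatial and temporal dimensions simultaneously. Because every token attends to every other token, the cost grows quadratically with the total number of tokens.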

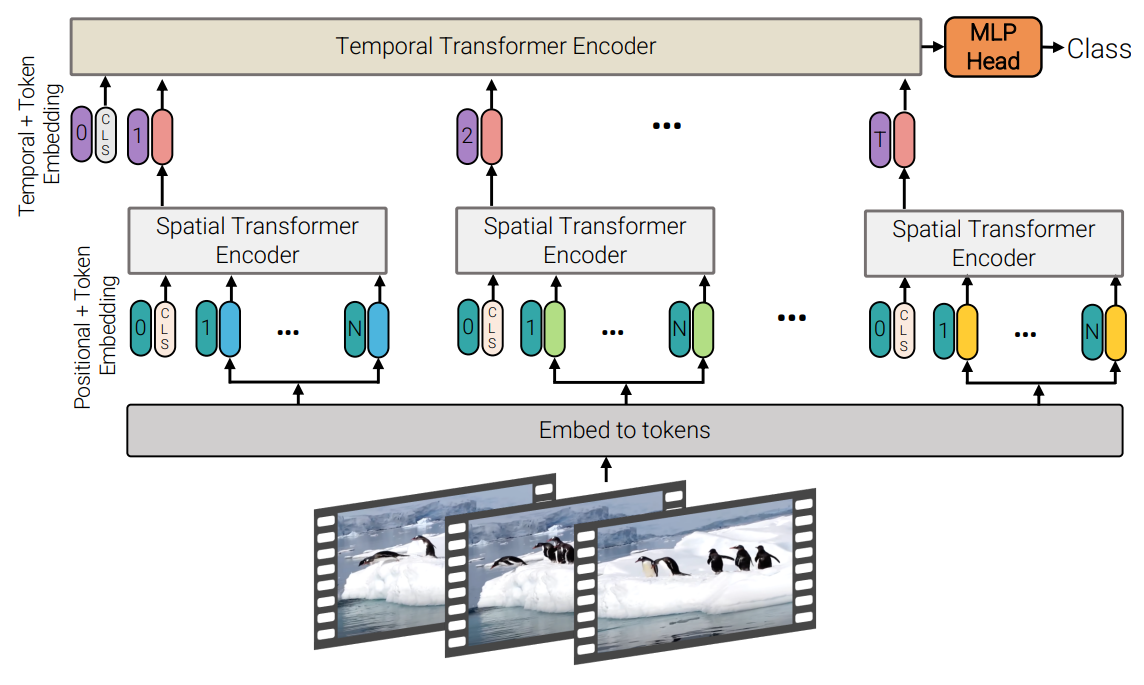
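The sketch below shows a single-head attention layer applied to all video tokens at once. It assumes the tokens are arranged as `(n_t, n_s, d)`, with `n_s = n_h * n_w` spatial positions per time step. It omits multiple heads, layer norm, residuals, and the MLP. The function names are hypothetical.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention(x, wq, wk, wv):
    """Single-head scaled dot-product self-attention over axis -2."""
    q, k, v = x @ wq, x @ wk, x @ wv
    scores = q @ np.swapaxes(k, -1, -2) / np.sqrt(q.shape[-1])
    return softmax(scores) @ v


def spatio_temporal_attention(tokens, wq, wk, wv):
    """tokens: (n_t, n_s, d). Every token attends to all n_t * n_s tokens."""
    n_t, n_s, d = tokens.shape
    flat = tokens.reshape(n_t * n_s, d)      # one sequence holding the whole video
    out = self_attention(flat, wq, wk, wv)   # (N, N) score matrix, N = n_t * n_s
    return out.reshape(n_t, n_s, d)
```

With 8 frames of 196 patches each, N = 1,568, so each head builds a 1,568 × 1,568 score matrix (2,458,624 entries) in every layer.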

Factorized Encoder

This variant uses two separate transformer encoders, one spatial and one temporal. Each frame is fed independently into the spatial encoder (a ViT). The resulting sequence of per-frame output CLS tokens is then used as the input of the temporal encoder (a Transformer).

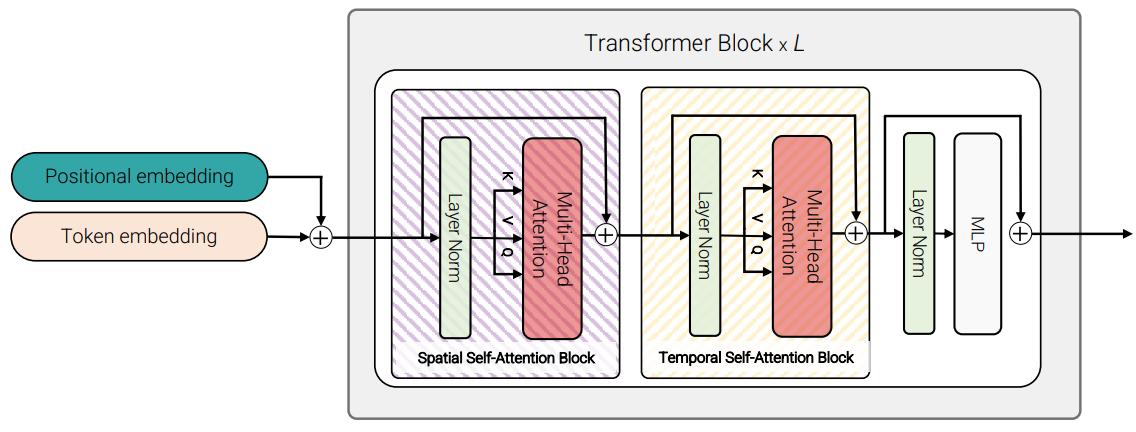
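Here is a minimal sketch of this data flow. It makes three simplifying assumptions. First, each "encoder" is a single residual single-head attention layer rather than a full transformer stack. Second, positional embeddings are already added to `tokens`. Third, a second learned CLS token `cls_t` is prepended for the temporal encoder; pooling the temporal outputs would be an alternative. `attention_layer`, `factorized_encoder`, and the weight dictionaries are hypothetical names.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def attention_layer(x, w):
    """Stand-in for a transformer encoder: one residual single-head attention layer."""
    q, k, v = x @ w["q"], x @ w["k"], x @ w["v"]
    scores = q @ np.swapaxes(k, -1, -2) / np.sqrt(q.shape[-1])
    return x + softmax(scores) @ v


def factorized_encoder(tokens, cls_s, cls_t, w_spatial, w_temporal):
    """tokens: (n_t, n_s, d); cls_s, cls_t: learned (d,) CLS embeddings."""
    n_t, n_s, d = tokens.shape
    # 1) Spatial encoder: every frame is an independent sequence [CLS, patch tokens...].
    cls = np.broadcast_to(cls_s, (n_t, 1, d))
    spatial_in = np.concatenate([cls, tokens], axis=1)        # (n_t, 1 + n_s, d)
    spatial_out = attention_layer(spatial_in, w_spatial)      # batched over frames
    frame_repr = spatial_out[:, 0]                            # per-frame CLS outputs: (n_t, d)
    # 2) Temporal encoder: the n_t frame representations form a new, short sequence.
    temporal_in = np.concatenate([cls_t[None], frame_repr], axis=0)  # (1 + n_t, d)
    temporal_out = attention_layer(temporal_in, w_temporal)
    return temporal_out[0]  # video-level representation for the classification head
```

Spatial interactions are fully resolved before any temporal modelling happens ("late fusion"). The temporal encoder therefore sees only `n_t` tokens, one per frame.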

Factorized Self-Attention

This variant factorizes the self-attention operation within each transformer layer into a spatial and a temporal attention step. Spatial attention is first applied among tokens within the same frame. Temporal attention is then applied among tokens at the same spatial location across frames.

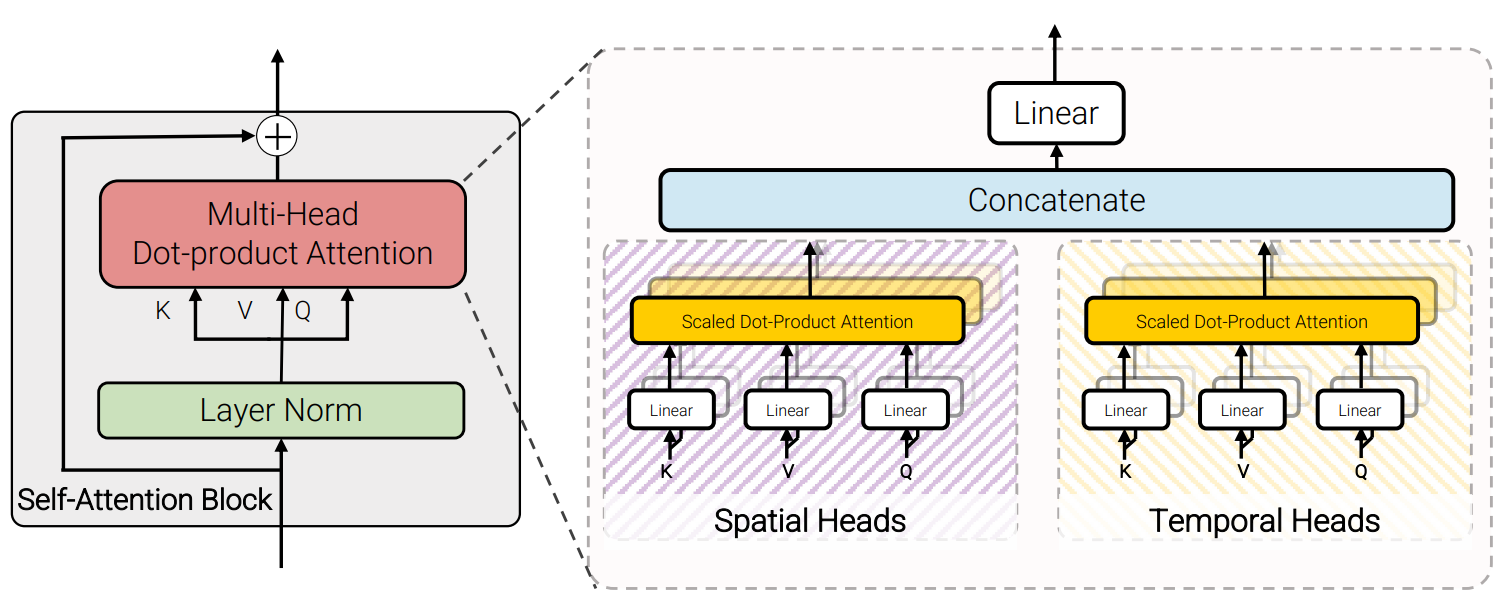
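The factorization amounts to running attention twice on differently grouped views of the same tensor. The sketch below assumes tokens shaped `(n_t, n_s, d)` and single-head attention. It keeps the residual connections and omits layer norm and the MLP. `factorized_self_attention` and the weight tuples are hypothetical names.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention(x, wq, wk, wv):
    """Single-head scaled dot-product attention over axis -2, batched over leading axes."""
    q, k, v = x @ wq, x @ wk, x @ wv
    scores = q @ np.swapaxes(k, -1, -2) / np.sqrt(q.shape[-1])
    return softmax(scores) @ v


def factorized_self_attention(tokens, w_spatial, w_temporal):
    """tokens: (n_t, n_s, d); w_spatial / w_temporal: (wq, wk, wv) tuples of (d, d) arrays."""
    # Spatial step: each frame is a separate sequence of n_s tokens.
    x = tokens + self_attention(tokens, *w_spatial)
    # Temporal step: swap axes so each spatial location is a sequence of n_t tokens.
    xt = np.swapaxes(x, 0, 1)                     # (n_s, n_t, d)
    xt = xt + self_attention(xt, *w_temporal)
    return np.swapaxes(xt, 0, 1)                  # back to (n_t, n_s, d)
```

For 8 frames of 196 patches, one layer computes 8 × 196² + 196 × 8² = 319,872 attention scores. Joint spatio-temporal attention over the same 1,568 tokens would need 1,568² = 2,458,624 scores.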

Factorized Dot-Product Attention

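This variant factorizes the multi-head dot-product attention itself. Half of the heads compute attention only over the spatial dimensions (tokens in the same frame). The other half compute attention only over the temporal dimension (tokens at the same spatial location). The outputs of all heads are then concatenated and linearly projected, as in standard multi-head attention.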
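The sketch below shows one multi-head attention layer in which the first half of the heads attend within a frame and the second half attend across frames. It assumes tokens shaped `(n_t, n_s, d)`, an even `num_heads` that divides `d`, and square projection matrices `wq`, `wk`, `wv`, `wo`. The function names are hypothetical.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def attend(q, k, v):
    """Scaled dot-product attention over axis -2, batched over leading axes."""
    scores = q @ np.swapaxes(k, -1, -2) / np.sqrt(q.shape[-1])
    return softmax(scores) @ v


def factorized_dot_product_attention(tokens, wq, wk, wv, wo, num_heads):
    """tokens: (n_t, n_s, d). Heads [0, H/2) are spatial, heads [H/2, H) are temporal."""
    d = tokens.shape[-1]
    if num_heads % 2 or d % num_heads:
        raise ValueError("num_heads must be even and divide d")
    dh = d // num_heads
    q, k, v = tokens @ wq, tokens @ wk, tokens @ wv
    outputs = []
    for head in range(num_heads):
        cols = slice(head * dh, (head + 1) * dh)   # this head's slice of the features
        qh, kh, vh = q[..., cols], k[..., cols], v[..., cols]
        if head < num_heads // 2:
            # Spatial head: each frame is its own sequence (batched over n_t).
            outputs.append(attend(qh, kh, vh))
        else:
            # Temporal head: each spatial location is its own sequence across frames.
            out = attend(qh.swapaxes(0, 1), kh.swapaxes(0, 1), vh.swapaxes(0, 1))
            outputs.append(out.swapaxes(0, 1))
    # Concatenate all heads and apply the shared output projection.
    return np.concatenate(outputs, axis=-1) @ wo
```

Unlike the factorized self-attention variant, spatial and temporal attention run in parallel within one layer rather than one after the other. The layer keeps the same number of heads and parameters as ordinary multi-head attention.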