Definition

Attention is a mechanism that determines how important each element of a sequence is relative to the other elements of that sequence.

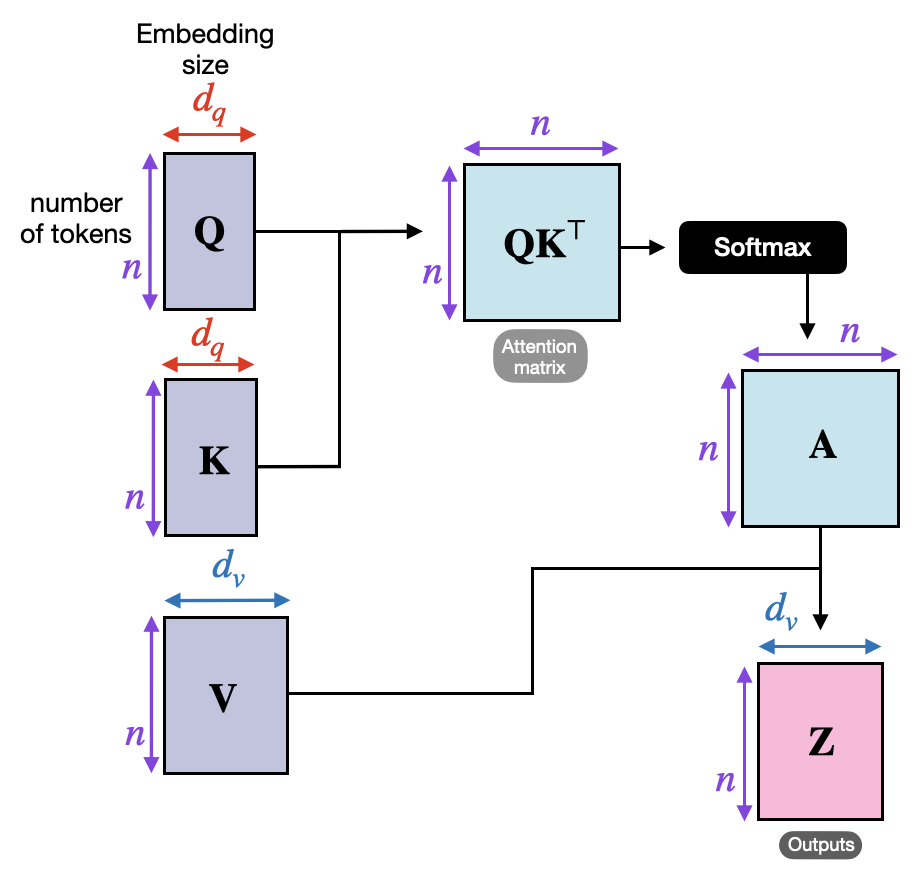

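The attention function is formulated as

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^\top}{\sqrt{d_k}}\right)V$$

where $Q$ (query) represents the current context, $K$ (key) and $V$ (value) represent the references, and $d_k$ is the dimension of the keys.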
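A minimal sketch of the formula above in plain Python, assuming row-major lists of lists where each row of `Q` and `K` has length $d_k$ and each row of `V` has length $d_v$. The names `softmax` and `scaled_dot_product_attention` are hypothetical helpers chosen for illustration, not a library API; real code would normally use a tensor library.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(row: List[float]) -> List[float]:
    # Subtracting the max keeps exp() from overflowing; the result is unchanged.
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(Q: Matrix, K: Matrix, V: Matrix) -> Matrix:
    """Compute softmax(Q K^T / sqrt(d_k)) V.

    Q: n_q x d_k, K: n_k x d_k, V: n_k x d_v. Returns an n_q x d_v matrix.
    """
    d_k = len(K[0])
    d_v = len(V[0])
    out = []
    for q in Q:
        # One score per key: the dot product q . k scaled by 1/sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        # Row-wise softmax turns the scores into weights.
        weights = softmax(scores)
        # The output row is the weighted sum of the value rows.
        out.append([sum(w * v[j] for w, v in zip(weights, V)) for j in range(d_v)])
    return out


# Usage: one query compared against two keys.
Q = [[1.0, 0.0]]
K = [[1.0, 0.0], [0.0, 1.0]]
V = [[1.0, 0.0], [0.0, 1.0]]
print(scaled_dot_product_attention(Q, K, V))
```

Because `V` is the identity here, the printed row is exactly the attention weights; the first key matches the query better, so it receives the larger weight.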

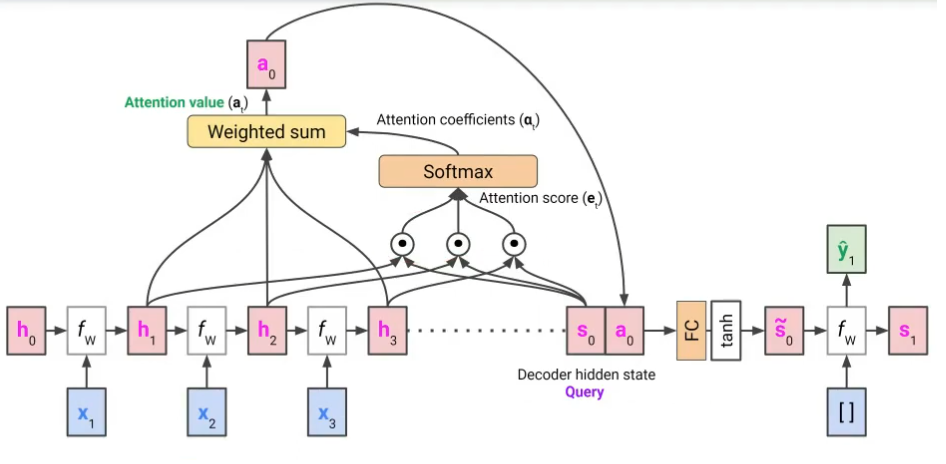

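The attention output is a convex combination of the values: the weights are non-negative and sum to 1, and for a query row $q$ the weight on value $v_i$ is proportional to $\exp(q \cdot k_i / \sqrt{d_k})$. Keys that are more relevant to the query therefore contribute more of their corresponding values.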
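The convex-combination property can be checked numerically for a single query. This is an illustrative sketch under the same scaled dot-product assumption; the helper names `attention_weights` and `attend` and the toy numbers are hypothetical.

```python
import math
from typing import List, Tuple


def attention_weights(query: List[float], keys: List[List[float]]) -> List[float]:
    # Scaled dot-product scores, then a numerically stable softmax.
    d_k = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d_k) for key in keys]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query: List[float], keys: List[List[float]],
           values: List[List[float]]) -> Tuple[List[float], List[float]]:
    weights = attention_weights(query, keys)
    # Output = sum_i w_i * v_i, computed coordinate by coordinate.
    out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
    return weights, out


query = [1.0, 2.0]
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0], [20.0], [30.0]]
weights, out = attend(query, keys, values)

# Convexity: every weight is non-negative and the weights sum to 1 ...
assert all(w >= 0 for w in weights)
assert math.isclose(sum(weights), 1.0)
# ... so the output lies between the smallest and largest value.
assert 10.0 <= out[0] <= 30.0
print(weights, out)
```

The third key has the largest dot product with the query, so it gets the largest weight and pulls the output toward its value of 30.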

Examples

Seq-to-Seq with RNN