Word2Vec

Definition

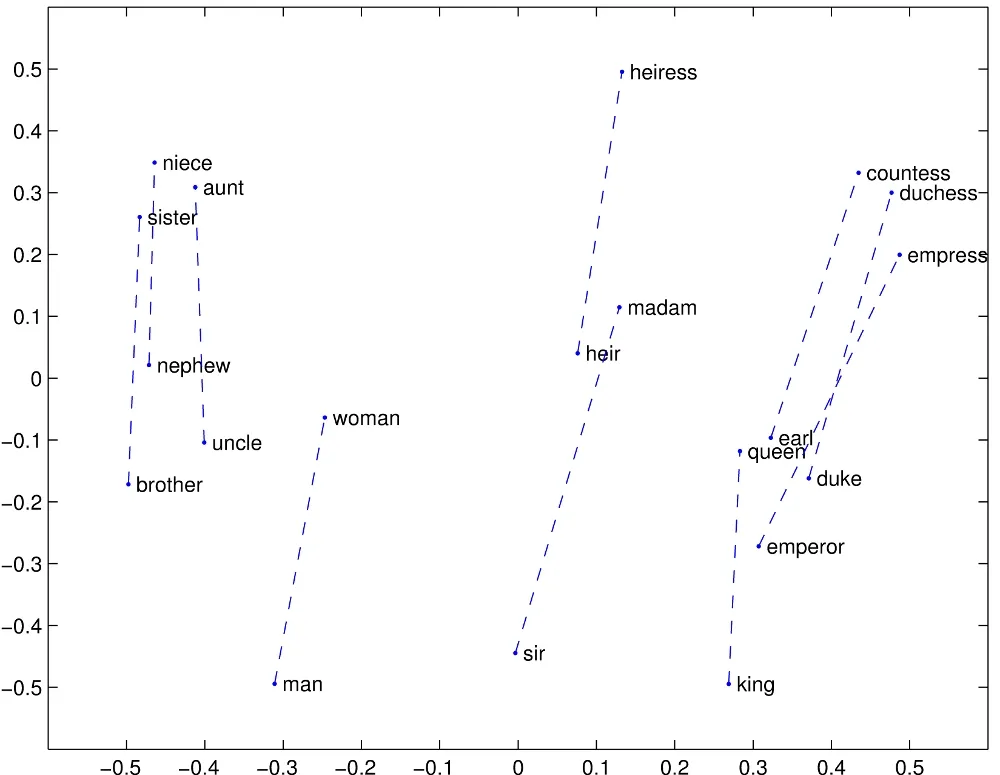

The Word2Vec model learns vector representations of words that capture the semantic relationships between them, using a large corpus of text.

Continuous Bag-Of-Words

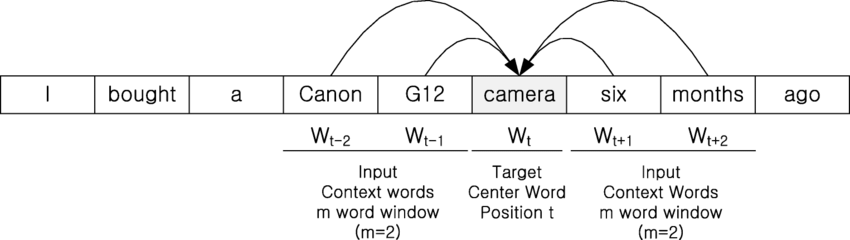

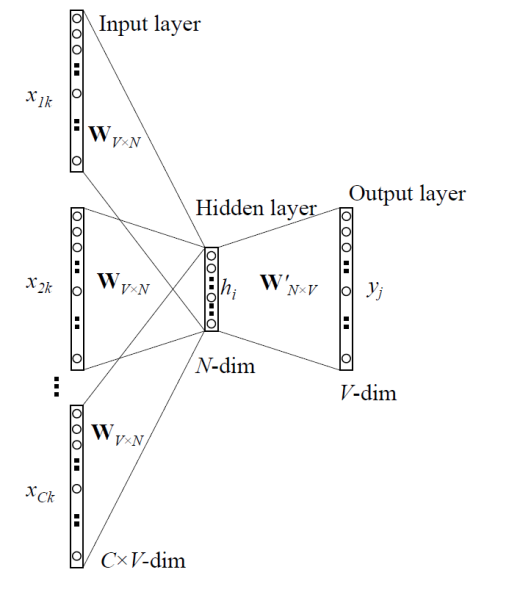

Continuous bag-of-words (CBOW) predicts a target word given its context words. The model takes a window of context words around a target word. The context words are fed into the network, and it tries to predict the target word. The model is trained to maximize the probability of the target word given the context words.

Algorithm

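- Generate one-hot encodings $x^{(c-m)}, \dots, x^{(c-1)}, x^{(c+1)}, \dots, x^{(c+m)}$ of the context words for a window of size $m$.

- Get the embedded word vectors with a linear transformation, $v_{c+j} = W x^{(c+j)}$, and take their average $\hat{v} = \frac{1}{2m} \sum_{-m \le j \le m,\, j \neq 0} v_{c+j}$, where the weight $W$ is shared across all context positions.

- Generate a score vector $z = W' \hat{v}$ and turn the scores into probabilities with the Softmax Function, $\hat{y} = \operatorname{softmax}(z)$.

- Adjust the weights $W$ and $W'$ to match the result to the one-hot encoding $y$ of the actual output word by minimizing the loss $L = -\sum_{j=1}^{|V|} y_j \log \hat{y}_j$, where $|V|$ is the dimension of the vectors (the vocabulary size), and $y_j$ is the $j$-th element of the one-hot encoded output word vector.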
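The forward pass and loss of the steps above can be sketched in NumPy as follows. This is a minimal illustration, not a reference implementation: the names `W_in`, `W_out`, and `cbow_loss`, and the toy sizes, are assumptions made for the example, and the gradient update is left out.

```python
import numpy as np

def softmax(z):
    """Numerically stable softmax over a 1-D score vector."""
    e = np.exp(z - z.max())
    return e / e.sum()

def cbow_loss(context_ids, target_id, W_in, W_out):
    """Cross-entropy loss of a single CBOW training example.

    W_in  : (vocab_size, dim) input embeddings, one row per word (hypothetical name)
    W_out : (dim, vocab_size) output weights (hypothetical name)
    """
    # Multiplying a one-hot vector by W_in selects one row,
    # so integer indexing replaces the explicit one-hot product.
    h = W_in[context_ids].mean(axis=0)   # average of the context embeddings
    y_hat = softmax(h @ W_out)           # predicted distribution over the vocabulary
    # With a one-hot target, the cross-entropy reduces to -log y_hat[target].
    return -np.log(y_hat[target_id]), y_hat

rng = np.random.default_rng(0)
vocab_size, dim = 10, 4                  # toy sizes chosen for illustration
W_in = rng.normal(scale=0.1, size=(vocab_size, dim))
W_out = rng.normal(scale=0.1, size=(dim, vocab_size))

loss, y_hat = cbow_loss([1, 2, 4, 5], 3, W_in, W_out)
```

Training would repeat this over every window in the corpus and move both weight matrices along the negative gradient of the loss.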

Skip-Gram

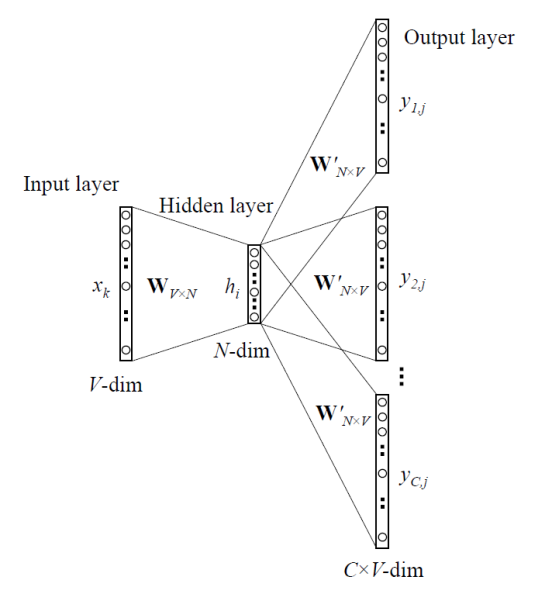

The Skip-gram model does the opposite of CBOW: it predicts the context words given a target word. The model takes a single target word as input. The target word is fed into the network, and it tries to predict the surrounding context words. The model is trained to maximize the probability of each context word given the target word.

Algorithm

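- Generate the one-hot encoding $x$ of the input word.

- Get an embedded word vector with a linear transformation, $v = W x$.

- Generate a score vector $z = W' v$ and turn the scores into probabilities with the Softmax Function, $\hat{y} = \operatorname{softmax}(z)$.

- Adjust the weights $W$ and $W'$ to match the result to the one-hot encodings of the actual output words by minimizing the loss $L = -\sum_{c=1}^{C} \sum_{j=1}^{|V|} y^{(c)}_j \log \hat{y}_j$, where $C$ is the number of context words, $|V|$ is the dimension of the vectors (the vocabulary size), and $y^{(c)}_j$ is the $j$-th element of the one-hot encoded vector of the $c$-th context word.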
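A minimal NumPy sketch of the Skip-gram loss for one input word, under the same kind of assumptions as any toy example: `W_in`, `W_out`, and `skipgram_loss` are hypothetical names, and only the forward pass is shown.

```python
import numpy as np

def log_softmax(z):
    """Numerically stable log-softmax over a 1-D score vector."""
    z = z - z.max()
    return z - np.log(np.exp(z).sum())

def skipgram_loss(center_id, context_ids, W_in, W_out):
    """Sum of cross-entropies between one prediction and each context word."""
    h = W_in[center_id]                   # embedding of the input word
    log_probs = log_softmax(h @ W_out)    # one distribution shared by all context positions
    return -log_probs[context_ids].sum()  # one -log p term per context word

vocab_size, dim = 10, 4                   # toy sizes chosen for illustration
# With all-zero weights every word gets probability 1 / vocab_size.
uniform_loss = skipgram_loss(3, [1, 2, 4, 5],
                             np.zeros((vocab_size, dim)),
                             np.zeros((dim, vocab_size)))
```

Note that the same predicted distribution is compared against every context word, which is why the loss is a plain sum over the context positions.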

Noise Contrastive Estimation

Definition

Noise contrastive estimation (NCE) transforms the problem of density estimation into binary classification between data samples and noise samples. Consider a sample of data points $X = (x_1, \dots, x_{T_d})$ that follow an unknown probability distribution $p_d(x)$, modeled by a family $p_m(x; \theta)$ parametrized by $\theta$. NCE is used to find an estimator $\hat{\theta}$ that best approximates the true parameter $\theta^*$. Although MLE has good properties, it requires the parametric family to be normalized whenever the likelihood is evaluated. NCE finds the estimator by maximizing an objective function (as in MLE) but without normalizing the model: it treats the normalization coefficient as another parameter to estimate. This is a desirable property, since the normalization constant may be difficult or expensive to calculate.

The idea is to pollute the sample with noise, that is, data points drawn from a known distribution $p_n$, and to perform nonlinear logistic regression to discriminate between data and noise.

Consider a data sample $X = (x_1, \dots, x_{T_d})$, a noise sample $Y = (y_1, \dots, y_{T_n})$, and the union of the two samples $U = (u_1, \dots, u_{T_d + T_n})$. A binary class label $C_t$ is assigned to each $u_t$, where $C_t = 1$ if $u_t \in X$ and $C_t = 0$ if $u_t \in Y$.

By the definition of the labels, $p(u \mid C = 1; \theta) = p_m(u; \theta)$ and $p(u \mid C = 0) = p_n(u)$. By the Bayes Theorem,

$$P(C = 1 \mid u; \theta) = \frac{p_m(u; \theta)}{p_m(u; \theta) + \nu\, p_n(u)} \quad \text{and} \quad P(C = 0 \mid u; \theta) = \frac{\nu\, p_n(u)}{p_m(u; \theta) + \nu\, p_n(u)},$$

where $\nu = T_n / T_d$ is the noise-to-data ratio. The log-likelihood function of the binary classification problem is derived as

$$\ell(\theta) = \sum_{t=1}^{T_d} \ln P(C = 1 \mid x_t; \theta) + \sum_{t=1}^{T_n} \ln P(C = 0 \mid y_t; \theta).$$

The NCE estimator is obtained by maximizing the objective function (log-likelihood) with respect to $\theta$.
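As a hedged illustration, the sketch below evaluates the NCE objective for a one-dimensional toy problem. Everything specific here is an assumption made for the example: the data are standard normal, the noise is normal with standard deviation 2, and the unnormalized model is $\ln p_m(u) = -\tfrac{1}{2}(u - \mu)^2 + c$, where `c` plays the role of the learned log-normalizer.

```python
import numpy as np

def log_model(u, mu, c):
    """Unnormalized Gaussian log-density; c stands in for the log-normalizer."""
    return -0.5 * (u - mu) ** 2 + c

def log_noise(u, sigma=2.0):
    """Log-density of the known noise distribution N(0, sigma^2)."""
    return -0.5 * (u / sigma) ** 2 - np.log(sigma) - 0.5 * np.log(2 * np.pi)

def nce_objective(x, y, mu, c):
    """Log-likelihood of the data-vs-noise classifier."""
    nu = len(y) / len(x)                       # noise-to-data ratio
    def G(u):                                  # log-odds of "data" against "noise"
        return log_model(u, mu, c) - (np.log(nu) + log_noise(u))
    log_p_data = -np.logaddexp(0.0, -G(x))     # ln sigmoid(G) on data points
    log_p_noise = -np.logaddexp(0.0, G(y))     # ln (1 - sigmoid(G)) on noise points
    return log_p_data.sum() + log_p_noise.sum()

rng = np.random.default_rng(0)
x = rng.normal(0.0, 1.0, size=5000)            # data sample
y = rng.normal(0.0, 2.0, size=5000)            # noise sample
c_true = -0.5 * np.log(2 * np.pi)              # true log-normalizer of N(0, 1)

j_true = nce_objective(x, y, mu=0.0, c=c_true)
j_wrong = nce_objective(x, y, mu=2.0, c=c_true)
```

In this setup the objective is higher at the true parameters than at the shifted mean, which is the behavior a maximizer over `mu` and `c` would exploit.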

Examples

Word Embedding (Skip-Gram Model)

In the context of word embeddings, particularly the Skip-gram model, the unknown distribution becomes $p(c \mid w)$, the probability of a context word $c$ given an input word $w$. The parameter $\theta$ represents the parameters of the word embedding model.

The noise distribution $p_n(c) \propto \operatorname{count}(c)^{3/4}$, where $\operatorname{count}(c)$ is the count of word $c$ in the corpus, is the unigram distribution of contexts raised to the power of $3/4$ to balance frequent and rare words.

For each word-context pair $(w, c)$ from the true data, we sample $k$ negative examples $c'_1, \dots, c'_k$ from $p_n$. The NCE objective function is derived as:

$$J(\theta) = \sum_{(w, c) \in D} \left[ \ln \frac{p_\theta(c \mid w)}{p_\theta(c \mid w) + k\, p_n(c)} + \sum_{i=1}^{k} \ln \frac{k\, p_n(c'_i)}{p_\theta(c'_i \mid w) + k\, p_n(c'_i)} \right]$$

where $D$ is the set of observed word-context pairs, and $k$ is the number of noise samples per data sample.

In practice, $p_\theta(c \mid w)$ is often modeled as $\exp(v_w^\top u_c)$, without explicit normalization. By maximizing this objective, we obtain word embeddings (vectors $v_w$ and $u_c$) that can discriminate between true context words and random noise words, effectively capturing semantic relationships in the vector space.
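The effect of the smoothed unigram noise distribution can be seen in a short sketch. The word counts are made up for illustration, and the exponent 0.75 is the value commonly used with word2vec, taken here as an assumption.

```python
import numpy as np

counts = np.array([1000.0, 100.0, 10.0, 1.0])  # hypothetical corpus counts, most frequent first
unigram = counts / counts.sum()                 # plain unigram distribution

smoothed = counts ** 0.75
noise = smoothed / smoothed.sum()               # noise distribution used for sampling

# Draw k noise words (as vocabulary indices) for one observed pair.
rng = np.random.default_rng(0)
k = 5
negatives = rng.choice(len(counts), size=k, p=noise)
```

Compared with the plain unigram distribution, the smoothed one gives the most frequent word less mass and the rarest word more, so rare words appear as negatives more often than their raw frequency would suggest.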

Negative Sampling

Definition

Negative sampling (NS) is a simplified version of NCE. It shares the core idea of transforming the problem of density estimation into binary classification between true data samples and noise samples.

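In the context of word embeddings, particularly the Skip-gram model, NS aims to learn good word representations without the need to estimate a full probability distribution. The goal is to find parameters $\theta$ that best represent the relationship between words and their contexts. Given a word-context pair $(w, c)$ from the true data distribution, NS samples $k$ negative examples (noise) from a noise distribution $p_n$. The objective is to maximize the probability of the true pair while minimizing the probability of the noise pairs.

NS models the probability that a pair $(w, c)$ comes from the true data with the Sigmoid Function:

$$P(\text{true} \mid w, c) = \sigma(v_w^\top u_c) = \frac{1}{1 + \exp(-v_w^\top u_c)}$$

The NS objective function is derived as:

$$J(\theta) = \sum_{(w, c) \in D} \left[ \ln \sigma(v_w^\top u_c) + \sum_{c' \in N} \ln \sigma(-v_w^\top u_{c'}) \right]$$

where $D$ is the set of observed word-context pairs and $N$ is the set of negative samples drawn from the noise distribution for the pair $(w, c)$.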

The NS objective function is similar to the Skip-gram objective but replaces the expensive Softmax Function with a simpler binary classification task between true and noise samples.
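A minimal sketch of the negative-sampling loss for a single word-context pair. The function and argument names are hypothetical, and the loss is written as the negative of the objective so that it can be minimized.

```python
import numpy as np

def log_sigmoid(x):
    """Numerically stable ln sigmoid(x)."""
    return -np.logaddexp(0.0, -x)

def ns_loss(v_w, u_c, U_neg):
    """Negative-sampling loss for one (word, context) pair.

    v_w   : (dim,)   embedding of the input word
    u_c   : (dim,)   output vector of the true context word
    U_neg : (k, dim) output vectors of the k sampled noise words
    """
    pos = log_sigmoid(u_c @ v_w)             # pull the true pair together
    neg = log_sigmoid(-(U_neg @ v_w)).sum()  # push the noise pairs apart
    return -(pos + neg)

dim, k = 4, 5
# With all-zero vectors every sigmoid equals 1/2.
zero_loss = ns_loss(np.zeros(dim), np.zeros(dim), np.zeros((k, dim)))
```

Each example touches only `k + 1` output vectors, whereas a full softmax would touch one per vocabulary word.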

Global Vectors for Word Representation

Definition

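The Global Vectors for Word Representation (GloVe) model learns word embedding vectors such that their Dot Product equals, up to bias terms, the logarithm of the words' probability of co-occurrence.

$$w_i^\top \tilde{w}_j + b_i + \tilde{b}_j \approx \log X_{ij}$$

where $w_i$ and $\tilde{w}_j$ are word vectors, $b_i$ and $\tilde{b}_j$ are bias terms that absorb the normalization of the counts into probabilities, and $X_{ij}$ is the co-occurrence count between words $i$ and $j$.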
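One way to fit such vectors is a weighted least-squares loss over the observed co-occurrence counts, as in the GloVe paper. The sketch below is an illustration under assumptions: the bias terms, the weighting function, and its defaults `x_max=100` and `alpha=0.75` come from that paper rather than from the text above, and all names are hypothetical.

```python
import numpy as np

def glove_loss(W, W_tilde, b, b_tilde, X, x_max=100.0, alpha=0.75):
    """Weighted squared error between dot products (plus biases) and log counts."""
    i, j = np.nonzero(X)                          # only pairs that actually co-occur
    x = X[i, j]
    weight = np.minimum((x / x_max) ** alpha, 1.0)  # down-weights rare pairs, caps frequent ones
    pred = (W[i] * W_tilde[j]).sum(axis=1) + b[i] + b_tilde[j]
    return (weight * (pred - np.log(x)) ** 2).sum()

# Toy check: constant counts are fit exactly by the bias terms alone.
X = np.full((3, 3), 5.0)
W = np.zeros((3, 2))
W_tilde = np.zeros((3, 2))
perfect = glove_loss(W, W_tilde, np.full(3, np.log(5.0)), np.zeros(3), X)
imperfect = glove_loss(W, W_tilde, np.zeros(3), np.zeros(3), X)
```

Restricting the sum to nonzero counts avoids taking the logarithm of zero.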

Attention

Definition

Attention is a method that determines the importance of each component in a sequence relative to the other components in that sequence.

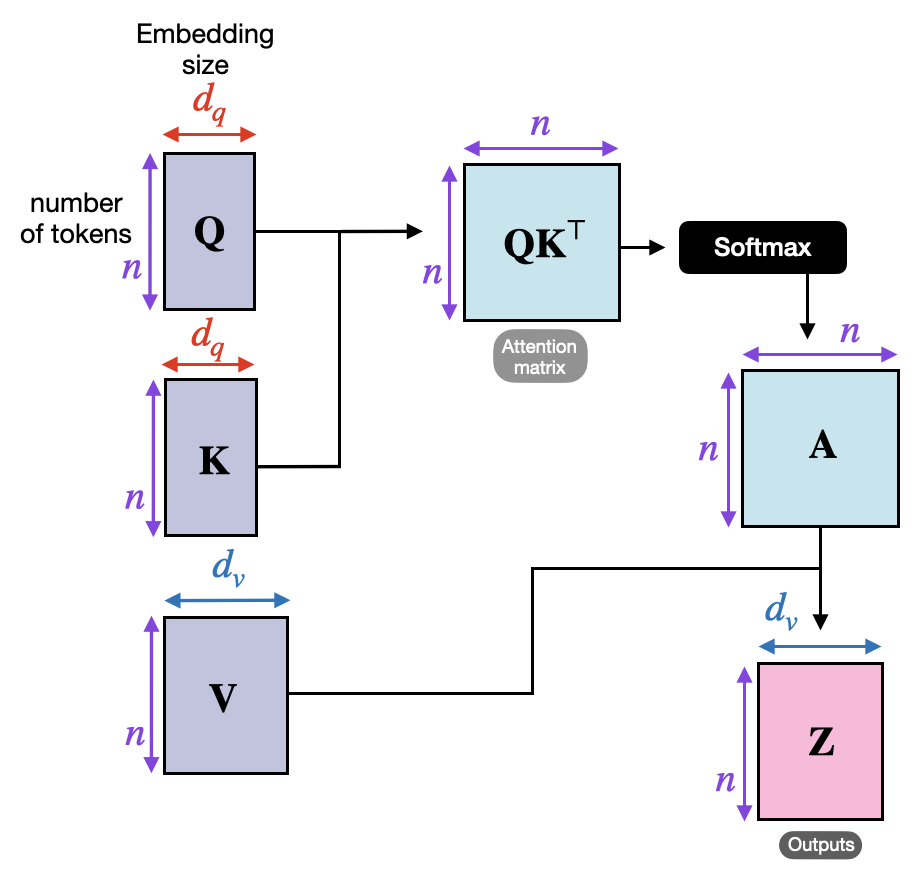

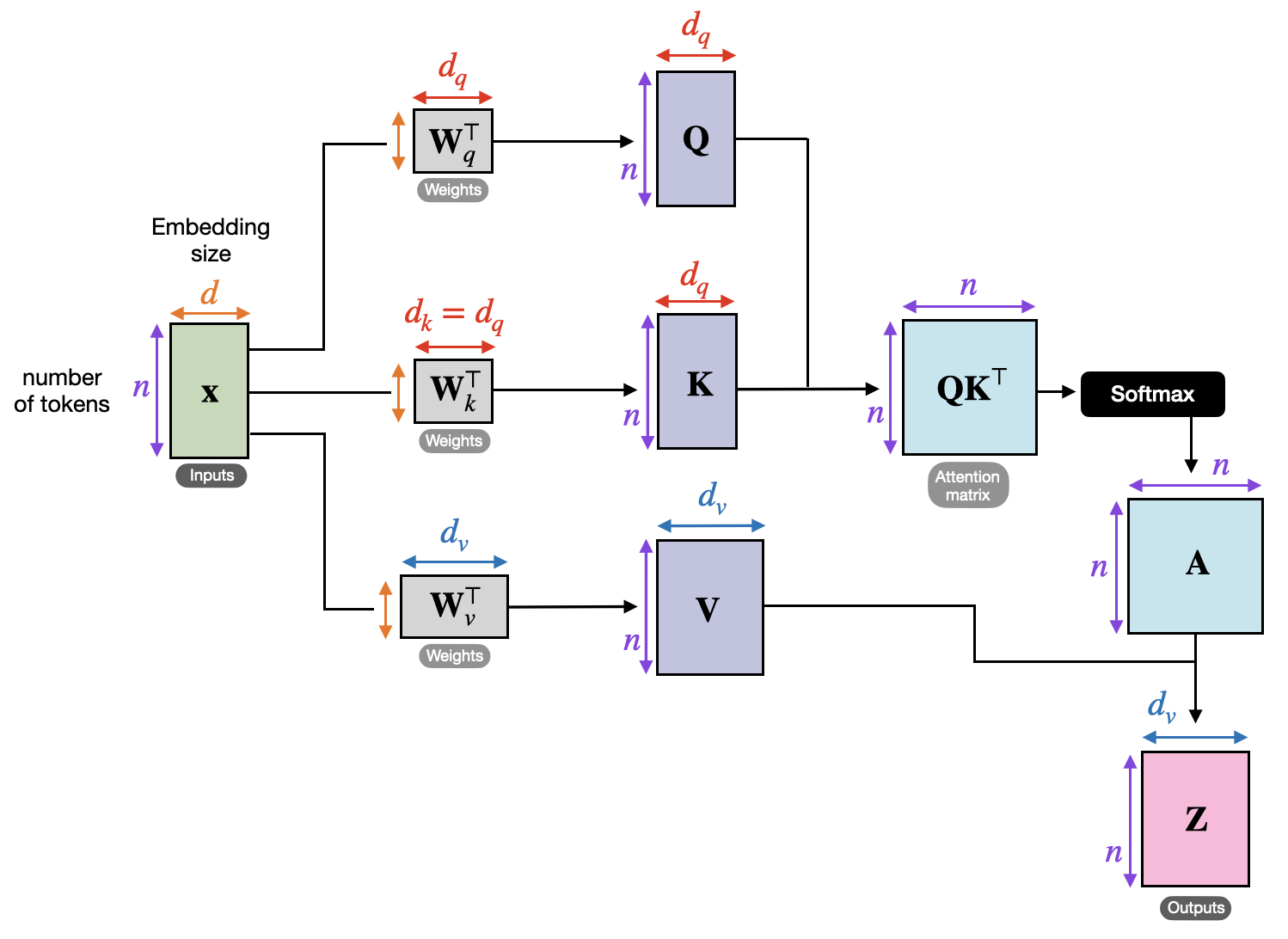

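The attention function is formulated as

$$\operatorname{Attention}(Q, K, V) = \operatorname{softmax}\left(\frac{Q K^\top}{\sqrt{d_k}}\right) V$$

where Q (query) represents the current context, K (key) and V (value) represent the references, and $d_k$ is the dimension of the keys.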

The attention value is the convex combination of values, where each weight is proportional to the relevance between the query and the corresponding key.
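A minimal NumPy sketch of scaled dot-product attention for a single (unbatched) set of queries. The function names and toy shapes are assumptions made for the example.

```python
import numpy as np

def softmax(z, axis=-1):
    """Numerically stable softmax along one axis."""
    e = np.exp(z - z.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)

def attention(Q, K, V):
    """Q: (n_q, d_k), K: (n_k, d_k), V: (n_k, d_v) -> output (n_q, d_v)."""
    d_k = K.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)      # relevance of every key to every query
    weights = softmax(scores, axis=-1)   # each row is non-negative and sums to 1
    return weights @ V, weights          # convex combination of the value rows

rng = np.random.default_rng(0)
Q = rng.normal(size=(2, 4))
K = rng.normal(size=(3, 4))
V = rng.normal(size=(3, 6))
out, weights = attention(Q, K, V)
```

Because each row of `weights` is a probability vector, every output row lies inside the convex hull of the value vectors.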

Examples

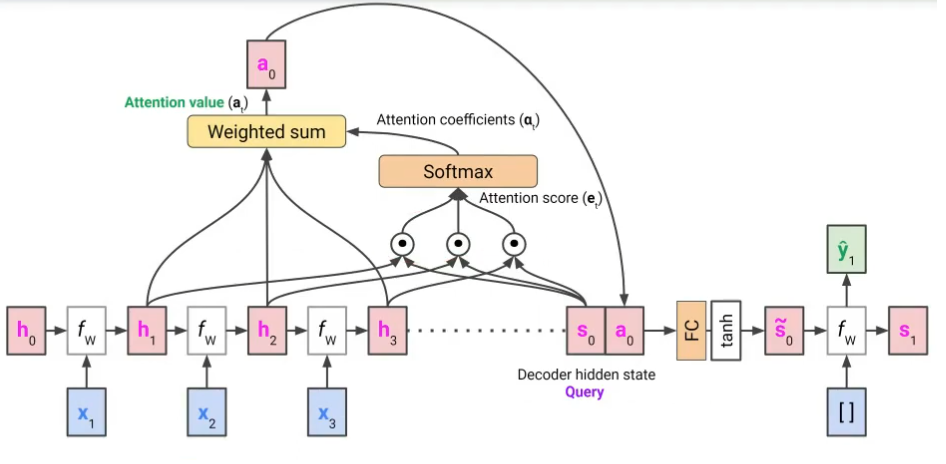

Seq-to-Seq with RNN

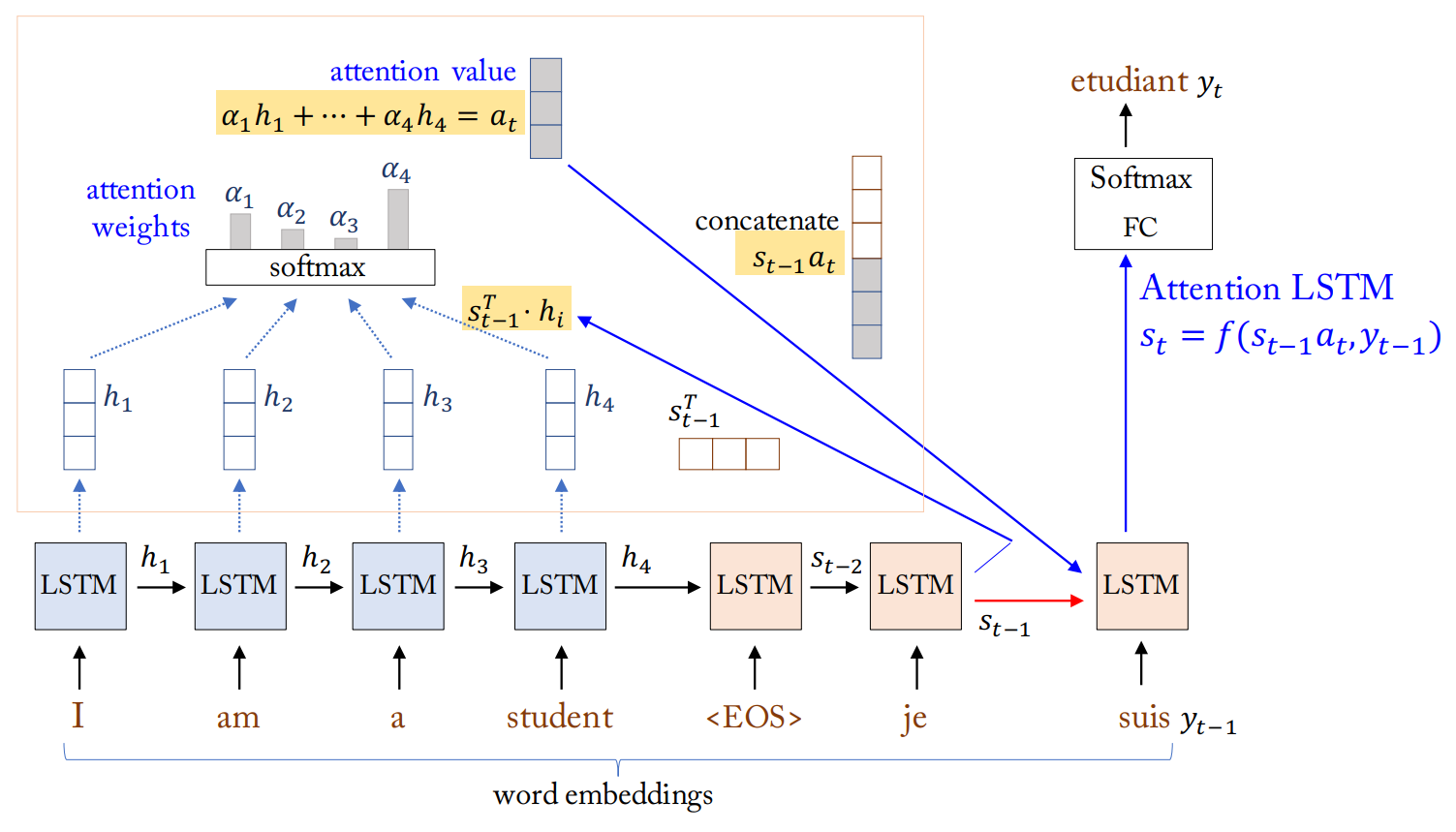

Attention LSTM

Definition

Attention LSTM is a variant of the LSTM architecture that incorporates the Attention mechanism. In a sequence-to-sequence setting, the model uses Attention in the decoding stage. The previous hidden state of the decoder's LSTM cell is used as the query, and the hidden states of the encoder's LSTM cells are used as the keys and values.

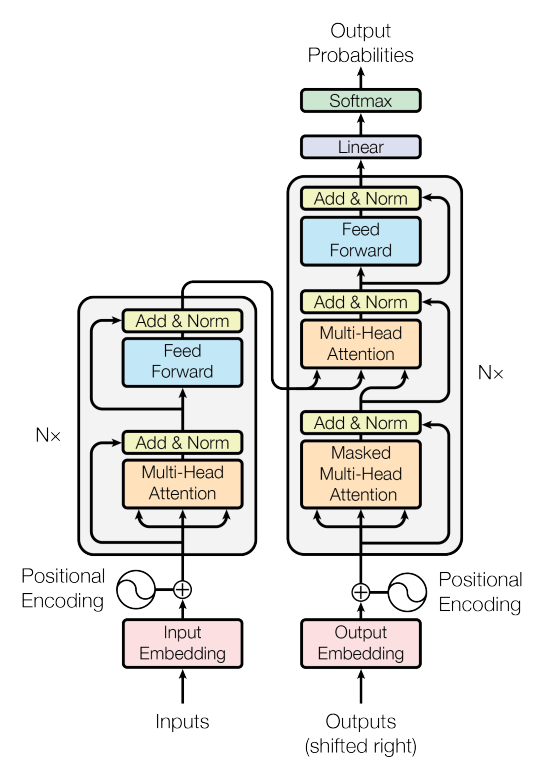

Transformer

Definition

The Transformer model applies self-attention, the form of Attention in which Q, K, and V are all derived from the same source, to sentences. The resulting vector for each token reflects its context. Usually, self-attention is repeated multiple times to contextualize the representations further.

Architecture

Self-Attention

The initial query (Q), key (K), and value (V) matrices are the results of linear transformations of the input sequence.

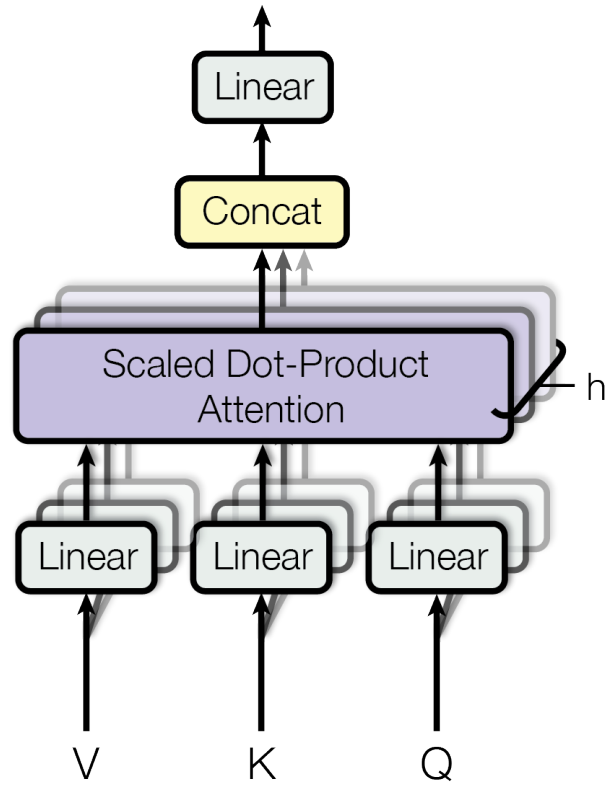

Multi-head Self-Attention

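The Transformer uses multiple attention heads in parallel, like the channels of a CNN, allowing it to focus on different aspects of the input simultaneously. The output of multi-head attention is a concatenation of the outputs from the individual attention heads, followed by a linear transformation.

$$\operatorname{MultiHead}(Q, K, V) = \operatorname{Concat}(\text{head}_1, \dots, \text{head}_h)\, W^O$$

where $\text{head}_i = \operatorname{Attention}(Q W_i^Q, K W_i^K, V W_i^V)$.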
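A hedged NumPy sketch of multi-head self-attention on one sequence. As a common simplification, the per-head projection matrices are packed side by side into single `d_model x d_model` matrices; the names `Wq`, `Wk`, `Wv`, `Wo` and the toy sizes are assumptions.

```python
import numpy as np

def softmax(z, axis=-1):
    e = np.exp(z - z.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_self_attention(X, Wq, Wk, Wv, Wo, n_heads):
    """X: (n, d_model); every weight matrix: (d_model, d_model)."""
    n, d_model = X.shape
    d_head = d_model // n_heads

    def split(M):
        # (n, d_model) -> (n_heads, n, d_head): one slice of columns per head
        return M.reshape(n, n_heads, d_head).transpose(1, 0, 2)

    Q, K, V = split(X @ Wq), split(X @ Wk), split(X @ Wv)
    scores = Q @ K.transpose(0, 2, 1) / np.sqrt(d_head)  # (n_heads, n, n)
    heads = softmax(scores, axis=-1) @ V                  # (n_heads, n, d_head)
    concat = heads.transpose(1, 0, 2).reshape(n, d_model) # concatenate the heads
    return concat @ Wo                                    # final linear transformation

rng = np.random.default_rng(0)
n, d_model, n_heads = 5, 8, 2
X = rng.normal(size=(n, d_model))
Wq, Wk, Wv, Wo = (rng.normal(scale=0.1, size=(d_model, d_model)) for _ in range(4))
out = multi_head_self_attention(X, Wq, Wk, Wv, Wo, n_heads)
```

Each head attends within its own `d_head`-dimensional subspace, so the total cost stays close to that of one full-width head.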

Feed-Forward Layer

Each layer in the Transformer also contains a feed-forward layer applied to each position separately, i.e. there is no cross-token dependency. The linear transformations are the same across different positions in the same layer, but differ from layer to layer.
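The position-wise behavior can be checked with a small sketch. The two-layer form with a ReLU in between follows the original Transformer paper and is an assumption here, as are the names and toy sizes.

```python
import numpy as np

def feed_forward(X, W1, b1, W2, b2):
    """Apply the same two-layer network to every row (position) of X."""
    return np.maximum(0.0, X @ W1 + b1) @ W2 + b2

rng = np.random.default_rng(0)
n, d_model, d_ff = 5, 4, 8
X = rng.normal(size=(n, d_model))
W1, b1 = rng.normal(size=(d_model, d_ff)), np.zeros(d_ff)
W2, b2 = rng.normal(size=(d_ff, d_model)), np.zeros(d_model)

full = feed_forward(X, W1, b1, W2, b2)
# Feeding one position on its own gives the same result as its row in the full
# output, which shows that no information crosses between tokens.
single = feed_forward(X[2:3], W1, b1, W2, b2)
```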

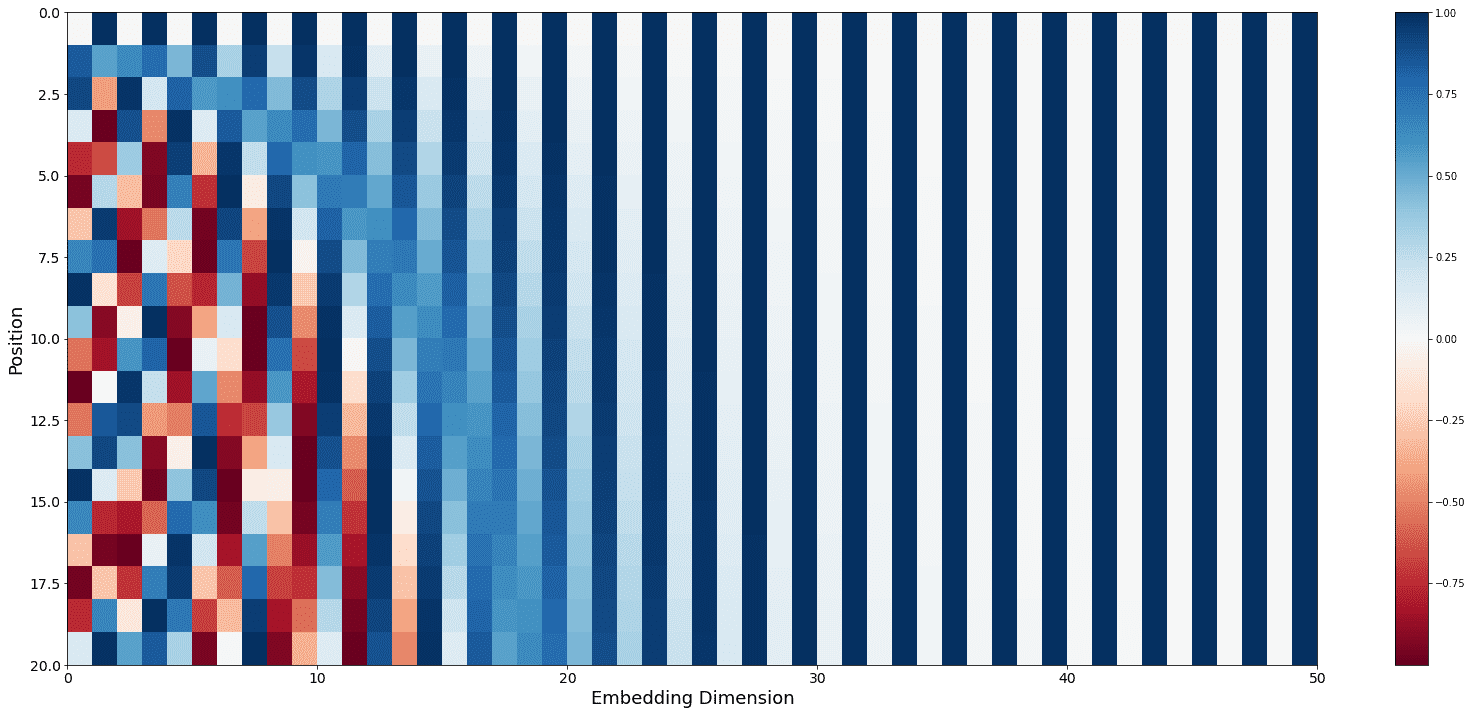

Positional Encoding

Since the Transformer doesn’t inherently capture sequence order, positional encodings are added to the input embeddings. These are typically sine and cosine functions of different frequencies:

$$\begin{aligned}
PE_{(\text{pos},2i)} &= \sin(\text{pos} / 10000^{2i/d_{\text{model}}})\\
PE_{(\text{pos},2i+1)} &= \cos(\text{pos} / 10000^{2i/d_{\text{model}}})
\end{aligned}$$

Masked Multi-Head Self-Attention

In the decoder, the self-attention layer is modified to prevent attending to later positions. This is achieved by masking future positions with negative infinity before the softmax step.

Encoder-Decoder Attention

The decoder has an additional attention layer that performs multi-head attention over the output of the encoder. The query (Q) comes from the previous layer in the decoder, and the key (K) and value (V) come from the output of the encoder.
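The sinusoidal positional encoding and the decoder's causal mask described above can be sketched in NumPy as follows. The function names are hypothetical, and `positional_encoding` assumes an even `d_model`.

```python
import numpy as np

def positional_encoding(n_positions, d_model):
    """Sinusoidal encodings of shape (n_positions, d_model); d_model must be even."""
    pos = np.arange(n_positions)[:, None]          # (n_positions, 1)
    i = np.arange(d_model // 2)[None, :]           # (1, d_model / 2)
    angles = pos / 10000 ** (2 * i / d_model)
    pe = np.zeros((n_positions, d_model))
    pe[:, 0::2] = np.sin(angles)                   # even dimensions
    pe[:, 1::2] = np.cos(angles)                   # odd dimensions
    return pe

def causal_mask(n):
    """0 where attention is allowed, -inf strictly above the diagonal (the future)."""
    future = np.triu(np.ones((n, n), dtype=bool), k=1)
    return np.where(future, -np.inf, 0.0)

def softmax(z, axis=-1):
    e = np.exp(z - z.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)

pe = positional_encoding(6, 8)

rng = np.random.default_rng(0)
scores = rng.normal(size=(4, 4))                   # stand-in for Q K^T / sqrt(d_k)
weights = softmax(scores + causal_mask(4), axis=-1)
```

Adding negative infinity before the softmax turns the masked entries into exact zeros, so position `t` only mixes values from positions up to and including `t`.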

GPT

Definition

GPT is based on the Transformer architecture, specifically using only the decoder portion of the original Transformer model. It utilizes self-attention mechanisms to process input sequences.

GPT-1

Next Token Prediction

GPT-1 was pre-trained on BooksCorpus, a large corpus of unpublished books, to predict the next token in a sequence, and was then fine-tuned with supervision on each downstream task (a semi-supervised approach overall). This pre-training allowed the model to learn general language patterns and representations (vector representations of words).
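The next-token objective amounts to a cross-entropy between the model's prediction at each position and the token that follows it. A minimal sketch, assuming a hypothetical `logits` array in which row `t` scores the token that comes after position `t`:

```python
import numpy as np

def next_token_loss(logits, token_ids):
    """Mean cross-entropy of predicting token t+1 from positions up to t.

    logits    : (n, vocab_size) model outputs, one row per input position
    token_ids : (n,) the input sequence itself
    """
    token_ids = np.asarray(token_ids)
    pred, target = logits[:-1], token_ids[1:]   # shift by one: the last row has no target
    shifted = pred - pred.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return -log_probs[np.arange(len(target)), target].mean()

vocab_size = 6
tokens = [0, 3, 2, 5]
# All-zero logits correspond to a uniform guess over the vocabulary.
uniform_loss = next_token_loss(np.zeros((len(tokens), vocab_size)), tokens)
```

No labels beyond the text itself are needed, which is what makes pre-training on raw corpora possible.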

GPT-2

GPT-2 was trained on a much larger dataset (WebText, scraped from web pages). It performs tasks without task-specific fine-tuning, demonstrating strong zero-shot learning capabilities.

In the GPT-2 model, Layer Normalization is moved to the input of each sub-block, similar to a pre-activation ResNet.

GPT-3

GPT-3 scales the architecture of GPT-2 up dramatically and trains it on a larger dataset. The research shows the effectiveness of few-shot learning.

GPT-3 alternates dense and locally banded sparse attention patterns across the layers of the Transformer. (In the figure of these attention patterns, the gray region is masked.)

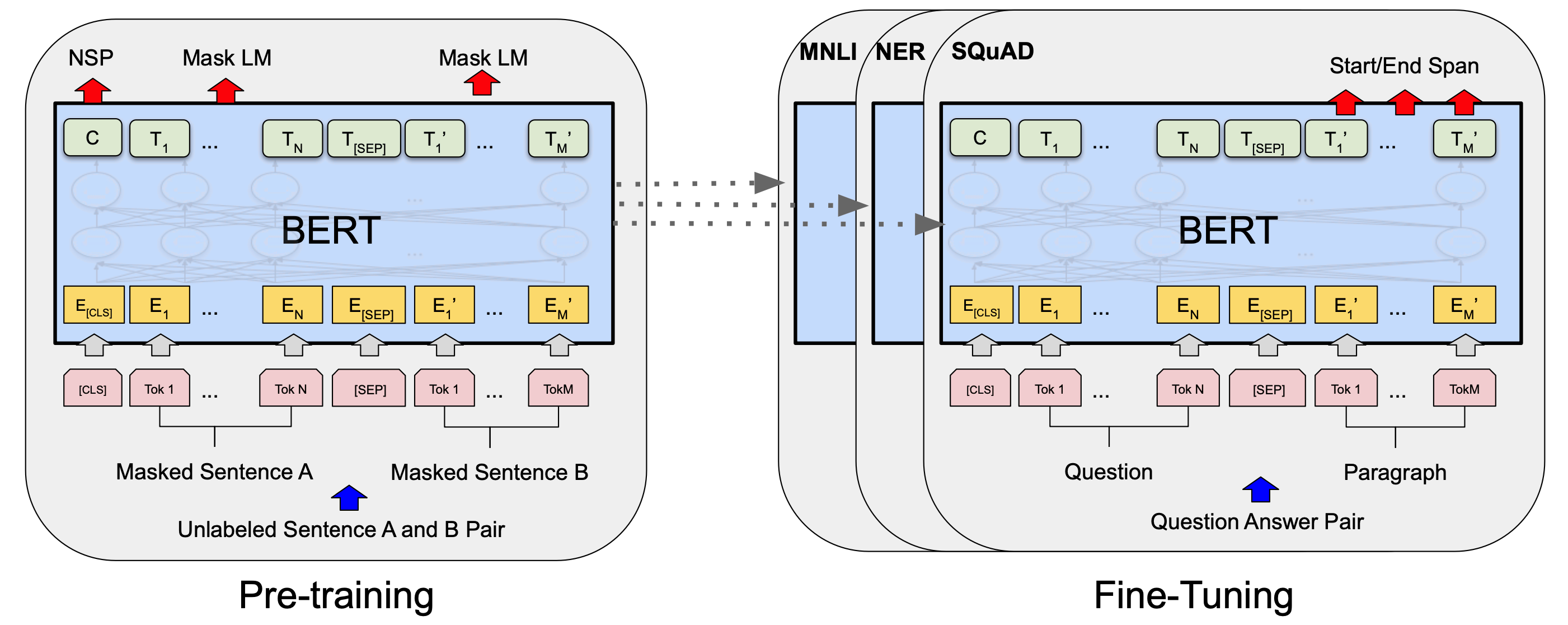

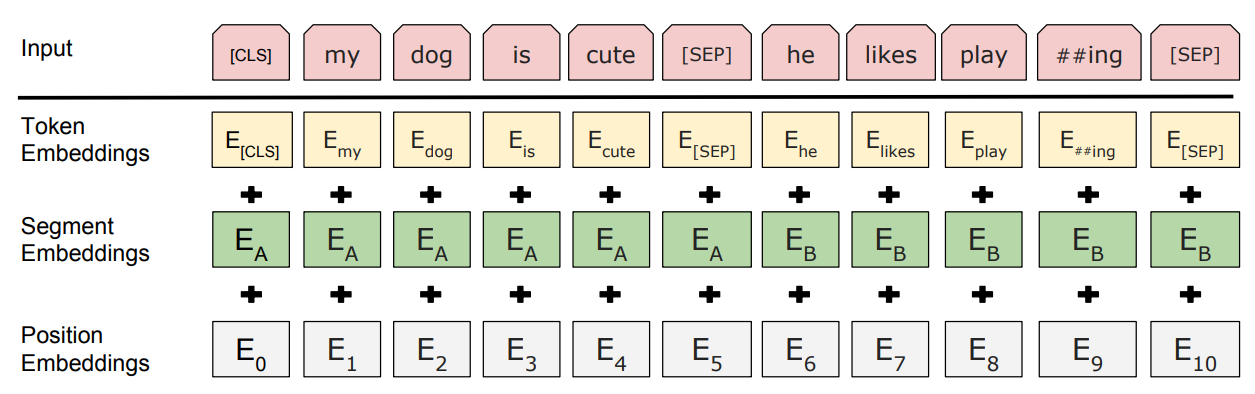

BERT

Definition

The BERT model prepends a [CLS] token to the input and uses its output as the aggregated embedding of the whole sequence. The model learns word embeddings by solving the masked token prediction and next sentence prediction problems.

Tasks

Masked Language Modeling

Predicting the hidden (masked) words from their surrounding context.
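Building the inputs and targets for this task can be sketched as below. This is a simplified illustration: every selected token is replaced by the mask id, whereas BERT itself also leaves some selected tokens unchanged or swaps in random ones. The names `mask_tokens`, `MASK_ID`, and `IGNORE`, and the token ids, are hypothetical.

```python
import numpy as np

MASK_ID = 0    # hypothetical id of the [MASK] token
IGNORE = -1    # hypothetical label for positions that are not scored

def mask_tokens(token_ids, mask_prob, rng):
    """Hide a random subset of tokens; the model must recover them from context."""
    token_ids = np.asarray(token_ids)
    is_masked = rng.random(token_ids.shape) < mask_prob
    inputs = np.where(is_masked, MASK_ID, token_ids)  # what the model sees
    labels = np.where(is_masked, token_ids, IGNORE)   # what it must predict
    return inputs, labels

rng = np.random.default_rng(0)
tokens = np.array([5, 8, 2, 9, 4, 7, 3, 6])
inputs, labels = mask_tokens(tokens, mask_prob=0.15, rng=rng)
```

The loss is then computed only at positions whose label is not `IGNORE`.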

Next Sentence Prediction

A binary classification problem, predicting if the two sentences in the input are consecutive or not.
