Definition

The Word2Vec model learns vector representations of words that capture the semantic relationships between them, using a large corpus of text.

Continuous Bag-Of-Words

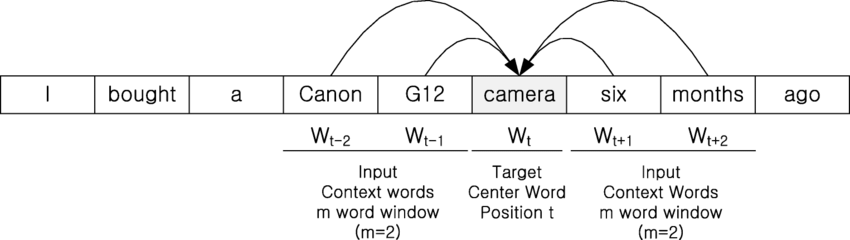

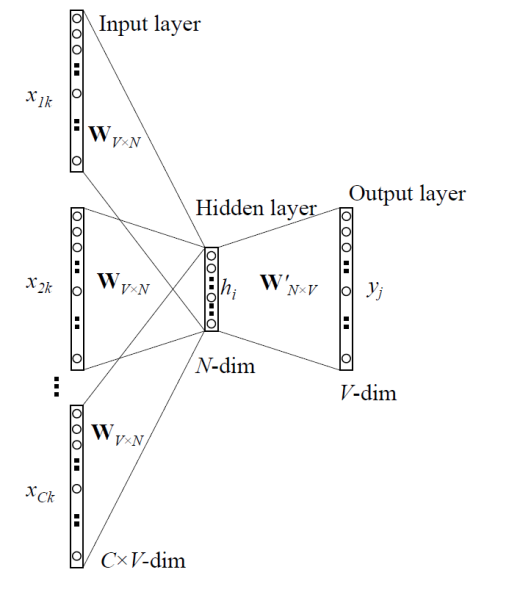

Continuous bag-of-words (CBOW) predicts a target word given its context words. The model takes the words inside a fixed-size window around the target word as input, feeds them into the network, and tries to predict the target word. It is trained to maximize the probability of the target word given the context words.
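
To make the windowing concrete, here is a minimal sketch of how (context, target) training pairs could be built from a tokenized sentence. The function name `cbow_pairs`, the `window` parameter (words taken on each side of the target), and the sample sentence are illustrative assumptions, not part of the original model description.

```python
def cbow_pairs(tokens, window):
    """Return (context_words, target_word) pairs.

    `window` is the number of words taken on each side of the target
    (an assumed convention for this sketch).
    """
    pairs = []
    for i, target in enumerate(tokens):
        left = tokens[max(0, i - window):i]
        right = tokens[i + 1:i + 1 + window]
        context = left + right
        if context:  # skip a token that has no neighbours at all
            pairs.append((context, target))
    return pairs


sentence = "the quick brown fox jumps".split()
pairs = cbow_pairs(sentence, window=1)
for context, target in pairs:
    print(context, "->", target)
```

Words at the edges of the sentence simply get a shorter context here; padding them instead would be an equally reasonable choice.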

Algorithm

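- Generate one-hot encodings $x_{c-m}, \dots, x_{c-1}, x_{c+1}, \dots, x_{c+m}$ of the context words for a window of size $m$.

- Get the embedded word vectors $v_i = W x_i$ using a linear transformation whose weight $W$ is shared across all context words, and take their average $\hat{v} = \frac{1}{2m} \sum_{i} v_i$.

- Generate a score vector $z = W' \hat{v}$ and turn the scores into probabilities with the softmax function, $\hat{y} = \mathrm{softmax}(z)$.

- Adjust the weights $W$ and $W'$ so that $\hat{y}$ matches the one-hot encoding $y$ of the actual output word, by minimizing the loss $H(\hat{y}, y) = -\sum_{j=1}^{|V|} y_j \log \hat{y}_j$, where $|V|$ is the dimension of the vectors and $y_j$ is the $j$-th element of the one-hot encoded output word vector.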
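
The forward pass and loss described in the steps above can be sketched as follows. This is a dependency-free illustration in plain Python lists, not a reference implementation: the names `W_in`, `W_out`, `cbow_forward`, the toy vocabulary size, and the random initial weights are all assumptions made for the example. The gradient update that actually minimizes the loss is omitted.

```python
import math
import random


def softmax(scores):
    """Turn a score vector into probabilities (shifted by the max for stability)."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cbow_forward(context_ids, target_id, W_in, W_out):
    """One CBOW forward pass; returns (probabilities, cross-entropy loss).

    W_in  : list of |V| rows; row i is the embedding of word i. Multiplying
            the embedding matrix by a one-hot vector just selects this row.
    W_out : list of |V| rows; row j produces the score of word j.
    """
    dim = len(W_in[0])
    # Average the context embeddings (the same W_in is shared by every context word).
    hidden = [sum(W_in[i][k] for i in context_ids) / len(context_ids)
              for k in range(dim)]
    # Score every vocabulary word, then normalise with softmax.
    scores = [sum(row[k] * hidden[k] for k in range(dim)) for row in W_out]
    probs = softmax(scores)
    # With a one-hot target, the cross-entropy reduces to -log of a single entry.
    loss = -math.log(probs[target_id])
    return probs, loss


random.seed(0)
vocab_size, dim = 5, 3  # toy sizes, assumed for illustration
W_in = [[random.uniform(-0.5, 0.5) for _ in range(dim)] for _ in range(vocab_size)]
W_out = [[random.uniform(-0.5, 0.5) for _ in range(dim)] for _ in range(vocab_size)]

probs, loss = cbow_forward(context_ids=[1, 3], target_id=2, W_in=W_in, W_out=W_out)
print("sum of probabilities:", sum(probs))
print("loss:", loss)
```

Because the target vector is one-hot, only the term for the true word survives in the cross-entropy sum, which is why the loss is computed from a single probability.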

Skip-Gram

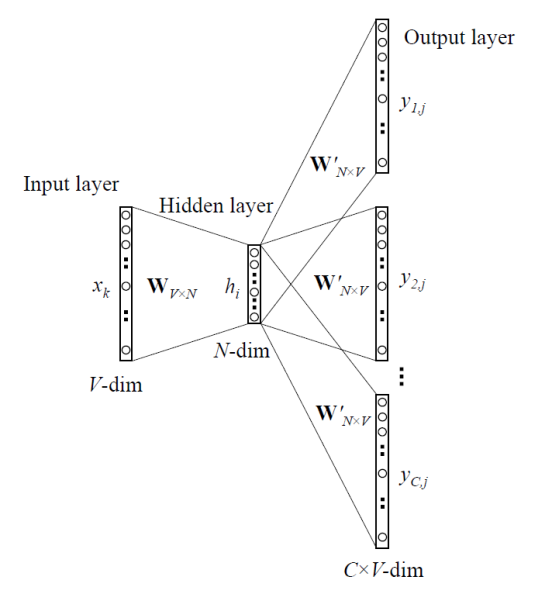

The Skip-gram model does the opposite of CBOW: it predicts the context words given a target word. The model takes a single target word as input, feeds it into the network, and tries to predict the surrounding context words. It is trained to maximize the probability of each context word given the target word.
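
As a rough illustration of the reversed direction, the sketch below emits one (target, context) pair for every neighbour inside the window. The function name `skipgram_pairs`, the `window` parameter (words on each side), and the sample sentence are hypothetical choices for this example.

```python
def skipgram_pairs(tokens, window):
    """Return (target_word, context_word) pairs, one per neighbour in the window."""
    pairs = []
    for i, target in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:  # the target is not its own context
                pairs.append((target, tokens[j]))
    return pairs


sentence = "the quick brown fox".split()
pairs = skipgram_pairs(sentence, window=1)
for target, context in pairs:
    print(target, "->", context)
```

Each target word therefore contributes several training examples, one for each context word it should predict.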

Algorithm

- Generate the one-hot encoding $x$ of the input word.

- Get an embedded word vector $v = W x$ using a linear transformation.

- Generate a score vector $z = W' v$ and turn the scores into probabilities with the softmax function, $\hat{y} = \mathrm{softmax}(z)$.

- Adjust the weights $W$ and $W'$ so that $\hat{y}$ matches the one-hot encodings $y^{(1)}, \dots, y^{(2m)}$ of the actual context words for a window of size $m$, by minimizing the loss $-\sum_{c=1}^{2m} \sum_{j=1}^{|V|} y^{(c)}_j \log \hat{y}_j$, where $|V|$ is the dimension of the vectors and $y^{(c)}_j$ is the $j$-th element of the one-hot encoded $c$-th context word vector.
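
The steps above can be sketched in plain Python as a single forward pass plus the summed loss. The names `W_in`, `W_out`, `skipgram_forward`, the toy sizes, and the random weights are assumptions for illustration only, and the weight update itself is left out.

```python
import math
import random


def softmax(scores):
    """Turn a score vector into probabilities (shifted by the max for stability)."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def skipgram_forward(target_id, context_ids, W_in, W_out):
    """One skip-gram forward pass; returns (probabilities, summed loss).

    W_in  : list of |V| rows; row i is the embedding of word i.
    W_out : list of |V| rows; row j produces the score of word j.
    """
    # The one-hot input simply selects the embedding row of the target word.
    hidden = W_in[target_id]
    scores = [sum(w * h for w, h in zip(row, hidden)) for row in W_out]
    probs = softmax(scores)
    # One cross-entropy term per context word, all sharing the same prediction.
    loss = -sum(math.log(probs[c]) for c in context_ids)
    return probs, loss


random.seed(0)
vocab_size, dim = 5, 3  # toy sizes, assumed for illustration
W_in = [[random.uniform(-0.5, 0.5) for _ in range(dim)] for _ in range(vocab_size)]
W_out = [[random.uniform(-0.5, 0.5) for _ in range(dim)] for _ in range(vocab_size)]

probs, loss = skipgram_forward(target_id=2, context_ids=[1, 3], W_in=W_in, W_out=W_out)
print("sum of probabilities:", sum(probs))
print("loss:", loss)
```

The model produces one probability vector per target word, so the loss adds up how well that single prediction covers every context word in the window.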