Definition

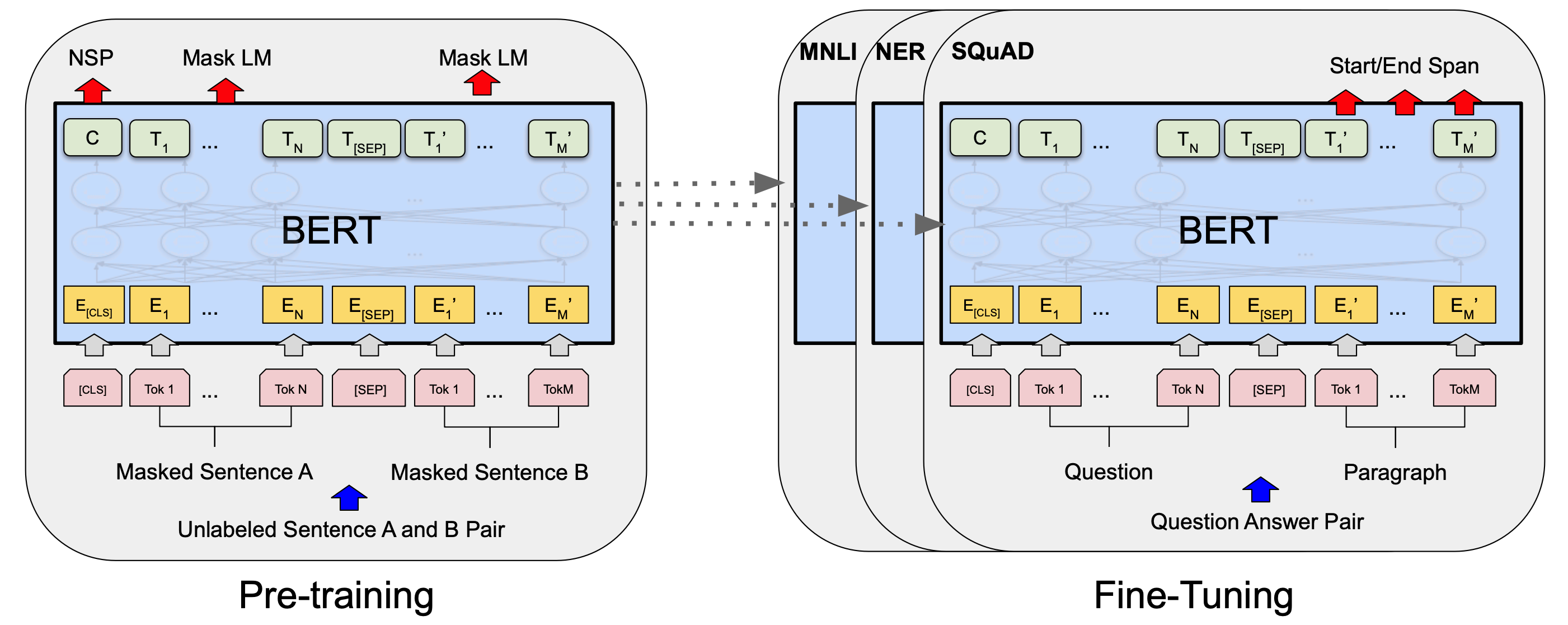

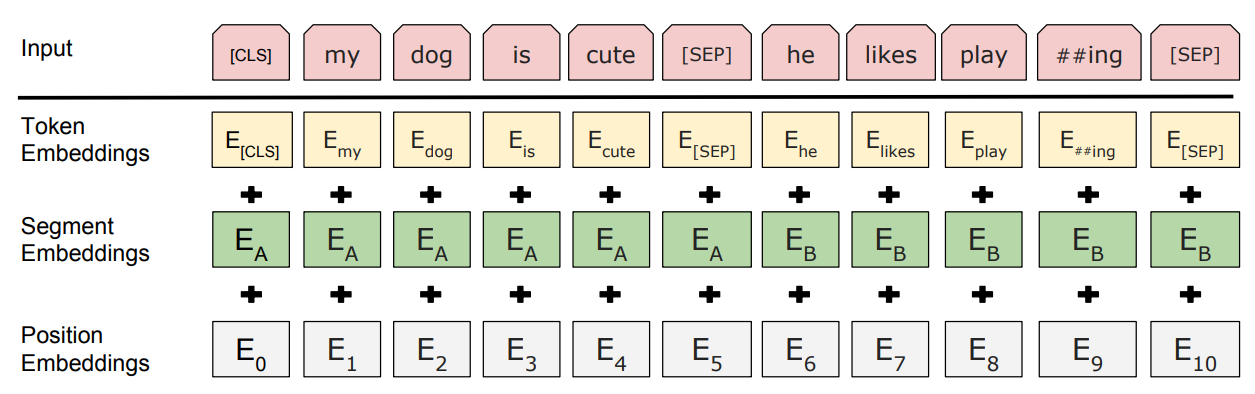

BERT prepends a special [CLS] token to the input and uses that token's final hidden state as the aggregate embedding of the whole sequence. The model learns contextual word embeddings by pre-training on two tasks: masked token prediction and next sentence prediction.
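As a rough illustration of the input layout, the sketch below builds the token sequence and segment ids for one or two sentences. The function name `build_bert_input` is hypothetical, and the [SEP] separator and 0/1 segment ids follow the convention of the original BERT paper rather than anything stated in these notes. Real tokenizers also split text into WordPiece subwords, which is skipped here.

```python
def build_bert_input(tokens_a, tokens_b=None):
    """Return (tokens, segment_ids) laid out as [CLS] A [SEP] B [SEP]."""
    # [CLS] always sits at position 0; its output vector is the sequence summary.
    tokens = ["[CLS]"] + list(tokens_a) + ["[SEP]"]
    segment_ids = [0] * len(tokens)
    if tokens_b is not None:
        second = list(tokens_b) + ["[SEP]"]
        tokens += second
        # Segment id 1 marks every token that belongs to the second sentence.
        segment_ids += [1] * len(second)
    return tokens, segment_ids


tokens, segment_ids = build_bert_input(["the", "cat", "sat"], ["it", "purred"])
```

Here `tokens[0]` is the [CLS] token, and the segment ids let the model tell the two sentences apart.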

Tasks

Masked Language Modeling

Predicting the masked (hidden) tokens in the input from their surrounding context on both sides.
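A minimal sketch of how the masked inputs might be generated. It assumes the recipe from the original BERT paper, which these notes do not spell out: about 15% of tokens are selected, and of those 80% become [MASK], 10% become a random token, and 10% are left unchanged. The name `mask_tokens` and its parameters are hypothetical.

```python
import random


def mask_tokens(tokens, vocab, mask_prob=0.15, seed=None):
    """Return (masked_tokens, labels); labels[i] is None where no prediction is required."""
    rng = random.Random(seed)
    masked = list(tokens)
    labels = [None] * len(tokens)
    for i, tok in enumerate(tokens):
        # Special tokens are never masked; other tokens are selected with probability mask_prob.
        if tok in ("[CLS]", "[SEP]") or rng.random() >= mask_prob:
            continue
        labels[i] = tok  # the model must recover the original token here
        roll = rng.random()
        if roll < 0.8:
            masked[i] = "[MASK]"           # 80%: replace with the mask token
        elif roll < 0.9:
            masked[i] = rng.choice(vocab)  # 10%: replace with a random token
        # remaining 10%: keep the original token unchanged
    return masked, labels
```

The loss is computed only at positions where `labels[i]` is not `None`, so the model is trained to reconstruct the selected tokens from context.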

Next Sentence Prediction

A binary classification problem: predicting whether the second sentence in the input actually follows the first in the original text.
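One way the training pairs could be built is sketched below, assuming a 50/50 split between true next sentences and random ones. The helper `make_nsp_examples` is hypothetical, and it draws negatives from the same list of sentences for simplicity, whereas the original BERT setup samples them from a different document.

```python
import random


def make_nsp_examples(sentences, seed=None):
    """Build (sentence_a, sentence_b, is_next) triples from ordered, distinct sentences."""
    rng = random.Random(seed)
    examples = []
    for i in range(len(sentences) - 1):
        # Negative candidates: anything except sentence i itself and its true successor.
        candidates = [s for j, s in enumerate(sentences) if j not in (i, i + 1)]
        if candidates and rng.random() >= 0.5:
            examples.append((sentences[i], rng.choice(candidates), 0))  # not consecutive
        else:
            examples.append((sentences[i], sentences[i + 1], 1))        # consecutive
    return examples
```

Each pair would then be packed into a single input sequence, and the classifier reads the [CLS] output to predict the `is_next` label.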