Definition

Vision-and-Language BERT (ViLBERT) extends the BERT architecture to handle both visual and textual inputs.

Architecture

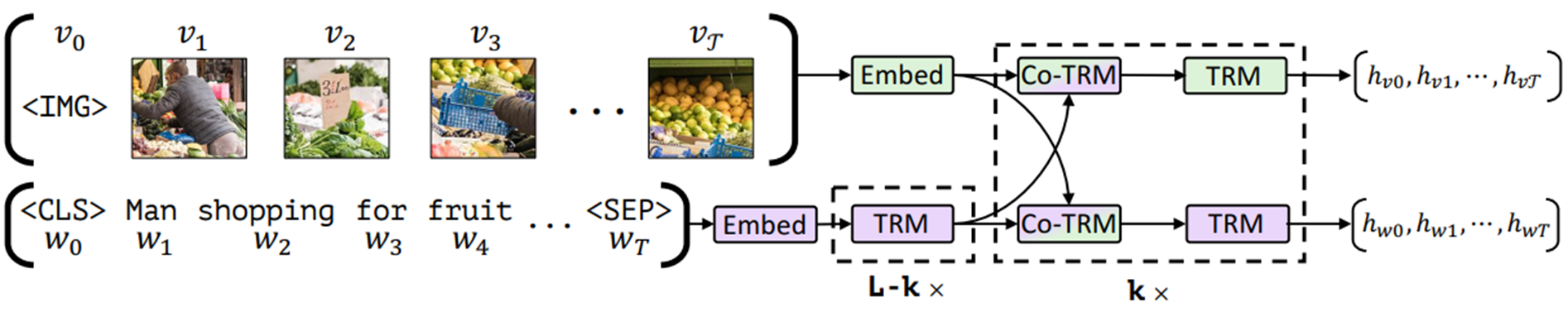

ViLBERT consists of two parallel BERT-like streams, a visual stream and a textual stream, connected through co-attention transformer layers. The tokens of the visual stream are features of image regions detected by an object detection model (Faster R-CNN). ViLBERT is pre-trained on image-caption pairs using two tasks: masked multimodal learning and multimodal alignment prediction.

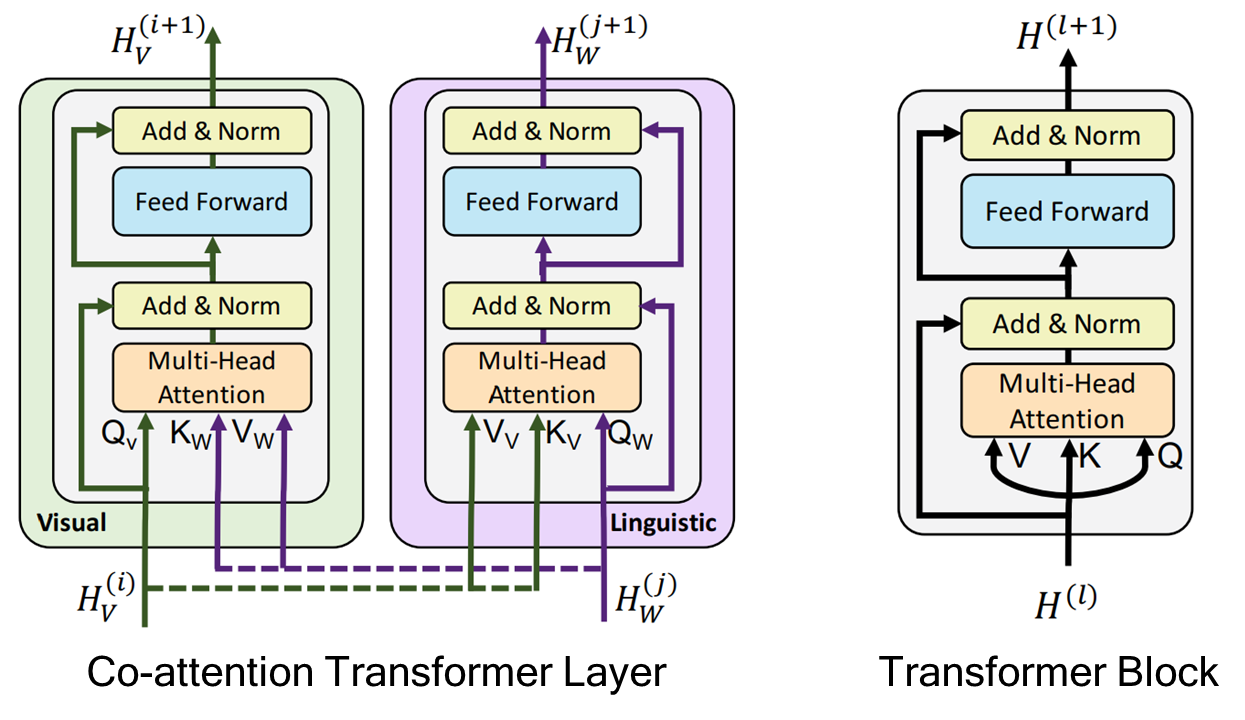

Co-Attention Transformer Layer

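The co-attention transformer layer allows bidirectional interaction between the visual and textual streams. Within the layer, each stream computes its queries (Q) from its own features and takes its keys (K) and values (V) from the other stream, so visual features attend over the text tokens and text features attend over the image regions.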

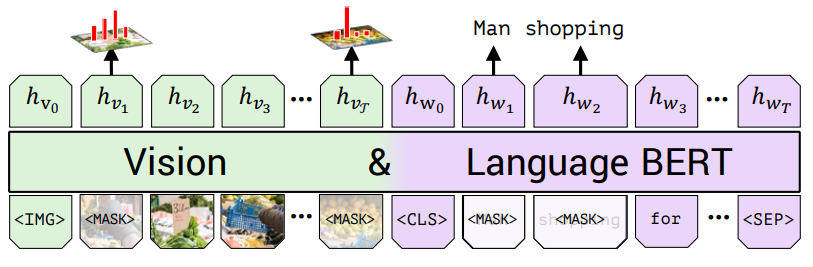
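
The key/value swap can be sketched in a few lines of NumPy. This is a minimal single-head illustration, not the full layer: multi-head splitting, residual connections, layer normalization, and the feed-forward sublayer are omitted, and the names `cross_attention`, `vis_proj`, and `txt_proj` as well as the sizes are assumptions chosen for the example.

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attention(query_feats, context_feats, w_q, w_k, w_v):
    # Queries come from one stream; keys and values come from the other.
    q = query_feats @ w_q
    k = context_feats @ w_k
    v = context_feats @ w_v
    scores = q @ k.T / np.sqrt(q.shape[-1])  # scaled dot-product scores
    weights = softmax(scores, axis=-1)       # each query row sums to 1
    return weights @ v, weights

rng = np.random.default_rng(0)
d = 8                                # hidden size (illustrative)
visual = rng.normal(size=(5, d))     # 5 image-region features
text = rng.normal(size=(7, d))       # 7 text-token features

def make_proj():
    # Hypothetical random Q, K, V projection matrices for one stream.
    return tuple(rng.normal(size=(d, d)) * 0.1 for _ in range(3))

vis_proj = make_proj()
txt_proj = make_proj()

# Visual stream: Q from image regions, K and V from text tokens.
vis_out, vis_attn = cross_attention(visual, text, *vis_proj)
# Textual stream: Q from text tokens, K and V from image regions.
txt_out, txt_attn = cross_attention(text, visual, *txt_proj)
```

Each stream keeps its own sequence length (5 regions, 7 tokens); only the information being attended over comes from the other modality.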

Masked Multimodal Learning

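The text stream is trained in the same way as BERT's masked language modeling: masked words are predicted from the remaining words and the image regions. The visual stream is trained to predict the semantic class of each masked image region, using the object detector's output for that region as the target.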

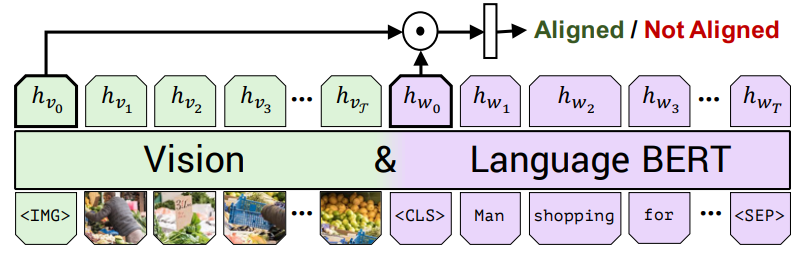
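
A minimal sketch of the visual-side loss, assuming the target for each masked region is the detector's class distribution and the loss is a KL divergence averaged over masked regions. The function name `masked_region_kl` and all sizes are hypothetical, and the example data is random.

```python
import numpy as np

def log_softmax(x):
    # Numerically stable log-softmax over the last axis.
    x = x - x.max(axis=-1, keepdims=True)
    return x - np.log(np.exp(x).sum(axis=-1, keepdims=True))

def masked_region_kl(pred_logits, detector_probs, mask):
    # KL(detector || model) per region, averaged over masked regions only.
    log_p = log_softmax(pred_logits)
    eps = 1e-12  # guards against log(0) in the target distribution
    kl = (detector_probs * (np.log(detector_probs + eps) - log_p)).sum(axis=-1)
    return float((kl * mask).sum() / max(mask.sum(), 1))

rng = np.random.default_rng(0)
num_regions, num_classes = 4, 6  # illustrative sizes
# Stand-in for the detector's class distribution per region.
detector_probs = rng.dirichlet(np.ones(num_classes), size=num_regions)
# Stand-in for the model's class logits per region.
pred_logits = rng.normal(size=(num_regions, num_classes))
# 1.0 marks a masked region that contributes to the loss.
mask = np.array([1.0, 0.0, 1.0, 0.0])

loss = masked_region_kl(pred_logits, detector_probs, mask)
# A model that reproduces the detector's distribution has near-zero loss.
perfect = masked_region_kl(np.log(detector_probs), detector_probs, mask)
```

Unmasked regions are excluded by the mask, mirroring how masked language modeling only scores the masked word positions.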

Multimodal Alignment Prediction

The model takes an image-text pair as input and predicts whether the text describes the image, which makes this a binary classification task.
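
The binary prediction can be sketched as follows, assuming the two streams are summarized by one pooled vector each (`h_img` for the image, `h_cls` for the text), fused by an element-wise product and passed through a linear layer with a sigmoid. The names, sizes, and random weights are illustrative assumptions, not trained values.

```python
import numpy as np

def alignment_probability(h_img, h_cls, w, b):
    # Fuse the two pooled vectors, then apply a linear layer and a sigmoid.
    joint = h_img * h_cls           # element-wise product
    logit = joint @ w + b
    return 1.0 / (1.0 + np.exp(-logit))

def binary_cross_entropy(p, label):
    # label is 1.0 for a matching pair and 0.0 for a mismatched pair.
    return -(label * np.log(p) + (1.0 - label) * np.log(1.0 - p))

rng = np.random.default_rng(0)
d = 8                               # hidden size (illustrative)
h_img = rng.normal(size=d)          # pooled visual representation
h_cls = rng.normal(size=d)          # pooled textual representation
w = rng.normal(size=d) * 0.1        # hypothetical classifier weights
b = 0.0                             # hypothetical classifier bias

p_aligned = alignment_probability(h_img, h_cls, w, b)
loss_if_match = binary_cross_entropy(p_aligned, 1.0)
```

During pre-training the label would be 1 for a true image-caption pair and 0 for a mismatched pair.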