Definition

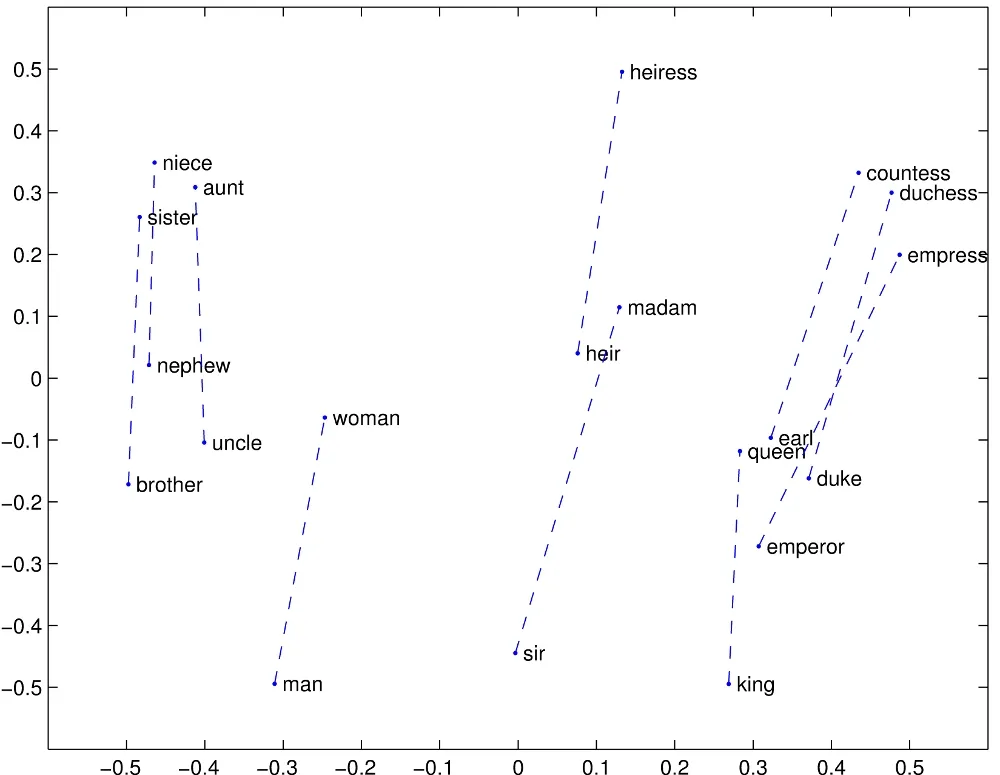

The Global Vectors for Word Representation (GloVe) model learns word embedding vectors such that their dot product approximates the logarithm of the words’ probability of co-occurrence.

where $w_i$ and $w_j$ are word vectors, and $X_{ij}$ is the co-occurrence count between words $i$ and $j$.
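As a rough illustration of this objective, the sketch below evaluates a weighted least-squares loss of the kind used to train GloVe on a toy co-occurrence matrix. The function name `glove_loss`, the separate context vectors `W_ctx`, the bias terms, and the toy matrix are assumptions made for illustration. The weighting defaults (`x_max = 100`, `alpha = 0.75`) follow the values reported in the GloVe paper.

```python
import math
import random


def glove_loss(W, W_ctx, b, b_ctx, X, x_max=100.0, alpha=0.75):
    """Weighted squared error between (w_i . w_j + b_i + b_j) and log X_ij."""
    total = 0.0
    for i, row in enumerate(X):
        for j, x in enumerate(row):
            if x <= 0:
                continue  # log X_ij is undefined for unseen pairs, so skip them
            # Weighting function: grows as (x / x_max)^alpha, capped at 1
            weight = min(x / x_max, 1.0) ** alpha
            dot = sum(wi * wj for wi, wj in zip(W[i], W_ctx[j]))
            total += weight * (dot + b[i] + b_ctx[j] - math.log(x)) ** 2
    return total


# Toy symmetric co-occurrence counts for a 3-word vocabulary (made-up numbers)
random.seed(0)
X = [[0, 3, 1],
     [3, 0, 2],
     [1, 2, 0]]
dim = 4
W = [[random.gauss(0, 0.1) for _ in range(dim)] for _ in X]
W_ctx = [[random.gauss(0, 0.1) for _ in range(dim)] for _ in X]
b = [0.0] * len(X)
b_ctx = [0.0] * len(X)

loss = glove_loss(W, W_ctx, b, b_ctx, X)
print(loss)
```

Training would minimize this quantity with respect to the vectors and biases, for example by gradient descent. The loss is zero exactly when every observed pair satisfies the dot-product relation, biases included.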