Definition

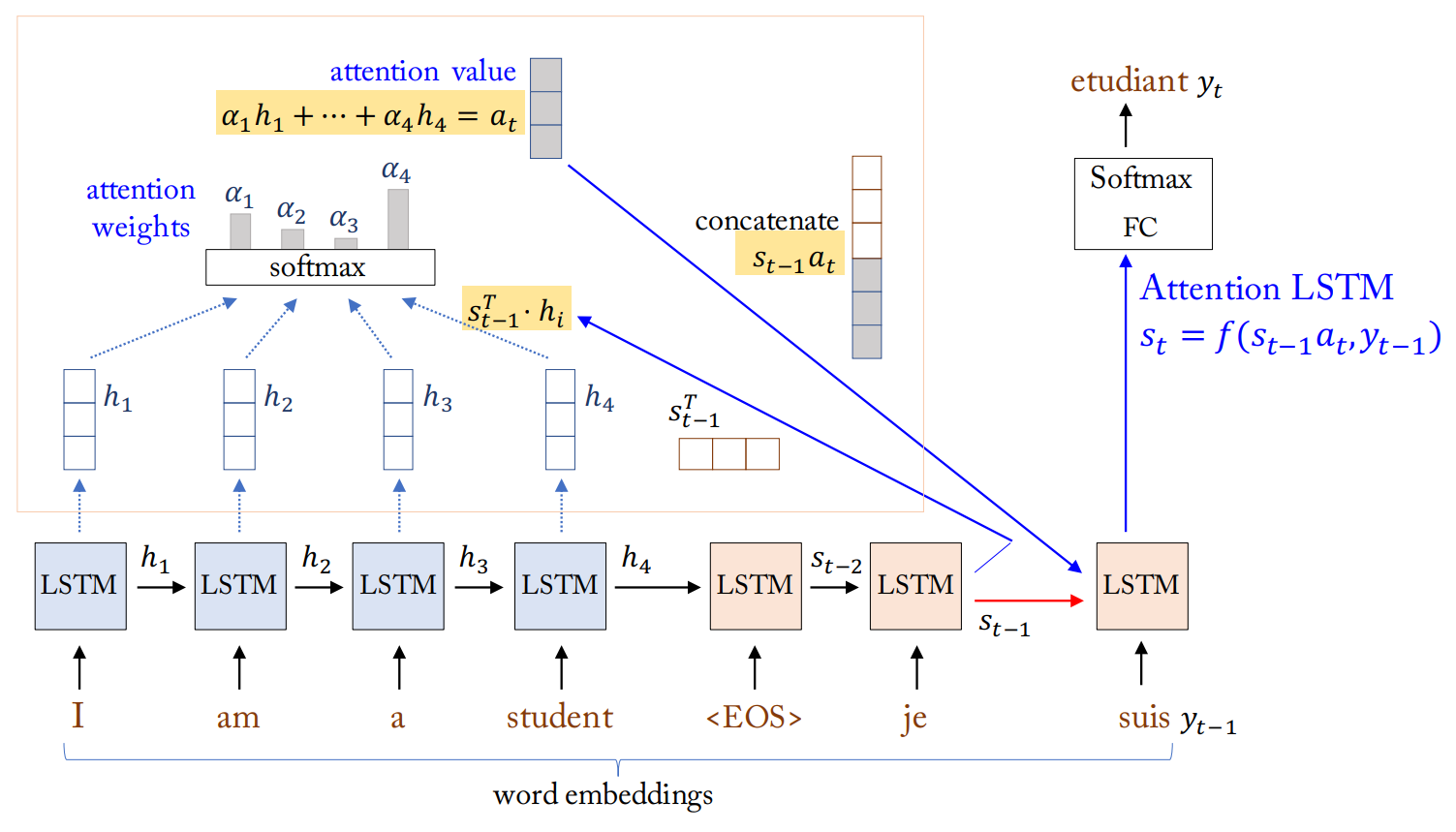

Attention LSTM is a variant of the LSTM architecture that incorporates an attention mechanism. In a sequence-to-sequence setting, the model applies attention during the decoding stage. At each decoding step, the previous hidden state of the decoder's LSTM cell is used as the query, and the hidden states of the encoder's LSTM cell, one per input position, are used as the keys and values.
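The query/key/value roles described above can be illustrated with a minimal NumPy sketch of a single decoding step. The definition does not say how queries are scored against keys, so plain dot-product scoring is assumed here; additive (Bahdanau-style) scoring is another common choice. The function name `attention_context`, the array shapes, and the random inputs are all hypothetical and serve only as illustration.

```python
import numpy as np

def softmax(x):
    # Subtract the max for numerical stability before exponentiating.
    e = np.exp(x - np.max(x))
    return e / e.sum()

def attention_context(prev_decoder_hidden, encoder_hiddens):
    """One attention step (assumed dot-product scoring).

    prev_decoder_hidden: shape (d,), the query.
    encoder_hiddens: shape (T, d), used as both keys and values.
    Returns (context, weights) with shapes (d,) and (T,).
    """
    # Score each encoder hidden state (key) against the query.
    scores = encoder_hiddens @ prev_decoder_hidden   # (T,)
    # Normalize the scores into attention weights that sum to 1.
    weights = softmax(scores)                        # (T,)
    # Context vector: weighted sum of encoder hidden states (values).
    context = weights @ encoder_hiddens              # (d,)
    return context, weights

# Hypothetical sizes: 5 encoder positions, hidden size 4.
rng = np.random.default_rng(0)
encoder_hiddens = rng.standard_normal((5, 4))
prev_decoder_hidden = rng.standard_normal(4)

context, weights = attention_context(prev_decoder_hidden, encoder_hiddens)
```

In a typical design, the resulting context vector is combined with the decoder's input or hidden state (for example, by concatenation) before the next LSTM step. That detail varies between implementations and is not specified by the definition above.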