Definition

Transformer-based GNN address some of the limitations of traditional GNNs (such as cycle counting) while leveraging the Transformer architecture for processing Graph-structured data.

Architecture

Node Embedding

Each node is embedded into a vector using their features.

Positional Encoding

Unlike in standard Transformer where positional encodings represent sequential order, in graph scenarios, these encodings are used to capture the structural information of the graph. It is constructed from the Adjacency Matrix of the graph.

There exists various approaches constructing positional encoding vectors.

Relative Distance

This method directly using the idea of Position-Aware GNN. The distance of a given target node to each anchor-set as a positional encoding.

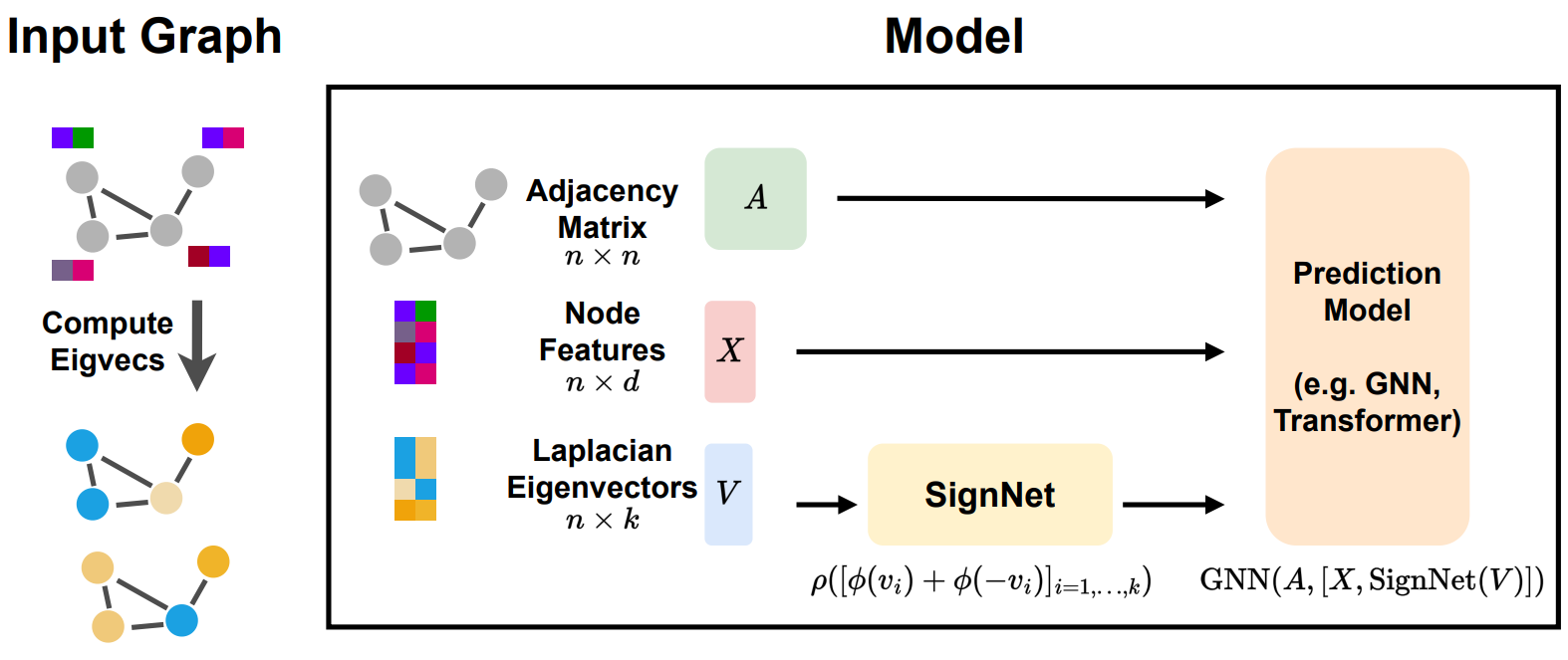

Laplacian Eigenvectors

Calculate the eigenvector matrix of the Laplacian Matrix, and use each row of the eigenvector matrix as a positional encoding of each node.

The signs of the eigenvectors may change model’s prediction. SigNet uses a neural network to get sign-invariant positional encoding to prevent this problem. where is the -th Eigenvector of the Laplacian Matrix, and and are neural network (MLP, GNN, etc.)

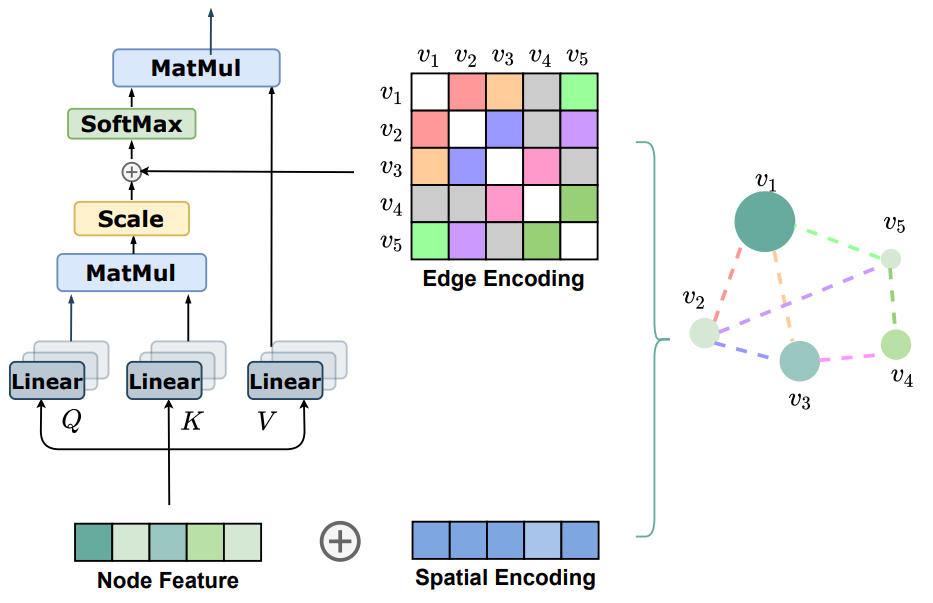

Self Attention

The edge features are used for adjusting the attention weights of the nodes.

If there is edge between nodes and with features , it is linearly transformed If there is no edge, find the shortest edge path between and , and define Then, it is added to the corresponding attention weight. where are learnable parameters.