Definition

The Contrastive Language-Image Pre-training (CLIP) model is designed to learn visual concepts from natural language supervision through contrastive learning.

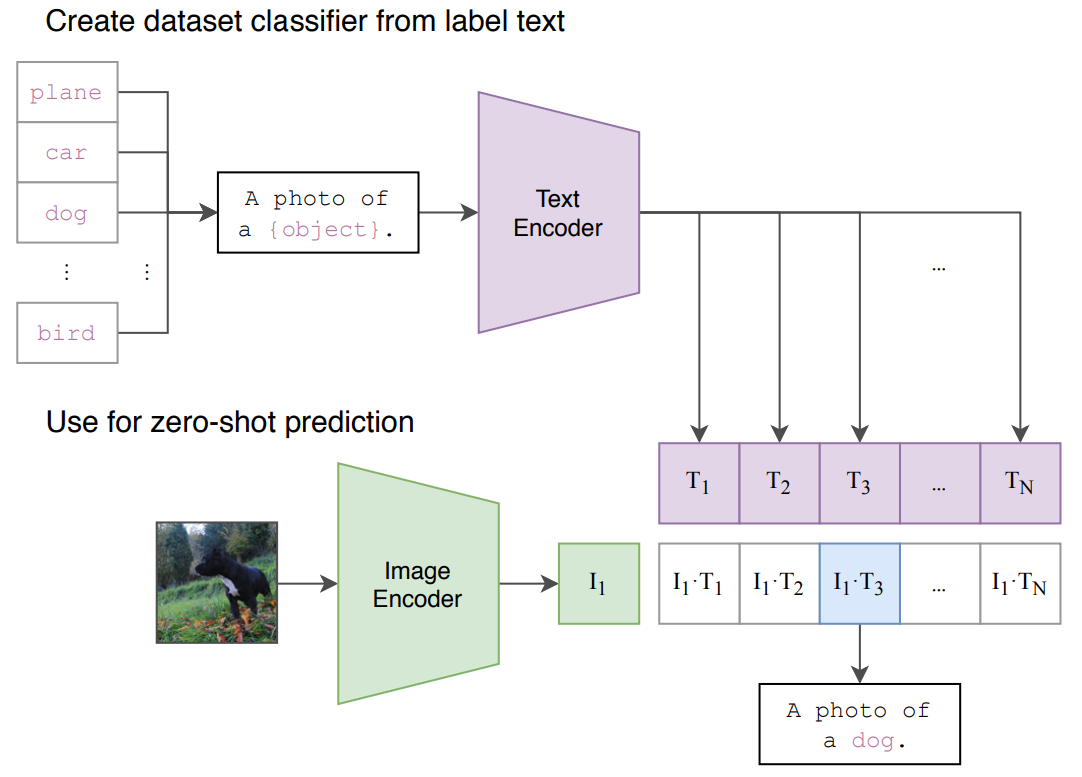

Zero-Shot Prediction

CLIP can classify images into categories it has not been explicitly trained on, simply by comparing the image embedding with the text embeddings of the category names and picking the closest match.

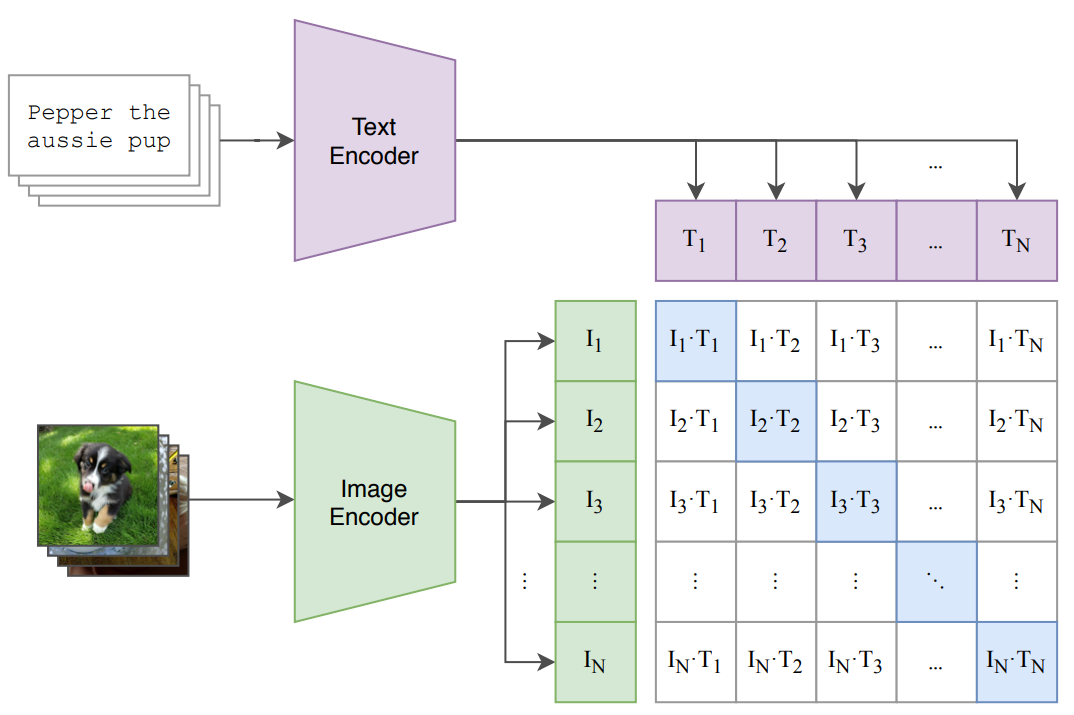
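
The comparison described above can be sketched in a few lines of NumPy. This is a minimal illustration, not CLIP's actual code: the embeddings are hand-made toy vectors standing in for encoder outputs, and the function name `zero_shot_classify` and the fixed `temperature` value are assumptions made for the example.

```python
import numpy as np

def l2_normalize(x, axis=-1, eps=1e-12):
    """Scale vectors to unit length so a dot product equals cosine similarity."""
    norm = np.linalg.norm(x, axis=axis, keepdims=True)
    return x / np.maximum(norm, eps)

def zero_shot_classify(image_embedding, text_embeddings, class_names, temperature=0.01):
    """Pick the class whose text embedding is most similar to the image embedding.

    `temperature` is an assumed fixed value used only to sharpen the softmax.
    """
    image_embedding = l2_normalize(np.asarray(image_embedding, dtype=float))
    text_embeddings = l2_normalize(np.asarray(text_embeddings, dtype=float))

    # Cosine similarity between the image and every class name: shape (num_classes,)
    similarities = text_embeddings @ image_embedding

    # Softmax over classes, with the max subtracted for numerical stability
    logits = similarities / temperature
    logits = logits - logits.max()
    probs = np.exp(logits) / np.exp(logits).sum()

    return class_names[int(np.argmax(probs))], probs

# Toy embeddings (hypothetical stand-ins for real encoder outputs)
class_names = ["cat", "dog", "car"]
text_embeddings = np.array([
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
])
image_embedding = np.array([0.1, 0.9, 0.2])

label, probs = zero_shot_classify(image_embedding, text_embeddings, class_names)
print(label, probs.round(3))
```

No classifier head is trained here: adding a new category only requires encoding its name and appending the vector to `text_embeddings`.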

Architecture

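CLIP consists of two main components: a vision encoder (a ViT or a CNN) and a text encoder (a Transformer-based model). Both encoders map their inputs into a shared embedding space, so image and text vectors can be compared directly.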

Contrastive Pre-Training

Each image-text pair in a batch is encoded by the corresponding encoder, and both encoders are updated to maximize the similarity between matching pairs and minimize the similarity between non-matching pairs. Similarity is measured as the cosine similarity of the two encoded vectors.
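
As a rough sketch of this objective, the snippet below computes a symmetric cross-entropy loss over the batch's cosine-similarity matrix, where row `i` of the image embeddings is assumed to match row `i` of the text embeddings. The function name `clip_contrastive_loss` and the fixed `temperature` are assumptions for illustration (in CLIP the temperature is a learned parameter), and the gradient step that actually updates the encoders is omitted.

```python
import numpy as np

def log_softmax(logits, axis):
    """Numerically stable log-softmax along the given axis."""
    shifted = logits - logits.max(axis=axis, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))

def clip_contrastive_loss(image_emb, text_emb, temperature=0.07):
    """Symmetric contrastive loss for a batch of N matching image-text pairs.

    image_emb, text_emb: arrays of shape (N, D); row i of each forms a matching pair.
    `temperature` is fixed here as an assumption for the sketch.
    """
    # Unit-normalize so the dot product is the cosine similarity
    image_emb = image_emb / np.linalg.norm(image_emb, axis=1, keepdims=True)
    text_emb = text_emb / np.linalg.norm(text_emb, axis=1, keepdims=True)

    # (N, N) similarity matrix: entry [i, j] compares image i with text j
    logits = image_emb @ text_emb.T / temperature

    # Matching pairs sit on the diagonal
    idx = np.arange(logits.shape[0])
    loss_image_to_text = -log_softmax(logits, axis=1)[idx, idx].mean()
    loss_text_to_image = -log_softmax(logits, axis=0)[idx, idx].mean()
    return (loss_image_to_text + loss_text_to_image) / 2

# Toy batch: orthogonal vectors stand in for encoder outputs
embeddings = np.eye(4)
aligned_loss = clip_contrastive_loss(embeddings, embeddings)           # pairs match
mismatched_loss = clip_contrastive_loss(embeddings, embeddings[::-1])  # pairs scrambled
print(aligned_loss, mismatched_loss)
```

The loss is low when each image is most similar to its own caption and high when the pairing is scrambled, which is the signal that drives both encoders during pre-training.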