Definition

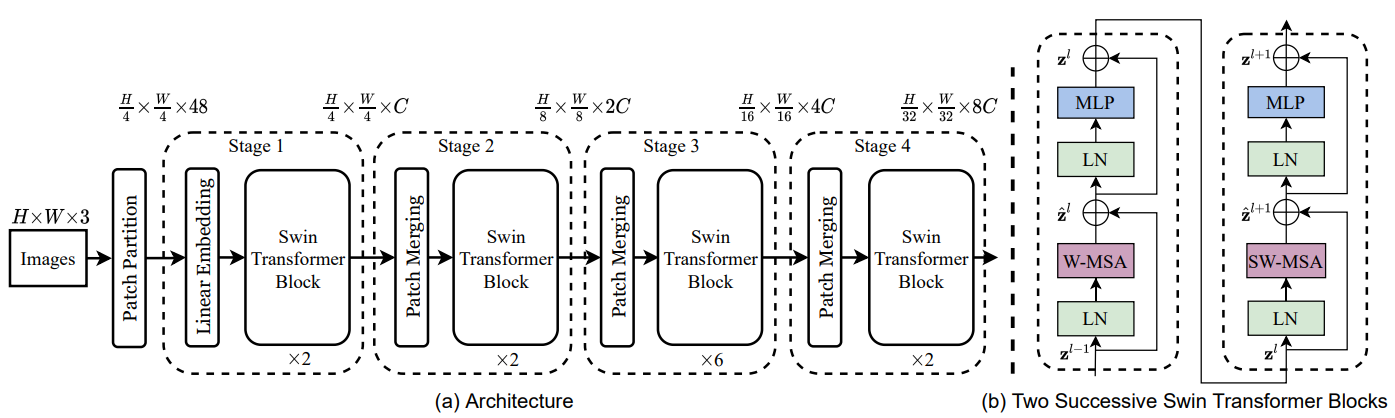

Swin Transformer reintroduces the locality inductive bias of CNNs by computing self-attention within local windows. The limitations that local self-attention brings with it are addressed with a hierarchical structure, shifted window partitioning, and a relative position bias.

Architecture

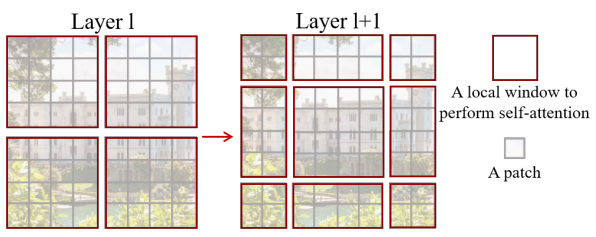

Local Self-Attention

Self-attention is computed within local windows instead of globally. For a fixed window size, the cost therefore grows linearly with the number of patches rather than quadratically.
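As a rough illustration of window-based attention, the following NumPy sketch splits a feature map into non-overlapping windows and runs single-head attention inside each one. The function names `window_partition` and `window_attention` are chosen for this example, and the learned query/key/value projections of a real model are left out for brevity.

```python
import numpy as np

def window_partition(x, window_size):
    """Split a feature map (H, W, C) into non-overlapping windows.

    Returns an array of shape (num_windows, window_size * window_size, C).
    Assumes H and W are divisible by window_size.
    """
    H, W, C = x.shape
    x = x.reshape(H // window_size, window_size, W // window_size, window_size, C)
    x = x.transpose(0, 2, 1, 3, 4)  # (nH, nW, ws, ws, C)
    return x.reshape(-1, window_size * window_size, C)

def window_attention(x, window_size):
    """Single-head self-attention restricted to each window (no learned projections)."""
    windows = window_partition(x, window_size)                   # (nW, N, C)
    d = windows.shape[-1]
    scores = windows @ windows.transpose(0, 2, 1) / np.sqrt(d)   # (nW, N, N)
    scores = scores - scores.max(axis=-1, keepdims=True)         # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)      # softmax over keys
    return weights @ windows                                     # (nW, N, C)
```

Each attention matrix here has size N x N with N = window_size², independent of the image size, which is where the saving over global attention comes from.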

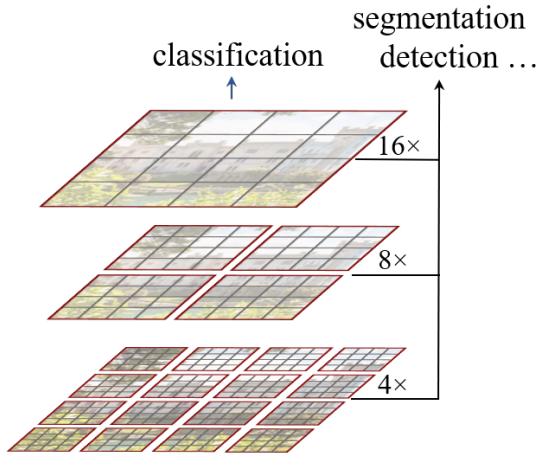

Hierarchical Structure

Swin Transformer starts with small patches and gradually merges neighboring patches in deeper layers, similar to a CNN. This allows the model to capture both fine and coarse features effectively.

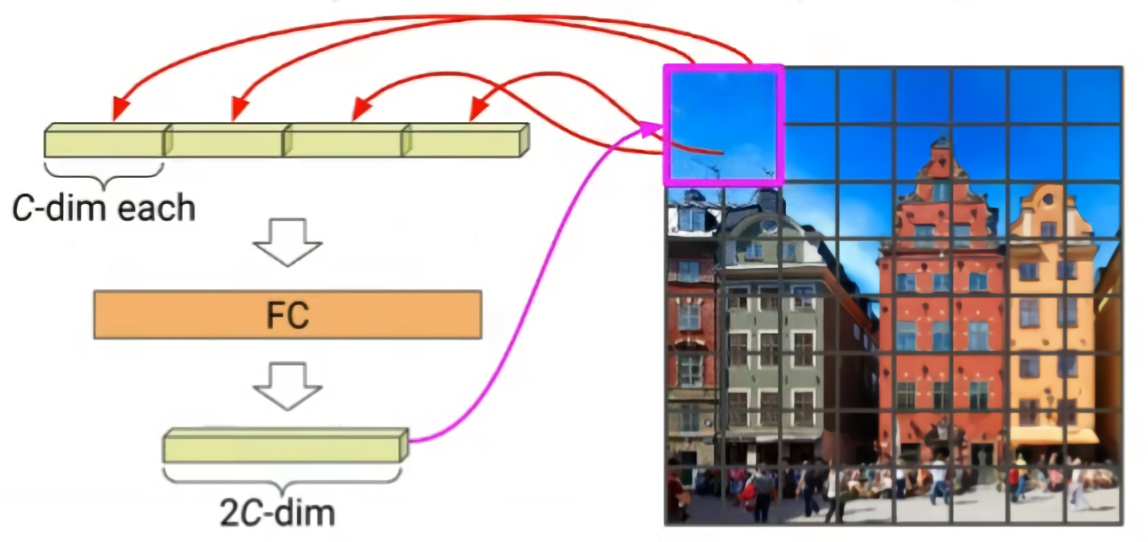

Patch Merging:

To reduce the spatial resolution and increase the channel dimension, Swin Transformer uses a patch merging layer. The layer concatenates the features of each 2×2 group of neighboring patches (giving 4C channels) and applies a linear transformation that projects them to 2C channels. Each output feature represents a merged patch that covers twice the side length of an input patch, so the resolution is halved along each axis.
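A minimal NumPy sketch of patch merging, assuming a 2×2 neighborhood and a projection from 4C to 2C channels. The `weight` argument is a hypothetical stand-in for the learned linear layer, and any normalization applied in practice is omitted.

```python
import numpy as np

def patch_merging(x, weight):
    """Merge each 2x2 group of neighboring patches.

    x:      (H, W, C) feature map, with H and W even
    weight: (4*C, 2*C) linear projection (stand-in for the learned layer)
    returns (H/2, W/2, 2*C)
    """
    x0 = x[0::2, 0::2, :]  # top-left patch of every 2x2 group
    x1 = x[1::2, 0::2, :]  # bottom-left
    x2 = x[0::2, 1::2, :]  # top-right
    x3 = x[1::2, 1::2, :]  # bottom-right
    merged = np.concatenate([x0, x1, x2, x3], axis=-1)  # (H/2, W/2, 4C)
    return merged @ weight                               # (H/2, W/2, 2C)
```

For example, an input of shape (8, 8, 3) with a (12, 6) weight matrix yields an output of shape (4, 4, 6).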

Shifted Window Partitioning

The image is divided into non-overlapping windows, and the window grid is shifted in alternate layers so that information can be exchanged across window boundaries.
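The effect of the shift can be sketched with a cyclic roll of the feature map by half a window. The cyclic roll is one common way to realize the shift and is an assumption of this example, as are the helper names. The sketch labels every position with its regular window index, shifts the grid, and checks which original windows end up together in the first shifted window.

```python
import numpy as np

def regular_window_ids(H, W, window_size):
    """Label each position with the index of its regular (unshifted) window."""
    rows = np.arange(H) // window_size
    cols = np.arange(W) // window_size
    return rows[:, None] * (W // window_size) + cols[None, :]

def cyclic_shift(x, window_size):
    """Roll the map up and left by half a window (assumed shift size)."""
    shift = window_size // 2
    return np.roll(x, shift=(-shift, -shift), axis=(0, 1))

ids = regular_window_ids(8, 8, 4)      # four regular windows, labeled 0..3
shifted = cyclic_shift(ids, 4)
first_window = shifted[:4, :4]         # first window after the shift
print(np.unique(first_window))         # [0 1 2 3]
```

The first shifted window contains positions from all four regular windows, so attention in that layer connects regions that were separate in the previous layer. With a cyclic roll, windows at the wrapped border also combine regions that are not adjacent in the image, which implementations typically handle with an attention mask.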

Relative Position Bias

Instead of the absolute position embeddings used in the original Transformer, Swin Transformer uses a relative position bias. This is a learnable parameter, indexed by the relative offset between two positions in a window, that is added to the attention scores before the softmax operation.
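In formula form this amounts to softmax(QKᵀ/√d + B)V, where B is the bias. The sketch below shows one way to build B from a small table with one entry per relative offset. The names `relative_position_index`, `attention_with_bias`, and `bias_table` are illustrative, and the table would be learned during training rather than supplied by hand.

```python
import numpy as np

def relative_position_index(window_size):
    """Map each (query, key) pair in a window to an index into the bias table."""
    grid = np.meshgrid(np.arange(window_size), np.arange(window_size), indexing="ij")
    coords = np.stack(grid).reshape(2, -1)           # (2, N) row/col of each token
    rel = coords[:, :, None] - coords[:, None, :]    # (2, N, N), in [-(ws-1), ws-1]
    rel = rel + (window_size - 1)                    # shift offsets to [0, 2*ws-2]
    return rel[0] * (2 * window_size - 1) + rel[1]   # (N, N) flat table index

def attention_with_bias(q, k, v, bias_table, index):
    """Attention for one window; bias_table has (2*ws-1)**2 entries."""
    d = q.shape[-1]
    scores = q @ k.T / np.sqrt(d) + bias_table[index]  # bias added before softmax
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ v
```

Because the table is indexed by offset, two token pairs with the same relative displacement share the same bias value regardless of where they sit in the window.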