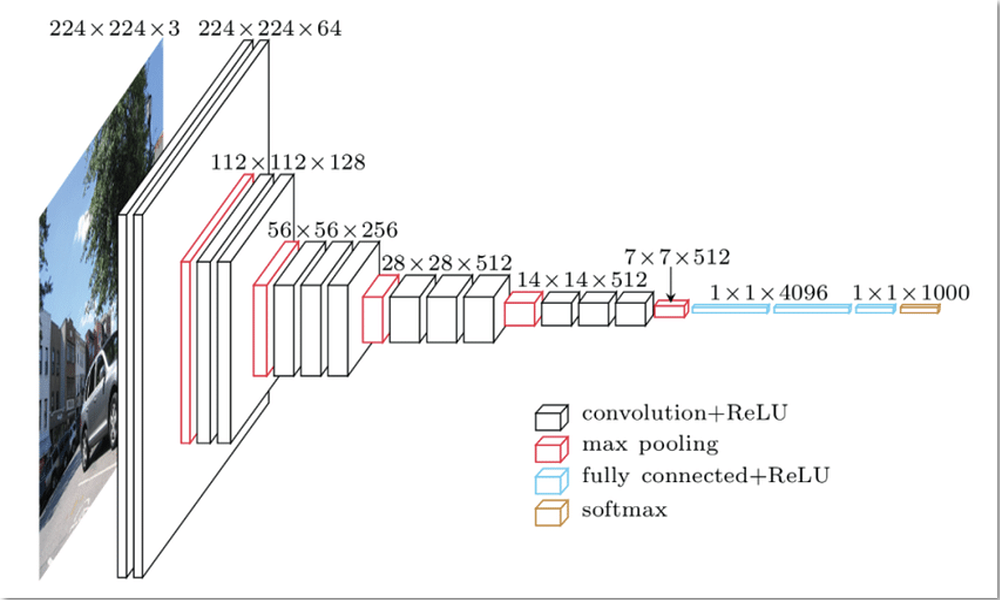

VGG Net

Definition

The VGG model is a deep Convolutional Neural Network architecture characterized by its depth and uniformity. It consists of a series of convolutional layers, all using small $3 \times 3$ filters and interleaved with max pooling, followed by fully connected layers.

Inception Net

Definition

The Inception Net model is a deep Convolutional Neural Network architecture built from inception modules.

Architecture

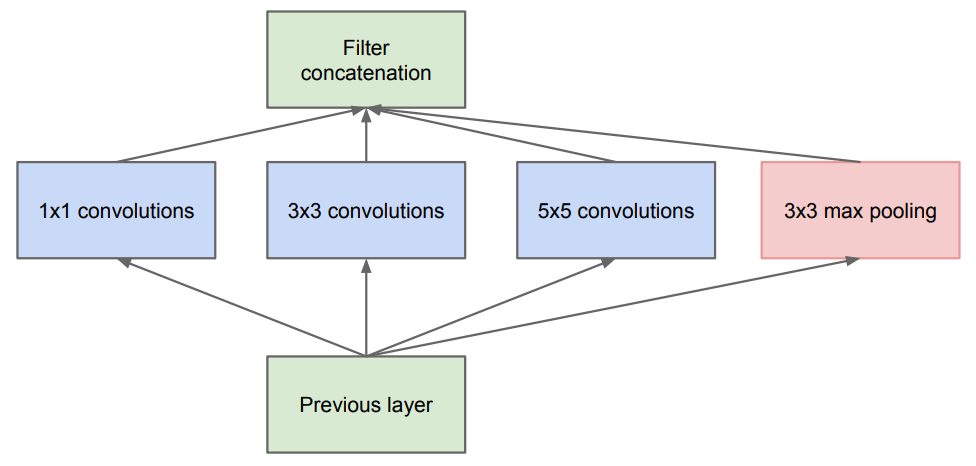

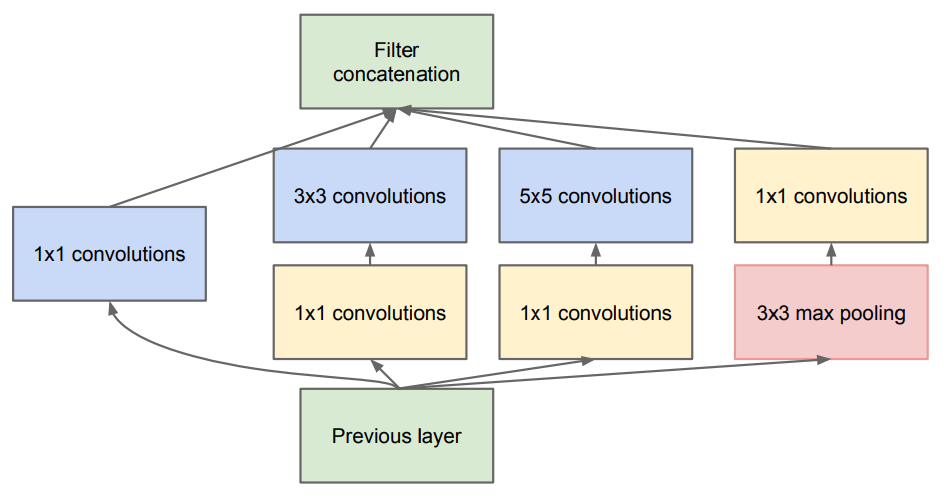

Inception Net V1

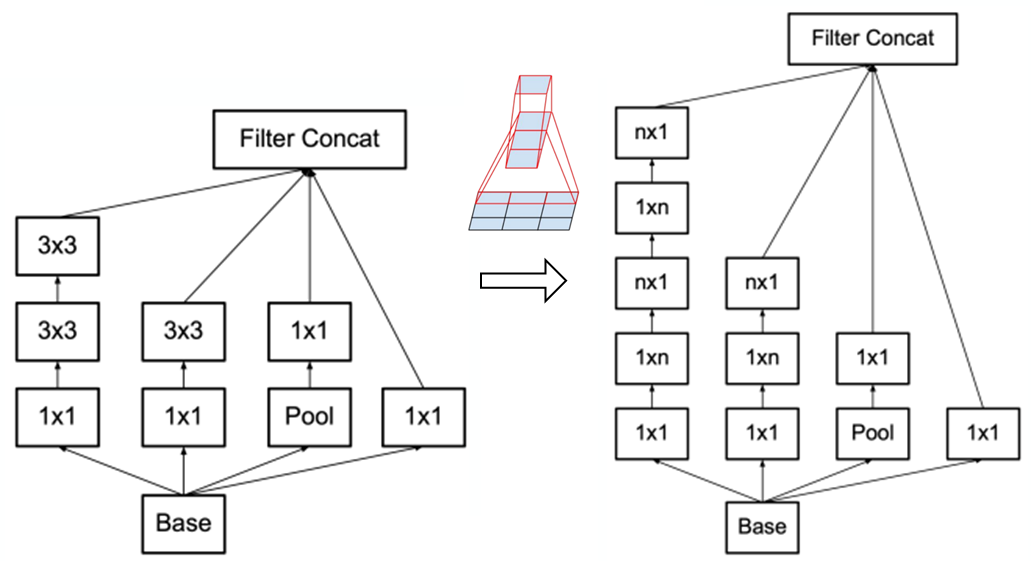

Inception Module

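The inception module is the building block of the Inception Net. It uses multiple filter sizes ($1 \times 1$, $3 \times 3$, and $5 \times 5$) and pooling operations in parallel, allowing the network to capture features at different scales simultaneously.

The $1 \times 1$ convolutions are used for dimensionality reduction: they shrink the number of channels before the more expensive $3 \times 3$ and $5 \times 5$ convolutions, which reduces computational complexity.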
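
To make the saving from the $1 \times 1$ reduction concrete, the sketch below counts multiplications for a $5 \times 5$ branch with and without a $1 \times 1$ reduction in front of it. The feature-map size and channel counts are illustrative assumptions, not values taken from these notes, and `conv_mults` is a hypothetical helper.

```python
def conv_mults(h, w, c_in, c_out, k):
    """Multiplications for a k x k convolution that outputs an h x w x c_out map."""
    return h * w * c_out * k * k * c_in

# Assumed sizes for illustration only.
H, W = 28, 28
C_IN, C_OUT, C_REDUCED = 192, 32, 16

# 5x5 convolution applied directly to all input channels.
direct = conv_mults(H, W, C_IN, C_OUT, 5)

# 1x1 reduction to C_REDUCED channels, then the 5x5 convolution.
reduced = conv_mults(H, W, C_IN, C_REDUCED, 1) + conv_mults(H, W, C_REDUCED, C_OUT, 5)

print(f"direct:  {direct:,}")
print(f"reduced: {reduced:,}")
print(f"ratio:   {reduced / direct:.3f}")
```

Under these assumed sizes the reduced branch needs roughly a tenth of the multiplications, because the $5 \times 5$ filters now see 16 channels instead of 192.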

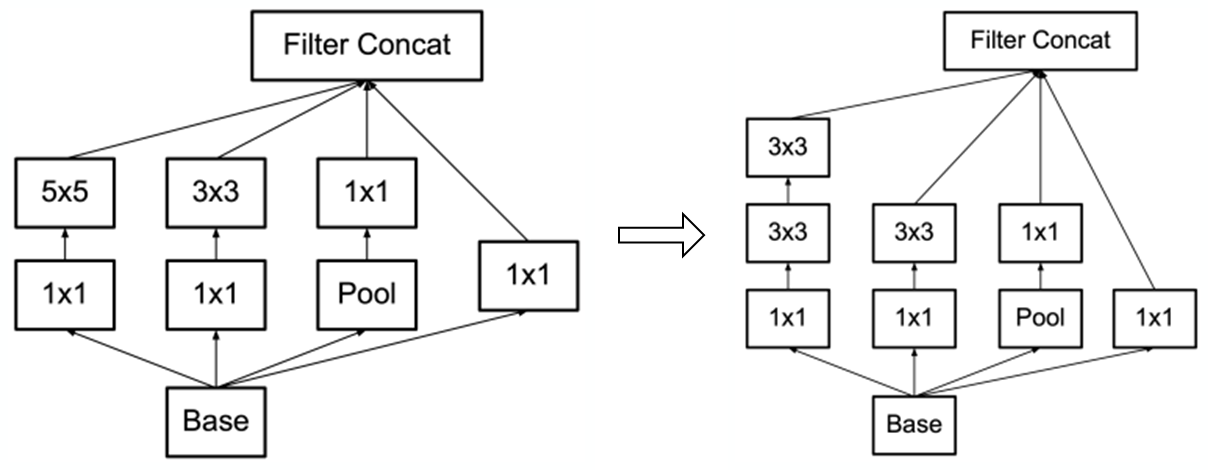

Inception Net V2, V3

Factorized Convolution

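The large convolutions in the inception module were replaced with multiple smaller convolutions (for example, one $5 \times 5$ convolution becomes two stacked $3 \times 3$ convolutions), reducing the parameter count and computational cost.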

Asymmetric Convolution

$n \times n$ convolutions are decomposed into a $1 \times n$ convolution followed by an $n \times 1$ convolution.
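
Both factorizations can be checked by counting weights. The sketch below compares a $5 \times 5$ convolution with two stacked $3 \times 3$ convolutions, and a $3 \times 3$ convolution with a $1 \times 3$ plus $3 \times 1$ pair. The channel count and the `conv_params` helper are assumptions made for illustration, and biases are ignored.

```python
def conv_params(k_h, k_w, c_in, c_out):
    """Number of weights in one convolution with a k_h x k_w kernel (no bias)."""
    return k_h * k_w * c_in * c_out

C = 64  # assumed channel count, same for input and output

# Factorized convolution: one 5x5 versus two stacked 3x3 (same receptive field).
five_by_five = conv_params(5, 5, C, C)
two_three_by_three = 2 * conv_params(3, 3, C, C)

# Asymmetric convolution: one 3x3 versus 1x3 followed by 3x1.
three_by_three = conv_params(3, 3, C, C)
asymmetric = conv_params(1, 3, C, C) + conv_params(3, 1, C, C)

print(five_by_five, two_three_by_three)  # 102400 73728
print(three_by_three, asymmetric)        # 36864 24576
```

In this setting the stacked $3 \times 3$ pair uses 18/25 of the weights of the $5 \times 5$ layer, and the asymmetric pair uses 6/9 of the weights of the $3 \times 3$ layer.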

Label Smoothing

Label smoothing is applied to the target labels to prevent the model from becoming overconfident:

$$y^{LS} = (1 - \alpha)\, y + \frac{\alpha}{K}$$

where $y^{LS}$ is the smoothed label, $y$ is the original one-hot encoded label, $\alpha$ is the smoothing parameter, and $K$ is the number of classes.
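
A minimal sketch of label smoothing on a single one-hot vector, assuming the common form in which a fraction of the probability mass is spread uniformly over all classes. The function name `smooth_labels` and the default smoothing value of 0.1 are illustrative choices.

```python
def smooth_labels(one_hot, alpha=0.1):
    """Return (1 - alpha) * y + alpha / K for a one-hot label vector y with K classes."""
    k = len(one_hot)
    return [(1.0 - alpha) * y + alpha / k for y in one_hot]

# Four classes, true class at index 2.
smoothed = smooth_labels([0.0, 0.0, 1.0, 0.0], alpha=0.1)
print(smoothed)
```

The smoothed vector still sums to 1, but the true class receives slightly less than 1 and every other class receives a small non-zero target.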

ResNet

Definition

ResNet is a deep Convolutional Neural Network architecture. It was designed to address the degradation problem, in which adding more layers to a very deep network makes its training accuracy worse rather than better.

Architecture

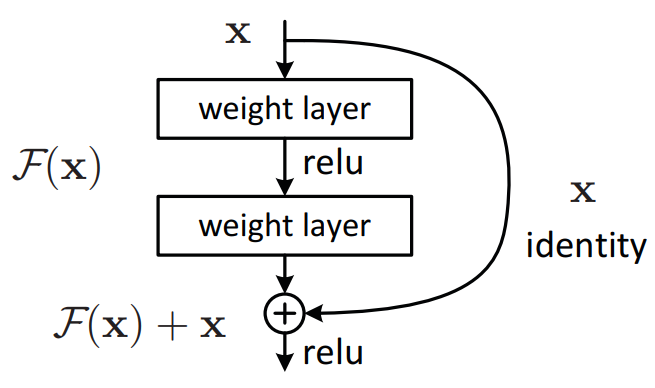

Skip Connection

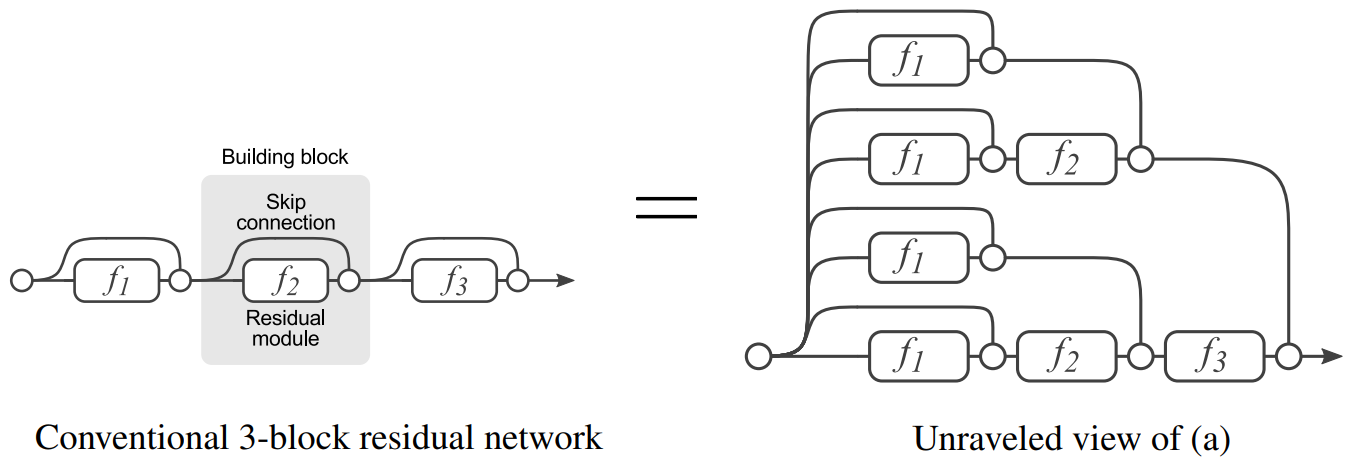

The core innovation of ResNet is the introduction of skip connections (also called shortcut connections or residual connections). These connections allow the signal to bypass one or more layers, creating a direct path for information flow. Each skip connection performs an identity mapping, so the block can easily learn the identity function if needed.

The residual block is represented as $y = F(x) + x$, where $x$ is the input to the block, $F(x)$ is the learnable residual mapping (typically several layers), and $y$ is the output of the block.
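
The residual formulation can be sketched on plain vectors. Here `residual_fn` is a hypothetical stand-in for the stacked convolution layers; the example only illustrates that the block returns its input unchanged when the residual mapping outputs zeros.

```python
def residual_block(x, residual_fn):
    """Compute y = F(x) + x element-wise for a vector x and a residual mapping F."""
    fx = residual_fn(x)
    if len(fx) != len(x):
        raise ValueError("F(x) must match the shape of x for an identity shortcut")
    return [f + xi for f, xi in zip(fx, x)]

x = [1.0, -2.0, 3.0]

# If F outputs zeros, the block is exactly the identity function.
y_identity = residual_block(x, lambda v: [0.0 for _ in v])

# A toy residual mapping standing in for the learned layers.
y_toy = residual_block(x, lambda v: [0.5 * e for e in v])

print(y_identity)  # [1.0, -2.0, 3.0]
print(y_toy)       # [1.5, -3.0, 4.5]
```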

Skip connections create a mixture of deep and shallow models: $n$ skip connections make $2^n$ possible paths, where each path can pass through up to $n$ modules.
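
The path count can be verified by enumeration, assuming each residual block offers a binary choice: take the skip connection or go through the module. The helper `path_lengths` is hypothetical.

```python
from collections import Counter
from itertools import product

def path_lengths(n):
    """Count the paths through n residual blocks, grouped by modules traversed."""
    # For each block: 0 = follow the skip connection, 1 = go through the module.
    return Counter(sum(choices) for choices in product((0, 1), repeat=n))

lengths = path_lengths(3)
total_paths = sum(lengths.values())

print(total_paths)            # 8
print(sorted(lengths.items()))  # [(0, 1), (1, 3), (2, 3), (3, 1)]
```

With three blocks there are $2^3 = 8$ paths; only one of them passes through all three modules, and most are shorter.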

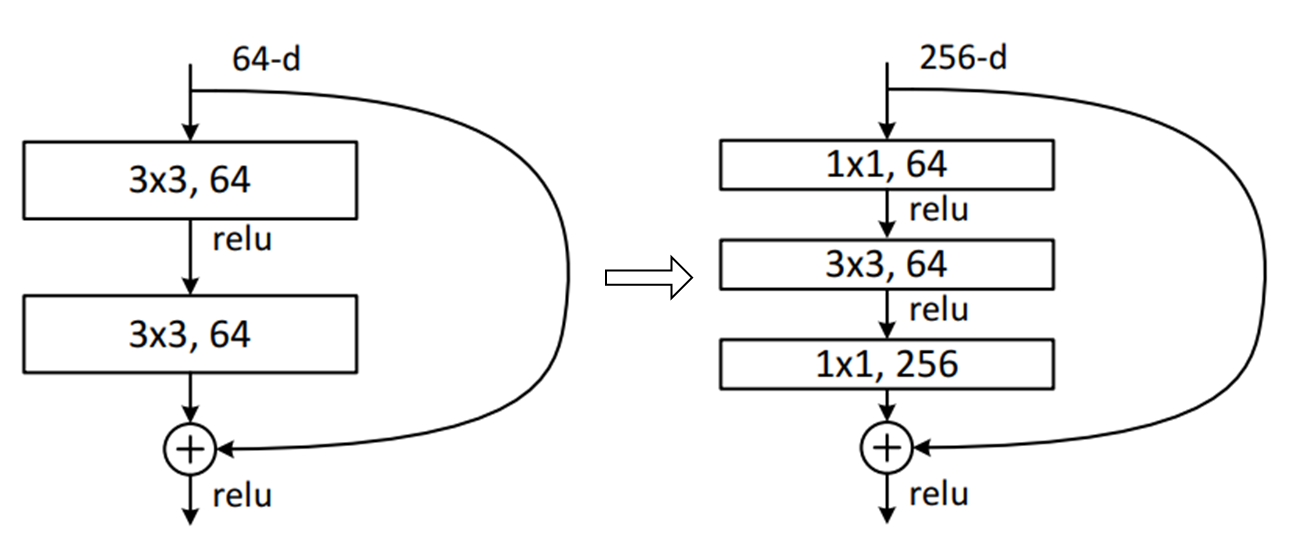

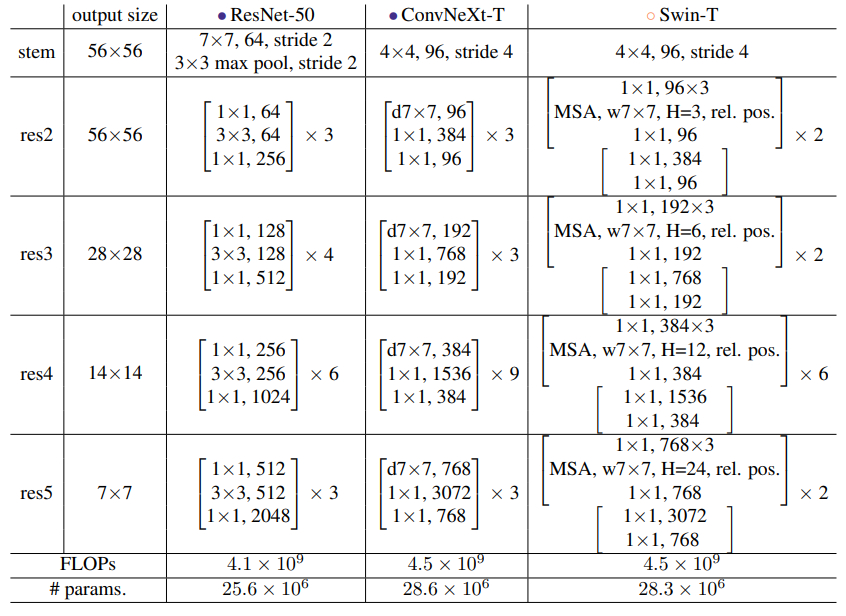

Bottleneck Block

The bottleneck architecture is used in deeper versions of ResNet to improve computational efficiency while maintaining or increasing the network's representational power. The bottleneck block consists of three layers in sequence: $1 \times 1$, $3 \times 3$, and $1 \times 1$ convolutions. The first $1 \times 1$ convolution reduces the number of channels, the $3 \times 3$ convolution operates on the reduced representation, and the second $1 \times 1$ convolution increases the number of channels back to the original.
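
A rough weight count shows why the bottleneck is cheaper than two plain $3 \times 3$ convolutions at the same width. The channel counts (256 outer, 64 inner) are assumed for illustration, and `conv_params` is a hypothetical helper that ignores biases and normalization parameters.

```python
def conv_params(k, c_in, c_out):
    """Number of weights in a k x k convolution (no bias)."""
    return k * k * c_in * c_out

C, C_MID = 256, 64  # assumed outer and reduced channel counts

# Two 3x3 convolutions operating at full width.
basic = 2 * conv_params(3, C, C)

# 1x1 reduce -> 3x3 at reduced width -> 1x1 restore.
bottleneck = (
    conv_params(1, C, C_MID)
    + conv_params(3, C_MID, C_MID)
    + conv_params(1, C_MID, C)
)

print(basic, bottleneck)  # 1179648 69632
```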

Architecture Variants

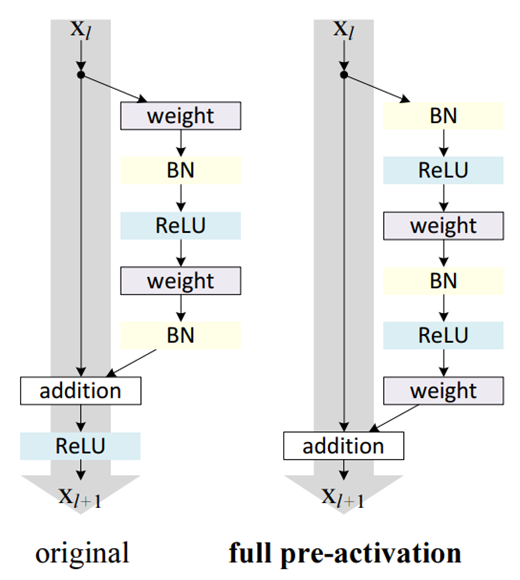

Full Pre-Activation

Full pre-activation is an improvement to the original ResNet architecture. This modification aims to improve the flow of information through the network and make training easier. In full pre-activation, the order of operations in each residual block is changed to move the batch normalization and activation functions before the convolutions.

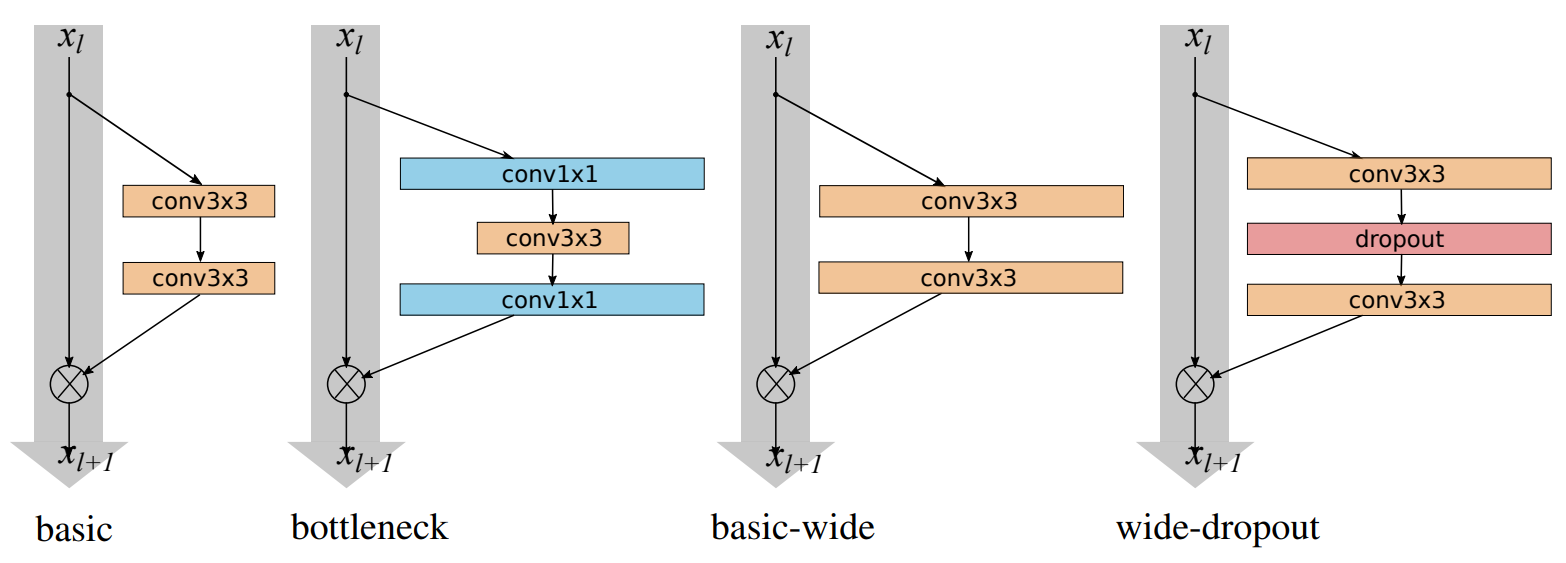

WideResNet

WideResNet increases the number of channels in the residual blocks rather than increasing the network's depth.

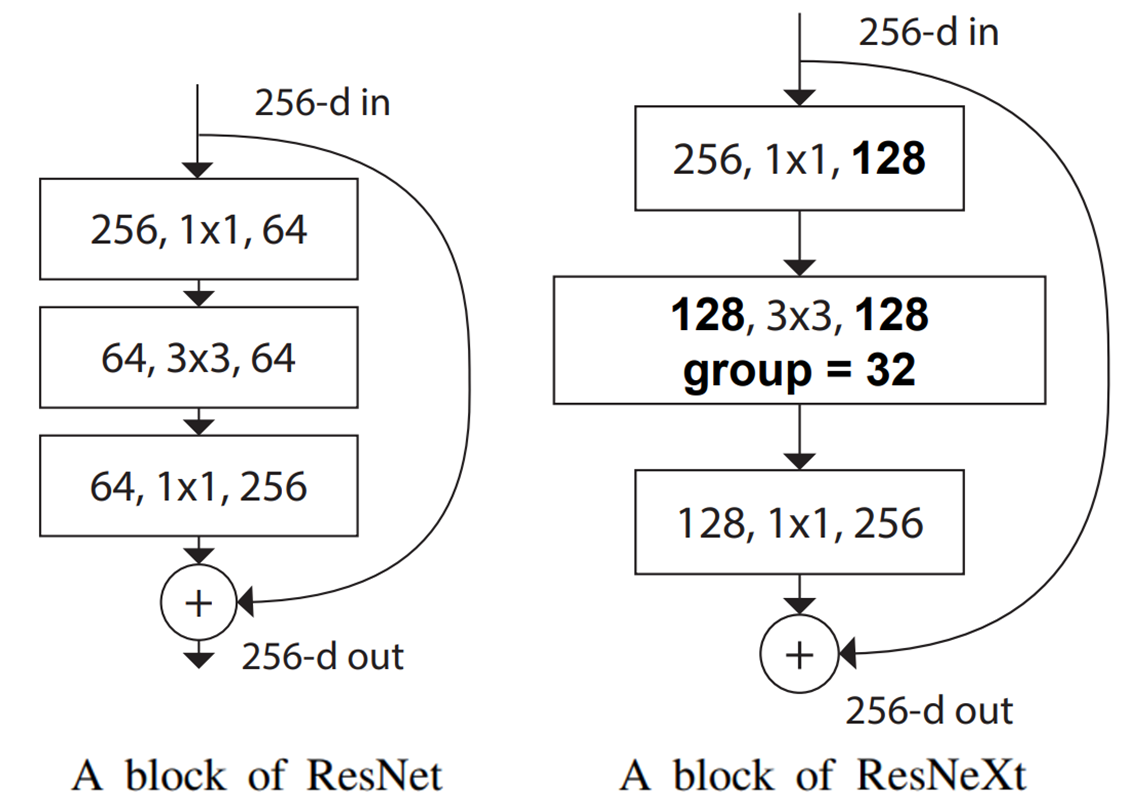

ResNeXt

The ResNeXt model replaces the $3 \times 3$ convolution in ResNet's residual block with a grouped convolution. ResNeXt achieves better performance than ResNet with the same number of parameters, thanks to its more efficient use of model capacity through the grouped convolution.
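
The effect of grouping on the weight count can be sketched as follows. A grouped convolution splits the channels into independent groups, so each filter only sees the channels of its own group. The channel count of 128 and the 32 groups are assumed values, and `grouped_conv_params` is a hypothetical helper.

```python
def grouped_conv_params(k, c_in, c_out, groups):
    """Number of weights in a k x k grouped convolution (no bias)."""
    if c_in % groups or c_out % groups:
        raise ValueError("channel counts must be divisible by groups")
    return k * k * (c_in // groups) * (c_out // groups) * groups

dense = grouped_conv_params(3, 128, 128, groups=1)
grouped = grouped_conv_params(3, 128, 128, groups=32)

print(dense, grouped, dense // grouped)  # 147456 4608 32
```

Because the grouped layer is cheaper by a factor equal to the number of groups, the saved budget can be spent on a wider layer, which is how a parameter count comparable to ResNet can be kept.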

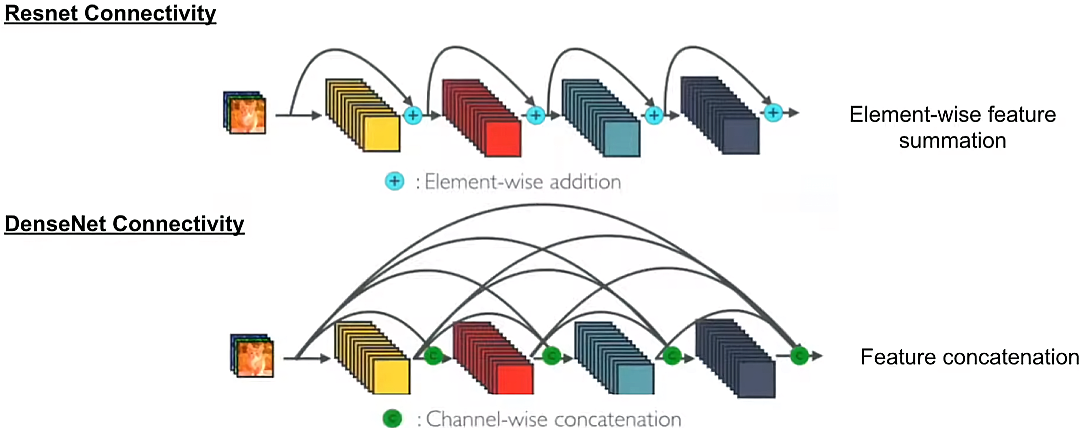

DenseNet

Definition

DenseNet is a deep Convolutional Neural Network architecture.

Architecture

In DenseNet, each layer's input consists of the concatenated feature maps from all preceding layers, not just the immediately previous layer. This allows significant feature reuse and lets the network learn more compact representations. The dense connections also facilitate better gradient flow during backpropagation, making it easier to train deeper networks. The layers inside a dense block adopt the full pre-activation structure.
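
Because inputs are concatenated rather than summed, the channel count seen by each layer grows linearly with depth. The sketch below assumes every layer adds a fixed number of new feature maps (usually called the growth rate); the specific numbers and the function name are illustrative.

```python
def dense_block_input_channels(c0, growth_rate, num_layers):
    """Input channel count for each layer of a dense block.

    Layer i receives the block input (c0 channels) concatenated with the
    outputs of the i preceding layers (growth_rate channels each).
    """
    return [c0 + growth_rate * i for i in range(num_layers)]

channels = dense_block_input_channels(c0=64, growth_rate=32, num_layers=6)
block_output = 64 + 32 * 6  # block input plus the outputs of all six layers

print(channels)      # [64, 96, 128, 160, 192, 224]
print(block_output)  # 256
```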

SENet

Definition

SENet is a deep Convolutional Neural Network architecture that explicitly models the interdependencies between channels.

Architecture

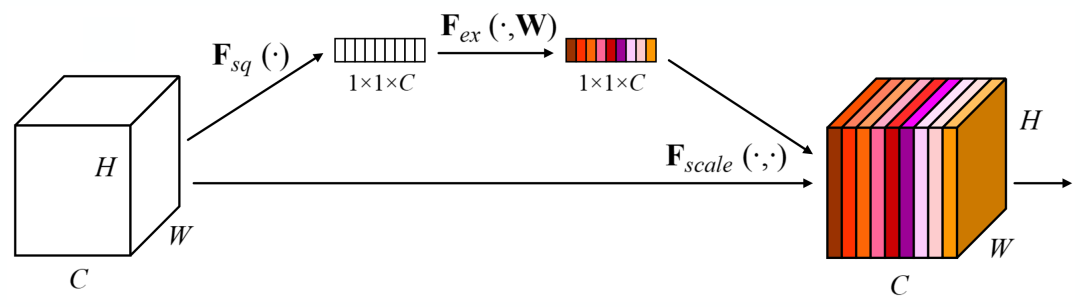

SE Block

The SE block consists of two operations: squeeze and excitation. The original feature maps are rescaled using the channel-wise weights produced by the excitation operation. The SE block can be integrated into various existing architectures, not just used as a standalone network.

Squeeze Operation

The squeeze operation aggregates feature maps across spatial dimensions to produce a channel descriptor. It is typically done using Global Average Pooling. For a given feature map of size $H \times W \times C$, the squeeze operation produces a $1 \times 1 \times C$ vector.

Excitation Operation

The excitation operation takes the output of the squeeze operation and produces a set of per-channel modulation weights. It is typically implemented using two fully connected layers with a non-linearity in between:

$$s = \sigma\left(W_2\, \mathrm{ReLU}(W_1 z)\right)$$

where $z$ is the output of the squeeze operation, $\sigma$ is the sigmoid function, and $W_1$ and $W_2$ are learnable parameters.
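
The squeeze, excitation, and rescaling steps can be sketched in plain Python on nested lists indexed as `[channel][row][column]`. This is a toy illustration under assumed weights, with ReLU as the in-between non-linearity and no bias terms; all function names are hypothetical.

```python
import math

def squeeze(feature_map):
    """Global average pooling: one scalar per channel."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feature_map]

def matvec(matrix, vector):
    """Matrix-vector product on nested lists."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]

def excite(z, w1, w2):
    """Per-channel weights: sigmoid(W2 * relu(W1 * z))."""
    hidden = [max(0.0, h) for h in matvec(w1, z)]
    return [1.0 / (1.0 + math.exp(-a)) for a in matvec(w2, hidden)]

def se_block(feature_map, w1, w2):
    """Rescale every channel by its excitation weight."""
    s = excite(squeeze(feature_map), w1, w2)
    return [[[s_c * v for v in row] for row in ch] for s_c, ch in zip(s, feature_map)]

# Toy input: 2 channels of size 2x2, reduced to 1 hidden unit (assumed weights).
fmap = [[[1.0, 3.0], [5.0, 7.0]], [[2.0, 2.0], [2.0, 2.0]]]
w1 = [[0.5, 0.5]]      # shape 1 x 2: reduces 2 channels to 1 hidden unit
w2 = [[1.0], [-1.0]]   # shape 2 x 1: expands back to 2 channels

z = squeeze(fmap)
weights = excite(z, w1, w2)
out = se_block(fmap, w1, w2)
print(z, weights)
```

The output has the same shape as the input; only the per-channel scale changes, which is why the block can be dropped into existing architectures.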

MobileNet

Definition

MobileNet is a lightweight deep Convolutional Neural Network architecture.

Architecture

MobileNet V1

MobileNet v1 introduced depthwise separable convolutions, which consist of two operations: a depthwise convolution and a pointwise convolution. This significantly reduces computational cost and model size while largely maintaining model performance.

Inspired by ResNeXt, the depthwise convolution applies a single filter to each input channel separately, so it aggregates spatial information only. The pointwise convolution uses $1 \times 1$ convolutions to combine the outputs from the depthwise step, so it mixes information across channels.
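
The saving can be estimated by counting multiplications for a standard convolution versus the depthwise plus pointwise pair. The feature-map size, kernel size, and channel counts below are assumed values, and both helper functions are hypothetical.

```python
def standard_conv_mults(h, w, k, c_in, c_out):
    """Multiplications for a standard k x k convolution on an h x w output."""
    return h * w * k * k * c_in * c_out

def depthwise_separable_mults(h, w, k, c_in, c_out):
    """Multiplications for a depthwise k x k step plus a pointwise 1 x 1 step."""
    depthwise = h * w * k * k * c_in   # one k x k filter per input channel
    pointwise = h * w * c_in * c_out   # 1 x 1 convolution across channels
    return depthwise + pointwise

H = W = 14
K, C_IN, C_OUT = 3, 256, 256  # assumed sizes

standard = standard_conv_mults(H, W, K, C_IN, C_OUT)
separable = depthwise_separable_mults(H, W, K, C_IN, C_OUT)

print(standard, separable)
print(f"ratio: {separable / standard:.4f}")
```

The ratio works out to $\frac{1}{C_{out}} + \frac{1}{k^2}$, so with a $3 \times 3$ kernel the separable version costs a little over one ninth of the standard convolution.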

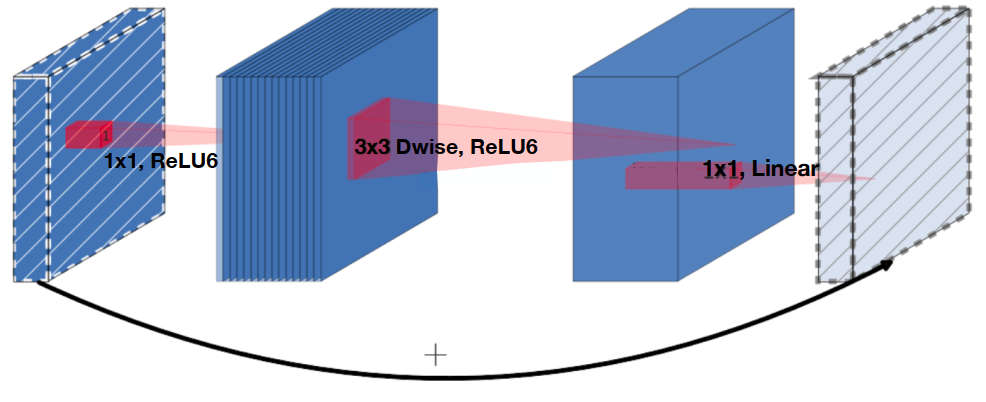

MobileNet V2

MobileNet v2 introduced the inverted residual block, which consists of three layers: an expansion layer, a depthwise convolution, and a projection layer. The expansion layer expands the input from $C$ channels to a higher dimension $tC$. The projection layer reduces the channel size back from $tC$, where $t > 1$ is the expansion factor. Some of the ReLU activation functions are replaced with alternatives (ReLU6, or a linear activation in the narrow layers) to prevent information loss.

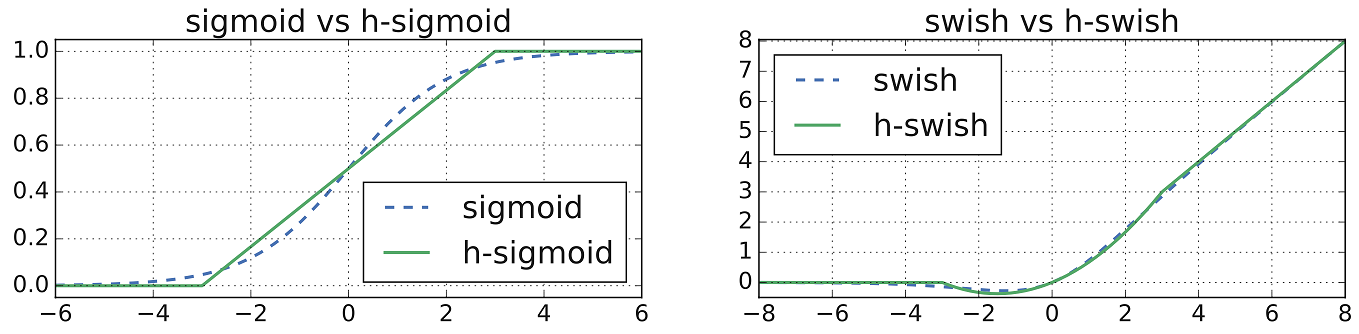

MobileNet V3

MobileNet v3 added an SE block inside the inverted residual block.

The sigmoid functions used in the SE block are replaced with the hard sigmoid function, which is computationally lighter, and the ReLU used in MobileNet v2 is replaced with the hard swish activation function.
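
A minimal sketch of the two activations, assuming the piecewise-linear forms based on ReLU6: hard sigmoid as $\mathrm{ReLU6}(x + 3) / 6$ and hard swish as $x \cdot \mathrm{ReLU6}(x + 3) / 6$. The smooth sigmoid is included only for comparison.

```python
import math

def relu6(x):
    """ReLU clipped at 6."""
    return min(max(x, 0.0), 6.0)

def hard_sigmoid(x):
    """Piecewise-linear approximation of the sigmoid."""
    return relu6(x + 3.0) / 6.0

def hard_swish(x):
    """x scaled by the hard sigmoid; approximates x * sigmoid(x)."""
    return x * hard_sigmoid(x)

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

for x in (-4.0, -1.0, 0.0, 1.0, 4.0):
    print(f"{x:5.1f}  sigmoid={sigmoid(x):.3f}  hard_sigmoid={hard_sigmoid(x):.3f}  "
          f"hard_swish={hard_swish(x):.3f}")
```

The hard variants avoid the exponential entirely, which is the source of the computational saving.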

The model architecture is optimized using neural architecture search (NAS), an AutoML technique.

EfficientNet

Definition

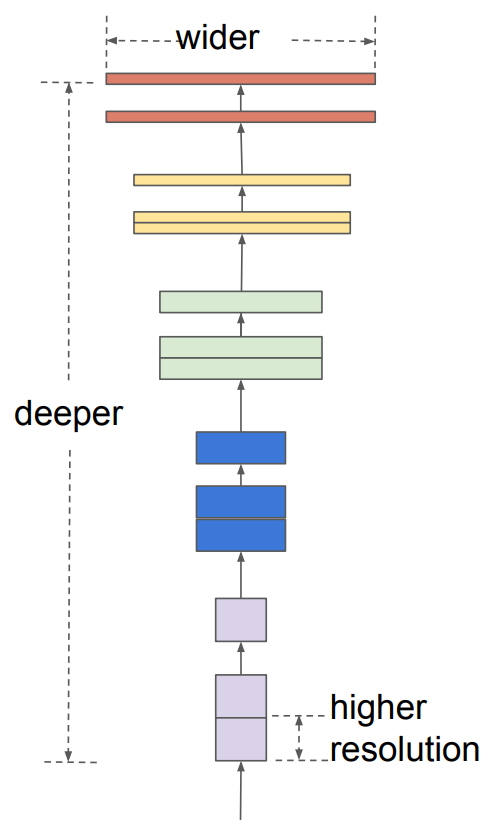

EfficientNet is a deep Convolutional Neural Network architecture. It proposes a method for scaling CNNs to achieve better performance while maintaining efficiency.

Architecture

ResNet and MobileNet are considered as baseline models for EfficientNet.

Compound Scaling

EfficientNet introduces a compound scaling method that uniformly scales network width, depth, and resolution using a compound coefficient $\phi$.

- Perform a grid search over the depth $\alpha$, the width $\beta$, and the resolution $\gamma$ for a fixed $\phi$.

- Control the model size by changing $\phi$, for the fixed $\alpha$, $\beta$, and $\gamma$.
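
The two steps above can be sketched as follows, assuming the usual formulation in which depth, width, and resolution are multiplied by $\alpha^\phi$, $\beta^\phi$, and $\gamma^\phi$ respectively. The default base values below are the ones commonly quoted for EfficientNet and should be treated as assumptions here, as should the function names.

```python
def compound_scale(phi, alpha=1.2, beta=1.1, gamma=1.15):
    """Return (depth, width, resolution) multipliers for compound coefficient phi."""
    return alpha ** phi, beta ** phi, gamma ** phi

def flops_multiplier(phi, alpha=1.2, beta=1.1, gamma=1.15):
    """Approximate FLOPs growth: linear in depth, quadratic in width and resolution."""
    depth, width, resolution = compound_scale(phi, alpha, beta, gamma)
    return depth * width ** 2 * resolution ** 2

for phi in range(4):
    d, w, r = compound_scale(phi)
    print(f"phi={phi}: depth x{d:.2f}, width x{w:.2f}, resolution x{r:.2f}, "
          f"FLOPs x{flops_multiplier(phi):.2f}")
```

With these assumed base values, $\alpha \cdot \beta^2 \cdot \gamma^2 \approx 1.92$, so each increment of $\phi$ roughly doubles the compute.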

ConvNeXt

Definition

ConvNeXt is a deep Convolutional Neural Network architecture. It was designed to bridge the gap between CNNs and Vision Transformers (ViT) by incorporating some of the design principles from transformers into a purely convolutional model.

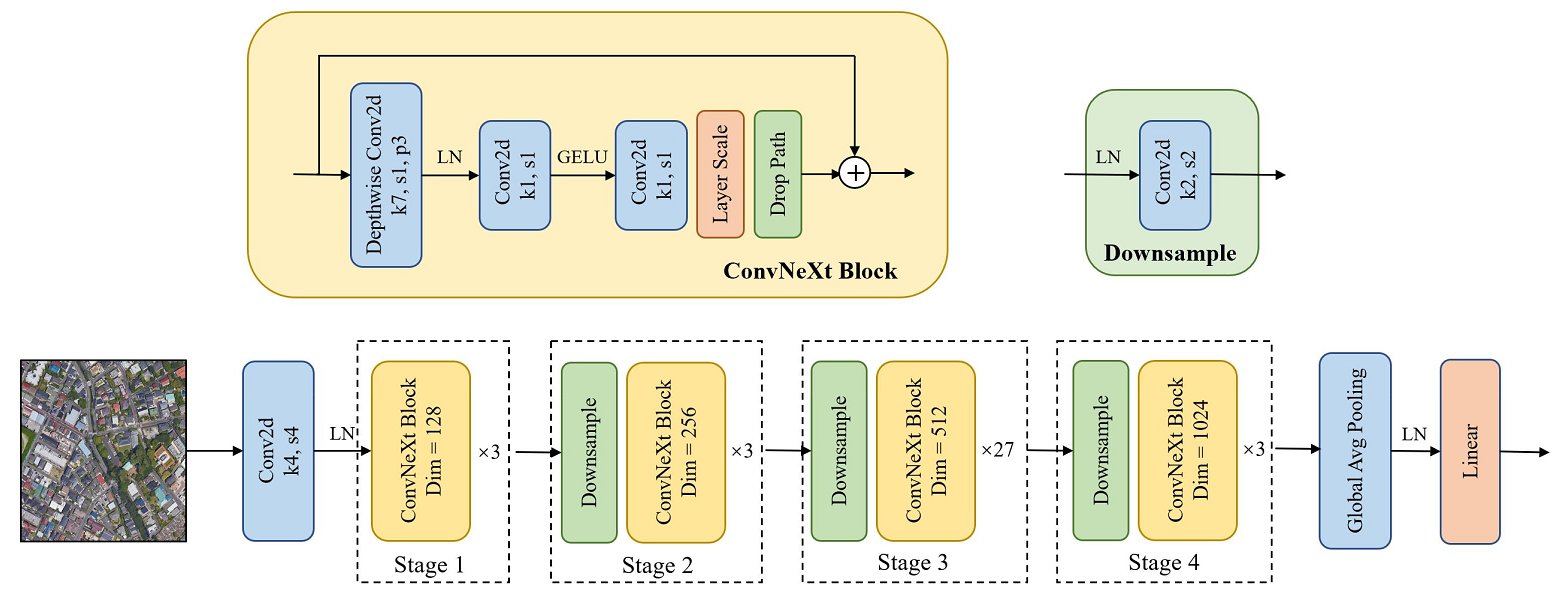

Architecture

Macro Design

The overall structure is similar to ResNet, with four stages of processing. Each stage consists of multiple blocks, and the spatial resolution is downsampled between the stages.

Stem Layer

Instead of the traditional $7 \times 7$ convolution with stride $2$, ConvNeXt uses a $4 \times 4$ convolution with stride $4$ (non-overlapping) for initial downsampling. It mimics the patchify stem used in ViT.
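
The downsampling of the two stems can be compared with the standard convolution output-size formula. The $224 \times 224$ input, the padding values, and the max-pooling layer that follows the ResNet convolution are assumptions based on the usual ResNet configuration, not details stated in these notes.

```python
def conv_output_size(size, kernel, stride, padding=0):
    """Spatial output size of a convolution or pooling layer along one dimension."""
    return (size + 2 * padding - kernel) // stride + 1

INPUT = 224  # assumed input resolution

# ResNet-style stem: 7x7 conv, stride 2, then (assumed) 3x3 max pool, stride 2.
resnet_conv = conv_output_size(INPUT, kernel=7, stride=2, padding=3)
resnet_stem = conv_output_size(resnet_conv, kernel=3, stride=2, padding=1)

# ConvNeXt-style stem: 4x4 conv, stride 4, so patches do not overlap.
convnext_stem = conv_output_size(INPUT, kernel=4, stride=4)

print(resnet_conv, resnet_stem, convnext_stem)  # 112 56 56
```

Under these assumptions both stems reduce the resolution by a factor of 4, but the ConvNeXt stem does it in a single layer whose kernel size equals its stride.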

ConvNeXt Block

ConvNeXt block is the basic building block of the network. It is a modernized version of the ResNet block. It consists of the following layers in sequence:

- Depthwise convolution with a large $7 \times 7$ kernel, inspired by ResNeXt, which enlarges the receptive field and improves performance

- Layer Norm

- Pointwise convolution to increase the channel dimension (together with the depthwise step, this forms a depthwise separable convolution)

- GELU activation function

- Pointwise convolution to reduce the channel dimension

- Skip connection