Definition

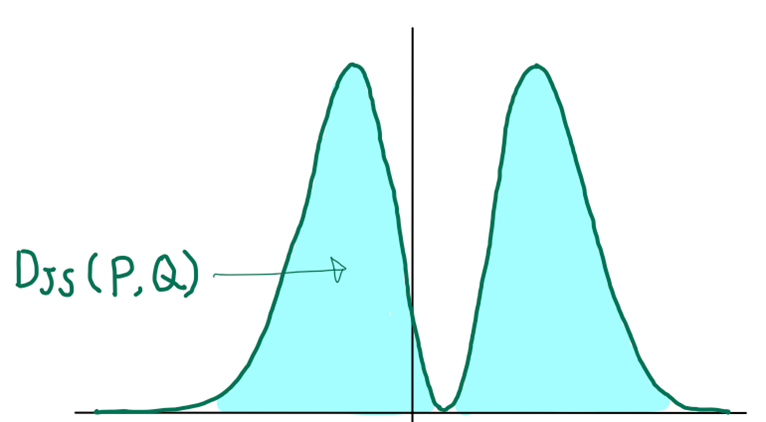

The Jensen–Shannon divergence measures the similarity between two Probability Distributions and is defined in terms of the KL-Divergence.

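Assume that two Probability Distributions $P$ and $Q$ are given. Then the Jensen–Shannon divergence between $P$ and $Q$ is defined as

$$
\operatorname{JSD}(P \,\|\, Q) = \frac{1}{2} D_{\mathrm{KL}}(P \,\|\, M) + \frac{1}{2} D_{\mathrm{KL}}(Q \,\|\, M), \qquad M = \frac{1}{2}(P + Q),
$$

where $D_{\mathrm{KL}}$ is the KL-Divergence.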
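As a minimal sketch of the definition, the snippet below computes the Jensen–Shannon divergence for two discrete distributions given as lists of probabilities over the same outcomes. The function names `kl_divergence` and `js_divergence` are illustrative choices, not taken from any library, and the natural logarithm is assumed, so the result is in nats.

```python
import math


def kl_divergence(p, q):
    """KL(p || q) in nats for discrete distributions on the same outcomes."""
    total = 0.0
    for pi, qi in zip(p, q):
        if pi > 0.0:
            if qi == 0.0:
                # p puts mass where q has none: the divergence is infinite.
                return math.inf
            total += pi * math.log(pi / qi)
    return total


def js_divergence(p, q):
    """Jensen-Shannon divergence: average KL of p and q to their mixture m."""
    m = [(pi + qi) / 2.0 for pi, qi in zip(p, q)]
    return 0.5 * kl_divergence(p, m) + 0.5 * kl_divergence(q, m)


if __name__ == "__main__":
    p = [0.5, 0.3, 0.2]
    q = [0.2, 0.3, 0.5]
    print("JSD(p || q) =", js_divergence(p, q))
    print("JSD(q || p) =", js_divergence(q, p))
```

Because the mixture has positive mass wherever either input does, the two KL terms are always finite, so the Jensen–Shannon divergence stays bounded (by $\ln 2$ in nats) even when the plain KL-Divergence between the two inputs is infinite. It is also symmetric in its arguments.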

Facts

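Let $(P_n)_{n \in \mathbb{N}}$ be a sequence of distributions and $P$ a distribution, with every divergence or distance below taken between $P_n$ and $P$. Then,

- The convergence of the KL-Divergence to zero implies that the JS-Divergence also converges to zero.
- The convergence of the JS-Divergence to zero is equivalent to the convergence of the Total Variation Distance to zero.
- The convergence of the Total Variation Distance to zero implies that the Wasserstein Distance also converges to zero.
- The convergence of the Wasserstein Distance to zero is equivalent to the Convergence in Distribution of the sequence.

The last two statements assume that the distributions live on a compact metric space; on unbounded spaces, Wasserstein convergence additionally requires convergence of moments.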
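The chain of implications above can be illustrated numerically. The sketch below is an illustration, not a proof. It assumes a hypothetical target distribution on the three points 0, 1, 2 and a hypothetical sequence that mixes the target with the uniform distribution using weight $1/n$. All function names are illustrative, and the Wasserstein-1 formula used (sum of absolute CDF differences) is only valid for a one-dimensional support with unit spacing.

```python
import math


def kl(p, q):
    """KL(p || q) in nats for discrete distributions on the same outcomes."""
    total = 0.0
    for pi, qi in zip(p, q):
        if pi > 0.0:
            if qi == 0.0:
                return math.inf
            total += pi * math.log(pi / qi)
    return total


def js(p, q):
    """Jensen-Shannon divergence via the mixture m = (p + q) / 2."""
    m = [(pi + qi) / 2.0 for pi, qi in zip(p, q)]
    return 0.5 * kl(p, m) + 0.5 * kl(q, m)


def tv(p, q):
    """Total variation distance: half the L1 distance between the vectors."""
    return 0.5 * sum(abs(pi - qi) for pi, qi in zip(p, q))


def w1(p, q):
    """Wasserstein-1 distance, assuming support points 0, 1, 2, ... (unit spacing)."""
    total = 0.0
    cdf_gap = 0.0
    for pi, qi in zip(p, q):
        cdf_gap += pi - qi      # running difference of the two CDFs
        total += abs(cdf_gap)   # each unit-length gap contributes |F_p - F_q|
    return total


def illustrate(ns=(1, 10, 100, 1000)):
    """Return (n, KL, JS, TV, W1) between P_n and the target for each n."""
    target = [0.5, 0.3, 0.2]    # hypothetical limit distribution P
    uniform = [1.0 / 3.0] * 3
    rows = []
    for n in ns:
        # P_n = (1 - 1/n) * P + (1/n) * Uniform, which approaches P as n grows.
        p_n = [(1 - 1 / n) * t + (1 / n) * u for t, u in zip(target, uniform)]
        rows.append((n, kl(p_n, target), js(p_n, target),
                     tv(p_n, target), w1(p_n, target)))
    return rows


if __name__ == "__main__":
    print(f"{'n':>6} {'KL':>12} {'JS':>12} {'TV':>12} {'W1':>12}")
    for n, d_kl, d_js, d_tv, d_w1 in illustrate():
        print(f"{n:>6} {d_kl:12.3e} {d_js:12.3e} {d_tv:12.3e} {d_w1:12.3e}")
```

In this example all four quantities shrink together as $n$ grows. The converse directions can fail: a sequence of point masses at $1/n$ converges to the point mass at $0$ in Wasserstein Distance, while its Total Variation Distance to the limit stays at 1.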