Definition

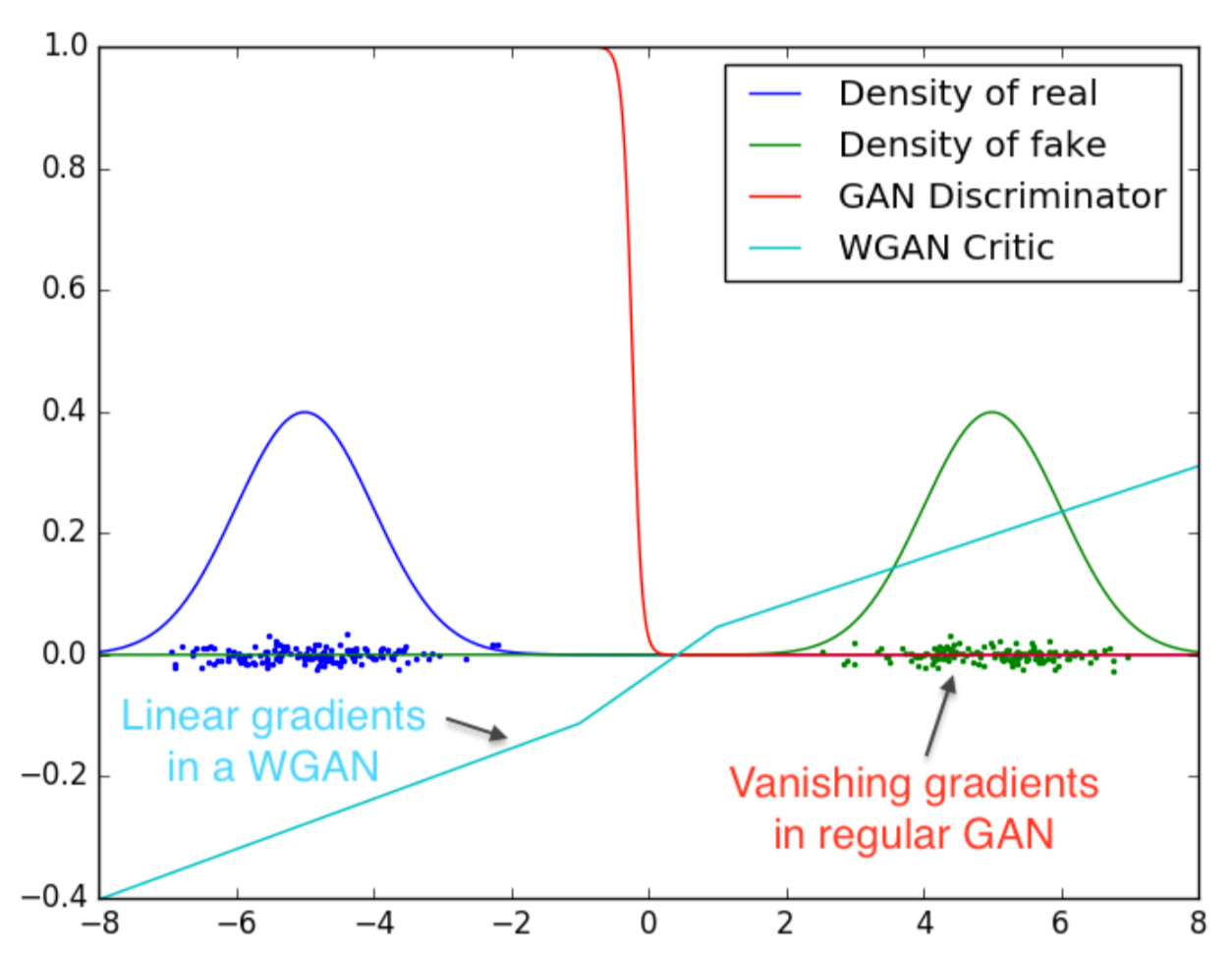

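Wasserstein GAN (WGAN) is a variant of GAN that uses the Wasserstein distance (also called the Earth Mover's distance) instead of the Jensen-Shannon divergence used in the traditional GAN. The Jensen-Shannon divergence saturates when the real and generated distributions barely overlap, which leaves the generator with vanishing gradients. The Wasserstein distance stays continuous in that regime and provides a useful gradient almost everywhere.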

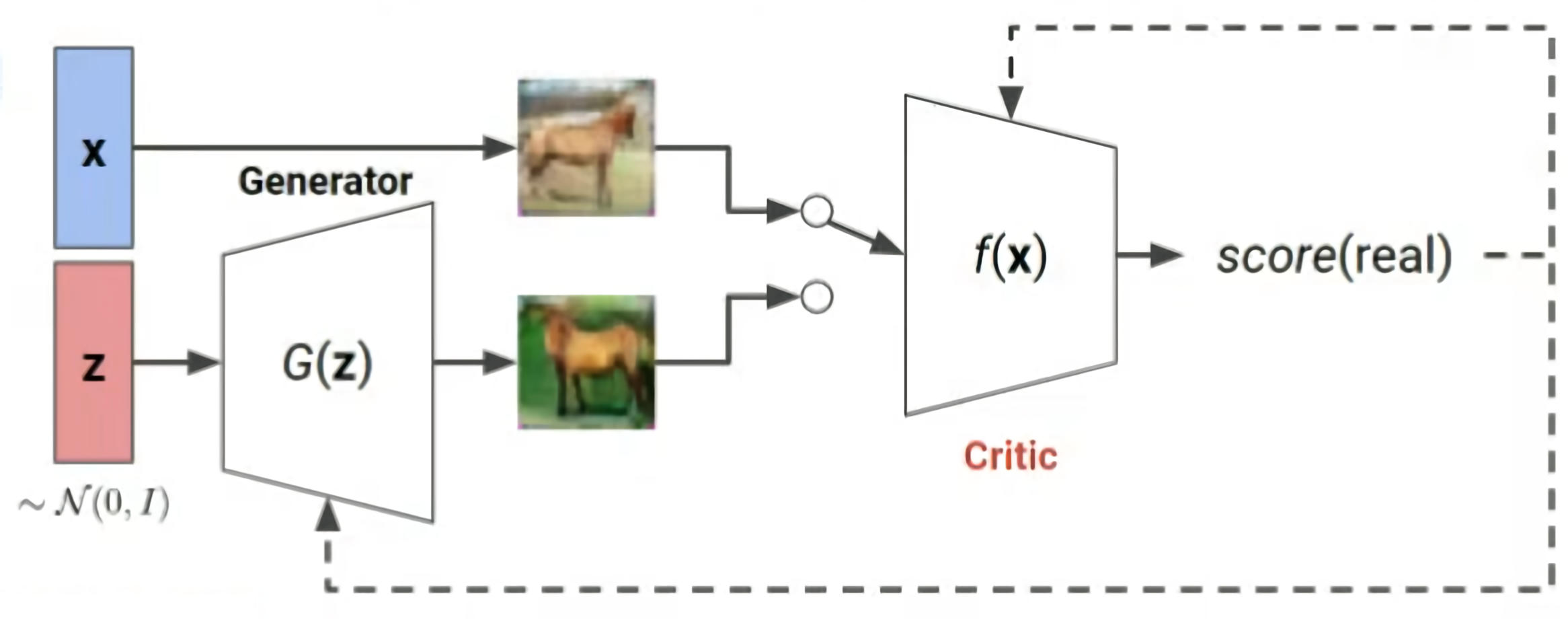
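To make the contrast concrete, the following is a minimal sketch that compares the two measures on a toy case: two point masses on a 1-D grid, one fixed and one shifted. The grid, the helper names, and the use of the CDF formula for the 1-D Wasserstein distance are illustrative assumptions, not part of any WGAN implementation.

```python
import math

def wasserstein_1d(p, q, dx):
    """W1 between two histograms on the same evenly spaced 1-D grid.

    In one dimension W1 equals the integral of |CDF_p - CDF_q|.
    """
    cdf_gap, total = 0.0, 0.0
    for pi, qi in zip(p, q):
        cdf_gap += pi - qi           # running difference of the two CDFs
        total += abs(cdf_gap) * dx
    return total

def js_divergence(p, q):
    """Jensen-Shannon divergence (natural log) between two histograms."""
    def kl(a, b):
        return sum(ai * math.log(ai / bi) for ai, bi in zip(a, b) if ai > 0)
    mix = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    return 0.5 * kl(p, mix) + 0.5 * kl(q, mix)

def point_mass(index, size):
    """Histogram with all probability on a single grid cell."""
    return [1.0 if i == index else 0.0 for i in range(size)]

grid_size, dx = 101, 0.1             # hypothetical grid covering [0, 10]
real = point_mass(0, grid_size)
for shift in (10, 20, 40, 80):
    fake = point_mass(shift, grid_size)
    print(shift * dx, wasserstein_1d(real, fake, dx), js_divergence(real, fake))
```

In this toy setting the Wasserstein distance grows in proportion to the shift, while the Jensen-Shannon divergence stays at $\log 2$ for every non-overlapping shift, so only the former tells the generator which direction to move.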

Architecture

In WGAN, the discriminator of the traditional GAN is replaced by a critic that is trained to approximate the Wasserstein distance between the real and generated distributions. The critic outputs an unbounded real-valued score instead of a probability.

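Since the Wasserstein distance is intractable to compute directly, the cost function is simplified using the Kantorovich-Rubinstein duality, which requires the critic to be 1-Lipschitz continuous. To approximately satisfy this condition, the weights of the critic are clipped to a fixed range $[-c, c]$, where $c$ is the clipping parameter.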
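As a rough illustration of why clipping helps, here is a minimal sketch with a one-layer linear critic. The function names, the example weights, and the clipping value `c = 0.01` are assumptions made for the example. For a linear critic the Lipschitz constant is the Euclidean norm of the weight vector, so clamping each weight bounds that constant, although not necessarily at exactly 1.

```python
import math

def clip_weights(weights, c):
    """Clamp every weight into [-c, c], as done after each critic update."""
    return [max(-c, min(c, w)) for w in weights]

def critic_score(weights, x):
    """Linear critic: an unbounded real-valued score, no sigmoid."""
    return sum(w * xi for w, xi in zip(weights, x))

c = 0.01                                   # assumed clipping parameter
weights = clip_weights([0.5, -2.0, 0.003], c)

# For a linear critic, |D(x) - D(y)| <= ||w||_2 * ||x - y||_2.
lipschitz_bound = math.sqrt(sum(w * w for w in weights))

x, y = [1.0, 2.0, 3.0], [0.0, -1.0, 5.0]
gap = abs(critic_score(weights, x) - critic_score(weights, y))
distance = math.dist(x, y)
print(weights, gap, lipschitz_bound * distance)
```

Clipping keeps the critic inside some Lipschitz family rather than the exact 1-Lipschitz one, which only rescales the estimated distance by a constant factor.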

Objective Function

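The objective function of WGAN is defined as

$$\min_G \max_{\|D\|_L \le 1} \; \mathbb{E}_{x \sim p_{data}}[D(x)] - \mathbb{E}_{z \sim p_z}[D(G(z))]$$

where:

- $G$ is the generator

- $D$ is the critic

- $p_{data}$ is the distribution of the input data

- $p_z$ is the distribution of noise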
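In practice the two expectations are estimated from mini-batches of critic scores. The sketch below shows one way to turn those scores into losses to minimize. The function names and the example scores are hypothetical, and the sign convention (the critic minimizes the negated objective) is an assumption that matches common implementations.

```python
def mean(values):
    return sum(values) / len(values)

def critic_loss(real_scores, fake_scores):
    """Negated objective: the critic wants real scores high, fake scores low."""
    return mean(fake_scores) - mean(real_scores)

def generator_loss(fake_scores):
    """The generator wants the critic to score its samples highly."""
    return -mean(fake_scores)

# Hypothetical critic outputs on a batch of real and generated samples.
real_scores = [1.5, 2.0, 2.5]
fake_scores = [-1.0, 0.0, 1.0]

print(critic_loss(real_scores, fake_scores))    # critic's loss on this batch
print(-critic_loss(real_scores, fake_scores))   # batch estimate of the distance
print(generator_loss(fake_scores))
```

The negated critic loss doubles as a running estimate of the Wasserstein distance, which is why it is often plotted as a training-progress signal.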

WGAN-GP

Instead of clipping the weights, WGAN-GP penalizes the critic if the norm of its gradient with respect to its input moves away from the target norm value $1$.

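With the additional gradient penalty term, the WGAN-GP loss is

$$L = L_{critic} + \lambda \, \mathbb{E}_{\hat{x}}\left[\left(\|\nabla_{\hat{x}} D(\hat{x})\|_2 - 1\right)^2\right]$$

where $L_{critic}$ is the critic loss, $D$ is the critic, $\lambda$ is the penalty coefficient, and $\hat{x}$ is sampled uniformly along straight lines between pairs of real and generated samples.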

This enforces the Lipschitz constraint more effectively than weight clipping.
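The penalty can be sketched without a deep-learning framework by swapping automatic differentiation for a central-difference gradient. Everything named here (`numerical_gradient`, `gradient_penalty`, `linear_critic`, the coefficient `lam=10.0`) is an assumption for illustration; real implementations would use autograd on the critic network instead.

```python
import math
import random

def numerical_gradient(critic, x, eps=1e-5):
    """Central-difference gradient of a scalar critic with respect to x."""
    grad = []
    for i in range(len(x)):
        up, down = list(x), list(x)
        up[i] += eps
        down[i] -= eps
        grad.append((critic(up) - critic(down)) / (2 * eps))
    return grad

def gradient_penalty(critic, real_batch, fake_batch, lam=10.0, rng=random):
    """lam * mean((||grad D(x_hat)||_2 - 1)^2) over interpolated points."""
    penalties = []
    for real, fake in zip(real_batch, fake_batch):
        t = rng.random()                       # interpolation coefficient
        x_hat = [t * r + (1 - t) * f for r, f in zip(real, fake)]
        grad = numerical_gradient(critic, x_hat)
        grad_norm = math.sqrt(sum(g * g for g in grad))
        penalties.append((grad_norm - 1.0) ** 2)
    return lam * sum(penalties) / len(penalties)

def linear_critic(weights):
    """Toy critic whose input gradient is exactly its weight vector."""
    return lambda x: sum(w * xi for w, xi in zip(weights, x))

real_batch = [[1.0, 2.0], [0.5, -1.0]]
fake_batch = [[0.0, 0.0], [2.0, 1.0]]
rng = random.Random(0)
print(gradient_penalty(linear_critic([0.6, 0.8]), real_batch, fake_batch, rng=rng))
print(gradient_penalty(linear_critic([3.0, 4.0]), real_batch, fake_batch, rng=rng))
```

A critic whose gradient norm is already 1 (weights `[0.6, 0.8]`) pays almost nothing, while one with norm 5 is penalized heavily, which is the pressure that replaces weight clipping.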

Algorithm

$\alpha$: the learning rate, $c$: the clipping parameter, $m$: the batch size, $n_{critic}$: the number of iterations of the critic per generator iteration. $w_0$: the initial critic parameters. $\theta_0$: the initial generator’s parameters.

While $\theta$ has not converged:

- for $t = 0, \dots, n_{critic}$:

- Sample $\{x^{(i)}\}_{i=1}^{m} \sim p_{data}$, a batch from the real data.

- Sample $\{z^{(i)}\}_{i=1}^{m} \sim p_z$, a batch from the noise distribution.

- Sample