Definition

The activation function of a node in an artificial neural network computes the node's output from the linear combination of its inputs. It introduces non-linearity into the model; without it, a stack of layers would collapse into a single linear map.
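As a minimal sketch of this definition, the snippet below computes a node's output by applying an activation to the weighted sum of its inputs plus a bias. The names `node_output` and `identity`, and the input values, are illustrative assumptions rather than anything from the original note.

```python
import math

def node_output(inputs, weights, bias, activation):
    """Apply `activation` to the linear combination w . x + b."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)

def identity(z):
    """No activation: the node stays purely linear."""
    return z

# Hypothetical inputs, weights, and bias, chosen only for illustration.
x = [1.0, -2.0]
w = [0.5, 0.25]
b = 0.1

linear = node_output(x, w, b, identity)      # the raw linear combination
squashed = node_output(x, w, b, math.tanh)   # the same value passed through tanh
```

Swapping the `activation` argument is the only change needed to try any of the functions listed under Examples.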

Examples

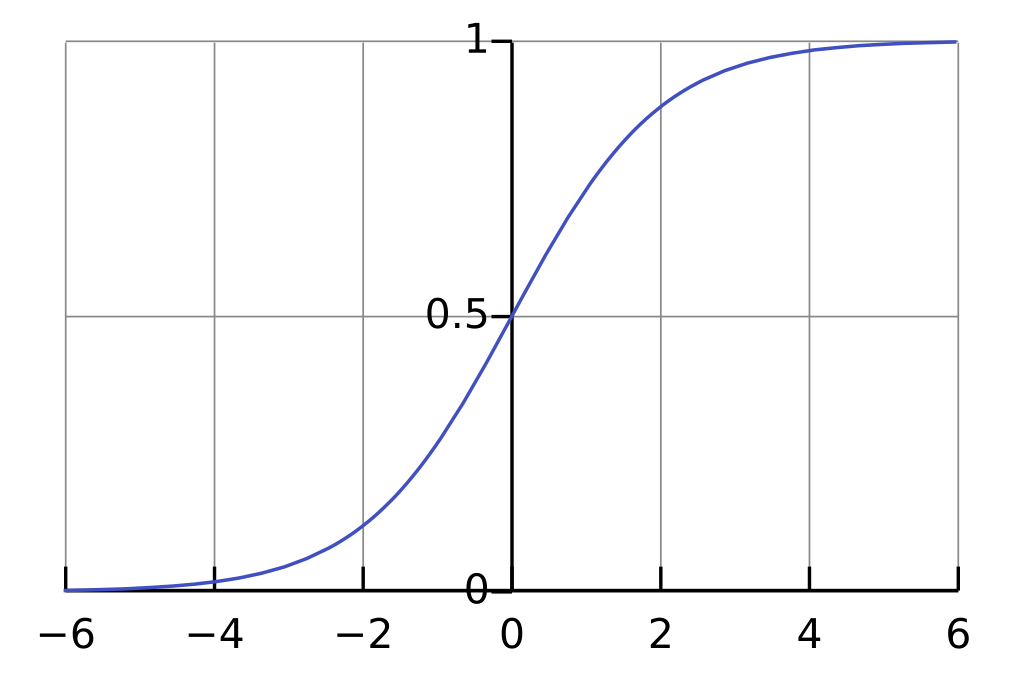

Logistic Function

Definition

The logistic function is the inverse of the logit function:

$$\sigma(x) = \frac{1}{1 + e^{-x}}$$
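The inverse relationship can be checked numerically. This is a small sketch using only the standard library; the function names are illustrative, and `logistic` as written will overflow for very large negative inputs.

```python
import math

def logit(p):
    """Log-odds of a probability p in the open interval (0, 1)."""
    return math.log(p / (1.0 - p))

def logistic(x):
    """Logistic (sigmoid) function, 1 / (1 + exp(-x))."""
    return 1.0 / (1.0 + math.exp(-x))

# Round trip: applying logit and then logistic recovers the probability.
for p in (0.1, 0.5, 0.9):
    assert math.isclose(logistic(logit(p)), p)
```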

Facts

The sigmoid activation function is vulnerable to the vanishing gradient problem. The image of the derivative of the sigmoid function is $(0, 1/4]$. For this reason, each time the gradient passes back through a node with a sigmoid activation function, it is scaled by a factor of at most $1/4$, so it shrinks.
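To make the shrinkage concrete, the sketch below evaluates the sigmoid derivative $\sigma'(x) = \sigma(x)(1 - \sigma(x))$ and bounds the factor contributed by the activations alone along a chain of sigmoid nodes. The depth of 10 is an arbitrary assumption, and the bound deliberately ignores the weights, which also scale the gradient.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def sigmoid_grad(x):
    """Derivative of the sigmoid: sigma(x) * (1 - sigma(x))."""
    s = sigmoid(x)
    return s * (1.0 - s)

# The derivative peaks at x = 0, where it equals 1/4.
peak = sigmoid_grad(0.0)

# Upper bound on the product of activation derivatives after
# backpropagating through `depth` sigmoid nodes in a row.
depth = 10  # arbitrary illustrative depth
bound = peak ** depth
```

With ten layers the bound is already below one millionth, which is why early layers of deep sigmoid networks can learn very slowly.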

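Also, the output of the sigmoid activation function is always positive. If all the inputs to a node are positive, the gradients with respect to that node's weights all have the same sign, either all positive or all negative.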
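The sign behaviour follows from the chain rule: for a node $z = w \cdot x + b$, the gradient for weight $w_i$ is $\partial L / \partial z \cdot x_i$. The sketch below assumes a hypothetical upstream gradient and positive inputs such as sigmoid outputs from a previous layer.

```python
def weight_grads(inputs, upstream):
    """dL/dw_i = dL/dz * x_i for a node z = w . x + b."""
    return [upstream * x for x in inputs]

# All-positive inputs, e.g. sigmoid outputs of the previous layer (illustrative values).
positive_inputs = [0.2, 0.7, 0.9]

grads_up = weight_grads(positive_inputs, upstream=0.5)     # every entry positive
grads_down = weight_grads(positive_inputs, upstream=-0.5)  # every entry negative
```

Because the sign of every weight gradient is set by the single scalar `upstream`, the weights of one node can only move together in the same direction on a given step.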

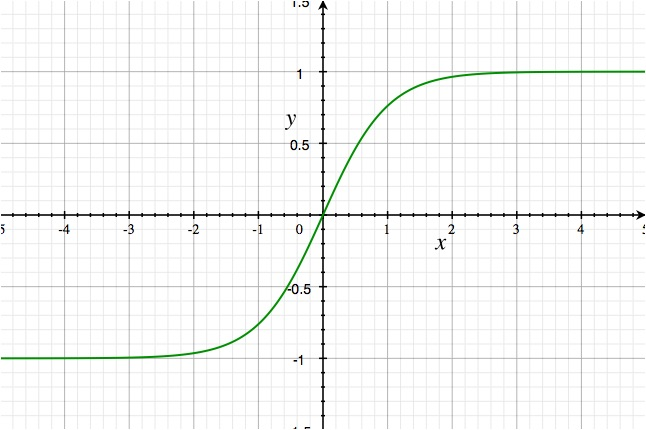

Hyperbolic Tangent Function

Definition

$$\tanh(x) = \frac{e^{x} - e^{-x}}{e^{x} + e^{-x}}$$
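A short sketch of the hyperbolic tangent written out from exponentials, together with its derivative $1 - \tanh^2(x)$ and the identity $\tanh(x) = 2\sigma(2x) - 1$ that ties it to the logistic function. The helper names are illustrative; in practice `math.tanh` is the numerically safer choice.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def tanh_from_exp(x):
    """tanh written directly from its exponential definition."""
    return (math.exp(x) - math.exp(-x)) / (math.exp(x) + math.exp(-x))

def tanh_grad(x):
    """Derivative of tanh: 1 - tanh(x)^2, which lies in (0, 1]."""
    return 1.0 - math.tanh(x) ** 2

# tanh is a rescaled, zero-centred sigmoid.
rescaled = 2.0 * sigmoid(2.0 * 0.7) - 1.0
```

Unlike the sigmoid, the output is centred on zero and the derivative reaches 1 at the origin.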

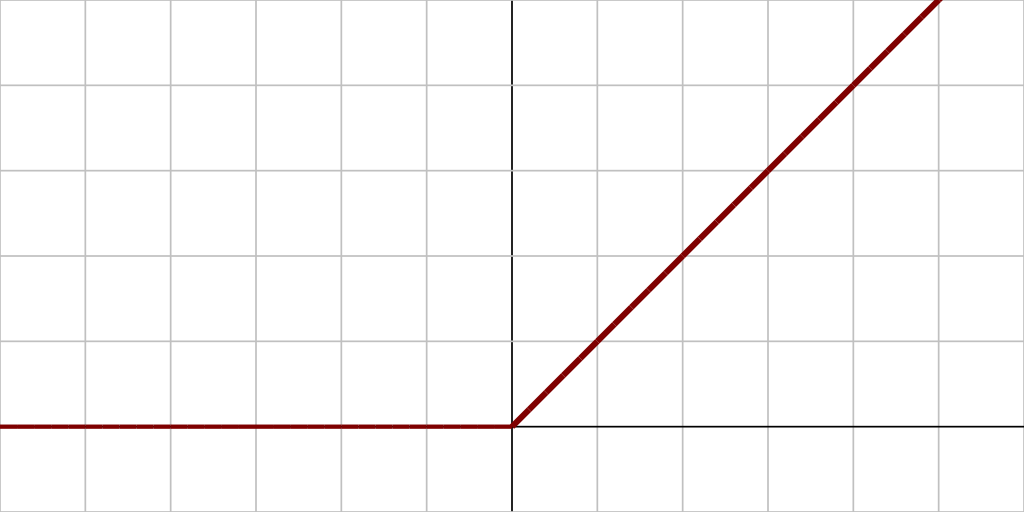

Rectified Linear Unit Function

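Definition

$$\operatorname{ReLU}(x) = \max(0, x)$$

Facts

If a node's pre-activation is negative, its gradient is zero, so the weights feeding it receive no update from that input. A node whose pre-activation is negative for every input is never updated (the dying ReLU problem).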
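The sketch below illustrates how a ReLU node can stop updating. It assumes a hypothetical one-weight node $z = w x$, an upstream gradient fixed at 1, and a plain gradient-descent step; none of these specifics come from the original note.

```python
def relu(x):
    return max(0.0, x)

def relu_grad(x):
    """Derivative of ReLU; using 0 at x = 0 is a common convention."""
    return 1.0 if x > 0 else 0.0

# Hypothetical node z = w * x that starts with a negative weight
# and only ever sees positive inputs.
w, lr = -1.0, 0.1
for x in (0.5, 1.0, 2.0):
    z = w * x          # negative for every input here
    upstream = 1.0     # assumed dL/d(output)
    w -= lr * upstream * relu_grad(z) * x  # the step is always zero
```

After the loop `w` is unchanged, because every pre-activation was negative and the gradient was zero each time.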

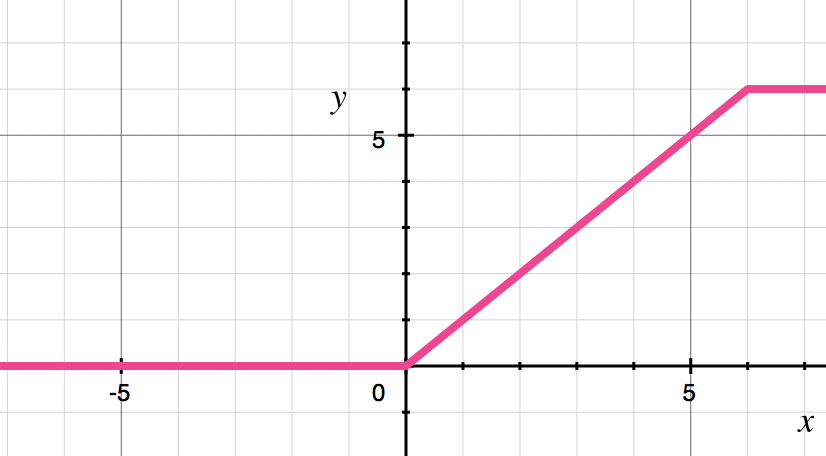

ReLU6

Definition

$$\operatorname{ReLU6}(x) = \min(\max(0, x), 6)$$
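ReLU6 is ReLU with its output capped at 6. A minimal sketch, with an illustrative function name:

```python
def relu6(x):
    """ReLU clipped to the range [0, 6]."""
    return min(max(0.0, x), 6.0)

samples = [relu6(v) for v in (-1.0, 3.0, 10.0)]  # below, inside, and above the range
```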

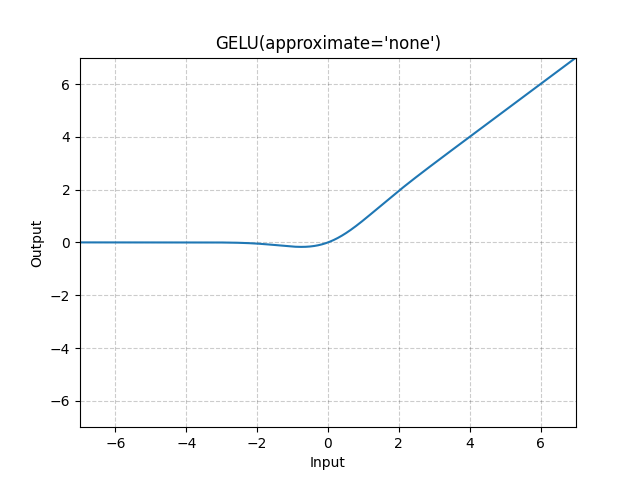

Gaussian-Error Linear Unit

Definition

GELU is a smooth approximation of ReLU.

$$\operatorname{GELU}(x) = x\,\Phi(x)$$

where $\Phi$ is the CDF of the standard normal distribution.
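As a sketch, GELU can be evaluated exactly through the error function, since $\Phi(x) = \tfrac{1}{2}\bigl(1 + \operatorname{erf}(x/\sqrt{2})\bigr)$. The second function is the widely used tanh-based approximation, included only for comparison; the function names are illustrative.

```python
import math

def std_normal_cdf(x):
    """CDF of the standard normal distribution, via the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def gelu(x):
    """Exact GELU: x * Phi(x)."""
    return x * std_normal_cdf(x)

def gelu_tanh(x):
    """Common tanh-based approximation of GELU."""
    inner = math.sqrt(2.0 / math.pi) * (x + 0.044715 * x ** 3)
    return 0.5 * x * (1.0 + math.tanh(inner))
```

For large positive inputs `gelu` approaches the identity and for large negative inputs it approaches zero, which is the sense in which it smooths ReLU.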

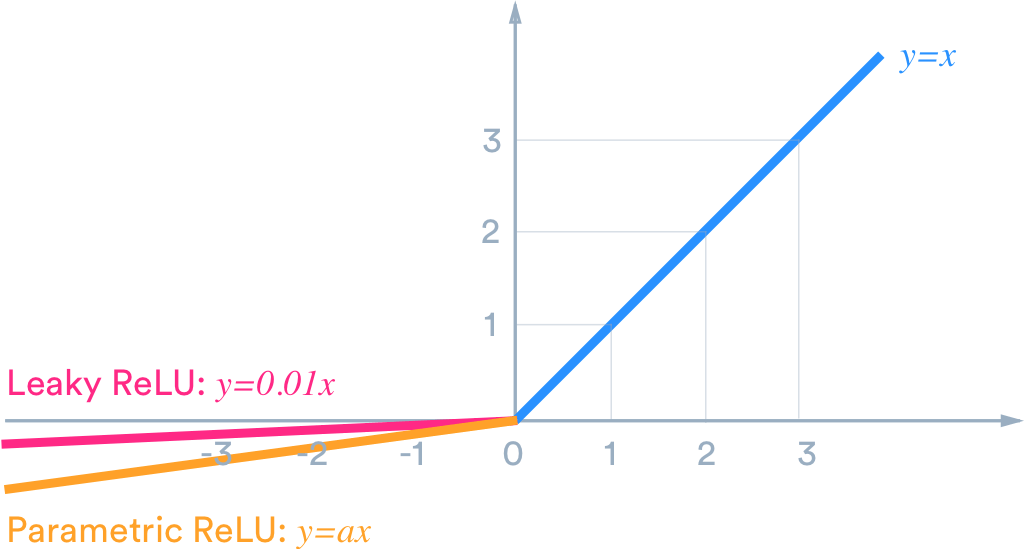

Parametric ReLU

Definition

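$$\operatorname{PReLU}(x) = \begin{cases} x & \text{if } x > 0 \\ \alpha x & \text{if } x \le 0 \end{cases}$$

where $\alpha$ is the slope for negative inputs; in PReLU it is a parameter learned during training.

Facts

If $\alpha$ is instead fixed to a small constant (commonly $0.01$), it is called a Leaky ReLU.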
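A minimal sketch of the parametric form and its leaky special case. The default slope of `0.01` is a common choice, assumed here for illustration, and `prelu_grad_alpha` shows the derivative with respect to the slope that makes learning it possible.

```python
def prelu(x, alpha):
    """Identity for positive inputs, slope `alpha` for non-positive inputs."""
    return x if x > 0 else alpha * x

def prelu_grad_alpha(x):
    """Derivative of PReLU with respect to alpha: 0 for x > 0, else x."""
    return 0.0 if x > 0 else x

def leaky_relu(x, alpha=0.01):
    """PReLU with a fixed small slope (0.01 is an assumed default)."""
    return prelu(x, alpha)
```

Setting `alpha` to 0 recovers plain ReLU, so a non-zero slope is what keeps a gradient flowing for negative inputs.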

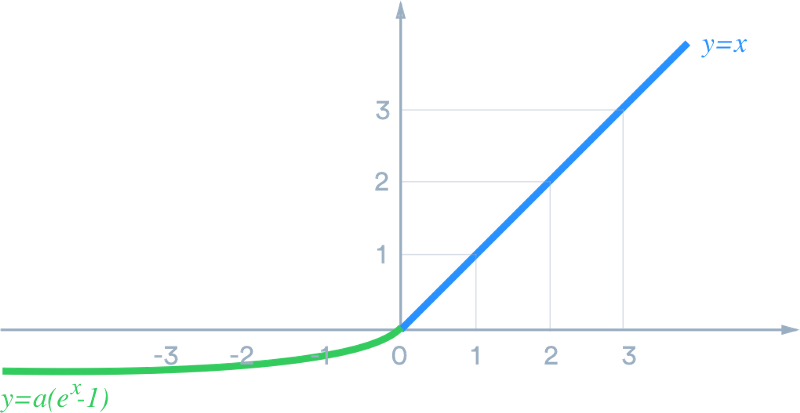

Exponential Linear Unit

Definition

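$$\operatorname{ELU}(x) = \begin{cases} x & \text{if } x > 0 \\ \alpha\,(e^{x} - 1) & \text{if } x \le 0 \end{cases}$$

where $\alpha > 0$ is a hyperparameter.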
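A short sketch of ELU using `math.expm1`, which computes $e^{x} - 1$ accurately for small $x$. The default `alpha=1.0` is an assumption for illustration.

```python
import math

def elu(x, alpha=1.0):
    """Identity for positive inputs, alpha * (exp(x) - 1) otherwise."""
    return x if x > 0 else alpha * math.expm1(x)

# For very negative inputs the output saturates at -alpha.
saturated = elu(-50.0)
```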

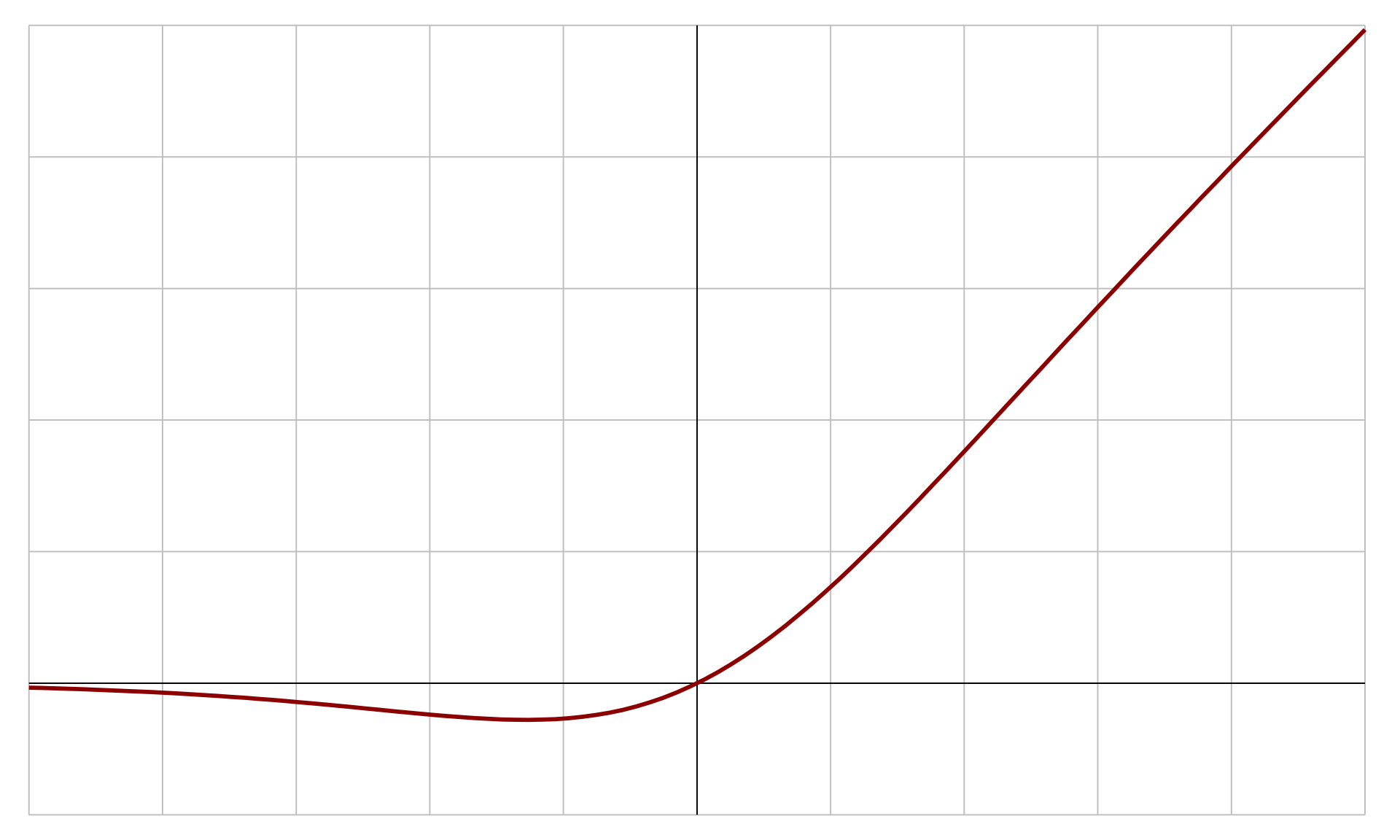

Swish Function

Definition

$$\operatorname{swish}_\beta(x) = x\,\sigma(\beta x)$$

where $\sigma$ is the sigmoid function and $\beta$ is a hyperparameter.

When $\beta = 1$, the function is called a sigmoid linear unit (SiLU).
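A minimal sketch of Swish and its SiLU special case, with illustrative function names. It also shows two limiting cases: $\beta = 0$ gives the linear function $x/2$, and a large $\beta$ makes the curve approach ReLU.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def swish(x, beta=1.0):
    """Swish: x * sigmoid(beta * x)."""
    return x * sigmoid(beta * x)

def silu(x):
    """Sigmoid linear unit: Swish with beta fixed to 1."""
    return swish(x, beta=1.0)

half_linear = swish(3.0, beta=0.0)   # sigmoid(0) = 0.5, so this is 3.0 / 2
near_relu = swish(2.0, beta=50.0)    # very close to ReLU(2.0)
```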