Preliminaries

Matrix Algebra

Orthonormal Matrix

Definition

The matrix whose column and rows are orthonormal vectors.

Examples

Facts

Every orthonormal matrix is Unitary Matrix.

Link to originalFor a vector , which can be expressed as a linear combination of column vectors of the orthonormal matrix , we can easily find the coefficients of the linear combination using the Inner product.

Eigendecomposition

Definition

where is a matrix and

If is a scalar multiple of non-zero vector , then is the eigenvalue and is the eigenvector.

Characteristic Polynomial

The values satisfying the characteristic polynomial, are the eigenvalues of the matrix

Eigenspace

The set of all eigenvectors of corresponding to the same eigenvalue, together with the zero vector.

The Kernel of the matrix

Eigenvector: non-zero vector in the eigenspace

Algebraic Multiplicity

Let be an eigenvalue of an matrix . The algebraic multiplicity of the eigenvalue is its multiplicity as a root of a Characteristic Polynomial, that is, the largest k such that

Geometric Multiplicity

Let be an eigenvalue of an matrix . The geometric multiplicity of the eigenvalue is the dimension of the Eigenspace associated with the eigenvalue.

Computation

- Find the solution^[eigenvalues] of the Characteristic Polynomial.

- Find the solution^[eigenvectors] of the Under-Constrained System using the found eigenvalue.

Facts

There exists at least one eigenvector corresponding to the eigenvalue

Eigenvectors corresponding to different eigenvalues are always linearly independent.

When is a normal or real Symmetric Matrix, the eigendecomposition is called Spectral Decomposition

The Trace of the matrix is equal to the sum of the eigenvalues of the matrix.

Proof Since the matrix only has term on diagonal, and the calculation of cofactor deletes a row and a column, the coefficient of of is always . Also, since are the solution of the Characteristic Polynomial, the expression is factorized as and the coefficient of become a Therefore, and .

The Determinant of the matrix is equal to the product of the eigenvalues of the matrix.

Not all matrices have linearly independent eigenvectors.

When holds,

- the eigenvalues of are and the eigenvectors of the matrix are the same as .

- the eigenvalues of is

- the eigenvalues of is

- the eigenvalues of is

- the eigenvalues of is

An eigenvalue’s Geometric Multiplicity cannot exceed its Algebraic Multiplicity

the matrix is diagonalizable the Geometric Multiplicity is equal to the Algebraic Multiplicity for all eigenvalues

Link to originalLet be a symmetric matrix. Then, has eigenvalues equal to and the rest zero .

Link to originalThe non-zero eigenvalues of are the same as those of .

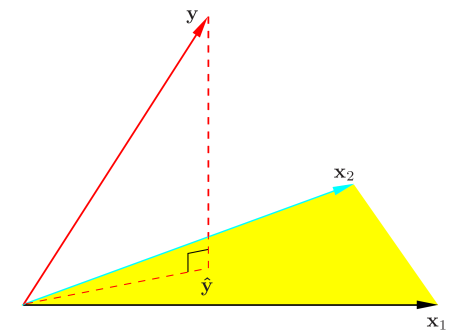

Projection Matrix

Definition

For some vector , is the projection of onto

Facts

The projection matrix is symmetric Idempotent Matrix

Consider a Symmetric Matrix , then is idempotent with rank if and only if eigenvalues are and eigenvalues are .

The projection matrix is Positive Semi-Definite Matrix

Link to originalIf and are projection matrices, and is Positive Semi-Definite Matrix, then

- is a projection matrix.

QR Decomposition

Definition

Decomposition of matrix into a product of an orthonormal matrix and an upper triangular matrix .

Computation

Using the Gram Schmidt Orthonormalization

QR decomposition can be computed by Gram Schmidt Orthonormalization. Where is the matrix of orthonormal column vectors obtained by the orthonormalization and

Link to original

Cholesky Decomposition

Definition

Decomposition of a Positive-Definite Matrix into the product of lower triangular matrix and its Conjugate Transpose.

Computation

Let be Positive-Definite Matrix. Then, By setting the first-row first-column entry , can find other entries using substitution. By summarizing, ,

Link to original

Spectral Theorem

Definition

where is a Unitary Matrix, and

A matrix is a Hermitian Matrix if and only if is Unitary Diagonalizable Matrix.

Facts

Every Hermitian Matrix is diagonalizable, and has real-valued Eigenvalues and orthonormal eigenvector matrix.

For the Hermitian Matrix , the every eigenvalue is real.

Proof For the eigendecomposition , . Since is hermitian, and norm is always real. Therefore, every eigenvalue is real.

Link to originalFor the Hermitian Matrix , the eigenvectors from different eigenvalues are orthogonal.

Proof Let the eigendecomposition where . By the property of hermitian matrix, and So, . Since , . Therefore, are orthogonal.

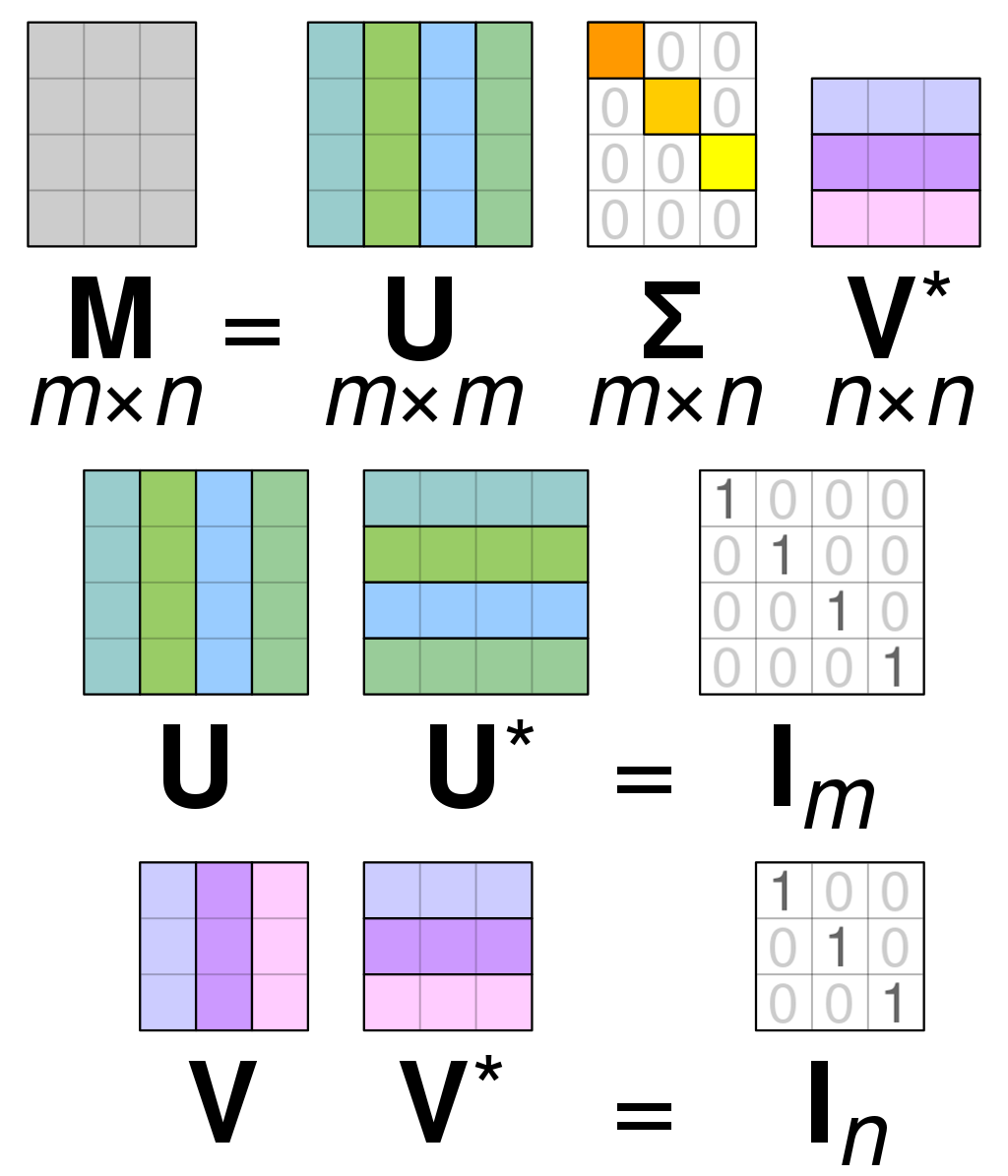

Singular Value Decomposition

Definition

An arbitrary matrix can be decomposed to .

For where and and is Diagonal Matrix

For where and and is Diagonal Matrix

Calculation

For matrix

is the matrix of orthonormal eigenvectors of is the matrix of orthonormal eigenvectors of is the Diagonal Matrix made of the square roots of the non-zero eigenvalues of and sorted in descending order.

If the eigendecomposition is , then the eigenvalues and the eigenvectors are orthonormal by Spectral Theorem. If the , then . Where are called the singular values.

Now, the Orthonormal Matrix is calculated using the singular values. Where is the Orthonormal Matrix of eigenvectors corresponding to the non-zero eigenvalues and is the Orthonormal Matrix of eigenvectors corresponding to zero eigenvalues where each , and is a rectangular Diagonal Matrix.

Since is an orthonormal matrix and is a Diagonal Matrix, . Now, the Orthonormal Matrix is calculated using the linear system and the null space of , Where is the Orthonormal Matrix of the vectors obtained from the system equation And is the Orthonormal Matrix of the vectors . which is corresponding to zero eigenvalues

The Orthonormal Matrix can also be formed by the eigenvectors of similarly to calculating of .

Facts

are the Unitary Matrix

Let be a real symmetric Positive Semi-Definite Matrix Then, the Eigendecomposition(Spectral Decomposition) of and the singular value decomposition of are equal.

where and are non-negative and the shape of the every matrix is

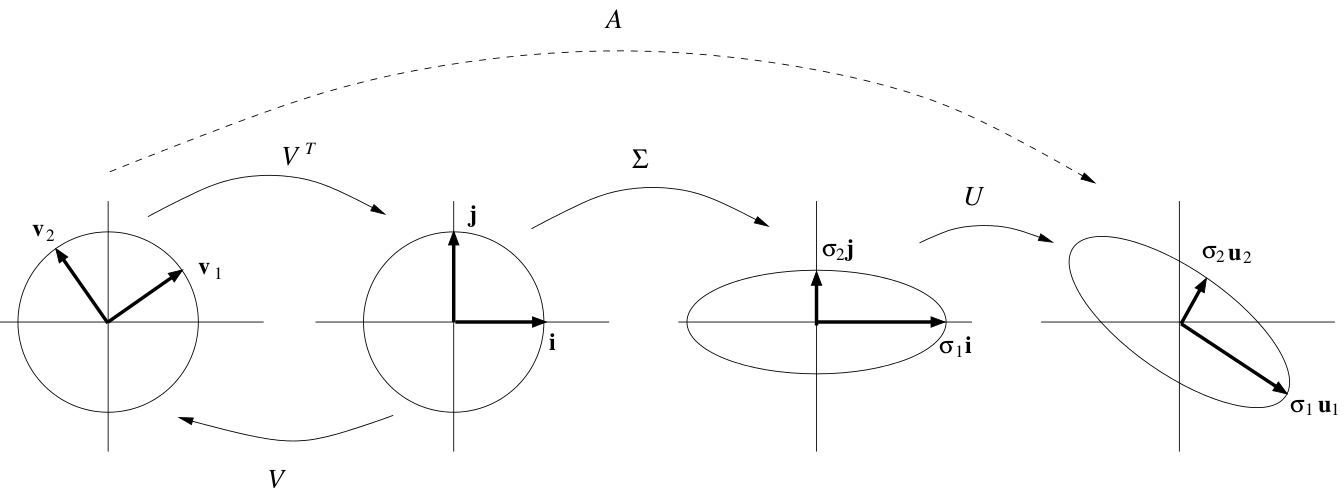

Visualization

every matrix can be decomposed as a

Link to original

- : rotation and reflection

- : scaling

- : rotation and reflection

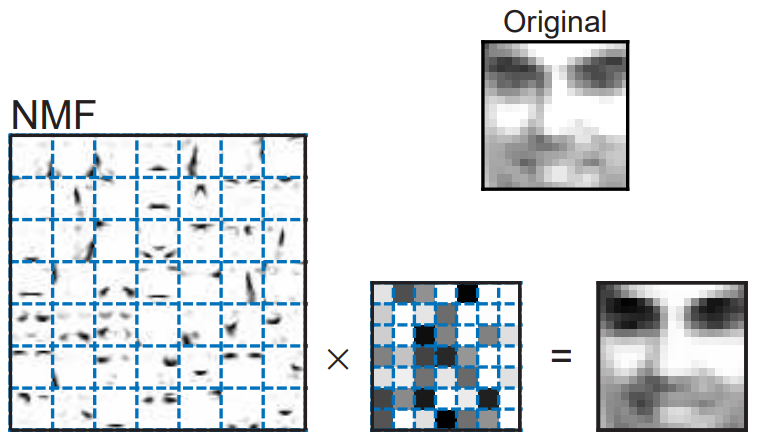

Non-Negative Matrix Factorization

Definition

where

Non-negative matrix factorization (NMF) is an algorithm where a matrix is factorized into two matrices and have no negative elements.

The matrices and are found by maximizing

Link to original

Model Assessment and Selection

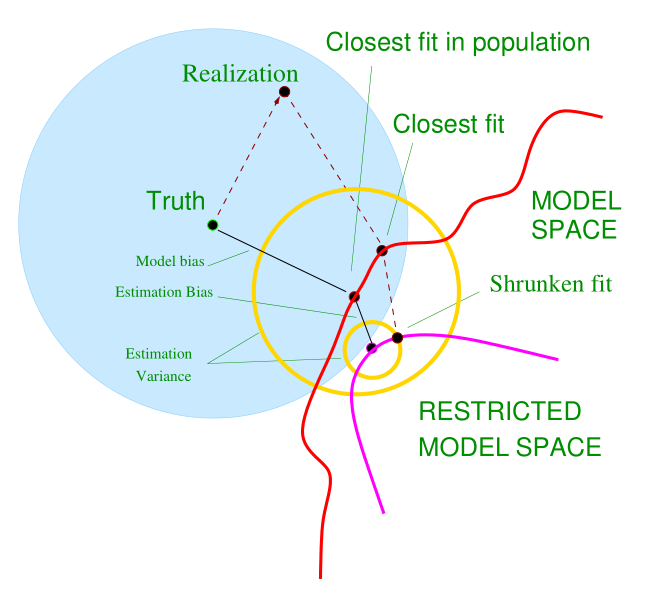

Bias-Variance Decomposition

Definition

Assume that where and . Then, under squared error loss.

Link to original

Mallow's Cp

Definition

where is the number of explanatory variables, is a sample variance under the full model, and

Mallow’s is used to assess the fit of a regression model. A small value of means that the model is relatively precise.

Link to original

Akaike Information Criterion

Definition

where is the MLE of the postulated model’s parameter

Link to original

Bayesian Information Criterion

Definition

Facts

BIC more penalize than AIC when is large

Link to original

Vapnik–Chervonenkis Dimension

Definition

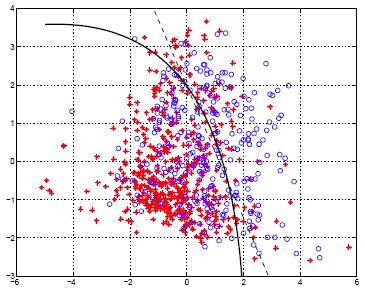

Vapnik–Chervonenkis (VC) dimension is measure of the size of a class of sets. The VC dimension of the class is the largest number of points that can be shattered (can always learn a perpect classifier for any labeling) by members of .

Examples

Link to original

- The VC dimension of linear indicator functions in the plane is 3.

- has infinite VC dimension.

Cross Validation

Definition

Partition a set of data into sets and denote a function such that . For the dataset , let be the estimator based on observations except , then the cross validation estimation for the prediction error is defined as where is a loss function

Facts

Link to originalIf the data is partitioned into group with equal size, then it is called a -fold cross validation.

Leave-One-Out-Cross-Validation

Definition

Cross Validation with

Link to original

Generalized Croos Validation

Convenient approximation to Leave-One-Out-Cross-Validation of a linear model ,

Link to original

Maximum Likelihood Methods

Maximum Likelihood Estimation

Definition

MLE is the method of estimating the parameters of an assumed Distribution

Let be Random Sample with PDF , where , then the MLE of is estimated as

Regularity Conditions

- R0: The pdfs are distinct, i.e.

- R1: The pdfs have same supports

- R2: The true value is an interior point in

- R3: The pdf is twice differentiable with respect to

- R4:

- R5: The pdf is three times differentiable with respect to , , and interior point

Properties

Functional Invariance

If is the MLE for , then is the MLE of

Consistency

Under R0 ~ R2 Regularity Conditions, let be a true parameter, is differentiable with respect to , then has a solution such that

Asymptotic Normality

Under the R0 ~ R5 Regularity Conditions, let be Random Sample with PDF , where , be a consistent Sequence of solutions of MLE equation , and , then where is the Fisher Information.

By the asymptotic normality, the MLE estimator is asymptotically efficient under R0 ~ R5 Regularity Conditions

Asymptotic Confidence Interval

By the asymptotic normality of MLE, Thus, confidence interval of for is

Delta method for MLE Estimator

Under the R0 ~ R5 Regularity Conditions, let be a continuous function and , then

Facts

Link to originalUnder R0 and R1 regularity conditions, let be a true parameter, then

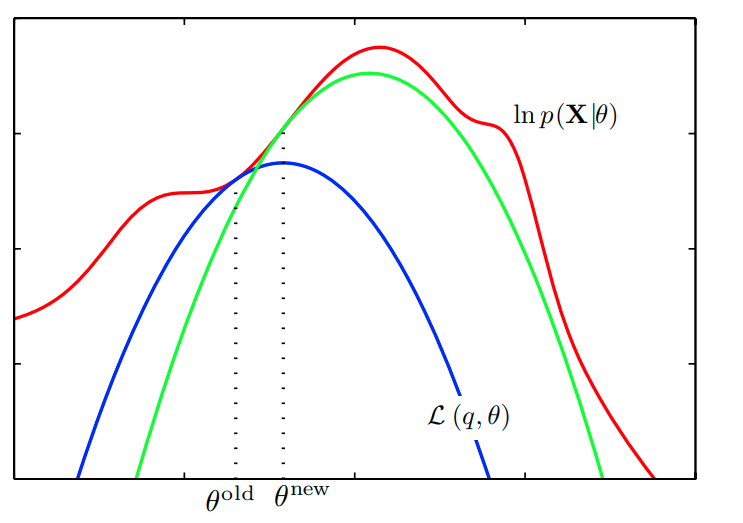

The EM Algorithm

Expectation-Maximization Algorithm

Definition

Let be an observed data, be an unobserved (latent) variable, are independent, be a joint pdf of , be a joint pdf of , be a conditional pdf of given

By the definition of a conditional pdf, we have the identity

The goal of the EM algorithm is maximizing the observed likelihood using the complete likelihood .

Using the definition conditional pdf, we derive the identity for an arbitrary but fixed

&= \int \ln[h(\mathbf{X}, \mathbf{Z} | \boldsymbol{\theta})] k(\mathbf{Z} | \mathbf{X}, \boldsymbol{\theta}_{0}) d \mathbf{Z} - \int \ln[k(\mathbf{Z} | \mathbf{X}, \boldsymbol{\theta})]k(\mathbf{Z} | \mathbf{X}, \boldsymbol{\theta}_{0})d\mathbf{Z}\\ &= E_{\boldsymbol{\theta}_{0}}[\ln L^{c}(\boldsymbol{\theta}|\mathbf{X}, \mathbf{Z}) | \boldsymbol{\theta}_{0}, \mathbf{X}] - E_{\boldsymbol{\theta}_{0}}[\ln k(\mathbf{Z} | \mathbf{X}, \boldsymbol{\theta}) | \boldsymbol{\theta}_{0}, \mathbf{X}] \end{aligned}$$ Let the first term of RHS be a quasi-likelihood function $$Q(\boldsymbol{\theta} | \boldsymbol{\theta}_{0}, \mathbf{X}) := E_{\boldsymbol{\theta}_{0}}[\ln L^{c}(\boldsymbol{\theta}|\mathbf{X}, \mathbf{Z}) | \boldsymbol{\theta}_{0}, \mathbf{X}]$$ EM algorithm maximizes $Q(\boldsymbol{\theta} | \boldsymbol{\theta}_{0}, \mathbf{X})$ instead of maximizing $\ln L(\boldsymbol{\theta}|\mathbf{X})$ # Algorithm 1. Expectation Step: Compute $$Q(\boldsymbol{\theta} | \hat{\boldsymbol{\theta}}^{(m)}, \mathbf{X}) := E_{\hat{\boldsymbol{\theta}}^{(m)}}[\ln L^{c}(\boldsymbol{\theta}|\mathbf{X}, \mathbf{Z}) | \hat{\boldsymbol{\theta}}_{m}, \mathbf{X}]$$ where the $m = 0, 1, \dots$, and the expectation is taken under the conditional pdf $k(\mathbf{Z} | \mathbf{X}, \hat{\boldsymbol{\theta}}^{(m)})$ 2. Maximization Step: $$\hat{\boldsymbol{\theta}}^{(m+1)} = \underset{\boldsymbol{\theta}}{\operatorname{arg max}} Q(\boldsymbol{\theta} | \hat{\boldsymbol{\theta}}^{(m)}, \mathbf{X})$$ # Properties ## Convergence The [[Sequence]] of estimates $\hat{\boldsymbol{\theta}}^{(m)}$ satisfies $$L(\hat{\boldsymbol{\theta}}^{(m+1)}|\mathbf{X}) \leq L(\hat{\boldsymbol{\theta}}^{(m)}|\mathbf{X})$$ Therefore the [[Sequence]] of EM estimates converge to (at least local) optimalLink to original

Bayesian Methods

Bayes Risk

Definition

r(\theta, \delta) &= \int_{\Theta} R(\theta, \delta) \pi(\theta) d\theta = E_\theta[R(\theta, \delta)] = E_\theta[E_{x}[L(\theta, \delta(x))]]\\ &= \int_{\Theta} \int_{X} L(\theta, \delta(x))p(x|\theta) \pi(\theta) dx d\theta = \int_{X} \int_{\Theta} L(\theta, \delta(x))p(\theta|x)p(x) d\theta dx\\ &= \int_{X}E_\theta[L(\theta, \delta(x))|X=x]p(x)dx = \int_{X}\rho(x, \pi)p(x)dx \end{aligned}$$ where $L(\theta, \delta)$ is [[Loss Function]], $R(\theta, \delta)$ is [[Risk Function]], and $\rho(x, \pi)$ is a [[Posterior Risk]].Link to original

Posterior Risk

Definition

where is Loss Function, is Risk Function, and is a posterior probability

Link to original

Bayes Estimator

Definition

Estimator that minimizes the Bayes Risk or Posterior Risk

Facts

Under Squared Error Loss, the Bayes estimator is a posterior mean, and a posterior mode under Absolute Error Loss.

Link to originalConsider a regression model and the prior distribution for , . Then, the Bayes estimator under the Squared Error Loss is obtained as If for some and , then the Bayes estimator is the same as ridge estimator If and , then the Bayes estimator is the James-Stein regression estimator

Monte Carlo Integration

Definition

where and is a PDF

Link to original

Gibbs Sampling

Definition

A MCMC algorithm for sampling from a specified Multivariate Distribution when direct sampling from the joint distribution is difficult, but sampling from the Conditional Distribution is more practical.

Algorithm

- Begin with some initial value

- For generate from

- Repeat the above step times.

Examples

Consider a bivariate random variable , and suppose we want to obtain samples from the marginals . Take samples from the conditional distributions and . As , the samples are eventually samples from and respectively.

Facts

It is common to discard some number of samples at the beginning (burn-in period).

Link to original

Regression

Parametric Regression

Simple Linear Regression

Definition

where ‘s are i.i.d. error terms, with and

Link to original

Multiple Linear Regression

Definition

where ‘s are i.i.d. error terms, with and

Matrix Notations

Let and , then the regression model can be express the model as

Let , , , then the regression model can be express as where , and

Link to original

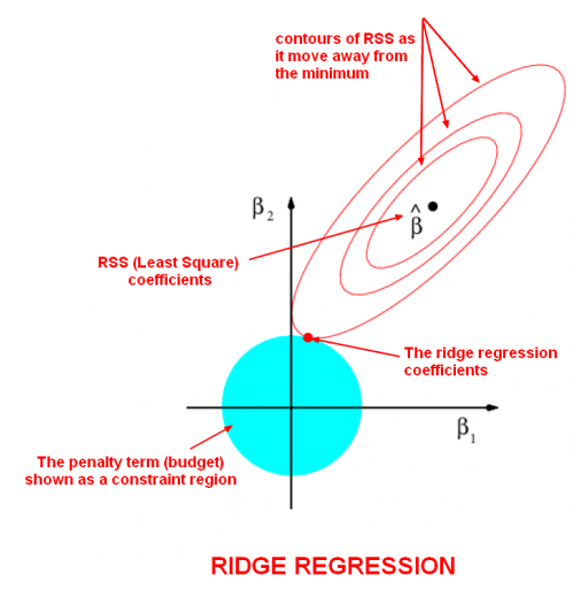

Ridge Regression

Definition

where is a complexity parameter that controls the amount of shrinkage.

Ridge regression is particularly useful to mitigate the problem of Multicollinearity in linear regression

Facts

Link to originalwhere by Singular Value Decomposition

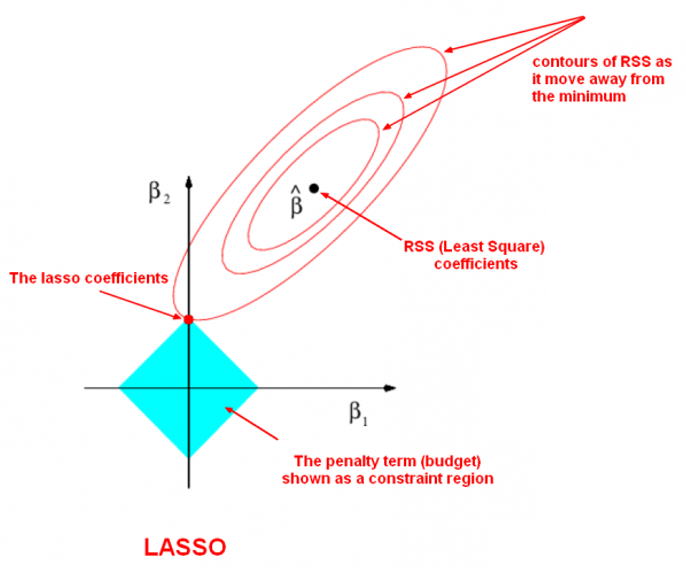

Lasso Regression

Definition

Lasso model assume that the coefficients of the model are sparse.

Link to original

Elastic Net Regularization

Definition

Elastic net is a regularized regression method that linearly combined the penalties of the lasso and ridge regressions.

Link to original

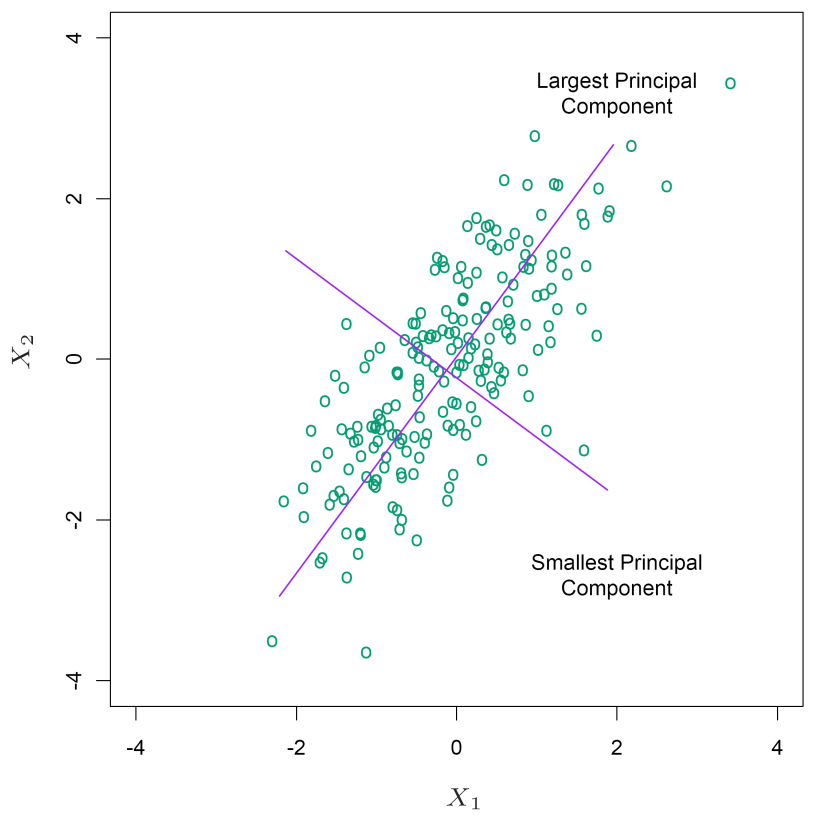

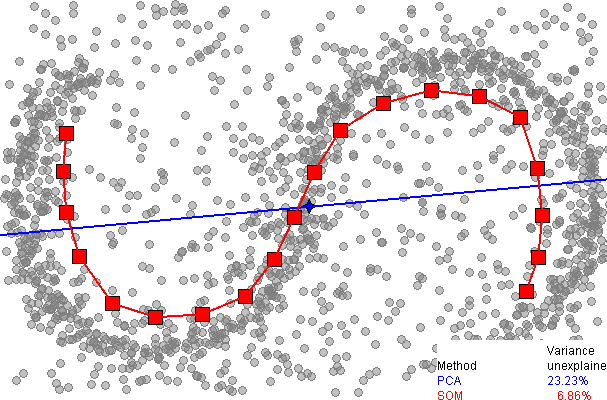

Principal Component Analysis

Definition

PCA is a linear dimensionality reduction technique. The correlated variables are linearly transformed onto a new coordinate system such that the directions capturing the largest variance in the data.

Population Version

Given a random vector , we find a such that is maximized: Equivalently, by the Method of Lagrange Multipliers with , By differentiation, the is given by the eigen value problem Thus the maximizing the variance of is the eigenvector corresponding to the largest Eigenvalue.

Sample Version

Given a data matrix , by Singular Value Decomposition, A matrix can be factorized as . By algebra, , where we call the -th principal component.

Facts

Link to originalSince and

Partial Least Squares Regression

Definition

where is the sample covariance matrix.

Unlike PCR maximizing only, PLS finds directions maximizing both and

Link to original

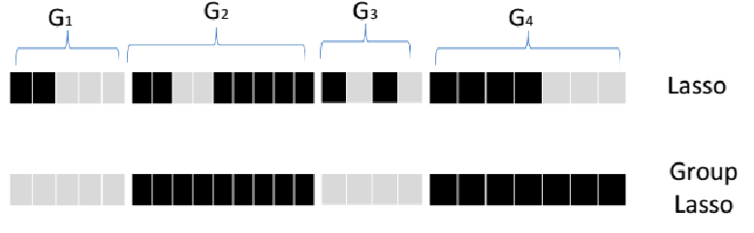

Grouped Lasso

where is the number of groups, is the number of variables within the group , and is Euclidean Norm

In grouped lasso, the variables in each group share a regularization parameter.

Link to original

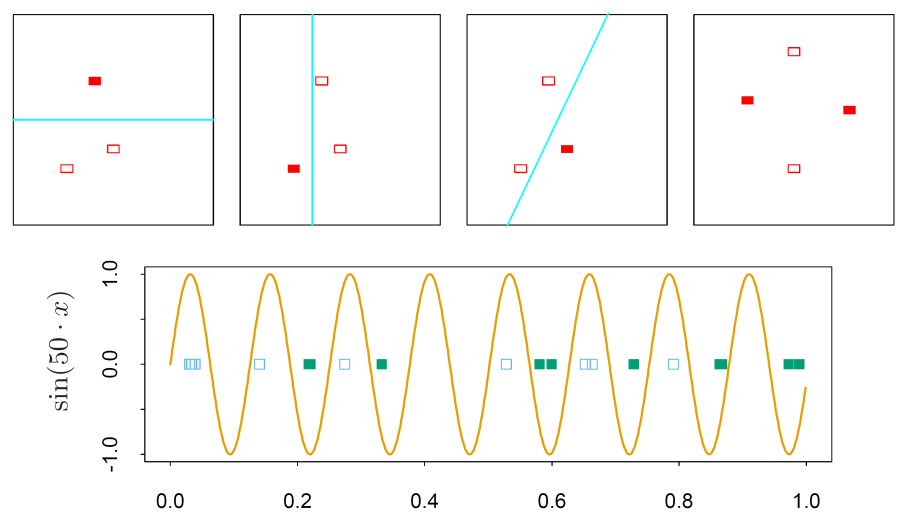

nonparametricNonparametric Regression

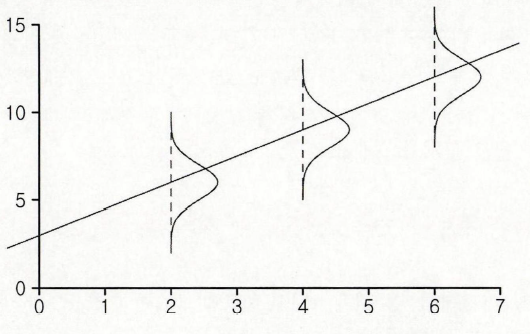

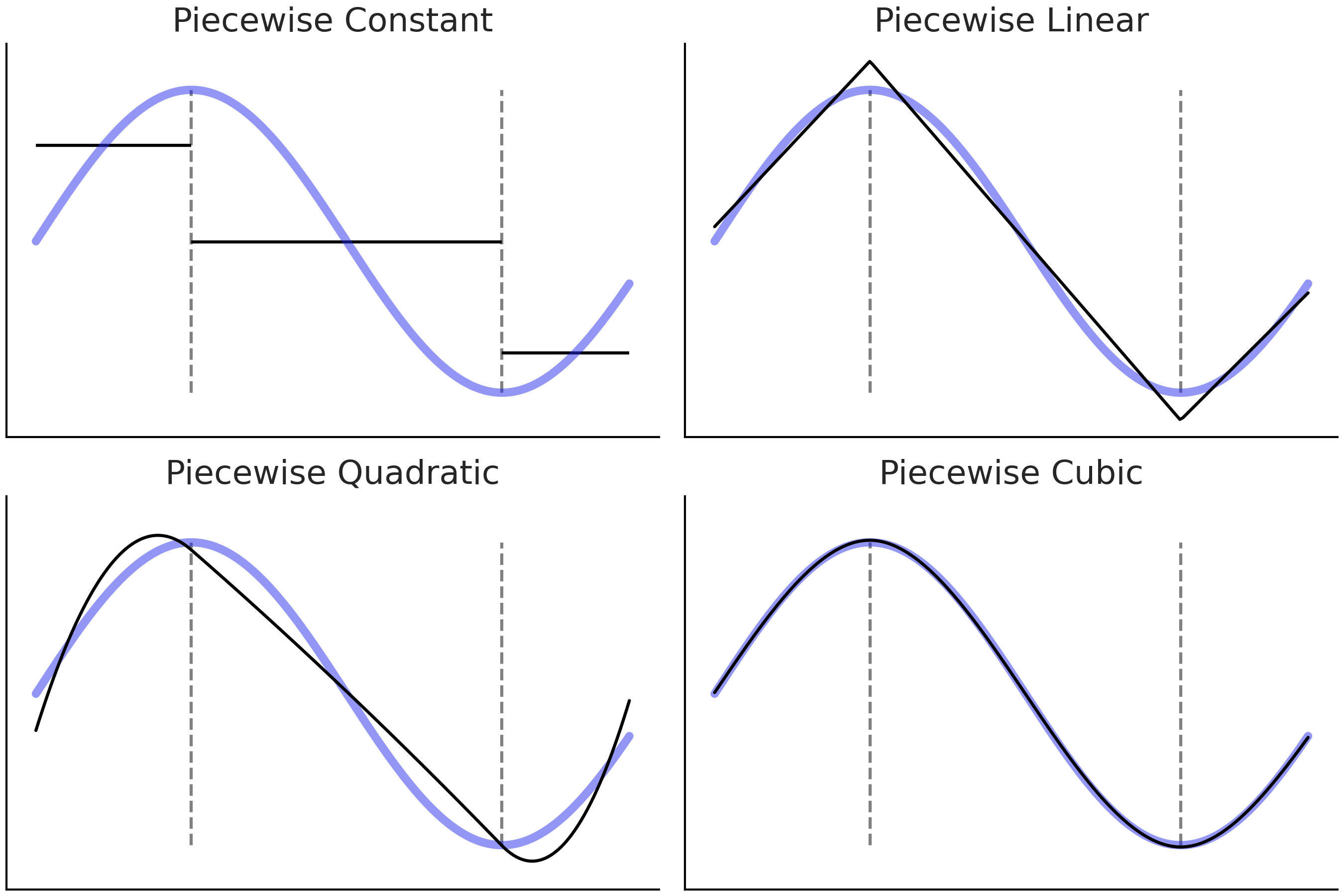

Piecewise Polynomials

Definition

Suppose that knots are . Then, the basis functions for order-M spline are defined as: where

Examples

For cubic Polynomials with two knots , the basis functions are:

Facts

Order of piecewise polynomials

- constant: 1

- linear: 2

- quadratic: 3

- cubic: 4

Link to original

Natural Cubic Splines

Definition

Suppose that knots are . Then, the basis functions for natural cubic Spline are defined as: where

Link to original

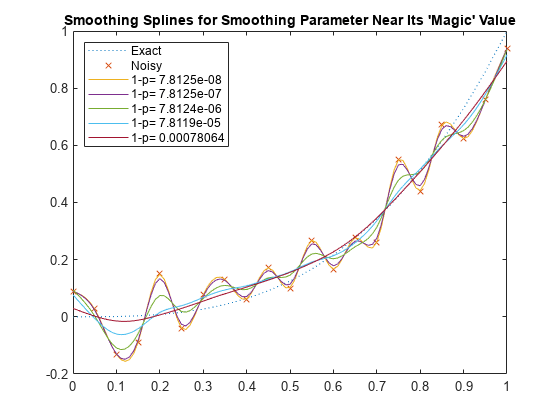

Smoothing Splines

Definition

where is a smoothing parameter. If , the smoothing spline is any interpolating spline. If , the smoothing spline is Least Square line.

Smoothing splines is a Spline basis method that avoids the knot selection problem. Among all functions , find one that minimizes the penalized RSS.

Facts

Link to originalThe unique analytic solution of smoothing spline is a Natural Cubic Splines with knots at the unique values of the ‘s

Classification

Introduction

Bayes Classifier

Definition

where is conditional PDF of given and is prior

Properties

Optimality of Bayes Rule

Bayes classifier minimizes the prediction risk over all classifiers

Facts

For a given , the Bayes classifier is deterministic, i.e. it does not depend on the training sample. It can not be used in practice since is unknown.

Link to originalBayes classifier maximizes the posterior pdf.

Parametric Classifier

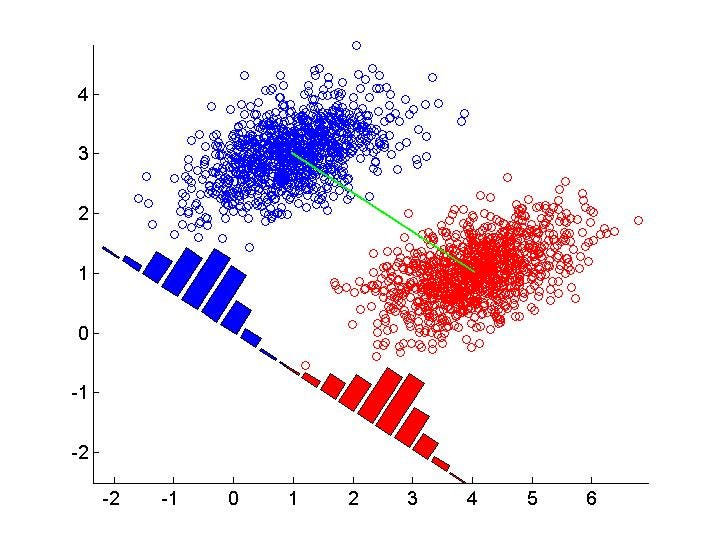

Linear Discriminant Analysis

Definition

where is the sample ratio, is the sample mean, and is the sample variance covariance matrix

LDA is a Bayes Classifier with an assumption that

Facts

Link to originalLDA assume homogeneity of variance-covariance among classes. Quadratic Discriminant Analysis may be used when the covariances are not equal.

Quadratic Discriminant Analysis

Definition

where is the sample ratio, is the sample mean, and is the sample variance covariance matrix

Bayes Classifier with an assumption that

Link to original

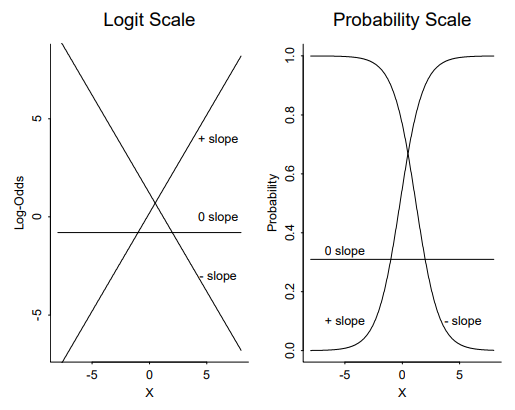

Logistic Regression

Definition

Logistic regression is a Generalized Linear Model with Logit link function. Logistic regression models the log-odds of an event as a linear combination of independent variables. The probability is calculated as

Link to original

Nonparametric Classifier

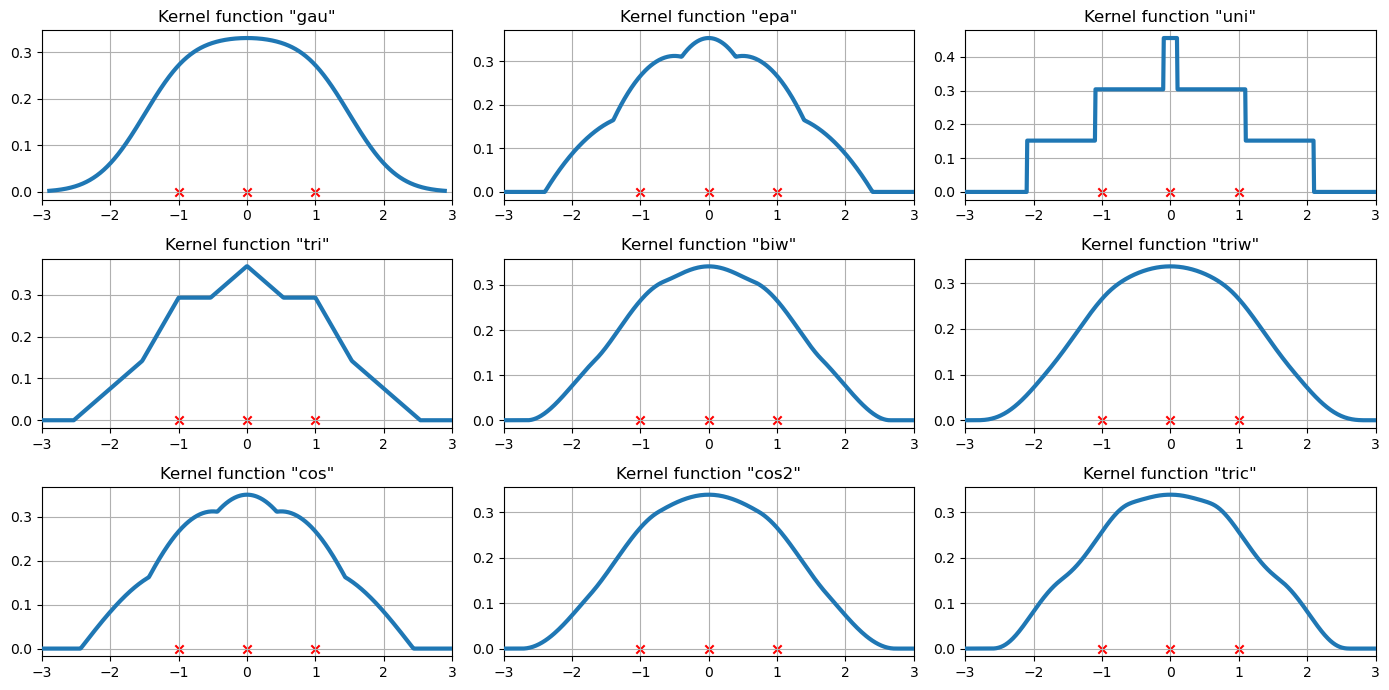

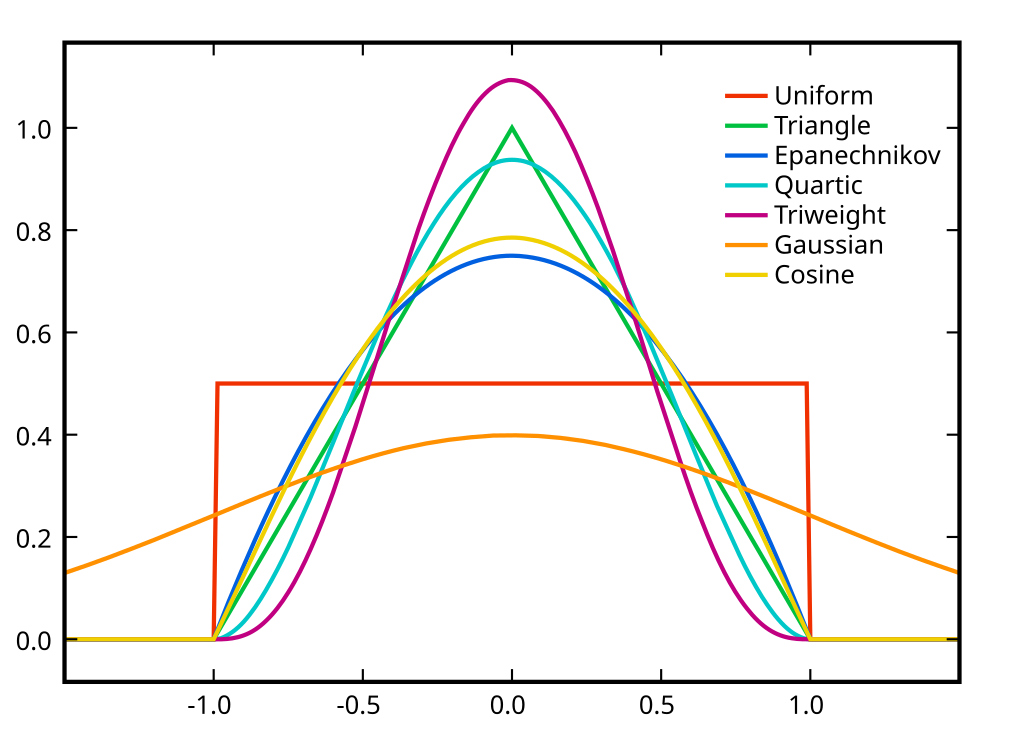

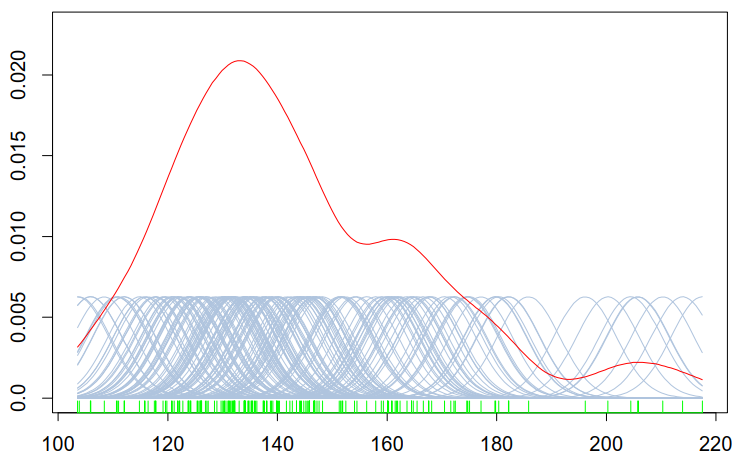

Kernel Density Estimation

Definition

where is the kernel, is the scaled kernel, and is a smoothing parameter (bandwidth, or window width)

KDE is a non-parametric method to estimate PDF of a random variable based on kernel and wrights.

Multidimensional KDE

where is the kernel, is the scaled kernel, and is a positive-definite bandwidth matrix.

Facts

Link to original

Widely used kernels

Kernel Based Classifier

Definition

where and

Bayes Classifier with an assumption that is the Kernel Density Estimation.

Link to original

K-Nearest Neighbors Algorithm

Definition

Suppose is the distance between the training sample and the given point . Let

The k-NN determines a sample’s class using the -nearest training datum.

Facts

Link to originaland are hyperparameters.

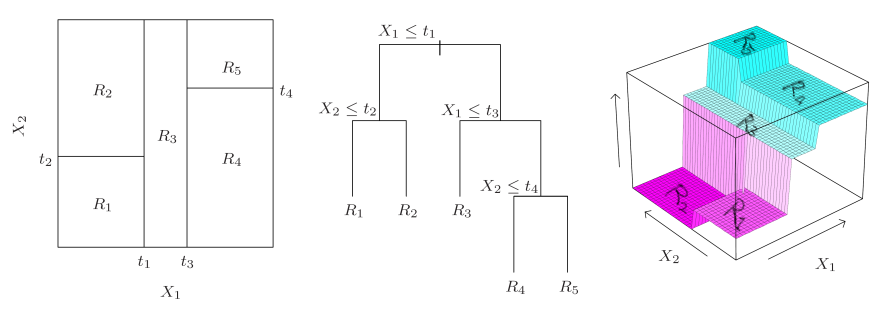

Decision Tree

Definition

Decision tree consists of Classification Tree and Regression Tree.

Link to original

Regression Tree

Definition

Decision tree algorithm consists of growing and pruning step. Suppose

- growing: seek the splitting variable and split point that solves

- pruning: Suppose the number of terminal nodes in a tree is and is the split regions corresponding to the terminal node. For a tree , define the cost complexity function. where is a tuning parameter regularizing complexity.

Regression function is estimated by A non-parametric regression that partitions explanatory variables into a set of rectangles and takes the average of the response variables in each rectangle as an estimate of the regression function in that region.

Link to original

Classification Tree

Definition

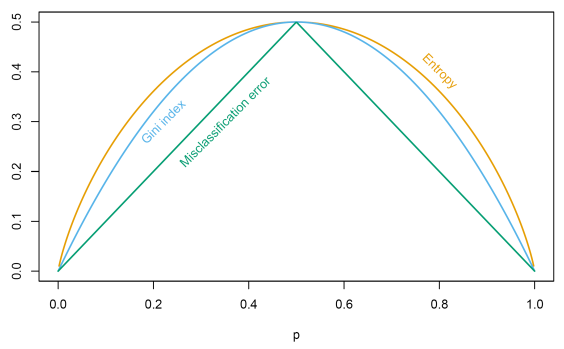

Suppose , and Define a variable representing the proportion of class observations in a node We classify the observations in a node to class Suppose the number of terminal nodes in a tree is and is the split regions corresponding to the terminal node. For a tree , define the cost complexity function. where is an impunity function, is a tuning parameter regularizing complexity.

The tree minimizes is the selected as optimal.

Impunity Functions

Link to original

- Misclassification error:

- Gini index:

- Cross entropy on deviance:

Information Gain

Definition

The information gain of a node of a decision tree is defined as where is the impunity of node , is the number of samples used in , are children nodes of the .

Link to original

Feature Importance

Definition

The feature importance of a feature used for Decision Tree is defined as where is the Information Gain of a node , and is a set of nodes using the feature

Link to original

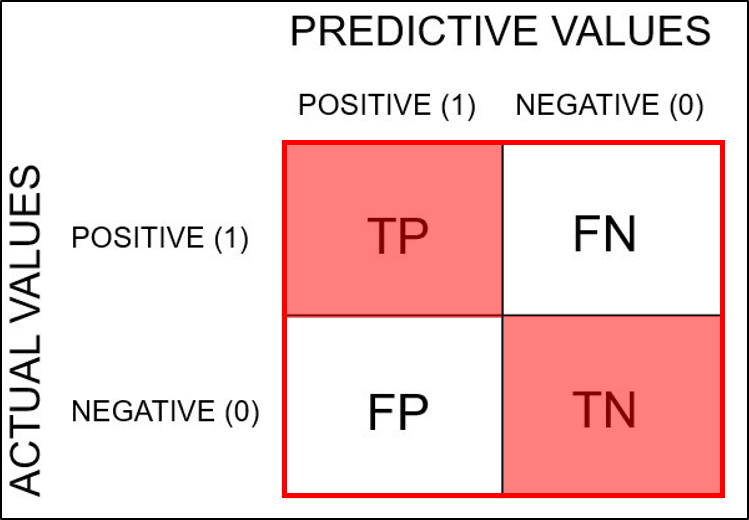

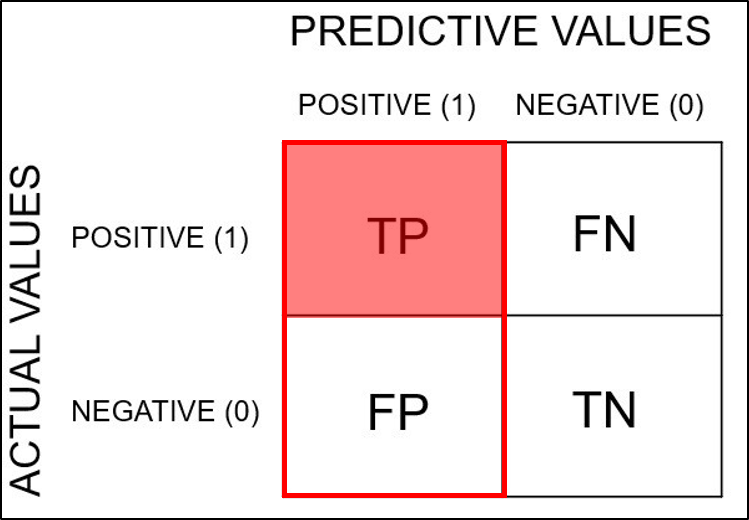

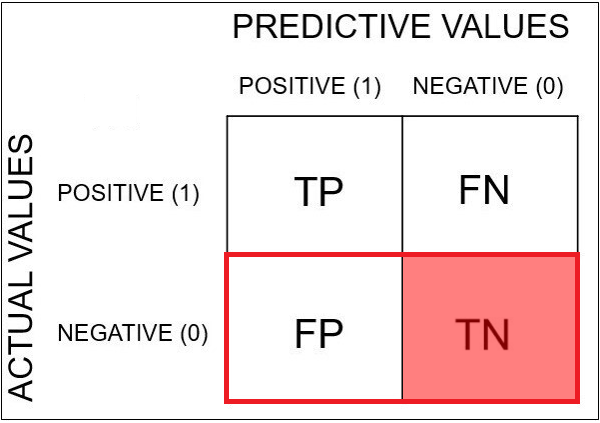

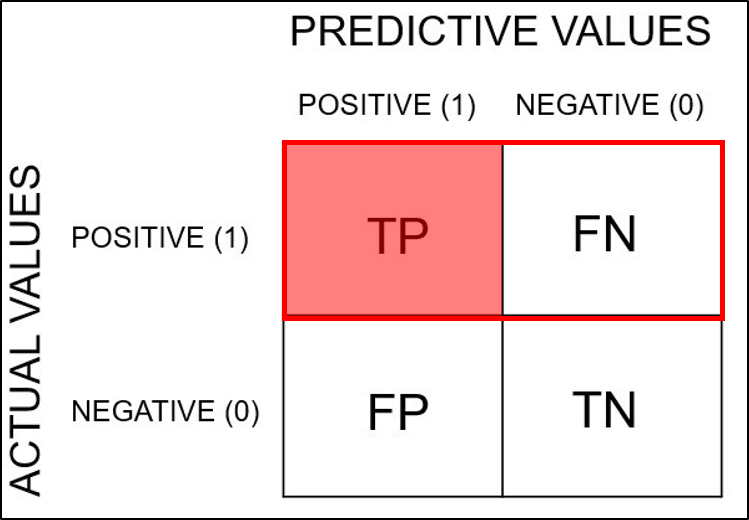

Confusion Matrix

Definition

Predicted Positive (PP) Predicted Negative (PN) Actual Positive True Positive (TP) False Negative (FN) Actual Negative False Positive (FP) True Negative (TN) Metrics

Accuracy

Definition

Link to original

Recall

Definition

Recall, Sensitivity, or True positive rate means that the rate of correctly predicted cases out of all the actual positive cases..

Link to originalPrecision

Definition

Precision means that the rate of actually positive cases out of cases predicted as positive.

Link to originalSpecificity

Definition

Specificity means that the rate of correctly predicted cases out of all the actual negative cases.

Link to originalType 1 Error

Definition

Link to original

Type 2 Error

Definition

Link to original

F-Score

Definition

F1 Score

The harmonic mean of Precision and Recall.

F-beta score

where

Recall is considered times as important as Precision.

Link to originalLink to originalPositive Predictive Value

Definition

where

Positive predictive value (in medical statistics and epidemiology) means that the rate of actually positive cases out of cases predicted as positive.

Link to original

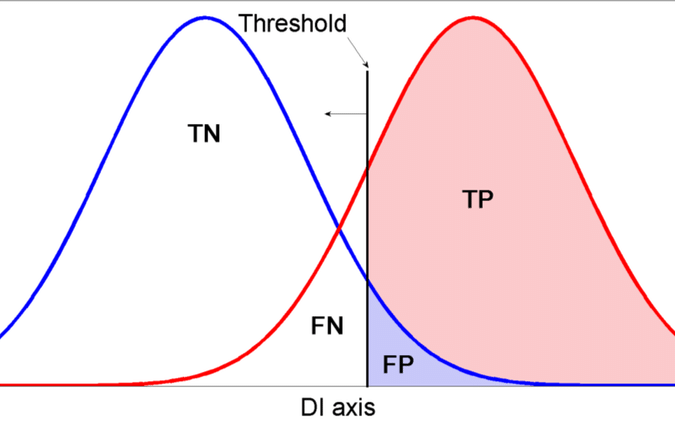

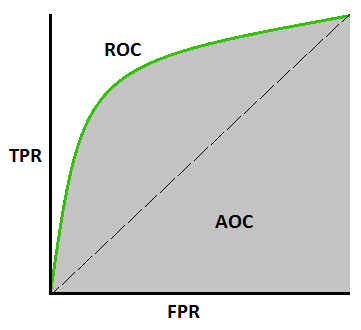

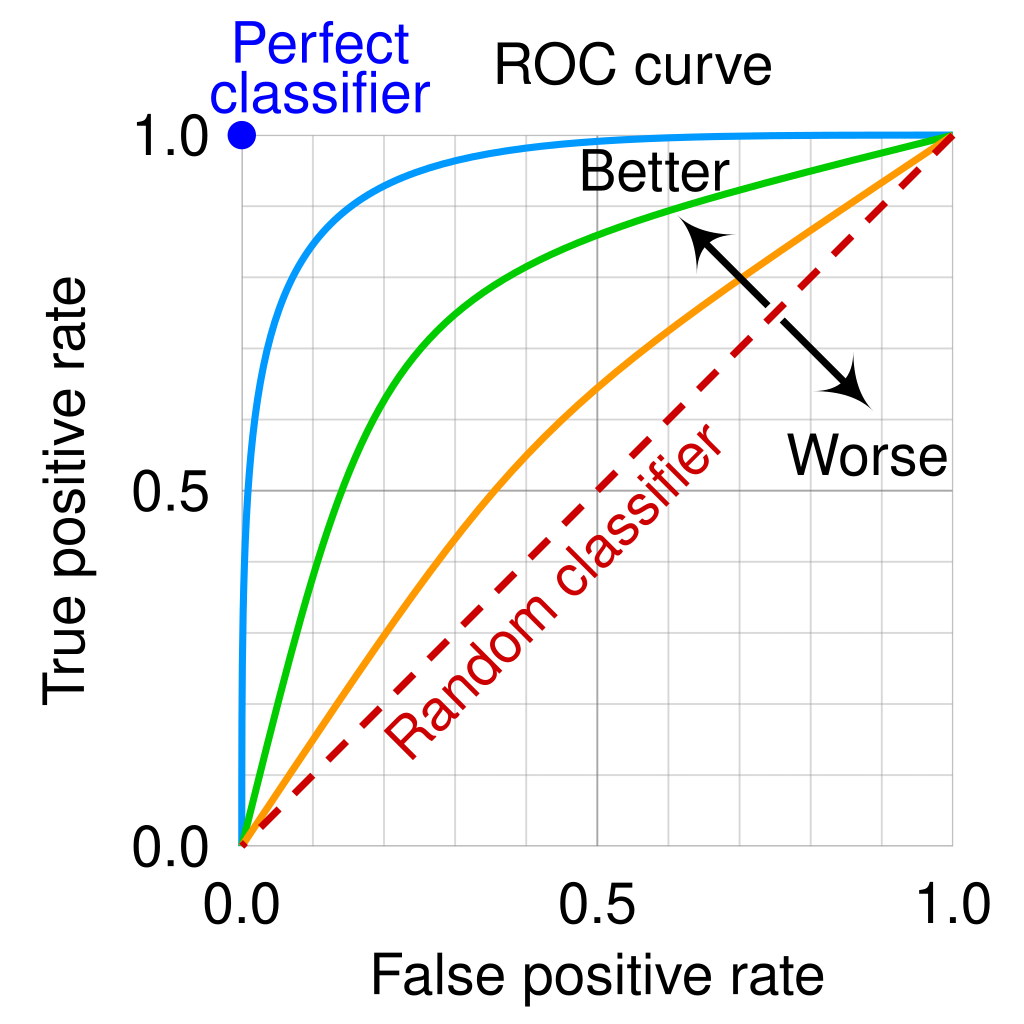

Receiver Operating Characteristic Curve

Definition

A receiver operating characteristic curve (ROC curve) is a plot of the True Positive Rate and False Negative Rate at each threshold setting.

AUC

The area under the ROC curve is called AUC (Area under curve)

Link to original

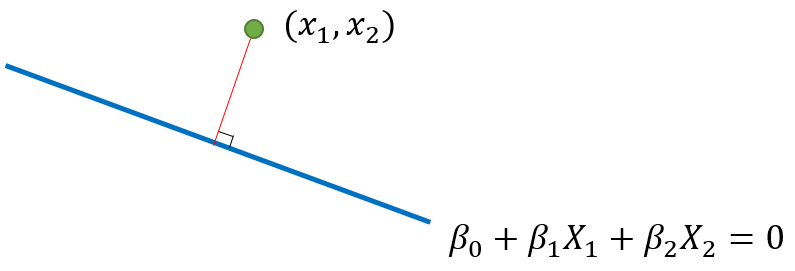

Support Vector Machines

Hyperplane

Definition

Vector Equation for a hyperplane

Facts

Suppose the dimension of is

- : point in -dimensional space.

- : line in -dimensional space.

- : plane in -dimensional space.

- : hyperplane in -dimensional space.

is perpendicular to the surface

Link to originalwhere is a signed distance, , and

Kernel Function

Definition

where

The kernel function returns the result of Inner product in by using only the original input data.

Examples

Some common kernel functions are as follows:

Link to original

- Linear:

- Polynomial:

- Gaussian radial basis function:

- Sigmoid:

Support Vector Machine

Definition

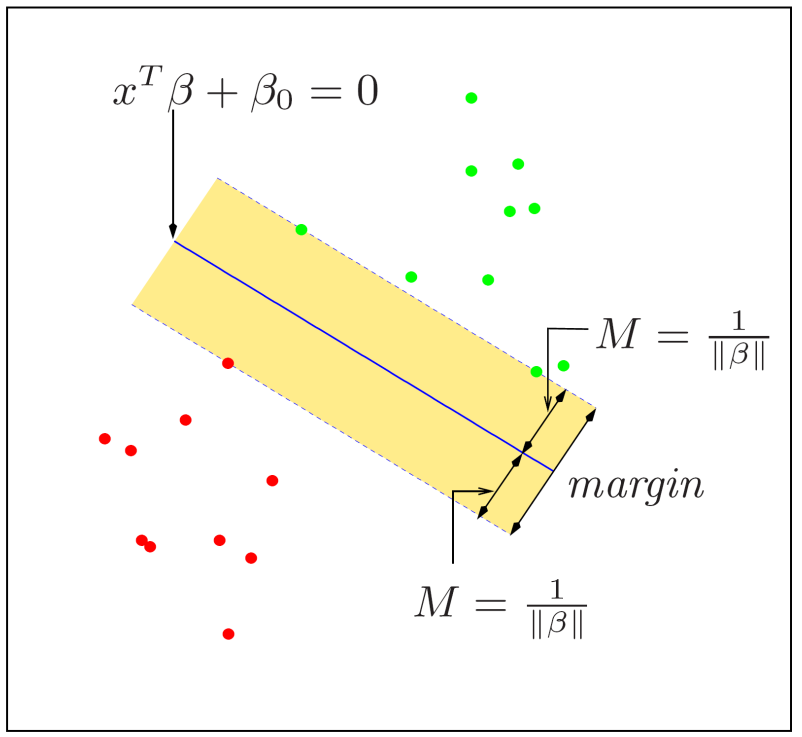

Hard-Margin Support Vector Machine

Assume that we have a learning set Suppose given two classes of data can separate by a Hyperplane without error. The hyperplane is called a separating hyperplane (SH)

Define , , and the margin of SH A SH which maximizes its margin () is called an optimal separating hyperplane (OSH). To find OSH, set linear constraints and satisfy where the minimum distance is arbitrary and may differ.

Let and . Then the points lying either on or are called support vectors. If and , then and

Therefore, the OHS can be obtained by a Convex Optimization problem. It can be solved by Method of Lagrange Multipliers with

By Duality of optimization problem, we have the dual optimization problem is defined as where

And the dual Lagrangian function is defined as

The primal optimization problem is convex and satisfies KKT Conditions. Thus, holds Strong Duality and the solution of the dual problem is the same as the primal problem.

The optimal parameter is obtained as where is an index set of support vectors

The optimal hyperplane can be written as and the classification rule is given by

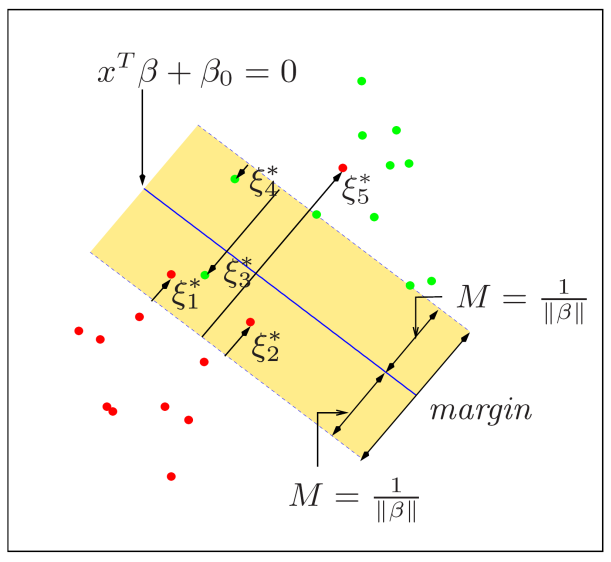

Soft-Margin Support Vector Machine

where is the regularization parameter, and is called a slack variable. If , the point is out of margin. On the other hand, if , then the point is within the margin.

The Lagrangian primal function is defined as with and .

And the dual function is defined as with and where

and the dual optimization problem is defined as The primal optimization problem is convex and satisfies KKT Conditions. Thus, holds Strong Duality and the solution of the dual problem is the same as the primal problem.

The optimal parameter is obtained as where is an index set of support vectors.

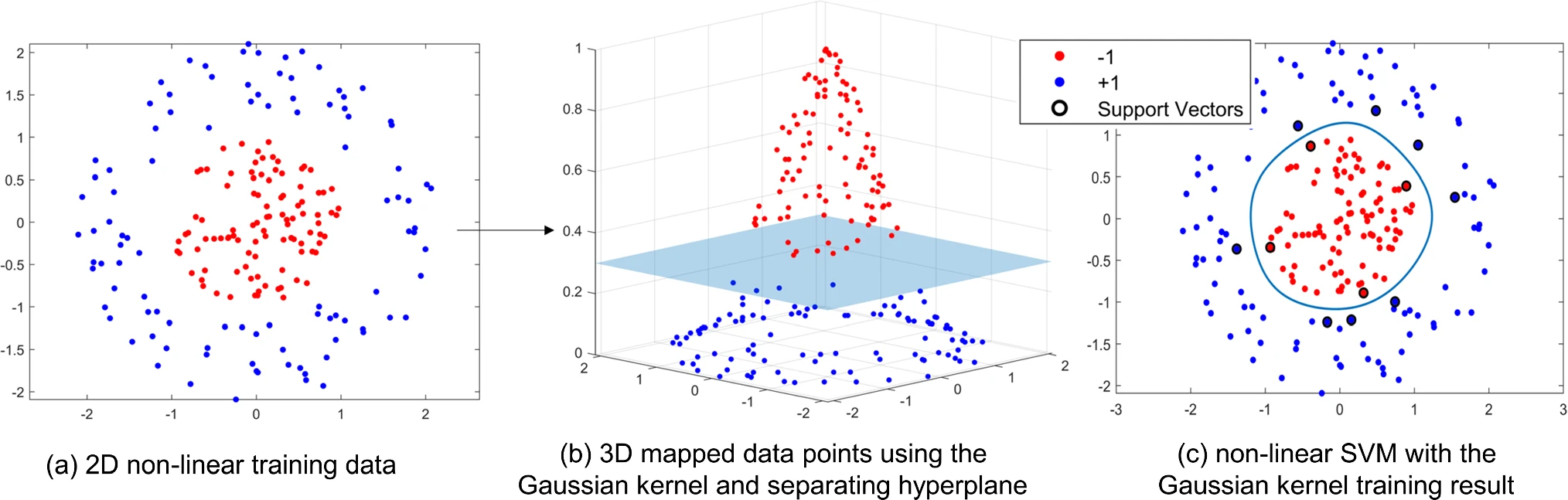

Non-Linear Support Vector Machine

Non-linear SVM finds an optimal separating Hyperplane in high-dimensional feature space . It accomplished by the kernel trick. The kernel trick is that instead of computing inner products in , compute them using a non-linear Kernel Function in input space.

Hard-Margin Non-Linear Support Vector Machine

If the data can be separated in , then the dual optimization problem is defined as where

The optimal separating Hyperplane in the is

and the decision rule is defined as

Soft-Margin Non-Linear Support Vector Machine

In the non-separable case, the dual problem is defined as where

The optimal separating Hyperplane in the is

and the decision rule is defined as

Link to original

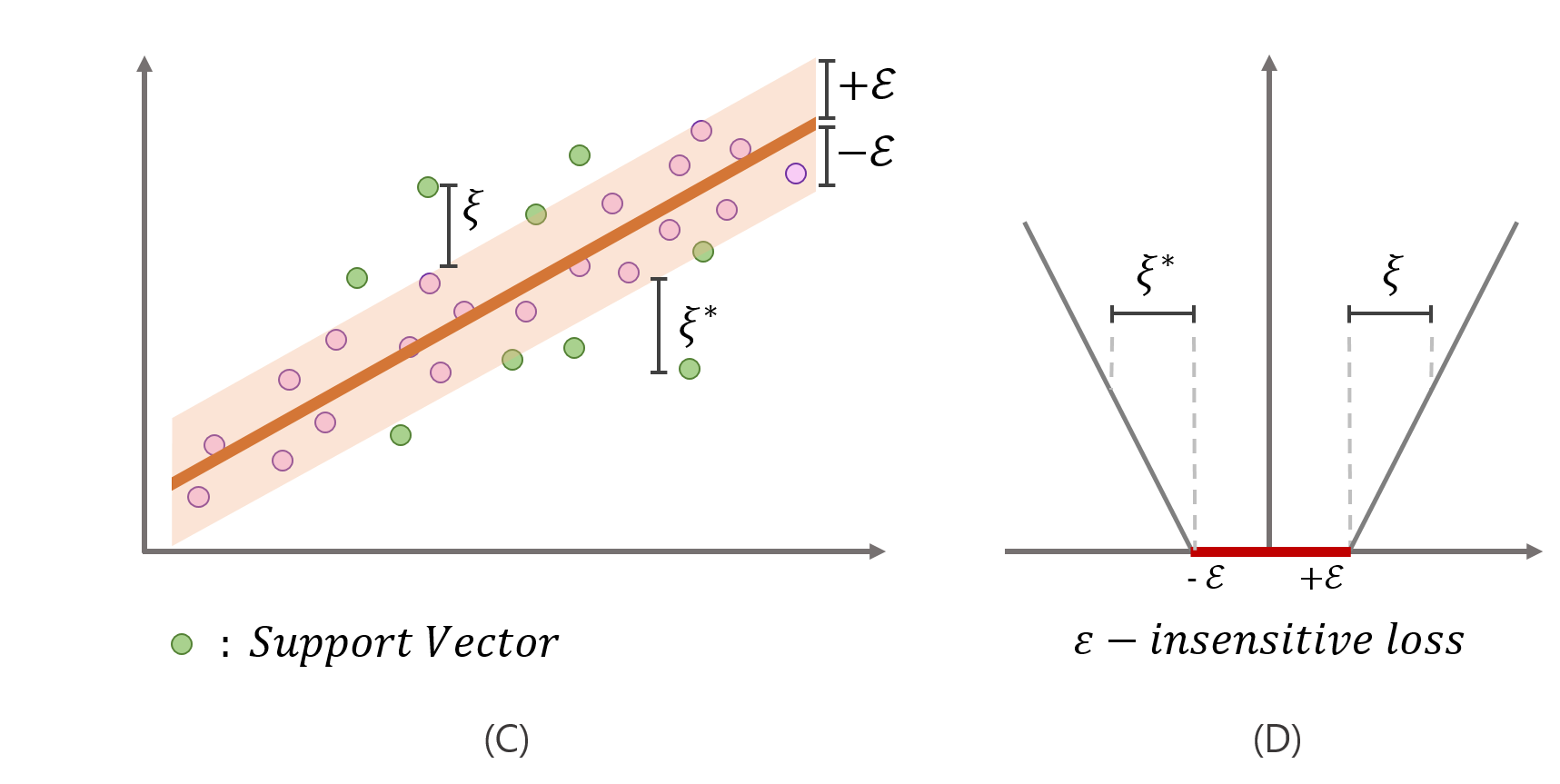

Support Vector Regression

Definition

Support vector regression (SVR) depends only on a subset of the training data, because the loss function ignores any training data close to the model prediction, within a band or tube.

The primal optimization problem is defined as

\min &\left( \cfrac{1}{2}||\boldsymbol{\beta}||^{2} + C\sum\limits_{i=1}^{n}(\xi_{i} + \xi'_{i}) \right)\\ \text{subject to}\ &y_{i} - (\beta_{0} + \boldsymbol{\beta}^{\intercal}\mathbf{x}_{i}) \leq \epsilon + \xi'_{i},\\ &(\beta_{0} + \boldsymbol{\beta}^{\intercal}\mathbf{x}_{i}) - y_{i} \leq \epsilon + \xi_{i},\\ &\ \xi'_{i} \geq 0, \ \xi_{i} \geq 0\quad i=1,\dots,n \end{aligned}$$ The term $\cfrac{1}{2}||\boldsymbol{\beta}||^{2}$ in the objective function, which appears with the intention of maximizing the margin of SVM, acts as a regularization parameter in SVR. The Lagrangian primal function is defined as $$ \begin{aligned} L_{P} &= \frac{1}{2}||\boldsymbol{\beta}||^{2} + C\sum_{{i=1}}^{{n}} (\xi_{{i}} + \xi'_{{i}}) \\ &+ \sum_{{i=1}}^{{n}} \alpha'_{{i}} \left( y_{{i}} - (\beta_{0} + \boldsymbol{\beta}^{\intercal} \mathbf{x}_{{i}}) - \epsilon - \xi'_{{i}} \right) + \sum_{{i=1}}^{{n}} \alpha_{{i}} \left( (\beta_{0} + \boldsymbol{\beta}^{\intercal} \mathbf{x}_{{i}}) - y_{{i}} - \epsilon - \xi_{{i}} \right)\\ &- \sum_{{i=1}}^{{n}} \mu'_{{i}} \xi'_{{i}} - \sum_{{i=1}}^{{n}} \mu_{{i}} \xi_{{i}} \end{aligned} $$ with $\mathbb{\alpha}, \mathbb{\alpha}', \boldsymbol{\mu}, \boldsymbol{\mu}' \geq \mathbf{0}$ The dual optimization is defined as $$ \begin{aligned} \max\ &\left( \mathbf{y}^{\intercal} (\mathbf{\alpha} - \mathbf{\alpha'}) - \epsilon \mathbf{1}^{\intercal} (\mathbf{\alpha} + \mathbf{\alpha'}) - \frac{1}{2} (\mathbf{\alpha} - \mathbf{\alpha'})^{\intercal} \mathbf{K} (\mathbf{\alpha} - \mathbf{\alpha'}) \right) \\ \text{subject to}\ &\mathbf{1}_{n}^{\intercal} (\mathbf{\alpha} - \mathbf{\alpha'}) = 0, \mathbf{0} \leq \mathbf{a}, \mathbf{a}' \leq C\mathbf{1}_{n}\quad i=1,\dots,n \end{aligned}$$ And the dual Lagrangian function is defined as $$\begin{aligned} L_{D} = &\ \mathbf{y}^{\intercal} (\mathbf{\alpha} - \mathbf{\alpha'}) - \epsilon \mathbf{1}^{\intercal} (\mathbf{\alpha} + \mathbf{\alpha'}) - \frac{1}{2} (\mathbf{\alpha} - \mathbf{\alpha'})^{\intercal} \mathbf{K} (\mathbf{\alpha} - \mathbf{\alpha'}) \\ &+ \lambda \mathbf{1}_{n}^{\intercal} (\mathbf{\alpha} - \mathbf{\alpha'})\\ &- \boldsymbol{\mu}^{\intercal} \mathbf{\alpha} - \boldsymbol{\mu}'^{\intercal} \mathbf{\alpha'}+ \boldsymbol{\mu}_{C}^{\intercal} (C\mathbf{1}_{n} - \mathbf{\alpha}) + \boldsymbol{\mu}_{C}'^{\intercal} (C\mathbf{1}_{n} - \mathbf{\alpha'}) \end{aligned}$$ with $\boldsymbol{\mu}, \boldsymbol{\mu}', \boldsymbol{\mu}_{C}, \boldsymbol{\mu}_{C}' \geq \mathbf{0}$ where $\mathbf{K}$ is a [[Kernel Function]].Link to original

Ensemble Learning

Bootstrap Aggregating

Definition

Regression Setting

Given a training set , draw a bootstrap sample from training data. Bagging estimator of at is defined as where is the prediction at based on a new training set

Classification Setting

Given a training set , draw a bootstrap sample from training data. Bagging estimator of at is defined as where is the prediction at based on a new training set

Facts

Bagging in a classification setting with zero-one loss often fails. Bagging a good classifier can make it better, but bagging a bad classifier can make it worse.

Link to originalBagging reduces the variance of an estimated prediction function. It seems to work especially well for high-variance, low-bias procedures, such as trees.

Boosting

Definition

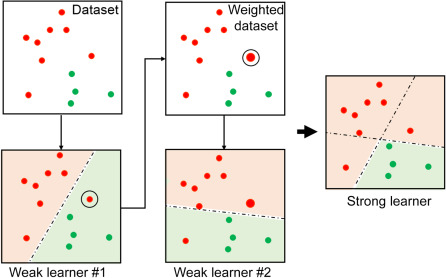

Boosting builds a strong learner by combining many weak learners whose accuracies are slightly better than a random guess.

Examples

Link to original

AdaBoost

Definition

The AdaBoost (Adaptive Boosting) is the weighted sum of weak learners that are robust to outliers and noise.

Algorithm

Consider a 2-class problem with

- Initialize the weights

- Repeat for :

- Fit a weak classifier that minimizes the weighted error rate and call the fitted classifier , and its corresponding error rate

- Compute

- Update the weights by

- Output the classifier

Facts

AdaBoost is equivalent to Gradient Boosting using the exponential loss

Link to original

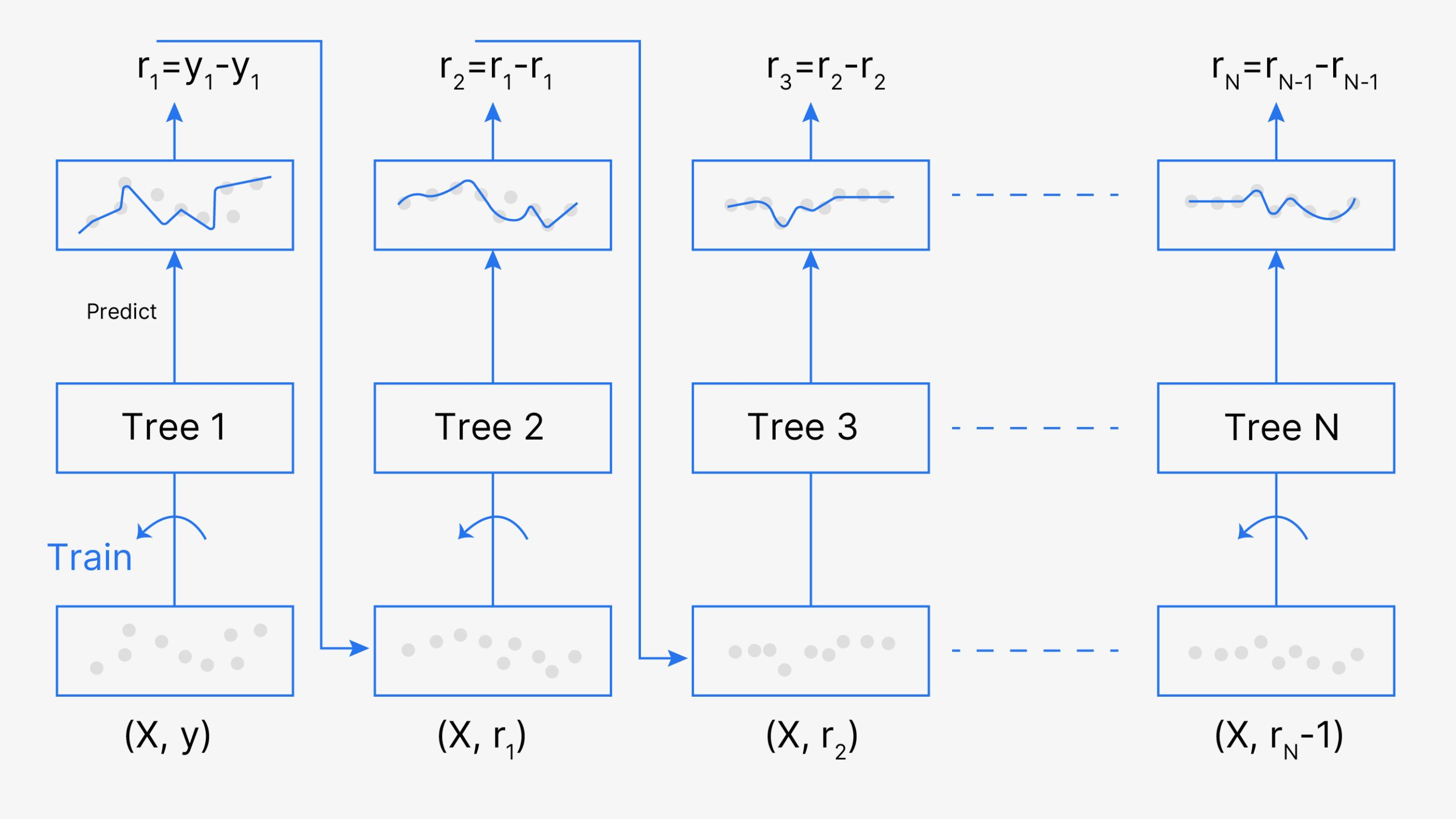

Gradient Boosting

Definition

The gradient boosting is a type of Forward Stagewise Additive Modeling that uses gradients to calculate the residuals.

Algorithm

Link to original

- Initialize the model

- Repeat for :

- Calculate the residuals for each data point where is a Loss Function

- Fit weak learners to the residuals

- Calculate a learning rate

- Update model using the fitted weak learners

- Output the final model

Forward Stagewise Additive Modeling

Definition

The forward stagewise additive modeling sequentially adds new basis functions to the model of the previous step without adjusting the parameters and coefficients of those that have already been added.

Algorithm

Link to original

- Initialize

- For

- Compute

- Set where is a basis function and is a Loss Function

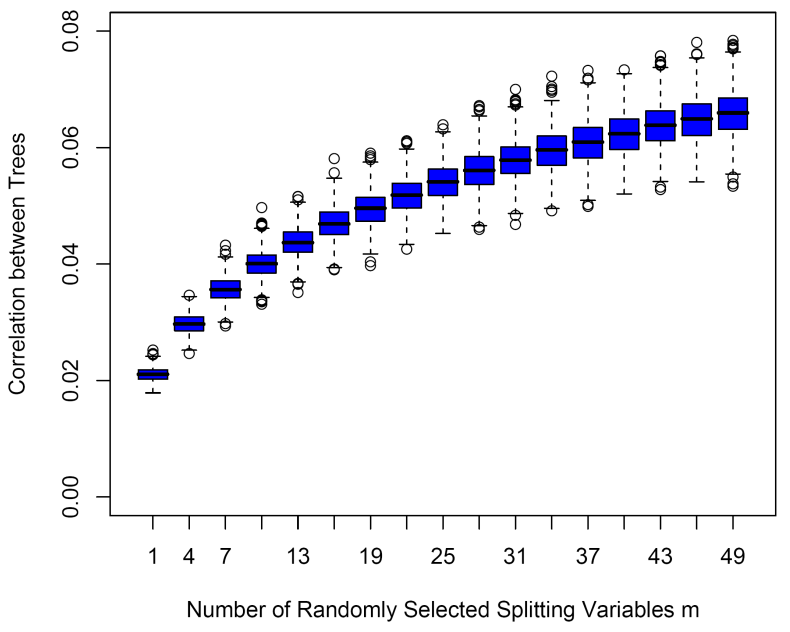

Random Forest

Definition

Random forest constructs a multitude of de-correlated decision trees at training time and make a prediction through Bagging.

Algorithm

- For :

- Draw a size bootstrap sample from training data.

- Grow a random-forest tree to the bootstrapped data, by recursively repeating the following steps for each terminal node of the tree, until the minimum node size is reached.

- Select variables at random.

- Pick the best variable and split point among the selected variables.

- Split the node into two children nodes.

- Output the ensemble of trees to make a prediction at a new point

- Regression:

- Classification: where is the class prediction of the th random-forest tree.

Facts

Link to originalSelection of hyperparameter is recommended as

- For classification:

- For regression:

Neural Networks

Projection Pursuit Regression

Definition

where are unspecified function

Facts

Link to originalPPR is a single-layered Neural Network where each neuron uses a different activation function and has no bias term.

Activation Function

Definition

The activation function of a node in an artificial neural network is a function that calculates the output of the node based on the linear combination of its inputs. It is used to add a non-linearity to the model.

Examples

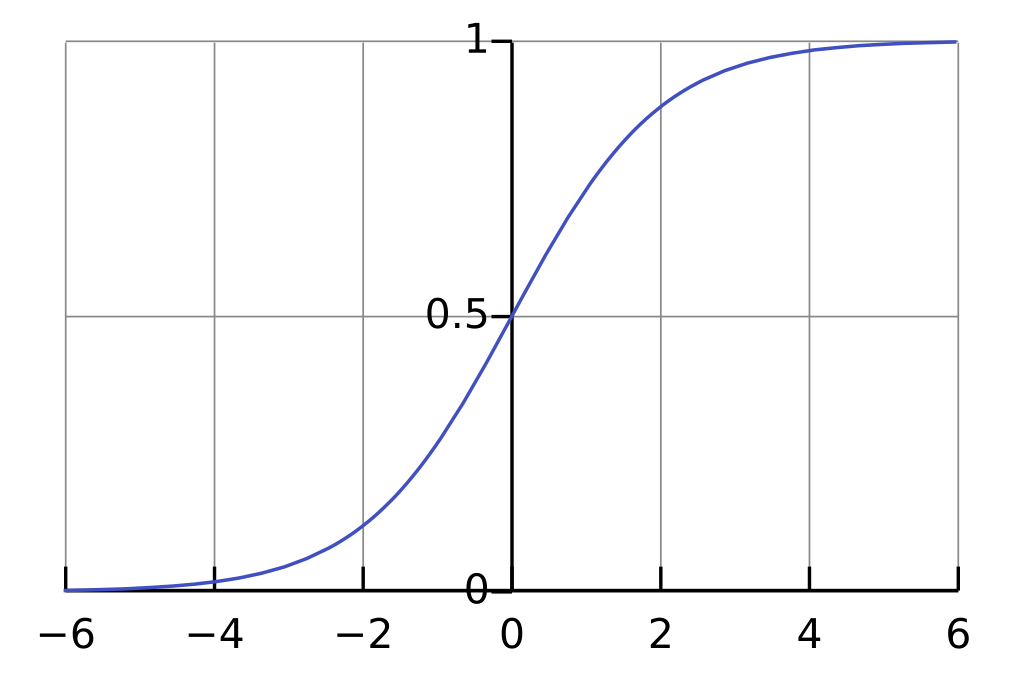

Logistic Function

Definition

The logistic function is inverse function of Logit.

Facts

Link to originalSigmoid activation function is vulnerable to vanishing gradient problem. The image of the derivative of the sigmoid function is . For this reason, after passing node with sigmoid Activation Function, the gradient is decreased

Also, with the sigmoid Activation Function, if all the inputs are positive, then all the gradients also positive.

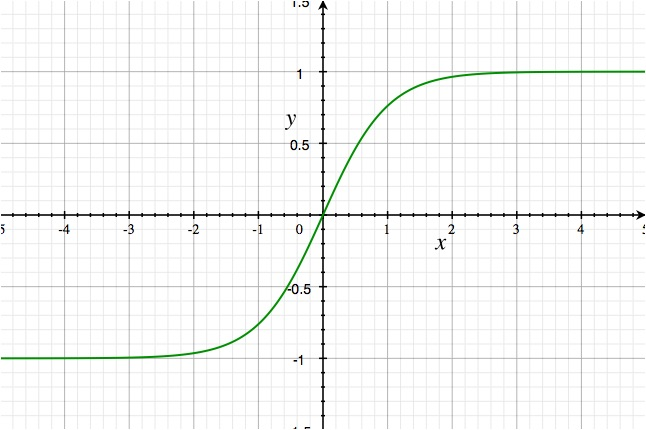

Hyperbolic Tangent Function

Definition

Link to original

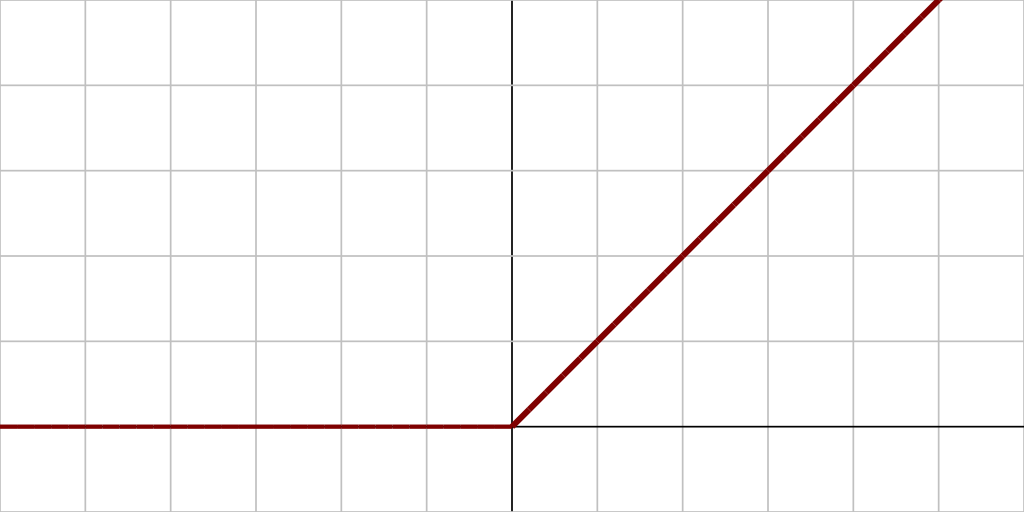

Rectified Linear Unit Function

Definition

Facts

Link to originalIf an initial value is negative, it is never updated.

ReLU6

Definition

Link to original

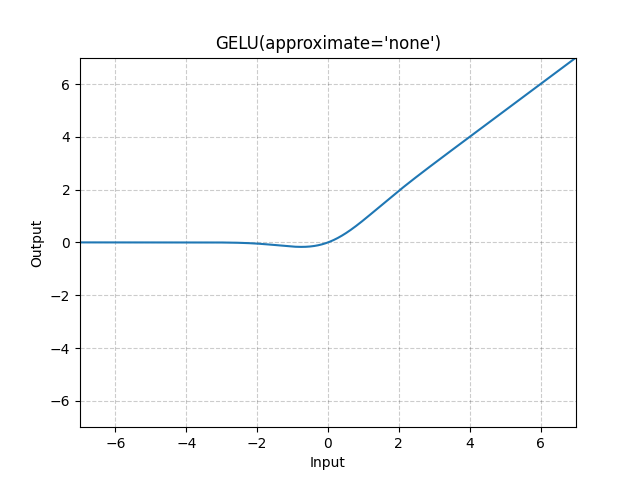

Gaussian-Error Linear Unit

Definition

GELU is a smooth approximation of ReLU.

where is the CDF of the standard normal distribution.

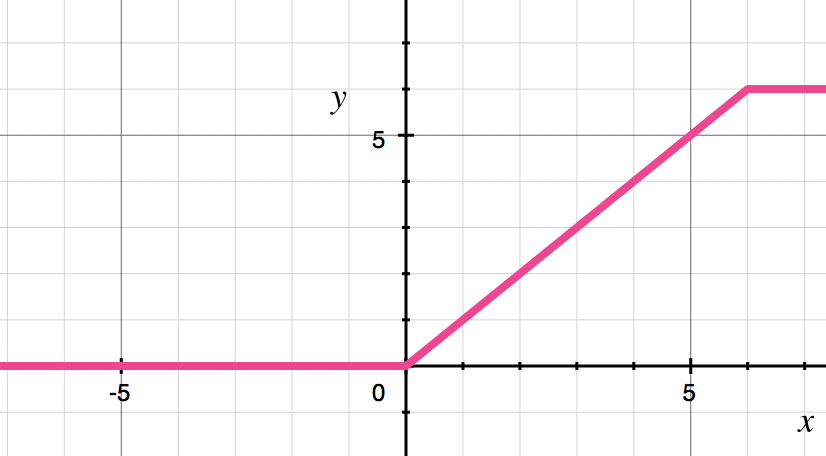

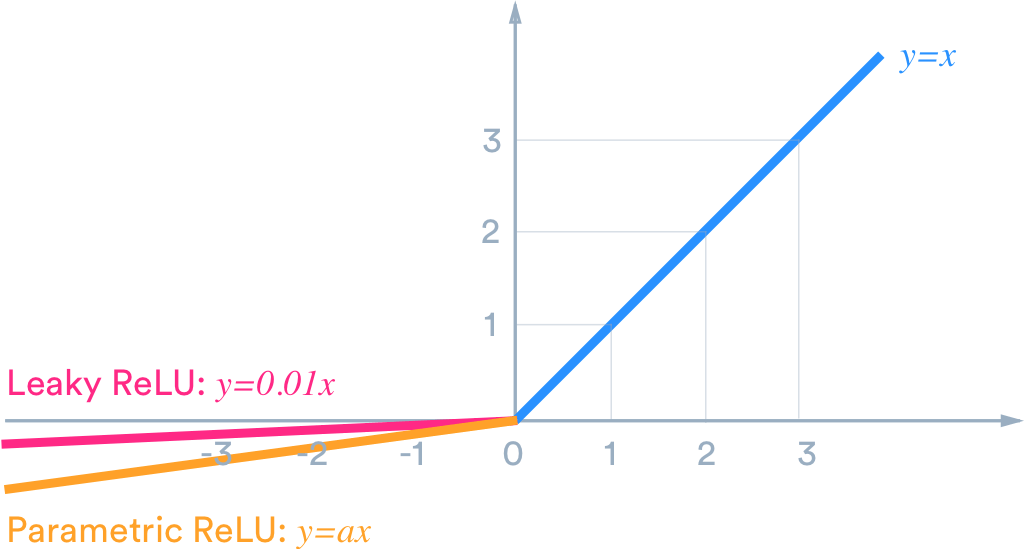

Link to originalParametric ReLU

Definition

where is a hyperparameter

Facts

Link to originalIf , it is called a Leaky ReLU

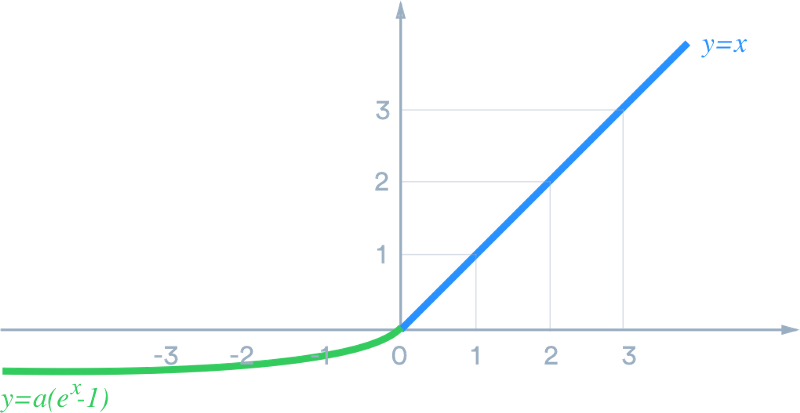

Exponential Linear Unit

Definition

where is a hyperparameter

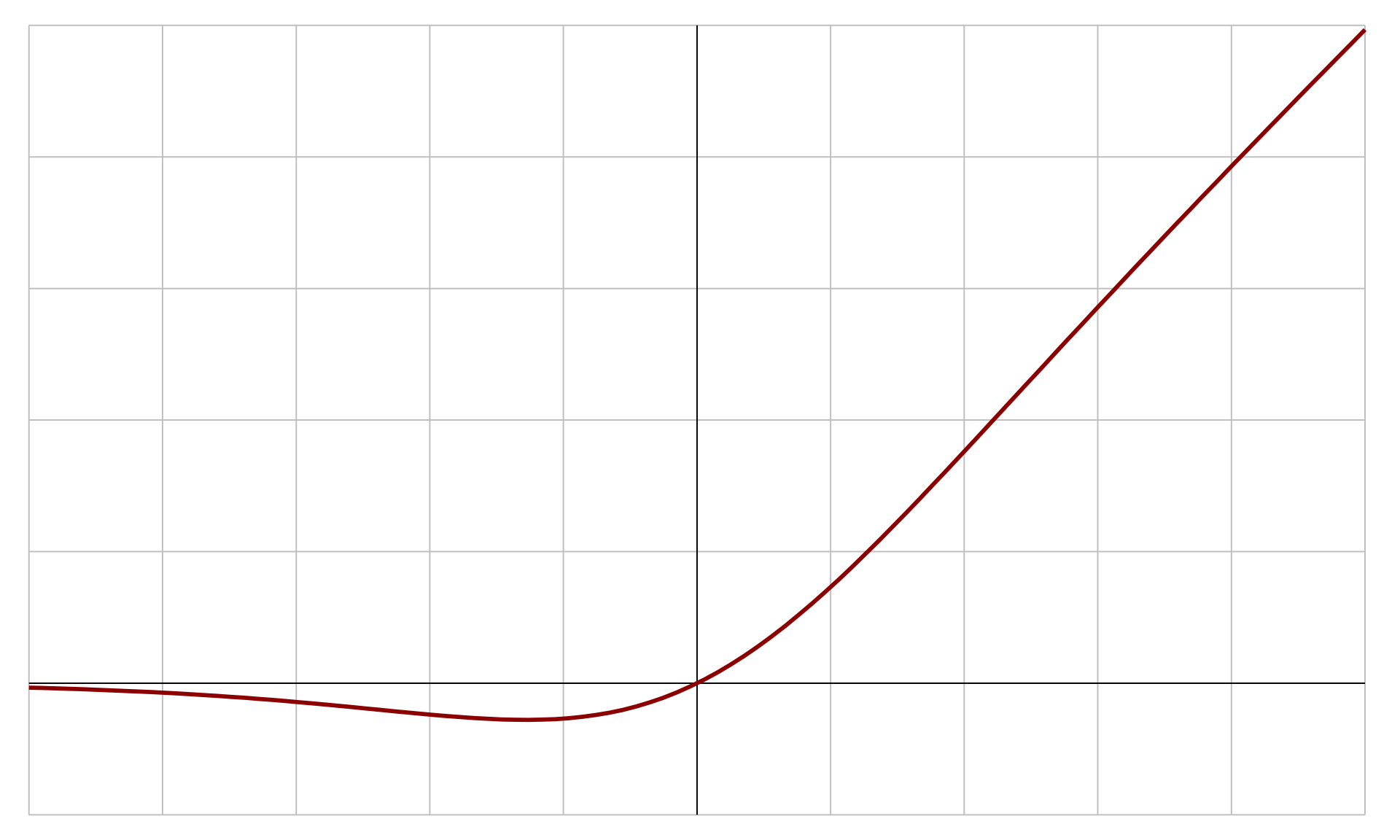

Link to originalLink to originalSwish Function

Definition

where is Sigmoid Function, and is a hyperparameter

When , the function is called a sigmoid liniear unit (SiLU).

Link to original

Sum of Squared Errors Loss

Definition

Suppose the number of data is , then the sum of squared error loss (SSE) is defined as the sum of Squared Error Loss of all data.

Link to original

Cross-Entropy Loss

Definition

Suppose the number of data is and the number of classes is , then the cross entropy loss is defined as

Link to original

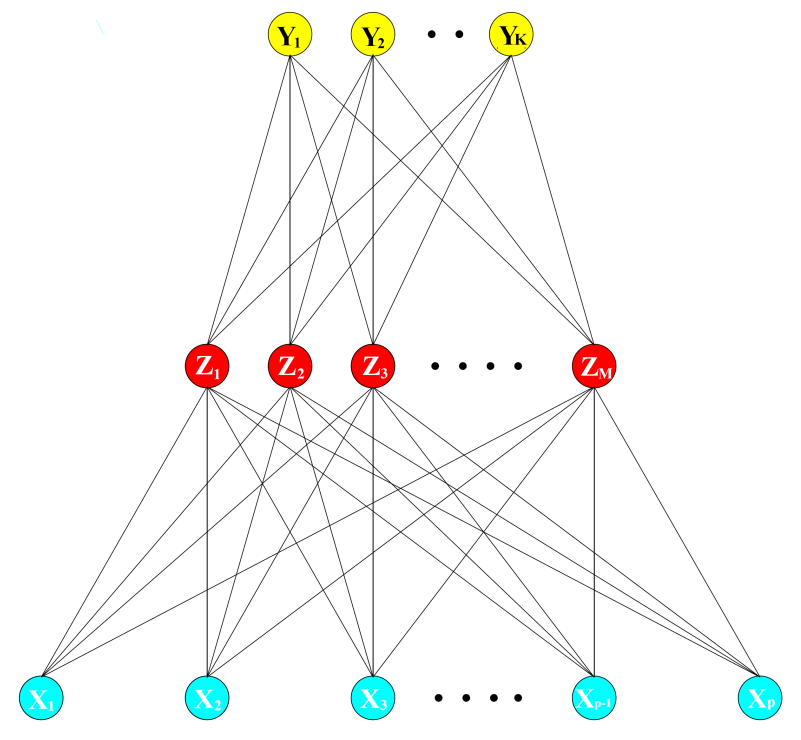

Neural Network

Definition

Neural network can be thought as a non-linear generalization of linear model.

The derived features are constructed by an Activation Function and linear combinations of the inputs. where is an Activation Function

Output nodes are the linear combinations of And the output is modeled by a function of a linear combinations of where is called an output function.

Facts

The output function varies by the problem. For regression is Identity Function, and for -class classification Softmax Function is used as the .

For regression problem, Sum of Squared Errors Loss is used as Loss Function. For classification problem, we use Cross-Entropy Loss

With the softmax activation function and the Cross-Entropy Loss, the neural network model is exactly a linear Logistic Regression model in the hidden units.

The parameters of a neural network are estimated by Backpropagation.

Link to originalNeural network is especially effective in problem with a high signal-to-noise ratio.

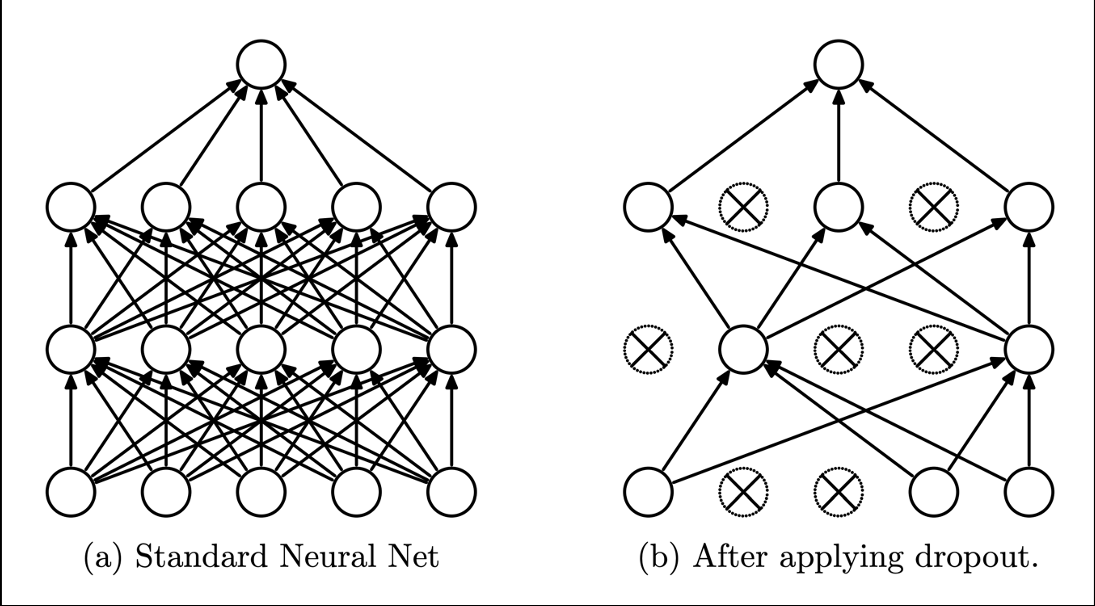

Dropout

Definition

Dropout is a regularization technique used for Neural Network. Dropout randomly dropping out or omitting units during training process of a Neural Network.

Link to original

Clustering Analysis

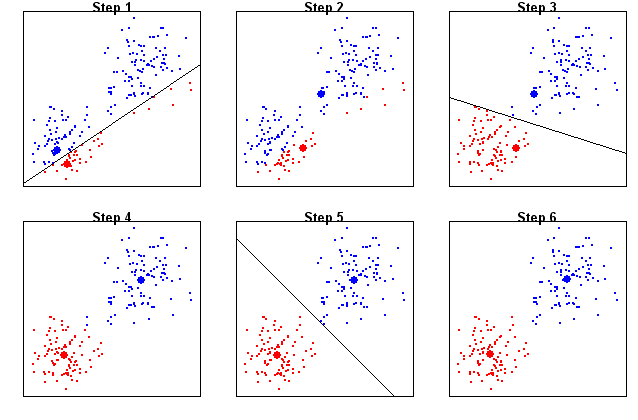

K-Means Clustering

Definition

The k-means clustering algorithm solves the following optimization problem where is the number of clusters, a Partition is a set of clusters, and The k-means clustering algorithm aims to partition observations into clusters in which each observation belongs to the cluster with the nearest mean.

Algorithm

Initialize .

Assignment step: Assign each observation to the cluster with the nearest mean

where each is assigned to exactly one

Update step: Recalculate means for observations assigned to each cluster.

Iterate step 2 and 3 until the within cluster variation converges.

Facts

The k-means clustering uses spherical metric (Euclidean distance) to group data, so that it is useful only when clusters are convex sets.

Link to original

Self-Organizing Map

Definition

A self-organizing map (SOM) is a clustering method that produces a low-dimensional representation of a higher-dimensional data set while preserving the Topological Manifold structure of the data.

The algorithm fits a grid to high-dimensional data and assigns the data to the fitted nodes (prototypes) of the grid.

Link to original

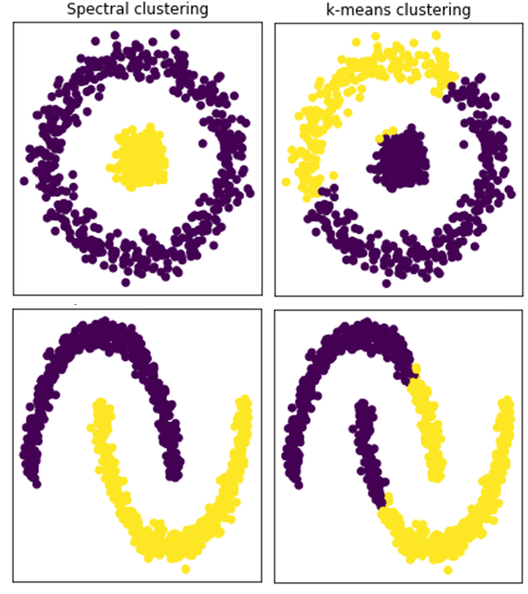

Spectral Clustering

Definition

Spectral clustering technique make use of spectrum of the similarity matrix of the data to perform dimensionality reduction before clustering.

Algorithms

Apply Laplacian Eigenmap to the given data .

Form an sub matrix using the first -columns of the embedded matrix , and normalize each row to norm .

Apply clustering algorithm (e.g. K-Means Clustering) to , where is the -th row of .

Mathematical Background

Consider a cluster assignment vector for each data point. Then, clustering observations is corresponding to estimating ‘s The entries of the Adjacency Matrix represents the similarity between points and .

Under the assumption that the close data points (large ) have a similar label (), the optimization problem is set up that minimizing the difference between assignments for similar points. where the constraint is imposed to avoid the trivial solution .

In a matrix notation, the problem is

When , is large and when , is small. So, works as the weight for each pair in the optimization.

The Lagrangian function is defined as And the solution of the problem is given by an Eigenvalue problem. The solution is an matrix of eigenvectors sorted in ascending by their corresponding eigenvalues.

Link to original

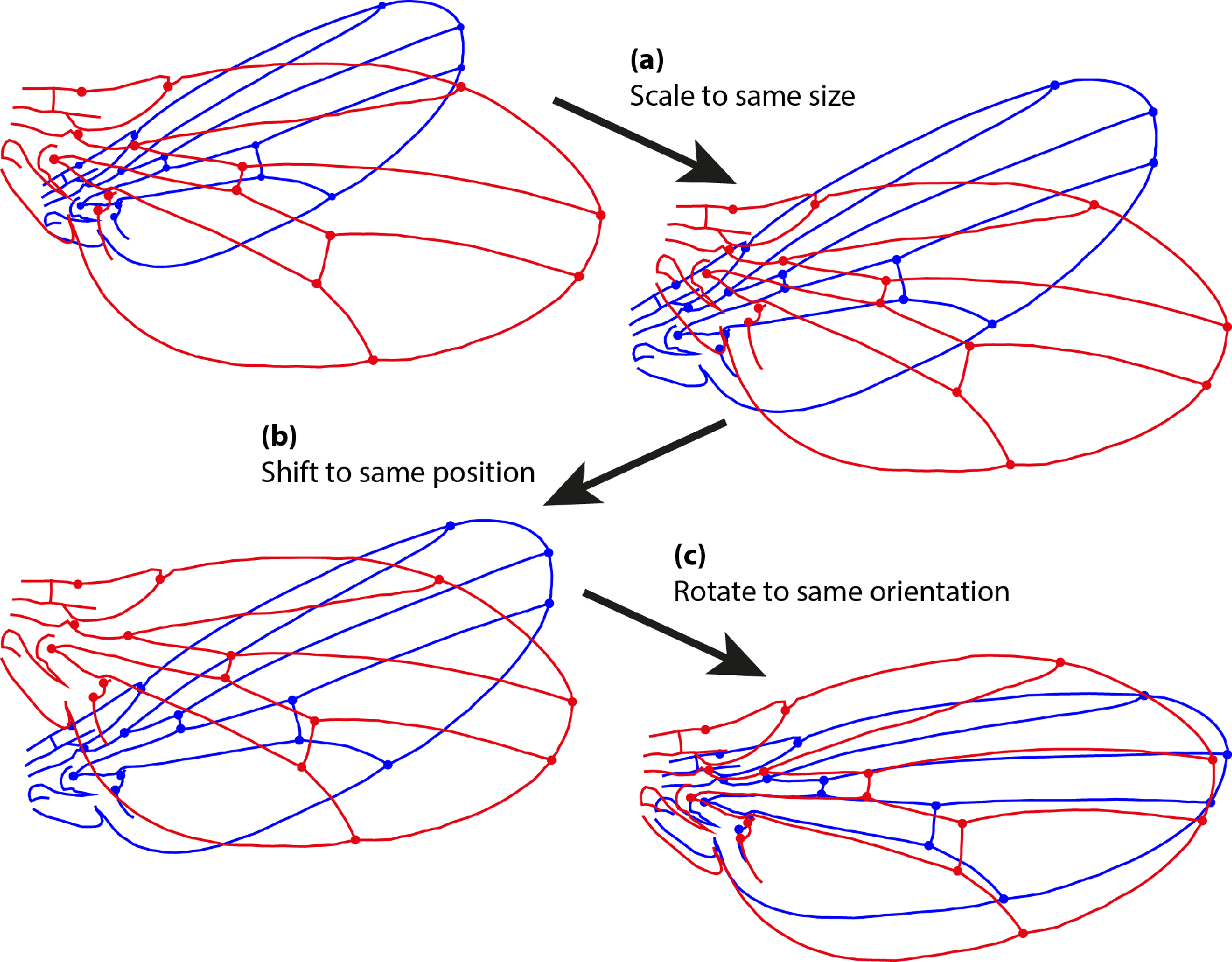

Procrustes Analysis

Definition

Procrustes Transformation without Scaling

Consider two matrices and a target . What we want is to find a Procrustes transformation of whose result is closest to ,

The optimization problem is defined as where is a Orthonormal Matrix (perform rotation and reflection), act as location parameter, and is a Frobenius Norm.

Let and be the columnwise mean vectors of the matrices, and and be the demeaned matrices. Consider the SVD . Then, the solution of the optimization problem is given by and the minimal distance is referred to as the Procrustes distance.

Procrustes Transformation with Scaling

Consider demeaned matrices and . The Procrustes distance with scaling is obtained from more general optimization problem. where is a positive scalar.

The solution for is as before (), with

Procrustes Average

Consider demeaned and scaled matrices . Procrustes average problem finds the shape closest in average squared Procrustes distance to all the given shapes. This is solved by a simple algorithm

Link to original

- Initialize

- Solve the Procrustes rotation problems with fixed, yielding

- Let

- Iterate step 1 and 2 until it converges.

Kernel Principal Component Analysis

Definition

Consider an demeaned matrix By SVD , where are orthonormal matrices and is a Diagonal Matrix. Then, By PCA . For a linear kernel , . is an Orthonormal Matrix, The kernel principal components are given by solution of the optimization problem. where

Link to original