Definition

Backpropagation is a method for computing the gradients used to train neural networks. The gradient of a loss function with respect to the weights is computed iteratively, applying the chain rule layer by layer from the last layer back to the input.

Without backpropagation, we would need to compute the gradient of each layer's weights independently, rebuilding the full chain of derivatives from the loss down to that layer every time. Backpropagation instead reuses the gradients already computed for the later layers (those closer to the output), which avoids these redundant calculations. Computationally, the gradient calculations within the same layer are also independent of one another, so they can be parallelized.
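
To make the reuse concrete, here is a minimal sketch, assuming a toy network that is just a chain of scalar layers h_i = tanh(w_i * h_(i-1)), with the loss taken to be the final activation. The function names `forward`, `grads_backprop`, and `grads_independent` are hypothetical. Both gradient functions return the same values, but the backpropagation version carries one upstream gradient down the chain in a single sweep (linear in the number of layers), while the independent version rebuilds the chain of derivatives for every layer (quadratic).

```python
import math

def forward(weights, x):
    """Chain of scalar layers h_i = tanh(w_i * h_(i-1)); hs[0] = x.
    All activations are kept so the backward pass can reuse them."""
    hs = [x]
    for w in weights:
        hs.append(math.tanh(w * hs[-1]))
    return hs

def grads_backprop(weights, hs):
    """One backward sweep: the upstream gradient is reused from layer to layer."""
    n = len(weights)
    grads = [0.0] * n
    upstream = 1.0  # dL/dh_n, with the loss assumed to be L = h_n
    for i in reversed(range(n)):
        local = 1.0 - hs[i + 1] ** 2               # derivative of tanh at this layer
        grads[i] = upstream * local * hs[i]        # dL/dw_i
        upstream = upstream * local * weights[i]   # dL/dh_(i-1), passed to the layer below
    return grads

def grads_independent(weights, hs):
    """Naive approach: for each layer, recompute the chain from the loss down to it."""
    n = len(weights)
    grads = []
    for i in range(n):
        g = 1.0
        for j in range(n - 1, i, -1):  # every layer above i, recomputed each time
            g *= (1.0 - hs[j + 1] ** 2) * weights[j]
        grads.append(g * (1.0 - hs[i + 1] ** 2) * hs[i])
    return grads

weights = [0.5, -1.2, 0.8, 1.5]
hs = forward(weights, x=0.3)
bp = grads_backprop(weights, hs)
naive = grads_independent(weights, hs)
print(all(math.isclose(a, b) for a, b in zip(bp, naive)))
```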

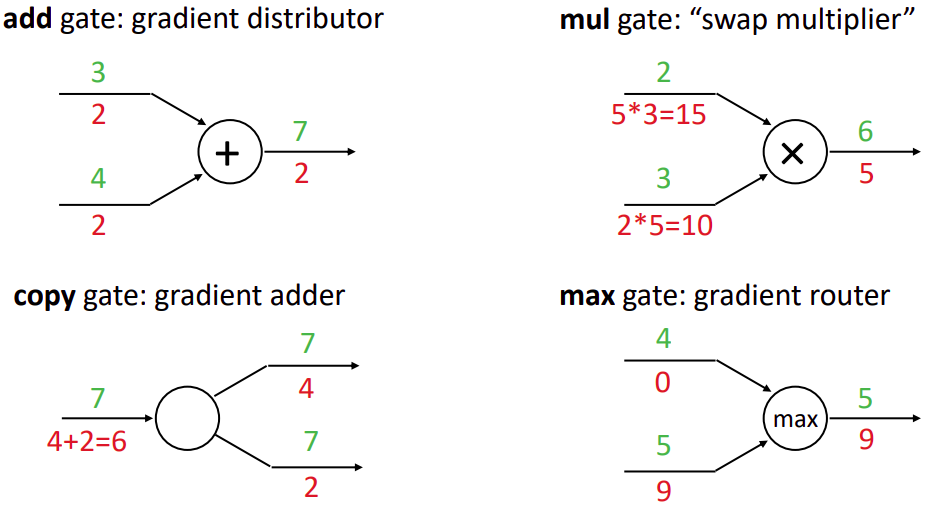

Patterns in Gradient Flow

Algorithm

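- Make a prediction from the input data and calculate the loss, storing the intermediate results of each layer (feedforward step)

- Compute the gradients of the loss with respect to the weights by applying the chain rule to the results obtained in the feedforward step, starting from the last layer (backpropagation)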
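
The two steps can be sketched for a tiny network, assuming one hidden unit with a tanh activation, y_hat = w2 * tanh(w1 * x), and a squared-error loss L = 0.5 * (y_hat - y)^2. The architecture, the values, and the function names are illustrative choices, not taken from the notes. The feedforward step caches intermediate values, the backpropagation step reuses them, and a central finite-difference check is included only to confirm the gradients.

```python
import math

def feedforward(w1, w2, x, y):
    """Feedforward step: make a prediction, compute the loss, cache intermediates."""
    z = w1 * x
    h = math.tanh(z)
    y_hat = w2 * h
    loss = 0.5 * (y_hat - y) ** 2
    return loss, (x, h, y_hat, y)

def backpropagate(w2, cache):
    """Backpropagation step: chain rule from the loss back toward the input."""
    x, h, y_hat, y = cache
    d_y_hat = y_hat - y          # dL/dy_hat for L = 0.5 * (y_hat - y)^2
    d_w2 = d_y_hat * h           # y_hat = w2 * h  ->  dy_hat/dw2 = h
    d_h = d_y_hat * w2           # dy_hat/dh = w2
    d_z = d_h * (1.0 - h ** 2)   # h = tanh(z)  ->  dh/dz = 1 - h^2
    d_w1 = d_z * x               # z = w1 * x   ->  dz/dw1 = x
    return d_w1, d_w2

def numerical_grad(f, w, eps=1e-6):
    """Central finite difference, used only as a sanity check."""
    return (f(w + eps) - f(w - eps)) / (2 * eps)

w1, w2, x, y = 0.7, -1.3, 0.5, 2.0
loss, cache = feedforward(w1, w2, x, y)
d_w1, d_w2 = backpropagate(w2, cache)
num_w1 = numerical_grad(lambda w: feedforward(w, w2, x, y)[0], w1)
num_w2 = numerical_grad(lambda w: feedforward(w1, w, x, y)[0], w2)
print(d_w1, num_w1)
print(d_w2, num_w2)
```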

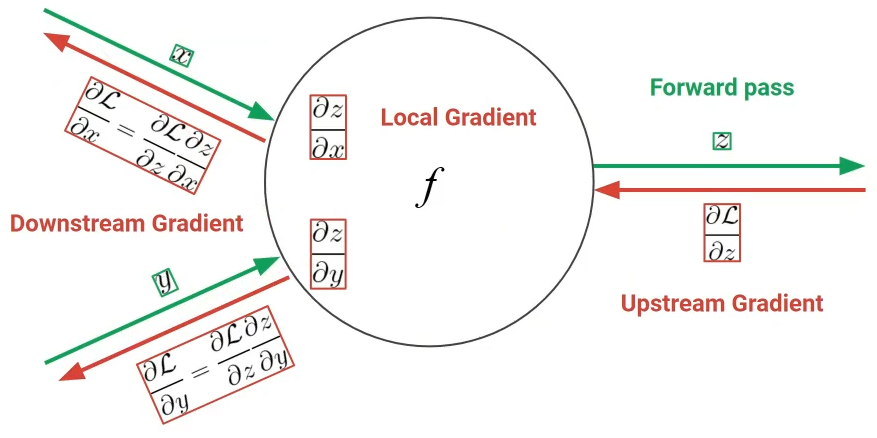

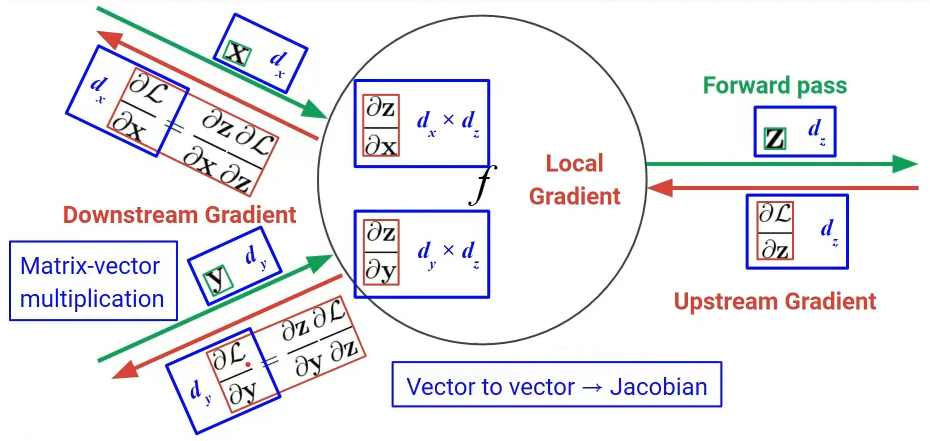

The downstream gradient of a node is computed as the product of the upstream gradient and the node's local gradient.
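
As a sketch of the rule downstream = upstream x local, here it is written out for a few common scalar nodes (add, multiply, max, copy); these gates and the function names are assumed examples rather than taken from the figures. The add gate passes the upstream gradient unchanged to both inputs, the multiply gate scales it by the other input, and the max gate routes it to the larger input only (ties are sent to the first input here, which is an arbitrary convention). The copy gate is the one case that sums: when a value feeds two branches, the gradients arriving from both branches are added.

```python
def add_backward(upstream):
    """z = x + y: both local gradients are 1, so upstream passes through to both inputs."""
    return upstream * 1.0, upstream * 1.0

def mul_backward(x, y, upstream):
    """z = x * y: dz/dx = y and dz/dy = x, so each input gets the other input times upstream."""
    return upstream * y, upstream * x

def max_backward(x, y, upstream):
    """z = max(x, y): local gradient is 1 for the larger input and 0 for the other."""
    return (upstream, 0.0) if x >= y else (0.0, upstream)

def copy_backward(upstream_a, upstream_b):
    """x used by two branches: the upstream gradients from both branches are summed."""
    return upstream_a + upstream_b

print(add_backward(2.0))               # (2.0, 2.0)
print(mul_backward(3.0, -4.0, 2.0))    # (-8.0, 6.0)
print(max_backward(3.0, -4.0, 2.0))    # (2.0, 0.0)
print(copy_backward(2.0, 5.0))         # 7.0
```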

Scalar Case
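
In the scalar case, with x and y = f(x) both scalars and a scalar loss L, the rule above is the ordinary one-variable chain rule. A short worked instance, assuming y = x^2 at x = 3 and an upstream gradient of 2 (values chosen only for illustration):

$$
\frac{\partial L}{\partial x}
= \underbrace{\frac{\partial L}{\partial y}}_{\text{upstream}}
\;\underbrace{\frac{\partial y}{\partial x}}_{\text{local}},
\qquad
y = x^2,\; x = 3,\; \frac{\partial L}{\partial y} = 2
\;\Rightarrow\;
\frac{\partial L}{\partial x} = 2 \cdot (2 \cdot 3) = 12 .
$$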

Vector Case

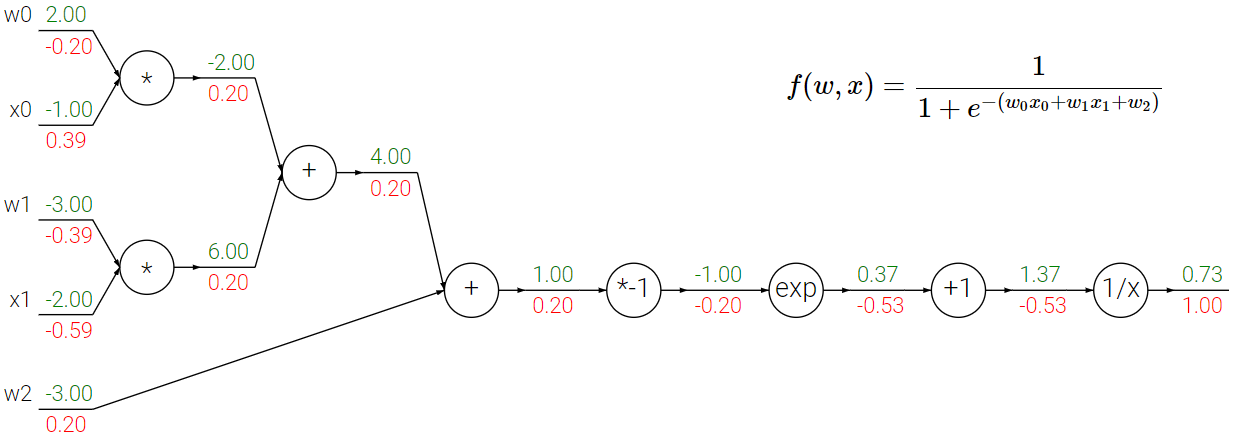
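
A minimal sketch of the vector case, assuming y = ReLU(x) as the example node (the choice of ReLU and the function names are illustrative). With x in R^N and y in R^M, the local gradient is the M x N Jacobian J with J[i][j] = dy_i/dx_j, and the downstream gradient is dL/dx = J^T dL/dy. For an elementwise operation J is diagonal, so the same result can be computed as an elementwise product without ever building J.

```python
def relu_jacobian(x):
    """Full N x N Jacobian of y = relu(x): diagonal, 1 where x_i > 0, 0 elsewhere."""
    n = len(x)
    return [[1.0 if (i == j and x[i] > 0) else 0.0 for j in range(n)] for i in range(n)]

def vjp(J, upstream):
    """Generic vector case: dL/dx = J^T dL/dy, where J[i][j] = dy_i/dx_j."""
    return [sum(J[i][j] * upstream[i] for i in range(len(J))) for j in range(len(J[0]))]

def relu_backward(x, upstream):
    """Same result without forming J: the diagonal Jacobian acts as an elementwise mask."""
    return [g if v > 0 else 0.0 for v, g in zip(x, upstream)]

x = [1.0, -2.0, 3.0, -4.0]
upstream = [4.0, -1.0, 5.0, 9.0]          # dL/dy, assumed given by the layer above
print(vjp(relu_jacobian(x), upstream))    # [4.0, 0.0, 5.0, 0.0]
print(relu_backward(x, upstream))         # [4.0, 0.0, 5.0, 0.0]
```

Forming J costs O(N^2) memory for an elementwise op, which is why implementations usually compute the vector-Jacobian product directly.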

Examples
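
One small worked example, assuming the function f(x, y, z) = (x + y) * z with x = -2, y = 5, z = -4 (chosen for illustration; not taken from the figures). The graph has an add node q = x + y followed by a multiply node f = q * z, and each backward step multiplies the upstream gradient by the node's local gradient.

```python
def forward_backward(x, y, z):
    # Feedforward: q = x + y, then f = q * z
    q = x + y
    f = q * z
    # Backward: start from df/df = 1 and apply downstream = upstream * local
    df_df = 1.0
    df_dq = df_df * z    # multiply node: local gradient of f w.r.t. q is z
    df_dz = df_df * q    # multiply node: local gradient of f w.r.t. z is q
    df_dx = df_dq * 1.0  # add node: local gradient is 1
    df_dy = df_dq * 1.0  # add node: local gradient is 1
    return f, (df_dx, df_dy, df_dz)

f, grads = forward_backward(-2.0, 5.0, -4.0)
print(f, grads)  # -12.0 (-4.0, -4.0, 3.0)
```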