Definition

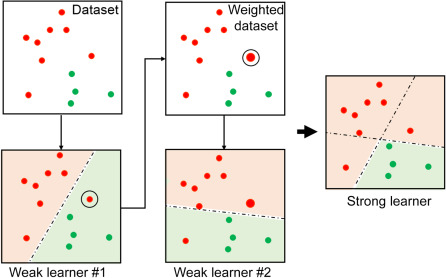

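AdaBoost (Adaptive Boosting) builds a classifier as a weighted sum of weak learners, reweighting the training points each round so that later learners focus on the points misclassified so far. Because hard-to-fit points keep gaining weight, it is sensitive to outliers and label noise.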

Algorithm

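Consider a 2-class problem with labels $y_i \in \{-1, +1\}$ and training data $(x_1, y_1), \dots, (x_N, y_N)$.

- Initialize the weights $w_i = 1/N$ for $i = 1, \dots, N$

- Repeat for $m = 1, \dots, M$:

  - Fit a weak classifier that minimizes the weighted error rate and call the fitted classifier $G_m(x)$, and its corresponding error rate $\mathrm{err}_m = \sum_{i=1}^{N} w_i \, I(y_i \neq G_m(x_i)) \, / \sum_{i=1}^{N} w_i$

  - Compute $\alpha_m = \log\big((1 - \mathrm{err}_m) / \mathrm{err}_m\big)$

  - Update the weights by $w_i \leftarrow w_i \exp\big(\alpha_m \, I(y_i \neq G_m(x_i))\big)$

- Output the classifier $G(x) = \mathrm{sign}\big(\sum_{m=1}^{M} \alpha_m G_m(x)\big)$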
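The steps above can be sketched in plain Python. This is a minimal illustration, not a reference implementation. It rests on several assumptions not stated in the text: labels are coded as -1 and +1, the weak classifier is a one-feature threshold rule (a decision stump), the coefficient is computed as log((1 - err) / err), and the weights are renormalized after every round. The function names `fit_stump`, `stump_predict`, `adaboost_fit`, and `adaboost_predict` are hypothetical.

```python
import math


def fit_stump(X, y, w):
    """Search all (feature, threshold, polarity) stumps and return the one
    with the smallest weighted error as (error, feature, threshold, polarity).
    A stump predicts `polarity` if x[feature] <= threshold, else -polarity."""
    best = None
    for j in range(len(X[0])):
        for t in sorted({x[j] for x in X}):
            for s in (1, -1):
                err = sum(wi for xi, yi, wi in zip(X, y, w)
                          if (s if xi[j] <= t else -s) != yi)
                if best is None or err < best[0]:
                    best = (err, j, t, s)
    return best


def stump_predict(stump, x):
    _, j, t, s = stump
    return s if x[j] <= t else -s


def adaboost_fit(X, y, n_rounds=10):
    """Return a list of (alpha, stump) pairs. Assumes labels in {-1, +1}."""
    n = len(X)
    w = [1.0 / n] * n                      # initialize the weights uniformly
    ensemble = []
    for _ in range(n_rounds):
        stump = fit_stump(X, y, w)         # weak classifier for this round
        err = stump[0] / sum(w)            # weighted error rate
        err = min(max(err, 1e-10), 1 - 1e-10)  # guard against log(0)
        alpha = math.log((1 - err) / err)  # coefficient of this classifier
        ensemble.append((alpha, stump))
        # Increase the weight of every misclassified point by exp(alpha).
        w = [wi * math.exp(alpha if stump_predict(stump, xi) != yi else 0.0)
             for xi, yi, wi in zip(X, y, w)]
        total = sum(w)
        w = [wi / total for wi in w]       # renormalize (an added convenience)
    return ensemble


def adaboost_predict(ensemble, x):
    """Sign of the alpha-weighted vote of the weak classifiers."""
    score = sum(alpha * stump_predict(stump, x) for alpha, stump in ensemble)
    return 1 if score >= 0 else -1


# Toy 1-D data that no single stump can separate.
X = [[0], [1], [2], [3], [4], [5]]
y = [1, 1, -1, -1, 1, 1]
model = adaboost_fit(X, y, n_rounds=3)
predictions = [adaboost_predict(model, x) for x in X]
```

Renormalizing the weights does not change which stump is chosen, since the error rate is already divided by the weight total; it only keeps the numbers from growing across rounds.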

Facts

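AdaBoost is equivalent to Gradient Boosting with the exponential loss $L(y, f(x)) = \exp(-y f(x))$, where $f(x)$ is the weighted sum of weak learners and $y \in \{-1, +1\}$.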
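
A brief sketch of why the exponential loss leads to the AdaBoost steps, using the standard stagewise argument. The symbols $f_{m-1}$ (the ensemble built so far), $\beta$ (the coefficient of the new weak classifier $G$), and $w_i^{(m)}$ are notation introduced here, and labels $y_i \in \{-1, +1\}$ are assumed. Adding one weak classifier to the current ensemble gives the objective

$$
\sum_{i=1}^{N} \exp\big(-y_i \,(f_{m-1}(x_i) + \beta\, G(x_i))\big)
= \sum_{i=1}^{N} w_i^{(m)} \exp\big(-\beta\, y_i\, G(x_i)\big),
\qquad w_i^{(m)} = \exp\big(-y_i f_{m-1}(x_i)\big).
$$

For any fixed $\beta > 0$, the minimizing $G_m$ is the classifier with the smallest weighted error rate $\mathrm{err}_m$, and minimizing over $\beta$ then gives $\beta_m = \tfrac{1}{2} \log\big((1 - \mathrm{err}_m)/\mathrm{err}_m\big)$. Since $-y_i G_m(x_i) = 2\, I(y_i \neq G_m(x_i)) - 1$, the weights for the next round are

$$
w_i^{(m+1)} = w_i^{(m)} \exp\big(-\beta_m\, y_i\, G_m(x_i)\big)
= w_i^{(m)} \exp\big(2\beta_m\, I(y_i \neq G_m(x_i))\big)\, e^{-\beta_m}.
$$

The factor $e^{-\beta_m}$ is the same for every point, so it does not affect which classifier is fitted next. With $\alpha_m = 2\beta_m$, this is the AdaBoost reweighting of misclassified points.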