Definition

Hard-Margin Support Vector Machine

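Assume that we have a learning set $\mathcal{L} = \{(\mathbf{x}_i, y_i)\}_{i=1}^{n}$, where $\mathbf{x}_i \in \mathbb{R}^{d}$ and $y_i \in \{-1, +1\}$. Suppose the two classes can be separated without error by a Hyperplane $\{\mathbf{x} : \mathbf{w}^\top \mathbf{x} + b = 0\}$, that is, $y_i(\mathbf{w}^\top \mathbf{x}_i + b) > 0$ for all $i$. Such a hyperplane is called a separating hyperplane (SH).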

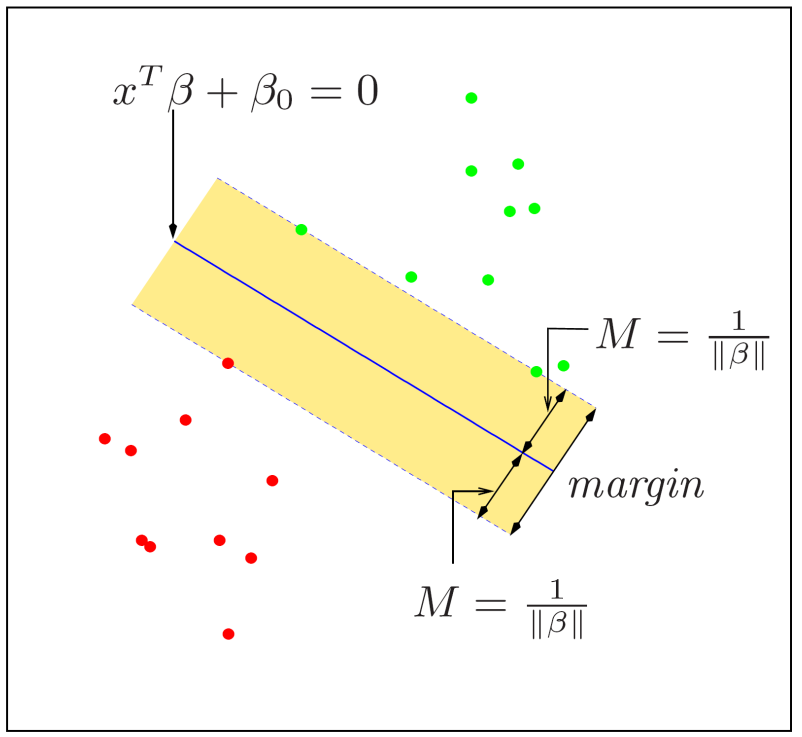

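Define $d_{-}$ as the shortest distance from the SH to the closest point of class $-1$, $d_{+}$ as the shortest distance to the closest point of class $+1$, and the margin of the SH as $d = d_{-} + d_{+}$ (the distance from a point $\mathbf{x}$ to the SH is $|\mathbf{w}^\top \mathbf{x} + b| / \|\mathbf{w}\|$). An SH that maximizes its margin $d$ is called an optimal separating hyperplane (OSH). To find the OSH, impose the linear constraints
$$\mathbf{w}^\top \mathbf{x}_i + b \ge +1 \ \text{ if } y_i = +1, \qquad \mathbf{w}^\top \mathbf{x}_i + b \le -1 \ \text{ if } y_i = -1,$$
which combine into
$$y_i(\mathbf{w}^\top \mathbf{x}_i + b) \ge 1, \qquad i = 1, \dots, n.$$
The constant $1$ is arbitrary: rescaling $(\mathbf{w}, b)$ by any positive constant leaves the hyperplane unchanged, so the scale is fixed such that the closest points satisfy $|\mathbf{w}^\top \mathbf{x}_i + b| = 1$.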
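A minimal sketch of the margin definition, assuming a hypothetical 2-D toy data set and two hand-picked hyperplanes; the helpers `dot` and `margin` are illustrative names, not a library API. It measures $d_{-}$ and $d_{+}$ with the point-to-hyperplane distance and shows that rescaling $(\mathbf{w}, b)$ does not change the margin.

```python
import math

def dot(u, v):
    """Inner product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))

def margin(w, b, X, y):
    """Return (d_minus, d_plus, d_minus + d_plus) for the hyperplane w.x + b = 0.

    Raises ValueError if (w, b) does not separate the labelled points.
    """
    if any(yi * (dot(w, xi) + b) <= 0 for xi, yi in zip(X, y)):
        raise ValueError("hyperplane does not separate the data")
    norm_w = math.sqrt(dot(w, w))
    # Distance from each point to the hyperplane: |w.x + b| / ||w||.
    dist = [abs(dot(w, xi) + b) / norm_w for xi in X]
    d_plus = min(d for d, yi in zip(dist, y) if yi == 1)
    d_minus = min(d for d, yi in zip(dist, y) if yi == -1)
    return d_minus, d_plus, d_minus + d_plus

# Hypothetical toy data: two separable classes in the plane.
X = [(1.0, 1.0), (-1.0, -1.0), (2.0, 2.0), (-2.0, -1.0)]
y = [1, -1, 1, -1]

print(margin((1.0, 1.0), 0.0, X, y))  # diagonal hyperplane x1 + x2 = 0
print(margin((2.0, 2.0), 0.0, X, y))  # same hyperplane with rescaled (w, b)
print(margin((1.0, 0.0), 0.0, X, y))  # vertical hyperplane x1 = 0
```

The first two calls describe the same hyperplane and give the same margin, $2\sqrt{2} \approx 2.83$. The vertical hyperplane also separates the data but only has margin $2$, so it cannot be the OSH.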

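Let $H_{-1} = \{\mathbf{x} : \mathbf{w}^\top \mathbf{x} + b = -1\}$ and $H_{+1} = \{\mathbf{x} : \mathbf{w}^\top \mathbf{x} + b = +1\}$. Then the points lying on either $H_{-1}$ or $H_{+1}$ are called support vectors. If $\mathbf{x}_{-1} \in H_{-1}$ and $\mathbf{x}_{+1} \in H_{+1}$, then $\mathbf{w}^\top \mathbf{x}_{-1} + b = -1$ and $\mathbf{w}^\top \mathbf{x}_{+1} + b = +1$, so
$$d_{-} = d_{+} = \frac{1}{\|\mathbf{w}\|}, \qquad d = \frac{2}{\|\mathbf{w}\|}.$$
Maximizing the margin is therefore equivalent to minimizing $\tfrac{1}{2}\|\mathbf{w}\|^2$.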
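The canonical scaling can be checked numerically. This sketch assumes a hypothetical toy data set and a hand-chosen $(\mathbf{w}, b) = ((0.5, 0.5), 0)$ that is already in canonical form; `canonical_check` is an illustrative name. It verifies $y_i(\mathbf{w}^\top \mathbf{x}_i + b) \ge 1$, lists the points on $H_{\pm 1}$, and evaluates $2/\|\mathbf{w}\|$.

```python
import math

def dot(u, v):
    """Inner product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))

def canonical_check(w, b, X, y, tol=1e-9):
    """Return (feasible, support_vector_indices, margin) for a canonical (w, b).

    feasible: every point satisfies y_i (w.x_i + b) >= 1.
    support vectors: points on H_{-1} or H_{+1}, i.e. y_i (w.x_i + b) == 1.
    margin: 2 / ||w||.
    """
    fm = [yi * (dot(w, xi) + b) for xi, yi in zip(X, y)]
    feasible = all(m >= 1 - tol for m in fm)
    support = [i for i, m in enumerate(fm) if abs(m - 1) <= tol]
    return feasible, support, 2 / math.sqrt(dot(w, w))

# Hypothetical toy data and a hand-picked canonical hyperplane.
X = [(1.0, 1.0), (-1.0, -1.0), (2.0, 2.0), (-2.0, -1.0)]
y = [1, -1, 1, -1]
feasible, sv, m = canonical_check((0.5, 0.5), 0.0, X, y)
print(feasible, sv, m)
```

For this data, points 0 and 1 are the support vectors, and $2/\|\mathbf{w}\| = 2\sqrt{2}$ equals the distance between them.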

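Therefore, the OSH can be obtained by solving the Convex Optimization problem
$$\min_{\mathbf{w}, b} \ \frac{1}{2}\|\mathbf{w}\|^2 \quad \text{subject to} \quad y_i(\mathbf{w}^\top \mathbf{x}_i + b) \ge 1, \qquad i = 1, \dots, n.$$
It can be solved by the Method of Lagrange Multipliers with the primal Lagrangian
$$L_P(\mathbf{w}, b, \boldsymbol{\alpha}) = \frac{1}{2}\|\mathbf{w}\|^2 - \sum_{i=1}^{n} \alpha_i \left[ y_i(\mathbf{w}^\top \mathbf{x}_i + b) - 1 \right], \qquad \alpha_i \ge 0.$$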
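A small sketch of the primal Lagrangian, assuming the hypothetical toy data and multipliers below (`lagrangian_primal` is an illustrative name). At a feasible $(\mathbf{w}, b)$ every bracket $y_i(\mathbf{w}^\top \mathbf{x}_i + b) - 1$ is non-negative, so any $\alpha_i \ge 0$ can only push $L_P$ down from $\tfrac{1}{2}\|\mathbf{w}\|^2$; the penalty vanishes when $\alpha_i > 0$ only where the bracket is zero.

```python
def dot(u, v):
    """Inner product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))

def lagrangian_primal(w, b, alpha, X, y):
    """L_P(w, b, alpha) = 1/2 ||w||^2 - sum_i alpha_i [y_i (w.x_i + b) - 1]."""
    penalty = sum(a * (yi * (dot(w, xi) + b) - 1) for a, xi, yi in zip(alpha, X, y))
    return 0.5 * dot(w, w) - penalty

# Hypothetical toy data and a feasible (canonical) hyperplane for it.
X = [(1.0, 1.0), (-1.0, -1.0), (2.0, 2.0), (-2.0, -1.0)]
y = [1, -1, 1, -1]
w, b = (0.5, 0.5), 0.0

objective = 0.5 * dot(w, w)
# alpha > 0 only where the bracket is 0: L_P equals the primal objective.
lp_sv = lagrangian_primal(w, b, [0.25, 0.25, 0.0, 0.0], X, y)
# alpha > 0 on points with a positive bracket: L_P drops below the objective.
lp_all = lagrangian_primal(w, b, [1.0, 1.0, 1.0, 1.0], X, y)
print(objective, lp_sv, lp_all)
```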

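By Duality of the optimization problem, the dual optimization problem is
$$\max_{\boldsymbol{\alpha}} \ L_D(\boldsymbol{\alpha}) \quad \text{subject to} \quad \alpha_i \ge 0, \quad \sum_{i=1}^{n} \alpha_i y_i = 0,$$
where $L_D(\boldsymbol{\alpha}) = \min_{\mathbf{w}, b} L_P(\mathbf{w}, b, \boldsymbol{\alpha})$. Setting the partial derivatives to zero gives
$$\frac{\partial L_P}{\partial \mathbf{w}} = 0 \ \Rightarrow \ \mathbf{w} = \sum_{i=1}^{n} \alpha_i y_i \mathbf{x}_i, \qquad \frac{\partial L_P}{\partial b} = 0 \ \Rightarrow \ \sum_{i=1}^{n} \alpha_i y_i = 0.$$

Substituting these back into $L_P$, the dual Lagrangian function is
$$L_D(\boldsymbol{\alpha}) = \sum_{i=1}^{n} \alpha_i - \frac{1}{2} \sum_{i=1}^{n} \sum_{j=1}^{n} \alpha_i \alpha_j y_i y_j \mathbf{x}_i^\top \mathbf{x}_j.$$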
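The dual depends on the data only through the inner products $\mathbf{x}_i^\top \mathbf{x}_j$. This sketch (hypothetical toy data; `dual_objective` and `w_from_alpha` are illustrative names) evaluates $L_D(\boldsymbol{\alpha})$ for two multiplier vectors that both satisfy $\alpha_i \ge 0$ and $\sum_i \alpha_i y_i = 0$, and rebuilds $\mathbf{w}$ from the stationarity condition.

```python
def dot(u, v):
    """Inner product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))

def dual_objective(alpha, X, y):
    """L_D(alpha) = sum_i alpha_i - 1/2 sum_i sum_j alpha_i alpha_j y_i y_j x_i.x_j."""
    n = len(alpha)
    quad = sum(alpha[i] * alpha[j] * y[i] * y[j] * dot(X[i], X[j])
               for i in range(n) for j in range(n))
    return sum(alpha) - 0.5 * quad

def w_from_alpha(alpha, X, y):
    """Stationarity dL_P/dw = 0 gives w = sum_i alpha_i y_i x_i."""
    return [sum(a * yi * xi[k] for a, xi, yi in zip(alpha, X, y))
            for k in range(len(X[0]))]

# Hypothetical toy data.
X = [(1.0, 1.0), (-1.0, -1.0), (2.0, 2.0), (-2.0, -1.0)]
y = [1, -1, 1, -1]

alpha_a = [0.25, 0.25, 0.0, 0.0]
alpha_b = [0.2, 0.2, 0.0, 0.0]
print(dual_objective(alpha_a, X, y), w_from_alpha(alpha_a, X, y))
print(dual_objective(alpha_b, X, y))
```

The first vector gives $L_D = 0.25$ with $\mathbf{w} = (0.5, 0.5)$, and the second gives the smaller value $0.24$, so the first is the better dual candidate of the two.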

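The primal optimization problem is convex with affine constraints and is feasible because the classes are separable, so Strong Duality holds and the KKT Conditions are necessary and sufficient for optimality. Hence the optimal values of the primal and dual problems coincide, and the primal solution can be recovered from the dual solution $\boldsymbol{\alpha}^*$. In particular, complementary slackness,
$$\alpha_i^* \left[ y_i(\mathbf{w}^{*\top} \mathbf{x}_i + b^*) - 1 \right] = 0,$$
implies that $\alpha_i^* > 0$ only for points lying on $H_{-1}$ or $H_{+1}$, that is, for support vectors.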
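The KKT conditions can be checked directly for a candidate solution. This sketch assumes hypothetical toy data and the hand-derived candidate $\mathbf{w} = (0.5, 0.5)$, $b = 0$, $\boldsymbol{\alpha} = (0.25, 0.25, 0, 0)$; `kkt_hard_margin` is an illustrative name. It tests primal feasibility, dual feasibility, complementary slackness, and stationarity, then compares the primal and dual objective values.

```python
def dot(u, v):
    """Inner product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))

def kkt_hard_margin(w, b, alpha, X, y, tol=1e-9):
    """True if (w, b, alpha) satisfies the hard-margin KKT conditions up to tol."""
    g = [yi * (dot(w, xi) + b) - 1 for xi, yi in zip(X, y)]  # must be >= 0
    primal_feasible = all(gi >= -tol for gi in g)
    dual_feasible = all(a >= -tol for a in alpha)
    complementary = all(abs(a * gi) <= tol for a, gi in zip(alpha, g))
    stationary_w = all(
        abs(w[k] - sum(a * yi * xi[k] for a, xi, yi in zip(alpha, X, y))) <= tol
        for k in range(len(w)))
    stationary_b = abs(sum(a * yi for a, yi in zip(alpha, y))) <= tol
    return (primal_feasible and dual_feasible and complementary
            and stationary_w and stationary_b)

# Hypothetical toy data and candidate solution.
X = [(1.0, 1.0), (-1.0, -1.0), (2.0, 2.0), (-2.0, -1.0)]
y = [1, -1, 1, -1]
w, b, alpha = (0.5, 0.5), 0.0, [0.25, 0.25, 0.0, 0.0]

ok = kkt_hard_margin(w, b, alpha, X, y)
primal_value = 0.5 * dot(w, w)
# With w = sum_i alpha_i y_i x_i, the double sum in L_D equals ||w||^2.
dual_value = sum(alpha) - 0.5 * dot(w, w)
print(ok, primal_value, dual_value)
```

Both objective values equal $0.25$, so the duality gap is zero, as strong duality predicts.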

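The optimal parameters are obtained as
$$\mathbf{w}^* = \sum_{i \in \mathcal{SV}} \alpha_i^* y_i \mathbf{x}_i, \qquad b^* = \frac{1}{|\mathcal{SV}|} \sum_{i \in \mathcal{SV}} \left( y_i - \mathbf{w}^{*\top} \mathbf{x}_i \right),$$
where $\mathcal{SV} = \{i : \alpha_i^* > 0\}$ is an index set of support vectors. Each support vector satisfies $y_i(\mathbf{w}^{*\top} \mathbf{x}_i + b^*) = 1$, and since $1/y_i = y_i$, this gives $b^* = y_i - \mathbf{w}^{*\top} \mathbf{x}_i$; averaging over $\mathcal{SV}$ improves numerical stability.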
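A minimal sketch of recovering $\mathbf{w}^*$ and $b^*$ from a dual solution, assuming hypothetical toy data and the multipliers $\boldsymbol{\alpha}^* = (0.25, 0.25, 0, 0)$ that satisfy the KKT conditions for it. `recover_w_b` is an illustrative name, and `tol` is an assumed threshold for treating a multiplier as non-zero.

```python
def dot(u, v):
    """Inner product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))

def recover_w_b(alpha, X, y, tol=1e-8):
    """Return (w, b, support_vector_indices) from hard-margin dual multipliers."""
    sv = [i for i, a in enumerate(alpha) if a > tol]  # index set {i : alpha_i > 0}
    d = len(X[0])
    w = [sum(alpha[i] * y[i] * X[i][k] for i in sv) for k in range(d)]
    # Each support vector gives b = y_i - w.x_i; average them.
    b = sum(y[i] - dot(w, X[i]) for i in sv) / len(sv)
    return w, b, sv

# Hypothetical toy data and its dual solution.
X = [(1.0, 1.0), (-1.0, -1.0), (2.0, 2.0), (-2.0, -1.0)]
y = [1, -1, 1, -1]
w, b, sv = recover_w_b([0.25, 0.25, 0.0, 0.0], X, y)
print(w, b, sv)
```

Only the support vectors enter the sums; points with $\alpha_i^* = 0$ could be removed without changing $\mathbf{w}^*$ or $b^*$.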

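The optimal hyperplane can be written as
$$f^*(\mathbf{x}) = \mathbf{w}^{*\top} \mathbf{x} + b^* = \sum_{i :\, \alpha_i^* > 0} \alpha_i^* y_i \mathbf{x}_i^\top \mathbf{x} + b^*,$$
and the classification rule is given by
$$\hat{y}(\mathbf{x}) = \operatorname{sign}\left( f^*(\mathbf{x}) \right).$$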
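The dual form of the classifier needs only the support vectors and their multipliers. This sketch assumes hypothetical toy data with $\boldsymbol{\alpha}^* = (0.25, 0.25, 0, 0)$ and $b^* = 0$; `decision_function` and `classify` are illustrative names, and mapping $f(\mathbf{x}) = 0$ to $+1$ is an assumed tie-breaking convention.

```python
def dot(u, v):
    """Inner product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))

def decision_function(x, alpha, X, y, b, tol=1e-8):
    """f(x) = sum over support vectors of alpha_i y_i x_i.x + b."""
    return sum(a * yi * dot(xi, x)
               for a, xi, yi in zip(alpha, X, y) if a > tol) + b

def classify(x, alpha, X, y, b):
    """sign(f(x)); f(x) == 0 is assigned +1 by convention here."""
    return 1 if decision_function(x, alpha, X, y, b) >= 0 else -1

# Hypothetical toy data and its dual solution.
X = [(1.0, 1.0), (-1.0, -1.0), (2.0, 2.0), (-2.0, -1.0)]
y = [1, -1, 1, -1]
alpha, b = [0.25, 0.25, 0.0, 0.0], 0.0

print(classify((3.0, -1.0), alpha, X, y, b), classify((-1.0, 0.5), alpha, X, y, b))
```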

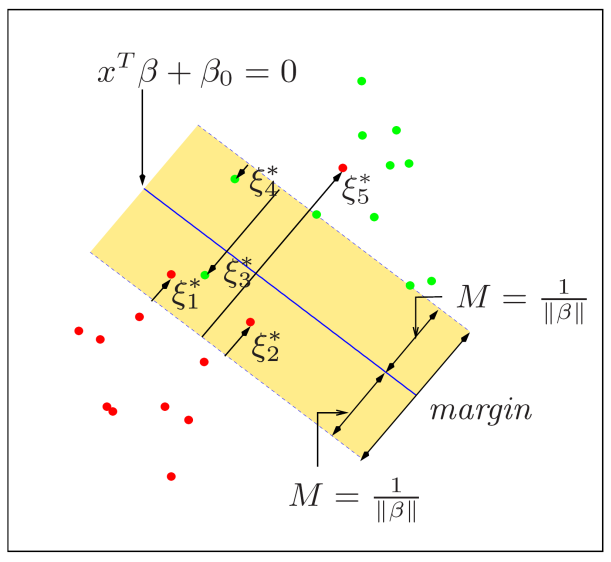

Soft-Margin Support Vector Machine

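When the classes overlap, no hyperplane separates them without error, so the constraints are relaxed and the primal problem becomes
$$\min_{\mathbf{w}, b, \boldsymbol{\xi}} \ \frac{1}{2}\|\mathbf{w}\|^2 + C \sum_{i=1}^{n} \xi_i \quad \text{subject to} \quad y_i(\mathbf{w}^\top \mathbf{x}_i + b) \ge 1 - \xi_i, \quad \xi_i \ge 0,$$
where $C > 0$ is the regularization parameter, and $\xi_i$ is called a slack variable. If $\xi_i = 0$, the point $\mathbf{x}_i$ lies on or outside the margin on the correct side. On the other hand, if $\xi_i > 0$, the point violates the margin: for $0 < \xi_i < 1$ it lies within the margin but is still correctly classified, and for $\xi_i > 1$ it lies on the wrong side of the hyperplane and is misclassified.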
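For a fixed $(\mathbf{w}, b)$ the smallest feasible slack is $\xi_i = \max(0, 1 - y_i(\mathbf{w}^\top \mathbf{x}_i + b))$. This sketch evaluates (it does not optimize) the slacks and the soft-margin objective for a hypothetical overlapping data set, a hand-picked $(\mathbf{w}, b) = ((0.5, 0.5), 0)$, and an assumed $C = 1$; all function names are illustrative.

```python
def dot(u, v):
    """Inner product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))

def slacks(w, b, X, y):
    """Smallest feasible slack per point: max(0, 1 - y_i (w.x_i + b))."""
    return [max(0.0, 1 - yk * (dot(w, xk) + b)) for xk, yk in zip(X, y)]

def describe(s):
    """Position of a point relative to the margin, read off its slack s."""
    if s == 0:
        return "on or outside margin"
    if s < 1:
        return "inside margin, correct side"
    return "misclassified"  # s == 1 would lie exactly on the hyperplane

def soft_primal_objective(w, b, X, y, C):
    """1/2 ||w||^2 + C * sum of slacks."""
    return 0.5 * dot(w, w) + C * sum(slacks(w, b, X, y))

# Hypothetical data: the last two points overlap the other class.
X = [(1.0, 1.0), (-1.0, -1.0), (2.0, 2.0), (-2.0, -1.0), (0.2, 0.2), (0.5, 1.5)]
y = [1, -1, 1, -1, 1, -1]
w, b, C = (0.5, 0.5), 0.0, 1.0

slack = slacks(w, b, X, y)
labels = [describe(s) for s in slack]
obj = soft_primal_objective(w, b, X, y, C)
print(slack, labels, obj)
```

Increasing $C$ makes each unit of slack more expensive, which pushes the optimizer toward fewer margin violations at the cost of a smaller margin.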

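The Lagrangian primal function is defined as
$$L_P(\mathbf{w}, b, \boldsymbol{\xi}, \boldsymbol{\alpha}, \boldsymbol{\mu}) = \frac{1}{2}\|\mathbf{w}\|^2 + C \sum_{i=1}^{n} \xi_i - \sum_{i=1}^{n} \alpha_i \left[ y_i(\mathbf{w}^\top \mathbf{x}_i + b) - 1 + \xi_i \right] - \sum_{i=1}^{n} \mu_i \xi_i$$
with $\alpha_i \ge 0$ and $\mu_i \ge 0$.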

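Setting the partial derivatives with respect to $\mathbf{w}$, $b$, and $\xi_i$ to zero gives
$$\mathbf{w} = \sum_{i=1}^{n} \alpha_i y_i \mathbf{x}_i, \qquad \sum_{i=1}^{n} \alpha_i y_i = 0, \qquad C - \alpha_i - \mu_i = 0.$$
Substituting these back, the terms in $\xi_i$ cancel, and the dual function is
$$L_D(\boldsymbol{\alpha}) = \sum_{i=1}^{n} \alpha_i - \frac{1}{2} \sum_{i=1}^{n} \sum_{j=1}^{n} \alpha_i \alpha_j y_i y_j \mathbf{x}_i^\top \mathbf{x}_j,$$
which has the same form as in the hard-margin case.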

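The dual optimization problem is
$$\max_{\boldsymbol{\alpha}} \ L_D(\boldsymbol{\alpha}) \quad \text{subject to} \quad 0 \le \alpha_i \le C, \quad \sum_{i=1}^{n} \alpha_i y_i = 0,$$
where the upper bound $\alpha_i \le C$ follows from $\alpha_i = C - \mu_i$ with $\mu_i \ge 0$. The primal optimization problem is convex with affine constraints (and always feasible, since the slacks can be made large), so Strong Duality holds, the KKT Conditions characterize the optimum, and the optimal values of the primal and dual problems coincide.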
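To see strong duality concretely, consider a hypothetical one-dimensional data set in which two points overlap the other class. Working through the KKT conditions by hand for an assumed $C = 2$ gives $\boldsymbol{\alpha}^* = (1.5, 1.5, 2, 2)$, $w^* = 1$, $b^* = -3$. This sketch (illustrative function names) checks that $\boldsymbol{\alpha}^*$ satisfies the box and equality constraints and that the primal and dual objective values agree.

```python
def dot(u, v):
    """Inner product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))

def dual_objective(alpha, X, y):
    """L_D(alpha) = sum_i alpha_i - 1/2 sum_i sum_j alpha_i alpha_j y_i y_j x_i.x_j."""
    n = len(alpha)
    quad = sum(alpha[i] * alpha[j] * y[i] * y[j] * dot(X[i], X[j])
               for i in range(n) for j in range(n))
    return sum(alpha) - 0.5 * quad

def dual_feasible(alpha, y, C, tol=1e-9):
    """Box constraint 0 <= alpha_i <= C and equality sum_i alpha_i y_i = 0."""
    in_box = all(-tol <= a <= C + tol for a in alpha)
    return in_box and abs(sum(a * yi for a, yi in zip(alpha, y))) <= tol

def primal_objective(w, b, X, y, C):
    """1/2 ||w||^2 + C * sum_i xi_i with the smallest feasible slacks."""
    slack = [max(0.0, 1 - yk * (dot(w, xk) + b)) for xk, yk in zip(X, y)]
    return 0.5 * dot(w, w) + C * sum(slack)

# Hypothetical 1-D data; points 2 and 3 sit on the wrong side of x = 3.
X = [(4.0,), (2.0,), (2.5,), (3.5,)]
y = [1, -1, 1, -1]
C = 2.0
alpha = [1.5, 1.5, 2.0, 2.0]  # hand-derived optimum for this data (assumption)
w, b = (1.0,), -3.0

gap = primal_objective(w, b, X, y, C) - dual_objective(alpha, X, y)
print(dual_feasible(alpha, y, C), gap)
```

The two overlapping points have $\alpha_i = C$ (bounded support vectors with $\xi_i = 1.5$), while the other two have $0 < \alpha_i < C$ and lie exactly on the margin.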

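The optimal parameters are obtained as
$$\mathbf{w}^* = \sum_{i \in \mathcal{SV}} \alpha_i^* y_i \mathbf{x}_i, \qquad b^* = \frac{1}{|\mathcal{M}|} \sum_{i \in \mathcal{M}} \left( y_i - \mathbf{w}^{*\top} \mathbf{x}_i \right),$$
where $\mathcal{SV} = \{i : \alpha_i^* > 0\}$ is an index set of support vectors and $\mathcal{M} = \{i : 0 < \alpha_i^* < C\}$ indexes the support vectors lying exactly on the margin. For $i \in \mathcal{M}$, $\mu_i = C - \alpha_i^* > 0$, so complementary slackness ($\mu_i \xi_i = 0$) forces $\xi_i = 0$ and hence $y_i(\mathbf{w}^{*\top} \mathbf{x}_i + b^*) = 1$.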
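A minimal sketch of this recovery step, assuming the same kind of hypothetical 1-D data and the hand-derived multipliers $\boldsymbol{\alpha}^* = (1.5, 1.5, 2, 2)$ for an assumed $C = 2$. `recover_soft_margin` is an illustrative name; it assumes at least one margin support vector exists (otherwise the average is undefined and $b^*$ must be chosen another way).

```python
def dot(u, v):
    """Inner product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))

def recover_soft_margin(alpha, X, y, C, tol=1e-8):
    """Return (w, b, support_vectors, margin_support_vectors)."""
    sv = [i for i, a in enumerate(alpha) if a > tol]       # alpha_i > 0
    on_margin = [i for i in sv if alpha[i] < C - tol]      # 0 < alpha_i < C
    w = [sum(alpha[i] * y[i] * X[i][k] for i in sv) for k in range(len(X[0]))]
    # Only margin support vectors satisfy y_i (w.x_i + b) = 1 exactly.
    b = sum(y[i] - dot(w, X[i]) for i in on_margin) / len(on_margin)
    return w, b, sv, on_margin

# Hypothetical 1-D data with two overlapping points.
X = [(4.0,), (2.0,), (2.5,), (3.5,)]
y = [1, -1, 1, -1]
w, b, sv, on_margin = recover_soft_margin([1.5, 1.5, 2.0, 2.0], X, y, C=2.0)
print(w, b, sv, on_margin)
```

All four points are support vectors, but only points 0 and 1 are used for $b^*$; using the bounded ones would bias the intercept because their slacks are non-zero.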

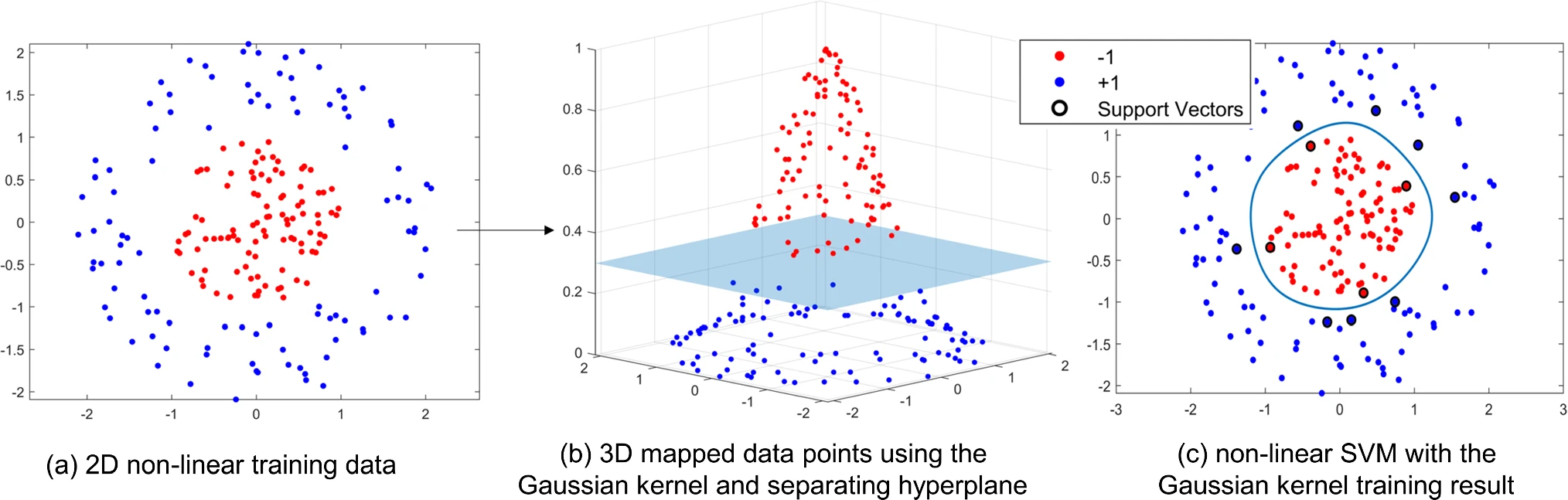

Non-Linear Support Vector Machine

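Non-linear SVM finds an optimal separating Hyperplane in a high-dimensional feature space $\mathcal{H}$, into which the inputs are mapped by a non-linear map $\Phi : \mathbb{R}^{d} \to \mathcal{H}$. This is accomplished by the kernel trick: instead of computing the inner products $\langle \Phi(\mathbf{x}_i), \Phi(\mathbf{x}_j) \rangle$ explicitly in $\mathcal{H}$, compute them in input space using a non-linear Kernel Function
$$K(\mathbf{x}_i, \mathbf{x}_j) = \langle \Phi(\mathbf{x}_i), \Phi(\mathbf{x}_j) \rangle.$$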
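For the quadratic kernel $K(\mathbf{x}, \mathbf{z}) = (1 + \mathbf{x}^\top \mathbf{z})^2$ on $\mathbb{R}^2$ (an illustrative choice), one explicit feature map into $\mathbb{R}^6$ is $\Phi(\mathbf{x}) = (1, \sqrt{2}x_1, \sqrt{2}x_2, x_1^2, \sqrt{2}x_1 x_2, x_2^2)$. This sketch checks numerically that the kernel computed in input space equals the inner product in feature space, which is the identity the kernel trick relies on; `phi`, `poly2_kernel`, and the point pairs are hypothetical.

```python
import math

def phi(x):
    """Explicit 6-D feature map for (1 + x.z)^2 when x is 2-D."""
    x1, x2 = x
    r2 = math.sqrt(2.0)
    return (1.0, r2 * x1, r2 * x2, x1 * x1, r2 * x1 * x2, x2 * x2)

def poly2_kernel(x, z):
    """Kernel evaluated directly in input space: (1 + x.z)^2."""
    return (1.0 + x[0] * z[0] + x[1] * z[1]) ** 2

pairs = [((1.0, 2.0), (3.0, -1.0)), ((0.5, -0.5), (2.0, 4.0)), ((0.0, 0.0), (1.0, 1.0))]
for x, z in pairs:
    explicit = sum(a * b for a, b in zip(phi(x), phi(z)))  # inner product in feature space
    implicit = poly2_kernel(x, z)                          # same value, no mapping needed
    print(x, z, explicit, implicit)
```

The kernel costs one 2-D inner product per pair, while the explicit route builds two 6-D vectors; for higher-degree kernels or inputs the gap grows quickly, and for kernels such as the Gaussian RBF the feature space is infinite-dimensional, so only the kernel route is available.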

Hard-Margin Non-Linear Support Vector Machine

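If the data can be separated in the feature space $\mathcal{H}$, then the dual optimization problem is
$$\max_{\boldsymbol{\alpha}} \ L_D(\boldsymbol{\alpha}) = \sum_{i=1}^{n} \alpha_i - \frac{1}{2} \sum_{i=1}^{n} \sum_{j=1}^{n} \alpha_i \alpha_j y_i y_j K(\mathbf{x}_i, \mathbf{x}_j) \quad \text{subject to} \quad \alpha_i \ge 0, \quad \sum_{i=1}^{n} \alpha_i y_i = 0,$$
where $K(\mathbf{x}_i, \mathbf{x}_j) = \langle \Phi(\mathbf{x}_i), \Phi(\mathbf{x}_j) \rangle$.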
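The kernel enters the dual only through the Gram matrix $K_{ij} = K(\mathbf{x}_i, \mathbf{x}_j)$. This sketch assumes the XOR data set (not linearly separable in $\mathbb{R}^2$) and the quadratic kernel $(1 + \mathbf{x}^\top \mathbf{z})^2$, both chosen for illustration. For this data the Gram matrix has $9$ on the diagonal and $1$ elsewhere, and $\alpha_i = 1/8$ for all $i$ satisfies the constraints and makes the gradient of the concave $L_D$ vanish, so it is the dual optimum. Function names are hypothetical.

```python
def poly2_kernel(x, z):
    """Illustrative kernel choice: K(x, z) = (1 + x.z)^2."""
    return (1.0 + sum(a * b for a, b in zip(x, z))) ** 2

def gram_matrix(X, kernel):
    """K[i][j] = kernel(x_i, x_j)."""
    return [[kernel(xi, xj) for xj in X] for xi in X]

def kernel_dual_objective(alpha, y, K):
    """L_D(alpha) = sum_i alpha_i - 1/2 sum_ij alpha_i alpha_j y_i y_j K_ij."""
    n = len(alpha)
    quad = sum(alpha[i] * alpha[j] * y[i] * y[j] * K[i][j]
               for i in range(n) for j in range(n))
    return sum(alpha) - 0.5 * quad

def kernel_dual_gradient(alpha, y, K):
    """dL_D/dalpha_k = 1 - y_k sum_j alpha_j y_j K_kj."""
    n = len(alpha)
    return [1 - y[k] * sum(alpha[j] * y[j] * K[k][j] for j in range(n))
            for k in range(n)]

# XOR data: the labels follow the sign of -x1 * x2.
X = [(-1.0, -1.0), (-1.0, 1.0), (1.0, -1.0), (1.0, 1.0)]
y = [-1, 1, 1, -1]
K = gram_matrix(X, poly2_kernel)
alpha = [0.125] * 4
print(K, kernel_dual_objective(alpha, y, K), kernel_dual_gradient(alpha, y, K))
```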

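The optimal separating Hyperplane in $\mathcal{H}$ is
$$f^*(\mathbf{x}) = \sum_{i :\, \alpha_i^* > 0} \alpha_i^* y_i K(\mathbf{x}_i, \mathbf{x}) + b^*,$$

and the decision rule is defined as
$$\hat{y}(\mathbf{x}) = \operatorname{sign}\left( f^*(\mathbf{x}) \right).$$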
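A sketch of the kernel decision rule, assuming the XOR data with the quadratic kernel $(1 + \mathbf{x}^\top \mathbf{z})^2$ and the dual solution $\alpha_i^* = 1/8$, $b^* = 0$ for that data (all four points are support vectors). `kernel_decision` and `predict` are illustrative names, and mapping $f = 0$ to $+1$ is an assumed convention.

```python
def poly2_kernel(x, z):
    """Illustrative kernel choice: K(x, z) = (1 + x.z)^2."""
    return (1.0 + sum(a * b for a, b in zip(x, z))) ** 2

def kernel_decision(x, alpha, X, y, b, kernel, tol=1e-8):
    """f(x) = sum over support vectors of alpha_i y_i K(x_i, x) + b."""
    return sum(a * yi * kernel(xi, x)
               for a, xi, yi in zip(alpha, X, y) if a > tol) + b

def predict(x, alpha, X, y, b, kernel):
    """sign(f(x)), with f(x) == 0 mapped to +1."""
    return 1 if kernel_decision(x, alpha, X, y, b, kernel) >= 0 else -1

# XOR data and its dual solution.
X = [(-1.0, -1.0), (-1.0, 1.0), (1.0, -1.0), (1.0, 1.0)]
y = [-1, 1, 1, -1]
alpha, b = [0.125] * 4, 0.0

print(kernel_decision((0.5, -2.0), alpha, X, y, b, poly2_kernel))
print([predict(x, alpha, X, y, b, poly2_kernel) for x in X])
```

For this particular solution the decision function simplifies to $f^*(\mathbf{x}) = -x_1 x_2$, so the classifier labels quadrants by the sign of $-x_1 x_2$, which no linear rule in $\mathbb{R}^2$ can do.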

Soft-Margin Non-Linear Support Vector Machine

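In the non-separable case, the dual problem is defined as
$$\max_{\boldsymbol{\alpha}} \ L_D(\boldsymbol{\alpha}) = \sum_{i=1}^{n} \alpha_i - \frac{1}{2} \sum_{i=1}^{n} \sum_{j=1}^{n} \alpha_i \alpha_j y_i y_j K(\mathbf{x}_i, \mathbf{x}_j) \quad \text{subject to} \quad 0 \le \alpha_i \le C, \quad \sum_{i=1}^{n} \alpha_i y_i = 0,$$
where $K(\mathbf{x}_i, \mathbf{x}_j) = \langle \Phi(\mathbf{x}_i), \Phi(\mathbf{x}_j) \rangle$.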
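This dual is a quadratic program with box constraints and one equality constraint. One common way to solve it is Platt's Sequential Minimal Optimization (SMO), which repeatedly optimizes two multipliers at a time in closed form. The sketch below is a simplified SMO, not Platt's full working-set heuristics: it picks the second index deterministically by maximizing $|E_i - E_j|$, uses an assumed tolerance `tol`, and stops after `max_passes` sweeps with no update. The kernel, the XOR data, and all names are illustrative assumptions.

```python
def poly2_kernel(x, z):
    """Illustrative kernel choice: K(x, z) = (1 + x.z)^2."""
    return (1.0 + sum(a * b for a, b in zip(x, z))) ** 2

def smo(X, y, C, kernel, tol=1e-3, max_passes=5, max_iter=10_000):
    """Simplified SMO for max sum(a) - 1/2 sum_ij a_i a_j y_i y_j K_ij
    subject to 0 <= a_i <= C and sum_i a_i y_i = 0. Returns (alpha, b)."""
    n = len(X)
    K = [[kernel(X[i], X[j]) for j in range(n)] for i in range(n)]
    alpha = [0.0] * n
    b = 0.0

    def error(k):
        # E_k = f(x_k) - y_k with f(x) = sum_i alpha_i y_i K(x_i, x) + b
        return sum(alpha[i] * y[i] * K[i][k] for i in range(n)) + b - y[k]

    passes = iters = 0
    while passes < max_passes and iters < max_iter:
        iters += 1
        changed = 0
        for i in range(n):
            E_i = error(i)
            # Only update alpha_i if it violates the KKT conditions by more than tol.
            if not ((y[i] * E_i < -tol and alpha[i] < C) or
                    (y[i] * E_i > tol and alpha[i] > 0)):
                continue
            errors = [error(k) for k in range(n)]
            j = max((k for k in range(n) if k != i), key=lambda k: abs(E_i - errors[k]))
            E_j = errors[j]
            a_i, a_j = alpha[i], alpha[j]
            # Feasible segment [lo, hi] for alpha_j keeping sum alpha y = 0 and the box.
            if y[i] != y[j]:
                lo, hi = max(0.0, a_j - a_i), min(C, C + a_j - a_i)
            else:
                lo, hi = max(0.0, a_i + a_j - C), min(C, a_i + a_j)
            eta = 2.0 * K[i][j] - K[i][i] - K[j][j]  # second derivative along the segment
            if lo >= hi or eta >= 0:
                continue
            new_j = min(hi, max(lo, a_j - y[j] * (E_i - E_j) / eta))
            if abs(new_j - a_j) < 1e-8:
                continue
            new_i = a_i + y[i] * y[j] * (a_j - new_j)
            alpha[i], alpha[j] = new_i, new_j
            # Update b so that a margin support vector gets f(x) = y exactly.
            b_i = b - E_i - y[i] * (new_i - a_i) * K[i][i] - y[j] * (new_j - a_j) * K[i][j]
            b_j = b - E_j - y[i] * (new_i - a_i) * K[i][j] - y[j] * (new_j - a_j) * K[j][j]
            if 0.0 < new_i < C:
                b = b_i
            elif 0.0 < new_j < C:
                b = b_j
            else:
                b = 0.5 * (b_i + b_j)
            changed += 1
        passes = passes + 1 if changed == 0 else 0
    return alpha, b

# XOR data solved with C = 1.
X = [(-1.0, -1.0), (-1.0, 1.0), (1.0, -1.0), (1.0, 1.0)]
y = [-1, 1, 1, -1]
alpha, b = smo(X, y, C=1.0, kernel=poly2_kernel)

def decision(x):
    """f(x) built from the multipliers returned by smo."""
    return sum(a * yi * poly2_kernel(xi, x) for a, xi, yi in zip(alpha, X, y)) + b

print(alpha, b)
```

On this data it converges to $\alpha_i = 1/8$ for all four points and $b = 0$, which agrees with the closed-form solution for XOR; since $1/8 < C$, no multiplier hits the box. For larger problems a dedicated QP or SMO solver would normally be used instead of this sketch.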

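The optimal separating Hyperplane in $\mathcal{H}$ is
$$f^*(\mathbf{x}) = \sum_{i :\, \alpha_i^* > 0} \alpha_i^* y_i K(\mathbf{x}_i, \mathbf{x}) + b^*,$$

and the decision rule is defined as
$$\hat{y}(\mathbf{x}) = \operatorname{sign}\left( f^*(\mathbf{x}) \right).$$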