# Definition

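Let $\mathbf{X}$ be the observed data and $\mathbf{Z}$ be the unobserved (latent) variables, with the components of $\mathbf{X}$ and $\mathbf{Z}$ mutually independent. Let $g(\mathbf{X} | \boldsymbol{\theta})$ be the joint pdf of $\mathbf{X}$, $h(\mathbf{X}, \mathbf{Z} | \boldsymbol{\theta})$ be the joint pdf of $(\mathbf{X}, \mathbf{Z})$, and $k(\mathbf{Z} | \mathbf{X}, \boldsymbol{\theta})$ be the conditional pdf of $\mathbf{Z}$ given $\mathbf{X}$.

By the definition of a conditional pdf, we have the identity

$$k(\mathbf{Z} | \mathbf{X}, \boldsymbol{\theta}) = \frac{h(\mathbf{X}, \mathbf{Z} | \boldsymbol{\theta})}{g(\mathbf{X} | \boldsymbol{\theta})}$$

The goal of the EM algorithm is to maximize the observed likelihood $L(\boldsymbol{\theta}|\mathbf{X}) = g(\mathbf{X} | \boldsymbol{\theta})$ using the complete likelihood $L^{c}(\boldsymbol{\theta}|\mathbf{X}, \mathbf{Z}) = h(\mathbf{X}, \mathbf{Z} | \boldsymbol{\theta})$.

Using the definition of the conditional pdf, we derive the following identity for an arbitrary but fixed $\boldsymbol{\theta}_{0}$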

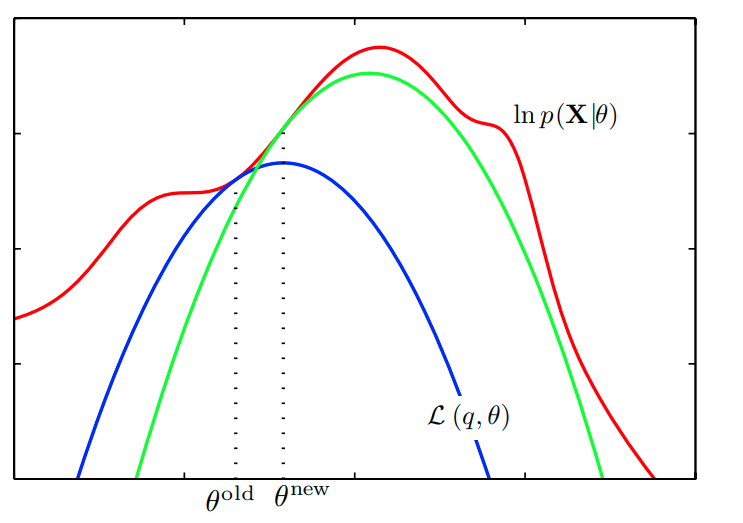

$$\begin{aligned}
\ln L(\boldsymbol{\theta}|\mathbf{X}) &= \int \ln[h(\mathbf{X}, \mathbf{Z} | \boldsymbol{\theta})] k(\mathbf{Z} | \mathbf{X}, \boldsymbol{\theta}_{0}) d \mathbf{Z} - \int \ln[k(\mathbf{Z} | \mathbf{X}, \boldsymbol{\theta})]k(\mathbf{Z} | \mathbf{X}, \boldsymbol{\theta}_{0})d\mathbf{Z}\\
&= E_{\boldsymbol{\theta}_{0}}[\ln L^{c}(\boldsymbol{\theta}|\mathbf{X}, \mathbf{Z}) | \boldsymbol{\theta}_{0}, \mathbf{X}] - E_{\boldsymbol{\theta}_{0}}[\ln k(\mathbf{Z} | \mathbf{X}, \boldsymbol{\theta}) | \boldsymbol{\theta}_{0}, \mathbf{X}]
\end{aligned}$$

Let the first term on the RHS be a quasi-likelihood function (the expected complete-data log-likelihood)

$$Q(\boldsymbol{\theta} | \boldsymbol{\theta}_{0}, \mathbf{X}) := E_{\boldsymbol{\theta}_{0}}[\ln L^{c}(\boldsymbol{\theta}|\mathbf{X}, \mathbf{Z}) | \boldsymbol{\theta}_{0}, \mathbf{X}]$$

The EM algorithm maximizes $Q(\boldsymbol{\theta} | \boldsymbol{\theta}_{0}, \mathbf{X})$ instead of maximizing $\ln L(\boldsymbol{\theta}|\mathbf{X})$ directly.

# Algorithm

1. Expectation Step: Compute
   $$Q(\boldsymbol{\theta} | \hat{\boldsymbol{\theta}}^{(m)}, \mathbf{X}) := E_{\hat{\boldsymbol{\theta}}^{(m)}}[\ln L^{c}(\boldsymbol{\theta}|\mathbf{X}, \mathbf{Z}) | \hat{\boldsymbol{\theta}}^{(m)}, \mathbf{X}]$$
   where $m = 0, 1, \dots$ and the expectation is taken under the conditional pdf $k(\mathbf{Z} | \mathbf{X}, \hat{\boldsymbol{\theta}}^{(m)})$
2. Maximization Step:
   $$\hat{\boldsymbol{\theta}}^{(m+1)} = \underset{\boldsymbol{\theta}}{\operatorname{arg\,max}}\ Q(\boldsymbol{\theta} | \hat{\boldsymbol{\theta}}^{(m)}, \mathbf{X})$$

# Properties

## Convergence

The [[Sequence]] of estimates $\hat{\boldsymbol{\theta}}^{(m)}$ satisfies

$$L(\hat{\boldsymbol{\theta}}^{(m+1)}|\mathbf{X}) \geq L(\hat{\boldsymbol{\theta}}^{(m)}|\mathbf{X})$$

Therefore the likelihood never decreases along the [[Sequence]] of EM estimates, which under suitable regularity conditions converges to an (at least local) optimum.
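
As a rough numerical illustration of the two steps and of the fact that the observed likelihood does not decrease across iterations, the sketch below runs EM on a two-component Gaussian mixture with unit variances. The mixture model, the function names (`em_step`, `run_em`), the simulated data, and the starting value are all assumptions made for this example; they are not part of the derivation above. Here $\mathbf{X}$ is the sample, $\mathbf{Z}$ is the unobserved component label of each point, and $\boldsymbol{\theta} = (p, \mu_1, \mu_2)$.

```python
import math
import random


def normal_pdf(x, mu):
    """Density of N(mu, 1) evaluated at x."""
    return math.exp(-0.5 * (x - mu) ** 2) / math.sqrt(2.0 * math.pi)


def log_likelihood(xs, theta):
    """Observed log-likelihood ln L(theta | X) of the two-component mixture."""
    p, mu1, mu2 = theta
    return sum(
        math.log(p * normal_pdf(x, mu1) + (1.0 - p) * normal_pdf(x, mu2))
        for x in xs
    )


def em_step(xs, theta):
    """One EM iteration: returns theta^(m+1) given theta^(m)."""
    p, mu1, mu2 = theta
    # E-step: under k(Z | X, theta^(m)), compute the posterior probability
    # that each observation belongs to component 1. For this model these
    # probabilities are all that Q(theta | theta^(m), X) depends on.
    resp = []
    for x in xs:
        a = p * normal_pdf(x, mu1)
        b = (1.0 - p) * normal_pdf(x, mu2)
        resp.append(a / (a + b))
    # M-step: Q has a closed-form maximizer (weighted proportions and means).
    n1 = sum(resp)
    n2 = len(xs) - n1
    new_p = n1 / len(xs)
    new_mu1 = sum(r * x for r, x in zip(resp, xs)) / n1
    new_mu2 = sum((1.0 - r) * x for r, x in zip(resp, xs)) / n2
    return (new_p, new_mu1, new_mu2)


def run_em(xs, theta0, n_iter=50):
    """Iterate EM and record ln L(theta^(m) | X) at every step."""
    theta = theta0
    history = [log_likelihood(xs, theta)]
    for _ in range(n_iter):
        theta = em_step(xs, theta)
        history.append(log_likelihood(xs, theta))
    return theta, history


# Simulated data (assumed for illustration): 150 draws from N(-2, 1)
# and 100 draws from N(3, 1), so the true mixing weight is 0.6.
rng = random.Random(0)
data = [rng.gauss(-2.0, 1.0) for _ in range(150)]
data += [rng.gauss(3.0, 1.0) for _ in range(100)]

theta_hat, loglik = run_em(data, theta0=(0.5, -1.0, 1.0))
# Monotonicity check, with a small tolerance for floating-point rounding.
monotone = all(b >= a - 1e-9 for a, b in zip(loglik, loglik[1:]))
print("estimate:", theta_hat)
print("log-likelihood non-decreasing:", monotone)
```

The recorded `loglik` values give a direct check of the monotonicity property. Starting from a different `theta0` may lead to a different limit, which is why the optimum is described as at least local.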