Probability and Distributions

The Probability Set Function

Sigma-Algebra

Definition

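A sigma-algebra $\mathcal{F}$ on $\Omega$ is a Family of Sets (a collection of subsets of $\Omega$) satisfying the following properties

- $\Omega \in \mathcal{F}$

- $A \in \mathcal{F} \Rightarrow A^{c} \in \mathcal{F}$

- $A_{1}, A_{2}, \dots \in \mathcal{F} \Rightarrow \bigcup_{i=1}^{\infty} A_{i} \in \mathcal{F}$

where $\Omega$ is a universal set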

Intersection of Sigma-Fields

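Consider a set of sigma-fields $\{\mathcal{F}_{\alpha}\}_{\alpha \in A}$ on $\Omega$,

- $\mathcal{F}_{1} \cap \mathcal{F}_{2}$ is a sigma-field on $\Omega$.

- $\bigcap_{i=1}^{\infty} \mathcal{F}_{i}$ is a sigma-field on $\Omega$.

- $\bigcap_{\alpha \in A} \mathcal{F}_{\alpha}$ is a sigma-field on $\Omega$.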

Facts

If a collection $\mathcal{F}$ of subsets of a universal set $\Omega$ is both a Pi-System and a Lambda-System, then $\mathcal{F}$ is a sigma-algebra on $\Omega$.

Probability Measure

Definition

A probability measure is a Measure $P$ on $(\Omega, \mathcal{F})$ with normality, that is, $P(\Omega) = 1$

Inclusion-Exclusion Formula

Definition

Increasing and Decreasing Sequence of Events

Definition

Facts

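Let $\{A_{n}\}$ be an increasing sequence of events, then $\lim_{n \to \infty} P(A_{n}) = P\left( \bigcup_{n=1}^{\infty} A_{n} \right)$

Let $\{A_{n}\}$ be a decreasing sequence of events, then $\lim_{n \to \infty} P(A_{n}) = P\left( \bigcap_{n=1}^{\infty} A_{n} \right)$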

Boole's Inequality

Definition

Conditional Probability

Definition

Facts

Bayes Theorem

Definition

Discrete Case

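$$P(A_{i}|B) = \frac{P(B|A_{i})P(A_{i})}{P(B)}$$

where $P(B) = \sum_{j} P(B|A_{j})P(A_{j})$ by Law of Total Probability

$P(A_{i})$ is called a prior probability, and $P(A_{i}|B)$ is called a posterior probability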

Continuous Case

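$$\pi(\theta|x) = \frac{f(x|\theta)\pi(\theta)}{\int f(x|\theta)\pi(\theta)d\theta}$$

where $\theta$ is not a constant, but an unknown parameter that follows a certain distribution with its own parameter (a hyperparameter).

$\pi(\theta)$ is called a prior probability, $f(x|\theta)$ is called a likelihood, $\int f(x|\theta)\pi(\theta)d\theta$ is called an evidence or marginal likelihood, and $\pi(\theta|x)$ is called a posterior probability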

Parameter-Centric Notation

Examples

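Consider a random variable $X|\theta \sim \operatorname{Binomial}(n, \theta)$ and a prior distribution $\theta \sim \operatorname{Beta}(\alpha, \beta)$ where $\alpha, \beta > 0$.

The PDFs are defined as $f(x|\theta) = \binom{n}{x}\theta^{x}(1-\theta)^{n-x}$ and $\pi(\theta) = \frac{\Gamma(\alpha+\beta)}{\Gamma(\alpha)\Gamma(\beta)}\theta^{\alpha-1}(1-\theta)^{\beta-1}$. Then, by Bayes theorem,

$$\pi(\theta|x) \propto \theta^{x+\alpha-1}(1-\theta)^{n-x+\beta-1}$$

so $\theta|x \sim \operatorname{Beta}(\alpha+x, \beta+n-x)$.

Under Squared Error Loss, the Bayes Estimator is the mean of the posterior distribution, $\frac{\alpha+x}{\alpha+\beta+n}$.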
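The conjugate update in this example can be checked with a few lines of code. This is a minimal sketch, assuming the standard Beta-Binomial result that a $\operatorname{Beta}(\alpha, \beta)$ prior with $x$ successes in $n$ trials gives a $\operatorname{Beta}(\alpha+x, \beta+n-x)$ posterior; the function names and the numbers are hypothetical.

```python
from fractions import Fraction

def beta_binomial_posterior(alpha, beta, n, x):
    """Posterior Beta parameters after observing x successes in n trials."""
    if not 0 <= x <= n:
        raise ValueError("x must satisfy 0 <= x <= n")
    return alpha + x, beta + (n - x)

def beta_mean(alpha, beta):
    """Mean of a Beta(alpha, beta) distribution, kept exact with Fraction."""
    return Fraction(alpha, alpha + beta)

# Hypothetical data: Beta(2, 2) prior, 7 successes in 10 trials.
post_a, post_b = beta_binomial_posterior(2, 2, n=10, x=7)
bayes_estimate = beta_mean(post_a, post_b)  # Bayes estimator under squared error loss
print(post_a, post_b, bayes_estimate)       # 9 5 9/14
```

The estimate $9/14$ lies between the prior mean $1/2$ and the sample proportion $7/10$, which is the usual shrinkage behaviour of a posterior mean.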

Statistical Independence

Definition

Statistical Independence of Events

Suppose a Probability Space $(\Omega, \mathcal{F}, P)$. The events $A, B \in \mathcal{F}$ are independent if $P(A \cap B) = P(A)P(B)$

Statistical Independence of Two Random Variables

Rigorous

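Suppose a Probability Space $(\Omega, \mathcal{F}, P)$. Random variables $X, Y$ are independent if the generated sigma-fields $\sigma(X), \sigma(Y)$ are independent, that is, $P(A \cap B) = P(A)P(B)$ for all $A \in \sigma(X)$ and $B \in \sigma(Y)$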

Casual

Two random variables $X, Y$ are independent if and only if $F_{X,Y}(x, y) = F_{X}(x)F_{Y}(y)$ for all $x, y$

Statistical Independence of Random Variables

Mutually Independent

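A collection of random variables $X_{1}, \dots, X_{n}$ is mutually independent if and only if $F(x_{1}, \dots, x_{n}) = \prod_{i=1}^{n} F_{X_{i}}(x_{i})$ where $F_{X_{i}}$ is the marginal CDF of $X_{i}$

Or equivalently, a collection of random variables $X_{1}, \dots, X_{n}$ is mutually independent if and only if $f(x_{1}, \dots, x_{n}) = \prod_{i=1}^{n} f_{X_{i}}(x_{i})$ where $f_{X_{i}}$ is the marginal PDF of $X_{i}$

Pairwise Independent

A collection of random variables $X_{1}, \dots, X_{n}$ is pairwise independent if and only if $f_{X_{i}, X_{j}}(x_{i}, x_{j}) = f_{X_{i}}(x_{i})f_{X_{j}}(x_{j})$ for every pair $i \neq j$, where $f_{X_{i}}$ is the marginal PDF of $X_{i}$ and $f_{X_{i}, X_{j}}$ is the joint PDF of $X_{i}$ and $X_{j}$.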

Statistical Independence of Stochastic Processes

Facts

Random variables are independent

where is a function of only, and is a function of only.

Random variables are independent

Random variables are independent

Random variables are independent

If Moment Generating Function exists and Random variables are independent

Mutual independence implies pairwise independence; the converse does not hold in general

If is mutually independent, then

If random variables $X, Y$ are independent and $g, h$ are Borel measurable, then $g(X), h(Y)$ are also independent

Let Random variables . and are independent

If and follow normal distributions, and are independent

Random Variables

Random Variable

Definition

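A random variable is a function $X: \Omega \to \mathbb{R}$ that is $\mathcal{F}$-measurable for the two measurable spaces $(\Omega, \mathcal{F})$ and $(\mathbb{R}, \mathcal{B})$.

The inverse image $X^{-1}(B)$ of an arbitrary Borel set $B \in \mathcal{B}$ of the Codomain is an element of the sigma field $\mathcal{F}$.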

Notations

Consider a probability space

- Outcomes:

- Set of outcomes (Sample space):

- Events:

- Set of events (Sigma-Field):

- Probabilities:

- Random variable:

For a random variable on a Probability Space

- if and only if the Distribution of is

- if and only if the Distribution Function of is

For a random variable on Probability Space and another random variable on Probability Space

Distribution Function

Definition

A distribution function is a function for the Random Variable on Probability Space

Facts

Proof By definition, So, defining is the Equivalence Relation to defining

Since is a Pi-System, is uniquely determined by by Extension from Pi-System

Now, is a Measurable Space induced by . Therefore, defining on is equivalent to the defining on

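A distribution function (CDF) $F$ has the following properties

- Monotonic increasing: $x \leq y \Rightarrow F(x) \leq F(y)$

- Right-continuous: $\lim_{h \downarrow 0} F(x+h) = F(x)$

- Limits: $\lim_{x \to -\infty} F(x) = 0$ and $\lim_{x \to \infty} F(x) = 1$

If a function $F$ satisfies the following properties, then $F$ is a distribution function (CDF) of some Random Variable

- Monotonic increasing: $x \leq y \Rightarrow F(x) \leq F(y)$

- Right-continuous: $\lim_{h \downarrow 0} F(x+h) = F(x)$

- Limits: $\lim_{x \to -\infty} F(x) = 0$ and $\lim_{x \to \infty} F(x) = 1$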

Transformation of Random Variable

Definition

A new Random Variable can be defined by applying a function to the outcomes of a Random Variable

Discrete

Transformation Technique

Suppose is Bijective function

Continuous

Let be the PDF of Random Variable with a support , be a differentiable Bijective function, and

Transformation Technique

where

CDF Technique

If is increasing, then

If is decreasing, then

Expectation of a Random Variable

Expected Value

Definition

The expected value of the Random Variable on Probability Space

Continuous

where is a PDF of Random Variable

The expected value of the Random Variable when satisfies absolute continuous over ,

Discrete

where is a PMF of Random Variable

The expected value of the Random Variable when its distribution function is a step function. In other words, the distribution function increases only through at most countably many jumps.

Expected Value of a Function

Continuous

Discrete

Properties

Linearity

Random Variables

If , then

Matrix of Random Variables

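Let $\mathbf{W}_{1}, \mathbf{W}_{2}$ be $m \times n$ matrices of random variables, $\mathbf{A}_{1}, \mathbf{A}_{2}$ be $k \times m$ matrices of constants, and $\mathbf{B}$ be an $n \times l$ matrix of constants. Then, $E[\mathbf{A}_{1}\mathbf{W}_{1} + \mathbf{A}_{2}\mathbf{W}_{2}] = \mathbf{A}_{1}E[\mathbf{W}_{1}] + \mathbf{A}_{2}E[\mathbf{W}_{2}]$ and $E[\mathbf{A}_{1}\mathbf{W}_{1}\mathbf{B}] = \mathbf{A}_{1}E[\mathbf{W}_{1}]\mathbf{B}$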

Notations

Expression Discrete Continuous Expression for the event and the probability Expression for the measurable space and the distribution

Some Special Expectations

Moment

Definition

-th moment

Facts

Central Moment

Definition

-th central moment

A Moment of a random variable about its mean

Moment Generating Function

Definition

Univariate

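Let $X$ be a Random Variable such that $E[e^{tX}]$ exists for $-h < t < h$ with some $h > 0$

Then, $M_{X}(t) = E[e^{tX}]$ is called the moment generating function of $X$

Calculations of Moments

The $k$-th Moment can be calculated using the mgf: $E[X^{k}] = M_{X}^{(k)}(0)$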

Uniqueness of MGF

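Let $X, Y$ be random variables with mgf $M_{X}, M_{Y}$, respectively. Then, $F_{X}(z) = F_{Y}(z)$ for all $z$ if and only if $M_{X}(t) = M_{Y}(t)$ for all $t \in (-h, h)$, where $h > 0$

Let $X$ be a Random Variable. If $M_{X}(t)$ exists for $|t| < h$, where $h > 0$, then the moments of $X$ determine the distribution uniquely.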

Multivariate

Let be a Random Vector where is a constant vector.

Calculations of Multivariate Moments

Moment can be calculated using the mgf

Marginal MGF

Facts

The mgf may not exist.

Let $(X_{1}, X_{2})$ be a Random Vector. If $M(t_{1}, t_{2})$ exists and can be expressed as $M(t_{1}, t_{2}) = M(t_{1}, 0)M(0, t_{2})$, then $X_{1}$ and $X_{2}$ are statistically independent.

Let be a Random Vector, , and , then and are independent where and are functions.

Characteristic Function

Definition

Cumulant Generating Function

Definition

Calculations of Cumulants

the -th cumulant can be calculated using cgf

Important Inequalities

Markov's Inequality

Definition

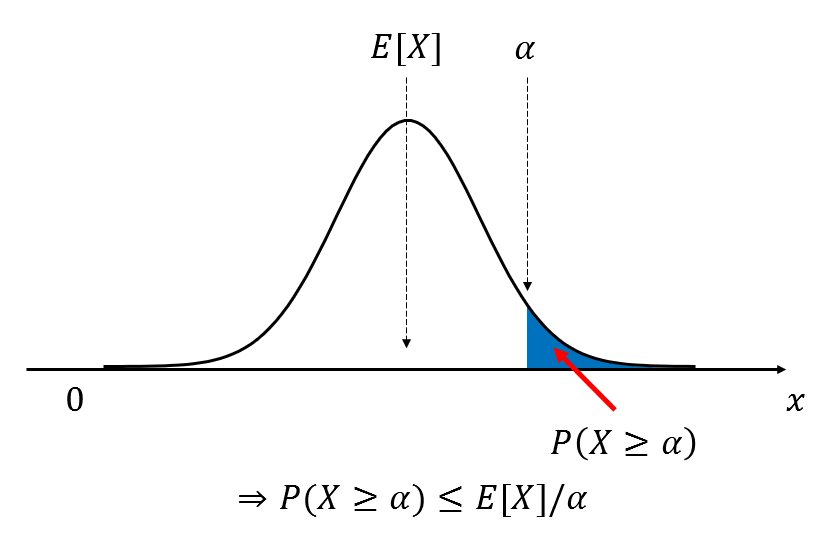

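Let $X$ be a non-negative Random Variable, and $a > 0$, then $P(X \geq a) \leq \frac{E[X]}{a}$, where $\frac{E[X]}{a}$ serves as an upper bound for the probability $P(X \geq a)$

Extended Version for Non-negative Functions

Let $u(X)$ be a non-negative Function of a Random Variable $X$, and $a > 0$, then $P(u(X) \geq a) \leq \frac{E[u(X)]}{a}$, where $\frac{E[u(X)]}{a}$ serves as an upper bound for the probability $P(u(X) \geq a)$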
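As a small sanity check, the bound $P(X \geq a) \leq E[X]/a$ can be verified exactly on a finite distribution. The sketch below uses a fair six-sided die as a hypothetical non-negative random variable; it illustrates the inequality and is not a proof.

```python
from fractions import Fraction

# Fair six-sided die: a non-negative random variable with P(X = k) = 1/6.
pmf = {k: Fraction(1, 6) for k in range(1, 7)}
mean = sum(k * p for k, p in pmf.items())  # E[X] = 7/2

def tail_prob(a):
    """Exact P(X >= a)."""
    return sum(p for k, p in pmf.items() if k >= a)

def markov_bound(a):
    """Markov upper bound E[X] / a for a > 0."""
    return mean / a

checks = [(a, tail_prob(a), markov_bound(a)) for a in range(1, 7)]
for a, tail, bound in checks:
    print(a, tail, bound)
```

The bound is loose for small $a$ (it exceeds 1 there) and only becomes informative in the tail, which is typical for Markov's inequality.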

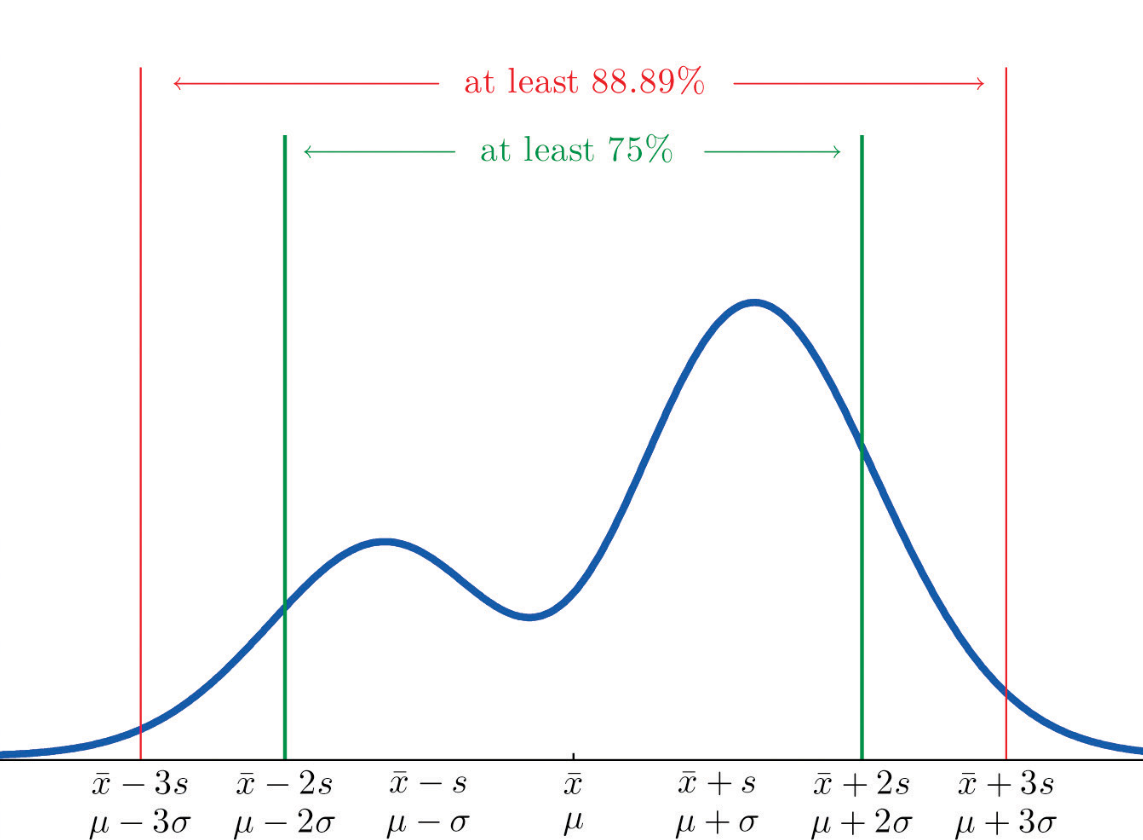

Chebyshev's Inequality

Definition

Jensen's Inequality

Definition

Let $\phi$ be a Convex Function on an interval $I$, $X$ be a Random Variable with support in $I$, and $E[X] < \infty$, then $\phi(E[X]) \leq E[\phi(X)]$

Facts

By Jensen’s Inequality, the following relation is satisfied for positive values: arithmetic mean $\geq$ geometric mean $\geq$ harmonic mean

Multivariate Distribution

Random Vector

Definition

Column vector whose components are random variables .

Multivariate Distribution

Definition

Joint CDF

The Distribution Function of a Random Vector is defined as where

Joint PDF

The joint probability mass function of a discrete Random Vector

The joint probability density function of a continuous Random Vector

Expected Value of a Multivariate Function

Continuous

$$E[g(\mathbf{X})] = \idotsint\limits_{x_{n} \dots x_{1}} g(\mathbf{x})f(\mathbf{x})dx_{1} \dots dx_{n}$$

Discrete

Marginal Distribution of a Multivariate Function

Marginal CDF

Marginal PDF

$$f_{X_{1}}(x_{1}) = \idotsint\limits_{x_{n} \dots x_{2}} f(x_{1}, x_{2}, \dots, x_{n})dx_{2} \dots dx_{n}$$

Conditional Distribution of a Multivariate Function

Properties

Linearity

If , then

Transformation of Random Vector

Definition

Discrete

Let be a Random Vector with joint pmf and support , and be a vector Bijective transformation

Continuous

Let be a Random Vector with joint pdf and support , be a vector Bijective transformation, and

One-to-One Transformation Case

where is a Jacobian Matrix, and

Many-to-One Transformation Case

Let be a many-to-one transformation, , be a partition of a set whose each partition’s transformation result is one-to-one Then, where is a Jacobian Matrix for each partition, and , and is a union of the pairwise disjoint sets

CDF Technique

If is increasing, then

If is decreasing, then

Conditional Distribution

Conditional Distribution

Definition

where

Conditional Expectation

Definition

Conditional Expectation of a Random Variable

Conditional Expectation of a Function

Conditional Expectation with respect to a Sub-Sigma-Algebra

Consider a Probability Space , a Random Variable , and a sub-Sigma-Algebra . A conditional expectation of given , denoted as is a function which satisfies:

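Existence of Conditional Expectation

Define a measure $\nu(A) = \int_{A} X dP$ and the restriction $P|_{\mathcal{G}}$ of $P$ to $\mathcal{G}$, where $A \in \mathcal{G}$ and $E|X| < \infty$. Then, $\nu \ll P|_{\mathcal{G}}$ ($\nu$ is absolutely continuous with respect to $P|_{\mathcal{G}}$) and, by the Radon–Nikodym Theorem, there exists a Radon–Nikodym derivative $\frac{d\nu}{dP|_{\mathcal{G}}}$ satisfying $\nu(A) = \int_{A} \frac{d\nu}{dP|_{\mathcal{G}}} dP$ for all $A \in \mathcal{G}$, and it is called the conditional expectation of $X$ given $\mathcal{G}$.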

Conditional Expectation with respect to a Random Variable

Suppose a Probability Space , and random variables and . The conditional expectation of given , denoted as is defined as where is the sigma-field generated by random variable

Facts

If is -measurable, then , where is sub-Sigma-Field

Since is -measurable, -measurable function s.t. By the property of conditional expectation, .

Conditional Variance

Definition

Law of Total Expectation

Definition

Proof

By definition of conditional expectation Since is sub-Sigma-Field, and hold.

Then, By definition of Expected Value

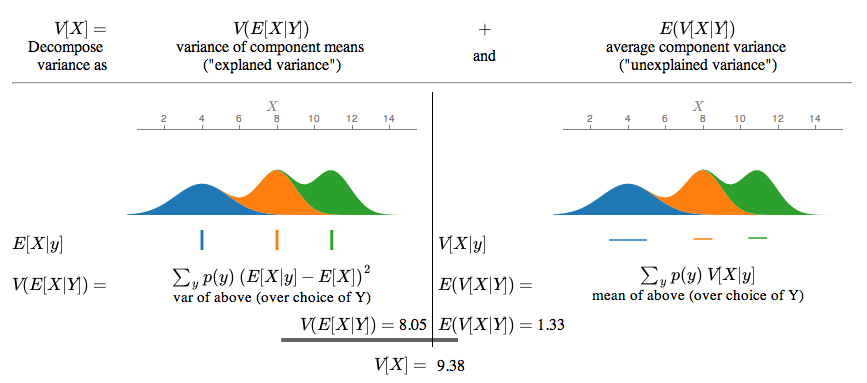

Law of Total Variance

Definition

The Correlation Coefficient

Covariance

Definition

Properties

Covariance with Itself

Covariance of Linear Combinations

Pearson Correlation Coefficient

Definition

Facts

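Let $E[Y|X]$ be linear in $X$, that is $E[Y|X] = a + bX$, then the Conditional Expectation and Conditional Variance will be $E[Y|X] = \mu_{Y} + \rho\frac{\sigma_{Y}}{\sigma_{X}}(X - \mu_{X})$ and $E[\operatorname{Var}(Y|X)] = \sigma_{Y}^{2}(1 - \rho^{2})$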

Independent Random Variable

Independent and Identically Distributed Random Variable

Definition

A Random Vector is independent and identically distributed if each Random Variable has the same Distribution and are mutually independent.

Facts

If is i.i.d. Random Vector, then

Extension to Several Random Variables

Covariance Matrix

Definition

Variance-Covariance Matrix

Let be an dimensional Random Vector with finite variance , and , then the variance-covariance matrix of is

Cross-Covariance Matrix

Let be dimensional random vectors with finite variance , and , then the cross-covariance matrix of is

Properties

Let be a Random Vector with finite variance , and be a matrix of constants, then

Let and be random vectors. where and are matrices of constants.

Facts

Every variance-covariance matrix is Positive Semi-Definite Matrix

Let $\mathbf{X}$ be a Random Vector such that no element of $\mathbf{X}$ is a linear combination of the remaining elements. Then, the Variance-Covariance Matrix of the random vector $\mathbf{X}$ is a Positive-Definite Matrix.

Some Special Distributions

Uniform Distribution

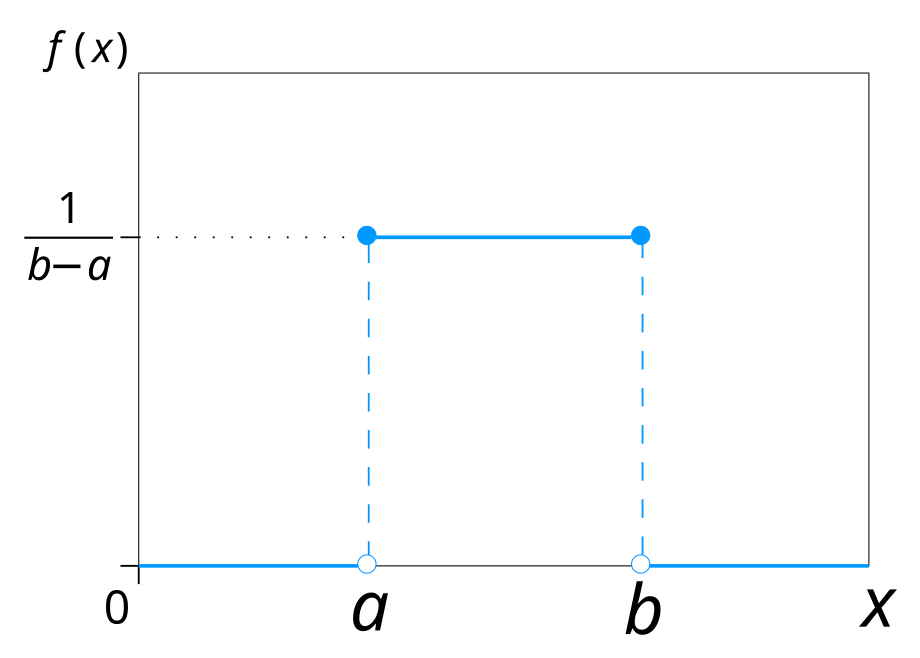

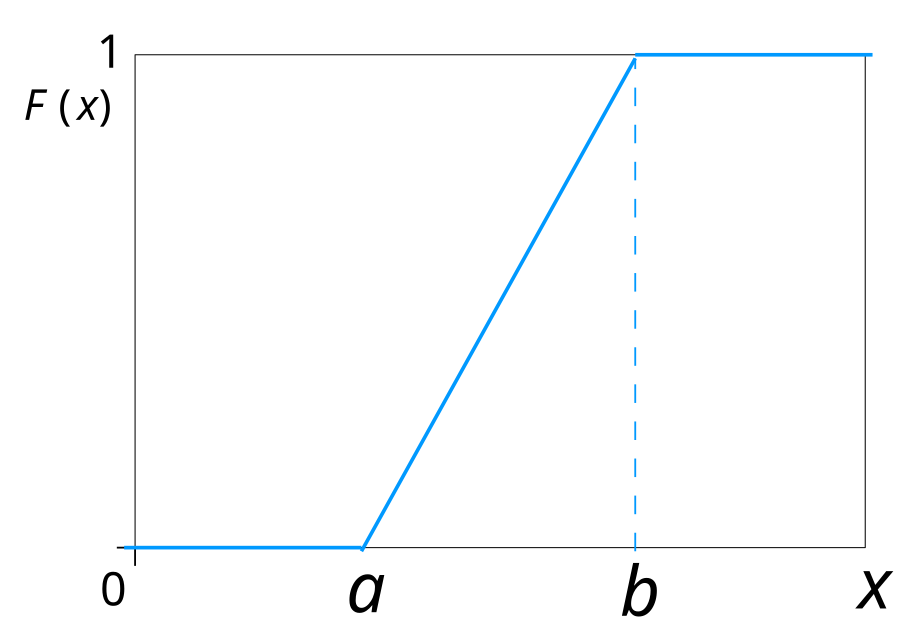

Definition

where and are the minimum and maximum values.

Properties

CDF

Mean

Variance

The Binomial and Related Distributions

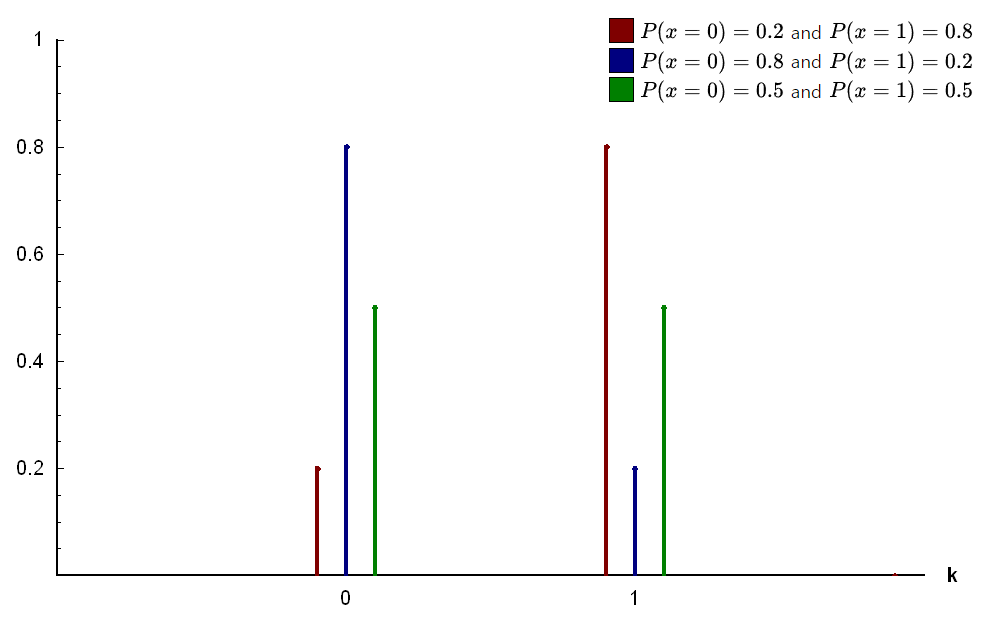

Bernoulli Distribution

Definition

where is a probability of success

number of success in a single trial with success probability

Bernoulli Process

The i.i.d. Random Vector with Bernoulli distribution

Properties

Mean

Variance

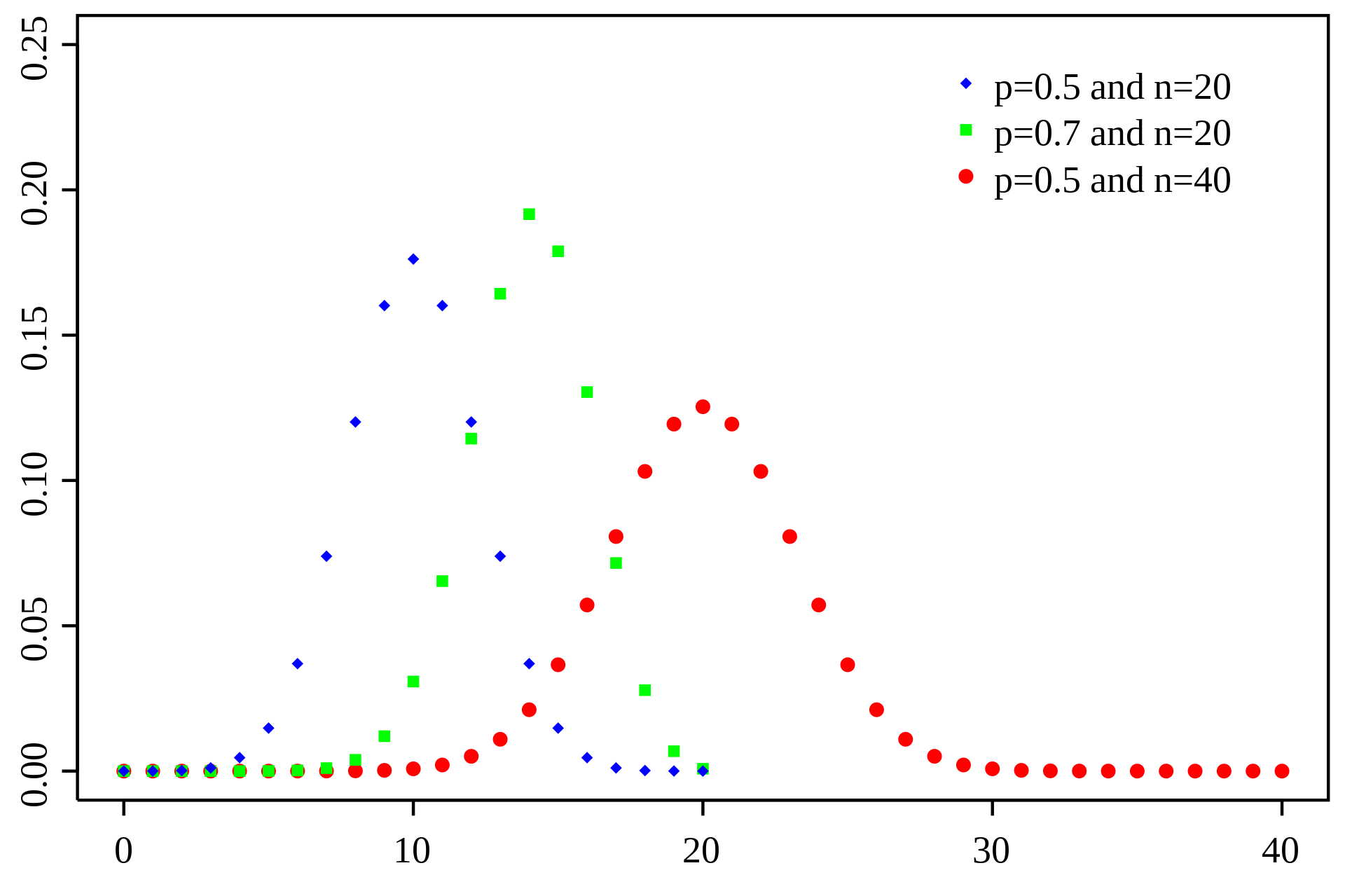

Binomial Distribution

Definition

where is the length of bernoulli process, and is a probability of success

The number of successes in length bernoulli process with success probability

Properties

Mean

Variance

MGF

Facts

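Let $X_{1}, \dots, X_{m}$ be independent random variables following binomial distributions $X_{i} \sim b(n_{i}, p)$ with a common success probability $p$, then $\sum_{i=1}^{m} X_{i} \sim b\left( \sum_{i=1}^{m} n_{i}, p \right)$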

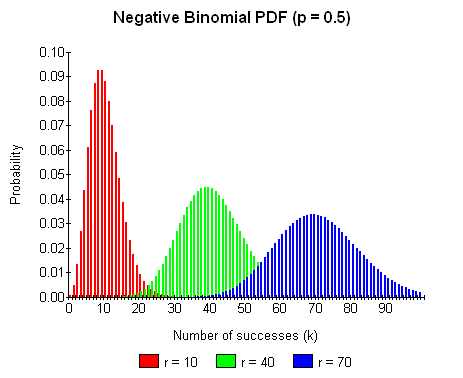

Negative Binomial Distribution

Definition

where is a number of successes, and is a probability in each experiment

The number of failures in a bernoulli process before the -th success

Properties

Mean

Variance

MGF

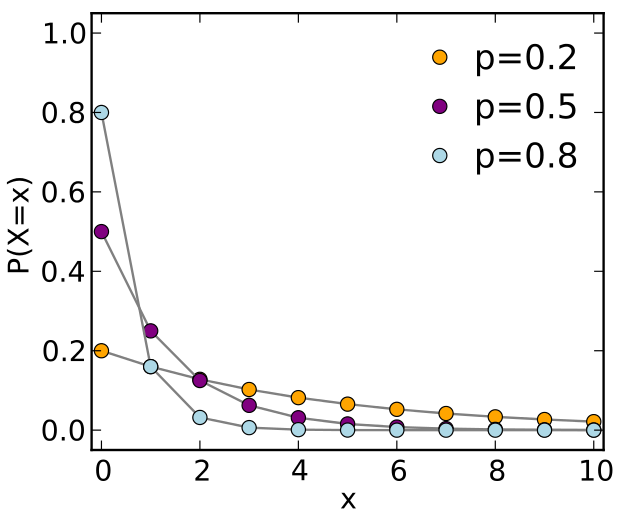

Geometric Distribution

Definition

where is a probability in each experiment

The number of failures before the 1st success

Properties

Mean

Variance

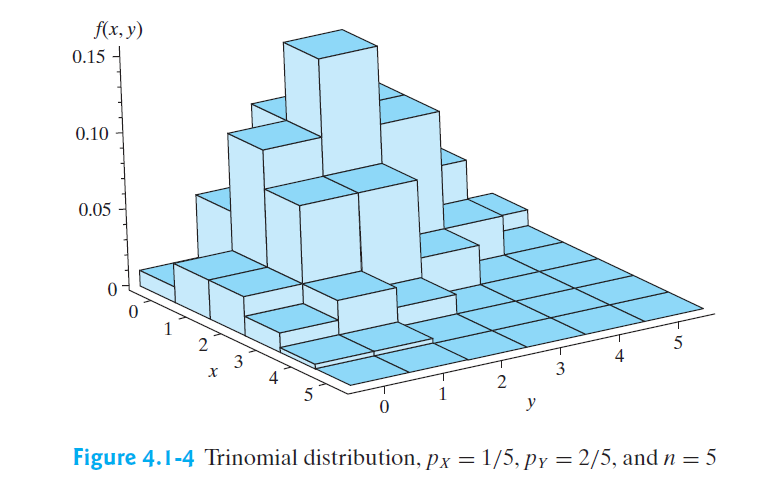

Trinomial Distribution

Definition

where is the number of trials, is a probability of category , and is a probability of category

Distribution that expands the outcomes of a binomial distribution to three

Properties

MGF

Marginal PDF

Conditional PDF

Multinomial Distribution

Definition

where is the number of trials, is a probability of category

Distribution that describes the probability of observing a specific combination of outcomes

Properties

where , , and

MGF

Marginal PDF

Each one-variable marginal pdf is Binomial Distribution, each two-variables marginal pdf is Trinomial Distribution, and so on.

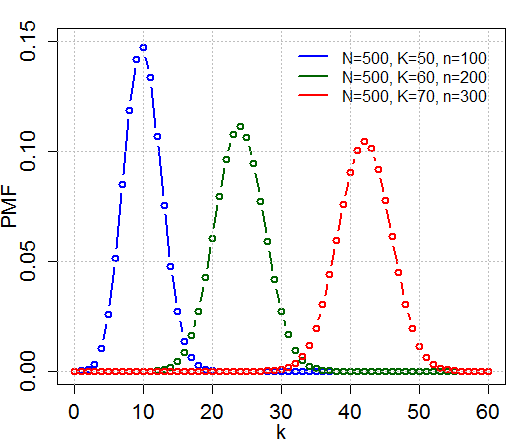

Hypergeometric Distribution

Definition

where is the population size, is the number of success, and is the number of trials,

Distribution that describes the probability of successes in draws, without replacement, from a population that contains successes.

Draws without replacement version of Binomial Distribution

Properties

Mean

Variance

Poisson Distribution

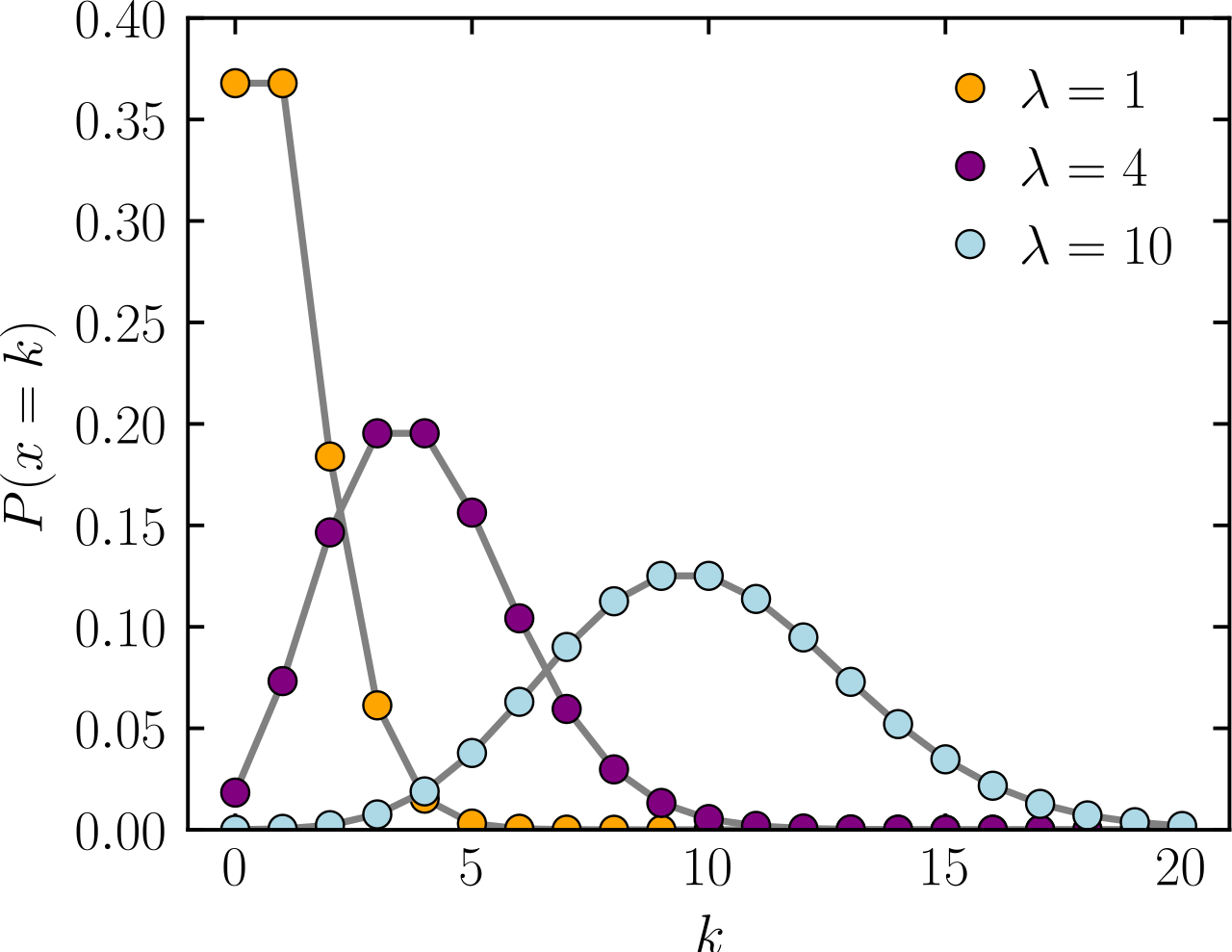

Poisson Distribution

Definition

where is the average number of occurrences in a fixed interval of time

The number of occurrences in a fixed interval of time with mean

Properties

where

Mean

Variance

MGF

Summation

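Let $X_{i} \sim \operatorname{Poisson}(\lambda_{i})$, and the $X_{i}$'s be independent, then $\sum_{i=1}^{n} X_{i} \sim \operatorname{Poisson}\left( \sum_{i=1}^{n} \lambda_{i} \right)$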

The Gamma, Chi-Squared, and Beta Distribution

Gamma Function

Definition

where

where

Gamma function is used as an extension of the factorial function

Properties

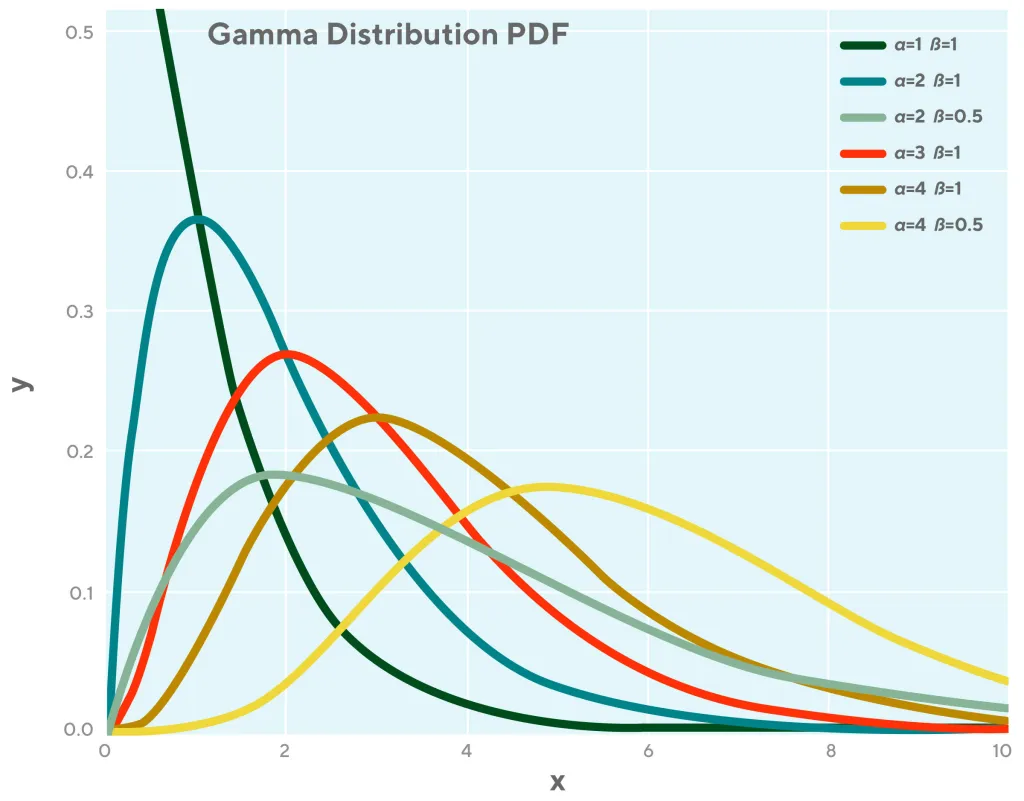

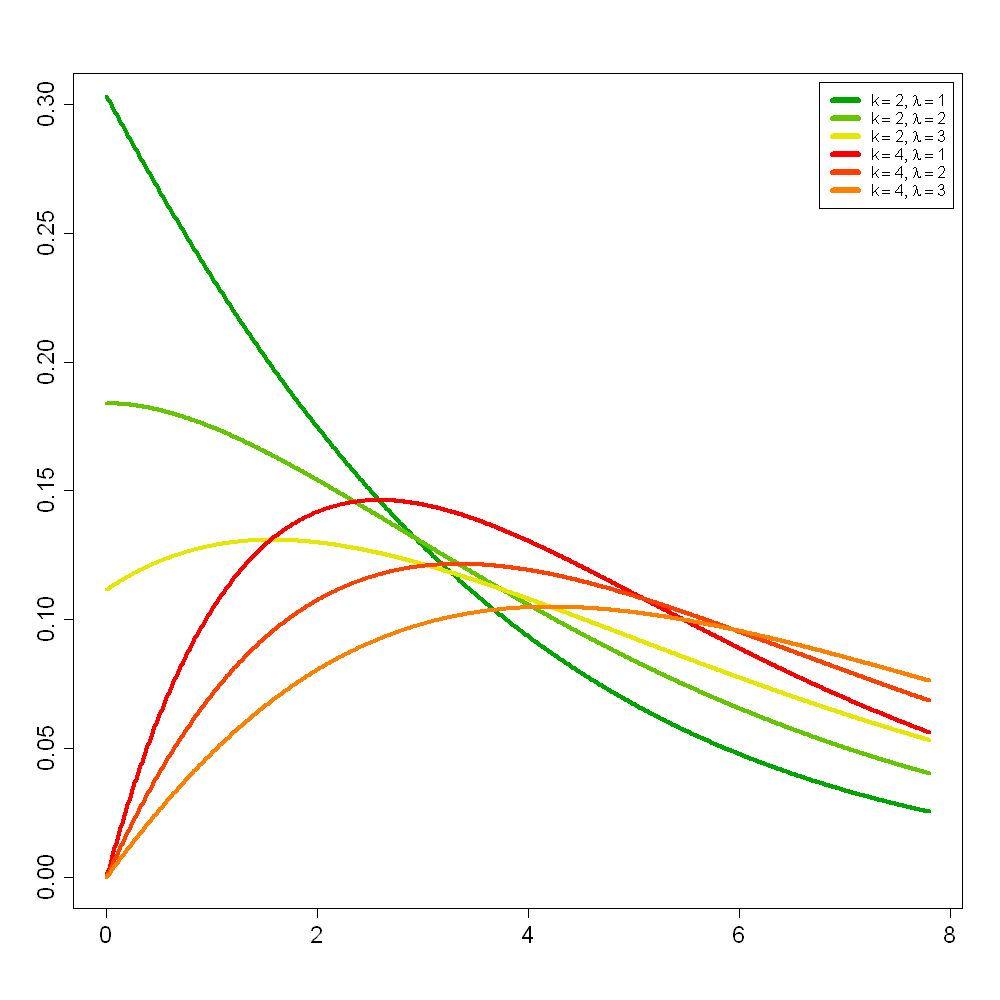

Gamma Distribution

Definition

where is the shape parameter, and is the scale parameter

Distribution that models the waiting time until occurring th events in a Poisson process with mean

Properties

where is the Gamma Function

Mean

Variance

MGF

where and

Sum of independent Gamma distributions

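Let the $X_{i}$'s be independent random variables following gamma distributions $X_{i} \sim \Gamma(\alpha_{i}, \beta)$ with a common scale parameter $\beta$, then $\sum_{i=1}^{n} X_{i} \sim \Gamma\left( \sum_{i=1}^{n} \alpha_{i}, \beta \right)$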

Scaling

Let , then,

Let , then,

Relationship with Poisson Distribution

Let be the time until the -th event in a poisson process with rate , then, the cdf and pdf of is

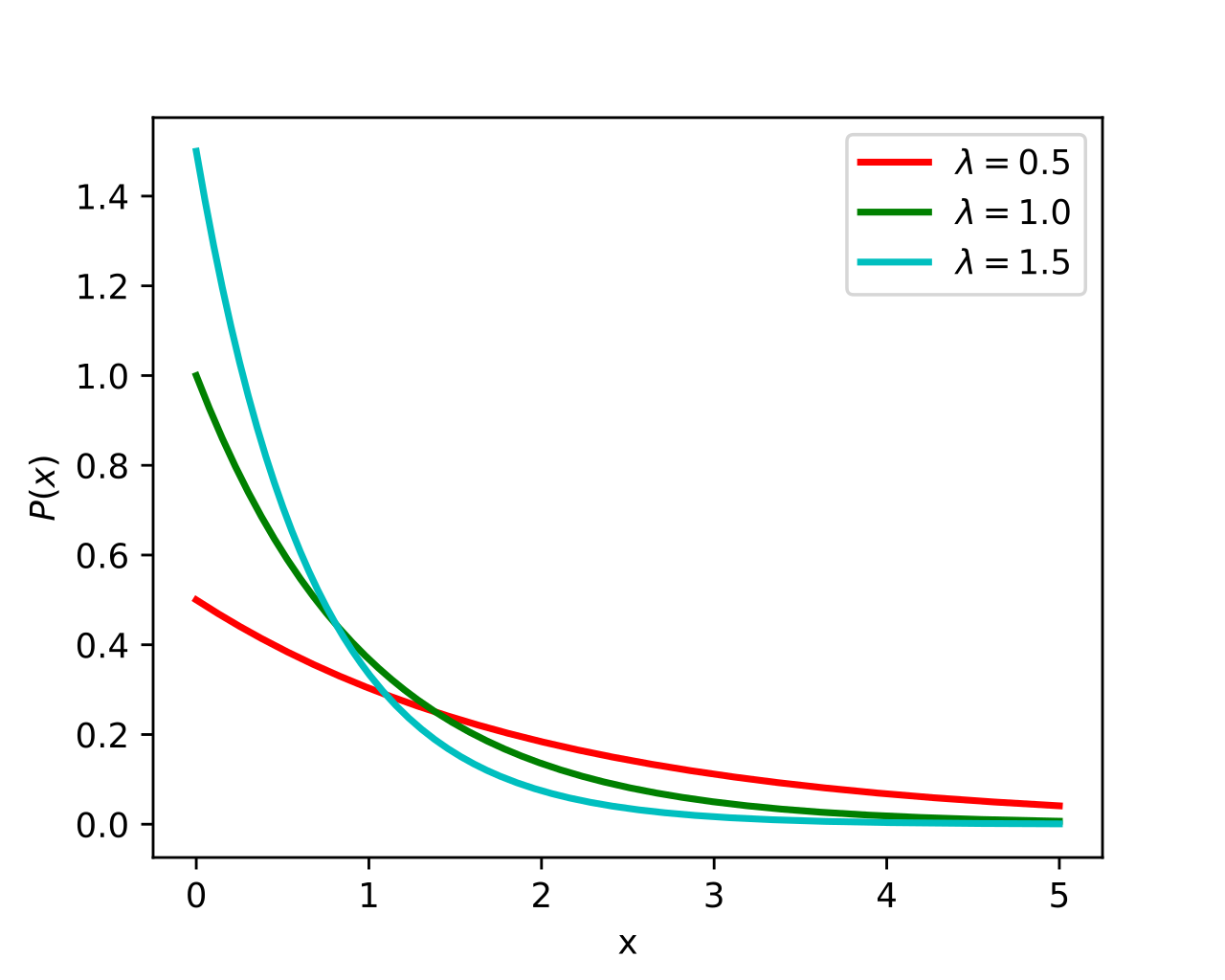

Exponential Distribution

Definition

where is the average number of events per fixed unit of time

Time interval between events in a Poisson process with mean

Properties

CDF

Mean

Variance

Memorylessness Property

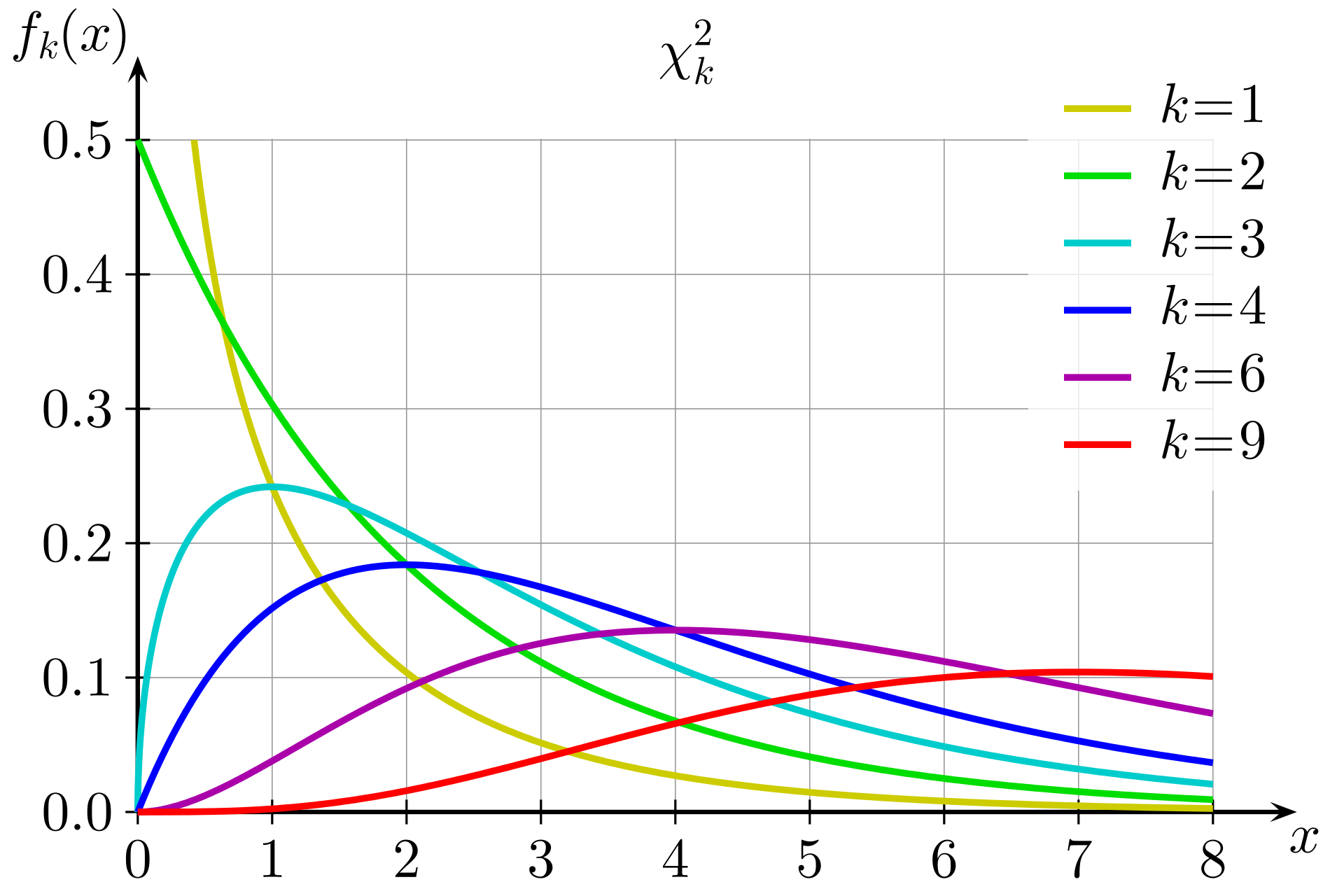

Chi-squared Distribution

Definition

where is the degrees of freedom

The sum of squares of $r$ independent standard normal random variables

Properties

Mean

Variance

MGF

Additivity

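Let the $X_{i}$'s be independent random variables following chi-squared distributions $X_{i} \sim \chi^{2}(r_{i})$, then $\sum_{i=1}^{n} X_{i} \sim \chi^{2}\left( \sum_{i=1}^{n} r_{i} \right)$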

Facts

Let and , and is independent of , then

Let , where are quadratic forms in , where each element of the is a Random Sample from If , then

- are independent

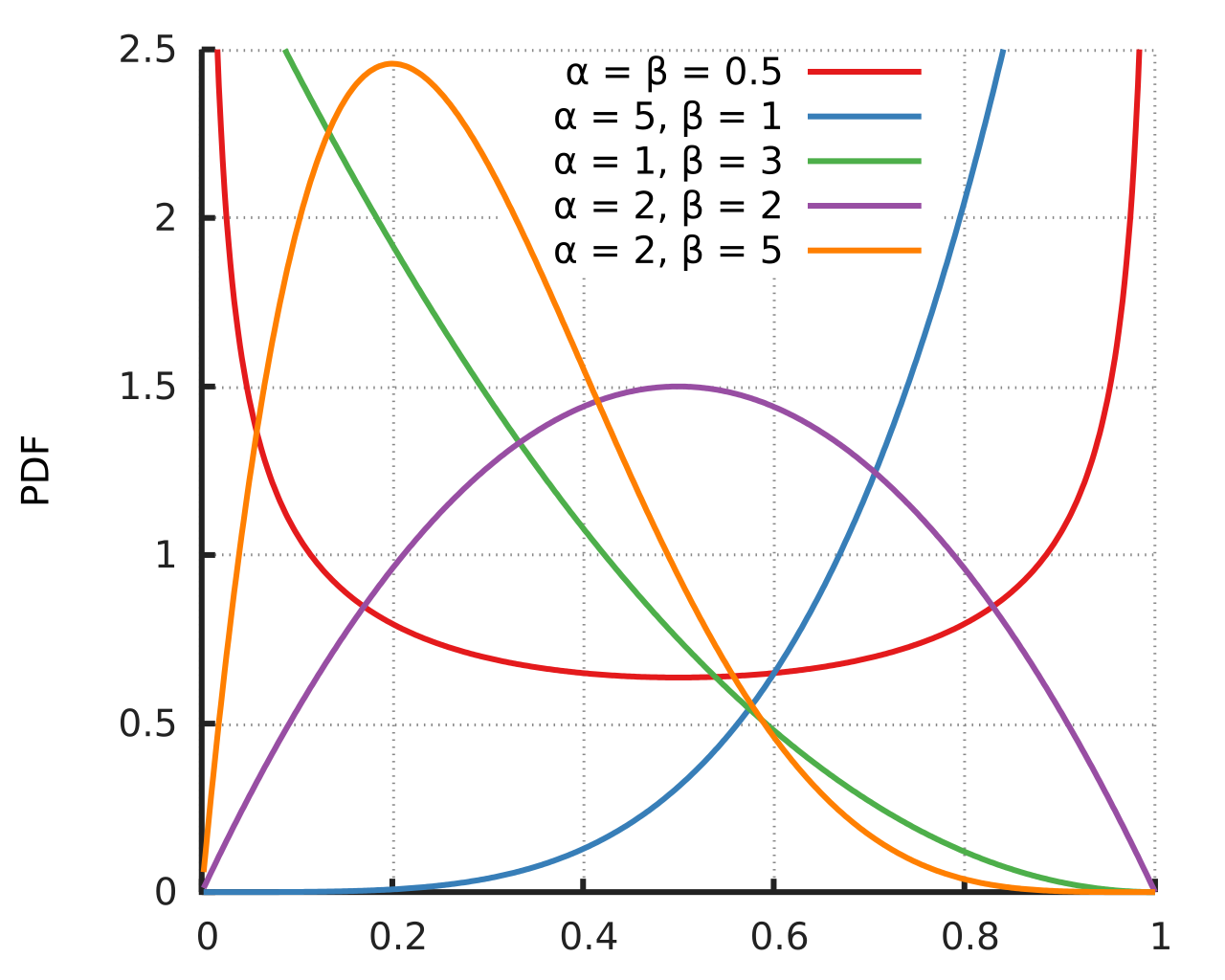

Beta Distribution

Definition

where are the shape parameters

Properties

Mean

Variance

Derivation from Gamma Distribution

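Let $X_{1} \sim \Gamma(\alpha, 1)$ and $X_{2} \sim \Gamma(\beta, 1)$ be independent random variables following gamma distributions, then $\frac{X_{1}}{X_{1} + X_{2}} \sim \operatorname{Beta}(\alpha, \beta)$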

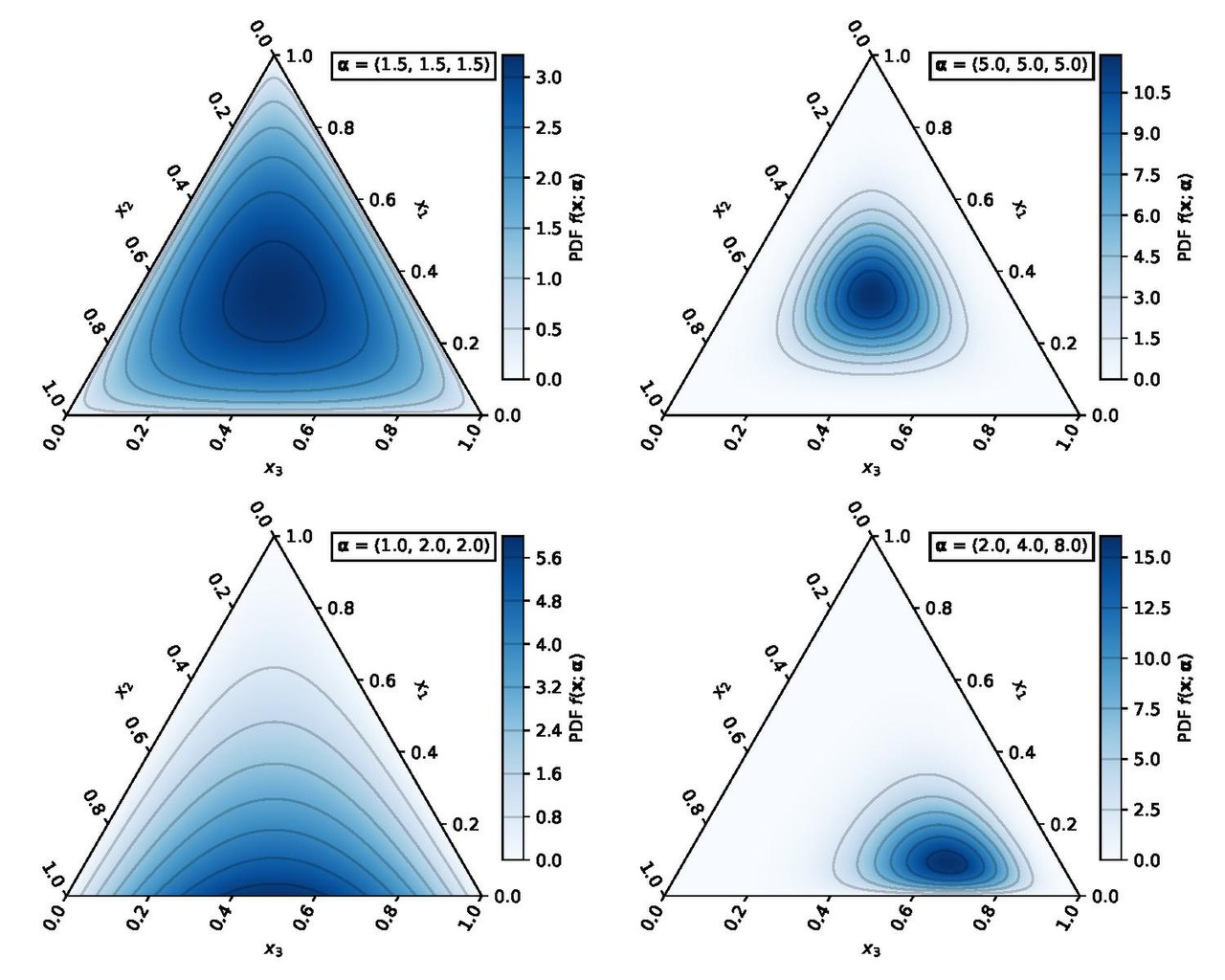

Dirichlet Distribution

Definition

where is the number of categories, and ‘s are the concentration parameters

A multivariate generalization of the Beta Distribution

Properties

Facts

If $K = 2$, then the Dirichlet distribution is a beta distribution.

The Normal Distribution

Normal Distribution

Definition

where is the location parameter(mean), and is the scale parameter(variance)

Standard Normal Distribution

Properties

Mean

Variance

MGF

Higher Order Moments

Sum of Normally Distributed Random Variables

Let be independent random variables following normal distribution, then

Relationship with Chi-squared Distribution

Let be a standard normal distribution, then

Facts

Let , then

The Multivariate Normal Distribution

Multivariate Normal Distribution

Definition

where $n$ is the number of dimensions, $\boldsymbol{\mu}$ is the vector of location parameters (mean vector), and $\boldsymbol{\Sigma}$ is the variance-covariance matrix (the scale parameter)

Standard Multivariate Normal Distribution

MGF

Properties

Mean

Variance

MGF

Affine Transformation

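Let $\mathbf{X} \sim N_{n}(\boldsymbol{\mu}, \boldsymbol{\Sigma})$ be a Random Vector following multivariate normal distribution, $\mathbf{A}$ be an $m \times n$ matrix, and $\mathbf{b}$ be an $m$ dimensional vector, then $\mathbf{A}\mathbf{X} + \mathbf{b} \sim N_{m}(\mathbf{A}\boldsymbol{\mu} + \mathbf{b}, \mathbf{A}\boldsymbol{\Sigma}\mathbf{A}^\intercal)$

Relationship with Chi-squared Distribution

Suppose $\mathbf{X} \sim N_{n}(\boldsymbol{\mu}, \boldsymbol{\Sigma})$ is a Random Vector following multivariate normal distribution with positive definite $\boldsymbol{\Sigma}$, then $(\mathbf{X} - \boldsymbol{\mu})^\intercal \boldsymbol{\Sigma}^{-1} (\mathbf{X} - \boldsymbol{\mu}) \sim \chi^{2}(n)$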

Facts

Let , be an , and be a -dimensional vector, then

Let , , , , where is and is vectors. Then, and are independent

Let , , and , where is , is matrices. and are independent

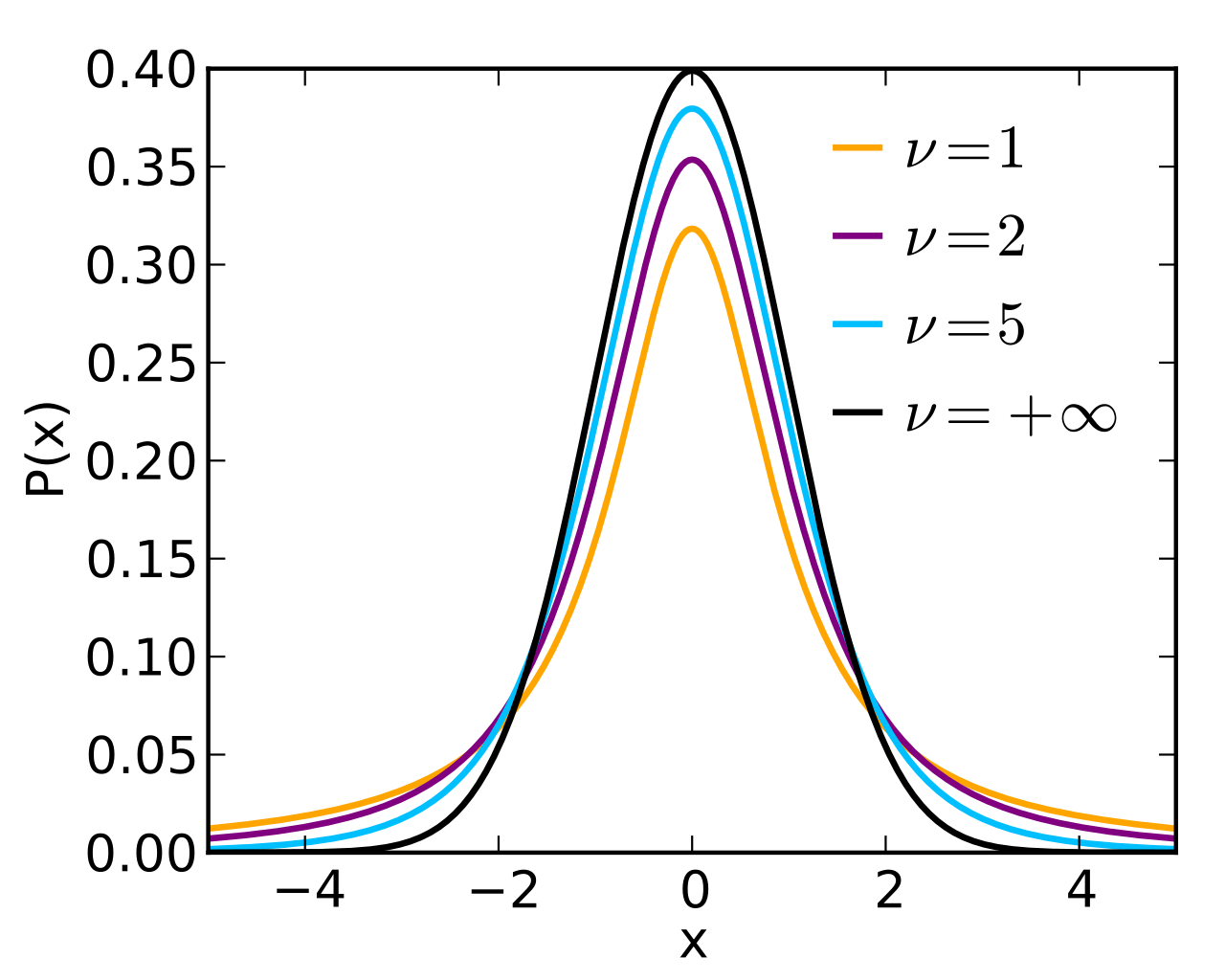

t- and F-Distributions

Student's t-Distribution

Definition

Let $Z \sim N(0, 1)$ follow a standard normal distribution, $V \sim \chi^{2}(r)$ follow a Chi-squared Distribution, and $Z, V$ be independent, then $T = \frac{Z}{\sqrt{V/r}} \sim t(r)$ where $r$ is the degrees of freedom

Properties

Mean

Variance

F-Distribution

Definition

Let $U \sim \chi^{2}(r_{1})$ and $V \sim \chi^{2}(r_{2})$ be independent random variables following Chi-squared distributions, then $F = \frac{U/r_{1}}{V/r_{2}} \sim F(r_{1}, r_{2})$ where $r_{1}, r_{2}$ are the degrees of freedom

Properties

$$f(x) = \frac{\Gamma(\frac{r_{1} + r_{2}}{2}) (\frac{r_{1}}{r_{2}})^{\frac{r_{1}}{2}} x^{\frac{r_{1}}{2}-1}} {\Gamma(\frac{r_{1}}{2}) \Gamma(\frac{r_{2}}{2}) (\frac{r_{1}}{r_{2}}x+1)^{(r_{1} + r_{2})/2}},\quad x>0$$

Mean

$$E(X) = \frac{r_{2}}{r_{2} - 2},\quad r_{2}>2$$

Variance

$$\operatorname{Var}(X) = \frac{2r_{2}^{2}(r_{1}+r_{2}-2)}{r_{1} (r_{2}-2)^{2}(r_{2}-4)},\quad r_{2}>4$$

Student's Theorem

Definition

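Let $X_{1}, \dots, X_{n}$ be an i.i.d. Random Vector following Normal Distribution $N(\mu, \sigma^{2})$, $\bar{X} = \frac{1}{n}\sum_{i=1}^{n} X_{i}$, and $S^{2} = \frac{1}{n-1}\sum_{i=1}^{n} (X_{i} - \bar{X})^{2}$, then

- $\bar{X} \sim N\left( \mu, \frac{\sigma^{2}}{n} \right)$

- $\bar{X}$ and $S^{2}$ are independent

- $\frac{(n-1)S^{2}}{\sigma^{2}} \sim \chi^{2}(n-1)$

- $\frac{\bar{X} - \mu}{S/\sqrt{n}} \sim t(n-1)$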

Consistency, and Limiting Distributions

Convergence in Probability

Convergence in Probability

Definition

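Let $\{X_{n}\}$ be a Sequence of random variables and $X$ be a Random Variable, then $X_{n}$ converges in probability to $X$ if $\lim_{n \to \infty} P(|X_{n} - X| \geq \epsilon) = 0$ for all $\epsilon > 0$, and this is denoted by $X_{n} \xrightarrow{P} X$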

Facts

If the Sequence of random variables $\{X_{n}\}$ converges Almost Surely to $X$, then it also converges in probability to $X$. The converse does not hold in general.
Convergence in Probability implies convergence in distribution

Convergence in probability has Linearity

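Continuous Mapping Theorem

Definition

Continuous functions preserve convergence (in probability, Almost Surely, or in distribution) of a Sequence of random variables to limits.

Consider a Sequence of random variables $\{X_{n}\}$ defined on the same Probability Space, and a Continuous Function $g$ on the space. Then,

- Convergence in Probability to a Constant: $X_{n} \xrightarrow{P} c \Rightarrow g(X_{n}) \xrightarrow{P} g(c)$

- Convergence in Probability: $X_{n} \xrightarrow{P} X \Rightarrow g(X_{n}) \xrightarrow{P} g(X)$

- Almost Sure Convergence: $X_{n} \xrightarrow{a.s.} X \Rightarrow g(X_{n}) \xrightarrow{a.s.} g(X)$

- Convergence in Distribution: $X_{n} \xrightarrow{D} X \Rightarrow g(X_{n}) \xrightarrow{D} g(X)$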

Consistency

Definition

A Statistic $T_{n}$ is called a consistent estimator of $\theta$ if $T_{n}$ converges in probability to $\theta$

Convergence in Distribution

Convergence in Distribution

Definition

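Let $\{X_{n}\}$ be a Sequence of random variables with CDF $F_{X_{n}}$, $X$ be a Random Variable with cdf $F_{X}$, and $C(F_{X})$ be the set of every point at which $F_{X}$ is continuous, then $X_{n}$ converges in distribution to $X$ if $\lim_{n \to \infty} F_{X_{n}}(x) = F_{X}(x)$ for all $x \in C(F_{X})$, and this is denoted by $X_{n} \xrightarrow{D} X$. The distribution of $X$ is called the limiting distribution or asymptotic distribution of $X_{n}$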

Facts

Convergence in Probability implies convergence in distribution

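Slutsky's Theorem

Definition

Let $\{X_{n}\}, \{A_{n}\}, \{B_{n}\}$ be Sequences of random variables, $X$ be a Random Variable, and $a, b$ be constants. If $X_{n} \xrightarrow{D} X$, $A_{n} \xrightarrow{P} a$, and $B_{n} \xrightarrow{P} b$, then $A_{n} + B_{n}X_{n} \xrightarrow{D} a + bX$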

Let $\{P_{n}\}$ be a sequence of distributions and $P$ a target distribution. Then,

- The convergence of the KL-Divergence to zero implies that the JS-Divergence also converges to zero.

- The convergence of the JS-Divergence to zero is equivalent to the convergence of the Total Variation Distance to zero.

- The convergence of the Total Variation Distance to zero implies that the Wasserstein Distance also converges to zero (on a bounded metric space).

- The convergence of the Wasserstein Distance to zero is equivalent to the Convergence in Distribution of the sequence (on a bounded metric space; in general, Wasserstein convergence additionally requires convergence of the corresponding moments).

Boundedness in Probability

Definition

Facts

Let $\{X_{n}\}$ be a Sequence of random variables, and $X$ be a Random Variable. If $X_{n} \xrightarrow{D} X$, then $\{X_{n}\}$ is bounded in probability

Big O Notation

Definition

Let be the rate of convergence, and be a Sequence, then

Big O in Probability Notation

Definition

Extension of Big O Notation for Random Variable

Let be the rate of convergence, and be a Sequence of random variables, then

Facts

If $X_{n} = O_{p}(1)$, then $\{X_{n}\}$ is said to be bounded in probability

Delta Method

Definition

Univariate Delta Method

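Let $\{X_{n}\}$ be a sequence of random variables satisfying $\sqrt{n}(X_{n} - \theta) \xrightarrow{D} N(0, \sigma^{2})$, $g$ be a differentiable function at $\theta$, and $g'(\theta) \neq 0$, then $\sqrt{n}(g(X_{n}) - g(\theta)) \xrightarrow{D} N(0, \sigma^{2}g'(\theta)^{2})$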

Proof

By Taylor Series approximation

Where by the assumption. By the continuous mapping theorem, , which is a function of , also converges to random variable, so it is Boundedness in Probability by the property of converging random vector

Therefore, by the property of sequence of random variables bounded in probability

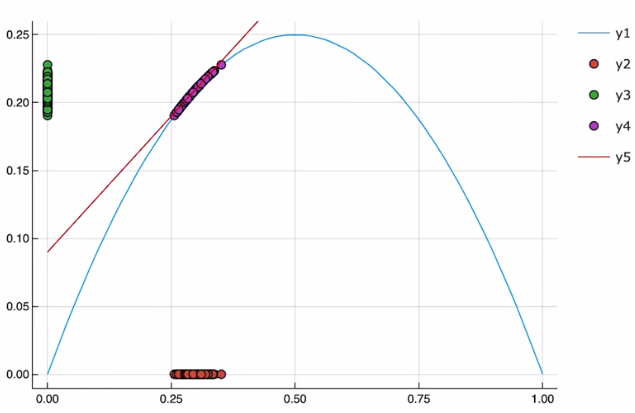

Examples

Estimation of the sample variance of Bernoulli Distribution

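Let $X_{1}, \dots, X_{n}$ be i.i.d. $\operatorname{Bernoulli}(p)$, then $\sqrt{n}(\bar{X} - p) \xrightarrow{D} N(0, p(1-p))$ by CLT

With $g(p) = p(1-p)$ and $g'(p) = 1 - 2p$, $\sqrt{n}(g(\bar{X}) - g(p)) \xrightarrow{D} N(0, (1-2p)^{2}p(1-p))$ by Delta method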

Let , then

Therefore, the sample mean and variance follow such distributions

Visualization

- x-axis:

- y_axis:

- y1: ^[variance by mean]

- y2: ^[sample mean]

- y3, y4: ^[sample variance that calculated by the sample mean]

- y5: first order approximated line at

If sample size , variance of sample mean . So, sample variance can be well approximated by first-order approximation.
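To make the quality of the first-order approximation concrete, the sketch below compares the exact variance of the plug-in estimator $\hat{p}(1-\hat{p})$ with the delta-method value $(1-2p)^{2}p(1-p)/n$. It assumes $\hat{p} = S/n$ with $S \sim \operatorname{Binomial}(n, p)$; the values $n = 200$ and $p = 0.3$ and the function names are hypothetical choices for illustration.

```python
import math

def exact_variance_of_plugin(n, p):
    """Exact Var[g(p_hat)] for g(t) = t(1 - t), p_hat = S/n, S ~ Binomial(n, p)."""
    first = second = 0.0
    for k in range(n + 1):
        prob = math.comb(n, k) * p**k * (1 - p) ** (n - k)  # binomial pmf
        t = k / n
        g = t * (1 - t)
        first += prob * g        # accumulates E[g(p_hat)]
        second += prob * g * g   # accumulates E[g(p_hat)^2]
    return second - first**2

def delta_variance(n, p):
    """First-order delta-method approximation: g'(p)^2 * p(1 - p) / n."""
    return (1 - 2 * p) ** 2 * p * (1 - p) / n

n, p = 200, 0.3
exact = exact_variance_of_plugin(n, p)
approx = delta_variance(n, p)
print(exact, approx)
```

The two values should agree closely for this $n$; the approximation degrades as $p$ approaches $1/2$, where $g'(p) = 0$ and the first-order term vanishes.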

MGF Technique

Definition

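Let $\{X_{n}\}$ be a Sequence of random variables with MGF $M_{X_{n}}(t)$ and $X$ be a Random Variable with MGF $M_{X}(t)$, both existing for $|t| < h$. If $\lim_{n \to \infty} M_{X_{n}}(t) = M_{X}(t)$ for $|t| < h$, then $X_{n} \xrightarrow{D} X$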

A technique calculating limiting distribution using Moment Generating Function

Facts

Central Limit Theorem

Central Limit Theorem

Definition

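Let $X_{1}, \dots, X_{n}$ be an i.i.d. Random Sample from a distribution with mean $\mu$, variance $\sigma^{2} < \infty$, then $\frac{\sqrt{n}(\bar{X}_{n} - \mu)}{\sigma} \xrightarrow{D} N(0, 1)$

For i.i.d. random variables that have finite variance, the standardized sample mean converges in distribution to the standard Normal Distribution.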
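A quick simulation can illustrate the statement. The sketch below, under the assumption of Uniform(0, 1) draws (mean $1/2$, variance $1/12$), standardizes many sample means and checks that they behave roughly like standard normal values; the sample size, replication count, and seed are arbitrary choices.

```python
import math
import random
import statistics

def standardized_means(n, reps, rng):
    """Return reps values of sqrt(n) * (xbar - mu) / sigma for Uniform(0, 1) samples."""
    mu, sigma = 0.5, math.sqrt(1 / 12)  # mean and sd of Uniform(0, 1)
    out = []
    for _ in range(reps):
        xbar = sum(rng.random() for _ in range(n)) / n
        out.append(math.sqrt(n) * (xbar - mu) / sigma)
    return out

rng = random.Random(0)  # fixed seed so the run is reproducible
z = standardized_means(n=30, reps=5000, rng=rng)
z_mean = statistics.fmean(z)
z_sd = statistics.pstdev(z)
frac_within = sum(abs(v) <= 1.96 for v in z) / len(z)  # should be near 0.95
print(z_mean, z_sd, frac_within)
```

A simulation like this only illustrates the limit; it says nothing about the rate of convergence for heavier-tailed distributions.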

Asymptotics for Multivariate Distributions

Asymptotics for Multivariate Distributions

Definition

Convergence in Probability

Let be a Sequence of random vectors and be a Random Vector, then converges in probability to if , and denoted by

Let be a Sequence of random vectors and be a Random Vector, then

Convergence in Distribution

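Let $\{\mathbf{X}_{n}\}$ be a Sequence of random vectors with CDF $F_{n}$, $\mathbf{X}$ be a Random Vector with cdf $F$, and $C(F)$ be the set of every point at which $F$ is continuous, then $\mathbf{X}_{n}$ converges in distribution to $\mathbf{X}$ if $\lim_{n \to \infty} F_{n}(\mathbf{x}) = F(\mathbf{x})$ for all $\mathbf{x} \in C(F)$, and this is denoted by $\mathbf{X}_{n} \xrightarrow{D} \mathbf{X}$. The distribution of $\mathbf{X}$ is also called the limiting distribution or asymptotic distribution of $\mathbf{X}_{n}$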

Continuous Mapping Theorem

Let be a Continuous Function

MGF Technique

Let be a Sequence of random vectors with MGF and be a Random Vector with MGF , then

Central Limit Theorem

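Let $\{\mathbf{X}_{n}\}$ be an i.i.d. Sequence of random vectors from a distribution with mean $\boldsymbol{\mu}$, variance-covariance matrix $\boldsymbol{\Sigma}$, then $\sqrt{n}(\bar{\mathbf{X}}_{n} - \boldsymbol{\mu}) \xrightarrow{D} N_{p}(\mathbf{0}, \boldsymbol{\Sigma})$

For i.i.d. random vectors that have a finite variance-covariance matrix, the centered and scaled sample mean converges in distribution to a multivariate Normal Distribution.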

Delta Method

Let be a Sequence of p-dimensional random vectors with , , be a matrix, and , then

Facts

Let , then

Let be a Sequence of p-dimensional random vectors, , be a constant matrix, and be a dimensional constant vector, then

Some Elementary Statistical Inferences

Sample and Statistic

Random Sample

Definition

A realization of i.i.d. Random Vector

Properties

Let be random samples, be constant matrix, and be constant matrix, then the Expected Value and Covariance Matrix of random vectors become

Statistic

Definition

A quantity computed from values in a Random Sample

Bias of an Estimator

Definition

Let be a Random Sample from where is a parameter, and be a Statistic.

$T$ is an unbiased estimator of $\theta$ if $E[T] = \theta$. Equivalently, an estimator is unbiased if its bias $E[T] - \theta$ is equal to zero for all values of the parameter $\theta$.
Order Statistic

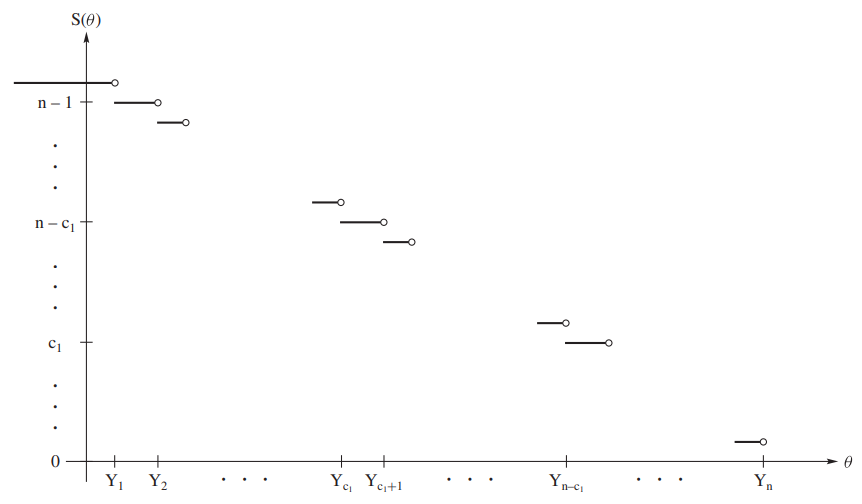

Order Statistic

Definition

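Let $X_{1}, \dots, X_{n}$ be a Random Sample from a population with a PDF $f$ and CDF $F$. And let $Y_{1}$ be the smallest of the $X_{i}$'s, $Y_{2}$ be the 2nd smallest of the $X_{i}$'s, $\dots$, and $Y_{n}$ be the largest of the $X_{i}$'s, then $Y_{1} < Y_{2} < \dots < Y_{n}$ is called the order statistic of $X_{1}, \dots, X_{n}$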

Properties

Joint PDF

where

Marginal PDF

Joint PDF of and

Suppose

Joint PDF of

Suppose where

Quantiles

Definition

Let be a Random Variable with cdf , and be a -th quantile of

Properties

Estimator of Quantile

Let $Y_{1} < Y_{2} < \dots < Y_{n}$ be order statistics, then we can define an estimator of the quantile $\xi_{p}$ using the order statistic $Y_{k}$ where $k = [p(n+1)]$

and call $Y_{k}$ the $p$-th sample quantile

Confidence Intervals of Quantiles

where

Select satisfying the equation

Tolerance Limits for Distributions

Tolerance Limits for Distributions

Definition

Let be Random Sample from a distribution with cdf , and be Order Statistic, then If , then is tolerance limits for of the probability for the distribution of

Properties

Joint PDF

Computation of

where

A probability that the tolerance interval contains of the probability

Introduction to Hypothesis Testing

Hypothesis Testing

Definition

Types of Errors

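| | $H_{0}$ is true | $H_{1}$ is true |
| --- | --- | --- |
| Do not reject $H_{0}$ | correct decision | type 2 error ($\beta$) |
| Reject $H_{0}$ | type 1 error ($\alpha$) | correct decision |

Let the set where $H_{0}$ is rejected be a rejection region $C$ and a statistic for testing be a test statistic, then

- the size $\alpha$ is the maximum probability of a type 1 error over the null hypothesis, and a test with this property is called a test of size $\alpha$

- the power of the test is the probability of rejecting $H_{0}$ when $H_{1}$ is true

- the probability of the type 2 error is $\beta = 1 - \text{power}$

p-value

The probability, computed under $H_{0}$, of obtaining test results at least as extreme as the result actually observed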

Goodness-of-Fit Test for Multinomial Distribution

Definition

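Let $(X_{1}, \dots, X_{k})$ follow a Multinomial Distribution with $n$ trials and category probabilities $(p_{1}, \dots, p_{k})$, and consider a null hypothesis $H_{0}: p_{i} = p_{i0}$ for all $i$. Then, the test Statistic, which approximately follows a Chi-squared Distribution with $k - 1$ degrees of freedom under $H_{0}$, is defined as $Q = \sum_{i=1}^{k} \frac{(X_{i} - np_{i0})^{2}}{np_{i0}}$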
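As an illustration, the Pearson statistic $\sum_{i} (O_{i} - E_{i})^{2}/E_{i}$ with $E_{i} = np_{i0}$ can be computed directly. This is a minimal sketch with hypothetical counts from 120 die rolls; the function name is an assumption, and the comparison with a critical value is left to a table or a statistics library.

```python
def chi_square_gof(observed, null_probs):
    """Return (Pearson statistic, degrees of freedom) for a multinomial null."""
    if len(observed) != len(null_probs):
        raise ValueError("observed and null_probs must have the same length")
    n = sum(observed)
    expected = [n * p for p in null_probs]  # expected counts under H0
    stat = sum((o - e) ** 2 / e for o, e in zip(observed, expected))
    return stat, len(observed) - 1

# Hypothetical data: 120 rolls of a die, H0: every face has probability 1/6.
observed = [18, 22, 20, 25, 15, 20]
stat, df = chi_square_gof(observed, [1 / 6] * 6)
print(stat, df)  # statistic is about 2.9 with 5 degrees of freedom
```

With 5 degrees of freedom the 5% critical value is about 11.07, so these hypothetical counts would not lead to rejecting $H_{0}$.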

Homogeneity Test for Multinomial Distribution

Definition

Let and consider a null hypothesis . Then, the test Statistic, which follows Chi-squared Distribution, is defined as where

Independence Test for Two Discrete Variables

Definition

Let category variables and and consider a null hypothesis are independent. Then, the test Statistic, which follows Chi-squared Distribution, is defined as where , , ,

The Method of Monte Carlo

Inverse Transform Sampling

Definition

We can generate $X$ with CDF $F$ by using the inverse function of the CDF and a uniform random variable: $X = F^{-1}(U)$ where $U \sim U(0, 1)$

Facts

Let $X \sim F$ and $F$ be a continuous CDF, then $F(X) \sim U(0, 1)$
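The technique can be sketched for a distribution whose CDF inverts in closed form. The example below assumes an exponential target with rate $\lambda$, for which $F(x) = 1 - e^{-\lambda x}$ and $F^{-1}(u) = -\ln(1-u)/\lambda$; the rate, seed, and sample size are arbitrary.

```python
import math
import random

def sample_exponential(rate, rng):
    """Draw from Exponential(rate) by applying the inverse CDF to a uniform draw."""
    u = rng.random()                   # U ~ Uniform[0, 1)
    return -math.log(1.0 - u) / rate   # F^{-1}(u); 1 - u lies in (0, 1], so log is safe

rng = random.Random(42)
draws = [sample_exponential(2.0, rng) for _ in range(20000)]
sample_mean = sum(draws) / len(draws)  # should be close to 1 / rate = 0.5
print(sample_mean)
```

When $F^{-1}$ has no closed form, a numerical root finder on $F(x) - u$ or a different method such as accept-reject is usually used instead.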

Box-Muller Transformation

Definition

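Let $U_{1}, U_{2} \sim U(0, 1)$ be independent, $X_{1} = \sqrt{-2\ln U_{1}}\cos(2\pi U_{2})$, and $X_{2} = \sqrt{-2\ln U_{1}}\sin(2\pi U_{2})$, then $X_{1}, X_{2} \sim N(0, 1)$ and $X_{1}, X_{2}$ are independent

The method that generates pairs of i.i.d. standard normal random variables by using two uniform random variables (equivalently, $-2\ln U_{1}$ follows an Exponential Distribution)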
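A minimal sketch of the transformation, assuming the standard form $X_{1} = \sqrt{-2\ln U_{1}}\cos(2\pi U_{2})$, $X_{2} = \sqrt{-2\ln U_{1}}\sin(2\pi U_{2})$ with independent uniforms $U_{1}, U_{2}$; the seed and sample size are arbitrary.

```python
import math
import random
import statistics

def box_muller(rng):
    """Return one pair of independent standard normal draws."""
    u1 = 1.0 - rng.random()   # shifted to (0, 1] so that log(u1) is defined
    u2 = rng.random()
    r = math.sqrt(-2.0 * math.log(u1))  # radius; r^2 is exponential with mean 2
    theta = 2.0 * math.pi * u2          # uniformly distributed angle
    return r * math.cos(theta), r * math.sin(theta)

rng = random.Random(1)
pairs = [box_muller(rng) for _ in range(10000)]
xs = [a for a, _ in pairs]
ys = [b for _, b in pairs]
cross_moment = statistics.fmean(a * b for a, b in pairs)  # near 0 if uncorrelated
print(statistics.fmean(xs), statistics.pstdev(xs), cross_moment)
```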

Accept Reject Generation Algorithm

Definition

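Let $Y \sim g$ and $U \sim U(0, 1)$ be independent random variables, $f$ be a PDF which we want to sample, and $M$ be a constant such that $f(x) \leq Mg(x)$ for all $x$

- step 1: Generate $Y$ and $U$.

- step 2: If $U \leq \frac{f(Y)}{Mg(Y)}$, accept $X = Y$; otherwise, go to step 1.

- step 3: Repeat steps 1 and 2 until the required number of samples is obtained.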
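The steps can be sketched as follows. The target here is a hypothetical choice, the Beta(2, 2) density $f(x) = 6x(1-x)$ on $[0, 1]$, with a Uniform(0, 1) proposal $g(x) = 1$ and envelope constant $M = 1.5$, which is the maximum of $f$.

```python
import random

def target_pdf(x):
    """Beta(2, 2) density on [0, 1]; its maximum is 1.5 at x = 0.5."""
    return 6.0 * x * (1.0 - x)

M = 1.5  # envelope constant: target_pdf(x) <= M * g(x) with proposal density g(x) = 1

def accept_reject(rng):
    """Draw one value from target_pdf using a Uniform(0, 1) proposal."""
    while True:
        y = rng.random()                 # step 1: proposal draw Y ~ g
        u = rng.random()                 # step 1: independent U ~ Uniform(0, 1)
        if u <= target_pdf(y) / (M * 1.0):
            return y                     # step 2: accept Y as a draw from f
        # otherwise reject and repeat from step 1

rng = random.Random(7)
draws = [accept_reject(rng) for _ in range(10000)]
mean = sum(draws) / len(draws)
var = sum((d - mean) ** 2 for d in draws) / len(draws)
print(mean, var)  # Beta(2, 2) has mean 0.5 and variance 0.05
```

The expected acceptance rate is $1/M$, so a tighter envelope (smaller $M$) wastes fewer proposals.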

Bootstrap Procedures

Bootstrapping

Definition

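Let $X$ be a random variable with CDF $F$, $\mathbf{X} = (X_{1}, \dots, X_{n})$ be a Random Sample of $X$, and $\hat{\theta}$ be a point estimator of $\theta$. Then, the bootstrap sample $\mathbf{X}^{*}$ is an $n$-dimensional sample vector drawn with replacement from the vector of original samples $\mathbf{X}$

Bootstrap Confidence Interval

Draw $B$ (large number) bootstrap samples and calculate the confidence interval using the samples.

Calculate point estimators $\hat{\theta}_{1}^{*}, \dots, \hat{\theta}_{B}^{*}$ using the bootstrap samples, and define order statistics $\hat{\theta}_{(1)}^{*} \leq \dots \leq \hat{\theta}_{(B)}^{*}$ for the estimators. Then, the $(1-\alpha)100\%$ bootstrap confidence interval for $\theta$ is $(\hat{\theta}_{(m)}^{*}, \hat{\theta}_{(B+1-m)}^{*})$, where $m = [(\alpha/2)B]$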
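The percentile procedure can be sketched in a few lines. This is an illustrative implementation under the assumption that the interval endpoints are the $m$-th and $(B+1-m)$-th ordered bootstrap estimates with $m \approx (\alpha/2)B$; the data, seed, and function name are hypothetical.

```python
import random
import statistics

def bootstrap_percentile_ci(sample, estimator, n_boot, alpha, rng):
    """Percentile bootstrap confidence interval for estimator(sample)."""
    n = len(sample)
    # Resample n points with replacement n_boot times and sort the estimates.
    estimates = sorted(
        estimator(rng.choices(sample, k=n)) for _ in range(n_boot)
    )
    m = max(1, int(alpha / 2 * n_boot))  # 1-based index of the lower endpoint
    return estimates[m - 1], estimates[n_boot - m]

rng = random.Random(123)
sample = [rng.gauss(10.0, 2.0) for _ in range(50)]  # hypothetical data
lower, upper = bootstrap_percentile_ci(sample, statistics.fmean, 2000, 0.05, rng)
print(lower, statistics.fmean(sample), upper)
```

Any statistic can be passed as `estimator` (for example `statistics.median`), which is the main practical appeal of the bootstrap.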

Maximum Likelihood Methods

Maximum Likelihood Estimation

Likelihood Function

Definition

Log-Likelihood Function

Maximum Likelihood Estimation

Definition

MLE is the method of estimating the parameters of an assumed Distribution

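Let $X_{1}, \dots, X_{n}$ be a Random Sample with PDF $f(x|\theta)$, where $\theta \in \Omega$, then the MLE of $\theta$ is estimated as $\hat{\theta} = \arg\max_{\theta \in \Omega} L(\theta)$, where $L(\theta) = \prod_{i=1}^{n} f(x_{i}|\theta)$ is the Likelihood Function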
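As a worked illustration, consider an exponential model with rate $\lambda$, whose log-likelihood is $n\ln\lambda - \lambda\sum_{i} x_{i}$ and whose maximizer is $\hat{\lambda} = n/\sum_{i} x_{i}$. The sketch below compares this closed form with a brute-force grid search; the data values and grid are hypothetical.

```python
import math

data = [0.8, 1.3, 0.2, 2.1, 0.6]  # hypothetical observations, sum is 5.0

def log_likelihood(rate, xs):
    """Exponential log-likelihood: n * ln(rate) - rate * sum(xs)."""
    return len(xs) * math.log(rate) - rate * sum(xs)

closed_form = len(data) / sum(data)  # analytic MLE: n / sum(x)

# Brute-force check on a grid of candidate rates 0.001, 0.002, ..., 5.000.
grid = [i / 1000 for i in range(1, 5001)]
numeric = max(grid, key=lambda r: log_likelihood(r, data))

mle_of_mean = 1 / closed_form  # MLE of the mean 1/rate is 1/(MLE of the rate)
print(closed_form, numeric, mle_of_mean)
```

The last line uses the fact that a maximizer is preserved under reparameterization, so the sample mean is the MLE of the exponential mean.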

Regularity Conditions

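- R0: The pdfs are distinct, i.e. $\theta \neq \theta' \Rightarrow f(x|\theta) \neq f(x|\theta')$

- R1: The pdfs have the same support for all $\theta$

- R2: The true value $\theta_{0}$ is an interior point in $\Omega$

- R3: The pdf $f(x|\theta)$ is twice differentiable with respect to $\theta$

- R4: The integral $\int f(x|\theta)dx$ can be differentiated twice under the integral sign with respect to $\theta$

- R5: The pdf $f(x|\theta)$ is three times differentiable with respect to $\theta$, $\left| \frac{\partial^{3}}{\partial \theta^{3}} \ln f(x|\theta) \right| \leq M(x)$ with $E_{\theta_{0}}[M(X)] < \infty$ in a neighborhood of $\theta_{0}$, and $\theta_{0}$ is an interior point of $\Omega$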

Properties

Functional Invariance

If $\hat{\theta}$ is the MLE of $\theta$, then $g(\hat{\theta})$ is the MLE of $g(\theta)$

Consistency

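Under the R0 ~ R2 Regularity Conditions, let $\theta_{0}$ be the true parameter and $f(x|\theta)$ be differentiable with respect to $\theta$, then the likelihood equation $\frac{\partial}{\partial \theta} \ln L(\theta) = 0$ has a solution $\hat{\theta}_{n}$ such that $\hat{\theta}_{n} \xrightarrow{P} \theta_{0}$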

Asymptotic Normality

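Under the R0 ~ R5 Regularity Conditions, let $X_{1}, \dots, X_{n}$ be a Random Sample with PDF $f(x|\theta_{0})$, where $\theta_{0} \in \Omega$, $\hat{\theta}_{n}$ be a consistent Sequence of solutions of the MLE equation $\frac{\partial}{\partial \theta} \ln L(\theta) = 0$, and $0 < I(\theta_{0}) < \infty$, then $\sqrt{n}(\hat{\theta}_{n} - \theta_{0}) \xrightarrow{D} N\left( 0, \frac{1}{I(\theta_{0})} \right)$ where $I(\theta_{0})$ is the Fisher Information.

By the asymptotic normality, the MLE is asymptotically efficient under the R0 ~ R5 Regularity Conditions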

Asymptotic Confidence Interval

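By the asymptotic normality of the MLE, $\sqrt{nI(\hat{\theta}_{n})}(\hat{\theta}_{n} - \theta_{0})$ is approximately $N(0, 1)$. Thus, an approximate $(1-\alpha)100\%$ confidence interval for $\theta_{0}$ is $\hat{\theta}_{n} \pm z_{\alpha/2}\frac{1}{\sqrt{nI(\hat{\theta}_{n})}}$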

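Delta method for the MLE

Under the R0 ~ R5 Regularity Conditions, let $g$ be a continuous function that is differentiable at $\theta_{0}$ and $g'(\theta_{0}) \neq 0$, then $\sqrt{n}(g(\hat{\theta}_{n}) - g(\theta_{0})) \xrightarrow{D} N\left( 0, \frac{g'(\theta_{0})^{2}}{I(\theta_{0})} \right)$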

Facts

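Under the R0 and R1 regularity conditions, let $\theta_{0}$ be the true parameter, then $\lim_{n \to \infty} P_{\theta_{0}}[L(\theta_{0}) > L(\theta)] = 1$ for all $\theta \neq \theta_{0}$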

Rao-Cramer Lower Bound and Efficiency

Bartlett Identities

Definition

First Bartlett Identity

where is a Likelihood Function and is a Score Function

Second Bartlett Identity

where is a Likelihood Function

Score Function

Definition

The gradient of the log-likelihood function with respect to the parameter vector. The score indicates the steepness of the log-likelihood function

Facts

The score vanishes at a local Extremum of the log-likelihood function

Fisher Information

Definition

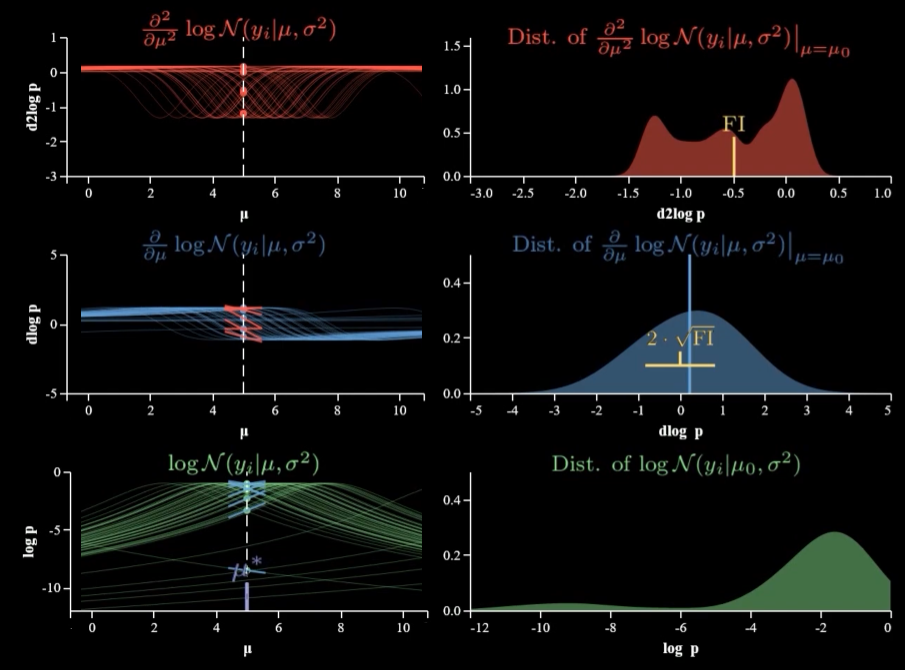

Fisher Information

$$\begin{aligned}
I(\theta) &:= E\left[ \left( \frac{\partial \ln f(X|\theta)}{\partial \theta} \right)^{2} \right] = \int_{\mathbb{R}} \left( \frac{\partial \ln f(x|\theta)}{\partial \theta} \right)^{2} f(x|\theta)dx\\
&= -E\left[ \frac{\partial^{2} \ln f(X|\theta)}{\partial \theta^{2}} \right] = -\int_{\mathbb{R}} \frac{\partial^{2} \ln f(x|\theta)}{\partial \theta^{2}} f(x|\theta)dx
\end{aligned}$$

where the second line holds by the second Bartlett identity. Because the score has mean zero (first Bartlett identity), the information is also the variance of the score

$$I(\theta) = \operatorname{Var}\left( \frac{\partial \ln f(X|\theta)}{\partial \theta} \right) = \operatorname{Var}(s(\theta|x))$$

where $s(\theta|x)$ is the Score Function

Fisher Information Matrix

![[Pasted image 20231224171415.png|800]]

Let $\mathbf{X}$ be a Random Vector with PDF $f(x|\boldsymbol{\theta})$, where $\boldsymbol{\theta} \in \Omega \subset \mathbb{R}^{p}$, then the Fisher information matrix for $\boldsymbol{\theta}$ is a $p \times p$ matrix defined as

$$I(\boldsymbol{\theta}) := \operatorname{Cov}\left( \cfrac{\partial}{\partial \boldsymbol{\theta}} \ln f(\mathbf{X}|\boldsymbol{\theta}) \right) = E\left[ \left( \cfrac{\partial}{\partial \boldsymbol{\theta}} \ln f(\mathbf{X}|\boldsymbol{\theta}) \right) \left( \cfrac{\partial}{\partial \boldsymbol{\theta}} \ln f(\mathbf{X}|\boldsymbol{\theta}) \right)^\intercal \right] = -E\left[ \cfrac{\partial^{2}}{\partial \boldsymbol{\theta} \partial \boldsymbol{\theta}^\intercal} \ln f(\mathbf{X}|\boldsymbol{\theta}) \right]$$

and the $jk$-th element of $I(\boldsymbol{\theta})$ is $I_{jk} = - E\left[ \cfrac{\partial^{2}}{\partial \theta_{j} \partial\theta_{k}} \ln f(\mathbf{X}|\boldsymbol{\theta}) \right]$

Properties

Chain Rule

The information in a Random Sample $X_{1}, X_{2}, \dots, X_{n}$ of length $n$ is $n$ times the information in a single observation $X_{i}$

$$I_\mathbf{X}(\theta) = n I_{X_{1}}(\theta)$$

Facts

In a location model, the information does not depend on the location parameter.

$$I(\theta) = \int_{-\infty}^{\infty}\left( \frac{f'(z)}{f(z)} \right)^{2} f(z)dz$$
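The two expressions for the information can be checked numerically. The following is a minimal sketch, assuming a single Bernoulli($p$) observation (a model chosen here only for illustration); the function name `bernoulli_information` is hypothetical. Both expectations are computed exactly by summing over $x \in \{0, 1\}$ and should agree with $1 / (p(1-p))$.

```python
def bernoulli_information(p):
    """Return (E[score^2], -E[second derivative]) for one Bernoulli(p) draw."""
    pmf = {0: 1.0 - p, 1: p}

    def score(x):
        # d/dp of x*ln(p) + (1-x)*ln(1-p)
        return x / p - (1 - x) / (1 - p)

    def second_derivative(x):
        # d^2/dp^2 of the same log-density
        return -x / p**2 - (1 - x) / (1 - p) ** 2

    info_from_score = sum(pmf[x] * score(x) ** 2 for x in (0, 1))
    info_from_hessian = -sum(pmf[x] * second_derivative(x) for x in (0, 1))
    return info_from_score, info_from_hessian


info_score, info_hessian = bernoulli_information(0.3)
print(info_score, info_hessian, 1.0 / (0.3 * 0.7))
```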

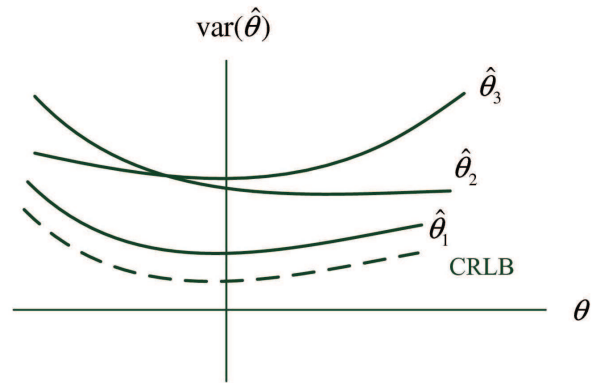

Rao-Cramer Lower Bound

Definition

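Let $X_{1}, \dots, X_{n}$ be a Random Sample with PDF $f(x|\theta)$ satisfying the R0 ~ R4 regularity conditions, and let $Y = u(X_{1}, \dots, X_{n})$ be a Statistic with $E(Y) = k(\theta)$, then

$$\operatorname{Var}(Y) \geq \frac{[k'(\theta)]^{2}}{nI(\theta)}$$

Facts

Let $Y$ be an unbiased estimator of $\theta$, then $k(\theta) = \theta$ and $k'(\theta) = 1$. Thus, by the Rao-Cramer Lower Bound,

$$\operatorname{Var}(Y) \geq \frac{1}{nI(\theta)}$$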

Efficiency

Definition

Efficient Estimator

where is a Fisher Information

An unbiased estimator is called an efficient estimator if its variance is the Rao-Cramer Lower Bound

Efficiency

Let be an unbiased estimator, then the efficiency of , is

Asymptotic Efficiency

Let be i.i.d. random variables with PDF , and be an estimator satisfying , then

- the asymptotic efficiency of is

- If , then is called asymptotically efficient

- Assume that another estimator such that Then, the asymptotic relative efficiency (ARE) of to is

Examples

In a Laplace distribution, the asymptotic relative efficiency of the sample median to the sample mean is $2$. So, the sample median is $2$ times as efficient as the sample mean as an estimator of the location parameter.

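In a Normal distribution, the asymptotic relative efficiency of the sample median to the sample mean is $2/\pi$. So, the sample mean is $\pi/2 \approx 1.57$ times as efficient as the sample median as an estimator of the location parameter.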

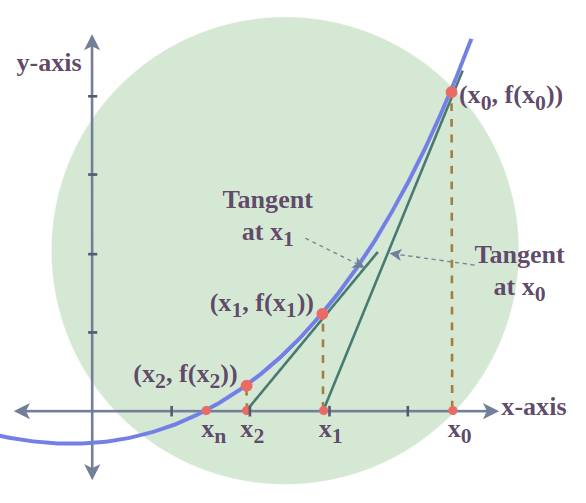

Newton's Method

Definition

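An iterative algorithm for finding the roots of a differentiable function $f$, i.e. the solutions of the equation $f(x) = 0$

Algorithm

Find the next point $x_{n+1}$ at which the first-order Taylor approximation around the current point $x_{n}$ is 0.

Taylor first approximation: $f(x) \approx f(x_{n}) + f'(x_{n})(x - x_{n})$

The point at which the approximation is 0: $x_{n+1} = x_{n} - \cfrac{f(x_{n})}{f'(x_{n})}$

Multivariate version: $\mathbf{x}_{n+1} = \mathbf{x}_{n} - J_{f}(\mathbf{x}_{n})^{-1} f(\mathbf{x}_{n})$, where $J_{f}$ is the Jacobian matrix of $f$

In convex optimization,

Find the minimum point (the point where the derivative is 0) of the Taylor quadratic approximation.

Taylor quadratic approximation: $f(x) \approx f(x_{n}) + f'(x_{n})(x - x_{n}) + \cfrac{1}{2} f''(x_{n})(x - x_{n})^{2}$

The derivative of the quadratic approximation: $f'(x_{n}) + f''(x_{n})(x - x_{n})$

The minimum point of the quadratic approximation (the point at which this derivative is 0): $x_{n+1} = x_{n} - \cfrac{f'(x_{n})}{f''(x_{n})}$

Multivariate version: $\mathbf{x}_{n+1} = \mathbf{x}_{n} - H_{f}(\mathbf{x}_{n})^{-1} \nabla f(\mathbf{x}_{n})$, where $H_{f}$ is the Hessian matrix of $f$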
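The scalar update can be sketched in a few lines. This is a minimal illustration, not a robust solver: the function name `newton_root`, the tolerance, and the two test functions are assumptions made for the example. Minimizing a convex function is done by applying the same root finder to its derivative.

```python
import math


def newton_root(f, df, x0, tol=1e-12, max_iter=50):
    """Find a root of f by Newton's method, given its derivative df."""
    x = x0
    for _ in range(max_iter):
        step = f(x) / df(x)  # assumes df(x) != 0 along the path
        x -= step
        if abs(step) < tol:
            break
    return x


# Root finding: solve x^2 - 2 = 0 starting from x0 = 1.
root = newton_root(lambda x: x * x - 2.0, lambda x: 2.0 * x, x0=1.0)

# Convex minimization: g(x) = exp(x) - 2x has g'(x) = exp(x) - 2 and
# g''(x) = exp(x), so its minimizer is the root of g', which is ln(2).
minimizer = newton_root(lambda x: math.exp(x) - 2.0, math.exp, x0=0.0)

print(root, minimizer)
```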

Examples

Solution of a linear system

Solve with an MSE loss

The cost function is and its gradient and hessian are ,

Then, solution is If is invertible, is a Least Square solution.
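The steps of this example can be written out as follows. This is a sketch under the assumption that the cost is scaled as $\frac{1}{2}\lVert A\mathbf{x} - \mathbf{b} \rVert^{2}$ (any positive constant factor gives the same Newton step) and that $A^{\intercal}A$ is invertible; $\mathbf{x}_{0}$ denotes an arbitrary starting point. Because the cost is exactly quadratic, a single Newton step lands on the least squares solution.

```latex
\begin{aligned}
f(\mathbf{x}) &= \tfrac{1}{2}\lVert A\mathbf{x} - \mathbf{b} \rVert^{2}, \\
\nabla f(\mathbf{x}) &= A^{\intercal}(A\mathbf{x} - \mathbf{b}), \qquad
\nabla^{2} f(\mathbf{x}) = A^{\intercal}A, \\
\mathbf{x}_{1} &= \mathbf{x}_{0} - (A^{\intercal}A)^{-1}A^{\intercal}(A\mathbf{x}_{0} - \mathbf{b})
= (A^{\intercal}A)^{-1}A^{\intercal}\mathbf{b}.
\end{aligned}
```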

Fisher's Scoring Method

Definition

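Fisher’s scoring method is a variation of the Newton–Raphson method for maximizing a log-likelihood $l(\theta)$ that uses the Fisher Information in place of the negative Hessian Matrix

$$\hat{\theta}^{(m+1)} = \hat{\theta}^{(m)} + I_{n}(\hat{\theta}^{(m)})^{-1} \frac{\partial l(\hat{\theta}^{(m)})}{\partial \theta}$$

where $I_{n}(\theta)$ is the Fisher Information of the whole sample.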
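To make the difference from Newton's method concrete, here is a minimal sketch assuming a Poisson($\lambda$) random sample, a model chosen only because both updates have closed forms; the function names are hypothetical. The score is $\sum x_i / \lambda - n$, the Hessian is $-\sum x_i / \lambda^{2}$, and the expected information is $n / \lambda$.

```python
def newton_step(lam, xs):
    """One Newton-Raphson update for the Poisson log-likelihood."""
    n, total = len(xs), sum(xs)
    score = total / lam - n
    hessian = -total / lam**2  # observed second derivative
    return lam - score / hessian


def fisher_scoring_step(lam, xs):
    """One Fisher scoring update: the Hessian is replaced by -n * I(lam)."""
    n, total = len(xs), sum(xs)
    score = total / lam - n
    information = n / lam  # expected information of the whole sample
    return lam + score / information


xs = [2, 0, 3, 1, 4]  # illustrative data, sample mean 2.0
print(newton_step(1.0, xs), fisher_scoring_step(1.0, xs))
```

In this particular model the Fisher scoring step simplifies to the sample mean, so it reaches the MLE in one update, while the Newton step from the same start does not.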

Maximum Likelihood Tests

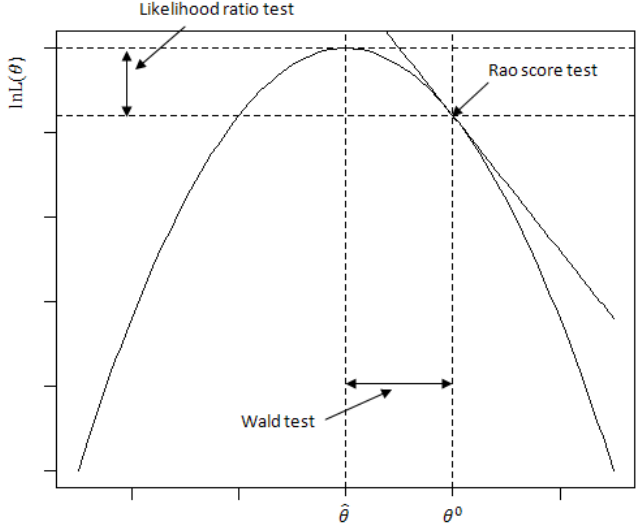

Likelihood Ratio Test

Definition

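Let $X_{1}, \dots, X_{n}$ be a Random Sample with PDF $f(x|\theta)$ where $\theta \in \Omega$, and consider the hypotheses $H_{0}: \theta \in \Omega_{0}$ versus $H_{1}: \theta \in \Omega_{1}$, where $\Omega_{0} \cup \Omega_{1} = \Omega$.

$$\Lambda = \frac{L(\hat{\theta}_{0})}{L(\hat{\theta})}$$

is called a likelihood ratio, where $\hat{\theta}_{0}, \hat{\theta}$ are the MLEs under $\Omega_{0}$ and $\Omega$ respectively. If $H_{0}$ is true, then $\Lambda$ will be close to $1$, while if $H_{0}$ is not true, then $\Lambda$ will be close to $0$.

Therefore, the LRT rejects $H_{0}$ when $\Lambda \leq c$, where $c$ is determined by the level alpha condition $P_{H_{0}}(\Lambda \leq c) = \alpha$.

By Wilks’ Theorem, the likelihood ratio test Statistic for the hypothesis $H_{0}: \theta = \theta_{0}$ versus $H_{1}: \theta \neq \theta_{0}$ is given by

$$\chi_{L}^{2} = -2 \ln \Lambda \overset{D}{\to} \chi^{2}(1) \quad \text{under } H_{0}$$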

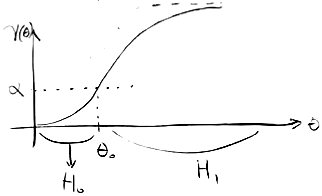

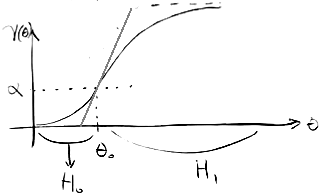

Visualization

The comparison of the Likelihood Ratio Test, Wald Test, and Score Test

Wilks' Theorem

Definition

Single Parameter

Under R0 ~ R5 regularity conditions, let , where is the likelihood ratio, and the is the MLE of

Multiparameter

Under R0 ~ R5 regularity conditions, let , where is the likelihood ratio, and the is the MLE of without constraints.

Wald Test

Definition

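The Wald test Statistic for the hypothesis $H_{0}: \theta = \theta_{0}$ versus $H_{1}: \theta \neq \theta_{0}$ is given by

$$\chi_{W}^{2} = \left\{ \sqrt{nI(\hat{\theta})} (\hat{\theta} - \theta_{0}) \right\}^{2} \overset{D}{\to} \chi^{2}(1) \quad \text{under } H_{0}$$

where $\hat{\theta}$ is the MLE of $\theta$.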

Score Test

Definition

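The Score test Statistic for the hypothesis $H_{0}: \theta = \theta_{0}$ versus $H_{1}: \theta \neq \theta_{0}$ is given by

$$\chi_{R}^{2} = \left( \frac{l'(\theta_{0})}{\sqrt{nI(\theta_{0})}} \right)^{2} \overset{D}{\to} \chi^{2}(1) \quad \text{under } H_{0}$$

where $l'(\theta_{0})$ is the derivative of the log-likelihood evaluated at $\theta_{0}$.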
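The likelihood ratio, Wald, and score statistics can be compared side by side on a simple model. The sketch below assumes $n$ Bernoulli($p$) trials and the two-sided hypothesis $H_0: p = p_0$; the function name and the data are illustrative, and the formulas are the standard single-parameter forms $-2\ln\Lambda$, $nI(\hat{p})(\hat{p} - p_0)^2$ and $l'(p_0)^2 / (nI(p_0))$ with $I(p) = 1/(p(1-p))$. It requires $0 < \hat{p} < 1$.

```python
import math


def bernoulli_tests(successes, n, p0):
    """Return (LRT, Wald, score) chi-square statistics for H0: p = p0."""
    p_hat = successes / n  # MLE of p

    def loglik(p):
        return successes * math.log(p) + (n - successes) * math.log(1.0 - p)

    lrt = -2.0 * (loglik(p0) - loglik(p_hat))
    wald = n * (p_hat - p0) ** 2 / (p_hat * (1.0 - p_hat))  # information at the MLE
    score = n * (p_hat - p0) ** 2 / (p0 * (1.0 - p0))       # information at p0
    return lrt, wald, score


lrt, wald, score = bernoulli_tests(successes=60, n=100, p0=0.5)
print(lrt, wald, score)
```

The three statistics differ in finite samples but share the same limiting $\chi^2(1)$ distribution under $H_0$, so each is compared with the same critical value.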

Multiparameter Case

Multiparametric Maximum Likelihood Estimation

Definition

Multiparameter MLE

Let be Random Sample with PDF , where , then the MLE of is estimated as

Properties

Multiparameter Asymptotic Normality

Under the R0 ~ R5 Regularity Conditions, let be Random Sample with PDF , where , then

- has solution such that

Delta Method for Multiparameter MLE

Multiparameter Rao-Cramer Lower Bound

Let be Random Sample with PDF , be an unbiased estimator of , then . Thus, by a Rao-Cramer Lower Bound, where is -th diagonal element of

If holds, then is called an efficient estimator of

In a location-scale model, the information of the parameters does not depend on the location parameter.

Multiparametric Likelihood Ratio Test

Definition

Let be Random Sample from PDF , where , be the number of parameters, be the number of constraints (restrictions) , where is differentiable function , where the dimension of is .

Now, consider a test statistic to test the hypothesis If is true, then will be close to , while if is not true, then the will be close to Therefore, LRT rejects when , where the is determined by the level alpha condition.

By the Wilks’ Theorem, the likelihood ratio test Statistic for the hypothesis is given by

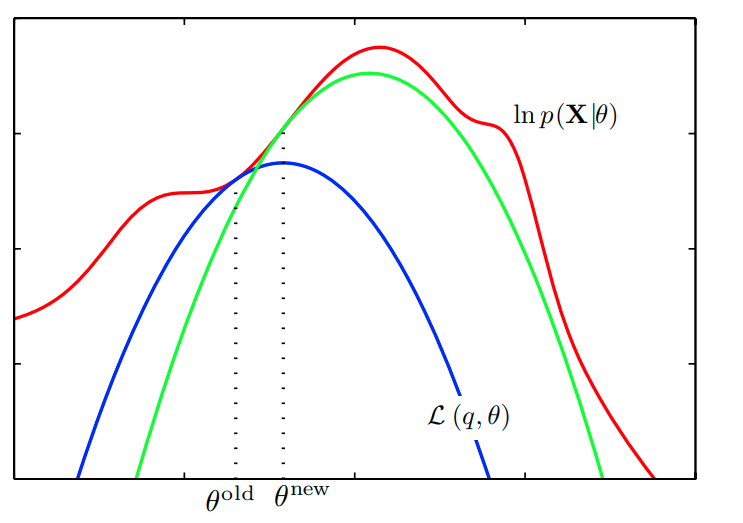

EM Algorithm

Expectation-Maximization Algorithm

Definition

Let be an observed data, be an unobserved (latent) variable, are independent, be a joint pdf of , be a joint pdf of , be a conditional pdf of given

By the definition of a conditional pdf, we have the identity

The goal of the EM algorithm is maximizing the observed likelihood using the complete likelihood .

Using the definition of the conditional pdf, we derive the following identity for an arbitrary but fixed $\boldsymbol{\theta}_{0} \in \Omega$

$$\begin{aligned}
\ln L(\boldsymbol{\theta}|\mathbf{X}) &= \int \ln[L(\boldsymbol{\theta}|\mathbf{X})] k(\mathbf{Z} | \mathbf{X}, \boldsymbol{\theta}_{0}) d \mathbf{Z}\\
&= \int \ln[h(\mathbf{X}, \mathbf{Z} | \boldsymbol{\theta})] k(\mathbf{Z} | \mathbf{X}, \boldsymbol{\theta}_{0}) d \mathbf{Z} - \int \ln[k(\mathbf{Z} | \mathbf{X}, \boldsymbol{\theta})]k(\mathbf{Z} | \mathbf{X}, \boldsymbol{\theta}_{0})d\mathbf{Z}\\
&= E_{\boldsymbol{\theta}_{0}}[\ln L^{c}(\boldsymbol{\theta}|\mathbf{X}, \mathbf{Z}) | \boldsymbol{\theta}_{0}, \mathbf{X}] - E_{\boldsymbol{\theta}_{0}}[\ln k(\mathbf{Z} | \mathbf{X}, \boldsymbol{\theta}) | \boldsymbol{\theta}_{0}, \mathbf{X}]
\end{aligned}$$

Let the first term of the RHS be a quasi-likelihood function

$$Q(\boldsymbol{\theta} | \boldsymbol{\theta}_{0}, \mathbf{X}) := E_{\boldsymbol{\theta}_{0}}[\ln L^{c}(\boldsymbol{\theta}|\mathbf{X}, \mathbf{Z}) | \boldsymbol{\theta}_{0}, \mathbf{X}]$$

The EM algorithm maximizes $Q(\boldsymbol{\theta} | \boldsymbol{\theta}_{0}, \mathbf{X})$ instead of maximizing $\ln L(\boldsymbol{\theta}|\mathbf{X})$ directly

Algorithm

1. Expectation Step: Compute $$Q(\boldsymbol{\theta} | \hat{\boldsymbol{\theta}}^{(m)}, \mathbf{X}) := E_{\hat{\boldsymbol{\theta}}^{(m)}}[\ln L^{c}(\boldsymbol{\theta}|\mathbf{X}, \mathbf{Z}) | \hat{\boldsymbol{\theta}}^{(m)}, \mathbf{X}]$$ where $m = 0, 1, \dots$, and the expectation is taken under the conditional pdf $k(\mathbf{Z} | \mathbf{X}, \hat{\boldsymbol{\theta}}^{(m)})$
2. Maximization Step: $$\hat{\boldsymbol{\theta}}^{(m+1)} = \underset{\boldsymbol{\theta}}{\operatorname{arg\,max}}\ Q(\boldsymbol{\theta} | \hat{\boldsymbol{\theta}}^{(m)}, \mathbf{X})$$

Properties

Convergence

The Sequence of estimates $\hat{\boldsymbol{\theta}}^{(m)}$ satisfies

$$L(\hat{\boldsymbol{\theta}}^{(m+1)}|\mathbf{X}) \geq L(\hat{\boldsymbol{\theta}}^{(m)}|\mathbf{X})$$

so the observed likelihood never decreases from one iteration to the next. Therefore the Sequence of EM estimates converges to an (at least local) optimum
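The E and M steps can be illustrated on a small latent-variable model. The sketch below assumes a two-component normal mixture with known unit variances, where the latent variable is the component label; the model, the simulated data, the starting values, and all function names are assumptions made for the example. The E-step computes the conditional probability of each label, and the M-step maximizes $Q$ in closed form.

```python
import math
import random


def normal_pdf(x, mu):
    """Density of N(mu, 1)."""
    return math.exp(-0.5 * (x - mu) ** 2) / math.sqrt(2.0 * math.pi)


def log_likelihood(xs, w, mu1, mu2):
    """Observed-data log-likelihood of the mixture w*N(mu1,1) + (1-w)*N(mu2,1)."""
    return sum(
        math.log(w * normal_pdf(x, mu1) + (1.0 - w) * normal_pdf(x, mu2)) for x in xs
    )


def em_step(xs, w, mu1, mu2):
    # E-step: conditional probability that each x came from component 1.
    r = [
        w * normal_pdf(x, mu1)
        / (w * normal_pdf(x, mu1) + (1.0 - w) * normal_pdf(x, mu2))
        for x in xs
    ]
    # M-step: closed-form maximizer of Q (weighted proportions and means).
    s = sum(r)
    w_new = s / len(xs)
    mu1_new = sum(ri * x for ri, x in zip(r, xs)) / s
    mu2_new = sum((1.0 - ri) * x for ri, x in zip(r, xs)) / (len(xs) - s)
    return w_new, mu1_new, mu2_new


random.seed(0)
xs = [random.gauss(-2.0, 1.0) for _ in range(100)]
xs += [random.gauss(3.0, 1.0) for _ in range(100)]

params = (0.5, -1.0, 1.0)  # initial (w, mu1, mu2)
history = [log_likelihood(xs, *params)]
for _ in range(30):
    params = em_step(xs, *params)
    history.append(log_likelihood(xs, *params))

print(params, history[0], history[-1])
```

Recording `history` makes the monotonicity property visible: each entry is at least as large as the previous one, up to floating-point rounding.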

Sufficiency

Decision Function

Definition

Let be Random Sample with PDF , where , be a Statistic for the parameter , and be a function of the observed value of the statistic , then The function is called a decision function or a decision rule.

Loss Function

Definition

Let be a parameter, be a Statistic for the parameter , and be a Decision Function

A loss function is a non-negative function defined as

It indicates the difference or discrepancy between and

Examples

- Absolute Error Loss

- Squared Error Loss

- Sum of Squared Errors Loss

- Cross-Entropy Loss

- Goal Post Error Loss

- Huber Loss

- Binary Loss

- Triplet Loss

- Pairwise Loss

Risk Function

Definition

Risk function is an expectation of Loss Function

Minimum Variance Unbiased Estimator

Definition

An estimator satisfying the following is the minimum variance unbiased estimator (MVUE) for where is an unbiased estimator

An Unbiased Estimator that has lower variance than any other unbiased estimator for the parameter

Facts

A minimum variance unbiased estimator does not always exist.

If the variance of some unbiased estimator is equal to the Rao-Cramer Lower Bound for all $\theta \in \Omega$, then it is a minimum variance unbiased estimator.

Minimax Estimator

Definition

An estimator satisfying the following is the minimax estimator of

A Sufficient Statistic for a Parameter

Sufficient Statistic

Definition

Let be a Random Sample with PDF , where , and be a Statistic with PDF . The is a sufficient statistic for if and only if

No other statistic that can be calculated from the same sample provides any additional information as to the value of the parameter.

Facts

Any one-to-one transformation of a sufficient statistic is also a sufficient statistic

The MLE is a function of a sufficient statistic

Let be a Random Sample with PDF , where , be a Statistic with PDF , and be a unique MLE of , then is a function of

Neyman's Factorization Theorem

Definition

Let be a Random Sample with PDF , where , then The statistic is a Sufficient Statistic for if and only if where are non-negative functions, and does not depend on

Rao-Blackwell Theorem

Definition

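Let $X_{1}, \dots, X_{n}$ be a Random Sample with PDF $f(x|\theta)$, where $\theta \in \Omega$, $Y_{1}$ be a Sufficient Statistic for $\theta$, and $Y_{2}$ be an Unbiased Estimator for $\theta$. Define $\varphi(Y_{1}) = E[Y_{2} | Y_{1}]$, then $\varphi(Y_{1})$ is an Unbiased Estimator of $\theta$, and its variance is no larger than that of $Y_{2}$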
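The variance reduction can be verified exactly on a small case. The sketch below assumes a Bernoulli($p$) sample of size $n$, takes the crude unbiased estimator $X_1$, and conditions on the sufficient statistic $\sum X_i$; by symmetry the conditional expectation is the sample mean. The function name is hypothetical, and the variances are computed by enumerating all $2^n$ outcomes rather than by simulation.

```python
from itertools import product


def rao_blackwell_variances(p, n):
    """Return (Var(X_1), Var(E[X_1 | sum X_i])) for a Bernoulli(p) sample."""
    var_crude = 0.0
    var_improved = 0.0
    for xs in product((0, 1), repeat=n):
        k = sum(xs)
        prob = p**k * (1.0 - p) ** (n - k)
        var_crude += prob * (xs[0] - p) ** 2     # X_1 has mean p
        var_improved += prob * (k / n - p) ** 2  # E[X_1 | sum = k] = k / n
    return var_crude, var_improved


var_crude, var_improved = rao_blackwell_variances(p=0.3, n=4)
print(var_crude, var_improved)
```

The results match $p(1-p)$ and $p(1-p)/n$, so conditioning on the sufficient statistic divides the variance by $n$ in this example.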

Completeness and Uniqueness

Complete Statistic

Definition

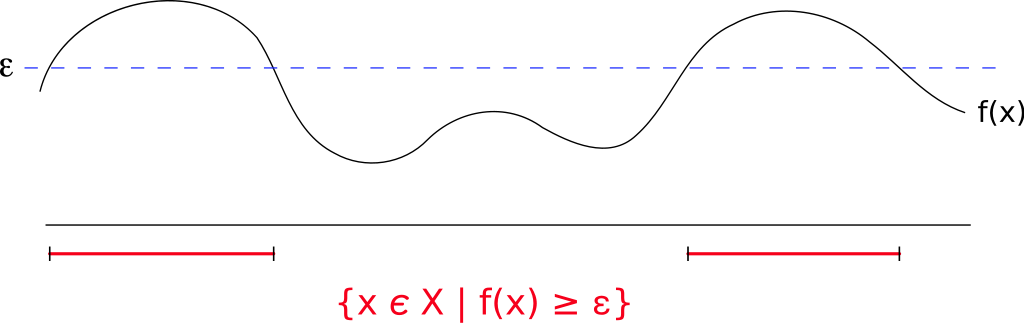

Let be a Random Variable with PDF , where . A complete statistic for satisfies the condition then, is called a complete family.

Uniformly Minimum Variance Unbiased Estimator

Definition

where is an unbiased estimator, and is the parameter.

An Unbiased Estimator that has lower variance than any other unbiased estimator for all possible values of the parameter

An MVUE for all possible values of the parameter

Lehmann-Scheffe Theorem

Definition

Let be a Random Sample with PDF , where , be a complete sufficient Statistic for , then If , then is the unique UMVUE of

Facts

Let be a complete sufficient Statistic for , be an Unbiased Estimator of

- , then is a unique UMVUE of

- By the Rao-Blackwell Theorem, is a unique UMVUE of

The Exponential Class of Distribution

Regular Exponential Class

Definition

A PDF of form is said to be a member of the regular exponential class if

- , support of , does not depend on

- is a non-trivial (non-constant) continuous function of

- If is a continuous random variable, then and is a continuous function of

- If is a discrete random variable, then is non-trivial function of

Properties

Joint PDF

Let be a Random Sample from a regular exponential class, then the joint pdf of is

Monotone Likelihood Ratio

Let be a Random Sample from a regular exponential class If is monotone function of , then likelihood ratio has Monotone Likelihood Ratio property in

Facts

Let be a Random Sample from a regular exponential class, and be a Statistic, then

- The pdf of is where is a function of only.

- is a complete sufficient Statistic

Function of a Parameter

Multiparametric Sufficiency

Definition

Joint Sufficient Statistic

Let be a Random Sample with PDF , where , , where be a Statistic, and be a pdf of . The is jointly sufficient for if and only if where does not depend on

if and only if where does not depend on

Completeness

Let be a family of pdfs of random variables If , then the family of pdfs is called complete family, and are complete statistics for

m-Dimensional Regular Exponential Class

Let be a Random Variable with PDF , where , and be a support of the . If can be written as then, it is called -dimensional exponential class. Further, it is called a regular case if the following are satisfied

- , support of , does not depend on

- contains m-dimensional open rectangle

- are functionally independent and continuous function of

- If is a continuous random variable, then and are continuous function

- If is a discrete random variable, then are non-trivial function of

Let be a Random Sample from a m-dimensional regular exponential class, then the joint pdf of is

, where , is a joint complete sufficient Statistic for

the joint pdf of is , where does not depend on

k-Dimensional Random Vector with p-Dimensional Parameters

Exponential Class

Let be a -dimensional random vector with pdf , where

Minimum Sufficiency and Ancillary Statistic

Minimal Sufficient Statistic

Definition

A Sufficient Statistic is called minimal sufficient statistic (MSS) if it has the minimum dimension among all sufficient statistics

Facts

A complete sufficient Statistic is a minimal sufficient statistic

Ancillary Statistic

Definition

Let $X_{1}, \dots, X_{n}$ be a Random Sample with PDF $f(x|\theta)$, where $\theta \in \Omega$. A statistic $Z$ is called an ancillary statistic to $\theta$ if its distribution does not depend on $\theta$

Location Parameter

Definition

Let , where , be Random Sample from a pdf If , then is called location parameter

Location Invariant Statistic

Definition

Let be a Random Sample from a location model, and be a statistic such that , then . So, the distribution of does not depend on . In this case, is Ancillary Statistic to , and it is called location-invariant statistic.

Scale Parameter

Definition

Let , where , be Random Sample from a pdf If , then is called scale parameter

Scale-Invariant Statistic

Definition

Let be a Random Sample from a scale model, and be a statistic such that , then . So, the distribution of does not depend on . In this case, is Ancillary Statistic to , and it is called scale-invariant statistic.

Location-Scale Family

Definition

Let , where , be Random Sample from a pdf If , then is called location parameter and is called scale parameter

Location- and Scale-Invariant Statistic

Definition

Let be a Random Sample from a location-scale model, and be a statistic such that , then . So, the distribution of does not depend on . In this case, is Ancillary Statistic to , and it is called location- and scale-invariant statistic.

Sufficiency, Completeness, and Independence

Basu's Theorem

Definition

Let $X_{1}, \dots, X_{n}$ be a Random Sample with PDF $f(x|\theta)$, where $\theta \in \Omega$, $Y_{1}$ be a complete sufficient Statistic for $\theta$, and $Z$ be an Ancillary Statistic to $\theta$, then $Y_{1}$ and $Z$ are independent.

Optimal Tests of Hypothesis

Most Powerful Test

Best Critical Region

Definition

Let be a subset of sample space. is a best critical region of size for testing the simple hypothesis test if

- (level alpha condition)

- (most powerful)

Most Powerful Test

Definition

A test with the Best Critical Region is called most powerful (MP) test

Neyman-Pearson Theorem

Definition

The Neyman-Pearson theorem states that the Most Powerful Test for choosing between two simple hypotheses is based on the likelihood ratio.

Let be random samples with PDF , where , then the Likelihood Function of is defined as Consider the simple null hypothesis against the simple alternative hypothesis and define the likelihood ratio test statistic.

Let , and be a subset of the sample space such that

- where

Then, is a Best Critical Region of size for the hypothesis test.

Uniformly Most Powerful Tests

Uniformly Most Powerful Critical Region

Definition

If is a Best Critical Region of size for every in , then is called a uniformly most powerful critical region

Uniformly Most Powerful Test

Definition

A test defined by Uniformly Most Powerful Critical Region is called a uniformly most powerful (UMP) test

Simple vs Composite

Consider is a simple hypothesis and is a composite hypothesis. Fix some satisfying and obtain a most powerful test using Neyman-Pearson Theorem and generalize it to all satisfying conditions

Composite vs Composite

Karlin-Rubin Theorem

Definition

Consider the hypothesis and , and assume that the likelihood ratio has MLR property in some Sufficient Statistic If the likelihood ratio is decreasing (increasing) in the statistic Then the Uniformly Most Powerful Test of level for the hypothesis is rejecting if , where is determined by

Monotone Likelihood Ratio

Definition

has a monotone likelihood ratio (MLR) property in If is a monotone function of

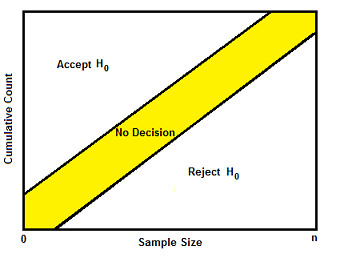

The Sequential Probability Ratio Test

Sequential Probability Ratio Test

Definition

Let be Random Sample with PDF , where , and be the Likelihood Function Consider a simple test We observe likelihood ratio sequentially i.e. , and reject if and only if , where , and do not reject if and only if , where

i.e. we continue to observe as long as , and stop otherwise.

Choice of and

Let be type 1 and 2 error, respectively, then Hence, Therefore, By the inequality, we choose
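The sequential procedure can be sketched for Bernoulli observations. The conventions below are assumptions made for the example, since the exact thresholds depend on how the ratio is oriented: the ratio is taken as $L(p_0)/L(p_1)$, $H_0$ is rejected once it falls to $k_0 = \alpha/(1-\beta)$ or below, and $H_0$ is not rejected once it reaches $k_1 = (1-\alpha)/\beta$ or above. The function name `sprt_bernoulli` is hypothetical.

```python
def sprt_bernoulli(xs, p0, p1, alpha, beta):
    """Run an SPRT of H0: p = p0 against H1: p = p1 on a 0/1 sequence.

    Returns (decision, number of observations used).
    """
    k0 = alpha / (1.0 - beta)   # lower threshold: reject H0 at or below it
    k1 = (1.0 - alpha) / beta   # upper threshold: keep H0 at or above it
    ratio = 1.0                 # running value of L(p0) / L(p1)
    for n, x in enumerate(xs, start=1):
        ratio *= (p0 / p1) if x == 1 else ((1.0 - p0) / (1.0 - p1))
        if ratio <= k0:
            return "reject H0", n
        if ratio >= k1:
            return "do not reject H0", n
    return "continue sampling", len(xs)


print(sprt_bernoulli([1] * 10, p0=0.2, p1=0.8, alpha=0.05, beta=0.05))
print(sprt_bernoulli([0] * 10, p0=0.2, p1=0.8, alpha=0.05, beta=0.05))
```

With these inputs every success multiplies the ratio by $0.25$ and every failure by $4$, so both runs stop after three observations.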

Minimax and Classification Procedures

Minimax Procedures

Definition

We want to test Let be a Loss Function such that and , be a rejection region, be an acceptance region, and be a Risk Function Then, The minimax procedure is to find such that is minimized

The minimax solution is the region , where satisfies

Inferences About Normal Linear Models

Quadratic Form

Definition

where is a Symmetric Matrix

A mapping where is a Module on Commutative Ring that has the following properties.

Matrix Expressions

Facts

Let be a Random Vector and be a symmetric matrix of constants. If and , then the expectation of the quadratic form is

Let be a Random Vector and be a symmetric matrix of constants. If and , , and , where , then the variance of the quadratic form is where is the column vector of diagonal elements of .

If and ‘s are independent, then If and ‘s are independent, then

Let , , where is a Symmetric Matrix and , then the MGF of is where ‘s are non-zero eigenvalue of

Let , where is Positive-Definite Matrix, then

Let , , where is a Symmetric Matrix and , then where

Let , , where are symmetric matrices, then are independent if and only if

Let , where are quadratic forms in Random Sample from If and is non-negative, then

- are independent

Let , , where , where , then

One-way ANOVA

One-way ANOVA

One-way ANOVA is used to analyze the significance of differences of means between groups. Let an -th response of the -th group be where

We want to test the null hypothesis i.e. there is no treatment effect. where is the total number of observations, represents the mean of the -th group and represents the overall mean, of the numerator indicates between-group variance (SSB) and of the denominator indicates within-group variance (SSW)

The Likelihood Ratio Test rejects if
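The test statistic can be computed directly from the between-group and within-group sums of squares. This is a minimal sketch assuming the usual form $F = \frac{SSB/(k-1)}{SSW/(n-k)}$ for $k$ groups and $n$ total observations; the function name and the toy data are illustrative.

```python
def one_way_anova_f(groups):
    """Return the one-way ANOVA F statistic for a list of groups."""
    n = sum(len(g) for g in groups)  # total number of observations
    k = len(groups)                  # number of groups
    grand_mean = sum(sum(g) for g in groups) / n
    means = [sum(g) / len(g) for g in groups]
    # Between-group sum of squares (SSB).
    ssb = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Within-group sum of squares (SSW).
    ssw = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    return (ssb / (k - 1)) / (ssw / (n - k))


f_stat = one_way_anova_f([[1, 2, 3], [2, 3, 4], [3, 4, 5]])
print(f_stat)
```

Here SSB and SSW are both 6 with 2 and 6 degrees of freedom, so the statistic is 3; under the null hypothesis it would be compared with an $F(k-1, n-k)$ critical value.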

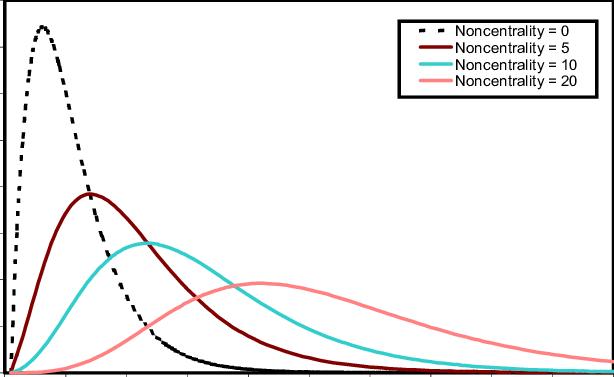

Noncentral Chi-squared and F distributions

Noncentral Chi-squared Distribution

Definition

Let be independent normal random variables , then where is the degrees of freedom, and is the non-centrality parameter.

Properties

MGF

Noncentral F-Distribution

Definition

Let be independent random variables following Noncentral Chi-squared Distribution and Chi-squared Distribution, respectively, then where are the degrees of freedom and is the non-centrality parameter.

Properties

Multiple Comparisons

Multiple Comparisons

Definition

Assume that is rejected, and we are interested in the confidence interval for some linear combination of parameters

Let ‘s be random samples from We want to compute the confidence interval of , where

Type 1 Error

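Consider a multiple testing problem with $m$ null hypotheses $H_{01}, \dots, H_{0m}$, and let $A_{i}$ be the event of rejecting $H_{0i}$ even though $H_{0i}$ is true (type 1 error). If a test satisfies $P\left( \bigcup_{i=1}^{m} A_{i} \right) \leq \alpha$ for level $\alpha$, then the test is said to be controlled at level $\alpha$. Under the independence of each test, when each test is run at level $\alpha$, the probability of at least one type 1 error in multiple testing is $1 - (1 - \alpha)^{m}$. Thus, the probability of a type 1 error approaches $1$ as $m$ increases.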
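The growth of the overall type 1 error can be tabulated with a one-line function. The sketch assumes $m$ independent tests, each run at the same level $\alpha$, so that the probability of at least one false rejection is $1 - (1 - \alpha)^m$; the function name is hypothetical.

```python
def familywise_error_rate(alpha, m):
    """Probability of at least one type 1 error among m independent level-alpha tests."""
    return 1.0 - (1.0 - alpha) ** m


for m in (1, 5, 10, 50):
    print(m, round(familywise_error_rate(0.05, m), 4))
```

Already at ten tests the chance of at least one false rejection is about 0.40, which is the motivation for simultaneous procedures.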

Fixed Constants Case

The ‘s are fixed constants. The confidence interval for each is where

Scheffe’s Simultaneous Confidence Interval

The ‘s are allowed to have any real numbers. The simultaneous confidence interval for all is where

The Analysis of Variance

Two-way ANOVA

Definition

Two-way ANOVA Without Replications

Let ‘s be Random Sample from . The can be decomposed as sum of means (global mean + -th level effect of factor + -th level effect of factor ). In this setup, we assume that

We want to test . where , and

The Likelihood Ratio Test rejects if

Under , the follows Noncentral F-Distribution where

Therefore, the power of the test is

Two-way ANOVA With Equal Replications

Let ‘s be Random Sample from . The can be decomposed as sum of means (global mean + -th level effect of factor + -th level effect of factor + interaction effect of -th level of factor and the -th level of factor ). In this setup, we assume that

We want to test . where , , and

The Likelihood Ratio Test rejects if

Under , the follows Noncentral F-Distribution where

Therefore, the power of the test is

If is not rejected, then we continue to test or

Two-way ANOVA with a Regression Model

where and are dummy variables representing categories of the two factors, is the number of categories for the first factor, and is the number of categories for the second factor

In this setting, a corner-point constraint is used for both factors.

The null hypotheses can be tested by Deviance

Deviance Test for Two-way ANOVA

For a two-way ANOVA with factors and , we have three null hypotheses to test:

- i.e. there is no treatment effect of factor

- i.e. there is no treatment effect of factor

- i.e. there is no interaction effect between factor and

They can be tested with the Deviance.

If is known, the test statistic for is defined as And reject the if

If is unknown, the test statistic for is defined as And reject the if

If is known, the test statistic for is defined as And reject the if

If is unknown, the test statistic for is defined as And reject the if

If is known, the test statistic for is defined as And reject the if

If is unknown, the test statistic for is defined as And reject the if

Nonparametric Statistics

Functional (Statistics)

Definition

is called a functional if is a function of CDF or PDF

Let be Random Sample from a cdf and be functional, then the empirical Distribution Function of at is , and is called an induced estimator of .

Location Model

Definition

Location Functional

Let be continuous Random Sample with CDF . If satisfies the following, then it is called location functional

Location Model

Let be a location functional, then is said to follow a location model with if

Facts

The mean functional is a location functional

Let $X$ be a Random Variable with CDF $F_{X}$ and PDF $f_{X}$ which is symmetric about $a$. If $T$ is a location functional, then $T(F_{X}) = a$

Sample Median and Sign Test

Sign Test

Definition

Let , where ‘s are i.i.d. with CDF and PDF with median . i.e. median of ‘s are .

We want to test , which is called sign test Consider a statistic , which is called sign statistic We reject if .

Distribution of Sign Statistic

under . Therefore, . Also, by CLT,
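Because the sign statistic is binomial with success probability $1/2$ under the null hypothesis, an exact p-value is easy to compute. The sketch below assumes the one-sided alternative that the median exceeds $\theta_0$, a sample with no observation exactly equal to $\theta_0$, and hypothetical names and data.

```python
from math import comb


def sign_test(xs, theta0):
    """Return (S, p-value) for H0: median = theta0 vs H1: median > theta0."""
    n = len(xs)
    s = sum(1 for x in xs if x > theta0)  # number of observations above theta0
    # Under H0, S ~ Binomial(n, 1/2); the p-value is P(S >= s).
    p_value = sum(comb(n, k) for k in range(s, n + 1)) / 2**n
    return s, p_value


s, p_value = sign_test([1, 2, 3, -1, 4, 5, 6, 7], theta0=0)
print(s, p_value)
```

With seven of eight observations above zero, the p-value is $(8 + 1)/256$.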

Consider a sequence of local alternatives The efficacy of the sign statistic is

Composite Sign Test

Consider a composite hypothesis Since the power function is non-decreasing, the sign test of size is

Facts

Consider a test and the test statistic , then Also, the power function of the test is a non-decreasing function of

Consider a sequence of hypothesis , where is called a local alternative, then where is a CDF of standard normal distribution, and

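Let $X_{1}, \dots, X_{n}$ be random variables from the location model $X_{i} = \theta + e_{i}$, where the $e_{i}$‘s are i.i.d. with CDF $F$, PDF $f$, and median 0, and $Q_{2}$ be the sample median of $X_{1}, \dots, X_{n}$, then $\sqrt{n}(Q_{2} - \theta) \overset{D}{\to} N(0, \tau_{S}^{2})$ where $\tau_{S} = \cfrac{1}{2f(0)}$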

Efficacy of Sign Test

Definition

Let be a test statistic and , then the efficacy of is defined as where can be interpreted as the rate of change of the mean of

Asymptotic Relative Efficiency

Definition

Let be two test statistics, then the asymptotic relative efficiency of to is the ratio between those efficacies

Signed-Rank Wilcoxon

Signed-Rank Wilcoxon Test

Definition

Let be Random Sample from cdf , symmetric pdf , and median . Consider a Sign Test

The signed-rank Wilcoxon test statistic for the test is defined as where is the rank of , among

We reject if , where is determined by the level

takes one of . So, we can rewrite the statistic as where is an observation such that

Let be the sum of the ranks of positive ‘s, then So.

can be written as where are called the Walsh averages

In general, if the median of is , then
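The equivalent forms of the statistic can be checked on a small sample. The sketch below assumes the null median is 0, no ties among the absolute values, and no zero observations; it computes the signed-rank sum $T$, the sum $T^{+}$ of the ranks of the positive observations, and the number of positive Walsh averages $(X_i + X_j)/2$ with $i \leq j$. The function name is hypothetical.

```python
def signed_rank_statistics(xs):
    """Return (T, T_plus, number of positive Walsh averages)."""
    n = len(xs)
    # Rank the observations by absolute value (1 = smallest).
    order = sorted(range(n), key=lambda i: abs(xs[i]))
    rank = [0] * n
    for r, i in enumerate(order, start=1):
        rank[i] = r
    t = sum(rank[i] if xs[i] > 0 else -rank[i] for i in range(n))
    t_plus = sum(rank[i] for i in range(n) if xs[i] > 0)
    walsh_positive = sum(
        1 for i in range(n) for j in range(i, n) if (xs[i] + xs[j]) / 2 > 0
    )
    return t, t_plus, walsh_positive


t, t_plus, walsh_positive = signed_rank_statistics([1.0, -0.5, 2.0, 3.0])
print(t, t_plus, walsh_positive)
```

In this example $T^{+}$ equals both $\frac{1}{2}T + \frac{n(n+1)}{4}$ and the count of positive Walsh averages.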

Consider a sequence of local alternatives The efficacy of the modified signed-rank Wilcoxon test statistic is

Facts

Assume that is symmetric, then under , are independent of

Let be Random Sample from cdf , symmetric pdf , and median , and , then under

- is distribution free

Consider a sequence of hypothesis , then where is a CDF of standard normal distribution, and

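The estimator $\hat{\theta}_{W}$, which can be obtained as a solution of the equation $T^{+}(\hat{\theta}_{W}) = \cfrac{n(n+1)}{4}$, is called the Hodges-Lehmann estimator. The Hodges-Lehmann estimator is the median of the Walsh averages, $\hat{\theta}_{W} = \operatorname{med}\left\{ \cfrac{X_{i} + X_{j}}{2} \right\}_{i \leq j}$.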

Let be random variables from location model, where ‘s are i.i.d. with CDF , symmetric PDF , and median 0, then where

Mann-Whitney-Wilcoxon Procedure

Mann-Whitney-Wilcoxon Procedure

Definition

Let be Random Sample from a distribution with cdf , and be Random Sample from a distribution with cdf Consider a test

The Mann-Whitney-Wilcoxon test statistic for the test is defined as where is the rank of in the combined (pooled) sample of size

We reject when , where is defined by the level

Therefore, the Mann-Whitney-Wilcoxon test statistic is decomposed as where

So, We reject when
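The rank-sum form and the counting form of the statistic can be compared numerically. The sketch below assumes $W$ is the sum of the ranks of the second sample in the pooled sample, $U$ is the number of pairs with $Y_j > X_i$, and there are no ties; the function name and the data are illustrative.

```python
def mww_statistics(xs, ys):
    """Return (W, U): rank sum of ys in the pooled sample and #{(i, j): y_j > x_i}."""
    pooled = sorted(xs + ys)
    rank = {v: r for r, v in enumerate(pooled, start=1)}  # valid only without ties
    w = sum(rank[y] for y in ys)
    u = sum(1 for y in ys for x in xs if y > x)
    return w, u


w, u = mww_statistics([1, 3, 5], [2, 4, 6])
n2 = 3
print(w, u, u + n2 * (n2 + 1) // 2)
```

The output illustrates the decomposition $W = U + \frac{n_2(n_2+1)}{2}$, where $n_2$ is the size of the second sample.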

Also, the power function of the test is defined as

Consider a sequence of local alternatives , and assume that , where The efficacy of the modified Mann-Whitney-Wilcoxon test statistic is

Facts

Let be Random Sample from a distribution with cdf , be Random Sample from a distribution with cdf , and then under

- is distribution free

Consider a sequence of hypothesis , then where is a CDF of standard normal distribution, and

Measures of Association

Kendall's Tau

Definition

Let be a Random Sample from a bivariate distribution with cdf We want to test are independent

Let be independent pairs with the same bivariate distribution. If , then we say these pairs are concordant If , then pairs are discordant

Kendall’s is defined as

is bounded by . If are independent, then

Consider another statistic

, so it is an unbiased estimator of
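A sample version of Kendall's tau can be computed by classifying every pair of observations. The sketch below assumes the statistic is the average of $\operatorname{sgn}\{(X_i - X_j)(Y_i - Y_j)\}$ over all $\binom{n}{2}$ pairs, that is, the proportion of concordant pairs minus the proportion of discordant pairs; the function name and the data are illustrative.

```python
from itertools import combinations


def kendall_tau(xs, ys):
    """Proportion of concordant pairs minus proportion of discordant pairs."""
    pairs = list(combinations(range(len(xs)), 2))
    total = 0
    for i, j in pairs:
        product = (xs[i] - xs[j]) * (ys[i] - ys[j])
        total += (product > 0) - (product < 0)  # +1 concordant, -1 discordant
    return total / len(pairs)


tau = kendall_tau([1, 2, 3, 4], [1, 3, 2, 4])
print(tau)
```

Of the six pairs, five are concordant and one is discordant, giving $4/6$.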

Facts

Let be a Random Sample from a bivariate distribution with cdf , and , then under are independent

- is distribution free with a symmetric pdf

Spearman's Rho

Definition

Let be a Random Sample from a bivariate distribution with cdf

Instead of a sample Pearson Correlation Coefficient, we define another statistic using the ranks of samples which is called Spearman’s

is bounded by . If are independent, then
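Spearman's rho is the Pearson correlation applied to ranks. The sketch below assumes no ties, so that the ranks in each sample are $1, \dots, n$ with mean $(n+1)/2$ and sum of squared deviations $n(n^2 - 1)/12$; the function names and the data are illustrative.

```python
def ranks(values):
    """Ranks 1..n of the values (assumes no ties)."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0] * len(values)
    for r, i in enumerate(order, start=1):
        result[i] = r
    return result


def spearman_rho(xs, ys):
    """Pearson correlation of the ranks of xs and ys."""
    n = len(xs)
    mean_rank = (n + 1) / 2
    rx, ry = ranks(xs), ranks(ys)
    numerator = sum((a - mean_rank) * (b - mean_rank) for a, b in zip(rx, ry))
    denominator = n * (n * n - 1) / 12  # sum of squared rank deviations
    return numerator / denominator


rho = spearman_rho([1, 2, 3, 4], [1, 3, 2, 4])
print(rho)
```

The same value follows from the shortcut $1 - \frac{6 \sum d_i^2}{n(n^2 - 1)}$ with rank differences $d_i$, here $1 - 12/60$.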

Facts

Let be a Random Sample from a bivariate distribution with cdf , and , then under are independent

- is distribution free with a symmetric pdf