Definition

Factor Analysis (FA) is a dimensionality reduction technique that aims to explain the covariance structure of the observed data using a smaller number of latent variables (features). FA models the observed data as a linear combination of the latent features plus Gaussian noise.

Consider an $N \times D$ data matrix $X$ whose rows $x_1, \dots, x_N \in \mathbb{R}^D$ are i.i.d. samples from an unknown distribution $p(x)$. FA assumes each sample $x_i$ is generated from lower-dimensional latent features $z_i \in \mathbb{R}^K$, where $K < D$.

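The FA model is defined as

$$x = Wz + \mu + \epsilon, \qquad z \sim \mathcal{N}(0, I_K), \qquad \epsilon \sim \mathcal{N}(0, \Psi),$$

where $x \in \mathbb{R}^D$ is an observed sample, $z \in \mathbb{R}^K$ ($K < D$) are its latent features, $z$ and $\epsilon$ are independent, and:

- $W \in \mathbb{R}^{D \times K}$ is the factor loading matrix that maps the latent space to the observed space.

- $\mu \in \mathbb{R}^{D}$ is the $D$-dimensional mean of the observed data.

- $\epsilon$ is the Gaussian noise term, with covariance $\Psi = \operatorname{diag}(\psi_1, \dots, \psi_D)$, a diagonal matrix.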
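The generative process can be sketched directly in NumPy. This is a minimal illustration, assuming $\Psi$ is stored as the vector `psi` of its diagonal entries; the function name `sample_fa` and the parameter values are made up for the example.

```python
import numpy as np

def sample_fa(W, mu, psi, n, rng):
    """Draw n samples x = W z + mu + eps from an FA model.

    W   : (D, K) factor loading matrix
    mu  : (D,) mean vector
    psi : (D,) diagonal entries of the noise covariance Psi
    """
    D, K = W.shape
    Z = rng.standard_normal((n, K))                   # z ~ N(0, I_K), one row per sample
    eps = rng.standard_normal((n, D)) * np.sqrt(psi)  # eps ~ N(0, diag(psi)), independent noise per feature
    return Z @ W.T + mu + eps                         # row i is x_i^T = (W z_i + mu + eps_i)^T

rng = np.random.default_rng(0)
D, K = 5, 2
W = rng.standard_normal((D, K))
mu = np.arange(D, dtype=float)
psi = np.linspace(0.1, 0.5, D)
X = sample_fa(W, mu, psi, n=100_000, rng=rng)
print(X.shape)  # (100000, 5)
```

Sampling like this is also a convenient way to produce synthetic data with known $W$, $\mu$ and $\Psi$ for checking an estimation procedure.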

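From the model, we can derive the distribution of the observed data. Since $\mathbb{E}[z] = 0$, $\mathbb{E}[\epsilon] = 0$, $\operatorname{Cov}(Wz) = W I_K W^\top$, and $z$ and $\epsilon$ are independent,

$$\mathbb{E}[x] = \mu, \qquad \operatorname{Cov}(x) = WW^\top + \Psi, \qquad \text{so} \quad x \sim \mathcal{N}(\mu,\, WW^\top + \Psi).$$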
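Because the marginal distribution of $x$ is Gaussian, the log-likelihood of a data set can be evaluated directly from $C = WW^\top + \Psi$. A minimal NumPy sketch using a Cholesky factorization of $C$; the function name `fa_log_likelihood`, the vector `psi` holding the diagonal of $\Psi$, and the example values are illustrative assumptions.

```python
import numpy as np

def fa_log_likelihood(X, W, mu, psi):
    """Total log-likelihood of the rows of X under x ~ N(mu, W W^T + diag(psi))."""
    N, D = X.shape
    C = W @ W.T + np.diag(psi)                 # model covariance W W^T + Psi
    L = np.linalg.cholesky(C)                  # C = L L^T (positive definite when all psi > 0)
    R = np.linalg.solve(L, (X - mu).T)         # column i is L^{-1} (x_i - mu)
    maha = np.sum(R**2)                        # sum_i (x_i - mu)^T C^{-1} (x_i - mu)
    logdet = 2.0 * np.sum(np.log(np.diag(L)))  # log det C
    return -0.5 * (N * D * np.log(2 * np.pi) + N * logdet + maha)

rng = np.random.default_rng(0)
W = rng.standard_normal((3, 1))
mu = np.zeros(3)
psi = np.array([0.5, 1.0, 2.0])
X = rng.standard_normal((10, 3))
print(fa_log_likelihood(X, W, mu, psi))
```

Working through the Cholesky factor avoids forming $C^{-1}$ explicitly and gives $\log\det C$ cheaply as twice the sum of the log-diagonal of $L$.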

The goal of FA is to estimate the factor loading matrix $W$, the mean $\mu$, and the noise covariance $\Psi$ from the observed data. This is done using either an SVD-based approach or the EM algorithm.
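As one concrete instance of the EM route, here is a minimal NumPy sketch of the standard EM updates for FA (see, e.g., Bishop, *Pattern Recognition and Machine Learning*, Ch. 12). It sets $\mu$ to the sample mean (its closed-form ML estimate), initializes $W$ randomly and $\Psi$ to the per-feature sample variances; the function names, the initialization, the iteration count and the small floor on $\psi_j$ are illustrative choices, not part of these notes. The SVD-based approach is not shown.

```python
import numpy as np

def gaussian_loglik(Xc, C):
    """Total log-likelihood of centered rows Xc under N(0, C)."""
    N, D = Xc.shape
    L = np.linalg.cholesky(C)
    R = np.linalg.solve(L, Xc.T)
    return -0.5 * (N * D * np.log(2 * np.pi) + 2 * N * np.sum(np.log(np.diag(L))) + np.sum(R**2))

def fa_em(X, K, n_iter=500, seed=0):
    """Fit an FA model by EM. Returns W, mu, psi (diagonal of Psi) and the log-likelihood trace."""
    N, D = X.shape
    mu = X.mean(axis=0)                   # closed-form ML estimate of mu
    Xc = X - mu
    S_diag = np.mean(Xc**2, axis=0)       # diagonal of the (1/N) sample covariance S
    rng = np.random.default_rng(seed)
    W = rng.standard_normal((D, K))       # random initial loadings
    psi = S_diag.copy()                   # initial noise variances
    trace = []
    for _ in range(n_iter):
        trace.append(gaussian_loglik(Xc, W @ W.T + np.diag(psi)))
        # E-step: p(z_n | x_n) = N(G W^T Psi^{-1} (x_n - mu), G), with G = (I + W^T Psi^{-1} W)^{-1}
        WtPinv = W.T / psi                          # W^T Psi^{-1}, shape (K, D)
        G = np.linalg.inv(np.eye(K) + WtPinv @ W)   # posterior covariance, shape (K, K)
        Ez = Xc @ WtPinv.T @ G                      # row n holds E[z_n]^T
        sum_Ezz = N * G + Ez.T @ Ez                 # sum_n E[z_n z_n^T]
        # M-step: W = [sum_n (x_n - mu) E[z_n]^T] [sum_n E[z_n z_n^T]]^{-1}
        XEz = Xc.T @ Ez                             # shape (D, K)
        W = XEz @ np.linalg.inv(sum_Ezz)
        # Psi = diag(S - W (1/N) sum_n E[z_n] (x_n - mu)^T)
        psi = S_diag - np.sum(W * XEz, axis=1) / N
        psi = np.maximum(psi, 1e-6)                 # safety floor on the variances (illustrative)
    trace.append(gaussian_loglik(Xc, W @ W.T + np.diag(psi)))
    return W, mu, psi, np.array(trace)

rng = np.random.default_rng(1)
D, K, N = 6, 2, 5000
W_true = rng.standard_normal((D, K))
psi_true = np.linspace(0.2, 0.5, D)
X = rng.standard_normal((N, K)) @ W_true.T + 3.0 + rng.standard_normal((N, D)) * np.sqrt(psi_true)
W_hat, mu_hat, psi_hat, trace = fa_em(X, K)
```

Each EM iteration cannot decrease the log-likelihood, so `trace` is a quick sanity check. The recovered `W_hat` is only determined up to an orthogonal rotation, so it should be compared with `W_true` through $WW^\top$ rather than entry by entry.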

Each observed variable $x_j$ has its own noise variance $\psi_j$, the $j$-th diagonal entry of $\Psi$ (since $\Psi$ is diagonal), representing the variability not explained by the common factors. This is a crucial difference from PPCA.
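Writing out the entries of $\operatorname{Cov}(x) = WW^\top + \Psi$, with $\Psi = \operatorname{diag}(\psi_1, \dots, \psi_D)$, makes this split explicit: covariances between different variables come only from the shared loadings, while each variance is a shared part plus a variable-specific part. The labels "communality" and "uniqueness" are standard FA terminology added here for reference.

$$
\operatorname{Var}(x_j) = \underbrace{\sum_{k=1}^{K} W_{jk}^2}_{\text{communality}} + \underbrace{\psi_j}_{\text{uniqueness}},
\qquad
\operatorname{Cov}(x_i, x_j) = \sum_{k=1}^{K} W_{ik} W_{jk} \quad (i \neq j).
$$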

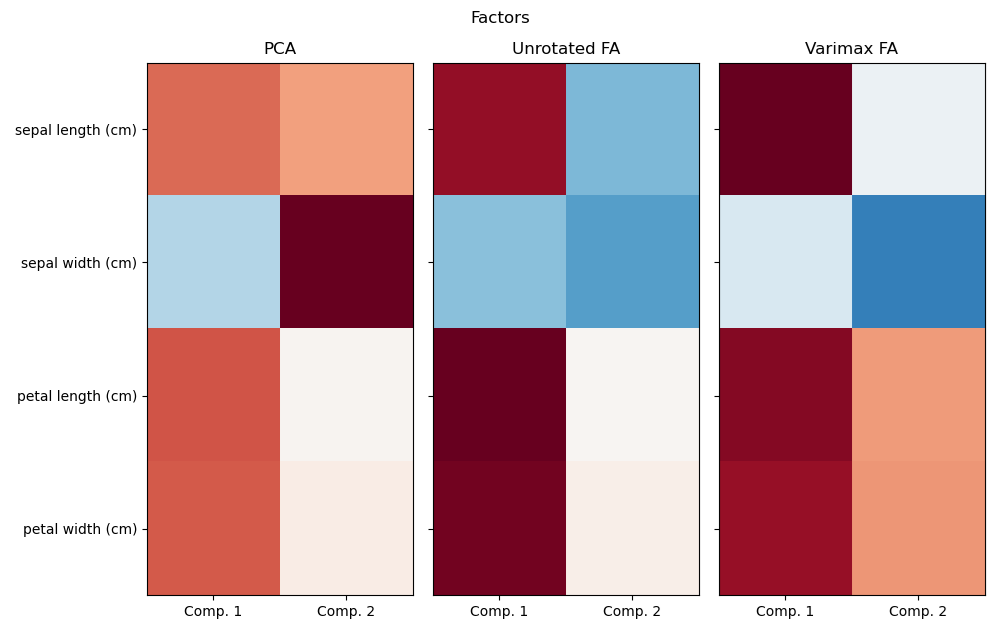

In FA, the log-likelihood as a function of the parameters is invariant to orthogonal transformations of $W$. That is, replacing $W$ with $WR$, where $R$ is any square orthogonal matrix ($RR^\top = I$), does not change its value: the likelihood depends on $W$ only through $WW^\top$, and $(WR)(WR)^\top = WRR^\top W^\top = WW^\top$. This rotational freedom can be used to achieve a more interpretable factor structure.
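A quick numerical check of this invariance: draw a random orthogonal $R$ from the QR decomposition of a Gaussian matrix and compare the implied covariances. Since the Gaussian likelihood depends on the parameters only through $\mu$ and $C = WW^\top + \Psi$, equal covariances mean equal likelihoods. The sizes and random values below are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(0)
D, K = 5, 2
W = rng.standard_normal((D, K))
psi = rng.uniform(0.1, 1.0, D)

R, _ = np.linalg.qr(rng.standard_normal((K, K)))  # random K x K orthogonal matrix
C = W @ W.T + np.diag(psi)                         # covariance implied by W
C_rot = (W @ R) @ (W @ R).T + np.diag(psi)         # covariance implied by the rotated loadings

print(np.allclose(C, C_rot))  # True
print(np.allclose(W, W @ R))  # False: the loadings themselves change
```

In practice, $R$ is chosen by an interpretability criterion such as varimax, which seeks loadings where each variable loads strongly on few factors.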

PPCA is a special case of Factor Analysis that restricts $\Psi$ to have the same value along its diagonal, i.e. $\Psi = \sigma^2 I$.
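Because PPCA ties all noise variances together, its maximum-likelihood solution has a closed form (Tipping and Bishop): with eigenvalues $\lambda_1 \ge \dots \ge \lambda_D$ of the sample covariance $S$ and $U_K$ the top-$K$ eigenvectors, $\sigma^2 = \frac{1}{D-K}\sum_{j=K+1}^{D}\lambda_j$ and $W = U_K(\Lambda_K - \sigma^2 I_K)^{1/2}R$ for any orthogonal $R$. General FA has no such closed form, which is why an iterative method is needed there. The sketch below takes $R = I$; the function name `ppca_ml` is hypothetical.

```python
import numpy as np

def ppca_ml(X, K):
    """Closed-form ML estimates for PPCA (FA with Psi = sigma^2 I), taking R = I."""
    N, D = X.shape
    mu = X.mean(axis=0)
    S = (X - mu).T @ (X - mu) / N                   # (1/N) sample covariance
    evals, evecs = np.linalg.eigh(S)                # eigenvalues in ascending order
    evals, evecs = evals[::-1], evecs[:, ::-1]      # reorder to descending
    sigma2 = evals[K:].mean()                       # average variance of the discarded directions
    W = evecs[:, :K] * np.sqrt(evals[:K] - sigma2)  # U_K (Lambda_K - sigma^2 I)^{1/2}
    return W, mu, sigma2

rng = np.random.default_rng(0)
X = rng.standard_normal((500, 4)) @ rng.standard_normal((4, 4))
W, mu, sigma2 = ppca_ml(X, K=2)
print(W.shape, sigma2)
```

The fitted covariance $WW^\top + \sigma^2 I$ reproduces the top $K$ eigenvalues of $S$ exactly and replaces the remaining ones with their average $\sigma^2$, whereas FA lets each $\psi_j$ differ.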