Definition

Optimizers are algorithms that adjust the parameters of a model to minimize the loss function. The optimizers described here aim to improve the convergence speed and stability of training compared to standard Stochastic Gradient Descent.

Examples

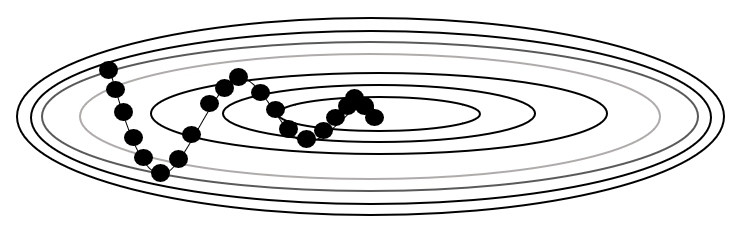

Momentum Optimizer

Definition

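The momentum optimizer remembers the update at each iteration and determines the next update as a linear combination of the gradient and the previous update:

$$
u_t = \gamma u_{t-1} + \eta \nabla_\theta L(\theta_{t-1}), \qquad \theta_t = \theta_{t-1} - u_t
$$

where $u_t$ is the update at iteration $t$, $\eta$ is the learning rate, and $\gamma$ is the momentum coefficient.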
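The momentum rule can be sketched in a few lines of plain Python. This is a minimal illustration, not a reference implementation: the function names `momentum_step` and `grad_quadratic`, the toy quadratic loss, and the values `lr=0.1` and `gamma=0.9` are all assumptions chosen for the example.

```python
def momentum_step(theta, update, grad, lr=0.1, gamma=0.9):
    """One momentum step; theta, update and grad are equal-length lists."""
    # New update: linear combination of the previous update and the gradient.
    new_update = [gamma * u + lr * g for u, g in zip(update, grad)]
    # Move the parameters against the remembered update.
    new_theta = [t - u for t, u in zip(theta, new_update)]
    return new_theta, new_update


def grad_quadratic(theta):
    # Gradient of the toy loss 0.5 * sum(theta_i ** 2) (assumed for illustration).
    return list(theta)


theta = [1.0, -2.0]
update = [0.0, 0.0]
for _ in range(200):
    theta, update = momentum_step(theta, update, grad_quadratic(theta))
```

After the loop, `theta` should sit close to the minimum at the origin. Setting `gamma=0.0` reduces the step to plain gradient descent, which makes the contribution of the remembered update easy to isolate.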


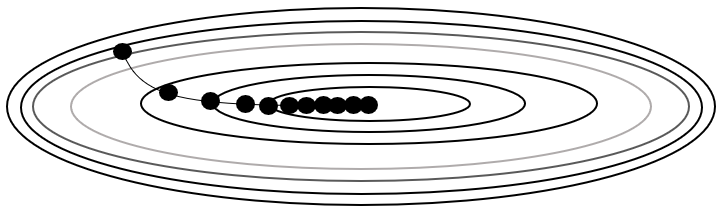

AdaGrad Optimizer

Definition

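Adaptive gradient descent (AdaGrad) is Gradient Descent with a parameter-wise learning rate: each parameter's step is scaled down by the magnitude of its own gradient history.

$$
G_t = G_{t-1} + g_t \odot g_t, \qquad \theta_t = \theta_{t-1} - \frac{\eta}{\sqrt{G_t} + \epsilon} \odot g_t
$$

where $g_t$ is the gradient at iteration $t$, $\eta$ is the learning rate, $G_t$ is the sum of squares of past gradients, and $\epsilon$ is a small constant to prevent division by zero. The square root and division are applied element-wise.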
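A small sketch may make the parameter-wise scaling concrete. The name `adagrad_step`, the values `lr=0.5` and `eps=1e-8`, and the two hand-picked gradient components are assumptions for illustration only.

```python
import math


def adagrad_step(theta, accum, grad, lr=0.5, eps=1e-8):
    """One AdaGrad step; accum holds the running sum of squared gradients."""
    # Accumulate squared gradients separately for each parameter.
    new_accum = [a + g * g for a, g in zip(accum, grad)]
    # Each parameter gets its own effective learning rate lr / (sqrt(G) + eps).
    new_theta = [
        t - lr * g / (math.sqrt(a) + eps)
        for t, g, a in zip(theta, grad, new_accum)
    ]
    return new_theta, new_accum


theta = [0.0, 0.0]
accum = [0.0, 0.0]
grad = [100.0, 0.01]  # two components with very different scales
new_theta, accum = adagrad_step(theta, accum, grad)
steps = [old - new for old, new in zip(theta, new_theta)]
```

Although the two gradient components differ by four orders of magnitude, both entries of `steps` come out close to `lr` on this first step, because each component is divided by its own accumulated magnitude. On later steps the accumulator only grows, so the effective learning rate keeps shrinking.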


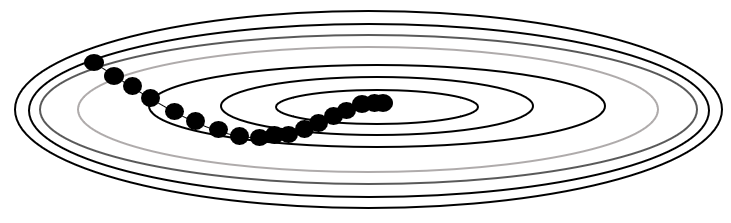

RMSProp Optimizer

Definition

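The RMSProp optimizer resolves the AdaGrad Optimizer's rapidly diminishing learning rates and relative magnitude differences by taking an exponential moving average over the history of squared gradients instead of their running sum:

$$
G_t = \beta G_{t-1} + (1 - \beta)\, g_t \odot g_t, \qquad \theta_t = \theta_{t-1} - \frac{\eta}{\sqrt{G_t} + \epsilon} \odot g_t
$$

where $g_t$ is the gradient at iteration $t$, $\eta$ is the learning rate, $\epsilon$ is a small constant to prevent division by zero, and $\beta$ is the decay rate.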
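The effect of the moving average can be sketched as follows. The name `rmsprop_step`, the values `lr=0.01`, `beta=0.9` and `eps=1e-8`, and the constant gradient of 1.0 are assumptions chosen to keep the example small.

```python
import math


def rmsprop_step(theta, avg_sq, grad, lr=0.01, beta=0.9, eps=1e-8):
    """One RMSProp step; avg_sq is the moving average of squared gradients."""
    # Exponential moving average: older squared gradients decay by beta each step.
    new_avg = [beta * a + (1 - beta) * g * g for a, g in zip(avg_sq, grad)]
    new_theta = [
        t - lr * g / (math.sqrt(a) + eps)
        for t, g, a in zip(theta, grad, new_avg)
    ]
    return new_theta, new_avg


theta, avg_sq = [0.0], [0.0]
for _ in range(100):
    # Constant gradient of 1.0, assumed purely for illustration.
    theta, avg_sq = rmsprop_step(theta, avg_sq, [1.0])
```

With a constant gradient of 1.0, `avg_sq` levels off near 1.0, so the step size settles near `lr`. A running sum of the same squared gradients would reach 100 after these 100 steps and keep growing, which is the diminishing-learning-rate behaviour the moving average avoids.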


Adam Optimizer

Definition

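Adaptive moment estimation (Adam) combines the ideas of the momentum and RMSProp optimizers. With gradient $g_t$, learning rate $\eta$, and a small constant $\epsilon$ to prevent division by zero, the update is:

$$
m_t = \beta_1 m_{t-1} + (1 - \beta_1)\, g_t
$$

$$
v_t = \beta_2 v_{t-1} + (1 - \beta_2)\, g_t \odot g_t
$$

$$
\hat{m}_t = \frac{m_t}{1 - \beta_1^t}, \qquad \hat{v}_t = \frac{v_t}{1 - \beta_2^t}
$$

$$
\theta_t = \theta_{t-1} - \eta \frac{\hat{m}_t}{\sqrt{\hat{v}_t} + \epsilon}
$$

Where:

- $m_t$ is the estimate of the first moment (mean) of the gradients

- $v_t$ is the estimate of the second moment (un-centered variance) of the gradients

- $\beta_1$ and $\beta_2$ are decay rates for the moment estimates

- $\hat{m}_t$ and $\hat{v}_t$ are bias-corrected estimates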
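The Adam update can be sketched in plain Python as below. The name `adam_step` and the single-parameter example are assumptions for illustration, and the default values (`lr=0.001`, `beta1=0.9`, `beta2=0.999`, `eps=1e-8`) are commonly used settings rather than values taken from this note.

```python
import math


def adam_step(theta, m, v, grad, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam step; t is the 1-based iteration count used for bias correction."""
    # First moment: moving average of gradients (the momentum part).
    new_m = [beta1 * mi + (1 - beta1) * g for mi, g in zip(m, grad)]
    # Second moment: moving average of squared gradients (the RMSProp part).
    new_v = [beta2 * vi + (1 - beta2) * g * g for vi, g in zip(v, grad)]
    # Bias correction compensates for initializing m and v at zero.
    m_hat = [mi / (1 - beta1 ** t) for mi in new_m]
    v_hat = [vi / (1 - beta2 ** t) for vi in new_v]
    new_theta = [
        th - lr * mh / (math.sqrt(vh) + eps)
        for th, mh, vh in zip(theta, m_hat, v_hat)
    ]
    return new_theta, new_m, new_v


theta, m, v = [1.0], [0.0], [0.0]
theta, m, v = adam_step(theta, m, v, grad=[4.0], t=1)
```

On the first step the bias-corrected estimates recover the gradient and its square, so the parameter moves by roughly `lr` whatever the gradient's magnitude. Without the correction, the zero-initialized `m` and `v` would bias the early estimates toward zero.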