Definition

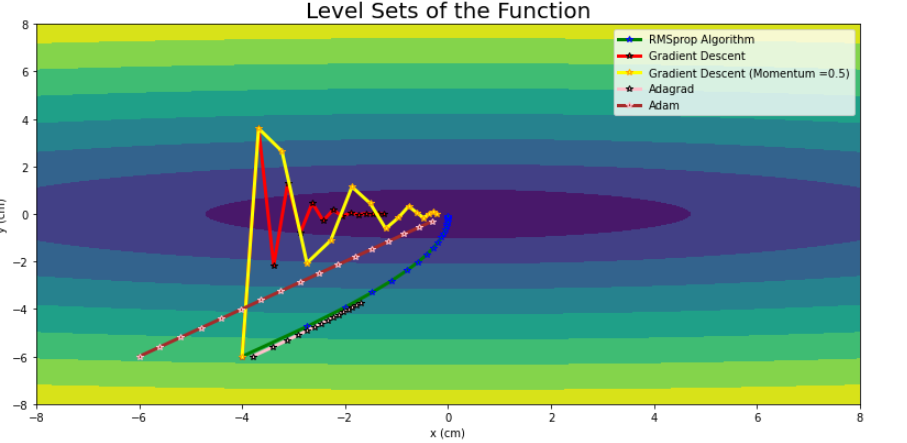

Stochastic gradient descent (SGD) is a stochastic approximation of gradient descent. It replaces the exact gradient, computed from the entire dataset, with an estimate computed from a randomly selected subset of the data.

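In its standard form, each update step is

$$\theta \leftarrow \theta - \eta \, \nabla_\theta L_B(\theta)$$

where $\theta$ denotes the model parameters, $L_B$ is the loss evaluated on the randomly selected subset $B$, and $\eta$ is the learning rate.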
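As a minimal sketch of the definition above, the following pure-Python example fits a straight line with minibatch SGD. The function name `sgd_linear_regression`, the mean-squared-error loss, the synthetic data, and all hyperparameter values are assumptions chosen for illustration; none of them come from the text.

```python
import random


def sgd_linear_regression(xs, ys, learning_rate=0.05, batch_size=4,
                          epochs=200, seed=0):
    """Fit y = w * x + b by minibatch SGD on mean squared error.

    Hypothetical helper for illustration; hyperparameters are assumptions.
    """
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    indices = list(range(len(xs)))
    for _ in range(epochs):
        # Reshuffle so each epoch visits the data in a new random order.
        rng.shuffle(indices)
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            # Estimate the gradient from this batch only,
            # instead of from the entire dataset.
            grad_w = 0.0
            grad_b = 0.0
            for i in batch:
                error = (w * xs[i] + b) - ys[i]
                grad_w += 2.0 * error * xs[i]
                grad_b += 2.0 * error
            grad_w /= len(batch)
            grad_b /= len(batch)
            # Step against the estimated gradient, scaled by the learning rate.
            w -= learning_rate * grad_w
            b -= learning_rate * grad_b
    return w, b


# Synthetic, noise-free data generated from y = 3x + 1 (an assumed example).
xs = [i / 10 for i in range(20)]
ys = [3.0 * x + 1.0 for x in xs]
w, b = sgd_linear_regression(xs, ys)
```

Each parameter update here uses only `batch_size` samples, so a single step is cheaper than a full-dataset gradient step, at the cost of a noisier gradient estimate. Setting `batch_size=len(xs)` would recover ordinary (full-batch) gradient descent.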