Definition

DQN (Deep Q-Network) targets problems with a large or continuous state space and a discrete action space.

Naive DQN

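Naive DQN treats $r + \gamma \max_{a'} Q(s', a'; \theta)$ as a target and minimizes the MSE loss between this target and $Q(s, a; \theta)$ by stochastic gradient descent (SGD).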
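The target and per-sample loss described above can be sketched as follows. This is only an illustration: the linear `q_values` function, the weight layout in `theta`, and the `done` flag for terminal states are assumptions made for the example, not part of the original notes.

```python
# Minimal sketch of the naive DQN target and squared-error loss.
# All names here (q_values, naive_td_target, squared_error) are hypothetical.

def q_values(theta, state):
    """Assumed linear Q-function: one weight vector per action."""
    return [sum(w * x for w, x in zip(weights, state)) for weights in theta]

def naive_td_target(theta, reward, next_state, done, gamma=0.99):
    """y = r + gamma * max_a' Q(s', a'; theta), or just r at a terminal state."""
    if done:
        return reward
    return reward + gamma * max(q_values(theta, next_state))

def squared_error(theta, state, action, target):
    """Per-sample loss (y - Q(s, a; theta))^2 with the target treated as a constant."""
    return (target - q_values(theta, state)[action]) ** 2

theta = [[1.0, 0.0], [0.0, 2.0]]  # two actions, two state features
y = naive_td_target(theta, reward=1.0, next_state=[1.0, 1.0], done=False, gamma=0.5)
loss = squared_error(theta, state=[1.0, 0.0], action=0, target=y)
```

Note that the same parameters `theta` produce both the prediction and the target, so every gradient step also shifts the target.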

Because of training instability and correlated samples, naive DQN performs very poorly, even worse than a linear model.

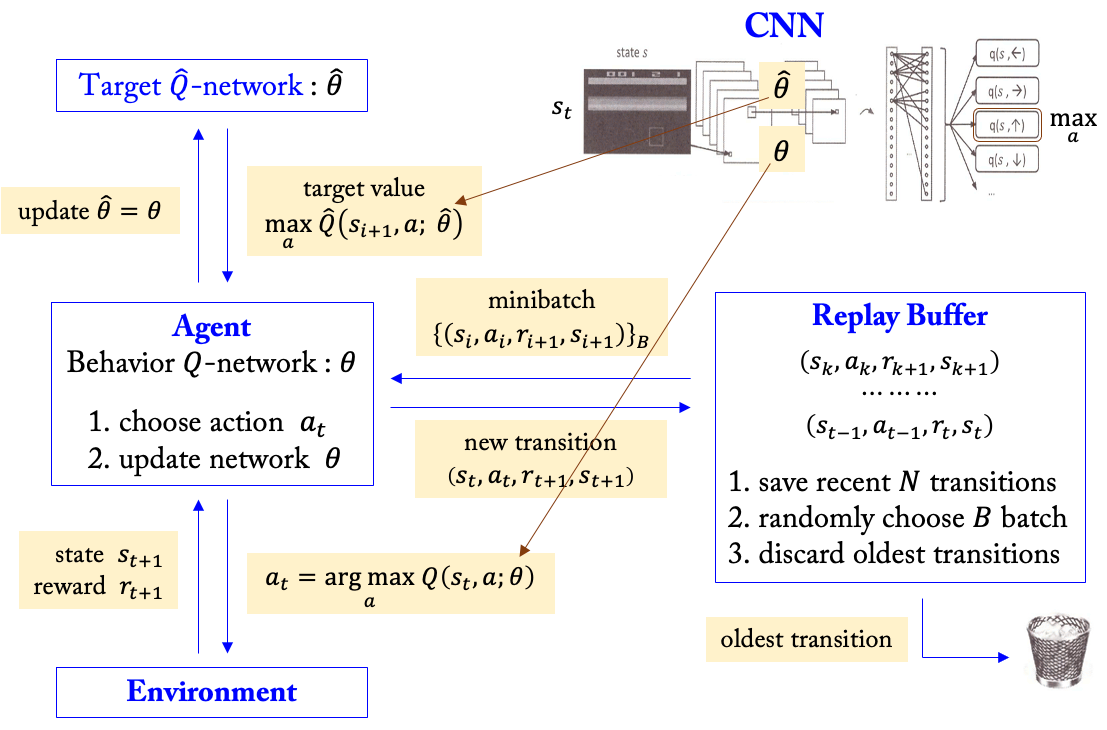

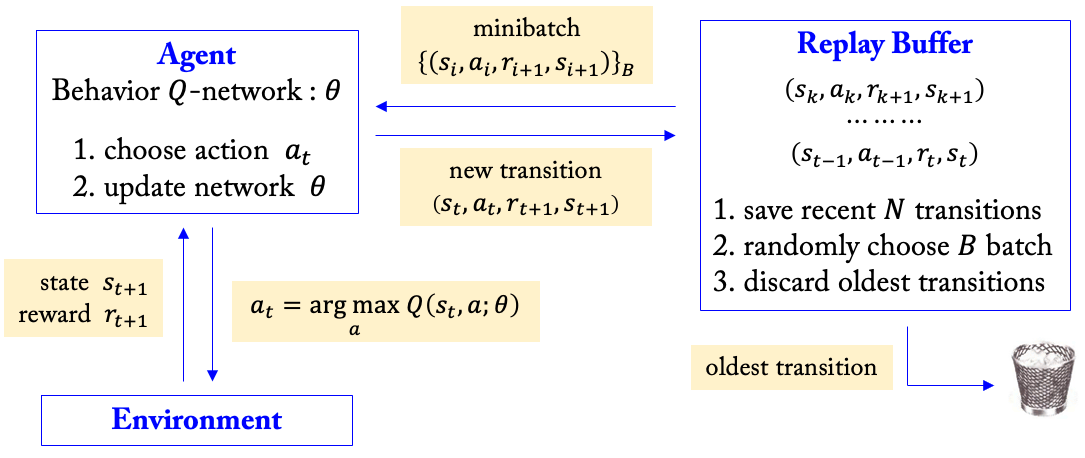

Experience Replay

Online RL agents incrementally update their parameters while observing a stream of experience. This structure causes strongly temporally correlated updates, which break the i.i.d. assumption, and makes the agent rapidly forget rare experiences that would be useful later on. Experience replay stores experiences in a replay buffer and, when learning, randomly samples temporally uncorrelated minibatches from it.
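A minimal sketch of such a buffer is shown below, assuming transitions are stored as `(state, action, reward, next_state, done)` tuples and that the oldest entries are discarded once the buffer is full. The class name `ReplayBuffer` and its methods are hypothetical.

```python
import random
from collections import deque

class ReplayBuffer:
    """Fixed-capacity store of transitions with uniform random sampling."""

    def __init__(self, capacity):
        # A deque with maxlen silently drops the oldest item when full.
        self.buffer = deque(maxlen=capacity)

    def push(self, state, action, reward, next_state, done):
        self.buffer.append((state, action, reward, next_state, done))

    def sample(self, batch_size):
        # Uniform sampling without replacement breaks temporal ordering.
        return random.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)

buffer = ReplayBuffer(capacity=3)
for t in range(5):
    buffer.push(t, 0, 1.0, t + 1, False)  # states 0 and 1 get evicted
batch = buffer.sample(2)
```

Uniform sampling is the simplest choice; it treats every stored transition as equally useful.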

Target Network

If the target function changes too frequently, the moving target makes training difficult (the non-stationary target problem). The target network technique updates the parameters $\theta$ of the behavior Q-network at every step, while updating the parameters $\theta^-$ of the target Q-network only sporadically (e.g. every $C$ steps). The loss becomes

$$L(\theta) = \frac{1}{N} \sum_{i \in I} \left( r_i + \gamma \max_{a'} Q(s'_i, a'; \theta^-) - Q(s_i, a_i; \theta) \right)^2,$$

where $N$ is the size of a minibatch, and $I$ is an $N$-sized index set drawn from the replay buffer.
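The sketch below illustrates the idea with two separate parameter sets: the target is computed from the target network's weights, the prediction from the behavior network's weights, and a hard copy synchronizes them every few steps. The linear `q_values` function and the names `minibatch_loss`, `maybe_sync`, and `sync_every` are assumptions made for this example.

```python
import copy

def q_values(params, state):
    """Assumed linear Q-function: one weight vector per action."""
    return [sum(w * x for w, x in zip(weights, state)) for weights in params]

def minibatch_loss(theta, theta_target, batch, gamma):
    """Mean squared TD error; targets use theta_target, predictions use theta."""
    total = 0.0
    for state, action, reward, next_state, done in batch:
        bootstrap = 0.0 if done else gamma * max(q_values(theta_target, next_state))
        y = reward + bootstrap
        total += (y - q_values(theta, state)[action]) ** 2
    return total / len(batch)

def maybe_sync(theta, theta_target, step, sync_every):
    """Hard update: copy behavior weights into the target every sync_every steps."""
    if step % sync_every == 0:
        return copy.deepcopy(theta)
    return theta_target

theta = [[1.0, 0.0], [0.0, 1.0]]         # behavior network weights
theta_target = [[0.0, 0.0], [0.0, 2.0]]  # stale target network weights
batch = [
    ([1.0, 0.0], 0, 1.0, [0.0, 1.0], False),
    ([0.0, 1.0], 1, 0.0, [1.0, 0.0], True),
]
loss = minibatch_loss(theta, theta_target, batch, gamma=0.5)
```

Between synchronizations the targets stay fixed, so each minibatch update is an ordinary regression step toward stationary values.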

Algorithm

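- Initialize the behavior network $Q(\cdot, \cdot; \theta)$ and the target network $Q(\cdot, \cdot; \theta^-)$ with random weights $\theta = \theta^-$, and the replay buffer $D$ to max size $M$.

- Repeat for each episode:

  - Initialize the sequence with the first state $s_1$.

  - Repeat for each step of an episode until terminal, $t = 1, \dots, T$:

    - With probability $\epsilon$, select a random action $a_t$; otherwise select $a_t = \arg\max_a Q(s_t, a; \theta)$.

    - Take the action $a_t$ and observe a reward $r_t$ and a next state $s_{t+1}$.

    - Store the transition $(s_t, a_t, r_t, s_{t+1})$ in the replay buffer $D$.

    - Sample a random minibatch of $N$ transitions $(s_i, a_i, r_i, s'_i)$ from $D$.

    - Set $y_i = r_i$ if $s'_i$ is terminal; otherwise $y_i = r_i + \gamma \max_{a'} Q(s'_i, a'; \theta^-)$.

    - Perform gradient descent on the loss $\frac{1}{N} \sum_i \left( y_i - Q(s_i, a_i; \theta) \right)^2$ with respect to $\theta$.

    - Update the target network parameter $\theta^- \leftarrow \theta$ every $C$ steps.

    - Update $s_t \leftarrow s_{t+1}$.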
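The steps above can be sketched end to end on a toy problem. Everything specific here is an assumption made for illustration: a hypothetical 4-state chain environment with the goal at the right end, a linear Q-function over one-hot features (effectively tabular) in place of a deep network, zero-initialized weights instead of random ones, and hand-picked hyperparameters.

```python
import random
from collections import deque

N_STATES, N_ACTIONS, GOAL = 4, 2, 3  # hypothetical chain: states 0..3, goal at 3

def features(state):
    """One-hot encoding, so the linear Q-function is effectively tabular."""
    return [1.0 if i == state else 0.0 for i in range(N_STATES)]

def q_values(theta, state):
    x = features(state)
    return [sum(w * xi for w, xi in zip(theta[a], x)) for a in range(N_ACTIONS)]

def env_step(state, action):
    """Action 1 moves right, action 0 moves left; reward 1 on reaching GOAL."""
    next_state = min(state + 1, GOAL) if action == 1 else max(state - 1, 0)
    done = next_state == GOAL
    return (1.0 if done else 0.0), next_state, done

def select_action(theta, state, epsilon, rng):
    """Epsilon-greedy with random tie-breaking among maximal actions."""
    if rng.random() < epsilon:
        return rng.randrange(N_ACTIONS)
    q = q_values(theta, state)
    return rng.choice([a for a in range(N_ACTIONS) if q[a] == max(q)])

def train(episodes=300, gamma=0.9, lr=0.5, epsilon=0.3, batch_size=8,
          capacity=500, sync_every=20, max_steps=50, seed=0):
    rng = random.Random(seed)
    theta = [[0.0] * N_STATES for _ in range(N_ACTIONS)]  # behavior network
    theta_target = [row[:] for row in theta]               # target network
    buffer = deque(maxlen=capacity)                        # replay buffer
    step = 0
    for _ in range(episodes):
        state = 0
        for _ in range(max_steps):
            action = select_action(theta, state, epsilon, rng)
            reward, next_state, done = env_step(state, action)
            buffer.append((state, action, reward, next_state, done))
            batch = rng.sample(list(buffer), min(batch_size, len(buffer)))
            # Accumulate the descent direction over the minibatch first,
            # so every sample sees the same theta.
            update = [[0.0] * N_STATES for _ in range(N_ACTIONS)]
            for s, a, r, s2, d in batch:
                y = r if d else r + gamma * max(q_values(theta_target, s2))
                td_error = y - q_values(theta, s)[a]
                for j, xj in enumerate(features(s)):
                    update[a][j] += td_error * xj / len(batch)
            for a in range(N_ACTIONS):
                for j in range(N_STATES):
                    theta[a][j] += lr * update[a][j]  # factor 2 folded into lr
            step += 1
            if step % sync_every == 0:
                theta_target = [row[:] for row in theta]  # hard target update
            state = next_state
            if done:
                break
    return theta

theta = train()
# Greedy action per non-terminal state (1 means "move right").
greedy = [max(range(N_ACTIONS), key=lambda a: q_values(theta, s)[a])
          for s in range(GOAL)]
print(greedy)
```

In a real DQN the linear function would be replaced by a neural network and the manual update by an optimizer step, but the control flow (act, store, sample, compute targets from the target network, update, periodically synchronize) stays the same.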