Markov Decision Process

Markov Property

Definition

Markov property refers to the memoryless property of a Stochastic Process.

Consider a Probability Space with a Filtration , and a Measurable Space . A Stochastic Process is said to possess the Markov property if Here, in the LHS represents all information up to time , whereas conditions only on the single value at time .

In the case where is a discrete set and , this can be reformulated as follows

Link to original

Markov Chain

Definition

A Markov chain is a Stochastic Process that satisfies Markov Property. It consists of a set of states and a Transition Probability Matrix

Summary

Conditions and Properties of Markov Chain (Finite States)

Example Finite IRR Aperiodicity ^[a unique Limiting Distribution] ^[a unique Stationary Distribution] ^[Ergodic Theorem] ^[Ergodicity] O X X X X X X Identity/Block matrix O X O X X X X Exchange matrix O O X X O O X Ergodic Markov Chain O O O O O O O flowchart LR mc[HMC] --> finite subgraph finiteness finite([finite]) end finite --> finite_mc finite_mc --> irreducible finite_mc --> reducible subgraph Irreducibility irreducible([irreducible]) reducible([reducible]) end irreducible --> irr_mc[Irreducible MC] reducible -->|divide|irr_mc irr_mc ---|holds|egt([Ergodic Theorem]) irr_mc --> aperiodic subgraph Aperiodicity aperiodic([aperiodic]) end aperiodic --> ergmc[Ergodic MC]Conditions and Properties of Markov Chain (Infinite States)

IRR Nature Aperiodicity ^[a unique Limiting Distribution] ^[a unique Stationary Distribution] ^[Ergodic Theorem] ^[Ergodicity] O Transient can’t define X X X X O Null recurrent no matter X X X X O Positive recurrent X X O O X O Positive recurrent O O O O O Link to originalflowchart LR mc[HMC] mc --> irreducible mc --> reducible subgraph Irreducibility irreducible([irreducible]) reducible([reducible]) end irr_mc[Irreducible MC] irreducible --> irr_mc reducible -->|divide|irr_mc irr_mc --> pr irr_mc --> nr irr_mc --> tr subgraph Recurrence pr([positive recurrent]) nr([null recurrent]) tr([transient]) end pr_mc[Recurrent MC] pr --> pr_mc pr_mc --> aperiodic egt([Ergodic Theorem]) pr_mc ---|holds|egt subgraph Aperiodicity aperiodic([aperiodic]) end aperiodic --> ergmc[Ergodic MC]

Transition

Definition

where is the distribution of states and is Transition Matrix

Link to original

Transition Probability

Definition

where is HMC that has countable state space

The conditional probability of the Transition from state to

Facts

Link to original

Transition Probability Matrix

Definition

A transition probability matrix is a matrix whose -th element is . where each is a Transition Probability of HMC having countable state space

Facts

Since the number of elements of state space can be infinity, strictly speaking is not a matrix. However, several operations of matrix are satisfied for the

Link to originalThe sum of each row of a transition matrix is one

Markov Decision Processes

Definition

Example of a simple MDP with three states (green circles) and two actions (orange circles), with two rewards (orange arrows)

Markov decision process (MDP) is a tuple . Where:

- : A set of states (State space)

- : A set of actions (Action space)

- : A Transition Probability from to given .

- : The immediate Reward received after transitioning from state to due to action .

Facts

Environmental model in MDP is the Transition Probability. If the Transition Probability is known, it is called a model-based MDP, otherwise called a model-free MDP.

Link to originalAny Markov decision process satisfies the followings:

- There exists an optimal policy

- All optimal policies achieve the optimal state-value function

- All optimal policies achieve the optimal action-value function

Reward

Definition

Reward is a scalar feedback indicating how well the agent is doing at step .

There are three reward types:

- : the reward in the state .

- : the reward by the action in the state .

- : the reward received after transitioning from state to due to action .

Expected Reward

Under known dynamics of all transitions , one can compute the followings:

State transition probability

Expected reward for (state, action) pair

Expected reward for (state, action, next state) triple

Facts

Link to originalReinforcement learning is based on the Reward Hypothesis.

Reward Hypothesis

Definition

All goals can be described by the maximization of the expected value of the cumulative sum of rewards.

Link to original

Return

Definition

where is a discount factor

Return is the total discounted Reward from time step .

Link to original

Policy

Definition

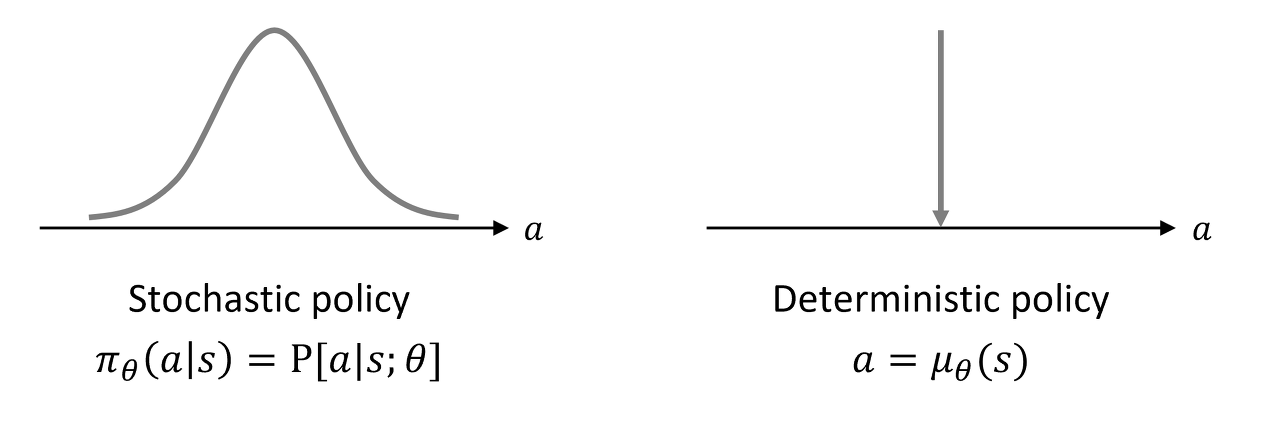

Deterministic Policy

A deterministic policy is a mapping from state space to action space .

Stochastic Policy

A stochastic policy is a probability distribution over actions for a given state .

Optimal Policy

A policy maximizing the cumulative sum of rewards is called an optimal policy.

Better Policy

Consider two policies and . is better policy than if

Facts

Link to original

- Under known MDP, there exists deterministic optimal policy .

- Under unknown MDP, stochastic policy is needed.

State-Value Function

Definition

where is a Action-Value Function.

A state-value function is the expected discounted Return starting in state under policy .

Optimal State-Value Function

An optimal state-value function is the maximum possible state-value function (a state-value function under optimal policy).

Link to original

Action-Value Function

Definition

An action-value function is the expected discounted Return of taking action starting in state under policy .

Optimal Action-Value Function

An optimal action-value function is the maximum possible action-value function (an action-value function under optimal policy).

Link to original

Advantage Function

Definition

An advantage function is the difference between the Action-Value Function and the State-Value Function under policy . It represents how much better it is to take action in state compared to the average action.

Link to original

Bellman Equation

Law of Total Probability

Definition

Suppose is a set of mutually and collectively exclusive events, then for any event :

Link to original

Law of Large Numbers

Definition

Weak Law of Large Numbers

Consider a Sequence of i.i.d. random variables with mean , variance . Let be the sample average. Then, the sample average converges in probability to the expected value.

Strong Law of Large Numbers

Consider a Sequence of i.i.d. random variables with mean , variance . Let be the sample average. Then, the sample average converges almost surely to the expected value.

Link to original

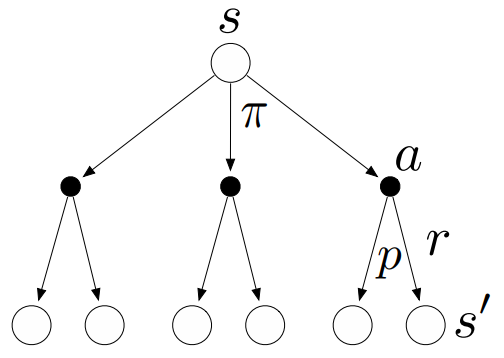

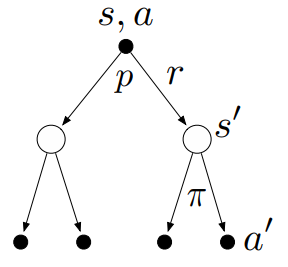

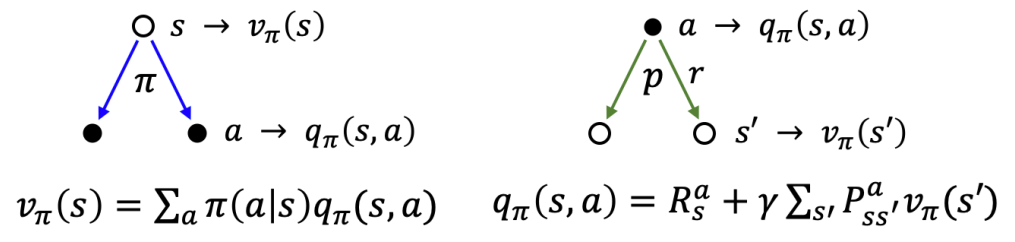

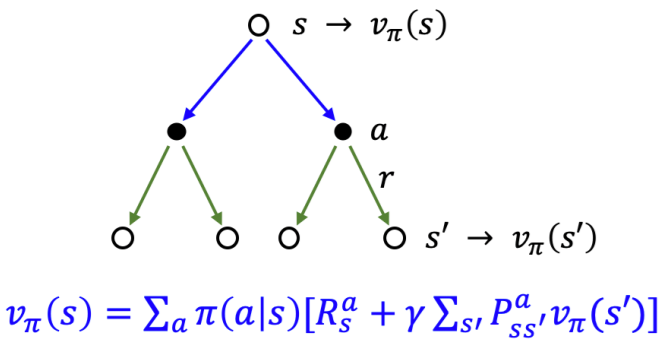

Bellman Expectation Equation

Definition

Bellman expectation equation is a recursive equation decomposing State-Value Function into immediate Reward and discounted future state-value .

Bellman Equation for State-Value Function

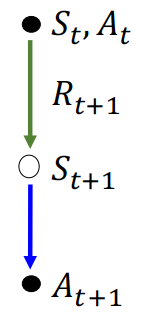

v_{\pi}(s) &= E_{\pi}[G_{t} | S_{t}=s]\\ &= P(A_{t}=a|S_{t}=s)\cdot E_{\pi}[G_{t} | S_{t}=s, A_{t}=a]\quad \left( = \sum\limits_{a} \pi(a|s) q_{\pi}(s, a) \right)\\ &= \sum\limits_{a} \pi(a|s) \cdot E_{\pi}[R_{t+1} + \gamma G_{t+1} | S_{t}=s, A_{t}=a]\\ &= \sum\limits_{a} \pi(a|s) \cdot \sum\limits_{s', r} E_{\pi}[R_{t+1} + \gamma G_{t+1} | S_{t}=s, A_{t}=a, S_{t+1}=s', R_{t+1}=r]\cdot P(S_{t+1}=s', R_{t+1}=r | S_{t}=s, A_{t}=a)\\ &= \sum\limits_{a} \pi(a|s) \cdot \sum\limits_{s', r} p(s', r | s, a)[r + \gamma E_{\pi}[G_{t+1} | S_{t+1}=s']]\quad \text{(by Markov property)}\\ &= \sum\limits_{a} \pi(a|s) \cdot \sum\limits_{s', r} p(s', r | s, a)[r + \gamma v_{\pi}(s')]\quad \left( =\sum\limits_{a} \pi(a|s)[R_{s}^{a} + \gamma \sum\limits_{s'}P_{ss'}^{a} v_{\pi}(s')] \right)\\ &= E_{\pi}[R_{t+1} + \gamma v_{\pi}(S_{t+1}) | S_{t}=s] \end{aligned}$$ ## Bellman Equation for [[Action-Value Function]] ![[Pasted image 20241207130544.png|450]] $$\begin{aligned} q_{\pi}(s, a) &= E_\pi[G_{t} | S_{t}=s, A_{t}=a]\\ &= \sum\limits_{s'r}p(s', r | s, a)[r + \gamma \underbrace{\sum\limits_{a'} \pi(a'|s') q_{\pi}(s', a')}_{v_{\pi}(s')}]\quad \left( = R_{s}^{a} + \gamma \sum\limits_{s'} P_{ss'}^{a} \underbrace{\sum\limits_{a'} \pi(a'|s') q_{\pi}(s', a')}_{v_{\pi}(s')} \right)\\ &= E_{\pi}[R_{t+1} + \gamma q_{\pi}(S_{t+1}, A_{t+1}) | S_{t}=s, A_{t}=a] \end{aligned}$$Link to original

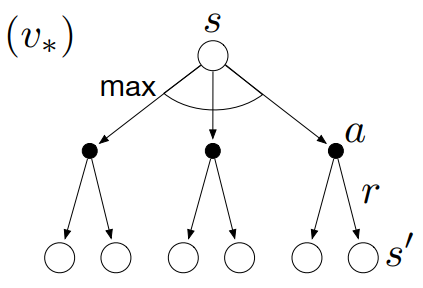

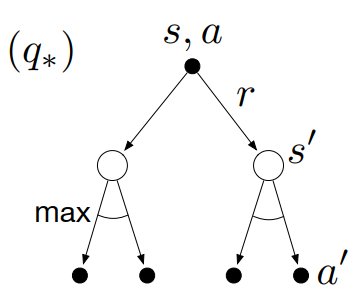

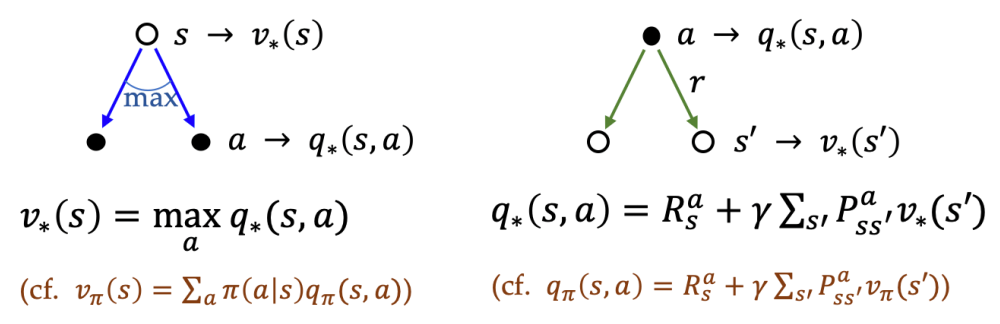

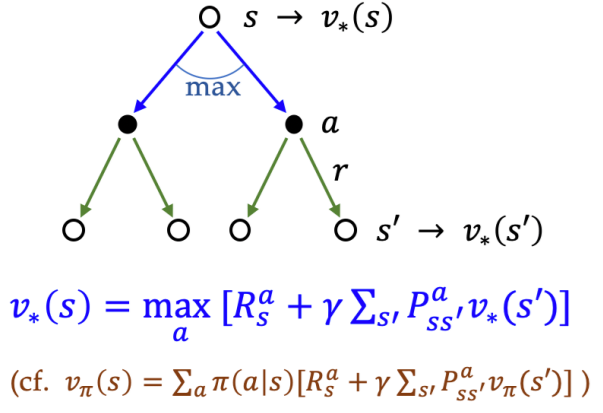

Bellman Optimality Equation

Definition

Under model-based (known MDP), the optimal value functions can be iteratively evaluated by dynamic programming.

Bellman Optimality Equation for State-Value Function

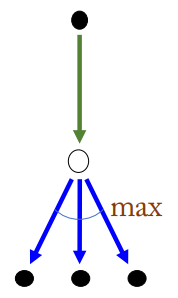

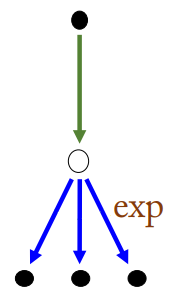

v_{*}(s) &= \max_{a} q_{*}(s, a)\\ &= \max_{a} E_{\pi_{*}}[G_{t} | S_{t}=s, A_{t}=a]\\ &= \max_{a} E_{\pi_{*}}[R_{t+1} + \gamma G_{t+1} | S_{t}=s, A_{t}=a]\\ &= \max_{a} E_{s',r}[R_{t+1} + \gamma v_{*}(S_{t+1}) | S_{t}=s, A_{t}=a]\\ &= \max_{a} \sum\limits_{s',r}p(s', r | s, a) [r + \gamma v_{*}(s')]\quad \left( =\max_{a} [R_{s}^{a} + \gamma\sum\limits_{s'}P_{ss'}^{a} v_{*}(s')] \right) \end{aligned}$$ ## Bellman Optimality Equation for [[Action-Value Function]] ![[Pasted image 20241207152144.png|450]] $$\begin{aligned} q_{*}(s, a) &= E_{s',r}[R_{t+1} + \gamma \max_{a'} q_{*}(S_{t+1}, a') | S_{t}=s, A_{t}=a]\\ &= \sum\limits_{s',r}p(s',r | s, a)[r + \gamma \max_{a'} q_{*}(s', a')]\quad \left(= R_{s}^{a} + \gamma \sum\limits_{s'}P_{ss'}^{a} \max_{a'} q_{*}(s', a') \right) \end{aligned}$$Link to original

Dynamic Programming

Dynamic Programming

Definition

Dynamic programming is a method for solving complex problems by breaking them down into simpler sub-problems in a recursive manner.

Dynamic programming works when a problem has the following properties:

- Optimal substructure: An optimal solution can be constructed from optimal solutions of its sub-problems.

- Overlapping sub-problems: Solutions of same sub-problems are reused several times.

Algorithms

Link to original

Value Iteration

Definition

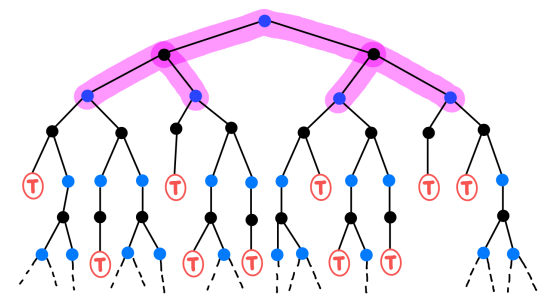

Value iteration iteratively updates state-value function until convergence. Its time complexity is where and are the numbers of states and actions respectively.

Algorithm

Link to original

- Initialize

- Update iteratively from all (full backup) until convergence to .

- Synchronoius backups: compute for all and update simultaneously.

- Asynchronoius backups: compute for one and update it immediately.

- Compute the optimal policy (one-step lookahead) and return it.

Policy Improvement Theorem

Definition

Consider two policies and . This implies that is a better policy than .

Link to original

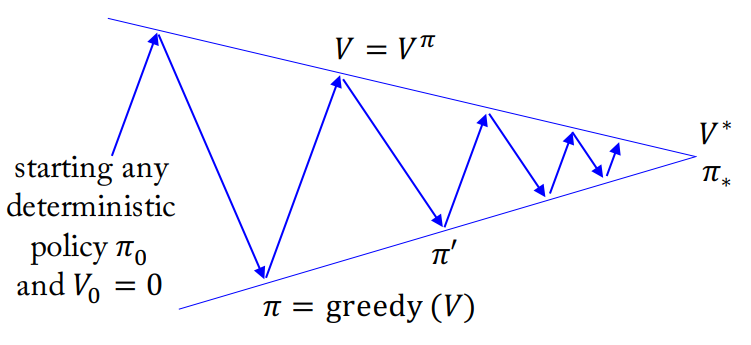

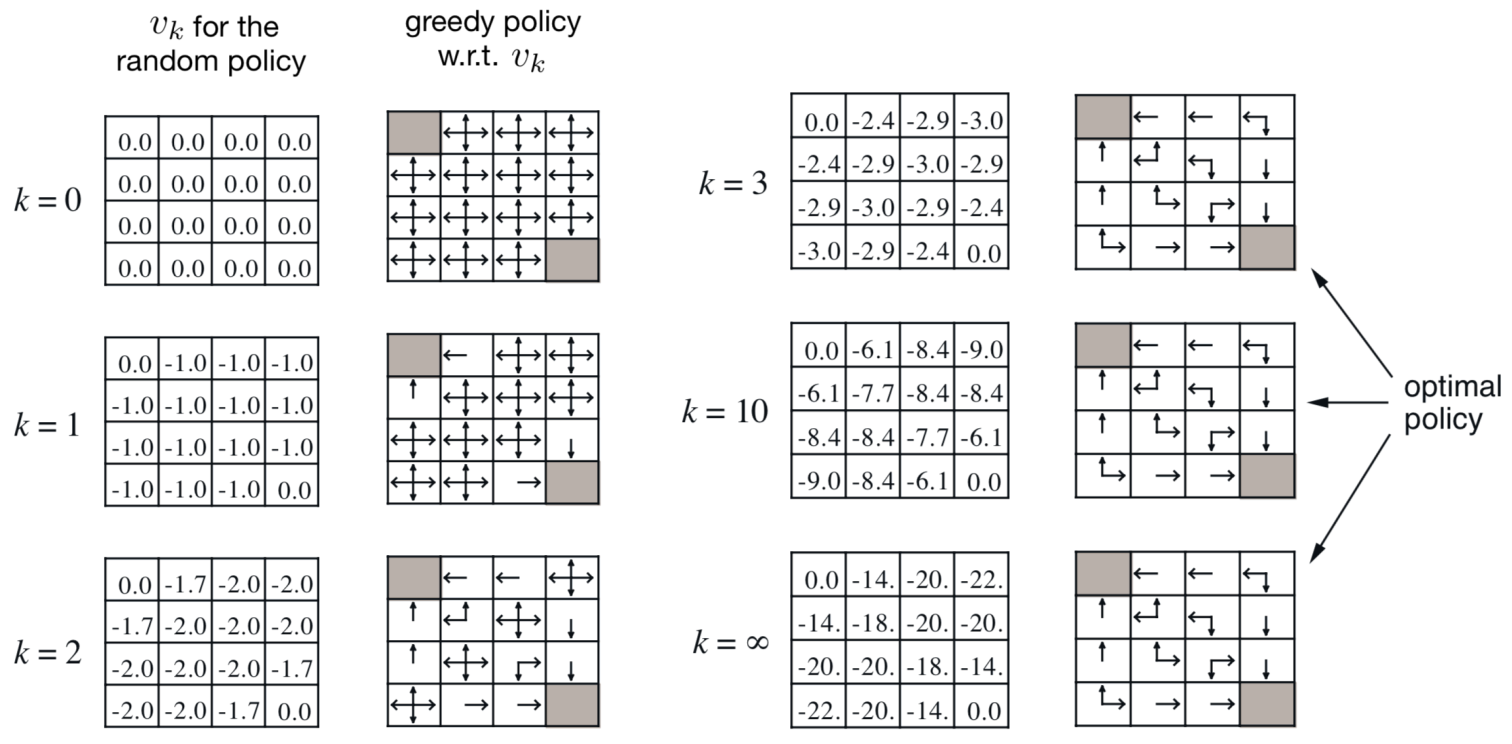

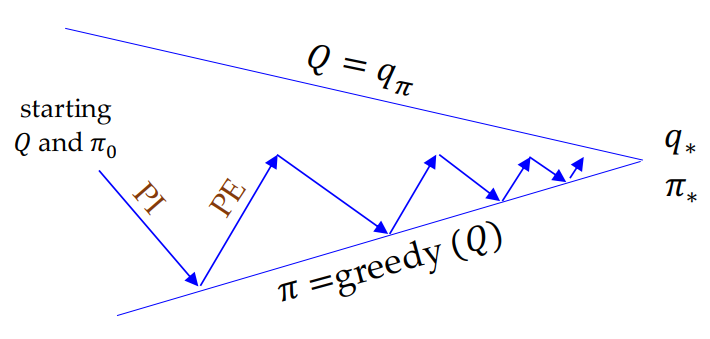

Policy Iteration

Definition

Policy iteration repeats policy evaluation and policy improvement until convergence. Its time complexity is where and are the numbers of states and actions respectively.

Compare to Value Iteration, policy iteration requires much fewer iterations to reach optimal policy.

Policy Evaluation

Compute from the deterministic policy .

Policy Improvement

Improve to by greedy policy based on .

Since , always either is strictly better than or is optimal when by the Policy Improvement Theorem.

Algorithm

- Initialize , and arbitrarily.

- Update every from all (full backup) until convergence to .

- Improve by greedy policy based on .

- If policy is stable, then stop and return and .

Examples

Link to original

Reinforcement Learning

Generalized Policy Iteration

Definition

Generalized policy iteration uses the repeatedly approximated value function to the true value of the current policy (sample backup) and the policy is repeatedly improved to approach the optimality.

Link to original

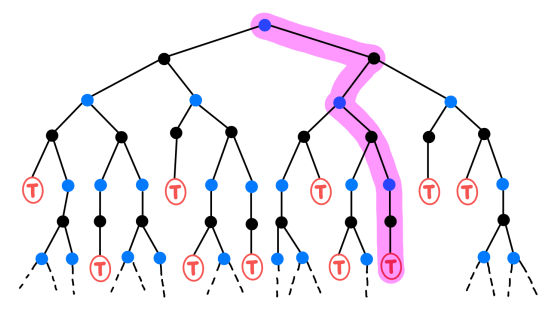

Monte Carlo Method

Definition

Monte Carlo method (MC) is tabular updating and model-free. MC policy iteration adapts GPI based on episode-by-episode (sample multi-step backup) of PE estimating and -greedy PI.

MC Prediction (Policy Evaluation)

Learn from entire episodes of real experience under policy .

Empirical Mean

MC policy evaluation uses empirical mean return instead of expected return. where is increment total return and is incremental count.

The mean return converges to true action-value function as incremental count increases.

Incremental MC Update

The incremental mean can be expressed in a recursive manner:

Thus, for each state-action pair with return , after one episode, the mean return can be calculated as:

Constant- MC Policy Evaluation

It substitutes the weights, used for incremental MC, with constant step-size .

MC Control (-Greedy Policy Improvement)

Choose the greedy action with probability and a random action with probability .

For any -greedy policy , -greed policy with respect to is always improved i.e. .

-greedy policy is GLIE if e.g.

Algorithm

- Initialize arbitrarily, terminal , and arbitrary -soft policy (non-empty probability).

- Repeat for each episode:

- Generate an episode following the policy : .

- Repeat for each step of an episode, (MC Prediction):

- If is a first visit (), then where can be either incremental MC or constant- MC.

- For each visited state in the episode (MC Control):

- If policy is stable, then stop and return .

Facts

Link to originalMC is less harmed by violations of Markov Property because it updates value estimates based on true Return (not based on value estimates of successor states).

Time Difference Learnings

GLIE policy

Definition

A learning Policy is called GLIE (Greedy in Limit with Infinite Exploration) if it satisfies:

Link to original

- All state-action pairs are explored infinitely many times where is incremental count of a .

- The learning policy converges to a greedy policy. where

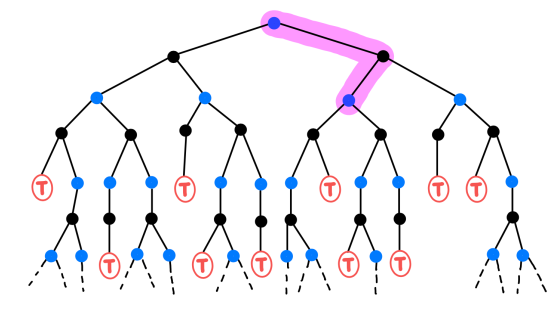

Temporal Difference Learning

Temporal Difference Learning (TD) is tabular updating and model-free. TD policy iteration adapts GPI based on one-step transitions of sample episodes.

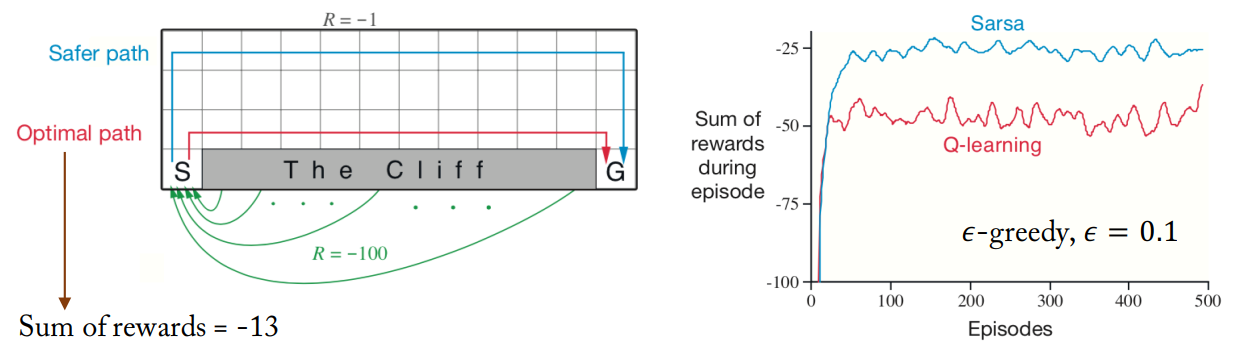

Algorithms

Examples

Sarsa allows for potential penalties from exploration moves, which tends to make it avoid a dangerous optimal path and prefers a slower but safer path. In contrast, Q-Learning ignores these penalties and takes action with the highest action value, which results in its occasional falling because of -greedy actions.

Sarsa allows for potential penalties from exploration moves, which tends to make it avoid a dangerous optimal path and prefer a slower but safer path. In contrast, Q-Learning ignores these penalties and takes action based on the highest action value, which can occasionally result in falling off due to -greedy action.

Link to original

Sarsa

Definition

Sarsa learns by using Bellman Expectation Equation. The both behavior and target policies of Sarsa is derived from , so it is an on-policy method.

Algorithm

Link to original

- Initialize arbitrarily, and .

- Repeat for each episode:

- Initialize .

- Choose an action from the initial state using policy derived from (e.g. -greedy).

- Repeat for each step of an episode until is terminal:

- Take the action and observe a reward and a next state .

- Choose an action from the next state using policy derived from (e.g. -greedy).

- Update and

Q-Learning

Definition

Q-learning directly learns by using Bellman Optimality Equation. The behavior policy of Q-learning is derived from while the target policy is , so it is an off-policy method.

Algorithm

Link to original

- Initialize , arbitrarily, and .

- Repeat for each episode:

- Initialize .

- Repeat for each step of an episode until is terminal:

- Choose an action from the initial state using policy derived from (e.g. -greedy).

- Take the action and observe a reward and a next state .

- Update

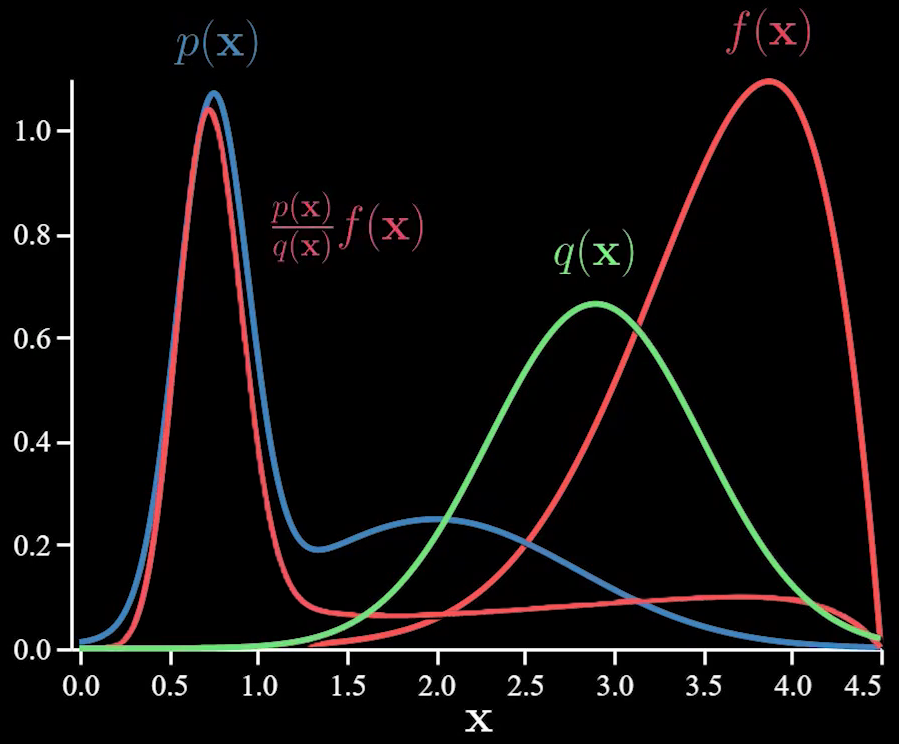

Importance Sampling

Definition

Importance sampling is used when we want to estimate the expectation of a function under a probability distributtion , but sampling directly from the distribution is either inefficient or impossible. Where

Link to original

- is the target distribution

- is the proposal distribution whose support includes that of the support of the target distribution (). The proposal distribution needs to be easy to evaluate and sample from. Also, to reduce the variance of the expectation, should be high where is high.

- is the importance weight.

Off-Policy Control

Definition

Off-policy control uses two different policies for target policy and behavior policy . The target policy may be deterministic while the behavior policy is stochastic. It enables the model to learn optimal policy while maintaining exploration.

Almost all off-policy method utilize Importance Sampling. The Return obtained under the target policy is weighted according to the relative probability of the trajectories under the target and behavior policy, called the importance-sampling ratio (importance weight).

Given a starting state , the probability of the state-action trajectory occurring under a policy is written as where is the state-transition probability.

Thus, the relative probability of the trajectory under the target and behavior policies is

Algorithms

Off-policy MC

where is the importance weight.

The variance of return in Monte Carlo method can dramatically higher.

Off-policy Sarsa

The variance of return in off-policy TD is lower than MC’s one.

Link to original

Expected Sarsa

Definition

Q(S_{t}, A_{t}) &\leftarrow Q(S_{t}, A_{t}) + \alpha [R_{t+1} + \gamma E_{\pi}[Q(S_{t+1}, A_{t+1})|S_{t+1}] - Q(S_{t}, A_{t})]\\ &(= Q(S_{t}, A_{t}) + \alpha [R_{t+1} + \gamma \sum\limits_{a \in \mathcal{A}(S_{t+1})} Q(S_{t+1}, a)\pi(a | S_{t+1}) - Q(S_{t}, A_{t})) \end{aligned}$$ Expected Sarsa uses the expected value over the next state-action pairs, so all actions viable in the state are considered. Expected Sarsa performs better than [[Sarsa]] in terms of the variance of its [[Return]] but costs more.Link to original

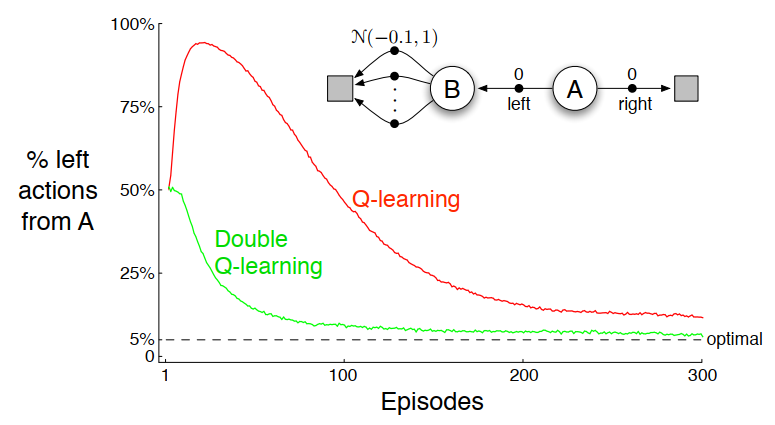

Double Q-Learning

Definition

Q-Learning takes the action with the highest value, which tends to overestimate the value of actions (maximization bias) in the early stages of learning, slowing the convergence to .

Double Q-learning uses two Q-value functions to overcome this problem: one for action selection and another for value evaluation.

Algorithm

- Initialize , , and arbitrarily, and and .

- Repeat for each episode:

- Initialize .

- Repeat for each step of an episode until is terminal:

- Choose an action from the initial state using policy derived from and (e.g. -greedy in ).

- Take the action and observe a reward and a next state .

- With probability : else:

- Update

Examples

Q-Learning initially learns to take the left action much more often than the right action despite the lower true state-value of the left. Even at asymptote, it takes the left action more than optimal (5%). In contrast, double Q-learning is unaffected by maximization bias.

Link to original

n-Step Bootstrapping

n-Step Return

Definition

G_{t:t+n} :&= \sum\limits_{k=0}^{n-1}\gamma^{k} R_{t+k+1} + \gamma^{n}V(S_{t+n}) \\ &= R_{t+1} + \gamma R_{t+2} + \dots + \gamma^{n-1} R_{t+n} + \gamma^{n}V(S_{t+n}) \end{aligned}$$ n-step returns can be considered approximations to the full [[Return]], truncated after $n$ steps and then corrected for remaining missing terms by the [[State-Value Function|state-value]] estimator $V(S_{t+n})$. # Examples - $G_{t:t+1}$: (one-step) [[Temporal Difference Learning|TD]] target - $G_{t:t+n}$: [[n-Step TD]] target - $G_{t:\infty}$: [[Monte Carlo Method|MC]] targetLink to original

n-Step TD

Definition

where is the n-Step Return.

The n-step TD learning is a method that bridges the gap between TD and MC methods.

Examples

Larger reduces bias but increases variance.

Link to original

n-Step Sarsa

Definition

where is the n-Step Return.

Link to original

n-step Off-Policy Learning

Definition

The relative probability of the n-step trajectory under the target and behavior policies is

Algorithms

Off-policy n-Step TD

where is the importance weight.

Off-policy n-Step Sarsa

where is the importance weight, and .

Link to original

Eligibility Traces

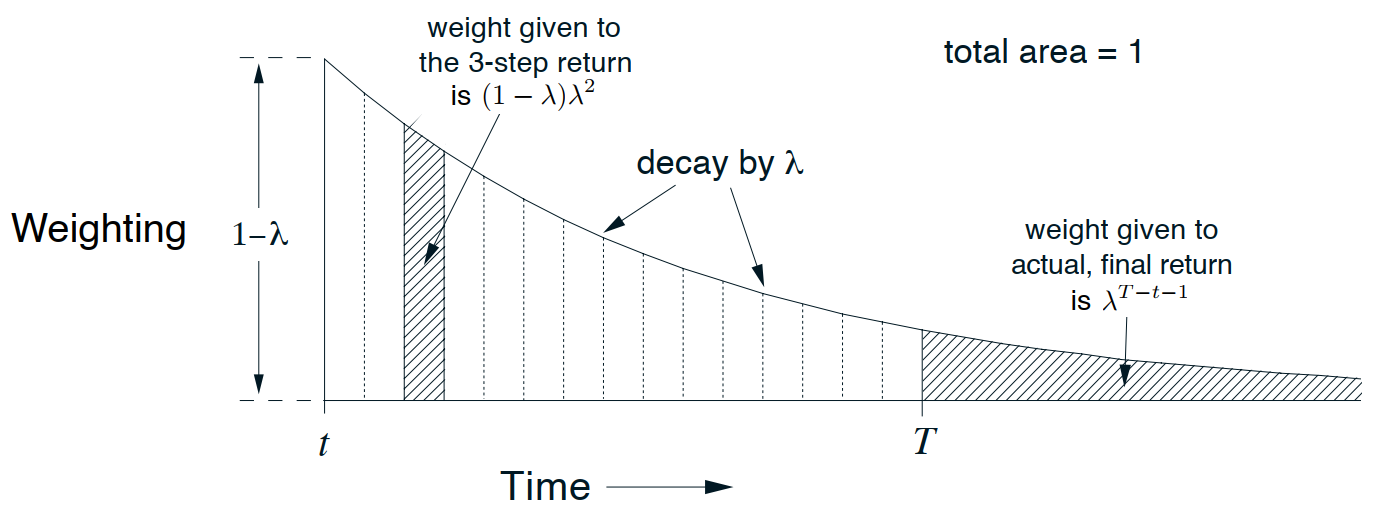

lambda-Return

Definition

The -return is a way to combine -step returns for different ‘s using a weighted average.

G_{t}^{\lambda} :&= (1-\lambda) \sum\limits_{n=1}^{\infty}\lambda^{n-1} G_{t:t+n}\\ &= (1-\lambda) \sum\limits_{n=1}^{T-t-1}\lambda^{n-1} G_{t:t+n} + \lambda^{T-t-1}G_{t} \end{aligned}$$ where $G_{t:t+n}$ is the [[n-Step Return]]. # Examples - $\lambda=0$: [[Temporal Difference Learning|TD]] target - $\lambda =1$: [[Monte Carlo Method|MC]] return - $0 < \lambda < 1$: creates a smooth blend of all $n$-step returns.Link to original

TD(lambda)

Definition

TD() is a TD algorithm that uses the lambda-Return for value function updates. where is the lambda-Return

Examples

Link to original

Deep Reinforcement Learning

DQNs

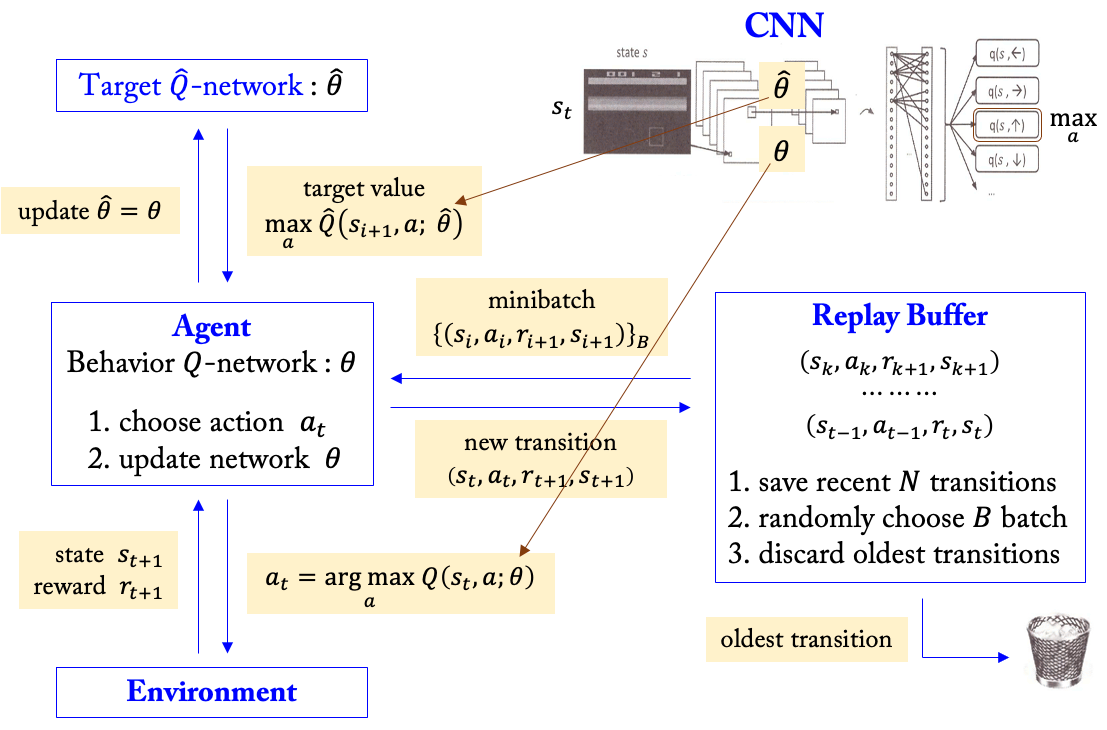

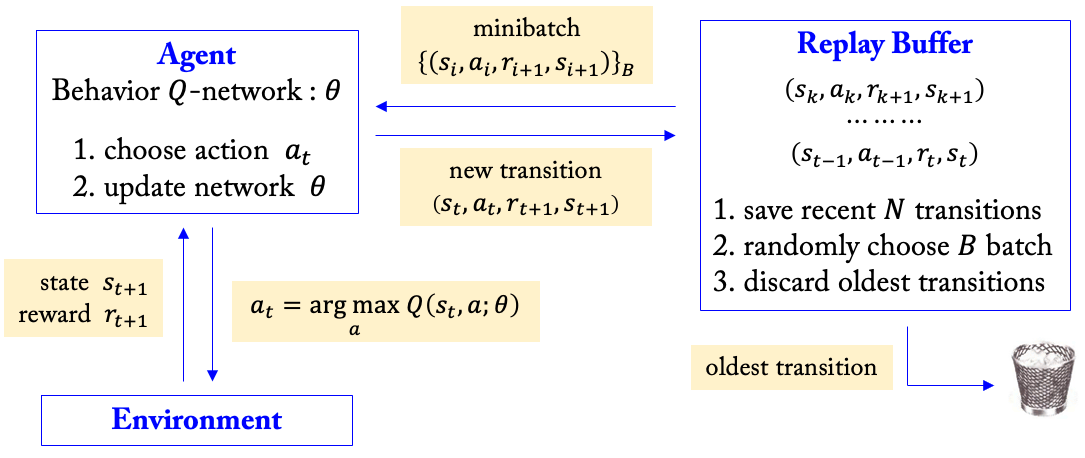

Deep Q-Network

Definition

DQN has large or continuous state space and discrete action space.

Naive DQN

Naive DQN treats as a target and minimizes MSE loss by SGD.

Due to the training instability and correlated samples, the Naive DQN has very poor results, even worse than a linear model.

Experience Replay

Online RL agents incrementally update parameters while observing a stream of experience. This structure cause strongly temporally-correlated updates, breaking i.i.d. assumption, and rapidly forget rare experiences that would be useful later on. Experience replay stores experiences in the replay buffer, and randomly samples temporally uncorrelated minibatches from the replay buffer when learning.

Target Network

If target function is changed too frequently, then this moving target makes training difficult (non-stationary target problem). The target network technique updates the parameters of the behavior Q-network at every step, while updating the parameters of the target Q-network sporadically (e.g. every steps). where is the size of a minibatch, and is a -sized index set drew from the replay buffer.

Algorithm

Link to original

- Initialize behavior network and target network with random weights , and the replay buffer to max size .

- Repeat for each episode:

- Initialize sequence .

- Repeat for each step of an episode until terminal, :

- With probability , select a random action otherwise select .

- Take the action and observe a reward and a next state .

- Store transition in the replay buffer .

- Sample random minibatch of transitions from .

- .

- Perform Gradient Descent on loss .

- Update the target network parameter every steps.

- Update .

Double DQN

Definition

Double DQN is a variation of DQN that uses the idea of Double Q-Learning to reduce overestimation. In this case, the target network in DQN works as the second network for double Q-learning without introducing an additional network.

Algorithm

Link to original

- Initialize behavior network and target network with random weights , and the replay buffer to max size .

- Repeat for each episode:

- Initialize sequence .

- Repeat for each step of an episode until terminal, :

- With probability , select a random action otherwise select .

- Take the action and observe a reward and a next state .

- Store transition in the replay buffer .

- Sample random minibatch of transitions from .

- .

- Perform Gradient Descent on loss .

- Update the target network parameter every steps.

- Update .

TD Error

Definition

The TD error is the difference between the target and the current prediction.

TD error for State-Value Function

TD error for SARSA

TD error for Q-Learning

Link to original

Prioritized Replay

Definition

Prioritized replay is an enhancement to the standard experience replay. Instead of uniformly sampling experiences from the replay buffer, prioritized replay samples important transitions more frequently based on their priority values. It makes it possible to learn more efficiently as the agent focuses on important experiences more.

The priority of transition is calculated by TD Error. where is the TD Error, and .

The probability of sampling transition is where controls how much prioritization is used (uniform sampling if ).

However, it can lead to a loss of diversity and introduce bias, but these issues can be alleviated and corrected with stochastic sampling prioritization (using ) and Importance Sampling weights.

The Importance Sampling weights are calculated as where

- is the size of the replay buffer

- is annealing parameter that fully compensates the bias when . It starts from and ends with .

The wight is normalized by before used for stability.

Algorithm

Double DQN with Prioritized Replay

Link to original

- Initialize behavior network and target network with random weights , and the replay buffer to max size .

- Repeat for each episode:

- Initialize sequence .

- Repeat for each step of an episode until terminal, :

- With probability , select a random action otherwise select .

- Take the action and observe a reward and a next state .

- Store transition in the replay buffer with maximal priority .

- For every (replay period) steps:

- Sample random minibatch of transitions from .

- Compute the importance weight

- Compute TD Error

- Update transition priority

- Accumulate weight-change

- Update behavior network weights and reset

- Update the target network parameter every steps.

- Update .

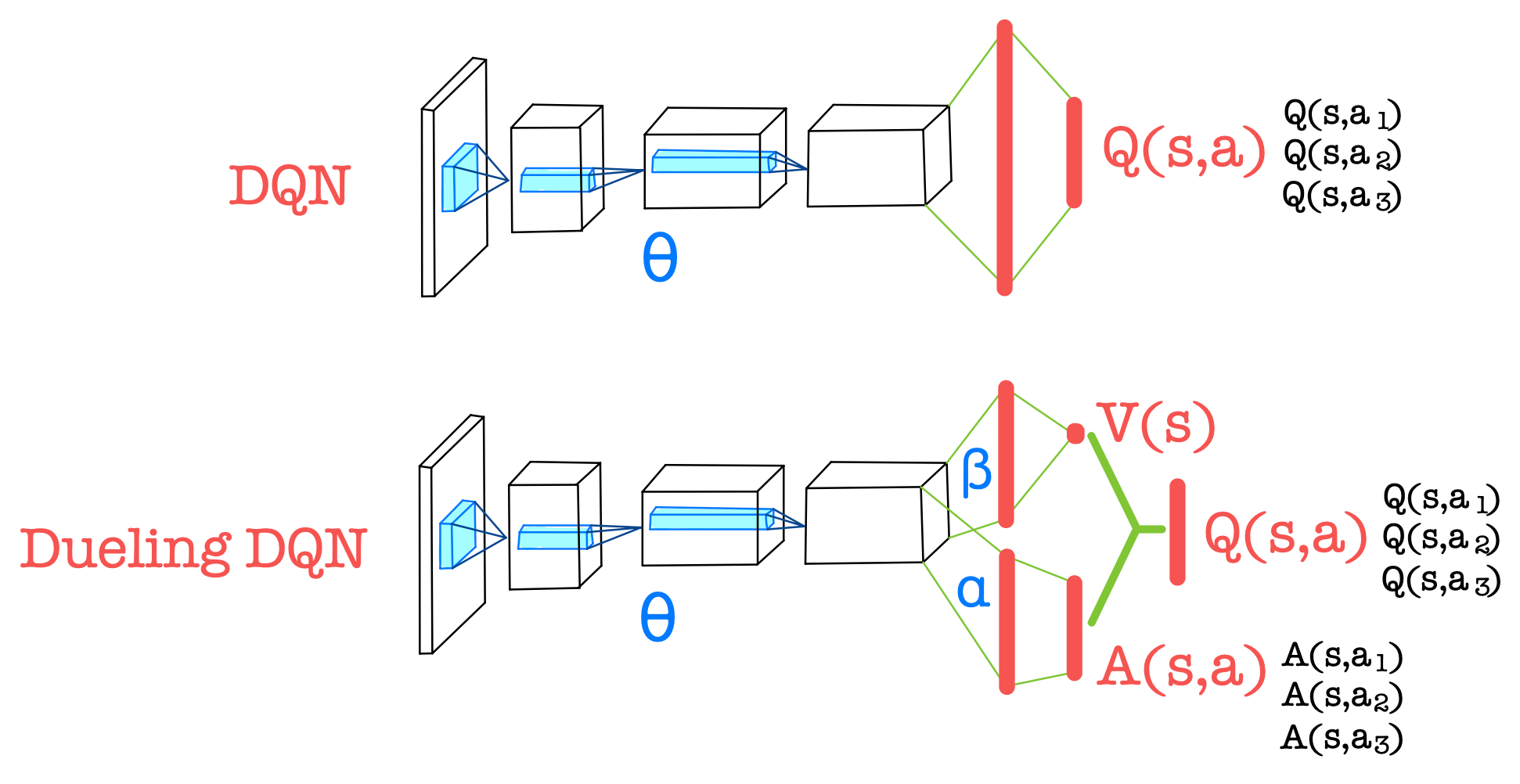

Dueling DQN

Definition

Dueling DQN has two streams: advantage and action-independent state-value , sharing a feature encoder (CNN), and combined by an aggregator to produce action-value .

Aggregating module is unidentifiable in the sense that given a Q-value function, there are multiple possible decompositions into value and advantage functions ( where is a constant value). This makes learning process unstable and less efficient. To force a unique decomposition, we introduce a constraint that makes the Advantage Function have zero-mean.

Link to original

REINFORCE

Policy Gradient

Definition

Policy gradient algorithms directly learn the optimal policy by a parametric probability distribution . The policy stochastically selects an action in a state according to a parameter . It typically proceeds by sampling a stochastic policy and adjusting the parameter in the direction of maximizing the total reward.

The objective function of policy gradient algorithm is defined as: where is a trajectory, is the total reward of the trajectory , and is the PDF of .

Link to original

Policy Gradient Theorem

Definition

The derivative of the expected total reward is the expectation of the product of total rewards and summed gradients of log of the policy .

Proof

Link to original

REINFORCE

Definition

REINFORCE algorithm is a policy gradient algorithm that maximizes the expected return. The objective function of REINFORCE algorithm based on the Policy Gradient Theorem. It substitutes the expectation and the total reward of Policy Gradient with averaging and returns .

Algorithm

Link to original

- Execute trajectories (Each starts from a state under the policy ).

- Approximate the gradient of the objective function

- Update policy to maximize where is a learning rate.

REINFORCE with Baseline

Definition

REINFORCE with Baseline algorithm is a variant of the REINFORCE algorithm that helps reduce variance in Policy Gradient methods by adopting Actor-Critic Method. It modifies the objective function of the REINFORCE algorithm by subtracting a baseline from the returns, which helps reduce the variance of the gradient without introducing bias. where is a baseline function not related to (commonly chosen as a State-Value Function ).

Actor (Policy Gradient Update)

Critic (Value Network Update)

Algorithm

Link to original

- Initialize state-value and policy networks randomly.

- Set the hyperparameters: step-sizes , and discount factor

- Repeat for each episode (Each starts from a state under the policy ):

- Repeat for each step of an episode until terminal, :

- (minimize )

- (maximize with the Policy Gradient )

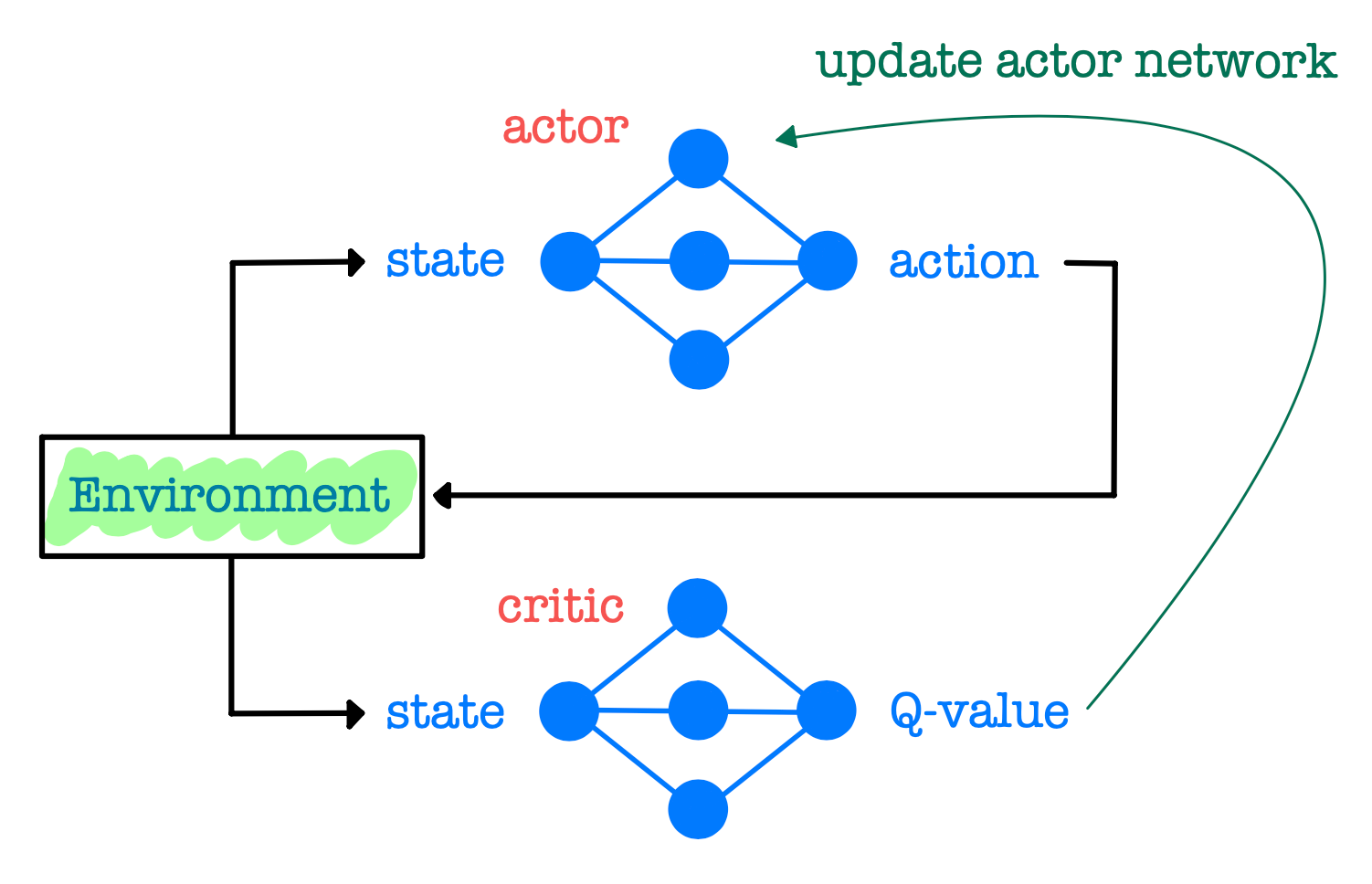

Actor-Critics

Actor-Critic Method

Definition

Actor-Critic method consists of two networks: actor and critic networks.

Actor network updates parameter for policy by maximizing using Policy Gradient

Critic network updates parameter for value function by minimizing

Examples

Actor-Critic Method with TD(0) Return

Asynchronous Advantage Actor-Critic Method

Deep Deterministic Policy Gradient

Link to original

Actor-Critic Method with TD(0) Return

Definition

Actor (Policy Gradient Update)

Critic (Value Network Update)

Algorithm

Link to original

- Initialize critic and actor networks randomly.

- Set the hyperparameters: step-sizes , and discount factor

- Repeat for each episode (Each starts from a state under the policy ):

- Repeat for each step of an episode until terminal, :

- Select action according to policy .

- Take the action and observe a reward and a next state .

Asynchronous Advantage Actor-Critic Method

Definition

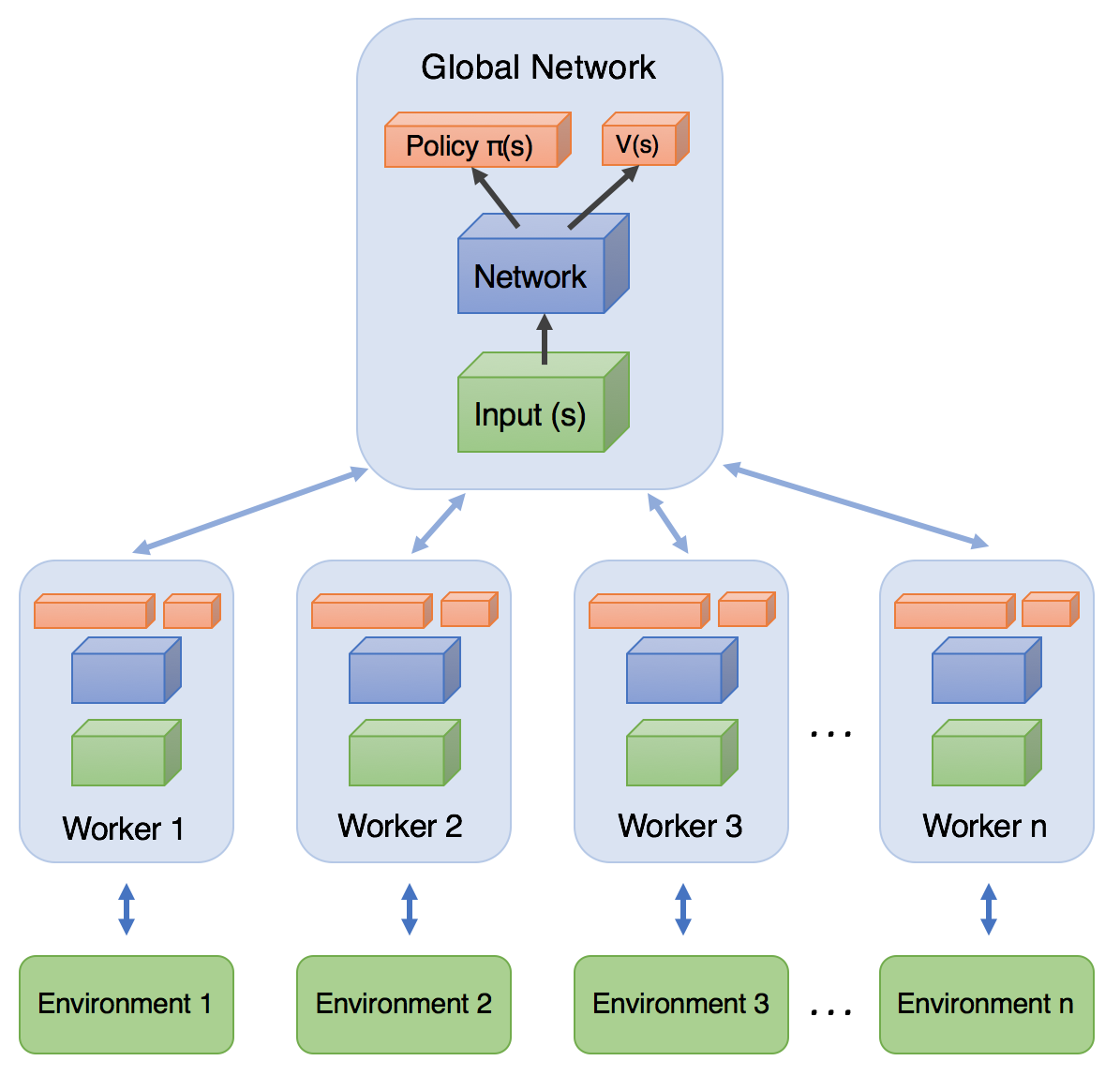

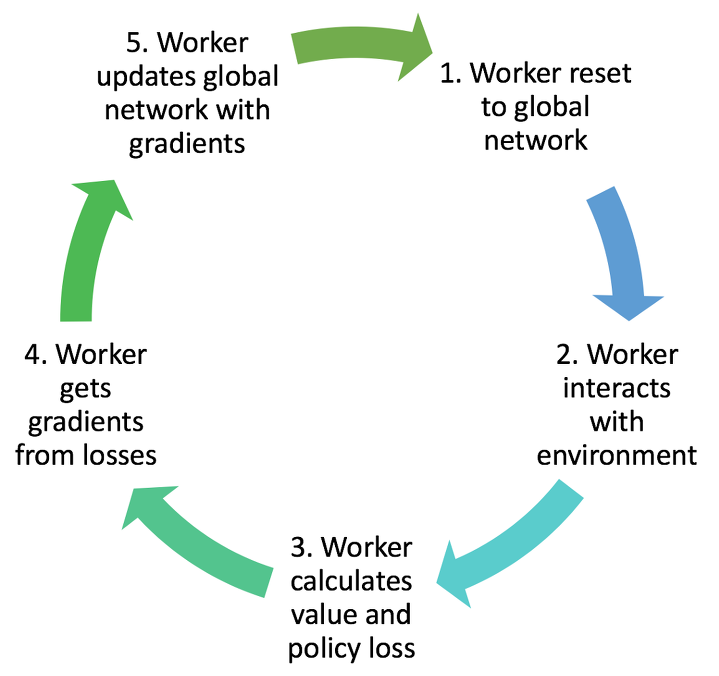

A3C (Asynchronous Advantage Actor-Critic Method) is an Actor-Critic Method that utilizes multiple networks which are a global network and multiple worker agents working in parallel across multiple instances of the environment, and agents update asynchronously the global network parameter. The parallelism reduce each agent’s temporal correlation. The return estimates the Advantage Function using the n-Step Return instead of Action-Value Function .

Actor (Policy Gradient Update)

where is an Entropy that encourages exploration

Critic (Value Network Update)

Algorithm

For each worker agent

Link to original

- Let be global network’s shared parameters, and let be a worker agent’s parameters.

- Set the hyperparameters: step-sizes , discount factor , regularization factor , maximum steps per update .

- Repeat:

- Reset gradients and .

- Synchronize parameters and .

- Set and get state .

- For :

- Select action according to policy .

- Take the action and observe a reward and a next state .

- (or for terminal )

- For :

- .

- Accumulate gradients with respect to

- Accumulate gradients with respect to

- Update asynchronously and .

DPGs

Deterministic Policy Gradient

Definition

Deterministic Policy Gradient (DPG) learns a deterministic policy as an actor on continuous action spaces and an Action-Value Function as a critic.

DPG requires fewer samples to approximate the gradient than stochastic Policy Gradient because DPG updates the parameter only over the state space, according to the Deterministic Policy Gradient Theorem.

Link to original

State Visitation Frequency

Definition

State visitation frequency is the discounted sum of probabilities of visiting a given state under policy . where is the initial and is the visitation probability from to in step following .

can be considered as a Distribution Function over the state space.

Link to original

Deterministic Policy Gradient Theorem

Definition

\nabla_{\theta}J(\theta) &= \nabla_{\theta} E_{s\sim \rho_{\mu}}[Q(s, a)|_{a=\mu(s;\theta)}]\\ &= \int_{S} \rho_{\mu}(s)\nabla_{a}Q(s, a)|_{a=\mu(s;\theta)}\nabla_{\theta}\mu(s;\theta)ds\quad\text{(by chain rule)}\\ &= E_{s\sim\rho_{\mu}}[\nabla_{a}Q(s, a)|_{a=\mu(s;\theta)}\nabla_{\theta}\mu(s;\theta)] \end{aligned}$$Link to original

Deep Deterministic Policy Gradient

Definition

Deep Deterministic Policy Gradient (DDPG) is an Actor-Critic Method based on DPG that learns deterministic policies to handle continuous action space by adapting DQN architecture such as experience replay and target network.

Due to the determinacy of the policy of DDPG, extra noise is necessary for exploration. The exploration policy is constructed by the sum of the determinant action and a noise process. where is a noise process.

The target network updating in DQN is substituted by soft (gradual) updating.

\hat{\phi} &\leftarrow \lambda\phi + (1-\lambda)\hat{\phi}\\ \hat{\theta} &\leftarrow \lambda\theta + (1-\lambda)\hat{\theta} \end{aligned}$$ ## Actor (Policy Gradient Update) $$J(\theta) = E_{s\sim \rho_{\mu}}[Q(s, a; \phi)]\Big|_{a = \mu(s;\theta)}$$ $$\Delta\theta = \nabla_{a}Q(s, a;\phi)|_{a=\mu(s;\theta)}\nabla_{\theta}\mu(s;\theta)$$ Where: - $\phi$: behavior critic network. - $\theta$: behavior actor network. ## Critic (Value Network Update) $$L(\phi) = E_{s\sim \rho_{\mu}}[r + \gamma \hat{Q}(s', \hat{\mu}(s';\hat{\theta}); \hat{\phi}) - Q(s, a; \phi)]^{2}\Big|_{a = \mu(s;\theta)}$$ $$\Delta\phi = (r + \gamma \hat{Q}(s', \hat{\mu}(s';\hat{\theta}); \hat{\phi}) - Q(s, a; \phi))\nabla_{\phi}Q(s, a; \phi)$$ Where: - $\phi$: behavior critic network. - $\theta$: behavior actor network. - $\hat{\phi}$: target critic network. - $\hat{\theta}$: target actor network. # Algorithm 1. Initialize critic network $Q(s, a; \phi)$ and actor network $\mu(s; \phi)$ randomly, target networks $\hat{Q}, \hat{\mu}$ with weights $\hat{\phi} = \phi, \hat{\theta} = \theta$, and the replay buffer $\mathcal{R}$ to max size $N$. 3. Repeat for each episode: 1. Initialize state $s_{0}$ and a random noise process $\mathcal{N}$. 2. Repeat for each step of an episode until terminal, $t=0, 1, \dots, T-1$ : 1. Select an action $a_{t} = \mu(s_{t}; \theta) + \mathcal{N}_{t}$. 2. Take the action $a_{t}$ and observe a reward $r_{t+1}$ and a next state $s_{t+1}$. 3. Store transition $(s_{t}, a_{t}, r_{t+1}, s_{t+1})$ in the replay buffer $\mathcal{R}$. 4. Sample random minibatch of $B$ transitions $(s_{i}, a_{i}, r_{i+1}, s_{i+1})$ from $\mathcal{R}$. 5. $y_{i} \leftarrow r_{i+1} + \gamma \hat{Q}(s_{i+1}, \hat{\mu}(s_{i+1};\hat{\theta}); \hat{\phi})$. 6. Update critic network by minimizing the loss $L = \cfrac{1}{B}\sum\limits_{i}(y_{i} - Q(s_{i}, a_{i}; \phi))^{2}$. 7. Update actor network using the [[Deterministic Policy Gradient]] $\nabla_{\theta}J \approx \cfrac{1}{B}\sum\limits_{i}\nabla_{a}Q(s_{i}, a;\phi)|_{a=\mu(s;\theta)}\nabla_{\theta}\mu(s_{i};\theta)$ 8. Update target networks $\hat{\phi} \leftarrow \lambda\phi + (1-\lambda)\hat{\phi}$ $\hat{\theta} \leftarrow \lambda\theta + (1-\lambda)\hat{\theta}$Link to original

TRPO

Trust Region Policy Optimization

Definition

The performance of DDPG does not improve monotonically. TRPO addresses the problem by adopting two concepts: Minorize-Maximization Algorithm and trust region. TRPO updates the policy parameter on a trust region in the policy space, guaranteeing monotonic improvement of the objective function (expected return). Although TRPO has achieved high performance, its implementation is very complex so being not practical.

TRPO starts from the maximization problem of expected return

The original problem is approximated multiple times to find a surrogate function and be solved by MM Algorithm. The surrogate function satisfying the trust region constraint is found using the conservative policy iteration update. where is a local approximation of , and is a State Visitation Frequency.

The KL penalized optimization problem is transformed to a constrained optimization problem by Duality.

By approximations, the final optimization problem is

The optimization problem is solved by the natural policy gradient and check the monotonic improvement with Backtracking Line Search where is a policy gradient, is the Fisher Information Metric, and is the constraint threshold.

TRPO is performed by repeating 3 steps in each iteration

- Collect trajectories on , and update -values.

- By averaging over samples, construct the estimated objective and constraint.

- Approximately solve the constrained optimization by natural policy gradient and Backtracking Line Search to update the policy parameter .

Algorithm

Link to original

- Initialize policy parameters .

- For :

- Collect a set of trajectories under current policy .

- Compute advantages using an advantage estimation algorithm.

- Compute policy gradient and Fisher Information Metric .

- Compute natural gradient .

- Estimate proposed step

- Perform Backtracking Line Search to obtain final update: 2. Find minimum such that the KL constraint is satisfied.

- Update the policy

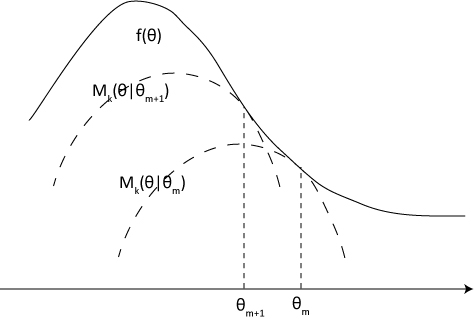

MM Algorithm

Definition

The MM algorithm is an iterative optimization method which exploits the convexity of a function in order to find its maxima or minima. The MM stands for Majorize-Minimization or Minorize-Maximization, depending on whether the desired optimization is a minimization or a maximization.

The MM algorithm works by finding a surrogate function that minorizes or majorizes the objective function. Optimizing the surrogate function will monotonically improve the value of the objective function.

Algorithm

Consider Minorize-Maximization algorithm for a maximization problem of a function .

Link to original

- Find a surrogate function (minorized ) satisfying:

- Find a point maximizes the surrogate function Then,

- Use the maximum point as the next point.

- Re-evaluate the surrogate function at the new point and repeat Iteration until convergence to a (local) maximum.

Natural Gradient

Definition

The natural gradient is an optimization method that takes into account the geometry of the parameter space when updating parameters. Unlike the standard gradient, which points in the direction of the steepest ascent in the Euclidean Space of parameters, the natural gradient points in the direction of the steepest ascent in the space of probability Distribution induced by the parameters.

The natural gradient uses the Fisher Information matrix to define a Metric on the parameter space (Fisher Information Metric). It can be interpreted as the steepest ascent direction in the space of probability distributions, as measured by the KL-Divergence. Instead of taking a step that maximizes the change in the objective function in the Euclidean space of parameters, the natural gradient takes a step that maximizes the change in while keeping the change in the probability distribution, as measured by KL divergence, relatively small.

Link to original

PPO

Proximal Policy Optimization

Definition

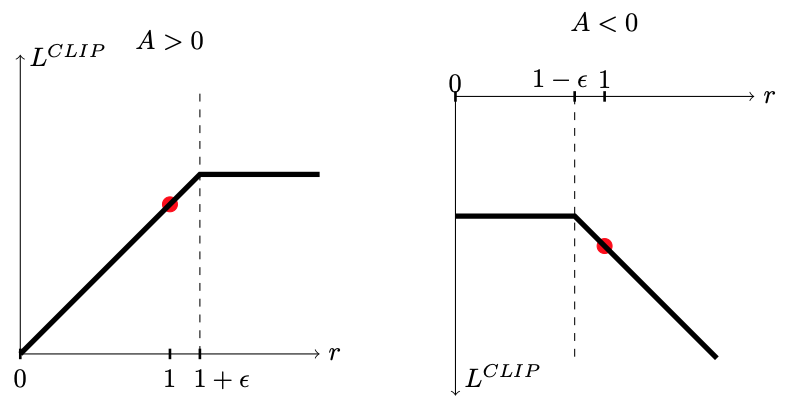

Although TRPO has achieved monotonic improvement, its implementation and computation are complicated due to KL constraint. PPO removed the KL constraint term in the optimization problem of TRPO by introducing the clipped surrogate objective, which provides penalty when the policy update is too high.

PPO apply clipping to the policy ratio . The cliiped surrogate function is defined as where is the policy ratio, and is a hyperparameter that determines the clipping range.

satisfies , so we can still use MM Algorithm.

To minimize the value function loss simultaneously and to encourage exploration, PPO appends additional terms to the objective function. Where

Link to original

- is the clipped surrogate objective

- is the value function loss

- is the Entropy bonus terms.

- are hyperparameter that controls the weight of the losses.

Distributional RL

Distributional Reinforcement Learning

Definition

Distributional RL treats the Reward as a Random Variable, and uses random return , called an action-value distribution, instead of the Action-Value Function.

Algorithms

Link to original

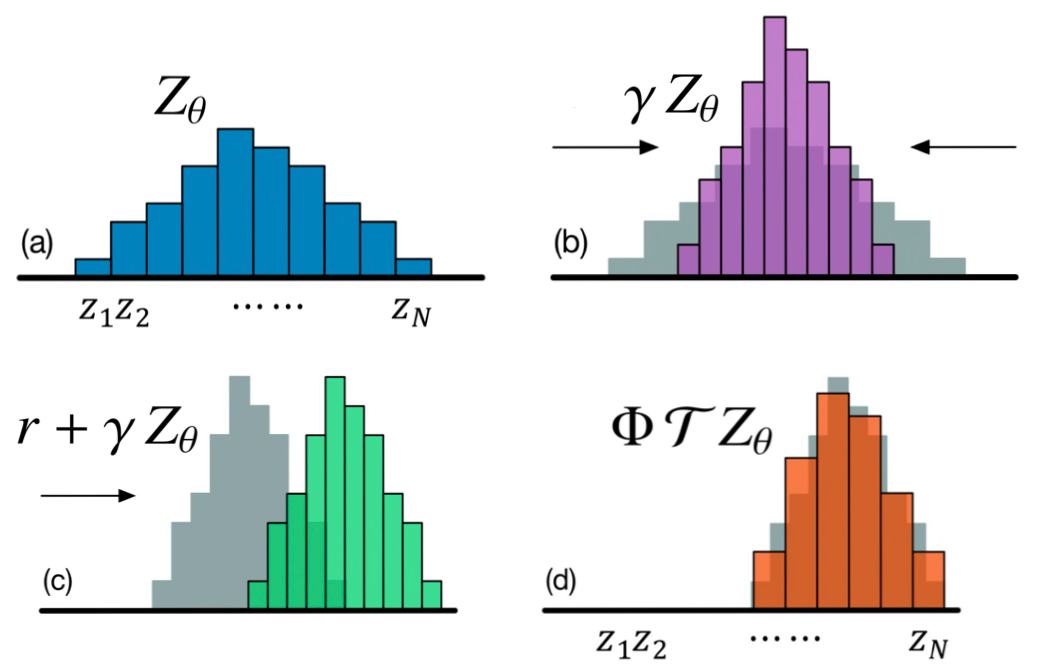

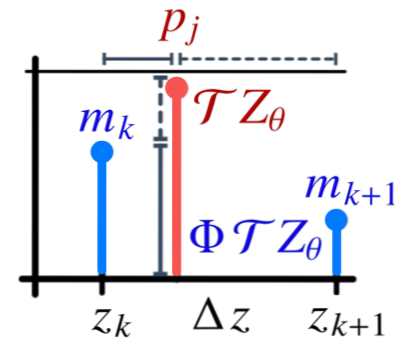

Distributional Bellman Equation

Definition

Distributional Bellman Equation

Distributional Bellman Operator

The distributional Bellman equation can be written with the operator as

Link to original

Distributional Bellman Optimality Equation

Definition

Distributional Bellman Optimality Equation

Distributional Bellman Optimality Operator

The distributional Bellman optimality equation can be written with the operator as

Link to original

Distributional Policy Iteration

Definition

Policy Evaluation

Compute from the current policy .

Distributional Bellman operator for a fixed policy is a Contraction Map in a maximal form of Wasserstein Metric.

Thus, the iteration converges to a unique fixed point (by Contraction Lemma).

Control (Policy Improvement)

Seek a greedy policy based on the , which maximizes the expectation of .

Distributional Bellman optimality operator is not a Contraction Map, not even continuous in any Metric, causing the distributional instability. To overcome the problem, discrete action-value distributions are used to approximate the true distribution .

Link to original

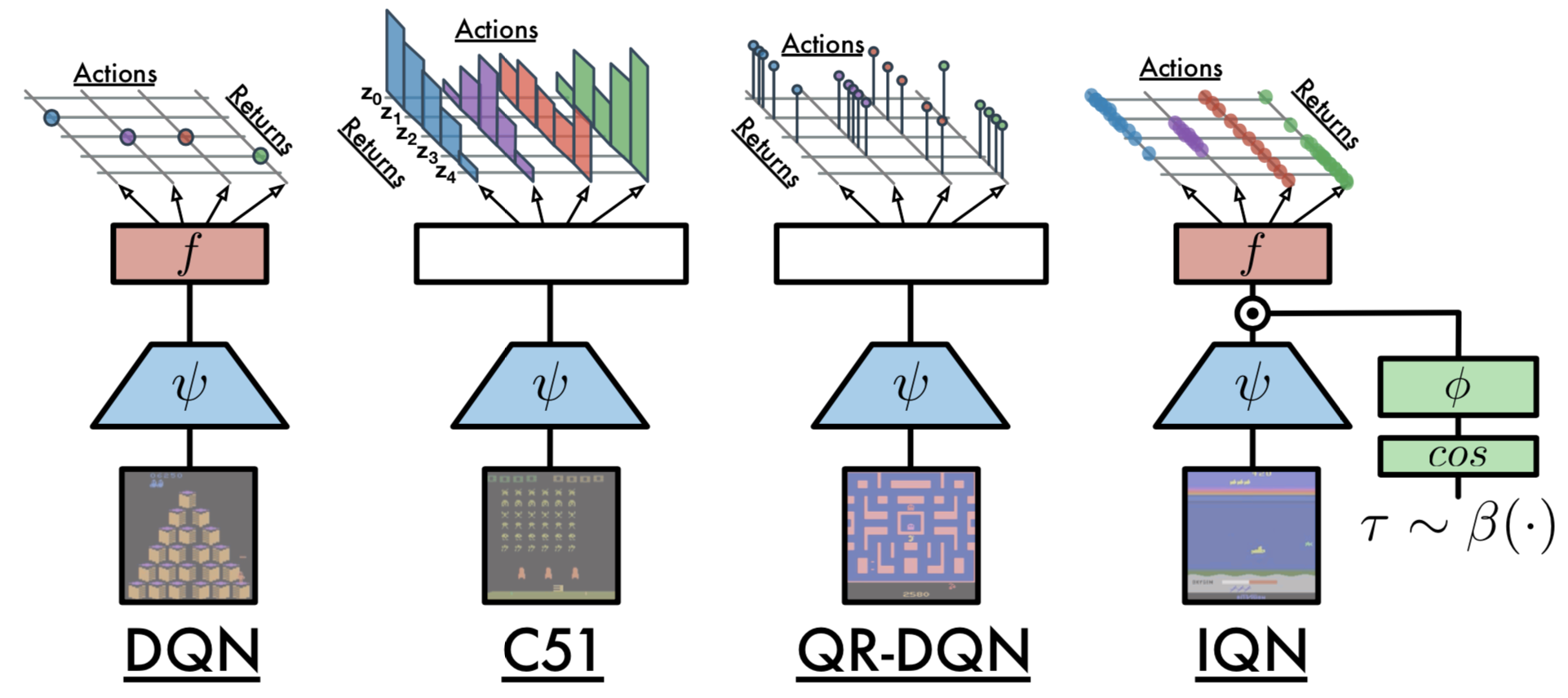

C51

C51

Definition

The C51 algorithm is a Distributional Reinforcement Learning algorithm that approximates the distribution of the random return a using discretized distribution.

Architecture

The range of possible returns is divided into a fixed support of -equally spaced bins , where and . and are the minimum and maximum possible return values, respectively. In the C51 algorithm, the support determined once at the beginning and remains fixed throughout the entire learning process.

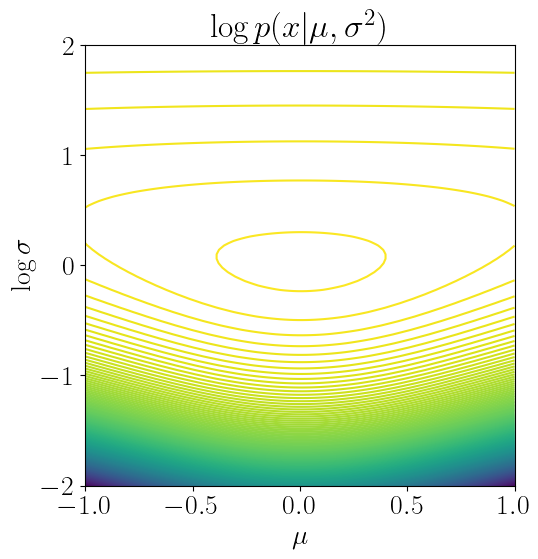

For each state-action pair , the algorithm estimates the probabilities that the return will fall into each -th bin. The probabilities are estimated by a neural network, which takes the state as input and outputs a vector of probabilities for each state-possible action pair . The Softmax Function ensures the properties of the probability of the output for each state-action pair. where are the raw outputs (logits) of the network for the -th atom and action , and represents the network’s parameters.

The discrete distribution over the fixed support is constructed as where is a Dirac’s delta function at .

Projection

In a policy evaluation process, discrete distributions have a problem: and have disjoint supports. Though, Wasserstein Metric in the original setup is robust to this issue, but in practice, KL-Divergence is used instead due to the distributional instability and differentiability. Therefore, the problem still matters.

The disjoint support problem is solved by projection.

- Compute the distributional Bellman update: where

- Distribute the computed probability mass to the neighboring bins in the support proportionally to the distance from each original point: , where is the projection operator.

Loss Function

C51 uses the KL-Divergence as a loss function. where and are the parameters for behavior and target networks, respectively.

The loss function can be simplified to the Cross-Entropy Loss.

Algorithm

Link to original

- Initialize behavior network and target network with random weights , and the replay buffer to max size .

- Repeat for each episode:

- Initialize sequence .

- Repeat for each step of an episode until terminal, :

- With probability , select a random action otherwise select .

- Take the action and observe a reward and a next state .

- Store transition in the replay buffer .

- Sample a random transition from .

- Compute and select a greedy action .

- Perform Gradient Descent on the loss .

- Update the target network parameter every steps.

- Update .

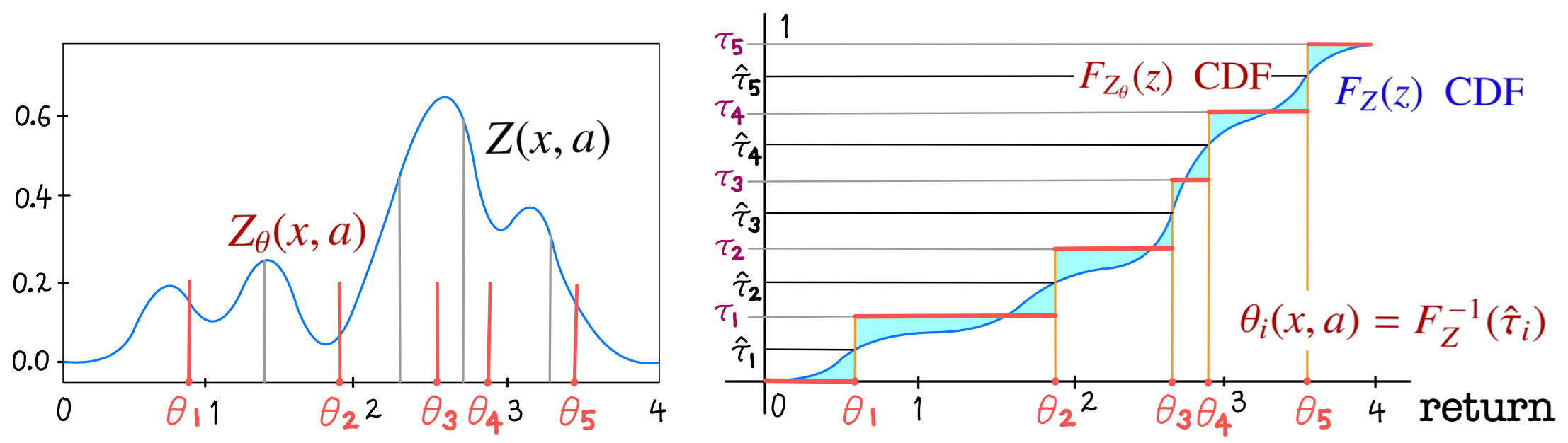

QR-DQN

QR-DQN

Definition

The Quantile-Regression DQN (QR-DQN) algorithm is a Distributional Reinforcement Learning algorithm that approximates the distribution of random return using quantile regression.

Architecture

QR-DQN estimates a set of quantiles of return distribution, where represents the midpoint of -th quantile interval. This can be seen as adjusting the location of the supports of a uniform probability mass to approximate the desired quantile distribution.

The quantile distribution with uniform probability is constructed as where is a Dirac’s delta function at , and are the outputs of the network, representing the estimated quantile values. These values are obtained by applying the inverse CDF of the return distribution to the quantile midpoints .

Using the estimated quantile values as a support minimizes the Wasserstein Distance between the true return distribution and the estimated distribution.

Quantile Regression

Given data set , a -quantile minimizes the loss , where is a quantile loss function.

The quantile values are estimated by minimizing the quantile Huber loss function. Given a transition , the loss is defined as Where:

- and .

- where is Huber Loss.

Algorithm

Link to original

- Initialize behavior network and target network with random weights , and the sample size .

- Repeat for each episode:

- Initialize sequence .

- Repeat for each step of an episode until terminal, :

- With probability , select a random action otherwise select .

- Take the action and observe a reward and a next state .

- Store transition in the replay buffer .

- Sample a random transition from .

- Select a greedy action

- Compute the target quantile values .

- Perform Gradient Descent on the loss .

- Update the target network parameter every steps.

- Update .

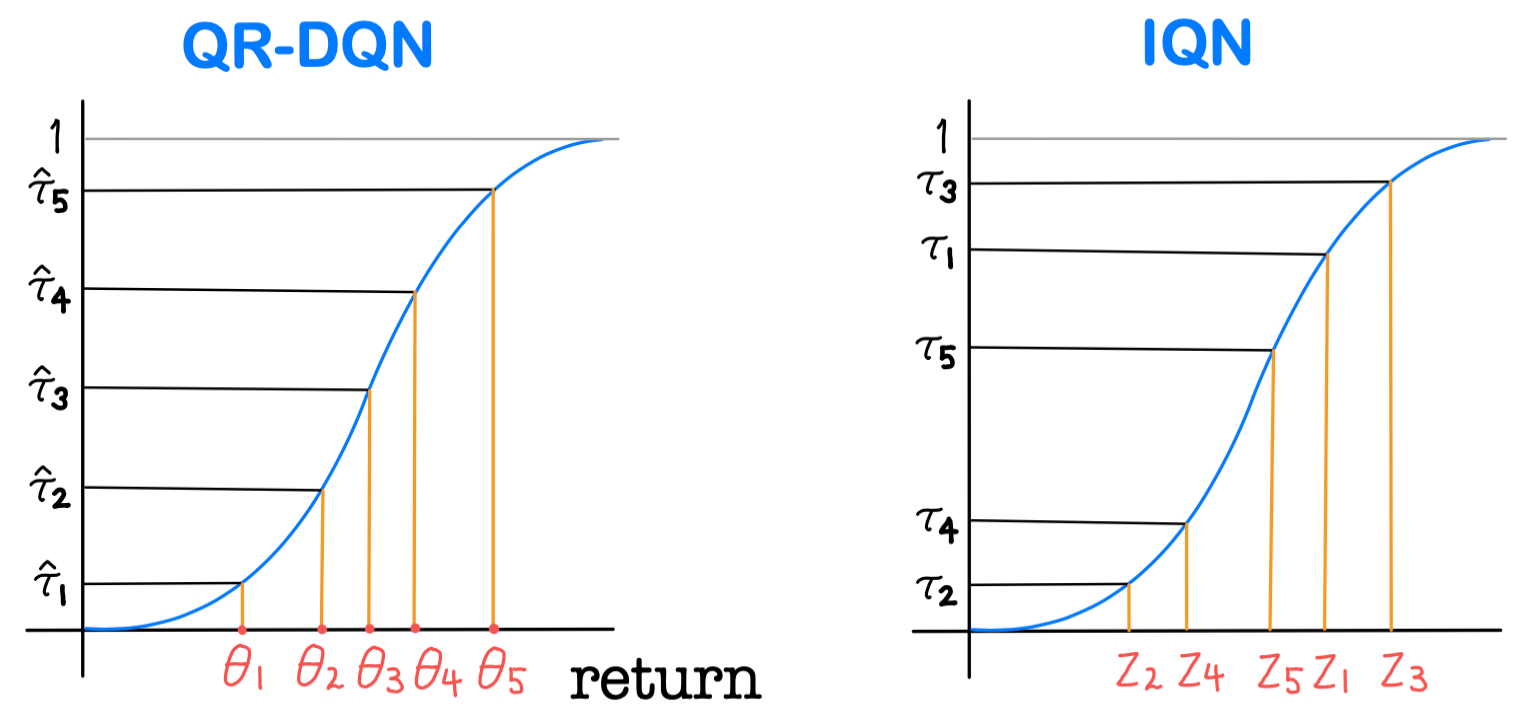

IQN

IQN

Definition

The Implicit Quantile Network (IQN) algorithm is a Distributional Reinforcement Learning algorithm that implicitly estimates the quantiles of a return distribution by learning a function that maps a quantile fraction to the corresponding quantile value.

Architecture

IQN estimates the quantile value for a given state , action , and a quantile fraction . The output is the estimated quantile value corresponding to that fraction .

The input state processed by the encoder layers of neural network to produce a state embedding vector .

The quantile fraction is embedded into a higher-dimensional vector using a set of basis functions, where whose dimension is the same as the one of the state-feature . The two embeddings: state embedding and quantile-embedding, are combined using Hadamard Product .

The combined embedding is fed into the further layers to predict the quantile value

In summary, the quantile value for a given state is estimated by

By sampling different quantile fractions , IQN can approximate the entire return distribution. The expectation of the return can be approximated by averaging the estimated quantile values over the sampled quantile fractions where are sampled quantile fractions.

Loss Function

IQN uses a quantile Huber loss function, similar to QR-DQN. Given a transition , the loss is defined as Where:

- and are quantile fractions for the current state-action pair and next state, respectively.

- and .

- where is Huber Loss.

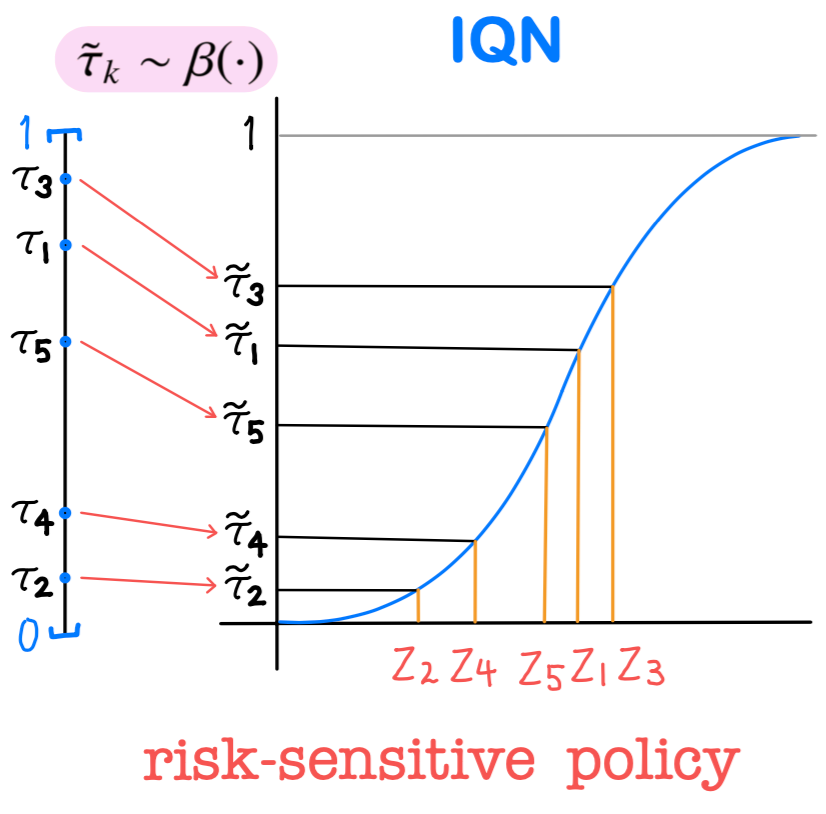

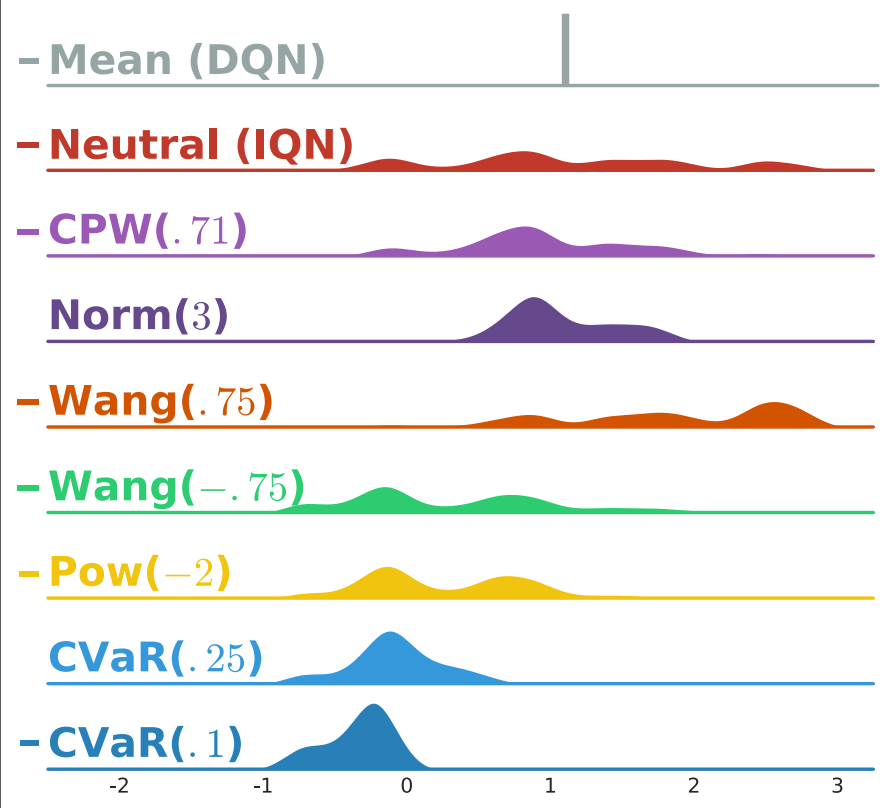

Risk-Sensitive Policy

The IQN has information about the entire distribution by estimating the distribution of returns. This property makes it suitable for implementing risk-sensitive policies, allowing for decision-making considering risk. Risk-sensitive policies focus on not just the expected return, but the variability or uncertainty in the returns. IQN makes it feasible by providing estimated quantiles of the return distribution.

The function is used to define a distortion risk measure that focuses on specific parts of the return distribution. By applying to the quantile fraction , we can re-wright each outcome or change the sampling distribution.

These are examples of distortion measures:

- Cumulative Probability Weighting Parametrization (CPW):

- Wang: where is the CDF for standard normal distribution.

- Power Formula:

- Conditional Value at Risk (CVaR):

Algorithm

Link to original

- Initialize behavior quantile network and target quantile network with random weights , the sample sizes , and a distortion measure .

- Repeat for each episode:

- Initialize sequence .

- Repeat for each step of an episode until terminal, :

- Sample quantile fractions under the distortion measure , | and select a greedy action .

- Take the action and observe a reward and a next state .

- Store transition in the replay buffer .

- Sample a random transition from .

- Sample quantile fractions for the next state and select a greedy action .

- Compute the target quantile values .

- Perform Gradient Descent on the loss .

- Update the target network parameter every steps.

- Update .

/../../Files/Pasted-image-20241212134330.png)