Definition

The Quantile Regression DQN (QR-DQN) algorithm is a distributional reinforcement learning algorithm that approximates the distribution of the random return using quantile regression.

Architecture

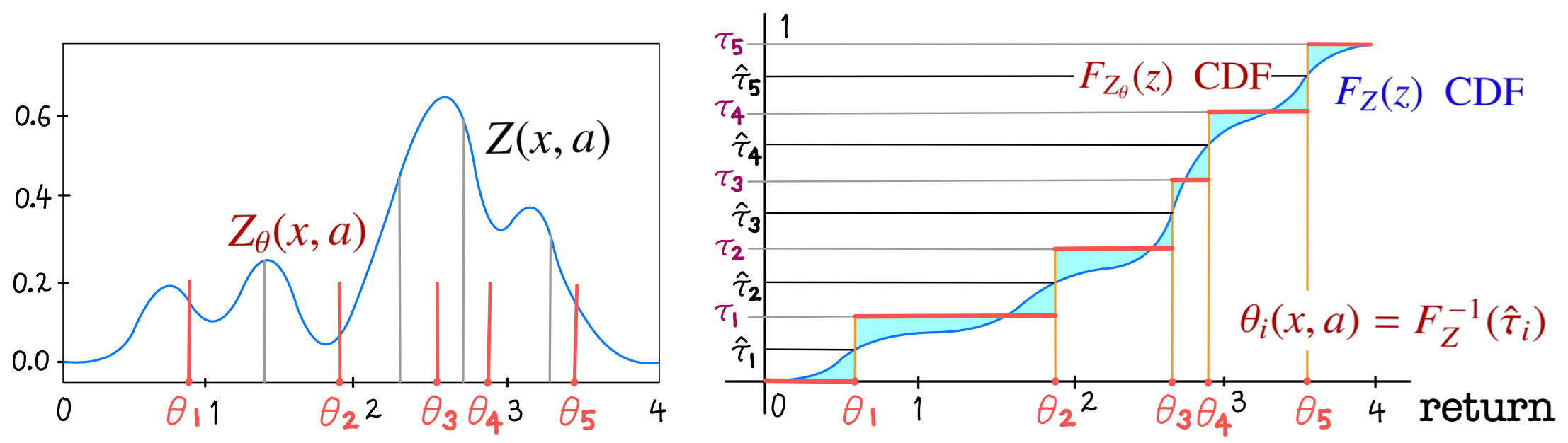

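QR-DQN estimates a set of $N$ quantiles of the return distribution at the fractions $\hat{\tau}_i = \frac{\tau_{i-1} + \tau_i}{2}$ with $\tau_i = i/N$, where $\hat{\tau}_i$ represents the midpoint of the $i$-th quantile interval $[\tau_{i-1}, \tau_i]$. This can be seen as adjusting the locations of the supports of a uniform probability mass to approximate the desired quantile distribution.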

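The quantile distribution with uniform probability $1/N$ on each of its $N$ supports is constructed as $Z_\theta(s, a) = \frac{1}{N} \sum_{i=1}^{N} \delta_{\theta_i(s, a)}$, where $\delta_z$ is a Dirac delta function at $z \in \mathbb{R}$, and $\theta_i(s, a)$ are the outputs of the network, representing the estimated quantile values. Ideally, these values equal the inverse CDF of the return distribution evaluated at the quantile midpoints: $\theta_i(s, a) = F_Z^{-1}(\hat{\tau}_i)$.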

Using these quantile values as the support minimizes the 1-Wasserstein distance between the true return distribution and its approximation by a uniform quantile distribution.
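The construction above can be checked numerically. The following is a minimal sketch, assuming a standard normal as a stand-in for the true return distribution; the function names (`quantile_midpoints`, `quantile_support`, `w1_distance`) are hypothetical, and the 1-Wasserstein distance is only approximated with a midpoint Riemann sum over the inverse CDFs.

```python
from statistics import NormalDist

def quantile_midpoints(n):
    """tau_hat_i = (tau_{i-1} + tau_i) / 2 with tau_i = i / n, for i = 1..n."""
    return [(2 * i - 1) / (2 * n) for i in range(1, n + 1)]

def quantile_support(inv_cdf, n):
    """theta_i = F^{-1}(tau_hat_i): locations of the n Dirac masses."""
    return [inv_cdf(t) for t in quantile_midpoints(n)]

def w1_distance(inv_cdf, support, grid=200):
    """Approximate W1 = integral over (0, 1) of |F^{-1}(w) - F_theta^{-1}(w)| dw.

    F_theta^{-1} is piecewise constant: it equals support[i] on the i-th
    quantile interval. Each interval is sampled at `grid` midpoints.
    """
    n = len(support)
    total = 0.0
    for i, theta in enumerate(support):
        for k in range(grid):
            w = (i + (k + 0.5) / grid) / n
            total += abs(inv_cdf(w) - theta)
    return total / (n * grid)

# Assumption: a standard normal plays the role of the true return distribution.
dist = NormalDist(mu=0.0, sigma=1.0)
theta = quantile_support(dist.inv_cdf, 4)
shifted = [t + 0.1 for t in theta]  # any other support for comparison

print(quantile_midpoints(4))  # [0.125, 0.375, 0.625, 0.875]
print(w1_distance(dist.inv_cdf, theta) < w1_distance(dist.inv_cdf, shifted))  # True
```

Shifting the support away from the midpoint quantiles increases the approximated distance, which is the property the quantile parameterization relies on.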

Quantile Regression

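Given a data set $\{y_1, \dots, y_n\}$, a $\tau$-quantile minimizes the loss $\mathcal{L}_\tau(\theta) = \sum_{k=1}^{n} \rho_\tau(y_k - \theta)$, where $\rho_\tau(u) = u\,(\tau - \mathbb{1}\{u < 0\})$ is the quantile loss function.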
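As a small illustration of this property, the sketch below evaluates the quantile loss on a toy data set and searches for the minimizer among the data points themselves (the loss is piecewise linear and convex, so a minimizer can always be found there). The data values and function names are made up for the example.

```python
def quantile_loss(u, tau):
    """rho_tau(u) = u * (tau - 1[u < 0])."""
    return u * (tau - (1.0 if u < 0 else 0.0))

def total_quantile_loss(data, theta, tau):
    """Sum of quantile losses of the residuals y - theta."""
    return sum(quantile_loss(y - theta, tau) for y in data)

data = [1.0, 2.0, 3.0, 4.0, 10.0]  # toy data, chosen for illustration
tau = 0.5

# For tau = 0.5 the loss is half the absolute error, so the median wins.
best = min(data, key=lambda c: total_quantile_loss(data, c, tau))
print(best)  # 3.0
```

With `tau = 0.9` the same search returns `10.0`, since overestimates are penalized far less than underestimates.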

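The quantile values are estimated by minimizing the quantile Huber loss. Given a transition $(s, a, r, s')$, the loss is defined as

$$L(\theta) = \frac{1}{N} \sum_{i=1}^{N} \sum_{j=1}^{N} \rho^{\kappa}_{\hat{\tau}_i}\left(\mathcal{T}\theta_j - \theta_i(s, a)\right)$$

where:

- $\mathcal{T}\theta_j = r + \gamma\, \theta^-_j(s', a^*)$ and $a^* = \arg\max_{a'} \frac{1}{N} \sum_{j=1}^{N} \theta^-_j(s', a')$, with $\theta^-_j$ denoting the $j$-th quantile output of the target network.

- $\rho^{\kappa}_{\tau}(u) = \left|\tau - \mathbb{1}\{u < 0\}\right| \mathcal{L}_\kappa(u)$, where $\mathcal{L}_\kappa$ is the Huber loss with threshold $\kappa$.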
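The quantile Huber loss for a single transition can be sketched in plain Python as follows. This is an illustration under stated assumptions rather than a reference implementation: the asymmetric weight multiplies the Huber loss directly (some implementations additionally divide by $\kappa$, which makes no difference for the default `kappa=1.0` used here), the loss is summed over predicted quantiles and averaged over target quantiles, and all function names and numbers are hypothetical.

```python
def huber(u, kappa=1.0):
    """Huber loss: quadratic for |u| <= kappa, linear beyond."""
    if abs(u) <= kappa:
        return 0.5 * u * u
    return kappa * (abs(u) - 0.5 * kappa)

def quantile_huber(u, tau, kappa=1.0):
    """|tau - 1[u < 0]| * Huber(u): asymmetric weighting of the Huber loss."""
    indicator = 1.0 if u < 0 else 0.0
    return abs(tau - indicator) * huber(u, kappa)

def qr_loss(theta, target, kappa=1.0):
    """Sum over predicted quantiles i of the mean over target quantiles j."""
    n = len(theta)
    taus = [(2 * i + 1) / (2 * n) for i in range(n)]  # quantile midpoints
    loss = 0.0
    for theta_i, tau_i in zip(theta, taus):
        pairwise = sum(quantile_huber(t_j - theta_i, tau_i, kappa) for t_j in target)
        loss += pairwise / len(target)
    return loss

# Toy numbers: reward 1.0, discount 0.5, next-state quantiles [0.0, 2.0].
target = [1.0 + 0.5 * z for z in [0.0, 2.0]]  # [1.0, 2.0]
theta = [0.5, 1.5]                            # current quantile estimates
print(qr_loss(theta, target))  # 0.203125
```

In a real agent the targets are treated as constants, and the gradient flows only through the predicted quantiles `theta`.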

Algorithm

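- Initialize the behavior network $Z_\theta$ and the target network $Z_{\theta^-}$ with random weights $\theta^- = \theta$, and the sample size $N$ (the number of quantiles). Below, $\theta_j(s, a)$ and $\theta^-_j(s, a)$ denote the $j$-th quantile outputs of the behavior and target networks.

- Repeat for each episode:

    - Initialize the sequence with the starting state $s_0$.

    - Repeat for each step of an episode until terminal, $t = 0, 1, \dots, T - 1$:

        - With probability $\epsilon$, select a random action $a_t$; otherwise select $a_t = \arg\max_{a} Q(s_t, a)$, where $Q(s, a) = \frac{1}{N} \sum_{j=1}^{N} \theta_j(s, a)$.

        - Take the action $a_t$ and observe a reward $r_t$ and a next state $s_{t+1}$.

        - Store the transition $(s_t, a_t, r_t, s_{t+1})$ in the replay buffer $\mathcal{D}$.

        - Sample a random transition $(s, a, r, s')$ from $\mathcal{D}$.

        - Select a greedy action $a^* = \arg\max_{a'} \frac{1}{N} \sum_{j=1}^{N} \theta^-_j(s', a')$.

        - Compute the target quantile values $\mathcal{T}\theta_j = r + \gamma\, \theta^-_j(s', a^*)$ for $j = 1, \dots, N$.

        - Perform gradient descent on the quantile Huber loss $\frac{1}{N} \sum_{i=1}^{N} \sum_{j=1}^{N} \rho^{\kappa}_{\hat{\tau}_i}\left(\mathcal{T}\theta_j - \theta_i(s, a)\right)$ with respect to $\theta$.

        - Update the target network parameter $\theta^- \leftarrow \theta$ every $C$ steps.

        - Update the current state to $s_{t+1}$.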
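The action-selection and target-computation steps of the loop above can be sketched as follows. This is a hedged illustration, not a full agent: network outputs are represented as plain nested lists (one list of $N$ quantile values per action), the helper names are hypothetical, and terminal-state handling, the replay buffer, and the gradient step are left out.

```python
import random

def q_values(quantiles):
    """Q(s, a) as the mean of the N quantile values of each action."""
    return [sum(q) / len(q) for q in quantiles]

def greedy_action(quantiles):
    """Index of the action with the largest mean quantile value."""
    q = q_values(quantiles)
    return max(range(len(q)), key=q.__getitem__)

def epsilon_greedy(quantiles, epsilon, rng=random):
    """Random action with probability epsilon, greedy action otherwise."""
    if rng.random() < epsilon:
        return rng.randrange(len(quantiles))
    return greedy_action(quantiles)

def target_quantiles(reward, gamma, next_quantiles):
    """r + gamma * theta_j(s', a*), with a* greedy under the same outputs.

    `next_quantiles` is assumed to come from the target network at s'.
    """
    a_star = greedy_action(next_quantiles)
    return [reward + gamma * z for z in next_quantiles[a_star]]

# Hypothetical target-network outputs at s': 2 actions, N = 2 quantiles each.
next_quantiles = [[0.0, 2.0], [1.0, 4.0]]
print(epsilon_greedy(next_quantiles, epsilon=0.0))  # 1
print(target_quantiles(1.0, 0.5, next_quantiles))   # [1.5, 3.0]
```

The resulting targets would then be plugged into the quantile Huber loss against the behavior network's quantiles for the sampled state-action pair.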