Definition

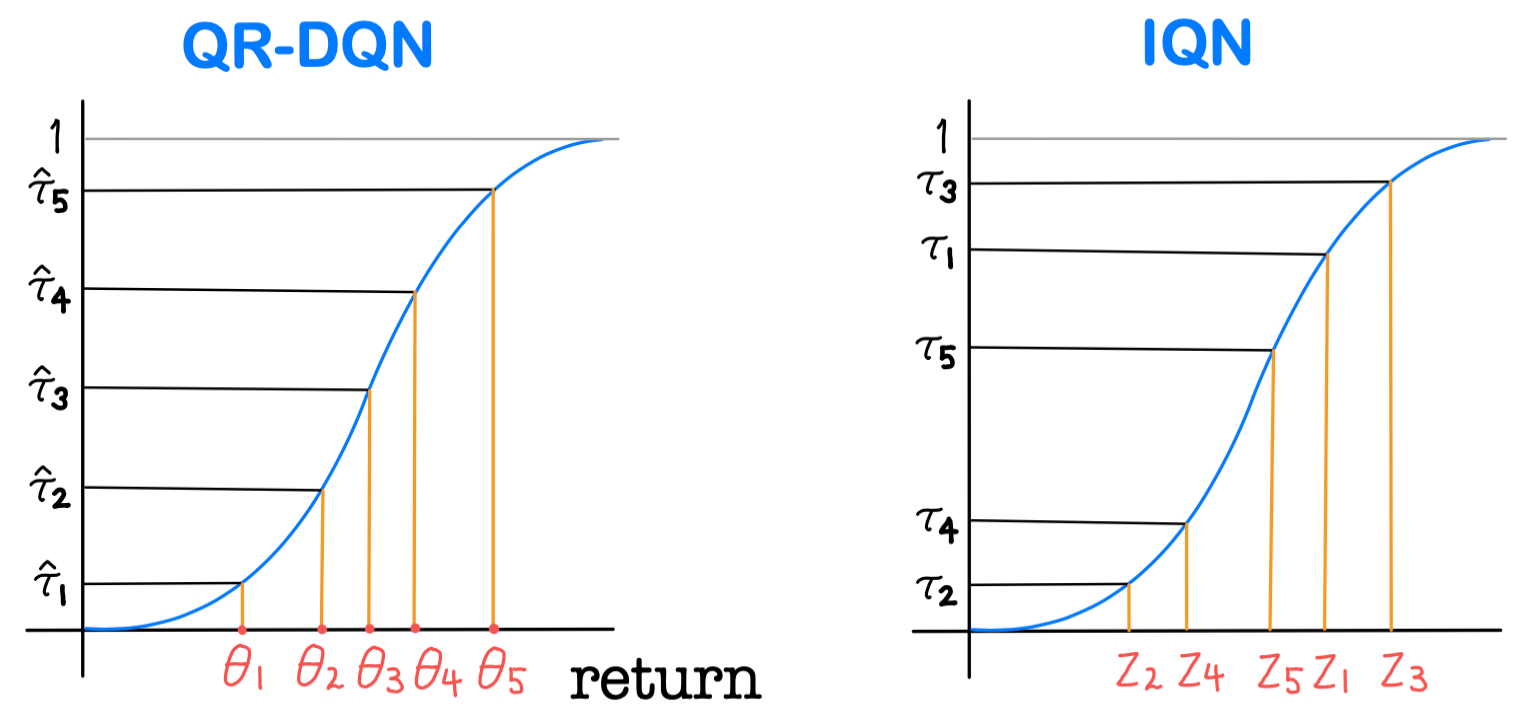

The Implicit Quantile Network (IQN) algorithm is a Distributional Reinforcement Learning algorithm that implicitly estimates the quantiles of a return distribution by learning a function that maps a quantile fraction to the corresponding quantile value.

Architecture

IQN estimates the quantile value for a given state $s$, action $a$, and quantile fraction $\tau \in [0, 1]$. The output is the estimated quantile value $Z_\tau(s, a)$ corresponding to that fraction $\tau$.

The input state $s$ is processed by the encoder layers of the neural network to produce a state embedding vector $\psi(s)$.

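The quantile fraction $\tau$ is embedded into a higher-dimensional vector $\phi(\tau)$ using a set of basis functions. In the original IQN paper these are cosine basis functions, $\phi_j(\tau) = \mathrm{ReLU}\left(\sum_{i=0}^{n-1} \cos(\pi i \tau) w_{ij} + b_j\right)$, and the dimension of $\phi(\tau)$ is the same as that of the state embedding $\psi(s)$. The two embeddings, the state embedding and the quantile embedding, are combined using the Hadamard (element-wise) product $\psi(s) \odot \phi(\tau)$.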
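The embedding step can be sketched in plain Python. This is a minimal illustration assuming the cosine basis described in the IQN paper; the sizes `n` and `d`, the random weights, and the hand-written vector `psi` standing in for the encoder output are all hypothetical.

```python
import math
import random


def cosine_features(tau, n):
    """Cosine basis cos(pi * i * tau) for i = 0, ..., n - 1."""
    return [math.cos(math.pi * i * tau) for i in range(n)]


def embed_tau(tau, weights, biases):
    """phi_j(tau) = ReLU(sum_i cos(pi * i * tau) * w[i][j] + b[j])."""
    n = len(weights)
    feats = cosine_features(tau, n)
    return [
        max(0.0, sum(feats[i] * weights[i][j] for i in range(n)) + biases[j])
        for j in range(len(biases))
    ]


def hadamard(u, v):
    """Element-wise product; both vectors must have the same dimension."""
    if len(u) != len(v):
        raise ValueError("embeddings must have the same dimension")
    return [a * b for a, b in zip(u, v)]


rng = random.Random(0)
n, d = 8, 4  # hypothetical: number of cosine features, embedding dimension
weights = [[rng.uniform(-1.0, 1.0) for _ in range(d)] for _ in range(n)]
biases = [0.0] * d

psi = [0.5, -1.0, 2.0, 0.0]  # stand-in for the state embedding psi(s)
phi = embed_tau(0.3, weights, biases)  # quantile embedding phi(tau)
combined = hadamard(psi, phi)  # psi(s) ⊙ phi(tau)
```

Because the product is element-wise, the quantile embedding acts as a per-feature gate on the state embedding, which is why the two vectors must share a dimension.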

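The combined embedding is fed into further layers $f$ to predict the quantile value $Z_\tau(s, a)$.

In summary, the quantile value for a given state $s$ and action $a$ is estimated by

$$Z_\tau(s, a) \approx f(\psi(s) \odot \phi(\tau))_a$$

where $\psi$ is the state encoder, $\phi$ is the quantile embedding, and $f$ denotes the subsequent layers, with one output per action.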

By sampling different quantile fractions $\tau \sim U([0, 1])$, IQN can approximate the entire return distribution. The expectation of the return can be approximated by averaging the estimated quantile values over the sampled quantile fractions,

$$Q(s, a) \approx \frac{1}{K} \sum_{k=1}^{K} Z_{\tau_k}(s, a)$$

where $\tau_1, \dots, \tau_K$ are the sampled quantile fractions.
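The averaging step can be checked on a distribution whose quantile function is known in closed form. The sketch below uses an exponential distribution as a stand-in for a learned $Z_\tau(s, a)$; the function names and the rate parameter are illustrative assumptions, not part of IQN itself.

```python
import math
import random


def exponential_quantile(tau, rate=2.0):
    """Quantile function of Exponential(rate); stands in for Z_tau(s, a)."""
    return -math.log(1.0 - tau) / rate


def expected_return(quantile_fn, k, rng):
    """Average the quantile values at k uniformly sampled fractions."""
    taus = [rng.random() for _ in range(k)]  # tau_k ~ U([0, 1))
    return sum(quantile_fn(t) for t in taus) / k


rng = random.Random(0)
estimate = expected_return(exponential_quantile, k=50_000, rng=rng)
true_mean = 1.0 / 2.0  # mean of Exponential(rate=2)
print(f"estimate={estimate:.4f}, true mean={true_mean:.4f}")
```

The estimate approaches the true mean as $K$ grows, which is the Monte Carlo argument behind averaging quantile values to recover $Q(s, a)$.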

Loss Function

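IQN uses a quantile Huber loss function, similar to QR-DQN. Given a transition $(s, a, r, s')$, the loss is defined as

$$L = \frac{1}{N'} \sum_{i=1}^{N} \sum_{j=1}^{N'} \rho^{\kappa}_{\tau_i}\left(\delta^{\tau_i, \tau'_j}\right)$$

where:

- $\tau_i$ ($i = 1, \dots, N$) and $\tau'_j$ ($j = 1, \dots, N'$) are quantile fractions for the current state-action pair and next state, respectively.

- $\delta^{\tau_i, \tau'_j} = r + \gamma Z_{\tau'_j}(s', a^*) - Z_{\tau_i}(s, a)$ and $a^* = \arg\max_{a'} Q(s', a')$.

- $\rho^{\kappa}_{\tau}(\delta) = \left|\tau - \mathbb{1}\{\delta < 0\}\right| \frac{L_\kappa(\delta)}{\kappa}$, where $L_\kappa$ is the Huber loss with threshold $\kappa$: $L_\kappa(\delta) = \frac{1}{2}\delta^2$ if $|\delta| \le \kappa$, and $\kappa\left(|\delta| - \frac{1}{2}\kappa\right)$ otherwise.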
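The loss above can be written out directly. The following is a minimal sketch in plain Python for a single transition; it assumes the current and target quantile values have already been computed by the networks, and the function names are illustrative.

```python
def huber(delta, kappa=1.0):
    """Huber loss: quadratic for |delta| <= kappa, linear beyond."""
    a = abs(delta)
    if a <= kappa:
        return 0.5 * delta * delta
    return kappa * (a - 0.5 * kappa)


def quantile_huber(delta, tau, kappa=1.0):
    """rho^kappa_tau(delta) = |tau - 1{delta < 0}| * L_kappa(delta) / kappa."""
    indicator = 1.0 if delta < 0 else 0.0
    return abs(tau - indicator) * huber(delta, kappa) / kappa


def iqn_loss(current_quantiles, taus, target_quantiles, kappa=1.0):
    """Sum over i, mean over j of the quantile Huber loss.

    current_quantiles[i] is Z_{tau_i}(s, a) for taus[i];
    target_quantiles[j] is r + gamma * Z_{tau'_j}(s', a*).
    """
    n_prime = len(target_quantiles)
    total = 0.0
    for z, tau in zip(current_quantiles, taus):
        for y in target_quantiles:
            total += quantile_huber(y - z, tau, kappa)  # delta = target - current
    return total / n_prime


# One current quantile at tau = 0.5 against two targets on either side of it.
loss = iqn_loss(current_quantiles=[0.0], taus=[0.5], target_quantiles=[1.0, -1.0])
```

The asymmetric weight $|\tau - \mathbb{1}\{\delta < 0\}|$ is what makes each output converge to the $\tau$-quantile rather than the mean: for $\tau = 0.9$, underestimates ($\delta > 0$) are penalized nine times more heavily than overestimates.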

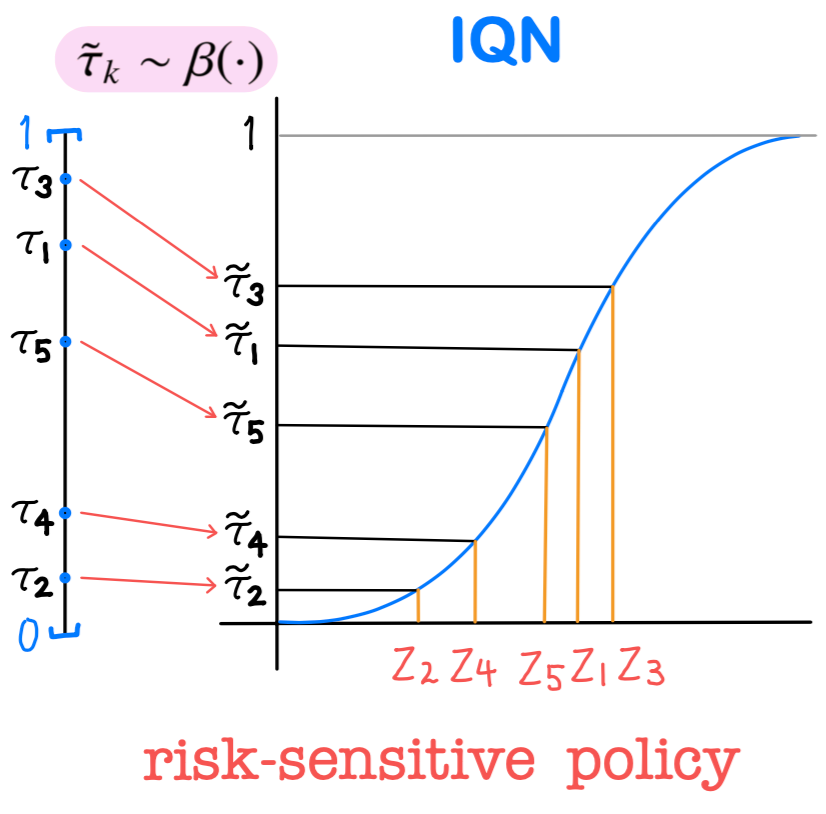

Risk-Sensitive Policy

Because IQN estimates the return distribution rather than only its mean, it has information about the entire distribution. This makes it suitable for risk-sensitive policies, which consider not just the expected return but also the variability or uncertainty of the returns. The estimated quantiles supply the information such policies need.

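A distortion function $\beta: [0, 1] \to [0, 1]$ is used to define a distortion risk measure that focuses on specific parts of the return distribution. By applying $\beta$ to the quantile fraction $\tau$, we can re-weight each outcome or, equivalently, change the distribution from which quantile fractions are sampled. The resulting risk-sensitive greedy policy is $\pi_\beta(s) = \arg\max_a \frac{1}{K} \sum_{k=1}^{K} Z_{\beta(\tau_k)}(s, a)$ with $\tau_k \sim U([0, 1])$.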

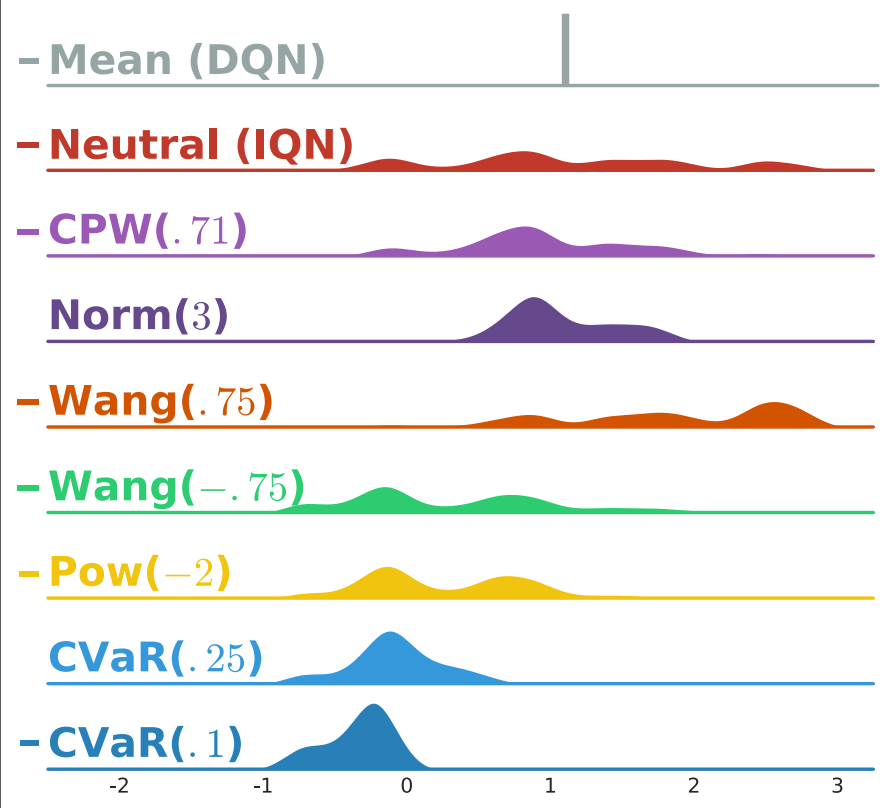

These are examples of distortion measures:

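- Cumulative Probability Weighting Parametrization (CPW): $\mathrm{CPW}(\eta, \tau) = \frac{\tau^{\eta}}{\left(\tau^{\eta} + (1 - \tau)^{\eta}\right)^{1/\eta}}$

- Wang: $\mathrm{Wang}(\eta, \tau) = \Phi\left(\Phi^{-1}(\tau) + \eta\right)$, where $\Phi$ is the CDF of the standard normal distribution.

- Power Formula: $\mathrm{Pow}(\eta, \tau) = \tau^{1/(1 + |\eta|)}$ if $\eta \ge 0$, and $1 - (1 - \tau)^{1/(1 + |\eta|)}$ otherwise.

- Conditional Value at Risk (CVaR): $\mathrm{CVaR}(\eta, \tau) = \eta \tau$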
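The four distortion measures listed above are short enough to write out. This sketch uses only the Python standard library (`statistics.NormalDist` for $\Phi$ and $\Phi^{-1}$); the function names and the default $\eta = 0.71$ for CPW are illustrative choices, and the formulas follow the forms given in the IQN paper.

```python
from statistics import NormalDist

_STD_NORMAL = NormalDist()  # mean 0, standard deviation 1


def cpw(tau, eta=0.71):
    """Cumulative probability weighting."""
    num = tau ** eta
    return num / (num + (1.0 - tau) ** eta) ** (1.0 / eta)


def wang(tau, eta):
    """Wang transform; eta < 0 shifts mass toward low quantiles (risk-averse).

    NormalDist.inv_cdf requires 0 < tau < 1.
    """
    return _STD_NORMAL.cdf(_STD_NORMAL.inv_cdf(tau) + eta)


def power(tau, eta):
    """Power formula; eta < 0 is risk-averse, eta > 0 is risk-seeking."""
    exponent = 1.0 / (1.0 + abs(eta))
    if eta >= 0:
        return tau ** exponent
    return 1.0 - (1.0 - tau) ** exponent


def cvar(tau, eta):
    """CVaR: restricts fractions to [0, eta], i.e. the worst eta share of outcomes."""
    return eta * tau


for name, value in [
    ("CPW(0.71)", cpw(0.5)),
    ("Wang(-1)", wang(0.5, -1.0)),
    ("Pow(-2)", power(0.5, -2.0)),
    ("CVaR(0.25)", cvar(0.5, 0.25)),
]:
    print(f"{name}: tau=0.5 -> {value:.4f}")
```

Each function maps a uniformly sampled $\tau$ to a distorted fraction, so any of them can be passed wherever the policy expects $\beta$.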

Algorithm

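- Initialize the behavior quantile network $Z_\theta$ and the target quantile network $Z_{\theta^-}$ with random weights $\theta^- = \theta$, the sample sizes $N$, $N'$, and $K$, and a distortion measure $\beta$.

- Repeat for each episode:

    - Initialize the state $s_0$.

    - Repeat for each step of the episode until terminal, $t = 0, 1, \dots, T - 1$:

        - Sample quantile fractions $\tau_k \sim U([0, 1])$, $k = 1, \dots, K$, distort them with the distortion measure $\beta$, and select a greedy action $a_t = \arg\max_a \frac{1}{K} \sum_{k=1}^{K} Z_{\beta(\tau_k)}(s_t, a)$.

        - Take the action $a_t$ and observe a reward $r_t$ and a next state $s_{t+1}$.

        - Store the transition $(s_t, a_t, r_t, s_{t+1})$ in the replay buffer $D$.

        - Sample a random transition $(s, a, r, s')$ from $D$.

        - Sample quantile fractions $\tau'_j \sim U([0, 1])$, $j = 1, \dots, N'$, for the next state $s'$ and select a greedy action $a^* = \arg\max_{a'} \frac{1}{N'} \sum_{j=1}^{N'} Z_{\tau'_j}(s', a')$.

        - Compute the target quantile values $y_j = r + \gamma Z_{\tau'_j}(s', a^*; \theta^-)$.

        - Sample quantile fractions $\tau_i \sim U([0, 1])$, $i = 1, \dots, N$, and perform gradient descent on the loss $L = \frac{1}{N'} \sum_{i=1}^{N} \sum_{j=1}^{N'} \rho^{\kappa}_{\tau_i}\left(y_j - Z_{\tau_i}(s, a; \theta)\right)$ with respect to $\theta$.

        - Update the target network parameters $\theta^- \leftarrow \theta$ every $C$ steps.

        - Update the current state to $s_{t+1}$.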
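Two steps of the procedure above, risk-sensitive action selection and target computation, can be sketched without a neural network. In this illustration `quantile_fn(state, action, tau)` is a hypothetical stand-in for the quantile network, and `toy_quantile_fn` is a made-up example with one safe action and one risky action.

```python
import random


def greedy_action(quantile_fn, state, actions, beta, k, rng):
    """Pick the action with the highest mean quantile value at distorted fractions."""
    taus = [beta(rng.random()) for _ in range(k)]  # beta(tau_k), tau_k ~ U([0, 1))

    def value(action):
        return sum(quantile_fn(state, action, t) for t in taus) / k

    return max(actions, key=value)


def target_quantiles(quantile_fn, reward, next_state, a_star, gamma, n_prime, rng):
    """y_j = r + gamma * Z_{tau'_j}(s', a*) for n_prime uniformly sampled fractions."""
    taus = [rng.random() for _ in range(n_prime)]
    return [reward + gamma * quantile_fn(next_state, a_star, t) for t in taus]


def toy_quantile_fn(state, action, tau):
    """Action 0: deterministic return 1.0. Action 1: return ~ Uniform(-2, 5)."""
    return 1.0 if action == 0 else -2.0 + 7.0 * tau


rng = random.Random(0)
actions = [0, 1]

# Risk-neutral: identity distortion, so the higher-mean risky action wins.
neutral = greedy_action(toy_quantile_fn, None, actions, lambda t: t, 1000, rng)

# Risk-averse: CVaR with eta = 0.25 looks only at the worst quarter of outcomes.
averse = greedy_action(toy_quantile_fn, None, actions, lambda t: 0.25 * t, 1000, rng)

targets = target_quantiles(toy_quantile_fn, 1.0, None, averse, 0.99, 8, rng)
print(neutral, averse, targets[:2])
```

The same quantile function yields different greedy actions under the two distortions. This is the sense in which the distortion measure changes the policy without retraining the value estimate.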