## Definition

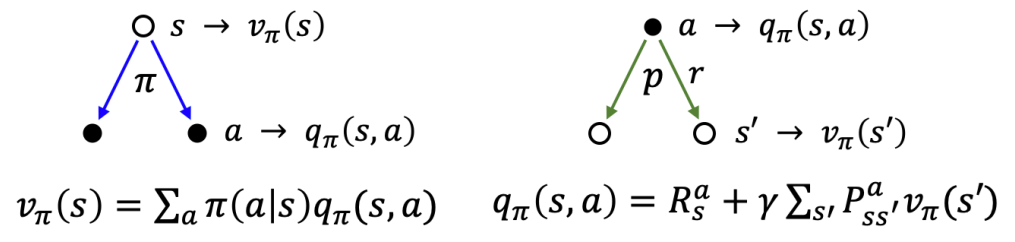

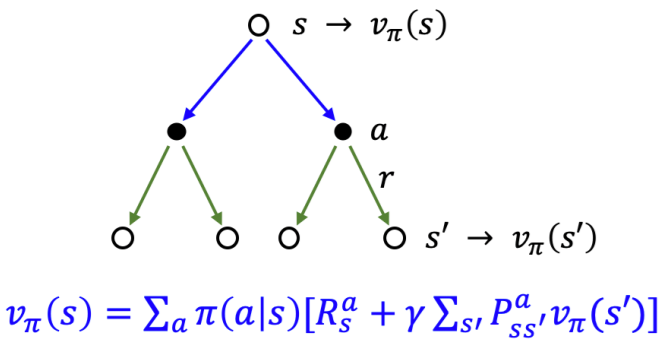

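The Bellman expectation equation is a recursive equation that decomposes the State-Value Function $v_{\pi}(s)$ into two parts: the immediate reward $R_{s}^{a}$ and the discounted state-value of the successor state, $\gamma v_{\pi}(s')$.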

$$\begin{aligned}

v_{\pi}(s)

&= E_{\pi}[G_{t} | S_{t}=s]\\

&= \sum\limits_{a} P(A_{t}=a|S_{t}=s)\cdot E_{\pi}[G_{t} | S_{t}=s, A_{t}=a]\quad \left( = \sum\limits_{a} \pi(a|s) q_{\pi}(s, a) \right)\\

&= \sum\limits_{a} \pi(a|s) \cdot E_{\pi}[R_{t+1} + \gamma G_{t+1} | S_{t}=s, A_{t}=a]\\

&= \sum\limits_{a} \pi(a|s) \cdot \sum\limits_{s', r} E_{\pi}[R_{t+1} + \gamma G_{t+1} | S_{t}=s, A_{t}=a, S_{t+1}=s', R_{t+1}=r]\cdot P(S_{t+1}=s', R_{t+1}=r | S_{t}=s, A_{t}=a)\\

&= \sum\limits_{a} \pi(a|s) \cdot \sum\limits_{s', r} p(s', r | s, a)[r + \gamma E_{\pi}[G_{t+1} | S_{t+1}=s']]\quad \text{(by Markov property)}\\

&= \sum\limits_{a} \pi(a|s) \cdot \sum\limits_{s', r} p(s', r | s, a)[r + \gamma v_{\pi}(s')]\quad \left( =\sum\limits_{a} \pi(a|s)[R_{s}^{a} + \gamma \sum\limits_{s'}P_{ss'}^{a} v_{\pi}(s')] \right)\\

&= E_{\pi}[R_{t+1} + \gamma v_{\pi}(S_{t+1}) | S_{t}=s]

\end{aligned}$$
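
As a numerical sanity check of the derivation above, the following is a minimal sketch that solves the Bellman expectation equation in matrix form, $v_{\pi} = R^{\pi} + \gamma P^{\pi} v_{\pi}$, and then confirms that $v_{\pi}(s) = \sum_{a} \pi(a|s)[R_{s}^{a} + \gamma \sum_{s'} P_{ss'}^{a} v_{\pi}(s')]$ holds. The 2-state, 2-action MDP, the policy, and all variable names (`P`, `R`, `pi`, `R_pi`, `P_pi`) are assumptions made up for illustration; they do not come from the note.

```python
import numpy as np

# Hypothetical 2-state, 2-action MDP; every number here is invented for illustration.
gamma = 0.9

# P[a, s, t] = P(S_{t+1}=t | S_t=s, A_t=a), i.e. P_{ss'}^{a}
P = np.array([
    [[0.8, 0.2],
     [0.1, 0.9]],
    [[0.5, 0.5],
     [0.6, 0.4]],
])

# R[s, a] = expected immediate reward R_s^a
R = np.array([
    [1.0, 0.0],
    [2.0, -1.0],
])

# pi[s, a] = pi(a | s); each row sums to 1
pi = np.array([
    [0.5, 0.5],
    [0.3, 0.7],
])

# Average rewards and transitions over the policy.
R_pi = (pi * R).sum(axis=1)            # R_pi[s]    = sum_a pi(a|s) R_s^a
P_pi = np.einsum("sa,ast->st", pi, P)  # P_pi[s, t] = sum_a pi(a|s) P_{st}^a

# Solve the linear system (I - gamma * P_pi) v = R_pi for v_pi.
v = np.linalg.solve(np.eye(2) - gamma * P_pi, R_pi)

# Right-hand side of the Bellman expectation equation, built from q_pi(s, a).
q = R + gamma * np.einsum("ast,t->sa", P, v)  # q[s, a] = R_s^a + gamma * sum_t P_{st}^a v[t]
rhs = (pi * q).sum(axis=1)                    # sum_a pi(a|s) q(s, a)

print("v_pi:", v)
print("Bellman equation holds:", np.allclose(v, rhs))
```

The direct solve is only practical for small state spaces; it is used here because it makes the fixed-point property easy to verify.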

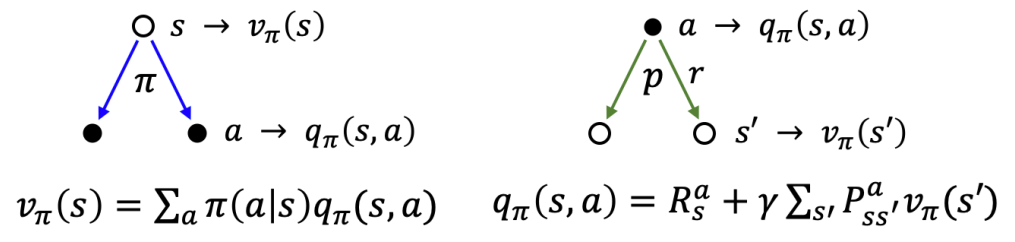

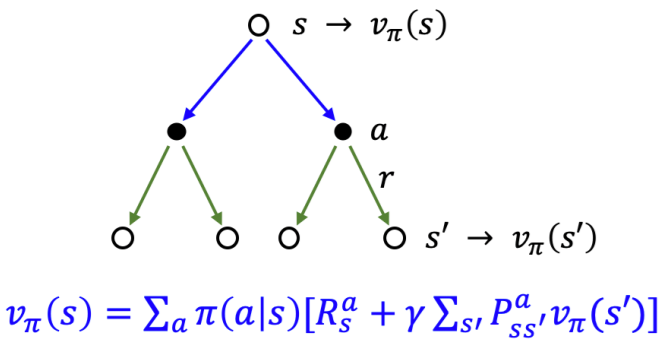

## Bellman Equation for [[Action-Value Function]]

![[Pasted image 20241207130544.png|450]]

$$\begin{aligned}

q_{\pi}(s, a)

&= E_\pi[G_{t} | S_{t}=s, A_{t}=a]\\

&= \sum\limits_{s', r} p(s', r | s, a)[r + \gamma \underbrace{\sum\limits_{a'} \pi(a'|s') q_{\pi}(s', a')}_{v_{\pi}(s')}]\quad \left( = R_{s}^{a} + \gamma \sum\limits_{s'} P_{ss'}^{a} \underbrace{\sum\limits_{a'} \pi(a'|s') q_{\pi}(s', a')}_{v_{\pi}(s')} \right)\\

&= E_{\pi}[R_{t+1} + \gamma q_{\pi}(S_{t+1}, A_{t+1}) | S_{t}=s, A_{t}=a]

\end{aligned}$$
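
The recursion for $q_{\pi}$ can also be read as an update rule. The sketch below repeatedly applies the right-hand side $R_{s}^{a} + \gamma \sum_{s'} P_{ss'}^{a} \sum_{a'} \pi(a'|s') q_{\pi}(s', a')$ until the values stop changing, which is one way (not the only one) to evaluate a fixed policy. The MDP, the policy, and the helper name `bellman_q_backup` are hypothetical and chosen only for illustration.

```python
import numpy as np

# Hypothetical 2-state, 2-action MDP; every number here is invented for illustration.
gamma = 0.9

# P[a, s, t] = P(S_{t+1}=t | S_t=s, A_t=a), i.e. P_{ss'}^{a}
P = np.array([
    [[0.8, 0.2],
     [0.1, 0.9]],
    [[0.5, 0.5],
     [0.6, 0.4]],
])

# R[s, a] = expected immediate reward R_s^a
R = np.array([
    [1.0, 0.0],
    [2.0, -1.0],
])

# pi[s, a] = pi(a | s); each row sums to 1
pi = np.array([
    [0.5, 0.5],
    [0.3, 0.7],
])


def bellman_q_backup(q):
    """Apply the right-hand side of the Bellman expectation equation for q_pi once."""
    v_next = (pi * q).sum(axis=1)  # v_pi(s') = sum_a' pi(a'|s') q(s', a')
    return R + gamma * np.einsum("ast,t->sa", P, v_next)


# Fixed-point iteration: the backup is a gamma-contraction, so it converges for gamma < 1.
q = np.zeros_like(R)
for _ in range(1000):
    q_new = bellman_q_backup(q)
    converged = np.max(np.abs(q_new - q)) < 1e-12
    q = q_new
    if converged:
        break

print("q_pi:\n", q)
print("fixed point reached:", np.allclose(q, bellman_q_backup(q)))
```

Averaging the converged `q` over the policy, `(pi * q).sum(axis=1)`, recovers $v_{\pi}(s)$, matching the underbraced term in the derivation.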