Definition

Monte Carlo method (MC) is tabular updating and model-free. MC policy iteration adapts GPI based on episode-by-episode (sample multi-step backup) of PE estimating and -greedy PI.

MC Prediction (Policy Evaluation)

Learn from entire episodes of real experience under policy .

Empirical Mean

MC policy evaluation uses empirical mean return instead of expected return. where is increment total return and is incremental count.

The mean return converges to true action-value function as incremental count increases.

Incremental MC Update

The incremental mean can be expressed in a recursive manner:

Thus, for each state-action pair with return , after one episode, the mean return can be calculated as:

Constant- MC Policy Evaluation

It substitutes the weights, used for incremental MC, with constant step-size .

MC Control (-Greedy Policy Improvement)

Choose the greedy action with probability and a random action with probability .

For any -greedy policy , -greed policy with respect to is always improved i.e. .

-greedy policy is GLIE if e.g.

Algorithm

- Initialize arbitrarily, terminal , and arbitrary -soft policy (non-empty probability).

- Repeat for each episode:

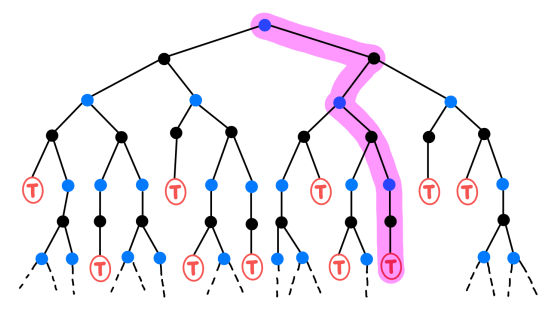

- Generate an episode following the policy : .

- Repeat for each step of an episode, (MC Prediction):

- If is a first visit (), then where can be either incremental MC or constant- MC.

- For each visited state in the episode (MC Control):

- If policy is stable, then stop and return .

Facts

MC is less harmed by violations of Markov Property because it updates value estimates based on true Return (not based on value estimates of successor states).