Definition

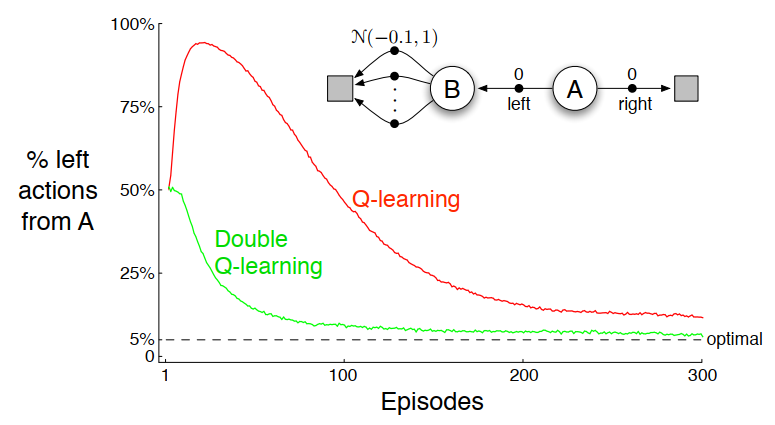

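Q-learning updates its estimates toward the action with the highest estimated value. Because the same noisy estimates are used both to pick that action and to evaluate it, Q-learning tends to overestimate action values (maximization bias), especially in the early stages of learning, which slows convergence to the optimal action-value function $Q^*$.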

Double Q-learning uses two Q-value functions to overcome this problem: one for action selection and another for value evaluation.
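The effect can be seen without any reinforcement learning machinery. The sketch below is a minimal illustration and is not taken from the original text: it assumes ten actions whose true values are all 0, estimates each value from a few noisy samples, and compares the single estimator (select and evaluate with the same estimates) with the double estimator (select with one set of estimates, evaluate with an independent set). The function name `estimate_max` and all parameter values are assumptions chosen for illustration.

```python
import random


def estimate_max(n_actions=10, n_samples=5, trials=2000, seed=0):
    """Compare single and double estimators of max_a E[X_a] when every true mean is 0."""
    rng = random.Random(seed)
    single_total, double_total = 0.0, 0.0

    def sample_means():
        # One noisy estimate per action: the mean of n_samples draws from N(0, 1).
        return [sum(rng.gauss(0.0, 1.0) for _ in range(n_samples)) / n_samples
                for _ in range(n_actions)]

    for _ in range(trials):
        est_a = sample_means()  # first independent set of estimates
        est_b = sample_means()  # second independent set of estimates
        # Single estimator: select and evaluate with the same estimates.
        single_total += max(est_a)
        # Double estimator: select with set A, evaluate with set B.
        best = max(range(n_actions), key=est_a.__getitem__)
        double_total += est_b[best]
    return single_total / trials, double_total / trials


single, double = estimate_max()
print(f"single estimator: {single:+.3f}, double estimator: {double:+.3f}")
```

The true maximum is 0, so any consistently positive value from the single estimator is bias. The double estimator should stay close to 0 because the noise that drives the selection is independent of the noise in the evaluation.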

Algorithm

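- Initialize $Q_1(s, a)$ and $Q_2(s, a)$ arbitrarily for all $s \in \mathcal{S}$, $a \in \mathcal{A}(s)$, with $Q_1(\text{terminal}, \cdot) = 0$ and $Q_2(\text{terminal}, \cdot) = 0$.

- Repeat for each episode:

  - Initialize $S$.

  - Repeat for each step of the episode until $S$ is terminal:

    - Choose an action $A$ from the current state $S$ using a policy derived from $Q_1$ and $Q_2$ (e.g. $\varepsilon$-greedy in $Q_1 + Q_2$).

    - Take the action $A$ and observe a reward $R$ and a next state $S'$.

    - With probability $0.5$: $Q_1(S, A) \leftarrow Q_1(S, A) + \alpha \left[ R + \gamma \, Q_2\left(S', \arg\max_a Q_1(S', a)\right) - Q_1(S, A) \right]$; else: $Q_2(S, A) \leftarrow Q_2(S, A) + \alpha \left[ R + \gamma \, Q_1\left(S', \arg\max_a Q_2(S', a)\right) - Q_2(S, A) \right]$, where $\alpha$ is the step size and $\gamma$ is the discount factor.

    - Update $S \leftarrow S'$.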
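The steps above can be sketched in Python as follows. This is a minimal tabular sketch under stated assumptions, not a reference implementation: the environment interface `step(state, action, rng)`, the `corridor_step` toy environment, and all hyperparameter values are hypothetical and chosen only so the example runs on its own.

```python
import random
from collections import defaultdict


def double_q_learning(step, start, n_actions, episodes=500,
                      alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular double Q-learning.

    `step(state, action, rng)` is a hypothetical environment function that
    returns (reward, next_state), with next_state None when the episode ends.
    """
    rng = random.Random(seed)
    # Unseen states start with all-zero action values.
    q1 = defaultdict(lambda: [0.0] * n_actions)
    q2 = defaultdict(lambda: [0.0] * n_actions)

    def argmax(values):
        # Greedy action with ties broken at random.
        best = max(values)
        return rng.choice([a for a, v in enumerate(values) if v == best])

    for _ in range(episodes):
        s = start
        while s is not None:
            # Behaviour policy: epsilon-greedy in Q1 + Q2.
            if rng.random() < epsilon:
                a = rng.randrange(n_actions)
            else:
                a = argmax([x + y for x, y in zip(q1[s], q2[s])])
            r, s_next = step(s, a, rng)
            # With probability 0.5 update Q1 (evaluated by Q2), else the reverse.
            sel, ev = (q1, q2) if rng.random() < 0.5 else (q2, q1)
            if s_next is None:
                target = r  # terminal state has value 0
            else:
                target = r + gamma * ev[s_next][argmax(sel[s_next])]
            sel[s][a] += alpha * (target - sel[s][a])
            s = s_next
    return q1, q2


def corridor_step(state, action, rng):
    """Hypothetical 4-cell corridor: action 1 moves right, action 0 moves left.

    Reaching cell 3 pays a reward of 1 and ends the episode.
    """
    nxt = max(0, state - 1) if action == 0 else state + 1
    return (1.0, None) if nxt == 3 else (0.0, nxt)


q1, q2 = double_q_learning(corridor_step, start=0, n_actions=2)
greedy = [max((0, 1), key=lambda a: q1[s][a] + q2[s][a]) for s in range(3)]
print(greedy)
```

The table that selects the greedy next action (`sel`) is never the one that evaluates it (`ev`), which is the decoupling the update rule describes. Acting greedily on the sum of both tables is one common choice; the average would give the same policy.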

Examples

Q-learning initially learns to take the left action much more often than the right action, even though the left action has the lower true value. Even at asymptote, it takes the left action more often than is optimal (5%). In contrast, double Q-learning is essentially unaffected by maximization bias.
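The text does not describe the environment behind this result. The sketch below assumes it is the small two-state task from Sutton and Barto's textbook: from the start state A, right ends the episode with reward 0, while left leads with reward 0 to state B, where every action ends the episode with a reward drawn from a normal distribution with mean -0.1 and variance 1. The number of actions in B (10) and the values of $\alpha$, $\gamma$, and $\varepsilon$ are likewise assumptions, so the numbers it prints are only indicative.

```python
import random

LEFT, RIGHT = 0, 1   # actions available in the start state A
N_B_ACTIONS = 10     # assumed number of actions in state B
ALPHA, GAMMA, EPSILON = 0.1, 1.0, 0.1


def eps_greedy(values, rng):
    """Random action with probability EPSILON, else a greedy one (ties broken at random)."""
    if rng.random() < EPSILON:
        return rng.randrange(len(values))
    best = max(values)
    return rng.choice([a for a, v in enumerate(values) if v == best])


def run(double, episodes, rng):
    """Return the fraction of episodes in which LEFT was chosen in state A."""
    # One table for Q-learning, two for double Q-learning.
    tables = [{"A": [0.0, 0.0], "B": [0.0] * N_B_ACTIONS}
              for _ in range(2 if double else 1)]
    lefts = 0
    for _ in range(episodes):
        state = "A"
        while state is not None:
            # Behave epsilon-greedily with respect to the sum of all tables.
            summed = [sum(vals) for vals in zip(*(t[state] for t in tables))]
            action = eps_greedy(summed, rng)
            if state == "A":
                lefts += action == LEFT
                reward, nxt = 0.0, ("B" if action == LEFT else None)
            else:
                reward, nxt = rng.gauss(-0.1, 1.0), None
            # Pick the table to update; the evaluator is the other table if there is one.
            i = rng.randrange(len(tables))
            sel = tables[i]
            ev = tables[1 - i] if double else sel
            if nxt is None:
                target = reward
            else:
                a_star = max(range(len(sel[nxt])), key=sel[nxt].__getitem__)
                target = reward + GAMMA * ev[nxt][a_star]
            sel[state][action] += ALPHA * (target - sel[state][action])
            state = nxt
    return lefts / episodes


def average_left_fraction(double, runs=200, episodes=100, seed=0):
    rng = random.Random(seed)
    return sum(run(double, episodes, rng) for _ in range(runs)) / runs


q_frac = average_left_fraction(double=False)
dq_frac = average_left_fraction(double=True)
print(f"left from A: Q-learning {q_frac:.2f}, double Q-learning {dq_frac:.2f}")
```

With a single table, the target for left is the maximum over noisy estimates in B, which is often positive even though every true value there is -0.1. With two tables, the selected action is evaluated by independent estimates, so the value of left should hover near its true negative value.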