Definition

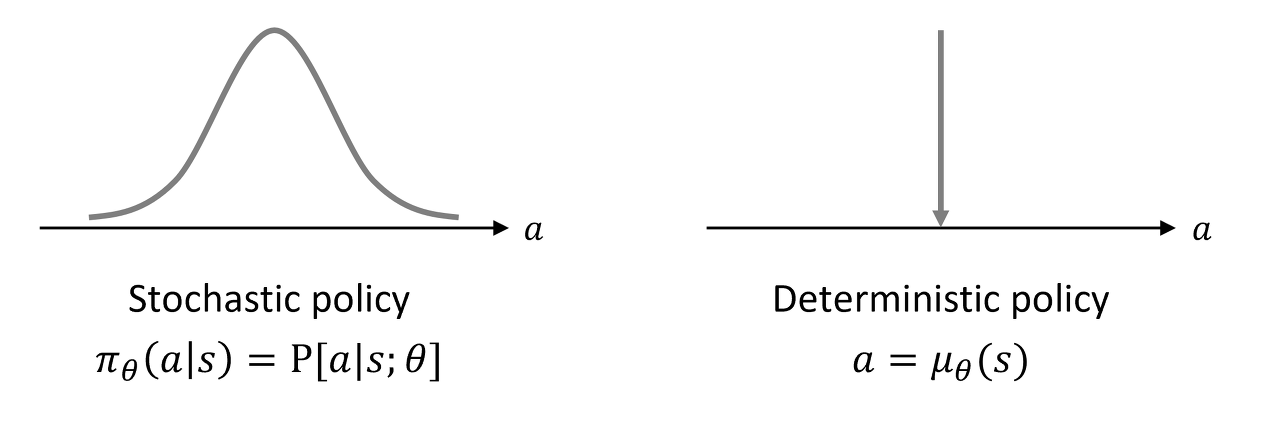

Deterministic Policy Gradient (DPG) is an actor-critic method for continuous action spaces. The actor is a deterministic policy that maps each state to a single action, and the critic is an action-value function that evaluates that action.
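To make the actor and critic roles concrete, here is a minimal NumPy sketch of the actor update alone. Everything in it is an assumption for illustration: the linear actor, the hypothetical `target` weights, and a fixed, known stand-in critic `Q(s, a) = -(a - target @ s)**2`. In DPG proper the critic is learned (for example by temporal-difference updates), which this sketch leaves out.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy problem: 3-dimensional state, scalar continuous action.
# The best action in state s is assumed to be target @ s.
target = np.array([0.5, -1.0, 2.0])

def actor(theta, s):
    """Deterministic policy: each state maps to exactly one action."""
    return s @ theta

def critic_grad_a(s, a):
    """dQ/da for the stand-in critic Q(s, a) = -(a - target @ s)**2."""
    return -2.0 * (a - s @ target)

theta = np.zeros(3)   # actor parameters
lr = 0.05             # step size (assumed)

for _ in range(500):
    s = rng.normal(size=(64, 3))   # a batch of sampled states
    a = actor(theta, s)            # actions are computed, not sampled
    # Chain rule: d(actor)/d(theta) = s for a linear actor, times dQ/da,
    # averaged over the sampled states.
    grad = (s * critic_grad_a(s, a)[:, None]).mean(axis=0)
    theta += lr * grad             # gradient ascent on Q

print(theta)
```

Only states are sampled in the loop: the action for each state comes directly from the actor, and the critic's gradient with respect to the action tells the actor which way to move.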

DPG needs fewer samples than a stochastic policy gradient to estimate the gradient. By the Deterministic Policy Gradient Theorem, the deterministic gradient is an expectation over the state space only, whereas the stochastic gradient is an expectation over both the state and action spaces.
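The difference can be seen by writing the two gradients side by side. The notation here is an assumption, since the text above does not fix any: $\pi_\theta$ is a stochastic policy, $\mu_\theta$ a deterministic policy, $\rho$ the discounted state distribution under the corresponding policy, and $J$ the expected return.

$$
\nabla_\theta J(\pi_\theta) = \mathbb{E}_{s \sim \rho^{\pi},\, a \sim \pi_\theta}\left[ \nabla_\theta \log \pi_\theta(a \mid s)\, Q^{\pi}(s, a) \right]
$$

$$
\nabla_\theta J(\mu_\theta) = \mathbb{E}_{s \sim \rho^{\mu}}\left[ \nabla_\theta \mu_\theta(s)\, \nabla_a Q^{\mu}(s, a) \big|_{a = \mu_\theta(s)} \right]
$$

The first expectation runs over states and actions, while the second runs over states alone because the action is fixed at $a = \mu_\theta(s)$.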