Definition

The C51 algorithm is a Distributional Reinforcement Learning algorithm that approximates the distribution of the random return a using discretized distribution.

Architecture

The range of possible returns is divided into a fixed support of -equally spaced bins , where and . and are the minimum and maximum possible return values, respectively. In the C51 algorithm, the support determined once at the beginning and remains fixed throughout the entire learning process.

For each state-action pair , the algorithm estimates the probabilities that the return will fall into each -th bin. The probabilities are estimated by a neural network, which takes the state as input and outputs a vector of probabilities for each state-possible action pair . The Softmax Function ensures the properties of the probability of the output for each state-action pair. where are the raw outputs (logits) of the network for the -th atom and action , and represents the network’s parameters.

The discrete distribution over the fixed support is constructed as where is a Dirac’s delta function at .

Projection

In a policy evaluation process, discrete distributions have a problem: and have disjoint supports. Though, Wasserstein Metric in the original setup is robust to this issue, but in practice, KL-Divergence is used instead due to the distributional instability and differentiability. Therefore, the problem still matters.

The disjoint support problem is solved by projection.

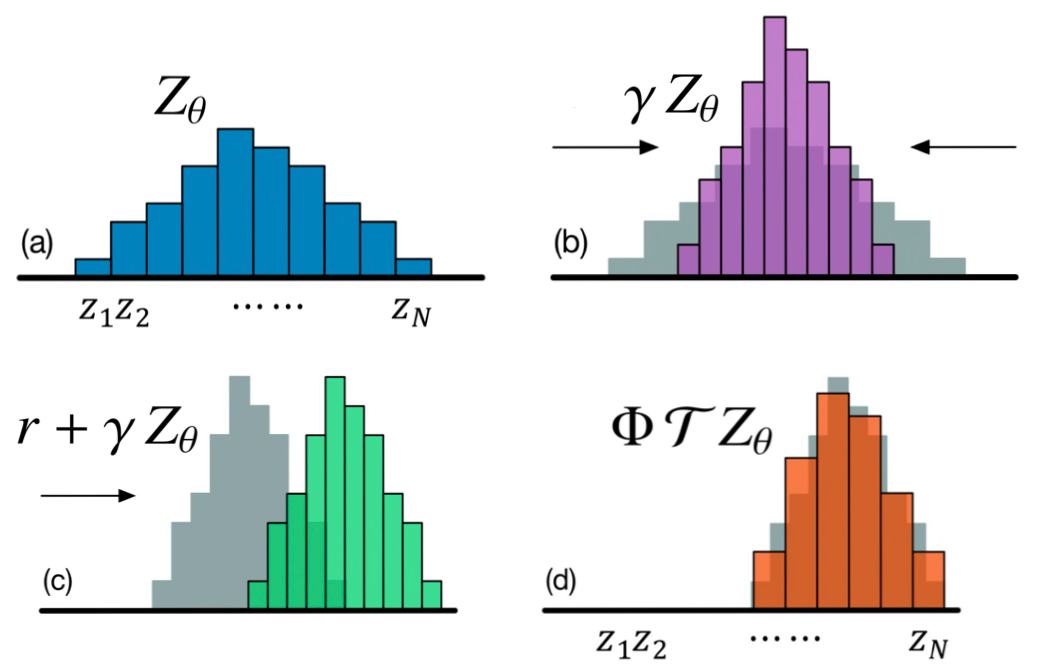

- Compute the distributional Bellman update: where

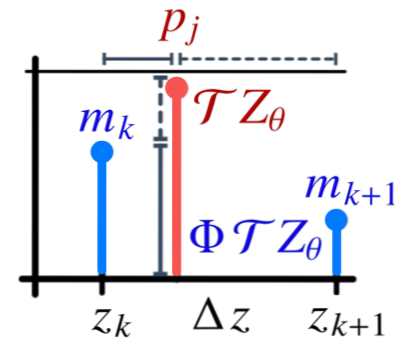

- Distribute the computed probability mass to the neighboring bins in the support proportionally to the distance from each original point: , where is the projection operator.

Loss Function

C51 uses the KL-Divergence as a loss function. where and are the parameters for behavior and target networks, respectively.

The loss function can be simplified to the Cross-Entropy Loss.

Algorithm

- Initialize behavior network and target network with random weights , and the replay buffer to max size .

- Repeat for each episode:

- Initialize sequence .

- Repeat for each step of an episode until terminal, :

- With probability , select a random action otherwise select .

- Take the action and observe a reward and a next state .

- Store transition in the replay buffer .

- Sample a random transition from .

- Compute and select a greedy action .

- Perform Gradient Descent on the loss .

- Update the target network parameter every steps.

- Update .