Temporal Difference (TD) learning is a model-free method, used here in its tabular form. TD policy iteration adapts generalized policy iteration (GPI) by updating value estimates from one-step transitions in sampled episodes.
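As a rough illustration of a one-step tabular update, here is a minimal sketch of TD(0) for state values. The function name `td0_update`, the step size `alpha`, the discount `gamma`, and the two-step episode are all assumptions made for this example; none of them come from the notes.

```python
from collections import defaultdict


def td0_update(V, state, reward, next_state, done, alpha=0.1, gamma=1.0):
    """Apply one tabular TD(0) update to V in place and return the TD error."""
    # Bootstrapped target: the reward plus the discounted estimate of the
    # next state. A terminal transition contributes no future value.
    target = reward + (0.0 if done else gamma * V[next_state])
    td_error = target - V[state]
    # Move the current estimate a fraction alpha toward the target.
    V[state] += alpha * td_error
    return td_error


# Value table with a default estimate of 0.0 for unseen states.
V = defaultdict(float)

# Hypothetical episode: A -> B with reward 0, then B -> terminal with reward 1.
episode = [("A", 0.0, "B", False), ("B", 1.0, "T", True)]
for state, reward, next_state, done in episode:
    td0_update(V, state, reward, next_state, done, alpha=0.5)

print(dict(V))  # V["A"] stays 0.0, V["B"] moves halfway to 1.0
```

Each update needs only a single transition, not a model of the environment or the full return of the episode, which is what makes the method model-free and one-step.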

Algorithms

Examples

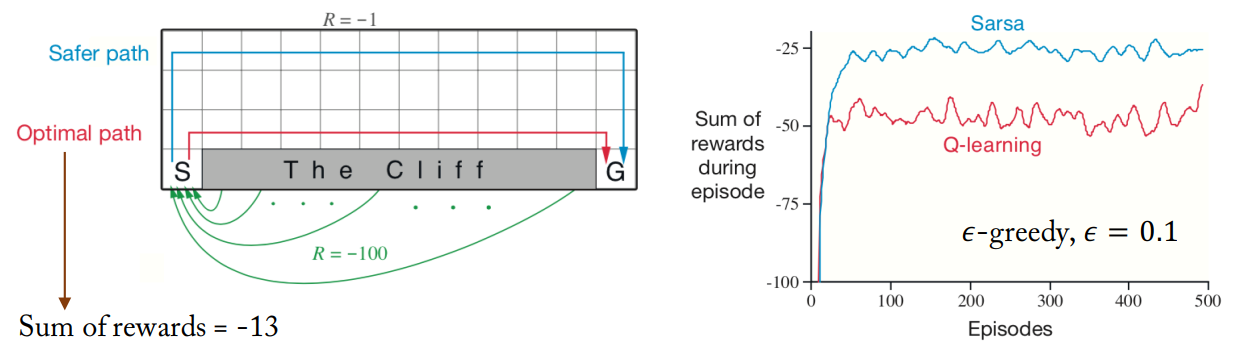

Sarsa accounts for the potential penalties of exploratory moves, so it tends to avoid a dangerous optimal path and prefer a slower but safer one. In contrast, Q-Learning ignores these penalties and acts on the highest action value, which occasionally results in falling off because of ε-greedy actions.
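The difference comes down to the one-step target each method bootstraps from. The sketch below compares the two targets on a single transition. The state name `"edge"`, the action names, the Q-values, and `gamma=1.0` are hypothetical values chosen only to make the contrast visible.

```python
def sarsa_target(Q, reward, next_state, next_action, gamma=1.0):
    """On-policy target: uses the action actually chosen in the next state."""
    return reward + gamma * Q[next_state][next_action]


def q_learning_target(Q, reward, next_state, gamma=1.0):
    """Off-policy target: uses the greedy (maximum) next action value."""
    return reward + gamma * max(Q[next_state].values())


# Hypothetical action values for a state next to the dangerous region.
Q = {"edge": {"safe": -10.0, "risky": -100.0}}
reward = -1.0

# Suppose the epsilon-greedy policy happened to explore and pick "risky".
sarsa = sarsa_target(Q, reward, "edge", "risky")
qlearn = q_learning_target(Q, reward, "edge")

print(sarsa)   # -101.0: the exploratory penalty enters the update
print(qlearn)  # -11.0: the penalty is ignored, only the best action counts
```

Because Sarsa's target includes the value of exploratory actions, states near the dangerous path end up with lower estimates, which pushes its policy toward the safer route. Q-Learning's estimates reflect the greedy policy only, so it keeps following the shortest path even though its ε-greedy behaviour sometimes steps off it.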