Definition

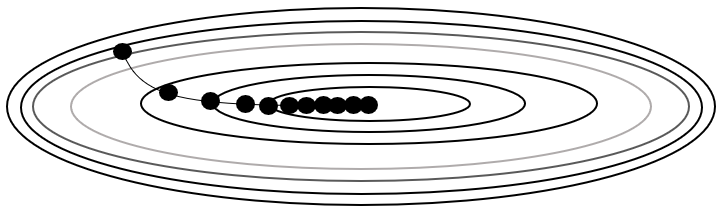

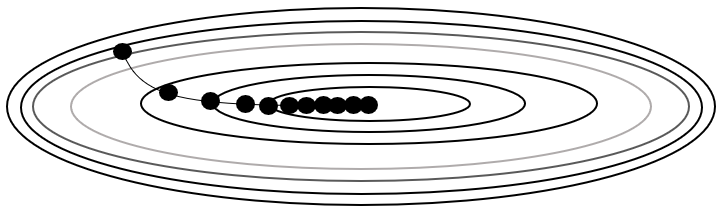

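Adaptive gradient descent (AdaGrad) is a variant of Gradient Descent with a parameter-wise learning rate. Each parameter's step size is scaled down by the accumulated magnitude of that parameter's own past gradients:

$$
G_t = G_{t-1} + g_t \odot g_t, \qquad \theta_{t+1} = \theta_t - \frac{\eta}{\sqrt{G_t} + \epsilon} \odot g_t
$$

Here $g_t$ is the gradient at step $t$, $\eta$ is the global learning rate, $G_t$ is the element-wise sum of squares of past gradients, and $\epsilon$ is a small constant to prevent division by zero. The square root and division are element-wise.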
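The following is a minimal sketch of AdaGrad in plain Python. It assumes the update $\theta \leftarrow \theta - \eta\, g / (\sqrt{G} + \epsilon)$, where $G$ is the running sum of squared gradients. Some implementations place the constant inside the root, as $\sqrt{G + \epsilon}$. The helper name `adagrad_step`, the toy objective $f(x, y) = x^2 + 10y^2$, and the values `lr=0.5` and `eps=1e-8` are hypothetical choices for illustration.

```python
import math


def adagrad_step(params, grads, accum, lr=0.1, eps=1e-8):
    """One AdaGrad update (hypothetical helper for illustration).

    params, grads, accum are equal-length lists of floats; accum holds
    the running sum of squared gradients G for each parameter.
    """
    new_params, new_accum = [], []
    for p, g, a in zip(params, grads, accum):
        a = a + g * g                           # G_t = G_{t-1} + g_t^2
        p = p - lr / (math.sqrt(a) + eps) * g   # per-parameter step size lr / (sqrt(G_t) + eps)
        new_params.append(p)
        new_accum.append(a)
    return new_params, new_accum


def grad(params):
    # Gradient of the toy objective f(x, y) = x^2 + 10 y^2 (illustrative only)
    x, y = params
    return [2 * x, 20 * y]


params = [1.0, 1.0]
accum = [0.0, 0.0]
for _ in range(100):
    params, accum = adagrad_step(params, grad(params), accum, lr=0.5)
print(params)
```

Each coordinate's step is divided by that coordinate's own accumulated gradient magnitude. As a result, $x$ and $y$ shrink at almost the same rate here, even though the gradient along $y$ is ten times larger. The first step moves each coordinate by roughly `lr`, regardless of gradient scale.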