Definition

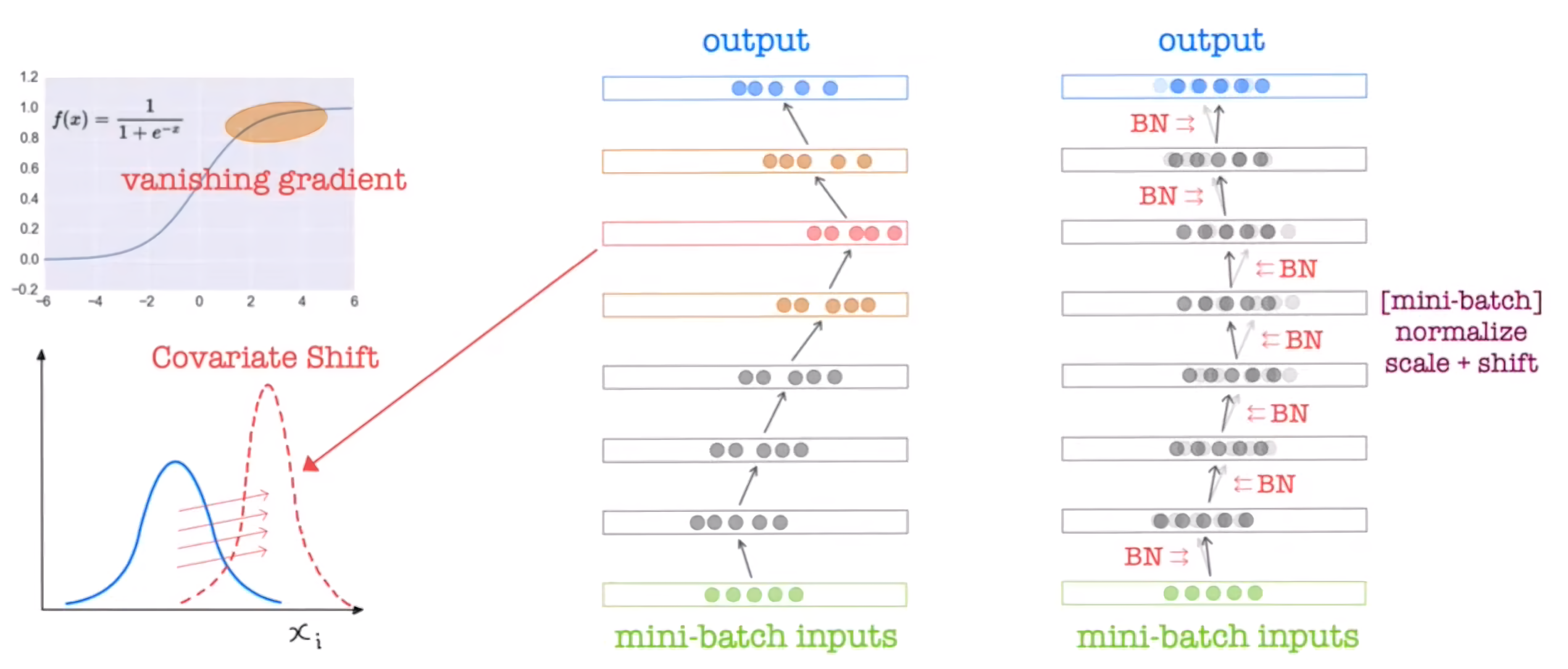

Batch normalization (batch norm) speeds up the training of neural networks by normalizing each layer's inputs through re-centering and re-scaling, where:

- $\mu_B$ is the mean vector of the mini-batch.

- $\sigma_B^2$ is the variance vector of the mini-batch.

- $m$ is the batch size.

- $\gamma$ and $\beta$ are the learnable scale and shift parameters.

- $\epsilon$ is a small constant to prevent division by zero.
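For reference, the transform is usually written as below. This is a sketch in the standard notation, which is assumed (not confirmed) to match the figure; $x_i$ denotes the $i$-th input in the mini-batch and $y_i$ the corresponding output.

$$
\begin{aligned}
\mu_B &= \frac{1}{m} \sum_{i=1}^{m} x_i \\
\sigma_B^2 &= \frac{1}{m} \sum_{i=1}^{m} (x_i - \mu_B)^2 \\
\hat{x}_i &= \frac{x_i - \mu_B}{\sqrt{\sigma_B^2 + \epsilon}} \\
y_i &= \gamma \, \hat{x}_i + \beta
\end{aligned}
$$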

At test time, the moving averages of the mini-batch mean and variance accumulated during training are used in place of the mini-batch statistics.
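The training and test behaviour described above can be sketched in NumPy as follows. This is a minimal illustration, not a reference implementation: the function names, the `momentum` value of 0.9, the `eps` value of 1e-5, and the `(batch, features)` input layout are all assumptions made for the example.

```python
import numpy as np


def batch_norm_train(x, gamma, beta, running_mean, running_var,
                     momentum=0.9, eps=1e-5):
    """Normalize a mini-batch x of shape (m, features) and update moving averages.

    momentum and eps are illustrative defaults, not values from the text.
    """
    mu = x.mean(axis=0)   # per-feature mini-batch mean
    var = x.var(axis=0)   # per-feature mini-batch variance (divides by m)
    x_hat = (x - mu) / np.sqrt(var + eps)   # re-center and re-scale
    y = gamma * x_hat + beta                # learnable scale and shift

    # Exponential moving averages, kept for use at test time.
    new_running_mean = momentum * running_mean + (1 - momentum) * mu
    new_running_var = momentum * running_var + (1 - momentum) * var
    return y, new_running_mean, new_running_var


def batch_norm_test(x, gamma, beta, running_mean, running_var, eps=1e-5):
    """Normalize with the stored moving averages instead of batch statistics."""
    x_hat = (x - running_mean) / np.sqrt(running_var + eps)
    return gamma * x_hat + beta
```

During training, `batch_norm_train` would be called once per mini-batch, feeding the returned moving averages back in on the next call. At test time, `batch_norm_test` uses those stored values, so the output for one example does not depend on the other examples in the batch.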