Definition

A variational autoencoder (VAE) is an autoencoder whose decoder can be used as a generator. Unlike a traditional autoencoder, a VAE models the latent space as a probability distribution, typically a multivariate normal distribution.

Architecture

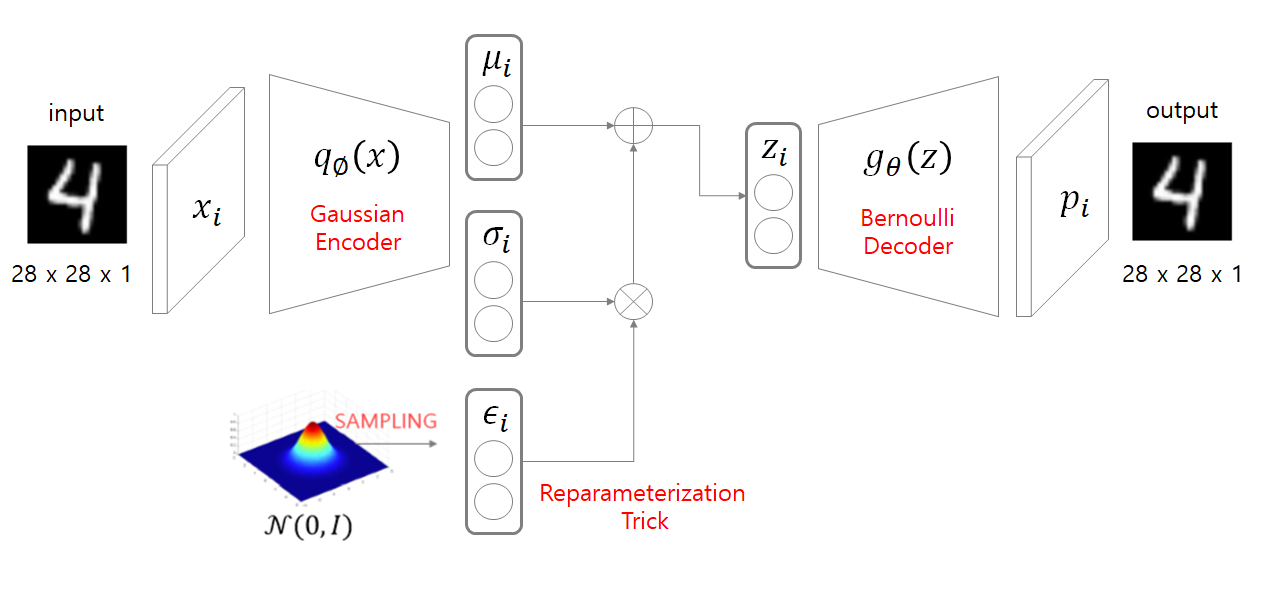

Instead of outputting a single point in latent space, the encoder of a VAE outputs the parameters of a probability distribution over the latent space (for a Gaussian, a mean and a variance). A latent vector is sampled from this distribution, and the decoder reconstructs the input from it.
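
The encode, sample, decode flow described above can be sketched in NumPy as follows. This is only a minimal illustration with untrained random weights: the layer sizes, the single `tanh` hidden layer, the sigmoid output, and all function names are assumptions made for the example, not part of the original note.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes chosen only for illustration.
INPUT_DIM, HIDDEN_DIM, LATENT_DIM = 8, 16, 2


def init_linear(in_dim, out_dim):
    """Return a small random weight matrix and a zero bias."""
    return rng.normal(0.0, 0.1, size=(in_dim, out_dim)), np.zeros(out_dim)


# Encoder: one shared hidden layer, then two heads (mean and log-variance).
W_h, b_h = init_linear(INPUT_DIM, HIDDEN_DIM)
W_mu, b_mu = init_linear(HIDDEN_DIM, LATENT_DIM)
W_lv, b_lv = init_linear(HIDDEN_DIM, LATENT_DIM)
# Decoder: maps a latent vector back to input space.
W_d, b_d = init_linear(LATENT_DIM, INPUT_DIM)


def encode(x):
    """Map inputs to the parameters (mu, log_var) of q(z|x)."""
    h = np.tanh(x @ W_h + b_h)
    return h @ W_mu + b_mu, h @ W_lv + b_lv


def sample_latent(mu, log_var):
    """Draw z ~ N(mu, diag(exp(log_var)))."""
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * log_var) * eps


def decode(z):
    """Map latent vectors to reconstructions with values in (0, 1)."""
    return 1.0 / (1.0 + np.exp(-(z @ W_d + b_d)))


x = rng.random((4, INPUT_DIM))  # a batch of 4 toy inputs, not real data
mu, log_var = encode(x)
z = sample_latent(mu, log_var)
x_hat = decode(z)
print(mu.shape, z.shape, x_hat.shape)
```

Predicting the log-variance rather than the variance is a common design choice because it keeps the variance positive without any constraint on the encoder output.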

Loss Function (ELBO)

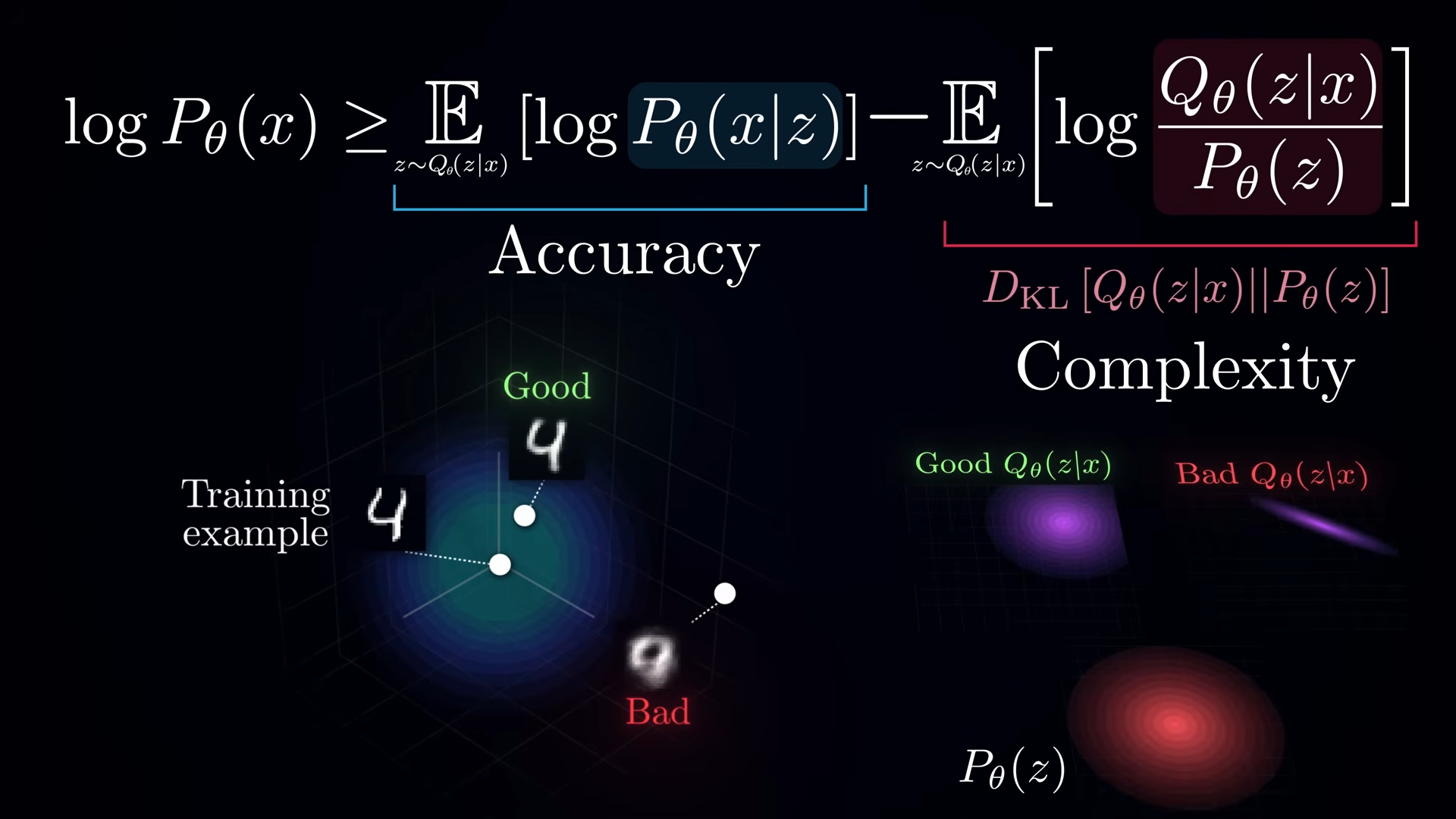

The VAE loss is the negative of the evidence lower bound (ELBO). It consists of a reconstruction loss and a KL divergence term that keeps the latent distribution close to the prior, usually a standard normal distribution.
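
As a rough sketch of how the two terms can be computed, the snippet below assumes a diagonal Gaussian encoder, a standard normal prior, and a Bernoulli decoder (so the reconstruction loss is a binary cross-entropy). The note does not specify the decoder likelihood, so that choice and the function names are assumptions for illustration. Under these assumptions the KL term has the closed form $-\frac{1}{2}\sum_j (1 + \ln\sigma_j^2 - \mu_j^2 - \sigma_j^2)$.

```python
import numpy as np


def kl_to_standard_normal(mu, log_var):
    """Closed-form KL( N(mu, diag(exp(log_var))) || N(0, I) ), per example."""
    return -0.5 * np.sum(1.0 + log_var - mu**2 - np.exp(log_var), axis=-1)


def bernoulli_reconstruction_loss(x, x_hat, eps=1e-7):
    """Negative log-likelihood of x under a Bernoulli decoder, per example."""
    x_hat = np.clip(x_hat, eps, 1.0 - eps)  # avoid log(0)
    return -np.sum(x * np.log(x_hat) + (1.0 - x) * np.log(1.0 - x_hat), axis=-1)


def negative_elbo(x, x_hat, mu, log_var):
    """Single-sample estimate of the VAE loss, averaged over the batch."""
    per_example = (bernoulli_reconstruction_loss(x, x_hat)
                   + kl_to_standard_normal(mu, log_var))
    return np.mean(per_example)


# Toy values (not real data): one example with 3 pixels and 2 latent dimensions.
x = np.array([[1.0, 0.0, 1.0]])
x_hat = np.array([[0.9, 0.2, 0.8]])
mu = np.zeros((1, 2))
log_var = np.zeros((1, 2))

# The KL term vanishes when q(z|x) equals the prior N(0, I),
# so the loss here is the reconstruction term alone.
print(kl_to_standard_normal(mu, log_var))
print(negative_elbo(x, x_hat, mu, log_var))
```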

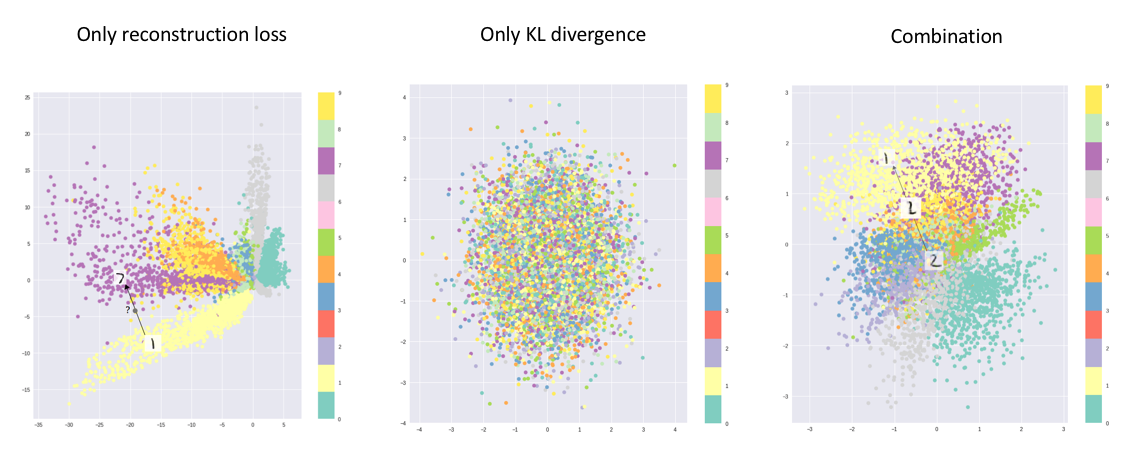

$$\begin{aligned}
\ln p_{\theta}(x) &= \ln\sum\limits_{z}p_{\theta}(x|z)p_{\theta}(z) \\
&= \ln\sum\limits_{z}p_{\theta}(x|z)\frac{p_{\theta}(z)}{q_{\theta}(z|x)}q_{\theta}(z|x) \\
&= \ln\mathbb{E}_{z \sim q_{\theta}(z|x)}\left[ p_{\theta}(x|z)\frac{p_{\theta}(z)}{q_{\theta}(z|x)} \right] \\
&\geq \mathbb{E}_{z \sim q_{\theta}(z|x)}\ln\left[ p_{\theta}(x|z)\frac{p_{\theta}(z)}{q_{\theta}(z|x)} \right] =: \text{ELBO} \\
&= \underbrace{\mathbb{E}_{z\sim q_{\theta}(z|x)}[\ln p_\theta(x|z)]}_{\text{reconstruction term}} - \underbrace{D_{KL}(q_\theta(z|x) \| p_\theta(z))}_{\text{regularization term}}
\end{aligned}$$

where $q_{\theta}(z|x)$ is the encoder distribution, $p_{\theta}(x|z)$ is the decoder distribution, and $p_{\theta}(z)$ is the prior distribution (usually $\mathcal{N}(0, I)$). The inequality follows from Jensen's inequality, since $\ln$ is concave. The regularization term encourages continuity and completeness of the latent space.

In terms of variational inference, $p_{\theta}(z)$ can be seen as the prior over the latent variable and $q_{\theta}(z|x)$ as a proposal distribution used for [[Importance Sampling]]; that is, $q_{\theta}(z|x)$ predicts the region of the latent space that is likely to have generated the observation $x$.

## Reparameterization Trick

![[Pasted image 20240912191141.png|600]]

Gradients cannot be backpropagated through a random sampling operation. So, instead of sampling $z$ directly from $\mathcal{N}(\mu, \sigma^2)$, we sample $\epsilon \sim \mathcal{N}(0, 1)$ and form the latent vector $z = \mu + \sigma \epsilon$. During backpropagation, the sampled $\epsilon$ is treated as a constant, so $\frac{\partial z}{\partial \mu} = 1$ and $\frac{\partial z}{\partial \sigma} = \epsilon$.

# Facts

> ![[Pasted image 20240910130423.png|800]]
>
> The data encoded by a VAE is semantically well separated in the low-dimensional latent space.
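
Returning to the reparameterization trick: the two gradients it yields can be checked numerically. The sketch below holds a single noise draw fixed and uses central finite differences. The function name `reparameterize`, the values of `mu` and `sigma`, and the step size `h` are arbitrary choices made for illustration.

```python
import numpy as np


def reparameterize(mu, sigma, eps):
    """Deterministic map from (mu, sigma) and fixed noise eps to z."""
    return mu + sigma * eps


rng = np.random.default_rng(0)
eps = rng.standard_normal()  # noise drawn once, then held fixed
mu, sigma, h = 0.5, 2.0, 1e-4

# Central finite differences with eps treated as a constant.
dz_dmu = (reparameterize(mu + h, sigma, eps)
          - reparameterize(mu - h, sigma, eps)) / (2 * h)
dz_dsigma = (reparameterize(mu, sigma + h, eps)
             - reparameterize(mu, sigma - h, eps)) / (2 * h)

# dz_dmu should be close to 1 and dz_dsigma close to eps.
print(dz_dmu, dz_dsigma, eps)
```

Because the randomness is isolated in `eps`, `z` is an ordinary differentiable function of `mu` and `sigma`, which is what lets an automatic differentiation framework propagate gradients to the encoder.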