Definition

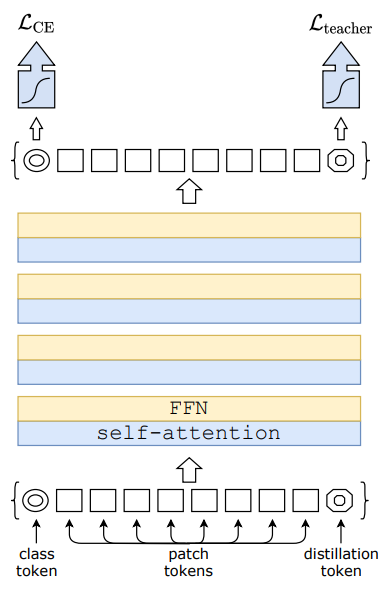

The Data-efficient image Transformer (DeiT) appends a distillation token to a ViT model and trains that token's output to match the prediction of a pre-trained teacher model (a CNN).

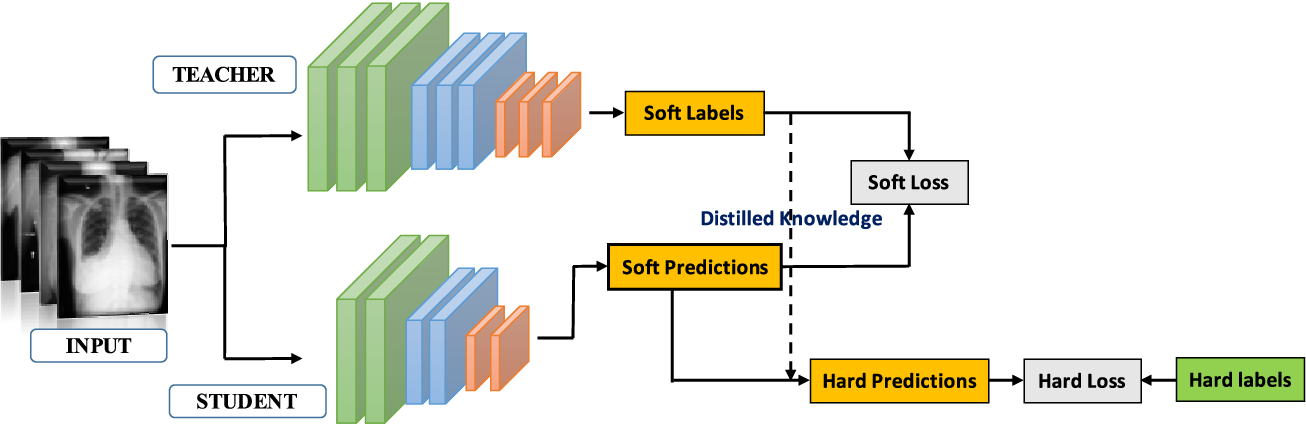

Distillation from the teacher helps the student model (ViT) generalize better by providing supervision beyond the ground-truth labels. The teacher’s predictions implicitly encode its uncertainty and the relationships between classes.

Architecture

Soft-Label Distillation

Minimizes the KL divergence between the softmax output of the teacher model and that of the student.
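As a rough illustration, soft-label distillation for a single example can be sketched in plain Python. The sketch assumes the common formulation in which the KL term is computed on temperature-softened distributions, scaled by the squared temperature, and mixed with the usual cross-entropy on the true label. The function names, the KL direction (teacher relative to student), and the values of `tau` and `lam` are illustrative assumptions, not settings taken from these notes.

```python
import math

def softmax(logits, temperature=1.0):
    """Numerically stable softmax over a list of logits."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(logits, label):
    """Cross-entropy between softmax(logits) and a one-hot label."""
    return -math.log(softmax(logits)[label])

def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions of equal length."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def soft_distillation_loss(student_logits, teacher_logits, label,
                           tau=3.0, lam=0.1):
    """Mix of true-label cross-entropy and a softened KL term.

    tau (temperature) and lam (mixing weight) are illustrative values.
    """
    ce = cross_entropy(student_logits, label)
    p_teacher = softmax(teacher_logits, tau)
    p_student = softmax(student_logits, tau)
    # Assumed direction: teacher distribution relative to the student's.
    kl = kl_divergence(p_teacher, p_student)
    return (1 - lam) * ce + lam * tau ** 2 * kl

if __name__ == "__main__":
    student = [1.0, 2.0, 3.0]
    teacher = [0.5, 0.5, 4.0]
    print(soft_distillation_loss(student, teacher, label=2))
```

When the student and teacher produce identical logits, the KL term vanishes and only the weighted cross-entropy remains.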

Hard-Label Distillation

Takes the hard decision of the teacher (its argmax prediction) as if it were a true label.
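A minimal sketch of the hard-label variant for a single example follows. It assumes the student exposes two sets of logits, one from the class token and one from the distillation token, and that the two cross-entropy terms are weighted equally. The names `cls_logits`, `dist_logits`, and `hard_distillation_loss` are hypothetical, chosen only for this illustration.

```python
import math

def cross_entropy(logits, label):
    """Cross-entropy between softmax(logits) and a one-hot label."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[label]

def argmax(values):
    """Index of the largest value (first one on ties)."""
    return max(range(len(values)), key=lambda i: values[i])

def hard_distillation_loss(cls_logits, dist_logits, teacher_logits, label):
    """Equal-weight sum of two cross-entropy terms.

    The class-token output is trained on the true label; the
    distillation-token output is trained on the teacher's argmax,
    which is treated as if it were a true label.
    """
    teacher_label = argmax(teacher_logits)
    return (0.5 * cross_entropy(cls_logits, label)
            + 0.5 * cross_entropy(dist_logits, teacher_label))

if __name__ == "__main__":
    print(hard_distillation_loss(
        cls_logits=[2.0, 0.5, 0.1],
        dist_logits=[1.5, 1.0, 0.2],
        teacher_logits=[0.3, 2.2, 0.1],
        label=0,
    ))
```

Because the teacher's label is a plain class index, this variant needs no temperature or KL term, only the teacher's top prediction.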