Matrix Theory

Matrix Theory Note

Matrix Theory

Basic Theories

Matrix

Definition

Operations

Addition, Subtraction

Scalar Multiplication

Transpose

,

Matrix Multiplication

where ,

Vector-Matrix Multiplication

Trace

Definition

The sum of elements on the main diagonal

Facts

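$\operatorname{tr}(ABC) = \operatorname{tr}(BCA) = \operatorname{tr}(CAB)$ (cyclic property)

The trace of a matrix is equal to the sum of its eigenvalues.

Proof Since the matrix $A - \lambda I$ has the term $\lambda$ only on its diagonal, and the calculation of a cofactor deletes a row and a column, the coefficient of $\lambda^{n-1}$ in $\det(A - \lambda I)$ is always $(-1)^{n-1}\operatorname{tr}(A)$. Also, since $\lambda_{1}, \dots, \lambda_{n}$ are the solutions of the Characteristic Polynomial, the expression is factorized as $\det(A - \lambda I) = \prod_{i=1}^{n}(\lambda_{i} - \lambda)$ and the coefficient of $\lambda^{n-1}$ becomes $(-1)^{n-1}\sum_{i=1}^{n}\lambda_{i}$. Therefore, $\operatorname{tr}(A) = \sum_{i=1}^{n}\lambda_{i}$ and, setting $\lambda = 0$, $\det(A) = \prod_{i=1}^{n}\lambda_{i}$.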

Determinant

Definition

Computation

matrix

matrix

Laplace Expansion

Definition

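Let $C$ be the cofactor matrix of an $n \times n$ matrix $A$, with entries $C_{ij} = (-1)^{i+j}M_{ij}$, where $M_{ij}$ is the determinant of $A$ with the $i$th row and $j$th column deleted.

Along the $j$th column gives $$\det(A) = \sum_{i=1}^{n} a_{ij}C_{ij}$$

Along the $i$th row gives $$\det(A) = \sum_{j=1}^{n} a_{ij}C_{ij}$$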
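The row expansion can be sketched as a short recursive routine. This is a minimal illustration, assuming the matrix is given as a list of rows; the helper names `minor` and `det_laplace` are hypothetical and chosen only for this example.

```python
def minor(matrix, row, col):
    """Return the matrix with the given row and column deleted."""
    return [r[:col] + r[col + 1:] for i, r in enumerate(matrix) if i != row]


def det_laplace(matrix):
    """Determinant by cofactor (Laplace) expansion along the first row."""
    n = len(matrix)
    if n == 1:
        return matrix[0][0]
    total = 0
    for j in range(n):
        # Cofactor C_{0j} = (-1)^(0 + j) * det(minor of entry (0, j)).
        cofactor = (-1) ** j * det_laplace(minor(matrix, 0, j))
        total += matrix[0][j] * cofactor
    return total


A = [[2, 0, 1],
     [1, 3, 2],
     [1, 1, 4]]
det_A = det_laplace(A)
```

The recursion costs on the order of $n!$ operations, so it is only practical for small matrices; elimination-based methods are the usual choice for larger ones.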

Facts

The volume of a box (parallelepiped) is expressed by a determinant.

$\det(A)$ changes sign when two rows are exchanged (permuted).

$\det(A)$ depends linearly on the 1st row.

If two row or column vectors in $A$ are equal, then $\det(A) = 0$.

Gauss Elimination without row exchanges does not change $\det(A)$.

If a matrix $A$ has a zero row or column vector, then $\det(A) = 0$.

If a matrix $A$ is diagonal or triangular, then the determinant of $A$ is the product of its diagonal elements, $\det(A) = \prod_{i} a_{ii}$, where $a_{ii}$ is the $(i, i)$ element of the matrix $A$.

The Determinant of a matrix is equal to the product of its eigenvalues.

where the dimensions of the matrices are and respectively.

Idempotent Matrix

Definition

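A matrix $A$ whose square is itself: $A^{2} = A$.

Facts

Each eigenvalue of an idempotent matrix is either $0$ or $1$.

Let $A$ be a symmetric matrix. Then, $A$ has $r$ eigenvalues equal to $1$ and the rest zero if and only if $A$ is idempotent with rank $r$.

Let $A$ be an idempotent matrix, then $\operatorname{rank}(A) = \operatorname{tr}(A)$.

If $A$ is idempotent, then $I - A$ is also idempotent.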

Inverse Matrix

Rank of Matrix

Definition

The rank of matrix is the number of linearly independent column vectors, or the number of non-zero pivots in Gauss Elimination

Facts

For the non-singular matrices and ,

If a matrix $A$ is a Symmetric Matrix, then $\operatorname{rank}(A)$ is the number of non-zero eigenvalues.

Inverse Matrix

Definition

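$A^{-1}$ satisfies $AA^{-1} = A^{-1}A = I$ for a given square matrix $A$.

Computation

2 x 2 matrix

$$A^{-1} = \frac{1}{ad - bc}\begin{bmatrix} d & -b \\ -c & a \end{bmatrix}$$

where the matrix $A = \begin{bmatrix} a & b \\ c & d \end{bmatrix}$, and $ad - bc$ is the Determinant of the matrix $A$.

Using Cofactor

$$A^{-1} = \frac{1}{\det(A)}C^{T}$$

where the matrix $C$ is the cofactor matrix, which is formed by all the cofactors of the given matrix $A$. This holds because $AC^{T} = \det(A)I$, so $\frac{1}{\det(A)}C^{T}$ satisfies the definition of the inverse matrix.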

Sherman–Morrison Formula

Definition

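Suppose $A \in \mathbb{R}^{n \times n}$ is an invertible matrix and $\mathbf{u}, \mathbf{v} \in \mathbb{R}^{n}$. Then, $A + \mathbf{u}\mathbf{v}^{T}$ is invertible if and only if $1 + \mathbf{v}^{T}A^{-1}\mathbf{u} \neq 0$. In this case,

$$(A + \mathbf{u}\mathbf{v}^{T})^{-1} = A^{-1} - \frac{A^{-1}\mathbf{u}\mathbf{v}^{T}A^{-1}}{1 + \mathbf{v}^{T}A^{-1}\mathbf{u}}$$

Proof

Multiplying $A + \mathbf{u}\mathbf{v}^{T}$ to the RHS gives

$$(A + \mathbf{u}\mathbf{v}^{T})\left(A^{-1} - \frac{A^{-1}\mathbf{u}\mathbf{v}^{T}A^{-1}}{1 + \mathbf{v}^{T}A^{-1}\mathbf{u}}\right) = I + \mathbf{u}\mathbf{v}^{T}A^{-1} - \frac{\mathbf{u}\mathbf{v}^{T}A^{-1} + \mathbf{u}\mathbf{v}^{T}A^{-1}\mathbf{u}\mathbf{v}^{T}A^{-1}}{1 + \mathbf{v}^{T}A^{-1}\mathbf{u}}$$

Since $\mathbf{v}^{T}A^{-1}\mathbf{u}$ is a scalar, the numerator of the last term can be expressed as

$$\mathbf{u}\mathbf{v}^{T}A^{-1} + \mathbf{u}(\mathbf{v}^{T}A^{-1}\mathbf{u})\mathbf{v}^{T}A^{-1} = (1 + \mathbf{v}^{T}A^{-1}\mathbf{u})\mathbf{u}\mathbf{v}^{T}A^{-1}$$

So, the product equals $I + \mathbf{u}\mathbf{v}^{T}A^{-1} - \mathbf{u}\mathbf{v}^{T}A^{-1} = I$.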
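The rank-one update can be checked numerically. The sketch below assumes small dense matrices stored as lists of rows and compares the Sherman–Morrison update $A^{-1} - \frac{A^{-1}\mathbf{u}\mathbf{v}^{T}A^{-1}}{1 + \mathbf{v}^{T}A^{-1}\mathbf{u}}$ against a direct inverse of $A + \mathbf{u}\mathbf{v}^{T}$; all function names are hypothetical helpers written for this illustration.

```python
def inverse_2x2(m):
    """Closed-form inverse of a 2x2 matrix (used only to check the result)."""
    (a, b), (c, d) = m
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


def sherman_morrison(a_inv, u, v):
    """Return (A + u v^T)^{-1} given A^{-1}, assuming 1 + v^T A^{-1} u != 0."""
    n = len(u)
    a_inv_u = [sum(a_inv[i][k] * u[k] for k in range(n)) for i in range(n)]  # A^{-1} u
    v_a_inv = [sum(v[k] * a_inv[k][j] for k in range(n)) for j in range(n)]  # v^T A^{-1}
    denom = 1 + sum(v[k] * a_inv_u[k] for k in range(n))
    if denom == 0:
        raise ValueError("A + u v^T is singular")
    return [[a_inv[i][j] - a_inv_u[i] * v_a_inv[j] / denom for j in range(n)]
            for i in range(n)]


A = [[4.0, 1.0], [2.0, 3.0]]
u = [1.0, 2.0]
v = [3.0, 1.0]

updated = sherman_morrison(inverse_2x2(A), u, v)
direct = inverse_2x2([[A[i][j] + u[i] * v[j] for j in range(2)] for i in range(2)])
```

The update needs only matrix-vector products, which is why it is attractive when $A^{-1}$ is already available.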

Examples

Updating Fitted Least Square Estimator

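The Sherman–Morrison formula can be used for updating a fitted least squares estimator.

Let $\hat{\boldsymbol{\beta}} = (X^{T}X)^{-1}X^{T}\mathbf{y}$ be the least squares estimator of $\boldsymbol{\beta}$, and let the matrices with a new data point $(\mathbf{x}, y_{new})$ be $X_{*} = \begin{bmatrix} X \\ \mathbf{x}^{T} \end{bmatrix}$ and $\mathbf{y}_{*} = \begin{bmatrix} \mathbf{y} \\ y_{new} \end{bmatrix}$. Then, $$\hat{\boldsymbol{\beta}}_{*} = (X^{T}X + \mathbf{x}\mathbf{x}^{T})^{-1}(X^{T}\mathbf{y} + \mathbf{x}y_{new})$$

Let $P = (X^{T}X)^{-1}$ for convenience. Then, by the Sherman–Morrison formula, $$(X^{T}X + \mathbf{x}\mathbf{x}^{T})^{-1} = P - \frac{P\mathbf{x}\mathbf{x}^{T}P}{1 + \mathbf{x}^{T}P\mathbf{x}}$$

So, by expansion, $$\hat{\boldsymbol{\beta}}_{*} = \hat{\boldsymbol{\beta}} + \frac{P\mathbf{x}}{1 + \mathbf{x}^{T}P\mathbf{x}}(y_{new} - \mathbf{x}^{T}\hat{\boldsymbol{\beta}})$$

So, $\hat{\boldsymbol{\beta}}_{*}$ can be obtained without an additional inverse matrix calculation.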

Facts

Sherman–Morrison formula is a special case of the Woodbury Formula

Matrix Inversion Lemma

Definition

where the size of matrices are is , is , and is and should be invertible.

The inverse of a rank-k correction of some matrix can be computed by doing a rank-k correction to the inverse of the original matrix

Proof

Start by the matrix

The decomposition of the original matrix become Inverting both sides gives^[Diagonalizable Matrix]

The decomposition of the original matrix become Again inverting both sides,

The first-row first-column entry of RHS of (1) and (2) above gives the Woodbury formula

Moore-Penrose Inverse

Definition

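For a matrix $A$, the Moore-Penrose inverse of $A$ is a matrix $A^{+}$ satisfying the following conditions

- $AA^{+}A = A$

- $A^{+}AA^{+} = A^{+}$

- $(AA^{+})^{*} = AA^{+}$

- $(A^{+}A)^{*} = A^{+}A$

Facts

The pseudoinverse is defined and unique for all matrices whose entries are real or complex numbers.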

If the matrix is a square matrix, then is also a square matrix and

Partitioned Matrix

Block Matrix

Definition

A matrix that is interpreted as having been broken into sections called blocks or sub-matrices

Operations

Addition, Subtraction

Scalar Multiplication

Matrix Multiplication

Determinant

Let or be invertible matrices

Eigenvalues and Eigenvectors

Eigendecomposition

Definition

where is a matrix and

If $A\mathbf{v}$ is a scalar multiple of a non-zero vector $\mathbf{v}$, that is $A\mathbf{v} = \lambda\mathbf{v}$, then $\lambda$ is the eigenvalue and $\mathbf{v}$ is the eigenvector.

Characteristic Polynomial

The values $\lambda$ satisfying the characteristic equation $\det(A - \lambda I) = 0$ are the eigenvalues of the matrix $A$.

Eigenspace

The set of all eigenvectors of $A$ corresponding to the same eigenvalue $\lambda$, together with the zero vector.

The Kernel of the matrix $A - \lambda I$.

Eigenvector: a non-zero vector in the eigenspace

Algebraic Multiplicity

Let $\lambda_{i}$ be an eigenvalue of an $n \times n$ matrix $A$. The algebraic multiplicity of the eigenvalue is its multiplicity as a root of the Characteristic Polynomial, that is, the largest $k$ such that $(\lambda - \lambda_{i})^{k}$ divides that polynomial.

Geometric Multiplicity

Let $\lambda_{i}$ be an eigenvalue of an $n \times n$ matrix $A$. The geometric multiplicity of the eigenvalue is the dimension of the Eigenspace associated with the eigenvalue.

Computation

- Find the solutions (eigenvalues) of the Characteristic Polynomial.

- Find the solutions (eigenvectors) of the Under-Constrained System using the found eigenvalue.
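The two steps above can be sketched for a real $2 \times 2$ matrix, where the characteristic polynomial is $\lambda^{2} - \operatorname{tr}(A)\lambda + \det(A)$. This is a minimal illustration that assumes real eigenvalues; the function name `eigen_2x2` is hypothetical.

```python
import math


def eigen_2x2(m):
    """Eigenvalues and eigenvectors of a real 2x2 matrix with real eigenvalues."""
    (a, b), (c, d) = m
    trace, det = a + d, a * d - b * c
    disc = trace ** 2 - 4 * det
    if disc < 0:
        raise ValueError("complex eigenvalues are not handled in this sketch")
    root = math.sqrt(disc)
    # Step 1: roots of lambda^2 - trace * lambda + det = 0.
    values = [(trace + root) / 2, (trace - root) / 2]
    vectors = []
    for lam in values:
        # Step 2: solve (A - lam I) v = 0 using a non-zero row (p, q): v = (-q, p).
        p, q = a - lam, b
        if p == 0 and q == 0:
            p, q = c, d - lam
        vectors.append((1.0, 0.0) if p == 0 and q == 0 else (-q, p))
    return values, vectors


values, vectors = eigen_2x2([[2, 1], [1, 2]])
```

For this example the eigenvalues sum to the trace and multiply to the determinant, in line with the facts listed in this section.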

Facts

There exists at least one eigenvector corresponding to the eigenvalue

Eigenvectors corresponding to different eigenvalues are always linearly independent.

When is a normal or real Symmetric Matrix, the eigendecomposition is called Spectral Decomposition

The Trace of the matrix is equal to the sum of the eigenvalues of the matrix.

The Determinant of the matrix is equal to the product of the eigenvalues of the matrix.

Not all matrices have linearly independent eigenvectors.

When holds,

- the eigenvalues of are and the eigenvectors of the matrix are the same as .

- the eigenvalues of is

- the eigenvalues of is

- the eigenvalues of is

- the eigenvalues of is

An eigenvalue's Geometric Multiplicity cannot exceed its Algebraic Multiplicity.

The matrix $A$ is diagonalizable if and only if the Geometric Multiplicity is equal to the Algebraic Multiplicity for all eigenvalues.

Let $A$ be a symmetric matrix. Then, $A$ has $r$ eigenvalues equal to $1$ and the rest zero if and only if $A$ is idempotent with rank $r$.

The non-zero eigenvalues of $AB$ are the same as those of $BA$.

Quadratic Forms and Positive Definite Matrix

Quadratic Form

Definition

where is a Symmetric Matrix

A mapping where is a Module on Commutative Ring that has the following properties.

Matrix Expressions

Facts

Let be a Random Vector and be a symmetric matrix of constants. If and , then the expectation of the quadratic form is

Let be a Random Vector and be a symmetric matrix of constants. If and , , and , where , then the variance of the quadratic form is where is the column vector of diagonal elements of .

If and ‘s are independent, then If and ‘s are independent, then

Let , , where is a Symmetric Matrix and , then the MGF of is where ‘s are non-zero eigenvalue of

Let , where is Positive-Definite Matrix, then

Let , , where is a Symmetric Matrix and , then where

Let , , where are symmetric matrices, then are independent if and only if

Let , where are quadratic forms in Random Sample from If and is non-negative, then

- are independent

Let , , where , where , then

Positive-Definite Matrix

Definition

A matrix $A$ for which $\mathbf{x}^{T}A\mathbf{x}$ is positive for every non-zero column vector $\mathbf{x}$ is a positive-definite matrix.

Facts

Let , then

- where is a matrix.

- The diagonal elements of are positive.

- For a Symmetric Matrix , for sufficiently small

where is a non-singular matrix.

all the leading minor determinants of are positive.

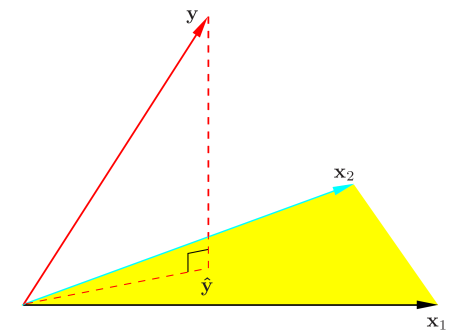

Projection and Decomposition of Matrix

Projection Matrix

Definition

For some vector , is the projection of onto

Facts

The projection matrix is a symmetric Idempotent Matrix.

Consider a Symmetric Matrix $A$, then $A$ is idempotent with rank $r$ if and only if $r$ eigenvalues are $1$ and $n - r$ eigenvalues are $0$.

The projection matrix is a Positive Semi-Definite Matrix.

If $P_{1}$ and $P_{2}$ are projection matrices, and $P_{1} - P_{2}$ is a Positive Semi-Definite Matrix, then

- $P_{1} - P_{2}$ is a projection matrix.

QR Decomposition

Definition

Decomposition of a matrix $A$ into a product $A = QR$ of an orthonormal matrix $Q$ and an upper triangular matrix $R$.

Computation

Using the Gram Schmidt Orthonormalization

QR decomposition can be computed by Gram Schmidt Orthonormalization, where $Q$ is the matrix of orthonormal column vectors obtained by the orthonormalization of the columns of $A$ and $R = Q^{T}A$.
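A minimal sketch of this computation, assuming a real matrix $A$ with linearly independent columns stored as a list of rows. It uses classical Gram-Schmidt, which is easy to read but less numerically stable than the modified variant or Householder reflections; the function name `qr_gram_schmidt` is hypothetical.

```python
import math


def qr_gram_schmidt(a):
    """Return (Q, R) with A = Q R, Q having orthonormal columns, R upper triangular."""
    m, n = len(a), len(a[0])
    cols = [[a[i][j] for i in range(m)] for j in range(n)]
    q_cols = []
    r = [[0.0] * n for _ in range(n)]
    for j, col in enumerate(cols):
        w = list(col)
        for k, q in enumerate(q_cols):
            # r_kj = q_k^T a_j is the component of a_j along q_k.
            r[k][j] = sum(qi * ci for qi, ci in zip(q, col))
            w = [wi - r[k][j] * qi for wi, qi in zip(w, q)]
        # What remains is orthogonal to all previous q_k; normalize it.
        r[j][j] = math.sqrt(sum(wi * wi for wi in w))
        q_cols.append([wi / r[j][j] for wi in w])
    q = [[q_cols[j][i] for j in range(n)] for i in range(m)]
    return q, r


A = [[1.0, 1.0], [1.0, 0.0], [0.0, 1.0]]
Q, R = qr_gram_schmidt(A)
```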

Cholesky Decomposition

Definition

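Decomposition of a Positive-Definite Matrix $A$ into the product $A = LL^{*}$ of a lower triangular matrix $L$ and its Conjugate Transpose.

Computation

Let $A$ be a real Positive-Definite Matrix. Then, $A = LL^{T}$. By setting the first-row first-column entry $l_{11} = \sqrt{a_{11}}$, we can find the other entries by substitution. In summary, $l_{jj} = \sqrt{a_{jj} - \sum_{k=1}^{j-1} l_{jk}^{2}}$, $l_{ij} = \frac{1}{l_{jj}}\left(a_{ij} - \sum_{k=1}^{j-1} l_{ik}l_{jk}\right)$ for $i > j$.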
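The column-by-column substitution can be sketched as follows, assuming a real symmetric positive-definite matrix stored as a list of rows. The function name `cholesky` is a hypothetical helper for this illustration, not a library call.

```python
import math


def cholesky(a):
    """Return lower triangular L with A = L L^T for a real positive-definite A."""
    n = len(a)
    l = [[0.0] * n for _ in range(n)]
    for j in range(n):
        # Diagonal entry: l_jj = sqrt(a_jj - sum_k l_jk^2).
        s = a[j][j] - sum(l[j][k] ** 2 for k in range(j))
        if s <= 0:
            raise ValueError("matrix is not positive definite")
        l[j][j] = math.sqrt(s)
        # Entries below the diagonal in column j.
        for i in range(j + 1, n):
            l[i][j] = (a[i][j] - sum(l[i][k] * l[j][k] for k in range(j))) / l[j][j]
    return l


A = [[4.0, 12.0, -16.0],
     [12.0, 37.0, -43.0],
     [-16.0, -43.0, 98.0]]
L = cholesky(A)
```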

Spectral Theorem

Definition

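$$A = U\Lambda U^{*}$$ where $U$ is a Unitary Matrix, and $\Lambda = \operatorname{diag}(\lambda_{1}, \dots, \lambda_{n})$

A matrix $A$ is a Hermitian Matrix if and only if $A$ is a Unitary Diagonalizable Matrix with real eigenvalues.

Facts

Every Hermitian Matrix is diagonalizable, and has real-valued Eigenvalues and an orthonormal eigenvector matrix.

For the Hermitian Matrix $A$, every eigenvalue is real.

Proof For the eigenvalue equation $A\mathbf{v} = \lambda\mathbf{v}$, $\mathbf{v}^{*}A\mathbf{v} = \lambda\mathbf{v}^{*}\mathbf{v} = \lambda\|\mathbf{v}\|^{2}$. Since $A$ is Hermitian, $(\mathbf{v}^{*}A\mathbf{v})^{*} = \mathbf{v}^{*}A^{*}\mathbf{v} = \mathbf{v}^{*}A\mathbf{v}$, so $\mathbf{v}^{*}A\mathbf{v}$ is real, and the norm is always real. Therefore, every eigenvalue is real.

For the Hermitian Matrix $A$, the eigenvectors from different eigenvalues are orthogonal.

Proof Let $A\mathbf{v}_{1} = \lambda_{1}\mathbf{v}_{1}$ and $A\mathbf{v}_{2} = \lambda_{2}\mathbf{v}_{2}$ where $\lambda_{1} \neq \lambda_{2}$. By the property of a Hermitian matrix, $\mathbf{v}_{1}^{*}A\mathbf{v}_{2} = \lambda_{2}\mathbf{v}_{1}^{*}\mathbf{v}_{2}$ and $\mathbf{v}_{1}^{*}A\mathbf{v}_{2} = (A\mathbf{v}_{1})^{*}\mathbf{v}_{2} = \lambda_{1}\mathbf{v}_{1}^{*}\mathbf{v}_{2}$, using that $\lambda_{1}$ is real. So, $(\lambda_{1} - \lambda_{2})\mathbf{v}_{1}^{*}\mathbf{v}_{2} = 0$. Since $\lambda_{1} \neq \lambda_{2}$, $\mathbf{v}_{1}^{*}\mathbf{v}_{2} = 0$. Therefore, $\mathbf{v}_{1}, \mathbf{v}_{2}$ are orthogonal.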

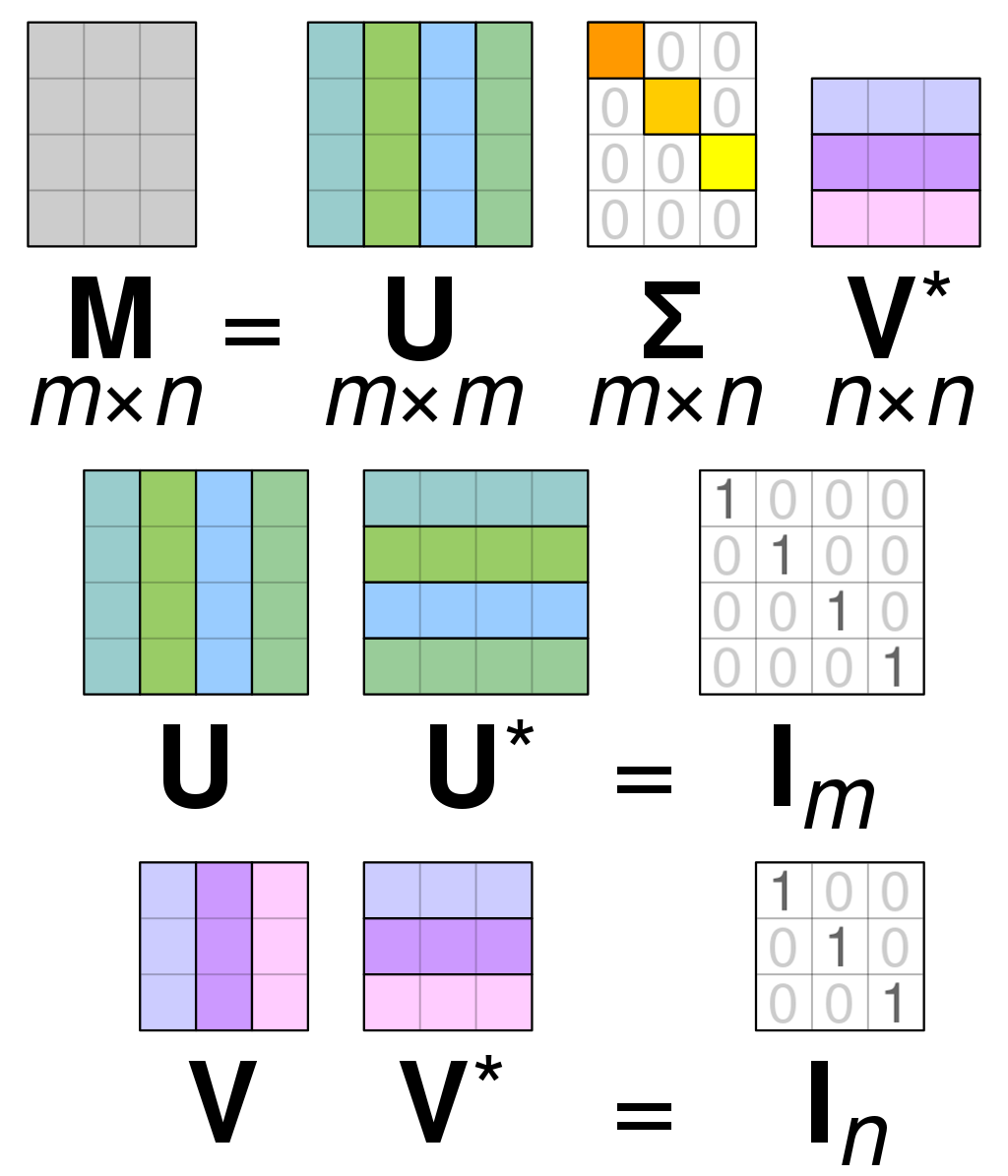

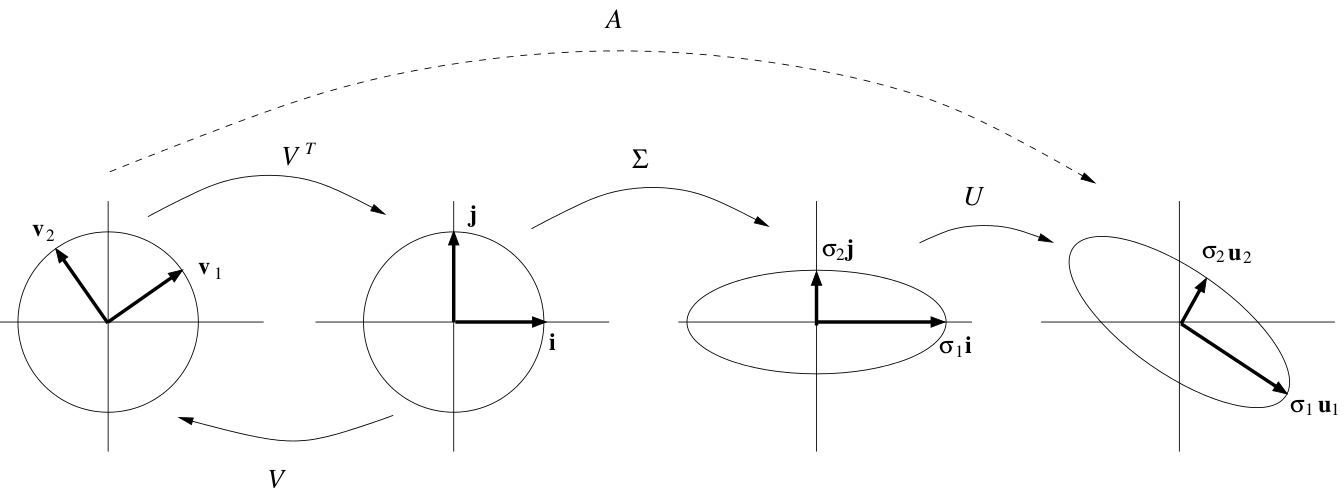

Singular Value Decomposition

Definition

An arbitrary $m \times n$ matrix $A$ can be decomposed as $A = U\Sigma V^{T}$.

For where and and is Diagonal Matrix

For where and and is Diagonal Matrix

Calculation

For matrix

- $U$ is the matrix of orthonormal eigenvectors of $AA^{T}$

- $V$ is the matrix of orthonormal eigenvectors of $A^{T}A$

- $\Sigma$ is the Diagonal Matrix made of the square roots of the non-zero eigenvalues of $AA^{T}$ and $A^{T}A$, sorted in descending order.

If the eigendecomposition is , then the eigenvalues and the eigenvectors are orthonormal by Spectral Theorem. If the , then . Where are called the singular values.

Now, the Orthonormal Matrix is calculated using the singular values. Where is the Orthonormal Matrix of eigenvectors corresponding to the non-zero eigenvalues and is the Orthonormal Matrix of eigenvectors corresponding to zero eigenvalues where each , and is a rectangular Diagonal Matrix.

Since is an orthonormal matrix and is a Diagonal Matrix, . Now, the Orthonormal Matrix is calculated using the linear system and the null space of , Where is the Orthonormal Matrix of the vectors obtained from the system equation And is the Orthonormal Matrix of the vectors . which is corresponding to zero eigenvalues

The Orthonormal Matrix can also be formed by the eigenvectors of similarly to calculating of .

Facts

$U, V$ are Unitary Matrices.

Let $A$ be a real symmetric Positive Semi-Definite Matrix. Then, the Eigendecomposition (Spectral Decomposition) of $A$ and the singular value decomposition of $A$ are equal.

where and are non-negative and the shape of the every matrix is

Visualization

Every matrix $A = U\Sigma V^{T}$ can be decomposed as a composition of three transformations, applied from right to left:

- $V^{T}$: rotation and reflection

- $\Sigma$: scaling

- $U$: rotation and reflection

Miscellanea in Matrix

Centering Matrix

Definition

$$C_{n} = I_{n} - \frac{1}{n}J_{n}$$ where $J_{n}$ is the $n \times n$ matrix where all elements are $1$

Summing Vector

Facts

where is a sample variance of

Derivatives of Matrix

Definition

Differentiation by vector

where

Differentiation by Matrix

where

Calculation

Let be a vector function, be an -dimensional vector, then By the definition of derivative, we hold where

Examples

Let be -dimensional vectors, then

Let be an -dimensional vector and be an matrix, then

Let be an -dimensional vector and be an Symmetric Matrix, then the derivative of quadratic form is

Hessian Matrix

Definition

A square matrix of second-order partial derivatives of a scalar-valued function $f$, with entries $H_{ij} = \frac{\partial^{2} f}{\partial x_{i}\partial x_{j}}$.

Vectorization

Definition

Let be an matrix, then

A linear transformation which converts the matrix into a vector.

Facts

Let be matrices, then

Kronecker Product

Definition

Let $A$ be an $m \times n$ matrix and $B$ be a $p \times q$ matrix, then the Kronecker product $A \otimes B$ is the $pm \times qn$ block matrix whose $(i, j)$ block is $a_{ij}B$.

Facts

Let be matrices, then

For square matrices $A$ and $B$, each eigenvalue of $A \otimes B$ is the product of an eigenvalue of $A$ and an eigenvalue of $B$.

Norms

Norm

Definition

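A real-valued function $\|\cdot\|$ on a vector space $V$ with the following properties, for all $\mathbf{x}, \mathbf{y} \in V$ and every scalar $\alpha$

- Positive definiteness: $\|\mathbf{x}\| \geq 0$, and $\|\mathbf{x}\| = 0$ if and only if $\mathbf{x} = \mathbf{0}$

- Absolute homogeneity: $\|\alpha\mathbf{x}\| = |\alpha|\|\mathbf{x}\|$

- Subadditivity or triangle inequality: $\|\mathbf{x} + \mathbf{y}\| \leq \|\mathbf{x}\| + \|\mathbf{y}\|$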

Vector

Real

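Let $\mathbf{x}$ be an $n$-dimensional vector, then the $p$-norm of $\mathbf{x}$ is defined as $$\|\mathbf{x}\|_{p} = \left(\sum_{i=1}^{n} |x_{i}|^{p}\right)^{1/p}$$

Complex

Let $\mathbf{x}$ be an $n$-dimensional complex vector, then the $p$-norm of $\mathbf{x}$ is defined as $$\|\mathbf{x}\|_{p} = \left(\sum_{i=1}^{n} |x_{i}|^{p}\right)^{1/p}$$ where $|x_{i}|$ is the complex modulus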

Function

: norm of

Facts

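A norm can be induced by an inner product: $\|\mathbf{x}\| = \sqrt{\langle \mathbf{x}, \mathbf{x} \rangle}$.

Schatten Norm

Definition

Let $A$ be an $m \times n$ matrix, then the Schatten $p$-norm of $A$ is defined as $$\|A\|_{p} = \left(\sum_{i=1}^{\min(m, n)} \sigma_{i}^{p}\right)^{1/p}$$ where $\sigma_{i}$ is the $i$-th singular value of $A$

Facts

Frobenius Norm

Definition

Let $A$ be an $m \times n$ matrix, then the Frobenius norm of $A$ is defined as $$\|A\|_{F} = \sqrt{\sum_{i=1}^{m}\sum_{j=1}^{n} |a_{ij}|^{2}} = \sqrt{\operatorname{tr}(A^{*}A)}$$

Matrix p-Norm

Definition

Let $A$ be an $m \times n$ matrix, then the matrix p-norm of $A$ is defined as $$\|A\|_{p} = \sup_{\mathbf{x} \neq \mathbf{0}} \frac{\|A\mathbf{x}\|_{p}}{\|\mathbf{x}\|_{p}}$$

Facts

When $p = 2$, the norm is the Spectral Norm.

Spectral Norm

Definition

Let $A$ be an $m \times n$ matrix, then the spectral norm of $A$ is defined as $$\|A\|_{2} = \sqrt{\lambda_{\max}(A^{*}A)} = \sigma_{\max}(A)$$

Nuclear Norm

Definition

Let $A$ be an $m \times n$ matrix, then the nuclear norm of $A$ is defined as $$\|A\|_{*} = \sum_{i=1}^{\min(m, n)} \sigma_{i}$$ where $\sigma_{i}$ is the $i$-th singular value of $A$

L-pq Norm

Definition

The $L_{p,q}$ norm is an entry-wise matrix norm. $$\|A\|_{p,q} = \left(\sum_{j=1}^{n}\left(\sum_{i=1}^{m} |a_{ij}|^{p}\right)^{q/p}\right)^{1/q}$$ where $p, q \geq 1$

Facts

When $p = q = 1$, the norm is the sum of the absolute values of every entry and is called the matrix $L_{1}$ norm.

Rayleigh-Ritz Theorem

Definition

Let $A$ be an $n \times n$ Hermitian Matrix with eigenvalues sorted in descending order $\lambda_{1} \geq \dots \geq \lambda_{n}$, then for every non-zero $\mathbf{x} \in \mathbb{C}^{n}$, $$\lambda_{n} \leq \frac{\mathbf{x}^{*}A\mathbf{x}}{\mathbf{x}^{*}\mathbf{x}} \leq \lambda_{1}$$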

Elementary Statistical Theory

Random Variable and Random Sampling

Random Variable

Definition

A random variable is a function whose inverse function is -measurable for the two measurable spaces and .

The inverse image of an arbitrary Borel set of Codomain is an element of sigma field .

Notations

Consider a probability space

- Outcomes:

- Set of outcomes (Sample space):

- Events:

- Set of events (Sigma-Field):

- Probabilities:

- Random variable:

For a random variable on a Probability Space

- if and only if the Distribution of is

- if and only if the Distribution Function of is

For a random variable on Probability Space and another random variable on Probability Space

Expected Value

Definition

The expected value of the Random Variable on Probability Space

Continuous

where is a PDF of Random Variable

The expected value of the Random Variable when satisfies absolute continuous over ,

Discrete

where is a PMF of Random Variable

The expected value of the Random Variable when satisfies absolute continuous over , In other words, has at most countable jumps.

Expected Value of a Function

Continuous

Discrete

Properties

Linearity

Random Variables

If , then

Matrix of Random Variables

Let be a matrices of random variables, be matrices of constants, and a matrix of constant. Then,

Notations

Expression Discrete Continuous Expression for the event and the probability Expression for the measurable space and the distribution

Multivariate Distribution

Definition

Joint CDF

The Distribution Function of a Random Vector is defined as where

Joint PDF

The joint probability Distribution Function of discrete Random Vector

The joint probability Distribution Function of continuous Random Vector

Expected Value of a Multivariate Function

Continuous

$$E[g(\mathbf{X})] = \idotsint\limits_{x_{n} \dots x_{1}} g(\mathbf{x})f(\mathbf{x})dx_{1} \dots dx_{n}$$

Discrete

Marginal Distribution of a Multivariate Function

Marginal CDF

Marginal PDF

$$f_{X_{1}}(x_{1}) = \idotsint\limits_{x_{n} \dots x_{2}} f(x_{1}, x_{2}, \dots, x_{n})dx_{2} \dots dx_{n}$$

Conditional Distribution of a Multivariate Function

Properties

Linearity

If , then

Covariance

Definition

Properties

Covariance with Itself

Covariance of Linear Combinations

Distribution Function

Definition

A distribution function is a function for the Random Variable on Probability Space

Facts

Proof By definition, So, defining is the Equivalence Relation to defining

Since is a Pi-System, is uniquely determined by by Extension from Pi-System

Now, is a Measurable Space induced by . Therefore, defining on is equivalent to the defining on

Distribution function(CDF) has the following properties

- Monotonic increasing:

- Right-continuous:

If a function satisfies the following properties, then is a distribution function(CDF) of some Random Variable

- Monotonic increasing:

- Right-continuous:

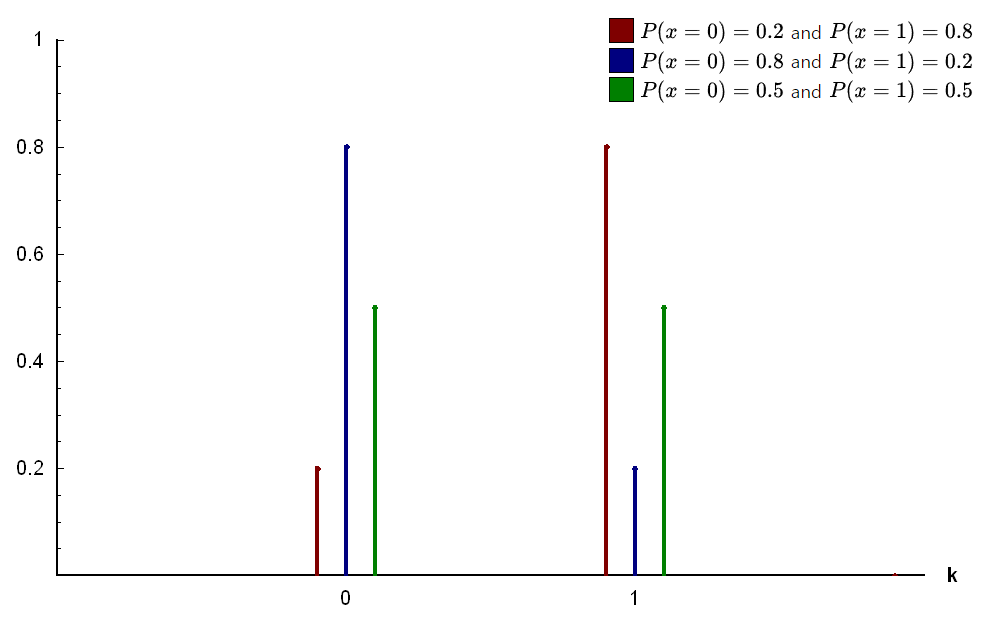

Bernoulli Distribution

Definition

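$$X \sim \operatorname{Bernoulli}(p), \quad P(X = x) = p^{x}(1-p)^{1-x}, \quad x \in \{0, 1\}$$

where $p$ is the probability of success

The number of successes in a single trial with success probability $p$

Bernoulli Process

The i.i.d. Random Vector $(X_{1}, \dots, X_{n})$ with Bernoulli distribution

Properties

Mean

$$E(X) = p$$

Variance

$$\operatorname{Var}(X) = p(1-p)$$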

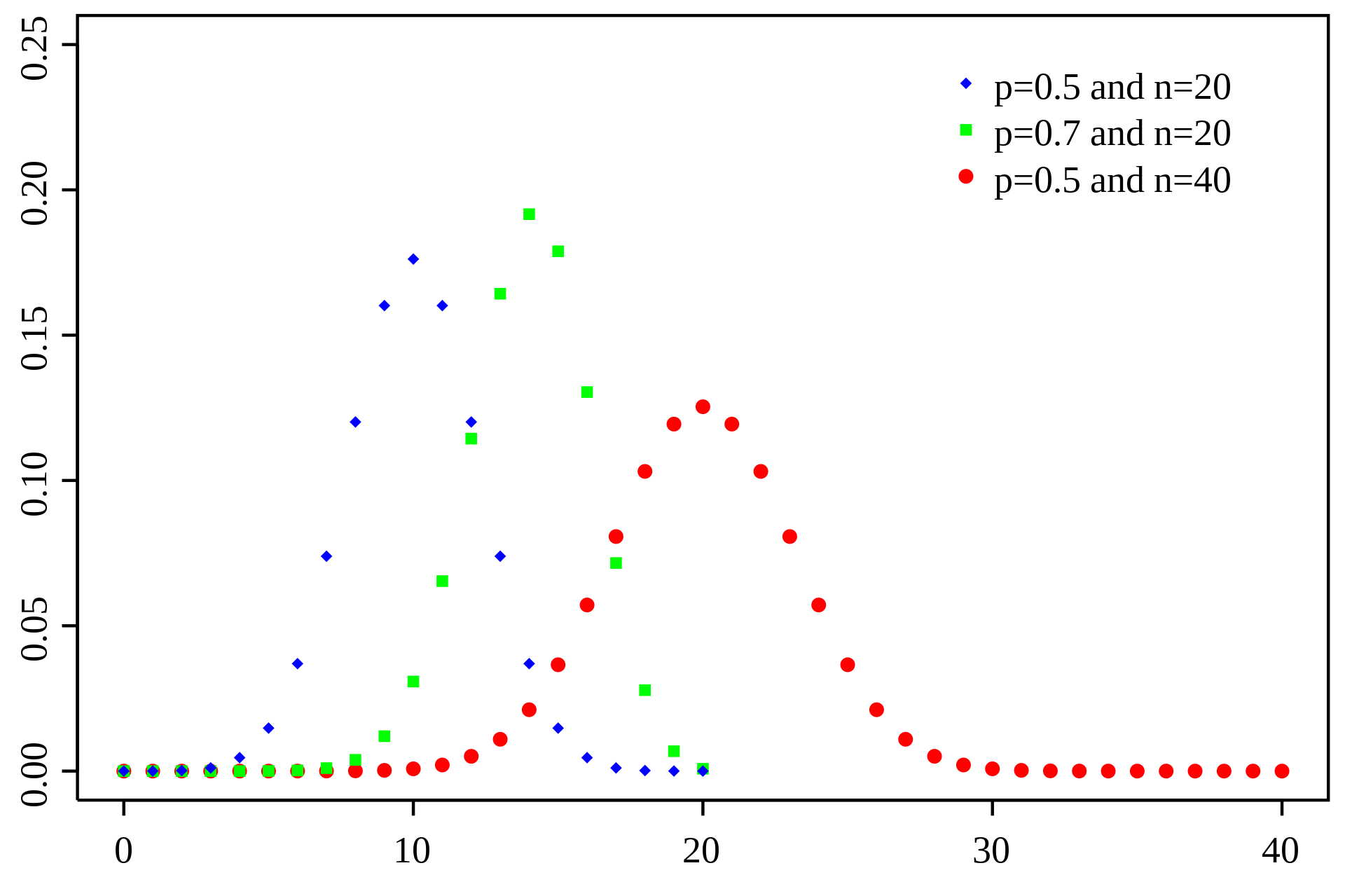

Binomial Distribution

Definition

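$$X \sim B(n, p), \quad P(X = x) = \binom{n}{x}p^{x}(1-p)^{n-x}, \quad x = 0, 1, \dots, n$$

where $n$ is the length of the Bernoulli process, and $p$ is the probability of success

The number of successes in a length-$n$ Bernoulli process with success probability $p$

Properties

Mean

$$E(X) = np$$

Variance

$$\operatorname{Var}(X) = np(1-p)$$

MGF

$$M_{X}(t) = (1 - p + pe^{t})^{n}$$

Facts

Let $X_{1}, \dots, X_{k}$ be independent random variables following binomial distributions $B(n_{i}, p)$ with a common $p$, then $\sum_{i=1}^{k} X_{i} \sim B\left(\sum_{i=1}^{k} n_{i}, p\right)$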

Multinomial Distribution

Definition

where is the number of trials, is a probability of category

Distribution that describes the probability of observing a specific combination of outcomes

Properties

where , , and

MGF

Marginal PDF

Each one-variable marginal pdf is Binomial Distribution, each two-variables marginal pdf is Trinomial Distribution, and so on.

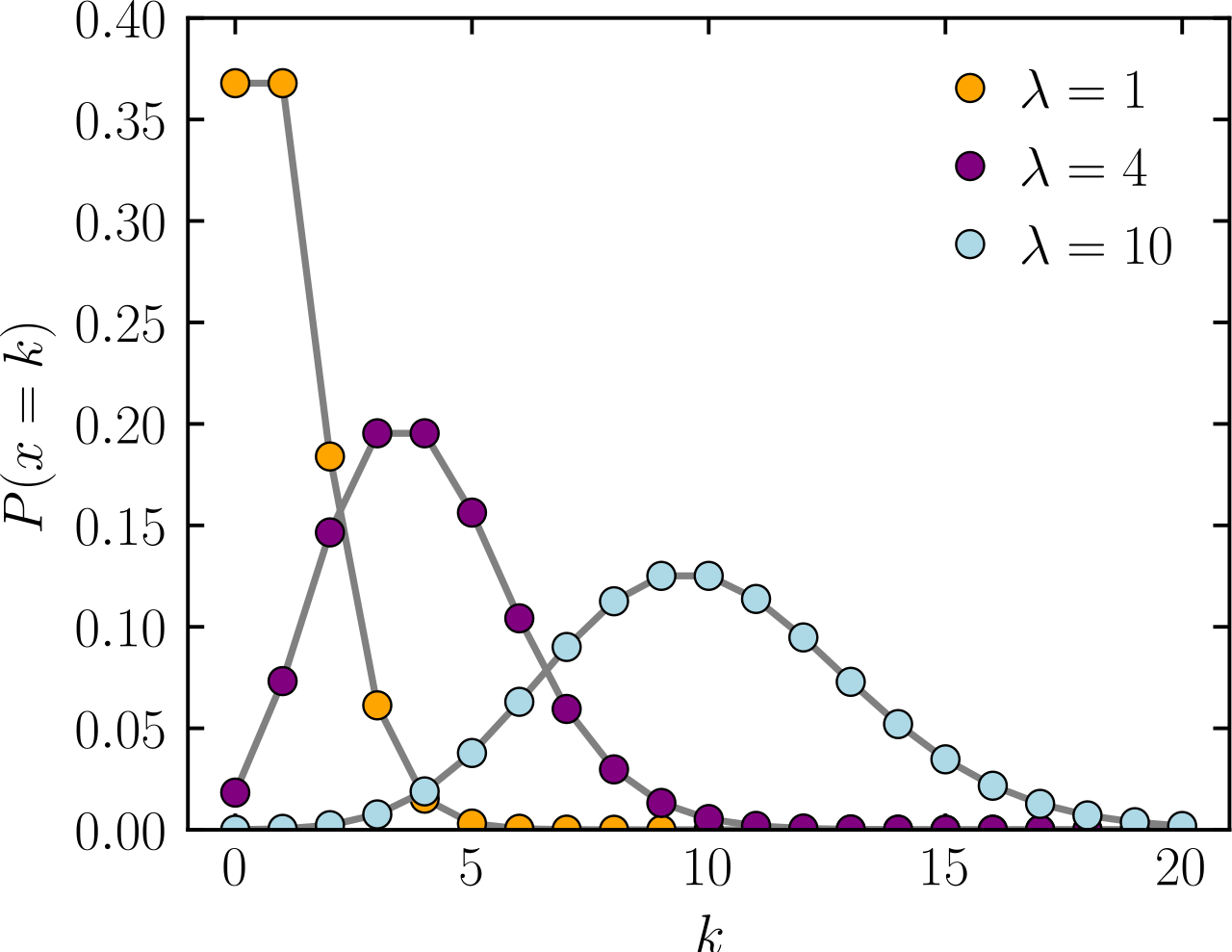

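Poisson Distribution

Definition

$$X \sim \operatorname{Poisson}(\lambda)$$

where $\lambda$ is the average number of occurrences in a fixed interval of time

The number of occurrences in a fixed interval of time with mean $\lambda$

Properties

$$P(X = x) = \frac{\lambda^{x}e^{-\lambda}}{x!}$$

where $x = 0, 1, 2, \dots$

Mean

$$E(X) = \lambda$$

Variance

$$\operatorname{Var}(X) = \lambda$$

MGF

$$M_{X}(t) = \exp\left(\lambda(e^{t} - 1)\right)$$

Summation

Let $X_{i} \sim \operatorname{Poisson}(\lambda_{i})$, and the $X_{i}$'s are independent, then $\sum_{i} X_{i} \sim \operatorname{Poisson}\left(\sum_{i} \lambda_{i}\right)$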

Normal Distribution

Definition

where is the location parameter(mean), and is the scale parameter(variance)

Standard Normal Distribution

Properties

Mean

Variance

MGF

Higher Order Moments

Sum of Normally Distributed Random Variables

Let be independent random variables following normal distribution, then

Relationship with Chi-squared Distribution

Let be a standard normal distribution, then

Facts

Let , then

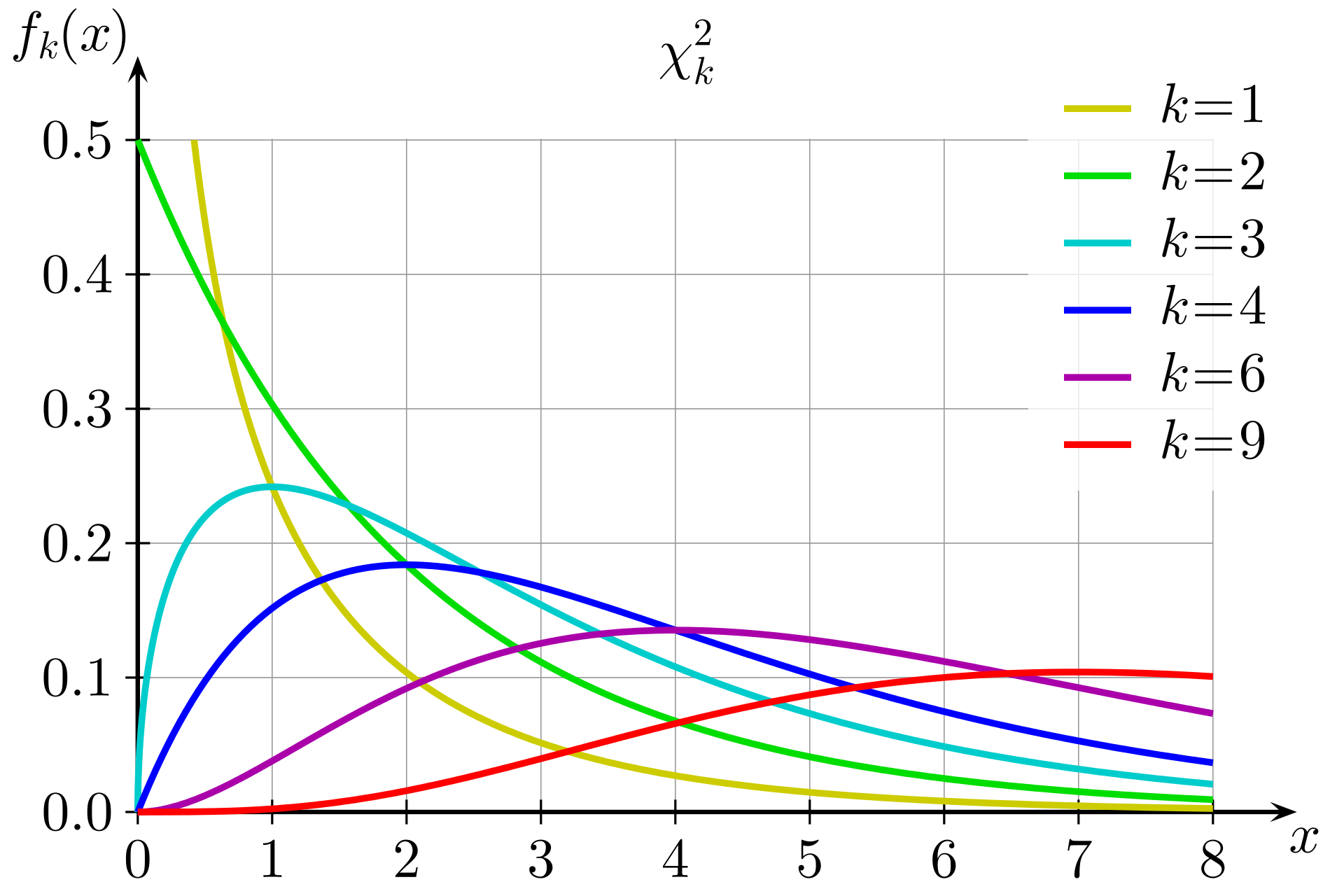

Chi-squared Distribution

Definition

where is the degrees of freedom

The sum of squares of $r$ independent standard normal random variables

Properties

Mean

Variance

MGF

Additivity

Let ‘s are independent chi-squared distributions

Facts

Let and , and is independent of , then

Let , where are quadratic forms in , where each element of the is a Random Sample from If , then

- are independent

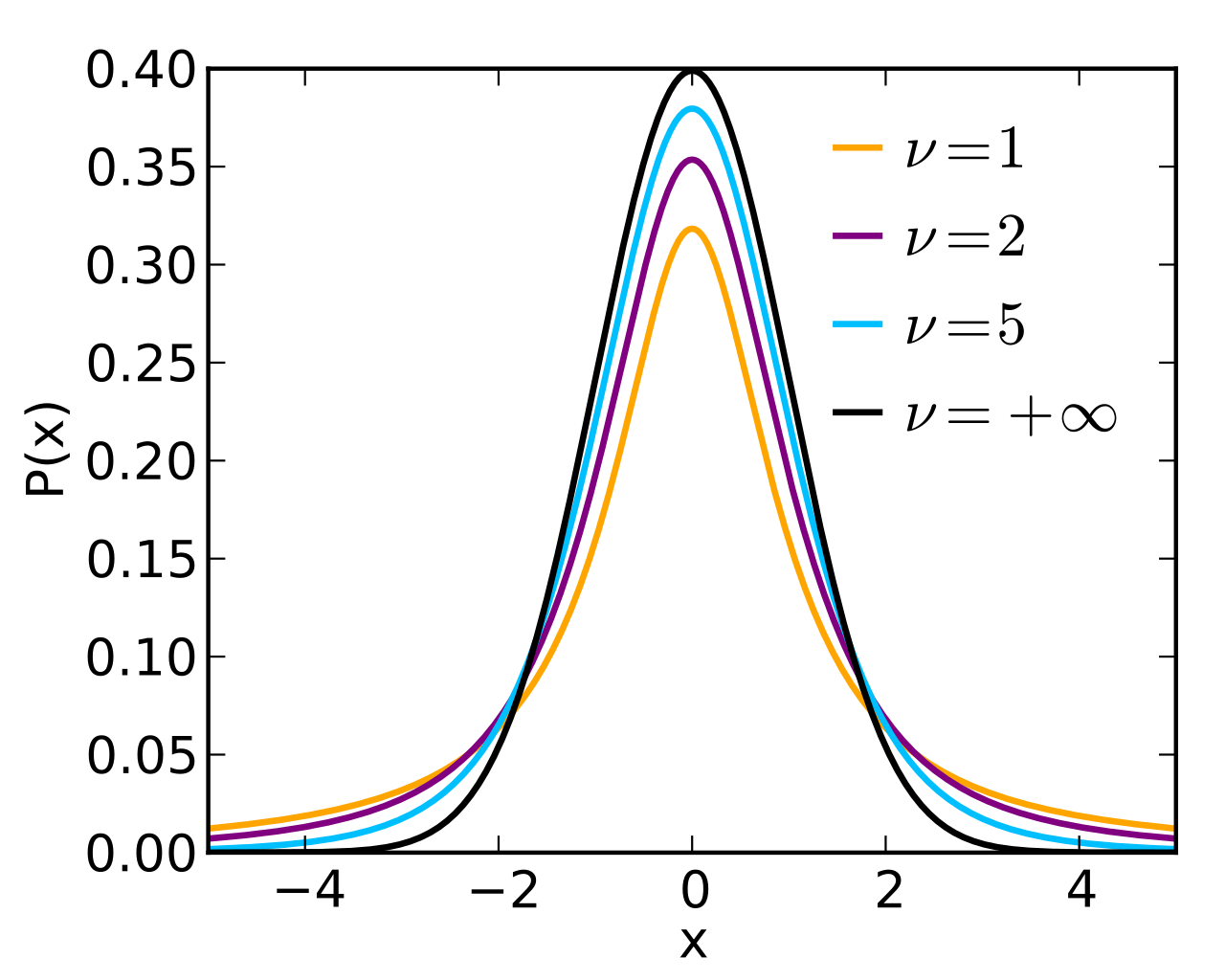

Student's t-Distribution

Definition

Let be a standard normal distribution, be a Chi-squared Distribution, and be independent, then where is the degrees of freedom

Properties

Mean

Variance

F-Distribution

Definition

Let $U \sim \chi^{2}(r_{1})$ and $V \sim \chi^{2}(r_{2})$ be independent random variables following Chi-squared distributions, then $$X = \frac{U / r_{1}}{V / r_{2}} \sim F(r_{1}, r_{2})$$ where $r_{1}, r_{2}$ are the degrees of freedom

Properties

$$f(x) = \frac{\Gamma(\frac{r_{1} + r_{2}}{2}) (\frac{r_{1}}{r_{2}})^{\frac{r_{1}}{2}} x^{\frac{r_{1}}{2}-1}} {\Gamma(\frac{r_{1}}{2}) \Gamma(\frac{r_{2}}{2}) (\frac{r_{1}}{r_{2}}x+1)^{(r_{1} + r_{2})/2}}, \quad x > 0$$

Mean

$$E(X) = \frac{r_{2}}{r_{2} - 2},\quad r_{2}>2$$

Variance

$$\operatorname{Var}(X) = \frac{2r_{2}^{2}(r_{1}+r_{2}-2)}{r_{1} (r_{2}-2)^{2}(r_{2}-4)},\quad r_{2}>4$$

Random Vector

Definition

Column vector whose components are random variables .

Multivariate Normal Distribution

Definition

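$$\mathbf{X} \sim N_{p}(\boldsymbol{\mu}, \Sigma)$$ where $p$ is the number of dimensions, $\boldsymbol{\mu}$ is the vector of location parameters (mean vector), and $\Sigma$ is the matrix of scale parameters (covariance matrix)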

Standard Multivariate Normal Distribution

MGF

Properties

Mean

Variance

MGF

Affine Transformation

Let be a Random Variable following multivariate normal distribution, be a matrix, and be a dimensional vector, then

Relationship with Chi-squared Distribution

Suppose be a Random Variable following multivariate normal distribution, then

Facts

Let , be an , and be a -dimensional vector, then

Let , , , , where is and is vectors. Then, and are independent

Let , , and , where is , is matrices. and are independent

Statistical Estimation and Testing

Bias of an Estimator

Definition

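Let $X_{1}, \dots, X_{n}$ be a Random Sample from $f(x; \theta)$ where $\theta$ is a parameter, and $T = T(X_{1}, \dots, X_{n})$ be a Statistic. $$\operatorname{Bias}(T) = E(T) - \theta$$

$T$ is an unbiased estimator of $\theta$ if $E(T) = \theta$. An estimator is unbiased if its bias is equal to zero for all values of the parameter $\theta$.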

Consistency

Definition

A Statistic $T_{n}$ is called a consistent estimator of $\theta$ if $T_{n}$ converges in probability to $\theta$.

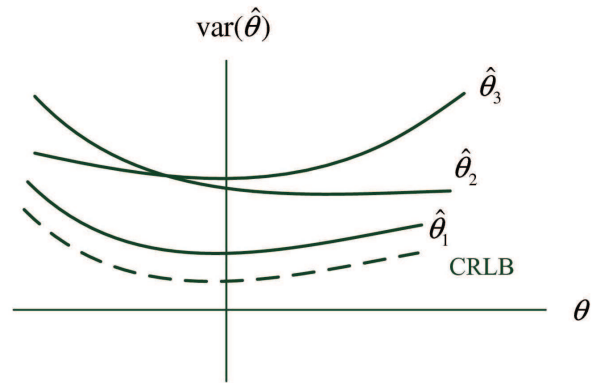

Minimum Variance Unbiased Estimator

Definition

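An unbiased estimator $T^{*}$ satisfying the following is the minimum variance unbiased estimator (MVUE) for $\theta$: $$\operatorname{Var}(T^{*}) \leq \operatorname{Var}(T) \quad \text{for all } \theta$$ where $T$ is any unbiased estimator of $\theta$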

An Unbiased Estimator that has lower variance than any other unbiased estimator for the parameter

Facts

A minimum variance unbiased estimator does not always exist.

If some unbiased estimator's variance is equal to the Rao-Cramer Lower Bound for all $\theta$, then it is a minimum variance unbiased estimator.

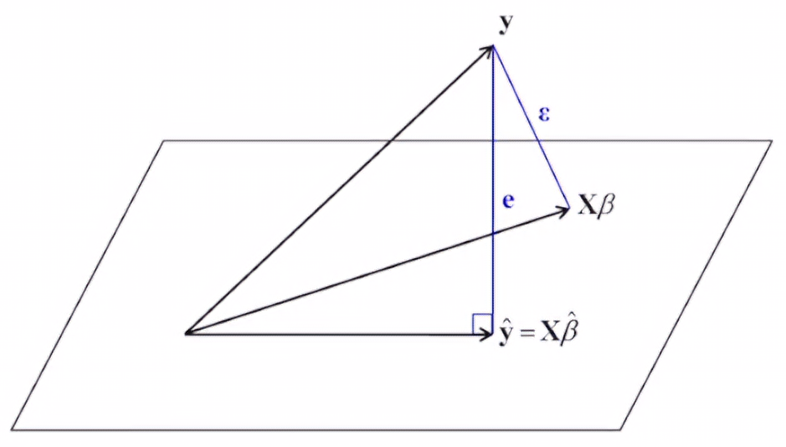

Least Squares Estimator

Definition

Simple Linear Regression Case

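Consider a Simple Linear Regression model $y_{i} = \beta_{0} + \beta_{1}x_{i} + \epsilon_{i}$. The least squares estimator is the estimator that minimizes $\sum_{i=1}^{n}(y_{i} - \beta_{0} - \beta_{1}x_{i})^{2}$.

The least squares estimator of the model is $$\hat{\beta}_{1} = \frac{S_{xy}}{S_{xx}}, \quad \hat{\beta}_{0} = \bar{y} - \hat{\beta}_{1}\bar{x}$$ where $S_{xy} = \sum_{i=1}^{n}(x_{i} - \bar{x})(y_{i} - \bar{y})$ and $S_{xx} = \sum_{i=1}^{n}(x_{i} - \bar{x})^{2}$

Estimation of $\sigma^{2}$

$$\hat{\sigma}^{2} = \frac{1}{n-2}\sum_{i=1}^{n} e_{i}^{2}$$ where the $e_{i} = y_{i} - \hat{\beta}_{0} - \hat{\beta}_{1}x_{i}$ are residuals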
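A minimal sketch of the simple linear regression case, assuming the usual closed forms $\hat{\beta}_{1} = S_{xy}/S_{xx}$, $\hat{\beta}_{0} = \bar{y} - \hat{\beta}_{1}\bar{x}$, and $\hat{\sigma}^{2} = \frac{1}{n-2}\sum e_{i}^{2}$. The function name and the sample data are hypothetical and only serve the illustration.

```python
def simple_least_squares(x, y):
    """Return (beta0_hat, beta1_hat, sigma2_hat) for the model y = beta0 + beta1 x + error."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    s_xx = sum((xi - x_bar) ** 2 for xi in x)
    s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    beta1 = s_xy / s_xx
    beta0 = y_bar - beta1 * x_bar
    # Residuals e_i = y_i - fitted value; n - 2 degrees of freedom remain.
    residuals = [yi - (beta0 + beta1 * xi) for xi, yi in zip(x, y)]
    sigma2_hat = sum(e ** 2 for e in residuals) / (n - 2)
    return beta0, beta1, sigma2_hat


x = [1.0, 2.0, 3.0, 4.0]
y = [2.1, 3.9, 6.2, 7.8]
beta0, beta1, sigma2_hat = simple_least_squares(x, y)
```

Because $\hat{\beta}_{0} = \bar{y} - \hat{\beta}_{1}\bar{x}$, the fitted line passes through $(\bar{x}, \bar{y})$ by construction.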

Multiple Linear Regression Case

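Consider a Multiple Linear Regression model $\mathbf{y} = X\boldsymbol{\beta} + \boldsymbol{\epsilon}$. The least squares estimator is the estimator that minimizes $\|\mathbf{y} - X\boldsymbol{\beta}\|^{2}$.

The least squares estimator of the model is $$\hat{\boldsymbol{\beta}} = (X^{T}X)^{-1}X^{T}\mathbf{y}$$

The fitted response vector is expressed as $\hat{\mathbf{y}} = X\hat{\boldsymbol{\beta}} = H\mathbf{y}$ where $H = X(X^{T}X)^{-1}X^{T}$ is called the hat matrix.

Estimation of $\sigma^{2}$

$$\hat{\sigma}^{2} = \frac{1}{n-p-1}\sum_{i=1}^{n} e_{i}^{2}$$ where $\mathbf{e} = \mathbf{y} - \hat{\mathbf{y}}$, the $e_{i}$'s are residuals, and $p$ is the number of the explanatory variables

Facts

The least squares estimator is a linear combination of the $y_{i}$'s. Let $c_{i} = \frac{x_{i} - \bar{x}}{S_{xx}}$, then $\hat{\beta}_{1} = \sum_{i=1}^{n} c_{i}y_{i}$.

The fitted line always goes through $(\bar{x}, \bar{y})$.

Let $\mathbf{y} = X\boldsymbol{\beta} + \boldsymbol{\epsilon}$, where the $\epsilon_{i}$'s are independent, $E(\epsilon_{i}) = 0$, and $\mu_{2}, \mu_{3}, \mu_{4}$ be the 2nd, 3rd, and 4th Central Moment of $\epsilon_{i}$ respectively. Then, $\hat{\sigma}^{2}$ is the unique non-negative quadratic Unbiased Estimator of $\sigma^{2}$ with minimum variance when the excess kurtosis is $0$ or when the diagonal elements of the hat matrix are equal.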

Maximum Likelihood Estimation

Definition

MLE is the method of estimating the parameters of an assumed Distribution

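Let $X_{1}, \dots, X_{n}$ be a Random Sample with PDF $f(x; \theta)$, where $\theta \in \Omega$, then the MLE of $\theta$ is estimated as $$\hat{\theta} = \arg\max_{\theta \in \Omega} L(\theta) = \arg\max_{\theta \in \Omega} \prod_{i=1}^{n} f(X_{i}; \theta)$$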

Regularity Conditions

- R0: The pdfs are distinct, i.e.

- R1: The pdfs have same supports

- R2: The true value is an interior point in

- R3: The pdf is twice differentiable with respect to

- R4:

- R5: The pdf is three times differentiable with respect to , , and interior point

Properties

Functional Invariance

If is the MLE for , then is the MLE of

Consistency

Under R0 ~ R2 Regularity Conditions, let be a true parameter, is differentiable with respect to , then has a solution such that

Asymptotic Normality

Under the R0 ~ R5 Regularity Conditions, let be Random Sample with PDF , where , be a consistent Sequence of solutions of MLE equation , and , then where is the Fisher Information.

By the asymptotic normality, the MLE estimator is asymptotically efficient under R0 ~ R5 Regularity Conditions

Asymptotic Confidence Interval

By the asymptotic normality of MLE, Thus, confidence interval of for is

Delta method for MLE Estimator

Under the R0 ~ R5 Regularity Conditions, let be a continuous function and , then

Facts

Under R0 and R1 regularity conditions, let be a true parameter, then

Taylor Approximation

Taylor Series

Definition

Taylor Series for One Variable

Let $f: \mathbb{R} \to \mathbb{R}$ be an infinitely differentiable function at the point $c \in \mathbb{R}$, then

$$f(x) = \sum\limits_{n=0}^{\infty} \cfrac{f^{(n)}(c)}{n!}(x-c)^{n}$$

Taylor Series for Two Variables

Let $f: \mathbb{R}^{2} \to \mathbb{R}$ be an infinitely differentiable function at the point $(t_{0}, x_{0})$, then

$$\begin{aligned} f(t, x) &= f(t_{0}, x_{0})\\ &+ \frac{\partial f}{\partial t}(t_{0}, x_{0})(t-t_{0}) + \frac{\partial f}{\partial x}(t_{0}, x_{0})(x-x_{0})\\ &+ \frac{1}{2}\frac{\partial^{2} f}{\partial t^{2}}(t_{0}, x_{0})(t-t_{0})^{2} + \frac{1}{2}\frac{\partial^{2} f}{\partial x^{2}}(t_{0}, x_{0})(x-x_{0})^{2} + \frac{\partial^{2} f}{\partial t \partial x}(t_{0}, x_{0})(x-x_{0})(t-t_{0})\\ &+ \dots \end{aligned}$$

Approximation

Let $f: \mathbb{R} \to \mathbb{R}$ be a $k$-times differentiable function at the point $c \in \mathbb{R}$ and $|x-c| \to 0$, then

$$f(x) = \sum\limits_{n=0}^{k} \cfrac{f^{(n)}(c)}{n!}(x-c)^{n} + o(|x-c|^{k})$$

where $o$ is little-o notation.

Let $f: \mathbb{R} \to \mathbb{R}$ be a $(k+1)$-times differentiable function at any point and $|f^{(k+1)}(x)| \leq M < \infty$, then by Rolle's Theorem

$$f(x) = \sum\limits_{n=0}^{k} \cfrac{f^{(n)}(c)}{n!}(x-c)^{n} + \frac{1}{(k+1)!}f^{(k+1)}(\xi)(x-c)^{k+1}$$

where $\xi$ lies between $c$ and $x$.

Maclaurin Series

$$f(x) = \sum\limits_{n=0}^{\infty} \cfrac{f^{(n)}(0)}{n!}x^{n} = f(0)+f'(0)x + \cfrac{f''(0)}{2!}x^{2}+ \dots +\cfrac{f^{(m)}(0)}{m!}x^m + \dots$$

Taylor Series with $c=0$

Derivation from the Fundamental Theorem of Calculus

Let $f$ be an infinitely differentiable function. By applying the Fundamental Theorem of Calculus (FTC) $n$ times, we can expand the function $f(x)$ as follows:

$$\begin{aligned} f(x) &= \int\limits_{c}^{x} f'(x_{1}) dx_{1} + f(c)\\ &= \int\limits_{c}^{x} \left( \int\limits_{c}^{x_{1}} f''(x_{2}) dx_{2} + f'(c) \right) dx_{1} + f(c)\\ &= \int\limits_{c}^{x} \!\int\limits_{c}^{x_{1}} f''(x_{2}) dx_{2} dx_{1} + \int\limits_{c}^{x} f'(c) dx_{1} + f(c)\\ &= \int\limits_{c}^{x} \!\int\limits_{c}^{x_{1}} \!\int\limits_{c}^{x_{2}} f'''(x_{3}) dx_{3} dx_{2} dx_{1} + \int\limits_{c}^{x} \!\int\limits_{c}^{x_{1}} f''(c) dx_{2} dx_{1} + \int\limits_{c}^{x} f'(c) dx_{1} + f(c)\\ &= \int\limits_{c}^{x} \!\int\limits_{c}^{x_{1}} \!\dots \!\int\limits_{c}^{x_{n-1}} f^{(n)}(x_{n}) dx_{n} dx_{n-1} \dots dx_{1} + \sum_{i=0}^{n-1} \int\limits_{c}^{x} \!\int\limits_{c}^{x_{1}} \!\dots \!\int\limits_{c}^{x_{i-1}} f^{(i)}(c) dx_{i} dx_{i-1} \dots dx_{1} \end{aligned}$$

where the $i = 0$ term of the summation is understood as $f(c)$. The integrals inside the summation can be simplified:

$$\begin{aligned} \int\limits_{c}^{x} \!\int\limits_{c}^{x_{1}} \!\dots \!\int\limits_{c}^{x_{i-1}} f^{(i)}(c) dx_{i} dx_{i-1} \dots dx_{1} &= f^{(i)}(c)\int\limits_{c}^{x} \!\int\limits_{c}^{x_{1}} \!\dots \!\int\limits_{c}^{x_{i-1}} 1 dx_{i} dx_{i-1} \dots dx_{1} \\ &= f^{(i)}(c)\int\limits_{c}^{x} \!\int\limits_{c}^{x_{1}} \!\dots \!\int\limits_{c}^{x_{i-2}} (x_{i-1} - c) dx_{i-1} dx_{i-2} \dots dx_{1} \\ &= f^{(i)}(c)\int\limits_{c}^{x} \!\int\limits_{c}^{x_{1}} \!\dots \!\int\limits_{c}^{x_{i-3}} \frac{1}{2}((x_{i-2} - c)^2 - (c - c)^2) dx_{i-2} dx_{i-3} \dots dx_{1} \\ &\dots \\ &= f^{(i)}(c)\frac{(x - c)^i}{i!} \end{aligned}$$

Therefore, we have

$$f(x) = \underbrace{\int\limits_{c}^{x} \!\int\limits_{c}^{x_{1}} \!\dots \!\int\limits_{c}^{x_{n-1}} f^{(n)}(x_{n}) dx_{n} dx_{n-1} \dots dx_{1}}_{\text{Error term}} + \underbrace{\sum_{i=0}^{n-1} f^{(i)}(c)\frac{(x - c)^i}{i!}}_{\text{Polynomial terms}}$$
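The approximation result can be illustrated numerically. The sketch below assumes $f = \exp$, for which every derivative at $c$ equals $e^{c}$, and compares the degree-$k$ Taylor polynomial with the Lagrange remainder bound $\frac{M}{(k+1)!}|x-c|^{k+1}$; the function names are hypothetical.

```python
import math


def taylor_exp(x, c, k):
    """Degree-k Taylor polynomial of exp around c, evaluated at x."""
    # Every derivative of exp at c equals exp(c).
    return sum(math.exp(c) * (x - c) ** n / math.factorial(n) for n in range(k + 1))


def lagrange_bound(x, c, k):
    """Remainder bound M |x - c|^(k+1) / (k+1)!, with M the maximum of exp between c and x."""
    m = math.exp(max(x, c))
    return m * abs(x - c) ** (k + 1) / math.factorial(k + 1)


x, c = 0.5, 0.0
errors = [abs(math.exp(x) - taylor_exp(x, c, k)) for k in range(1, 6)]
bounds = [lagrange_bound(x, c, k) for k in range(1, 6)]
```

In this example the actual error stays below the bound at every degree and shrinks as $k$ grows.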

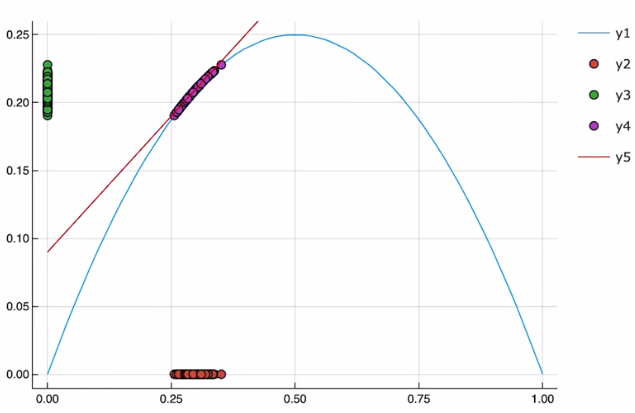

Delta Method

Definition

Univariate Delta Method

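Let $\{X_{n}\}$ be a sequence of random variables satisfying $\sqrt{n}(X_{n} - \theta) \xrightarrow{D} N(0, \sigma^{2})$, $g$ be a differentiable function at $\theta$, and $g'(\theta) \neq 0$, then $$\sqrt{n}(g(X_{n}) - g(\theta)) \xrightarrow{D} N(0, \sigma^{2}(g'(\theta))^{2})$$

Proof

By Taylor Series approximation, $$g(X_{n}) = g(\theta) + g'(\theta)(X_{n} - \theta) + o_{p}(|X_{n} - \theta|)$$

where $X_{n} \xrightarrow{P} \theta$ by the assumption. By the continuous mapping theorem, $\sqrt{n}|X_{n} - \theta|$, which is a function of $\sqrt{n}(X_{n} - \theta)$, also converges in distribution to a random variable, so it is bounded in probability by the property of a converging random vector.

Therefore, $$\sqrt{n}(g(X_{n}) - g(\theta)) = g'(\theta)\sqrt{n}(X_{n} - \theta) + o_{p}(\sqrt{n}|X_{n} - \theta|) \xrightarrow{D} N(0, \sigma^{2}(g'(\theta))^{2})$$ by the property of a sequence of random variables bounded in probability.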

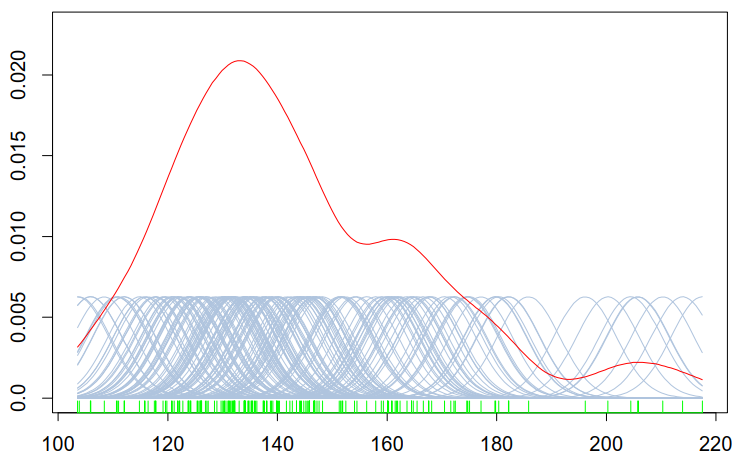

Examples

Estimation of the sample variance of Bernoulli Distribution

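Let $X_{1}, \dots, X_{n}$ be i.i.d. $\operatorname{Bernoulli}(p)$, then $\sqrt{n}(\bar{X} - p) \xrightarrow{D} N(0, p(1-p))$ by CLT

$\sqrt{n}(g(\bar{X}) - g(p)) \xrightarrow{D} N(0, p(1-p)(g'(p))^{2})$ by Delta method

Let $g(x) = x(1-x)$, then $g'(p) = 1 - 2p$ and $\sqrt{n}(\bar{X}(1-\bar{X}) - p(1-p)) \xrightarrow{D} N(0, p(1-p)(1-2p)^{2})$

Therefore, the sample mean and variance follow such distributions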

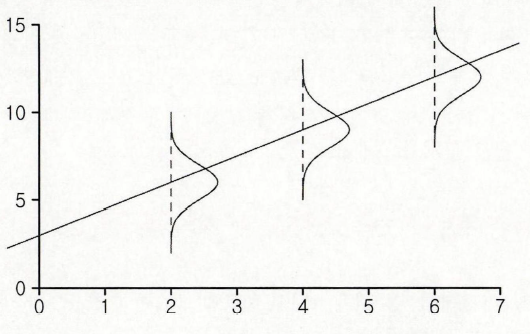

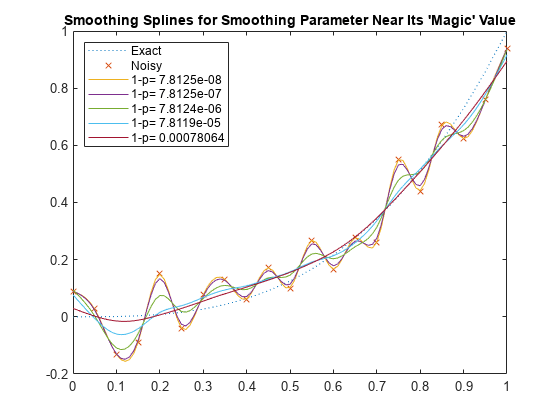

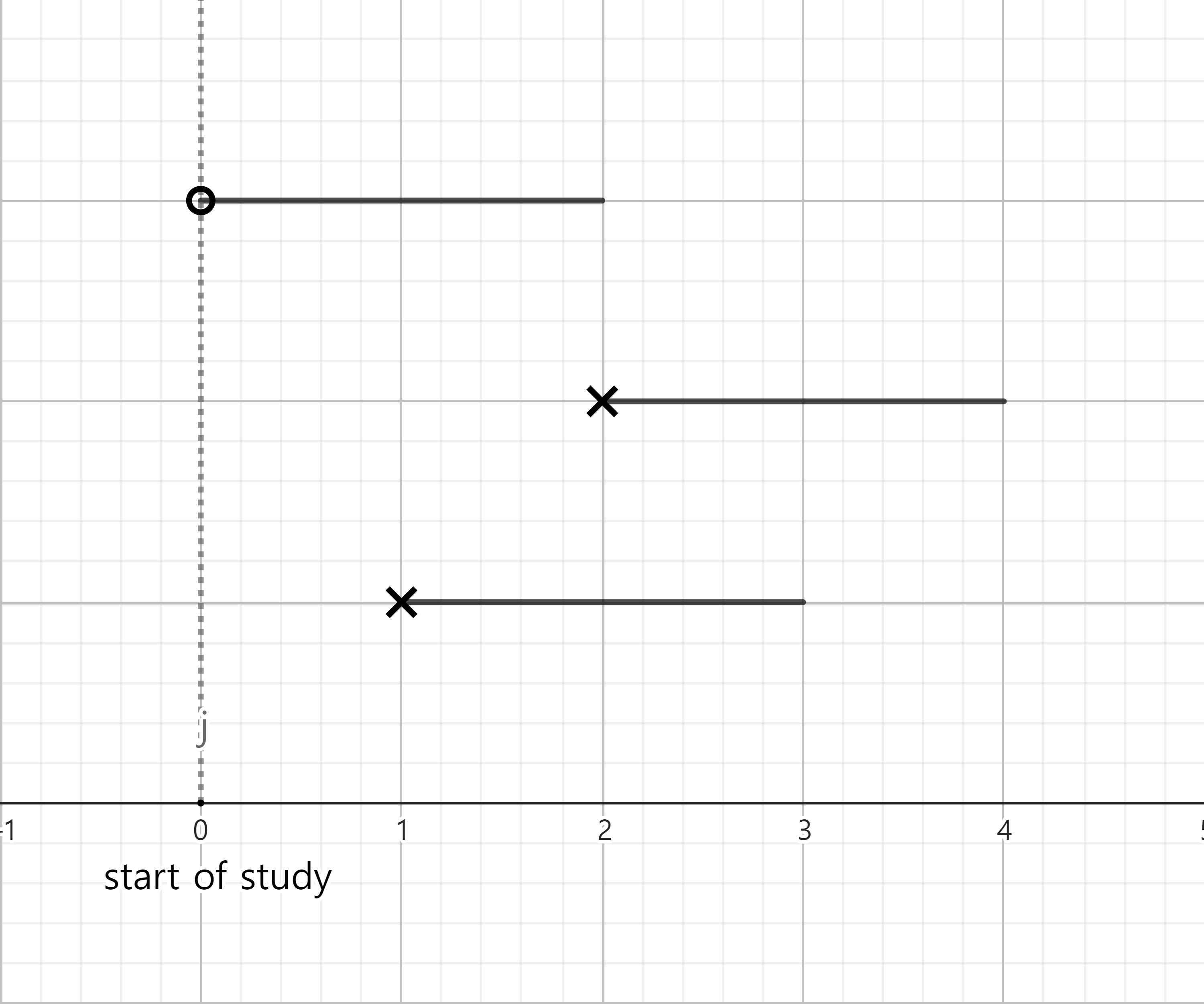

Visualization

- x-axis:

- y_axis:

- y1: ^[variance by mean]

- y2: ^[sample mean]

- y3, y4: ^[sample variance that calculated by the sample mean]

- y5: first order approximated line at

If sample size , variance of sample mean . So, sample variance can be well approximated by first-order approximation.

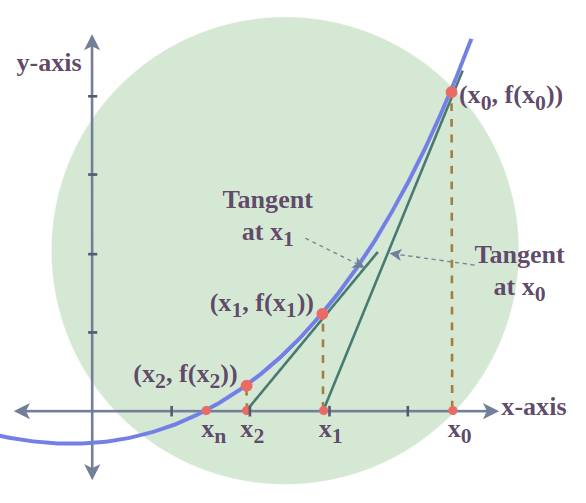

Newton's Method

Definition

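An iterative algorithm for finding the roots of a differentiable function $f$, which are solutions to the equation $f(x) = 0$

Algorithm

Find the next point such that the first-order Taylor approximation at the given point is 0.

- Taylor first approximation: $f(x) \approx f(x_{n}) + f'(x_{n})(x - x_{n})$

- The point such that the approximation is 0: $x_{n+1} = x_{n} - \frac{f(x_{n})}{f'(x_{n})}$

- multivariate version: $\mathbf{x}_{n+1} = \mathbf{x}_{n} - J_{f}(\mathbf{x}_{n})^{-1}f(\mathbf{x}_{n})$, where $J_{f}$ is the Jacobian of $f$

In convex optimization,

Find the minimum point (where its derivative is 0) of the Taylor quadratic approximation.

- Taylor quadratic approximation: $f(x) \approx f(x_{n}) + f'(x_{n})(x - x_{n}) + \frac{1}{2}f''(x_{n})(x - x_{n})^{2}$

- The derivative of the quadratic approximation: $f'(x_{n}) + f''(x_{n})(x - x_{n})$

- The minimum point of the quadratic approximation (the point such that the derivative of the quadratic approximation is 0): $x_{n+1} = x_{n} - \frac{f'(x_{n})}{f''(x_{n})}$

- multivariate version: $\mathbf{x}_{n+1} = \mathbf{x}_{n} - H_{f}(\mathbf{x}_{n})^{-1}\nabla f(\mathbf{x}_{n})$, where $H_{f}$ is the Hessian Matrix of $f$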
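Both uses of the iteration can be sketched in one dimension. This is a minimal illustration, assuming the update $x_{n+1} = x_{n} - f(x_{n})/f'(x_{n})$ for root finding and the same update applied to $f'$ for minimization; the function names, tolerance, and iteration cap are hypothetical choices.

```python
def newton_root(f, f_prime, x0, tol=1e-12, max_iter=50):
    """Find a root of f by repeatedly zeroing its first-order Taylor approximation."""
    x = x0
    for _ in range(max_iter):
        step = f(x) / f_prime(x)
        x -= step
        if abs(step) < tol:
            break
    return x


def newton_minimize(f_prime, f_second, x0, tol=1e-12, max_iter=50):
    """Minimize a convex f: root finding on f' using f'' as its derivative."""
    return newton_root(f_prime, f_second, x0, tol, max_iter)


# Root of x^2 - 2, starting from x0 = 1.
sqrt2 = newton_root(lambda x: x * x - 2, lambda x: 2 * x, x0=1.0)

# Minimum of (x - 3)^2: derivative 2(x - 3), second derivative 2.
x_min = newton_minimize(lambda x: 2 * (x - 3), lambda x: 2.0, x0=0.0)
```

For a quadratic objective the quadratic approximation is exact, so the minimizer is reached in a single step.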

Examples

Solution of a linear system

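Solve $A\mathbf{x} = \mathbf{b}$ with an MSE loss

The cost function is $f(\mathbf{x}) = \frac{1}{2}\|A\mathbf{x} - \mathbf{b}\|^{2}$ and its gradient and Hessian are $\nabla f(\mathbf{x}) = A^{T}(A\mathbf{x} - \mathbf{b})$, $H_{f} = A^{T}A$

Then, the solution after one Newton step from any $\mathbf{x}_{0}$ is $\mathbf{x}_{1} = \mathbf{x}_{0} - (A^{T}A)^{-1}A^{T}(A\mathbf{x}_{0} - \mathbf{b}) = (A^{T}A)^{-1}A^{T}\mathbf{b}$. If $A^{T}A$ is invertible, $\mathbf{x}_{1}$ is a least squares solution.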

Multiple Testing

Family-Wise Error Rate

Definition

Consider a result of Multiple Testing

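| fact \ test result | do not reject | reject | total |
| --- | --- | --- | --- |
| $H_{0}$ is true | $U$ | $V$ | $m_{0}$ |
| $H_{1}$ is true | $T$ | $S$ | $m - m_{0}$ |
| total | $m - R$ | $R$ | $m$ |

where $R$ is the number of rejected null hypotheses, and $m$ is the total number of hypotheses tested.

The family-wise error rate (FWER) is the probability of making at least one type 1 error in the family, $\operatorname{FWER} = P(V \geq 1)$. Therefore, by assuring $\operatorname{FWER} \leq \alpha$, the probability of making one or more type 1 errors in the family is controlled at level $\alpha$.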

Bonferroni's Method

Definition

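Consider the Multiple Testing problem $H_{0i}$ vs. $H_{1i}$, $i = 1, \dots, m$, and let $A_{i}$ be the event of a type 1 error for $H_{0i}$. Then, by the Bonferroni Inequality, $$\operatorname{FWER} = P\left(\bigcup_{i=1}^{m} A_{i}\right) \leq \sum_{i=1}^{m} P(A_{i})$$ Therefore, to control the Family-Wise Error Rate at $\alpha$, we need to control $P(A_{i}) \leq \frac{\alpha}{m}$ for each test.

The Bonferroni method is conservative, especially when $m$ is large, and consequently it has low power.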

Sidak Method

Definition

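Consider the Multiple Testing problem $H_{0i}$ vs. $H_{1i}$, $i = 1, \dots, m$, and let $A_{i}$ be the event of a type 1 error for $H_{0i}$. By the definition of the type 1 error in multiple testing, when the tests are independent and each is run at level $\alpha_{0}$, $$\operatorname{FWER} = P\left(\bigcup_{i=1}^{m} A_{i}\right) = 1 - P\left(\bigcap_{i=1}^{m} A_{i}^{c}\right) = 1 - (1 - \alpha_{0})^{m}$$ Therefore, to control the Family-Wise Error Rate at $\alpha$, the Sidak method uses $\alpha_{0} = 1 - (1 - \alpha)^{1/m}$ instead of the $\frac{\alpha}{m}$ of Bonferroni's Method.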
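The two per-test levels can be compared directly. This sketch assumes $m$ independent tests with all null hypotheses true, so that running each test at level $\alpha_{0}$ gives a family-wise error rate of $1 - (1 - \alpha_{0})^{m}$; the function names are hypothetical.

```python
def bonferroni_level(alpha, m):
    """Per-test level alpha / m from the Bonferroni inequality."""
    return alpha / m


def sidak_level(alpha, m):
    """Per-test level 1 - (1 - alpha)^(1/m), exact under independence."""
    return 1 - (1 - alpha) ** (1 / m)


def fwer_independent(per_test_level, m):
    """FWER when all m nulls are true and the tests are independent."""
    return 1 - (1 - per_test_level) ** m


alpha, m = 0.05, 10
bonf = bonferroni_level(alpha, m)
sidak = sidak_level(alpha, m)
fwer_bonf = fwer_independent(bonf, m)
fwer_sidak = fwer_independent(sidak, m)
```

The Sidak level is slightly larger than the Bonferroni level, so it rejects a little more often while still attaining the target rate exactly under independence.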

False Discovery Rate

Definition

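$$\operatorname{FDR} = E\left[\frac{V}{R}\right]$$ where $V$ is the number of false discoveries and $R$ is the number of discoveries, with $\frac{V}{R}$ taken as $0$ when $R = 0$

The false discovery rate (FDR) is the expected proportion of falsely rejected hypotheses among all rejected hypotheses. We control FDR at some level $\alpha$, i.e. $\operatorname{FDR} \leq \alpha$.

Facts

If the Family-Wise Error Rate is controlled, then FDR is also controlled.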

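Let $p_{(1)} \leq \dots \leq p_{(m)}$ be the ordered $p$-values of $m$ hypotheses, and $\alpha$ is given. If $k = \max\{i : p_{(i)} \leq \frac{i}{m}\alpha\}$, then the FDR procedure rejects the hypotheses corresponding to $p_{(1)}, \dots, p_{(k)}$.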
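The step-up rule can be sketched as follows, assuming it is the Benjamini-Hochberg procedure: sort the $p$-values, find the largest rank $k$ with $p_{(k)} \leq \frac{k}{m}\alpha$, and reject the $k$ smallest. The function name and the sample $p$-values are hypothetical.

```python
def benjamini_hochberg(p_values, alpha):
    """Return the indices (into p_values) of the hypotheses rejected by the step-up rule."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    k = 0
    for rank, idx in enumerate(order, start=1):
        # Keep the largest rank whose p-value is under its threshold rank * alpha / m.
        if p_values[idx] <= rank * alpha / m:
            k = rank
    return sorted(order[:k])


rejected = benjamini_hochberg([0.01, 0.035, 0.03, 0.5], alpha=0.05)
```

In this example the second smallest $p$-value (0.03) exceeds its own threshold (0.025) but is still rejected, because the third smallest (0.035) falls under its threshold (0.0375).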

Simple Linear Regression Model

Simple Linear Regression Model

Simple Linear Regression

Definition

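$$y_{i} = \beta_{0} + \beta_{1}x_{i} + \epsilon_{i}$$ where the $\epsilon_{i}$'s are i.i.d. error terms, with $E(\epsilon_{i}) = 0$ and $\operatorname{Var}(\epsilon_{i}) = \sigma^{2}$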

Estimation of Regression Coefficients

Least Squares Estimator

See the Least Squares Estimator section under Statistical Estimation and Testing above; the definition and facts apply here unchanged.

Least Absolute Deviation Estimator

Definition

Consider a Simple Linear Regression model The least absolute deviation estimators are the estimators that minimize


Gauss-Markov Theorem

Definition

Simple Linear Regression Case

Consider a Simple Linear Regression model Let and , then the Least Squares Estimator has the minimum variance among all linear unbiased estimators, i.e. it is the best linear unbiased estimator (BLUE)

Multiple Linear Regression Case

Consider a Multiple Linear Regression model Let , then the Least Squares Estimator has the minimum variance among all linear unbiased estimators, i.e. it is the best linear unbiased estimator (BLUE)


Maximum Likelihood Estimation

Definition

MLE is the method of estimating the parameters of an assumed Distribution

Let be Random Sample with PDF , where , then the MLE of is estimated as

Regularity Conditions

- R0: The pdfs are distinct, i.e.

- R1: The pdfs have same supports

- R2: The true value is an interior point in

- R3: The pdf is twice differentiable with respect to

- R4:

- R5: The pdf is three times differentiable with respect to , , and interior point

Properties

Functional Invariance

If is the MLE for , then is the MLE of

Consistency

Under R0 ~ R2 Regularity Conditions, let be a true parameter, is differentiable with respect to , then has a solution such that

Asymptotic Normality

Under the R0 ~ R5 Regularity Conditions, let be Random Sample with PDF , where , be a consistent Sequence of solutions of MLE equation , and , then where is the Fisher Information.

By the asymptotic normality, the MLE estimator is asymptotically efficient under R0 ~ R5 Regularity Conditions

Asymptotic Confidence Interval

By the asymptotic normality of MLE, Thus, confidence interval of for is

Delta method for MLE Estimator

Under the R0 ~ R5 Regularity Conditions, let be a continuous function and , then

Facts

Under R0 and R1 regularity conditions, let be a true parameter, then

Goodness-of-Fit of the Regression Line

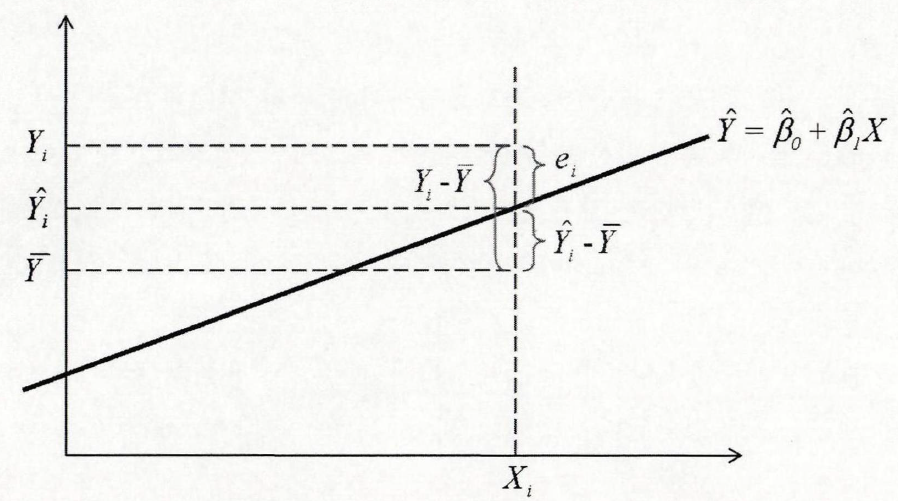

Decomposition of Sum of Squares

Definition

Simple Linear Regression Case

Consider a Simple Linear Regression model The total sum of squares is expressed by the sum of the unexplained variation and the explained variation where

- is the total sum of squares and has the degree of freedom ,

- is the error sum of squares and has the degree of freedom , and

- is the regression sum of squares and has the degree of freedom

Multiple Linear Regression Case

Consider a Multiple Linear Regression model

- has the degree of freedom ,

- has the degree of freedom , and

- has the degree of freedom

Facts


Coefficient of Determination

Definition

The coefficient of determination of the linear regression model is defined as

Facts

In simple linear regression, the coefficient of determination is the square of the Pearson Correlation Coefficient between the explanatory variable and the response.
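The identity can be checked numerically. The sketch below assumes a simple linear regression fitted by least squares, with $R^{2} = 1 - SSE/SST$; the function names and the data are hypothetical.

```python
import math


def r_squared(x, y):
    """Coefficient of determination of the least squares line of y on x."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    s_xx = sum((xi - x_bar) ** 2 for xi in x)
    s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    b1 = s_xy / s_xx
    b0 = y_bar - b1 * x_bar
    sse = sum((yi - (b0 + b1 * xi)) ** 2 for xi, yi in zip(x, y))
    sst = sum((yi - y_bar) ** 2 for yi in y)
    return 1.0 - sse / sst


def pearson_r(x, y):
    """Sample Pearson correlation coefficient between x and y."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    s_xx = sum((xi - x_bar) ** 2 for xi in x)
    s_yy = sum((yi - y_bar) ** 2 for yi in y)
    s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    return s_xy / math.sqrt(s_xx * s_yy)


x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.1, 3.9, 6.2, 7.8, 10.1]
r2 = r_squared(x, y)
r = pearson_r(x, y)
print(r2, r ** 2)
```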

Analysis of Variance

One-way ANOVA

One-way ANOVA is used to analyze the significance of differences of means between groups. Let an -th response of the -th group be where

We want to test the null hypothesis i.e. there is no treatment effect. where is the total number of observations, represents the mean of the -th group and represents the overall mean, of the numerator indicates between-group variance (SSB) and of the denominator indicates within-group variance (SSW)

The Likelihood Ratio Test rejects if


Statistical Inference

Confidence Interval for the Mean in Simple Linear Regression

Consider a Simple Linear Regression model The confidence region for the mean response , when is given, is defined as where


Prediction interval for a New Response in Linear Regression

Definition

Consider a Simple Linear Regression model The prediction interval for a new response , when is given, is defined as where

Facts

The prediction interval is always wider than the Confidence Interval for the mean response at the same point, because it also accounts for the variance of the new error term.

Multiple Linear Regression Model

Multiple Linear Regression Model

Multiple Linear Regression

Definition

where ‘s are i.i.d. error terms, with and

Matrix Notations

Let and , then the regression model can be expressed as

Let , , , then the regression model can be expressed as where , and


Statistical Inference

Distribution of Regression Coefficient

Definition

Distribution of Regression Coefficient

Assume that The Least Squares Estimator also follows Normal Distribution. where is the number of explanatory variables.

Marginal Distribution of Regression Coefficient

The marginal distribution of the Multivariate Normal Distribution is univariate Normal Distribution where


Confidence Interval for Regression Coefficient

Definition

Assume that The confidence interval for is defined as where is the Standard Error for Regression Coefficient , and is the number of explanatory variables.


Standard Error for Regression Coefficient

Definition

Assume that The standard error for regression coefficient is calculated as where , , and is the number of explanatory variables.


Hypothesis testing for Regression Coefficient

Definition

Assume that The null hypothesis can be tested with the test statistic where is the Standard Error for Regression Coefficient .


Joint Confidence Region for Regression Coefficient

Definition

Assume that The joint confidence region for is defined as where and is the number of explanatory variables.

By the inequality, the joint confidence region is an ellipsoid centered at the Least Squares Estimator.


Simultaneous Confidence Interval for Regression Coefficient

Definition

Simultaneous confidence interval is used when computing confidence intervals for parameters simultaneously.

The joint confidence region provides the exact elliptical area as the confidence region, but its calculation and interpretation are complex. To obtain a rectangular confidence region, simultaneous confidence intervals are used. When the individual $1-\alpha$ confidence intervals of each coefficient are simply combined, the joint coverage of the resulting rectangle falls below the desired level. Therefore, to obtain a region with coverage of at least $1-\alpha$, a correction method is used. Conservative methods such as Bonferroni’s Method provide a confidence region much larger than needed for $1-\alpha$, which satisfies the condition of being at least $1-\alpha$ but reduces the power of the test. To address this issue, other methods have been devised.

Bonferroni’s Method

Assume that The Bonferroni confidence interval for is defined as where is the Standard Error for Regression Coefficient and is the number of explanatory variables.

Scheffe Method

Assume that The Scheffe confidence interval for is defined as where is the Standard Error for Regression Coefficient and is the number of explanatory variables.

Maximum Modulus Method

Assume that The Maximum Modulus confidence interval for is defined as where is a Random Variable representing the maximum of the absolute of independent random variables following , is the Standard Error for Regression Coefficient , and is the number of explanatory variables.

Facts

Maximum Modulus method < Bonferroni’s method < Scheffe’s method Here, the second inequality holds only when the degree of freedom of comparisons is relatively small compared to the number of groups being compared.

Confidence Interval for the Mean in Multiple Linear Regression

Consider a Multiple Linear Regression model The confidence region for the mean response , when is given, is defined as where and is the number of explanatory variables


Partial F-Test

Definition

Consider a Multiple Linear Regression model and the hypothesis

Define the full model as and under , define the reduced model as

We reject if is large, where and are the regression sum of squares of the full and reduced model, respectively. By the equality , we can test the instead of the

and . Therefore, where and

Hence, we reject if and we call it partial F-test


The General Linear Test

Definition

Consider a Multiple Linear Regression model The general linear test can be written as where is matrix with rank and is vector.

e.g. for the hypothesis ,

and . Therefore, where

Hence, we reject if

Method of Lagrange Multiplier

By the method of Lagrange multiplier, Therefore, we can calculate the F-statistic without fitting the reduced model


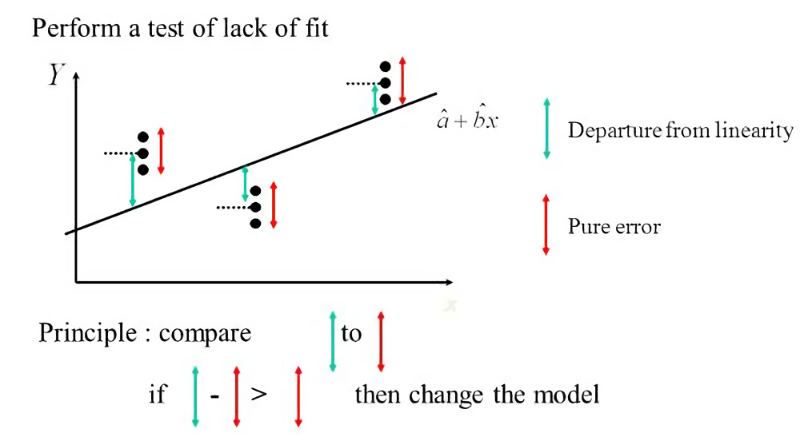

Lack of Fit Test

Lack of Fit Test

Definition

Consider a Multiple Linear Regression model We want to test the linearity of the model

Assume that is the -th replication at , then The is decomposed to the sum of the pure error sum of squares and the lack-of-fit sum of squares .

The degrees of freedom are , , and , where

Also, the mean squares are obtained by dividing the sum of squares by its degree of freedom

Under , the is an unbiased estimator of , and is always an unbiased estimator of , regardless of . Therefore, we can test the hypothesis by comparing ratios of both estimators.

and . Therefore,

Hence, we reject if

Facts

The lack of fit test relies on replicated observations. Therefore, there should be more than one observation (replication) for some ‘s.

Miscellanea

Multicollinearity

Definition

If two or more covariates in a multiple regression model are highly correlated, the model has multicollinearity. Multicollinearity makes the estimation of the coefficients very unstable, so the estimated coefficients are not reliable.


Regression Diagnostics

Residual

Studentized Residual

Definition

A studentized residual is a residual divided by an estimate of its standard deviation. It is used to detect outliers.

Internally Studentized Residual

where

Externally Studentized Residual

where is the unbiased estimator of based on observations after deleting the -th observation

Facts


Leverage (Statistics)

Consider a Multiple Linear Regression model

is the -th leverage, the distance between and

A measure of how far the independent variable values of an observation are from those of the other observations. It is used to detect outliers.

Facts

, where is the number of explanatory variables

Influence Measures

Cook's Distance

Definition

Single Observation

Consider a Multiple Linear Regression model where is the number of explanatory variables, and is the Leverage (Statistics)

is the Cook’s distance. The more influential the data point, the larger the Cook’s distance

Set of Observations

Consider a Multiple Linear Regression model where is the number of explanatory variables, and is the matrix of leverages


Andrews-Pregibon Statistic

Definition

Single Observation

Consider a Multiple Linear Regression model where is the Leverage (Statistics) of the matrix

is the Andrews-Pregibon statistic. The more influential the data point, the smaller the Andrews-Pregibon statistic

Set of Observations

Consider a Multiple Linear Regression model where is the matrix of leverages


DFBETAS

Definition

The DFBETAS consider the influence of the -th observation on

Single Observation

Consider a Multiple Linear Regression model where is the externally studentized residual and is the element of the matrix

Set of Observations

Consider a Multiple Linear Regression model where is the matrix of leverages and is the element of the matrix


DFFITS

Definition

The DFFITS consider the influence of the -th observation on

Single Observation

Consider a Multiple Linear Regression model where is the externally studentized residual

Set of Observations

Consider a Multiple Linear Regression model where is the externally studentized residual and is the matrix of leverages


COVRATIO

Definition

The COVRATIO considers the influence of the -th observation on

Single Observation

Consider a Multiple Linear Regression model

Set of Observations

Consider a Multiple Linear Regression model


Multicollinearity

Variance Inflation Factor

Definition

Consider a Multiple Linear Regression model where is the number of explanatory variables.

The variance inflation factor (VIF) measures how much the variance of an estimated regression coefficient is increased because of Multicollinearity


Condition Number

Definition

Consider a matrix with singular values

is the condition number of

Facts

In linear regression, if the condition number of the matrix is large, where is a design matrix, the model has a Multicollinearity problem.

Autocorrelation and Durbin-Watson Test

Durbin-Watson Test

Definition

A test used to detect the presence of autocorrelation at lag 1 in the residuals

Assume is the residual structure of where is the number of observations and

indicates no autocorrelation
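A minimal sketch of the statistic, assuming the standard form $d = \sum_{t=2}^{n}(e_{t}-e_{t-1})^{2} / \sum_{t=1}^{n} e_{t}^{2}$, which lies between 0 and 4 and is close to 2 when there is no lag-1 autocorrelation. The function name and the toy residual sequences are hypothetical.

```python
def durbin_watson(residuals):
    """Durbin-Watson statistic of a sequence of residuals in time order."""
    numerator = sum(
        (residuals[t] - residuals[t - 1]) ** 2 for t in range(1, len(residuals))
    )
    denominator = sum(e * e for e in residuals)
    return numerator / denominator


# Residuals that flip sign every step: negative autocorrelation, d above 2
d_alternating = durbin_watson([1, -1, 1, -1])
# Residuals that keep their sign in runs: positive autocorrelation, d below 2
d_runs = durbin_watson([1, 1, -1, -1])
print(d_alternating, d_runs)
```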

Selection of Regression Models

Adjusted R_squared Value

Definition

where is the number of explanatory variables.


Mallow's Cp

Definition

where is the number of explanatory variables, is a sample variance under the full model, and

Mallow’s $C_{p}$ is used to assess the fit of a regression model. A small value of $C_{p}$ means that the model is relatively precise.


PRESS statistic

Definition

where is an estimated value of calculated by the data excluding

The predicted residual error sum of squares (PRESS) is a form of cross validation used in regression analysis
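For least squares fits, PRESS does not require refitting the model $n$ times: the leave-one-out residual equals $e_{i}/(1-h_{ii})$, where $h_{ii}$ is the leverage. The sketch below checks this shortcut against brute-force refitting for a simple linear regression, where $h_{ii} = 1/n + (x_{i}-\bar{x})^{2}/S_{xx}$. The function names and the data are hypothetical.

```python
def fit_line(x, y):
    """Least squares intercept and slope of y on x."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    s_xx = sum((xi - x_bar) ** 2 for xi in x)
    s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    b1 = s_xy / s_xx
    return y_bar - b1 * x_bar, b1


def press_brute_force(x, y):
    """PRESS computed by refitting the line without each observation in turn."""
    total = 0.0
    for i in range(len(x)):
        b0, b1 = fit_line(x[:i] + x[i + 1:], y[:i] + y[i + 1:])
        total += (y[i] - (b0 + b1 * x[i])) ** 2
    return total


def press_leverage(x, y):
    """PRESS computed from a single fit using the leverages h_ii."""
    n = len(x)
    x_bar = sum(x) / n
    s_xx = sum((xi - x_bar) ** 2 for xi in x)
    b0, b1 = fit_line(x, y)
    total = 0.0
    for xi, yi in zip(x, y):
        h = 1.0 / n + (xi - x_bar) ** 2 / s_xx
        e = yi - (b0 + b1 * xi)
        total += (e / (1.0 - h)) ** 2
    return total


x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
y = [1.2, 1.9, 3.2, 3.8, 5.1, 6.3]
press_slow = press_brute_force(x, y)
press_fast = press_leverage(x, y)
print(press_slow, press_fast)
```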


Model Validation

Cross Validation

Definition

Partition a set of data into sets and denote a function such that . For the dataset , let be the estimator based on observations except , then the cross validation estimation for the prediction error is defined as where is a loss function

Facts

If the data is partitioned into $k$ groups of equal size, then it is called $k$-fold cross validation.

Miscellanea in Model Selection

Jensen's Inequality

Definition

Let be a Convex Function on an interval , be a Random Variable with support , and , then

Facts


By Jensen’s Inequality, the following relation is satisfied for positive numbers: arithmetic mean $\geq$ geometric mean $\geq$ harmonic mean

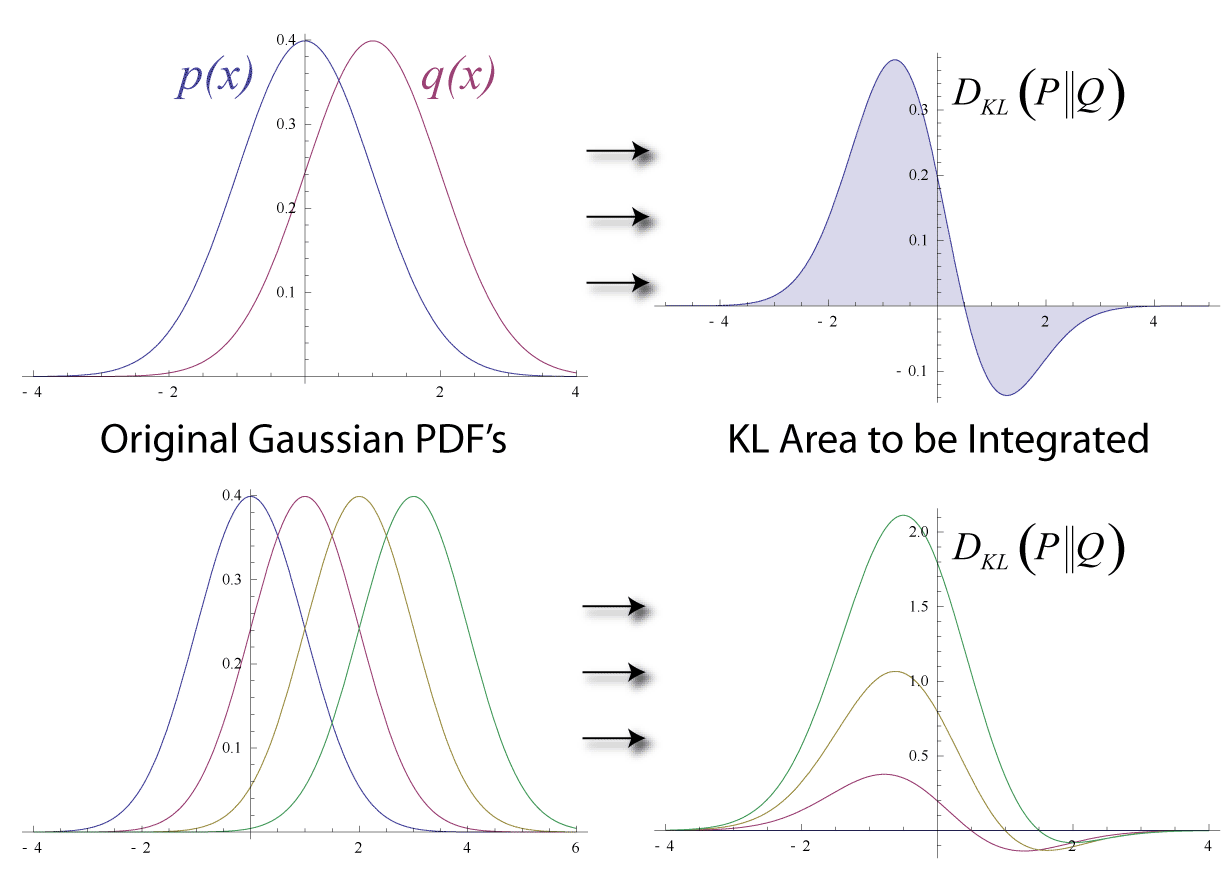

Kullback-Leibler Divergence

Definition

Assume that two Probability Distributions and are given. Then the Kullback-Leibler divergence between and is defined as

Kullback-Leibler divergence measures how different two distributions are.

It can also be expressed as the difference between the cross entropy (difference between distributions and ) and the entropy (inherent uncertainty of ).

Facts

Let be a sequence of distributions. Then,

- The convergence of the KL-Divergence to zero implies that the JS-Divergence also converges to zero.
- The convergence of the JS-Divergence to zero is equivalent to the convergence of the Total Variation Distance to zero.
- The convergence of the Total Variation Distance to zero implies that the Wasserstein Distance also converges to zero.
- The convergence of the Wasserstein Distance to zero is equivalent to the Convergence in Distribution of the sequence.

Akaike Information Criterion

Definition

where is the MLE of the postulated model’s parameter


Bayesian Information Criterion

Definition

Facts

BIC penalizes model complexity more heavily than AIC when the sample size $n$ is large (specifically when $\ln n > 2$, i.e. $n \geq 8$)
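The comparison of the two penalties can be made concrete. The sketch assumes the standard forms $\text{AIC} = -2\ln L + 2k$ and $\text{BIC} = -2\ln L + k\ln n$, where $k$ is the number of parameters and $n$ the sample size; the function names and the numbers are hypothetical.

```python
import math


def aic(log_likelihood: float, k: int) -> float:
    """Akaike information criterion for a model with k parameters."""
    return -2.0 * log_likelihood + 2.0 * k


def bic(log_likelihood: float, k: int, n: int) -> float:
    """Bayesian information criterion for k parameters and n observations."""
    return -2.0 * log_likelihood + k * math.log(n)


log_lik, k = -100.0, 3
aic_value = aic(log_lik, k)
bic_small_n = bic(log_lik, k, n=5)    # ln(5) < 2, so the BIC penalty is lighter
bic_large_n = bic(log_lik, k, n=100)  # ln(100) > 2, so the BIC penalty is heavier
print(aic_value, bic_small_n, bic_large_n)
```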


Transformation of the Linear Regression Model

The Use of Dummy Variables

Dummy Variable

Definition

A dummy variable is a variable that takes a binary value (0 or 1). It is commonly used in regression analysis to represent categorical variables, with several dummy variables jointly encoding a variable that has more than two categories.


One-way ANOVA with a Regression Model

where are dummy variables represent each category, and is the number of categories

In the setting, a corner-point constraint is used.

The null hypothesis i.e. there is no treatment effect, yields same test statistic as the null hypothesis of the one-way ANOVA with equal replication


Two-way ANOVA with a Regression Model

where and are dummy variables representing categories of the two factors, is the number of categories for the first factor, and is the number of categories for the second factor

In this setting, a corner-point constraint is used for both factors.

The null hypotheses can be tested by Deviance


Polynomial Regression

Polynomial Regression

Definition

Consider explanatory variables and a response variable The -variables 2nd order polynomial regression model is defined as

The single variable -th order polynomial regression model is defined as

Facts

The column vectors of the design matrix in the polynomial regression are highly correlated, so proper transformation is needed. Among them, orthogonal polynomial is often used.

Response Surface Analysis

Definition

The goal of response surface analysis is finding the optimal condition for the response. It employs Polynomial Regression model to model response surface.

Consider explanatory variables and a response variable . The second-order response surface model is defined as where , , Let the fitted model be . Then, the stationary point is obtained by and the fitted value at the stationary point is .

- If is a Positive-Definite Matrix, then is a minimum.
- If is a Negative-Definite Matrix, then is a maximum.
- If is neither a Positive-Definite Matrix nor a Negative-Definite Matrix, then is a saddle point.


Weighted Least Squares Method

Weighted Least Squares

Definition

Weighted least squares (WLS) is a generalization of Least Square to cope with Heteroskedasticity.

We assume that where The weight matrix is positive definite, so there exists a non-singular matrix . Consider a transformed model Now, the new error term is i.i.d. And the weighted least squares estimator is obtained by


Box-Cox Transformation Model

Box-Cox Transformation Model

Definition

Box-Cox transformation is useful when dealing with heteroskedasticity by stabilizing variance.

Box-Cox Transformation

Box-Cox Transformation Model

The model using the Box-Cox Transformed variable as a response variable is called a Box-Cox transformation model where
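A minimal sketch of the transformation itself, assuming the standard one-parameter form $y^{(\lambda)} = (y^{\lambda}-1)/\lambda$ for $\lambda \neq 0$ and $y^{(\lambda)} = \ln y$ for $\lambda = 0$, defined for positive responses. The function name is hypothetical.

```python
import math


def box_cox(y: float, lam: float) -> float:
    """Box-Cox transform of a positive response y with parameter lam."""
    if y <= 0:
        raise ValueError("the Box-Cox transformation requires y > 0")
    if lam == 0:
        return math.log(y)
    return (y ** lam - 1.0) / lam


sqrt_like = box_cox(4.0, 0.5)   # square-root type transform
log_case = box_cox(math.e, 0)   # log transform
near_zero = box_cox(2.0, 1e-8)  # approaches log(2) as lam -> 0
print(sqrt_like, log_case, near_zero)
```

The $\lambda = 0$ case is defined as the logarithm precisely because it is the limit of the power form, which makes the family continuous in $\lambda$.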


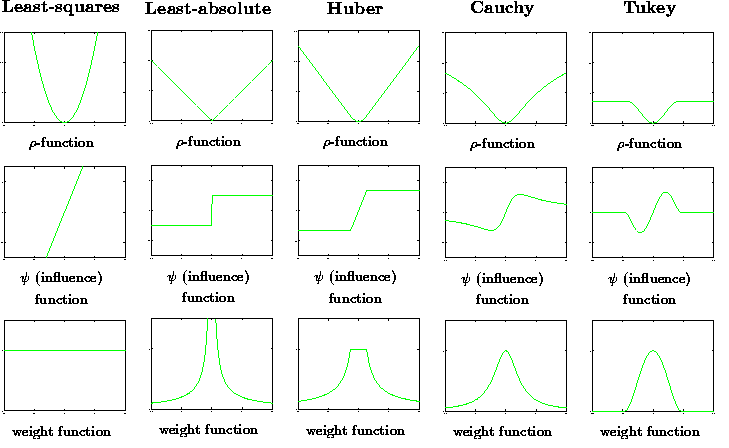

Robust Regression

M-estimator

Definition

Consider an error (loss) function . The M-estimator is obtained by where is a robust estimator, and given by

If , then it is LSE, and if , then it is -norm regression estimator. The derivative of , denoted , is called the influence function. Since is non-linear in , we cannot get an explicit solution. Instead, we use an iterative method called IRLS (Iterative Reweighted Least Squares).

Iterative Reweighted Least Squares


- Initialize , often obtained from Ordinary Least Squares.

- Calculate the weight If , then

- Update using Weighted Least Squares where

- Repeat the weight calculation and the Weighted Least Squares update until the estimator converges.
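The steps above can be sketched for a simple linear regression with Huber weights. Several choices here are assumptions for illustration and are not taken from the note: the Huber weight $w_{i} = \min(1, c/|e_{i}|)$, the tuning constant $c = 1.345$, a fixed unit error scale, and all function names.

```python
def weighted_line_fit(x, y, w):
    """Weighted least squares intercept and slope of y on x."""
    sw = sum(w)
    x_bar = sum(wi * xi for wi, xi in zip(w, x)) / sw
    y_bar = sum(wi * yi for wi, yi in zip(w, y)) / sw
    s_xx = sum(wi * (xi - x_bar) ** 2 for wi, xi in zip(w, x))
    s_xy = sum(wi * (xi - x_bar) * (yi - y_bar) for wi, xi, yi in zip(w, x, y))
    b1 = s_xy / s_xx
    return y_bar - b1 * x_bar, b1


def huber_weight(e, c=1.345):
    """Huber weight: 1 for small residuals, c/|e| for large ones."""
    return 1.0 if abs(e) <= c else c / abs(e)


def irls_huber(x, y, c=1.345, max_iter=200, tol=1e-10):
    """Iteratively reweighted least squares with Huber weights."""
    # Step 1: initialize with ordinary least squares (all weights equal to 1)
    b0, b1 = weighted_line_fit(x, y, [1.0] * len(x))
    for _ in range(max_iter):
        # Step 2: compute weights from the current residuals
        residuals = [yi - (b0 + b1 * xi) for xi, yi in zip(x, y)]
        w = [huber_weight(e, c) for e in residuals]
        # Step 3: update the coefficients by weighted least squares
        new_b0, new_b1 = weighted_line_fit(x, y, w)
        converged = abs(new_b0 - b0) + abs(new_b1 - b1) < tol
        b0, b1 = new_b0, new_b1
        if converged:
            break
    return b0, b1


# Points on the line y = 1 + 2x, with one gross outlier at the last point
x = [float(i) for i in range(10)]
y = [1.0 + 2.0 * xi for xi in x]
y[9] += 30.0

ols_b0, ols_b1 = weighted_line_fit(x, y, [1.0] * len(x))
rob_b0, rob_b1 = irls_huber(x, y)
print(ols_b1, rob_b1)
```

The ordinary least squares slope is pulled strongly toward the outlier, while the reweighted fit stays close to the slope of the clean points. In practice the residuals are usually divided by a robust scale estimate before the weights are computed, which this sketch omits.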

Inverse Regression

Inverse Regression

Definition

The problem of predicting for a given response is called inverse regression (calibration, discrimination).

Consider a simple linear regression model The point estimation of for a given is obtained by Also, the confidence interval for is obtained by the second-order inequality where and . Let be solutions for the inequality, and we have a confidence interval


Biased Estimation

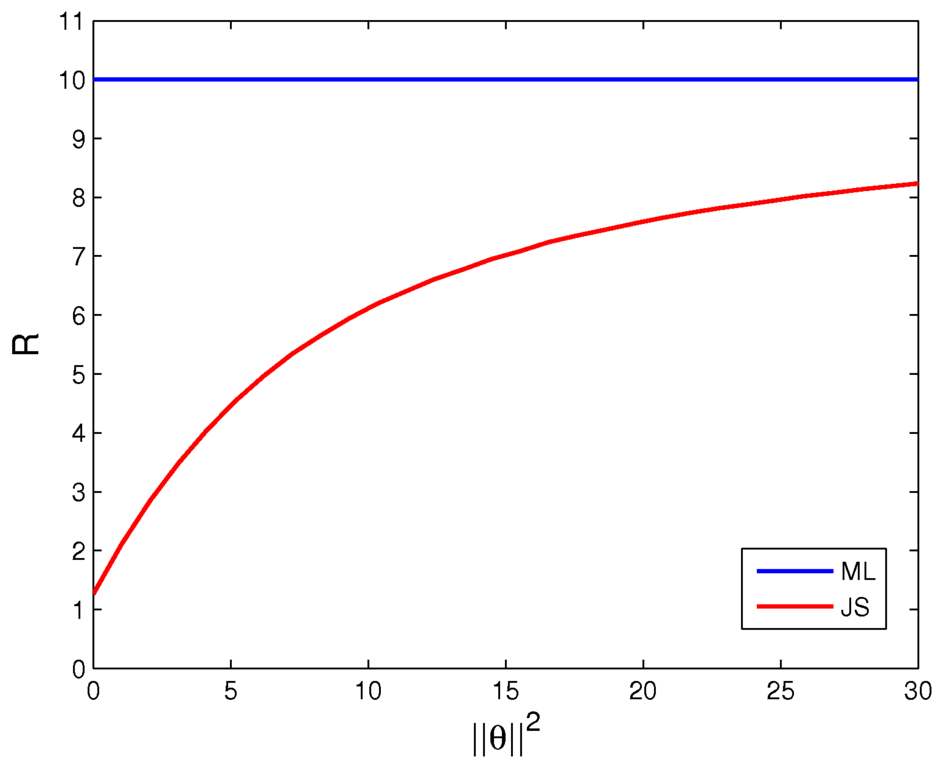

James-Stein Shrinkage Method

James-Stein Estimator

Definition

The James–Stein estimator is a biased estimator of the mean of correlated Multivariate Normal Distribution.

Let . The James–Stein estimator is defined as

The Least Squares Estimator is the MLE and the UMVUE. However, in terms of Mean Squared Error, the James-Stein estimator is better than the least squares estimator when the dimension satisfies $p \geq 3$.
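A small simulation can illustrate the improvement in Mean Squared Error. The sketch assumes $\mathbf{z} \sim N_{p}(\boldsymbol{\mu}, \mathbf{I})$ and the shrinkage form $\left( 1 - \frac{p-2}{\mathbf{z}^{\intercal}\mathbf{z}} \right)\mathbf{z}$; the true mean, the number of trials, the seed, and the function names are hypothetical choices.

```python
import random


def james_stein(z):
    """Shrink the observed vector z toward the origin."""
    p = len(z)
    norm_sq = sum(zi * zi for zi in z)
    shrink = 1.0 - (p - 2) / norm_sq
    return [shrink * zi for zi in z]


def squared_error(estimate, mu):
    """Total squared error between an estimate and the true mean."""
    return sum((ei - mi) ** 2 for ei, mi in zip(estimate, mu))


random.seed(0)
mu = [1.0] * 10  # true mean vector, p = 10
trials = 2000
mle_total = 0.0
js_total = 0.0
for _ in range(trials):
    z = [random.gauss(m, 1.0) for m in mu]
    mle_total += squared_error(z, mu)            # the MLE is z itself
    js_total += squared_error(james_stein(z), mu)

mle_mse = mle_total / trials
js_mse = js_total / trials
print(mle_mse, js_mse)
```

The average loss of the unshrunk estimate is close to $p$, while the shrunk estimate has a visibly smaller average loss, even though it is biased.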


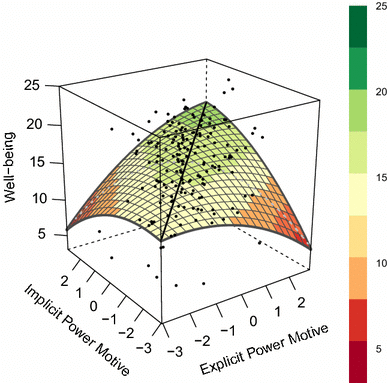

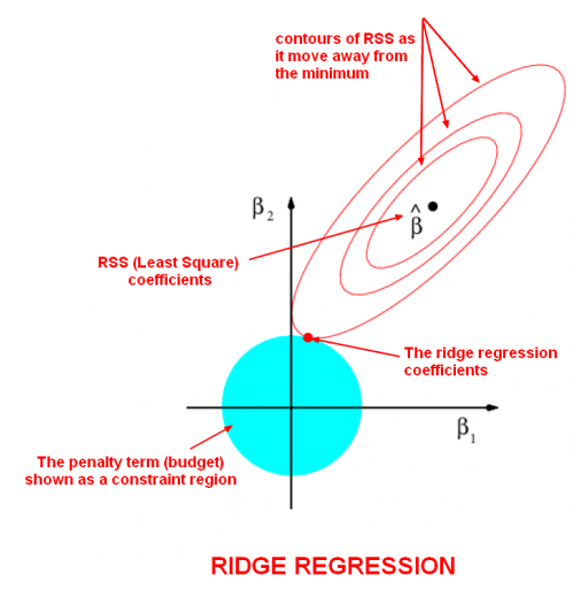

Ridge Regression

Ridge Regression

Definition

where is a complexity parameter that controls the amount of shrinkage.

Ridge regression is particularly useful to mitigate the problem of Multicollinearity in linear regression

Facts

where by Singular Value Decomposition

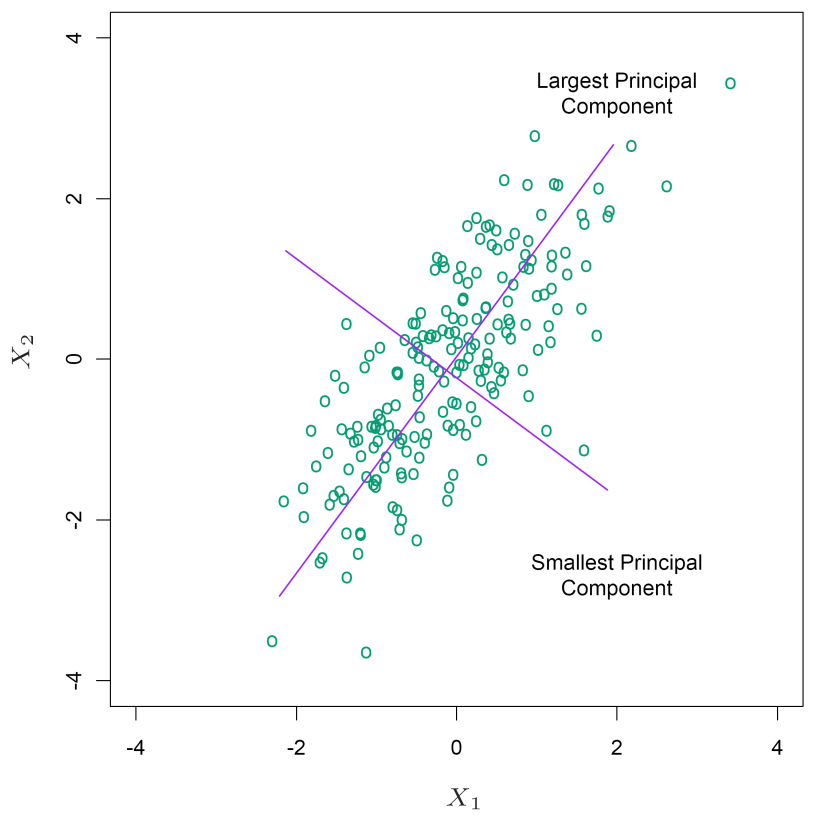

Principal Component Regression

Principal Component Analysis

Definition

PCA is a linear dimensionality reduction technique. The correlated variables are linearly transformed onto a new coordinate system whose axes are the directions capturing the largest variance in the data.

Population Version

Given a random vector , we find a such that is maximized: Equivalently, by the Method of Lagrange Multipliers with , By differentiation, the is given by the eigen value problem Thus the maximizing the variance of is the eigenvector corresponding to the largest Eigenvalue.

Sample Version

Given a data matrix , by Singular Value Decomposition, the matrix can be factorized as . By algebra, , where we call the -th principal component.

Facts

Since and

Principal Component Regression

Definition

where is a matrix whose columns are . In PCR, instead of regressing the dependent variable on the explanatory variables directly, the principal components (PCs) of the explanatory variables are used as regressors. One typically uses only the first few PCs for regression, making PCR a kind of regularized procedure.

Facts

Since are linear combinations of the original , we can express the solution in terms of coefficients of the .

PLS: Partial Least Squares

Partial Least Squares Regression

Definition

where is the sample covariance matrix.

Unlike PCR maximizing only, PLS finds directions maximizing both and


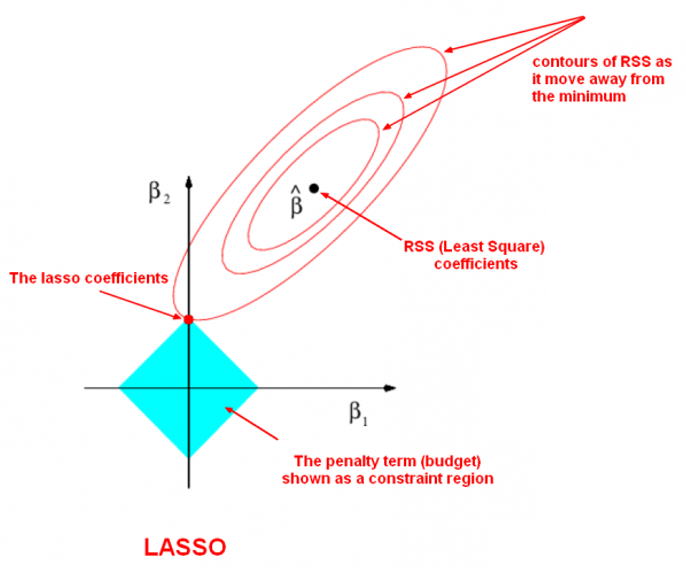

LASSO

Lasso Regression

Definition

The Lasso model assumes that the coefficients of the model are sparse.


Bayes Estimator and Biased Estimators

Bayes Theorem

Definition

Discrete Case

where by Law of Total Probability

is called a prior probability, and is called a posterior probability

Continuous Case

where is not a constant, but an unknown parameter that follows a certain distribution with a parameter .

is called a prior probability, is called a likelihood, is called an evidence or marginal likelihood, and is called a posterior probability

Parameter-Centric Notation

Examples

Consider a random variable that follows a Binomial Distribution and a prior distribution that follows a Beta Distribution where .

The PDFs are defined as and . Then, by Bayes theorem, Under Squared Error Loss, the Bayes Estimator is a mean of the posterior distribution.
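The conjugate update in this example can be sketched numerically. The sketch assumes $X \sim \text{Bin}(n, \theta)$ with prior $\theta \sim \text{Beta}(\alpha, \beta)$, so that the posterior is $\text{Beta}(\alpha + x, \beta + n - x)$ and the Bayes estimator under Squared Error Loss is its mean $(\alpha + x)/(\alpha + \beta + n)$. The function names and the numbers are hypothetical.

```python
def beta_binomial_posterior(alpha, beta, n, x):
    """Posterior Beta parameters after observing x successes in n trials."""
    return alpha + x, beta + n - x


def posterior_mean(alpha, beta, n, x):
    """Bayes estimator of the success probability under squared error loss."""
    a_post, b_post = beta_binomial_posterior(alpha, beta, n, x)
    return a_post / (a_post + b_post)


# Uniform prior Beta(1, 1), 7 successes out of 10 trials
a_post, b_post = beta_binomial_posterior(1.0, 1.0, 10, 7)
estimate = posterior_mean(1.0, 1.0, 10, 7)
print(a_post, b_post, estimate)
```

The estimate lies between the prior mean $1/2$ and the sample proportion $7/10$, which reflects the shrinkage of the sample proportion toward the prior mean.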


Loss Function

Definition

Let be a parameter, be a Statistic for the parameter , and be a Decision Function

A loss function is a non-negative function defined as

It indicates the difference or discrepancy between and

Examples


- Absolute Error Loss

- Squared Error Loss

- Sum of Squared Errors Loss

- Cross-Entropy Loss

- Goal Post Error Loss

- Huber Loss

- Binary Loss

- Triplet Loss

- Pairwise Loss

Risk Function

Definition

Risk function is an expectation of Loss Function


Bayes Risk

Definition

$$\begin{aligned}
r(\theta, \delta) &= \int_{\Theta} R(\theta, \delta) \pi(\theta) d\theta = E_\theta[R(\theta, \delta)] = E_\theta[E_{x}[L(\theta, \delta(x))]]\\
&= \int_{\Theta} \int_{X} L(\theta, \delta(x))p(x|\theta) \pi(\theta) dx d\theta = \int_{X} \int_{\Theta} L(\theta, \delta(x))p(\theta|x)p(x) d\theta dx\\
&= \int_{X}E_\theta[L(\theta, \delta(x))|X=x]p(x)dx = \int_{X}\rho(x, \pi)p(x)dx
\end{aligned}$$

where $L(\theta, \delta)$ is the Loss Function, $R(\theta, \delta)$ is the Risk Function, and $\rho(x, \pi)$ is the Posterior Risk.

Bayes Estimator

Definition

Estimator that minimizes the Bayes Risk or Posterior Risk

Facts

Under Squared Error Loss, the Bayes estimator is the posterior mean, and under Absolute Error Loss it is the posterior median.

Consider a regression model and the prior distribution for , . Then, the Bayes estimator under the Squared Error Loss is obtained as If for some and , then the Bayes estimator is the same as ridge estimator If and , then the Bayes estimator is the James-Stein regression estimator

Empirical Bayes Estimator

Definition

Empirical Bayes estimator is a Bayes Estimator whose prior distribution is estimated from the data.

The parameter of the prior distribution is estimated by Maximum Likelihood Estimation. And a posterior distribution is calculated with the . Estimator is obtained using the posterior distribution.

Examples

Consider data following a Poisson Distribution and a prior distribution that follows a Gamma Distribution where with known, unknown.

Then the marginal likelihood is defined as

$$\begin{aligned}
p(x_{i}|\beta) &= \int \operatorname{Pois}(x_{i}|\lambda_{i})\Gamma(\lambda_{i};\alpha, \beta) d\lambda_{i} = \int \left[ \frac{e^{-\lambda_{i}}\lambda_{i}^{x_{i}}}{x_{i}!} \right]\left[ \frac{\beta^{\alpha}\lambda_{i}^{\alpha-1}e^{-\beta \lambda_{i}}}{\Gamma(\alpha)} \right]d\lambda_{i}\\
&= \binom{x_{i}+\alpha-1}{\alpha-1}\left( \frac{\beta}{\beta+1} \right)^{\alpha} \left( \frac{1}{\beta+1} \right)^{x_{i}} \sim NB\left( \alpha, \frac{\beta}{\beta+1} \right)
\end{aligned}$$

And the MLE of $\beta$, $\hat{\beta}_\text{MLE}$, is

$$\hat{\beta}_\text{MLE} = \underset{\beta}{\operatorname{argmax}} \prod_{i=1}^{n}p(x_{i}|\beta) = \frac{\alpha}{\bar{X}}$$

The posterior distribution with $\hat{\beta}_\text{MLE}$ is defined as

$$p(\lambda_{i}|x_{i}; \hat{\beta}_{\text{MLE}}) \propto p(x_{i}|\lambda_{i})\pi(\lambda_{i};\alpha, \hat{\beta}_{\text{MLE}}) \sim \Gamma(x_{i}+\alpha, 1+\hat{\beta}_{\text{MLE}})$$

Under Squared Error Loss, the Bayes Estimator is the mean of the posterior distribution $\Gamma(x_{i}+\alpha, 1+\hat{\beta}_{\text{MLE}})$.

$$\hat{\delta}_{\text{Bayes}} = \frac{\bar{X}(X_{i}+\alpha)}{\bar{X} + \alpha}$$

Facts

Assume that $\mathbf{z} \sim N_{p}(\boldsymbol{\mu}, \mathbf{I})$ and the prior distribution for $\boldsymbol{\mu}$ is $\boldsymbol{\mu} \sim N_{p}(\mathbf{0}, \sigma^{2}\mathbf{I})$. Then, the empirical Bayes estimator under the Squared Error Loss is the James-Stein Estimator

$$\hat{\boldsymbol{\mu}}_{JS} = \left( 1 - \cfrac{p-2}{\mathbf{z}^{\intercal}\mathbf{z}} \right)\mathbf{z}$$

Generalized Linear Model

Exponential Family

Exponential Family

Definition

General Representation

Where:

- is the vector of natural parameters.

- is the vector of sufficient statistics.

- is the non-negative volume of

- is the normalizer refers to the measure of .

Canonical Form with Dispersion Parameter

where , , and are known functions, is called a variance function, and is called a dispersion parameter. This form is mainly used for GLMs.

Examples

| Distributions | Normal | Poisson | Binomial | Gamma | Inverse Gaussian |
| --- | --- | --- | --- | --- | --- |
| Notation | | | | | |
| Natural link | Identity | log | logit | inverse | |

Facts

For an exponential family, and hold by the Bartlett Identities

Bartlett Identities

Definition

First Bartlett Identity

where is a Likelihood Function and is a Score Function

Second Bartlett Identity

where is a Likelihood Function


Score Function

Definition

The gradient of the log-likelihood function with respect to the parameter vector. The score indicates the steepness of the log-likelihood function

Facts

The score will vanish at a local Extremum

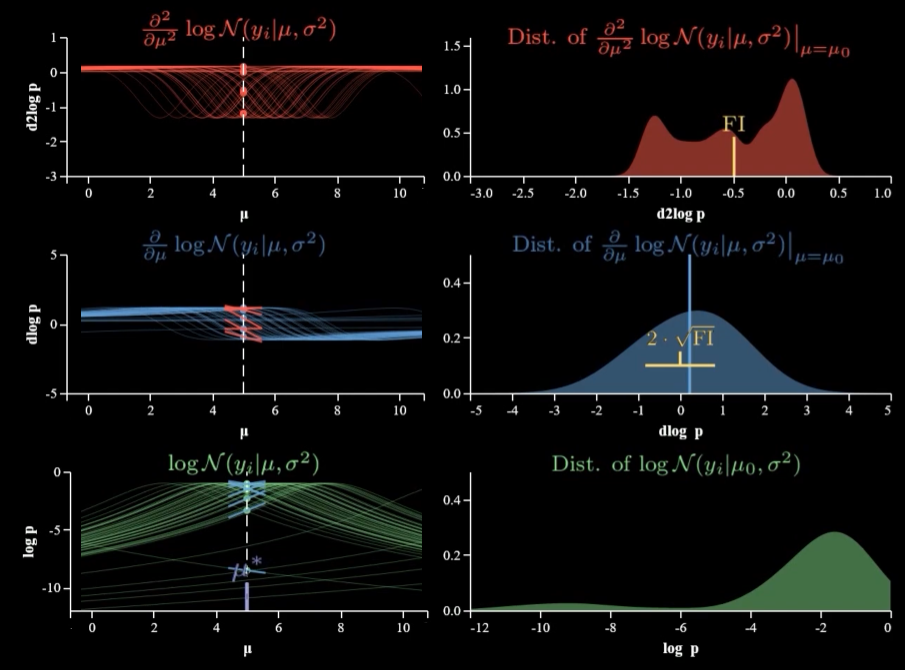

Fisher Information

Definition

Fisher Information

$$\begin{aligned}
I(\theta) &:= E\left[ \left( \frac{\partial \ln f(X|\theta)}{\partial \theta} \right)^{2} \right] = \int_{\mathbb{R}} \left( \frac{\partial \ln f(x|\theta)}{\partial \theta} \right)^{2} f(x|\theta)dx\\
&= -E\left[ \frac{\partial^{2} \ln f(X|\theta)}{\partial \theta^{2}} \right] = -\int_{\mathbb{R}} \frac{\partial^{2} \ln f(x|\theta)}{\partial \theta^{2}} f(x|\theta)dx
\end{aligned}$$

by the second Bartlett identity.

$$I(\theta) = \operatorname{Var}\left( \frac{\partial \ln f(X|\theta)}{\partial \theta} \right) = \operatorname{Var}(s(\theta|x))$$

where $s(\theta|x)$ is a Score Function

Fisher Information Matrix

![[Pasted image 20231224171415.png|800]]

Let $\mathbf{X}$ be a Random Vector with PDF $f(x|\boldsymbol{\theta})$, where $\boldsymbol{\theta} \in \Omega \subset R^{p}$, then the Fisher information matrix for $\boldsymbol{\theta}$ is a $p \times p$ matrix defined as

$$I(\boldsymbol{\theta}) := \operatorname{Cov}\left( \cfrac{\partial}{\partial \boldsymbol{\theta}} \ln f(x|\boldsymbol{\theta}) \right) = E\left[ \left( \cfrac{\partial}{\partial \boldsymbol{\theta}} \ln f(x|\boldsymbol{\theta}) \right) \left( \cfrac{\partial}{\partial \boldsymbol{\theta}} \ln f(x|\boldsymbol{\theta}) \right)^\intercal \right] = -E\left[ \cfrac{\partial^{2}}{\partial \boldsymbol{\theta} \partial \boldsymbol{\theta}^\intercal} \ln f(x|\boldsymbol{\theta}) \right]$$

and the $jk$-th element of $I(\boldsymbol{\theta})$ is $I_{jk} = - E\left[ \cfrac{\partial^{2}}{\partial \theta_{j} \partial\theta_{k}} \ln f(x|\boldsymbol{\theta}) \right]$

Properties

Chain Rule

The information in a Random Sample $X_{1}, X_{2}, \dots, X_{n}$ of length $n$ is $n$ times the information in a single observation $X_{i}$: $I_\mathbf{X}(\theta) = n I_{X_{1}}(\theta)$

Facts

In a location model, the information does not depend on the location parameter.

$$I(\theta) = \int_{-\infty}^{\infty}\left( \frac{f'(z)}{f(z)} \right)^{2} f(z)dz$$

Construction of GLMs

Generalized Linear Model

Definition

A generalized linear model (GLM) is a generalization of linear regression model.

The GLM consists of three elements.

- Response variable: The i.i.d. response variables follow Exponential Family.

- Linear predictor: is called a linear predictor

- Link function: There exists a link function that satisfies , where is assumed to be monotone and differentiable.

Link Functions

Link functions for binomial response.

- Logit:

- Probit: where is a CDF of Normal Distribution

- Complementary log-log:

For an Exponential Family, the link function satisfying is called natural link.

Facts

The parameter is estimated by MLE. Since the score function is non-linear, the likelihood equation has no explicit solution in general, so we use the Newton–Raphson method or Fisher’s Scoring Method.

Estimation of Regression Coefficients

Newton's Method

Definition

An iterative algorithm for finding the roots of a differentiable function $f$, which are the solutions to the equation $f(x) = 0$

Algorithm

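Find the next point $x_{n+1}$ at which the first-order Taylor approximation of $f$ around the current point $x_{n}$ is 0.

- Taylor first approximation: $f(x) \approx f(x_{n}) + f'(x_{n})(x - x_{n})$
- The point at which the approximation is 0: $x_{n+1} = x_{n} - \cfrac{f(x_{n})}{f'(x_{n})}$
- Multivariate version: $\mathbf{x}_{n+1} = \mathbf{x}_{n} - J(\mathbf{x}_{n})^{-1} f(\mathbf{x}_{n})$, where $J$ is the Jacobian matrix of $f$

In convex optimization,

Find the minimum point^[its derivative is 0] of the Taylor quadratic approximation.

- Taylor quadratic approximation: $f(x) \approx f(x_{n}) + f'(x_{n})(x - x_{n}) + \cfrac{1}{2}f''(x_{n})(x - x_{n})^{2}$
- The derivative of the quadratic approximation: $f'(x_{n}) + f''(x_{n})(x - x_{n})$
- The minimum point of the quadratic approximation^[the point such that the derivative of the quadratic approximation is 0]: $x_{n+1} = x_{n} - \cfrac{f'(x_{n})}{f''(x_{n})}$
- Multivariate version: $\mathbf{x}_{n+1} = \mathbf{x}_{n} - H(\mathbf{x}_{n})^{-1} \nabla f(\mathbf{x}_{n})$, where $H$ is the Hessian Matrix of $f$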

Examples

Solution of a linear system

Solve with an MSE loss

The cost function is and its gradient and hessian are ,

Then, solution is If is invertible, is a Least Square solution.
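A minimal sketch of the scalar root-finding iteration, assuming the update $x_{n+1} = x_{n} - f(x_{n})/f'(x_{n})$ and a user-supplied derivative. The function name, the tolerance, and the example $f(x) = x^{2} - 2$ are hypothetical.

```python
def newton_root(f, f_prime, x0, tol=1e-12, max_iter=50):
    """Find a root of f by Newton's method, starting from x0."""
    x = x0
    for _ in range(max_iter):
        # Root of the first-order Taylor approximation around the current point
        step = f(x) / f_prime(x)
        x -= step
        if abs(step) < tol:
            break
    return x


# Root of f(x) = x^2 - 2, i.e. the square root of 2
root = newton_root(lambda x: x * x - 2.0, lambda x: 2.0 * x, x0=1.0)
print(root)
```

For a quadratic cost such as the least squares example above, the quadratic approximation is exact, so the optimization version of the iteration reaches the minimizer in a single step from any starting point.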


Fisher's Scoring Method

Definition

Fisher’s scoring method is a variation of Newton–Raphson method that uses Fisher Information instead of the Hessian Matrix

where is the Fisher Information.


Goodness-of-Fit Measures for GLMs

Deviance

Definition

Deviance is a goodness-of-fit statistic for Generalized Linear Model.

Let be an estimator of under maximal model . The deviance is defined as where is a log-likelihood, is the number of parameter of the current model, and is the sample size.

The null hypothesis : the current model is adequate, can be tested with the deviance. We reject if

Examples


Distribution Deviance Normal Poisson Binomial Gamma Inverse Gaussian

Pearson's Chi-squared Statistic

Definition

The Pearson’s statistic is goodness-of-fit measure for GLM defined as where , is the variance function, is the number of parameter of the current model, and is the sample size.

Facts

Under the Gaussian distribution, the Deviance and the Pearson’s statistic are the same and follow a Chi-squared Distribution

Testing and Residuals

Goodness-of-Fit Test with Deviance

Definition

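Assume a current model $M_1$ with $p$ parameters and consider a null hypothesis $H_0$ under which the model reduces to a nested model $M_0$ with $q < p$ parameters. Then, the test Statistic is defined as $$\Delta D = D_0 - D_1 \sim \chi^2_{p-q}$$ where $D_0, D_1$ are the Deviances of the generalized linear models $M_0, M_1$.

We reject $H_0$ if $\Delta D > \chi^2_{\alpha,\, p-q}$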


Pearson Residual

Definition

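The Pearson residual is defined as $$r_{P,i} = \frac{y_i - \hat{\mu}_i}{\sqrt{V(\hat{\mu}_i)}}$$ where $\hat{\mu}_i$ is the fitted mean and $V(\cdot)$ is the variance function.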

Facts

$\sum_i r_{P,i}^2 = X^2$, where $X^2$ is the Pearson’s Chi-squared Statistic

Anscombe Residual

Definition

Anscombe Transformation

$$A(\mu) = \int \frac{d\mu}{V(\mu)^{1/3}}$$

where $V(\cdot)$ is the variance function.

Anscombe Residual

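The Anscombe residual is the Anscombe-transformed Pearson Residual. The transformation makes the residual approximately follow a normal distribution. $$r_{A,i} = \frac{A(y_i) - A(\hat{\mu}_i)}{A'(\hat{\mu}_i)\sqrt{V(\hat{\mu}_i)}}$$ where $\hat{\mu}_i$ is the fitted mean and $V(\cdot)$ is the variance function.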


Deviance Residual

Definition

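The deviance residual is defined as $$r_{D,i} = \operatorname{sign}(y_i - \hat{\mu}_i)\sqrt{d_i}$$ where $\hat{\mu}_i$ is the fitted mean and $d_i$ is the contribution of the $i$-th observation to the Deviance, $D = \sum_i d_i$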


ANOVA Models

Constraints for Dummy Variable

Definition

When using Dummy Variables, the design matrix $X \in \mathbb{R}^{n \times (k+1)}$, where $k$ is the number of groups, is not full rank. The problem can be solved by imposing constraints.

Sum-to-Zero Constraint

Sum-to-zero constraint uses row-wise demeaned dummy variables.

With sum-to-zero constraint, each coefficient indicates discrepancy from overall mean, and the intercept term indicates overall mean.

Corner-Point Constraint

Corner-point constraint omits a base group from the model.

With corner-point constraint, each coefficient indicates discrepancy from base group, and the intercept term indicates mean of base group.
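The effect of the two constraints on the coefficients can be seen in a small balanced example. This is a sketch: the three-group data are made up, and the sum-to-zero design is built with effect coding (the last group coded as $-1$ in every column), which is one common way to impose that constraint and is an assumption here.

```python
import numpy as np

groups = np.repeat([0, 1, 2], 3)       # 3 groups, 3 observations each (made up)
y = np.arange(1.0, 10.0)               # group means are 2, 5, 8
k = 3

D = np.eye(k)[groups]                  # one dummy column per group
ones = np.ones(len(y))

# Intercept plus all k dummies: columns are linearly dependent.
X_full = np.column_stack([ones, D])
rank_full = np.linalg.matrix_rank(X_full)

# Corner-point constraint: drop the base group (group 0).
X_corner = np.column_stack([ones, D[:, 1:]])
beta_corner = np.linalg.lstsq(X_corner, y, rcond=None)[0]

# Sum-to-zero constraint via effect coding: last group is minus the sum of the others.
X_sum = np.column_stack([ones, D[:, :-1] - D[:, [-1]]])
beta_sum = np.linalg.lstsq(X_sum, y, rcond=None)[0]
```

With these data, the corner-point intercept is the mean of group 0 and the other coefficients are differences from it, while the sum-to-zero intercept is the overall mean and the coefficients are deviations from it.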


Deviance Test for One-way ANOVA

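The null hypothesis $H_0: \alpha_1 = \dots = \alpha_k = 0$, i.e. there is no treatment effect, can be tested with the Deviance. Let $D_0$ be the deviance of the null (intercept-only) model and $D_1$ the deviance of the one-way model with $k$ groups. If $\sigma^2$ is known, the test statistic is defined as $$\frac{D_0 - D_1}{\sigma^2} \sim \chi^2_{k-1}$$ and we reject $H_0$ if $\frac{D_0 - D_1}{\sigma^2} > \chi^2_{\alpha,\, k-1}$

If $\sigma^2$ is unknown, the test statistic is defined as $$F = \frac{(D_0 - D_1)/(k-1)}{D_1/(n-k)} \sim F_{k-1,\, n-k}$$ and we reject $H_0$ if $F > F_{\alpha;\, k-1,\, n-k}$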


Deviance Test for Two-way ANOVA

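For a two-way ANOVA with factors $A$ and $B$, we have three null hypotheses to test:

- $H_0^{A}: \alpha_i = 0$ for all $i$, i.e. there is no treatment effect of factor $A$

- $H_0^{B}: \beta_j = 0$ for all $j$, i.e. there is no treatment effect of factor $B$

- $H_0^{AB}: (\alpha\beta)_{ij} = 0$ for all $i, j$, i.e. there is no interaction effect between factors $A$ and $B$

They can be tested with the Deviance.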

If is known, the test statistic for is defined as And reject the if

If is unknown, the test statistic for is defined as And reject the if

If is known, the test statistic for is defined as And reject the if

If is unknown, the test statistic for is defined as And reject the if

If is known, the test statistic for is defined as And reject the if

If is unknown, the test statistic for is defined as And reject the if


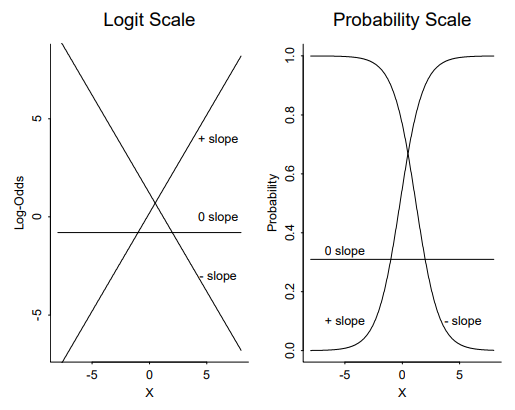

Logistic Regression Model

Logistic Regression

Definition

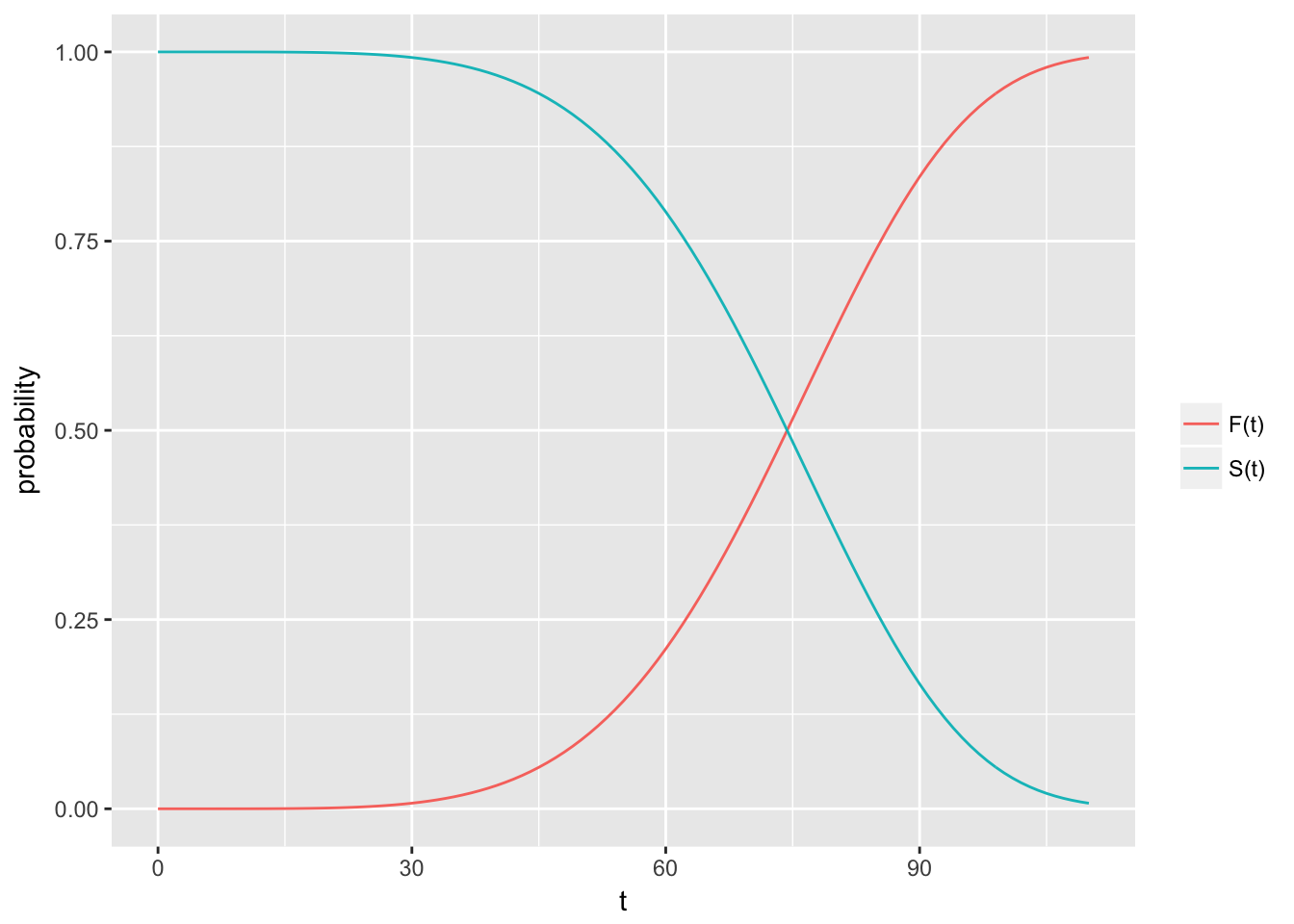

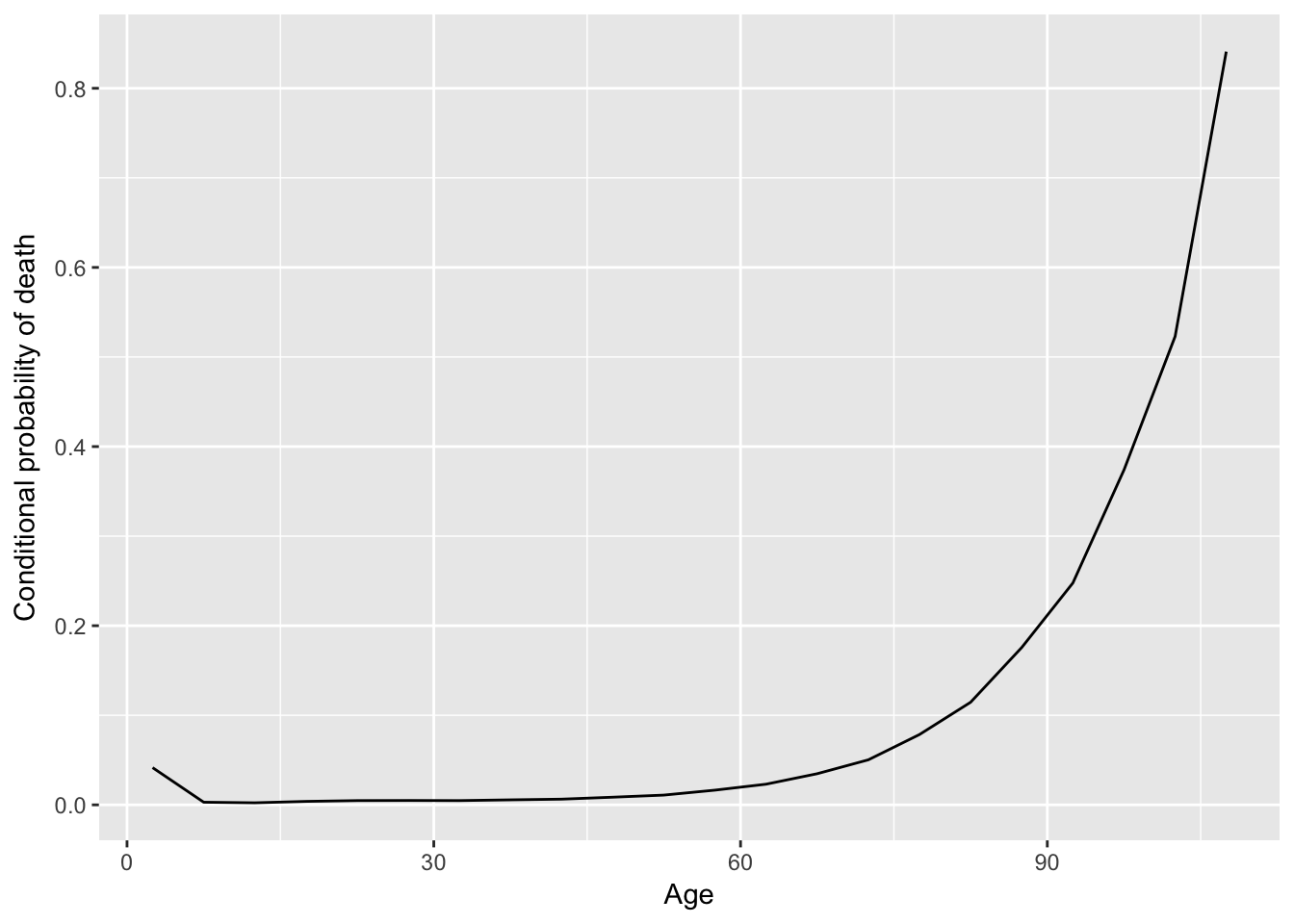

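Logistic regression is a Generalized Linear Model with the Logit link function. Logistic regression models the log-odds of an event as a linear combination of independent variables, $$\log\frac{p}{1-p} = \mathbf{x}^\top\boldsymbol{\beta}$$ The probability is calculated as $$p = P(Y = 1 \mid \mathbf{x}) = \frac{1}{1 + e^{-\mathbf{x}^\top\boldsymbol{\beta}}}$$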
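A short sketch of the relationship between the linear predictor, the log-odds, and the probability in logistic regression. The design matrix and coefficients are made-up values, and `logistic_prob` is a hypothetical helper name.

```python
import numpy as np

def logistic_prob(X, beta):
    """Inverse logit of the linear predictor X @ beta."""
    eta = X @ beta
    return 1.0 / (1.0 + np.exp(-eta))

X = np.array([[1.0, -2.0],
              [1.0,  0.0],
              [1.0,  2.0]])      # intercept column plus one covariate (made up)
beta = np.array([0.0, 1.0])      # made-up coefficients

p = logistic_prob(X, beta)
log_odds = np.log(p / (1 - p))   # recovers the linear predictor
```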


Weighted Least Squares for Binomial Distribution

Definition

Let and random variables and consider a variance stabilizing transformation . Then, by Taylor expansion and Delta Method and hold. Now, the Pearson is defined as By minimizing we can find the WLS estimator . It can be seen as a Weighted Least Squares with , , and

Widely Used Variance Stabilizing Transformations

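For an observed proportion $p = y/m$ with $y$ successes out of $m$ trials:

- Logit function: $g(p) = \log\frac{p}{1-p}$

- The arcsine square-root function: $g(p) = \arcsin\sqrt{p}$

- Empirical logistic function: $g(y) = \log\frac{y + 1/2}{m - y + 1/2}$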

Multinomial Logistic Regression

Definition

Multinomial logistic regression is used for polychotomous (multi-class) data.

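Suppose the number of classes is $K$, with class $K$ taken as the baseline. The probability is calculated as $$P(Y = k \mid \mathbf{x}) = \frac{\exp(\mathbf{x}^\top\boldsymbol{\beta}_k)}{1 + \sum_{j=1}^{K-1}\exp(\mathbf{x}^\top\boldsymbol{\beta}_j)}, \quad k = 1, \dots, K-1, \qquad P(Y = K \mid \mathbf{x}) = \frac{1}{1 + \sum_{j=1}^{K-1}\exp(\mathbf{x}^\top\boldsymbol{\beta}_j)}$$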
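The class probabilities can be sketched as a softmax over the linear predictors. The version below assumes a baseline-category parameterization in which the last class has all coefficients fixed at zero; that choice, the helper name `multinomial_probs`, and the example inputs are assumptions for illustration.

```python
import numpy as np

def multinomial_probs(X, B):
    """Class probabilities for K classes.

    B has shape (p, K-1): one coefficient column per non-baseline class.
    The last class is the baseline with linear predictor 0 (assumed).
    """
    eta = np.column_stack([X @ B, np.zeros(X.shape[0])])
    eta -= eta.max(axis=1, keepdims=True)      # stabilize the exponentials
    exp_eta = np.exp(eta)
    return exp_eta / exp_eta.sum(axis=1, keepdims=True)

X = np.array([[1.0, 0.5],
              [1.0, -1.0]])                    # made-up design matrix
B = np.array([[0.2, -0.3],
              [1.0,  0.5]])                    # made-up coefficients, K = 3

P = multinomial_probs(X, B)                    # each row sums to 1
P_equal = multinomial_probs(X, np.zeros((2, 2)))  # all classes equally likely
```

Subtracting the row maximum before exponentiating does not change the probabilities; it only avoids overflow for large linear predictors.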


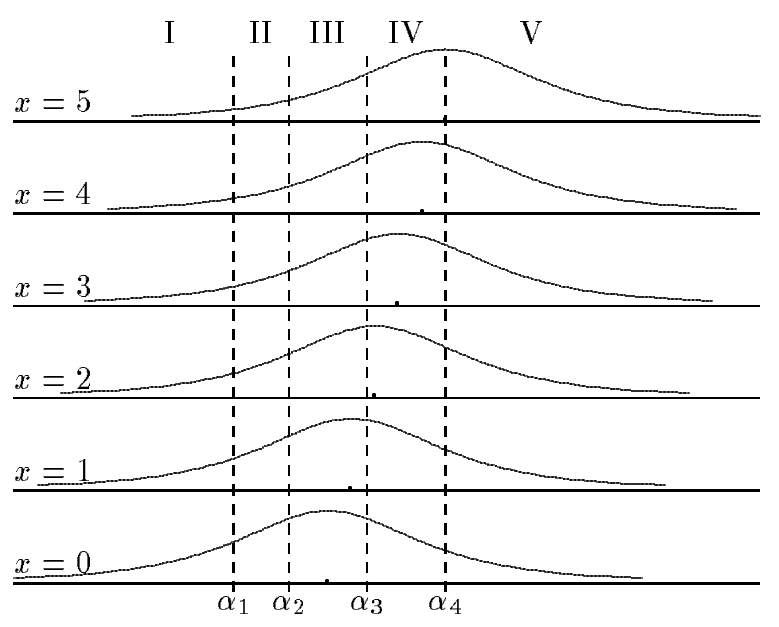

Proportional Odds Model

Definition

The proportional odds model is a Generalized Linear Model used for modeling the ordinal response.

Consider a response $Y$ having $K$ ordered categories. The model is written in terms of the cumulative probabilities of the response, $P(Y \le k \mid \mathbf{x})$ for $k = 1, \dots, K-1$.


Log-Linear Model

Contingency Table

Definition

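$I \times J$ contingency table

| | $1$ | $\cdots$ | $J$ | Total |
|---|---|---|---|---|
| $1$ | $y_{11}$ | $\cdots$ | $y_{1J}$ | $y_{1+}$ |
| $\vdots$ | $\vdots$ | | $\vdots$ | $\vdots$ |
| $I$ | $y_{I1}$ | $\cdots$ | $y_{IJ}$ | $y_{I+}$ |
| Total | $y_{+1}$ | $\cdots$ | $y_{+J}$ | $n$ |

A contingency table is a table that displays the multivariate frequency distribution of the variables.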

Examples

Poisson and Multinomial Distribution Cases

2-Dimensional Case

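Consider an $I \times J$ contingency table.

No Margin Fixed

If the $Y_{ij}$‘s are independent and $Y_{ij} \sim \text{Poisson}(\mu_{ij})$, then the joint PMF becomes $$P(\mathbf{y}) = \prod_{i=1}^{I}\prod_{j=1}^{J} \frac{\mu_{ij}^{y_{ij}} e^{-\mu_{ij}}}{y_{ij}!}$$

Fixed Total

Given the total $n = \sum_{i,j} y_{ij}$, the conditional distribution is a Multinomial Distribution. $$P(\mathbf{y} \mid n) = \frac{n!}{\prod_{i,j} y_{ij}!}\prod_{i,j} \pi_{ij}^{y_{ij}}$$ where $\pi_{ij} = \mu_{ij} / \sum_{i,j}\mu_{ij}$ and $\sum_{i,j}\pi_{ij} = 1$

One Margin Fixed

Given one fixed margin (in this case, row) $y_{i+} = \sum_j y_{ij}$, the conditional distribution of the frequencies of the $i$-th row is a Multinomial Distribution. And if the rows are independent, the conditional distribution is a product-Multinomial Distribution (joint multinomial distribution). $$P(\mathbf{y} \mid y_{1+}, \dots, y_{I+}) = \prod_{i=1}^{I} \frac{y_{i+}!}{\prod_{j} y_{ij}!}\prod_{j=1}^{J} \pi_{j \mid i}^{y_{ij}}, \qquad \pi_{j \mid i} = \frac{\mu_{ij}}{\mu_{i+}}$$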

3-Dimensional Case

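Consider an $I \times J \times K$ contingency table.

No Margin Fixed

If the $Y_{ijk}$‘s are independent and $Y_{ijk} \sim \text{Poisson}(\mu_{ijk})$, then the joint PMF becomes $$P(\mathbf{y}) = \prod_{i,j,k} \frac{\mu_{ijk}^{y_{ijk}} e^{-\mu_{ijk}}}{y_{ijk}!}$$

Fixed Total

Given the total $n = \sum_{i,j,k} y_{ijk}$, the conditional distribution is a Multinomial Distribution. $$P(\mathbf{y} \mid n) = \frac{n!}{\prod_{i,j,k} y_{ijk}!}\prod_{i,j,k} \pi_{ijk}^{y_{ijk}}$$ where $\pi_{ijk} = \mu_{ijk} / \sum_{i,j,k}\mu_{ijk}$ and $\sum_{i,j,k}\pi_{ijk} = 1$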

One Margin Fixed

Given one fixed margin (in this case, ) , and each -th rows are independent, the conditional distribution is product-Multinomial Distribution (joint multinomial distribution).

Two Margin Fixed

If two margins are fixed (in this case, and ) , and each -th and -th rows are independent, the conditional distribution is product-Multinomial Distribution (joint multinomial distribution).


Poisson Regression

Definition

Poisson Regression

Log-linear model is a Generalized Linear Model with log link function. Log-linear model models the log expected counts of an event as a linear combination of independent variables.

Log-Linear Model for Contingency Table

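Consider an $I \times J$ Contingency Table with expected counts $\mu_{ij} = E[Y_{ij}]$, then the model is defined as $$\log \mu_{ij} = \mu + \alpha_i + \beta_j + (\alpha\beta)_{ij}$$ where $\mu$ is the overall effect, $\alpha_i$ and $\beta_j$ are the main effects, and $(\alpha\beta)_{ij}$ are the interaction effects.

The Deviance of the model is defined as $$D = 2\sum_{i,j} y_{ij}\log\frac{y_{ij}}{\hat{\mu}_{ij}}$$ and the Pearson’s Chi-squared Statistic is defined as $$X^2 = \sum_{i,j}\frac{(y_{ij} - \hat{\mu}_{ij})^2}{\hat{\mu}_{ij}}$$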


Overdispersion

Overdispersion

Definition

Overdispersion is the presence of greater observed variance than what would be expected under a given statistical model. It can happen due to heterogeneity or lack of independence between trials, and clustering or grouping in the data.

Overdispersion on Binomial Distribution

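Assume that there are $k$ clusters and the number of observations in each cluster is $m$. Let the Random Variable $Y_i$ following a Binomial Distribution $\text{Bin}(m, \pi_i)$ be the number of successes out of $m$ observations, where $\pi_i$ is also a Random Variable with mean $\pi$ and variance $\tau^2\pi(1-\pi)$. Also, let $Y = \sum_{i=1}^{k} Y_i$ be the total number of successes in all the clusters, where $n = km$. Then, the mean and variance of $Y$ are $$E[Y] = n\pi, \qquad \operatorname{Var}[Y] = n\pi(1-\pi)\left[1 + (m-1)\tau^2\right] = \phi\, n\pi(1-\pi)$$ Here, $\phi = 1 + (m-1)\tau^2$ is called a dispersion parameter. If $\phi > 1$, then overdispersion has occurred.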

The Lexis Ratio is also used for detecting overdispersion where and

Overdispersion on Poisson Distribution

If $\operatorname{Var}[Y] > E[Y]$ in a Poisson Distribution setting, then overdispersion might have occurred. Let $Z_i$, i.i.d., be the number of occurrences at the $i$-th cluster, where the number of clusters $N$ is also a Random Variable. Also, let $Y = Z_1 + \dots + Z_N$ be the total number of occurrences in the $N$ clusters, where $N$ follows a Poisson Distribution and is independent of the $Z_i$. Then, the mean and variance of $Y$ are $$E[Y] = E[N]\,E[Z], \qquad \operatorname{Var}[Y] = E[N]\,E[Z^2]$$ If $E[Z^2] > E[Z]$, then overdispersion occurs.


Nonlinear Regression Model

Nonlinear Regression Model

Nonlinear Regression Model

Definition

Let $y_i$ be a response and $\mathbf{x}_i$ be a $k$-dimensional explanatory variable. The non-linear regression model can be written as $$y_i = f(\mathbf{x}_i, \boldsymbol{\theta}) + \epsilon_i$$ where $f$ is a known regression function and $\boldsymbol{\theta}$ is a $p$-dimensional parameter vector.

The parameter vector cannot be obtained analytically, so it is estimated numerically with methods such as the Newton–Raphson method or the Gauss-Newton Method

Facts

If the function $f$ is linear in the parameters, then the model is Multiple Linear Regression.

Estimation of Parameter

Gauss-Newton Method

Definition

Gauss-Newton method is an iterative algorithm used to solve non-linear least squares problems. This method approximates the Hessian Matrix using the Jacobian Matrix.

Algorithm

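Consider a non-linear regression model with a $k$-dimensional explanatory variable and a $p$-dimensional parameter vector $$y_i = f(\mathbf{x}_i, \boldsymbol{\theta}) + \epsilon_i$$ The first order Taylor expansion gives the linear approximation of the model. $$\mathbf{y} - f(\boldsymbol{\theta}^{(0)}) \approx J(\boldsymbol{\theta}^{(0)})\,\boldsymbol{\delta} + \boldsymbol{\epsilon}$$ where $\boldsymbol{\theta}^{(0)}$ is the initial vector for $\boldsymbol{\theta}$ given by domain knowledge, $\boldsymbol{\delta}$ is the estimation vector with $\boldsymbol{\delta} = \boldsymbol{\theta} - \boldsymbol{\theta}^{(0)}$, and $J(\boldsymbol{\theta}^{(0)})$ is the Jacobian Matrix of the function $f$ at $\boldsymbol{\theta}^{(0)}$.

Now, the approximated model has the form of a Multiple Linear Regression. Since $f(\boldsymbol{\theta}^{(0)})$ and $J(\boldsymbol{\theta}^{(0)})$ are constants given $\boldsymbol{\theta}^{(0)}$, we can find the LSE of $\boldsymbol{\delta}$, $$\hat{\boldsymbol{\delta}} = (J^\top J)^{-1} J^\top\left(\mathbf{y} - f(\boldsymbol{\theta}^{(0)})\right)$$ And the updating formula is defined as $$\boldsymbol{\theta}^{(t+1)} = \boldsymbol{\theta}^{(t)} + \hat{\boldsymbol{\delta}}^{(t)}$$ The update is repeated iteratively until it converges. With a proper initial value $\boldsymbol{\theta}^{(0)}$, $\boldsymbol{\theta}^{(t)}$ converges to the $\hat{\boldsymbol{\theta}}$ minimizing the Sum of Squared Errors Loss.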
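A minimal sketch of the Gauss-Newton iteration: at each step, the residuals are regressed on the Jacobian and the resulting least squares solution is added to the current parameter vector. The exponential model, the noise-free data, the starting value, and all function names are assumptions chosen for illustration; no step-size control is included, so a poor starting value may diverge.

```python
import numpy as np

def gauss_newton(f, jac, x, y, theta0, n_iter=50, tol=1e-10):
    """Iterate theta <- theta + argmin_delta ||r - J delta||^2."""
    theta = np.asarray(theta0, dtype=float)
    for _ in range(n_iter):
        r = y - f(x, theta)                        # current residuals
        J = jac(x, theta)                          # Jacobian at current theta
        delta, *_ = np.linalg.lstsq(J, r, rcond=None)
        theta = theta + delta
        if np.max(np.abs(delta)) < tol:
            break
    return theta

# Hypothetical model: f(x, theta) = theta_0 * exp(theta_1 * x)
def f(x, th):
    return th[0] * np.exp(th[1] * x)

def jac(x, th):
    e = np.exp(th[1] * x)
    return np.column_stack([e, th[0] * x * e])     # df/dtheta_0, df/dtheta_1

x = np.linspace(0.0, 2.0, 30)
theta_true = np.array([2.0, -1.5])
y = f(x, theta_true)                               # noise-free responses

theta_hat = gauss_newton(f, jac, x, y, theta0=[1.5, -1.0])
```

Solving the linearized problem with `np.linalg.lstsq` avoids forming and inverting $J^\top J$ explicitly, which is numerically safer but otherwise equivalent to the normal-equation form.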


Inference in the Nonlinear Model

Distribution of Parameter of Nonlinear Regression

Definition

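Suppose a Nonlinear Regression Model $$y_i = f(\mathbf{x}_i, \boldsymbol{\theta}) + \epsilon_i$$ If the error term follows a Normal Distribution, the LSE is the same as the MLE.

For a large sample size, the distribution of the estimated parameter approximately follows a Normal Distribution $$\hat{\boldsymbol{\theta}} \approx N\left(\boldsymbol{\theta},\ \sigma^2 (J^\top J)^{-1}\right)$$ where $J$ is the Jacobian Matrix of $f$ and $\sigma^2$ is the variance of the error term.

The variance of the error term can be estimated by $$\hat{\sigma}^2 = \frac{\sum_{i=1}^{n}\left(y_i - f(\mathbf{x}_i, \hat{\boldsymbol{\theta}})\right)^2}{n - p}$$ where $p$ is the number of parameters.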


Standard Error for Parameter of Nonlinear Regression

Definition

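Suppose a Nonlinear Regression Model $$y_i = f(\mathbf{x}_i, \boldsymbol{\theta}) + \epsilon_i$$ The standard error for a parameter of nonlinear regression is calculated as $$se(\hat{\theta}_j) = \hat{\sigma}\sqrt{c_{jj}}$$ where $\hat{\sigma}^2 = \frac{SSE}{n-p}$, $c_{jj}$ is the $j$-th diagonal element of $(J^\top J)^{-1}$, and $J$ is the Jacobian Matrix of $f$.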
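The standard errors can be sketched from the Jacobian at the fitted parameters and the residuals. The sketch assumes the error variance is estimated by the residual sum of squares divided by $n - p$, with $p$ the number of parameters, and uses a tiny made-up Jacobian so the result is easy to check by hand; `nls_standard_errors` is a hypothetical helper name.

```python
import numpy as np

def nls_standard_errors(J, resid):
    """Standard errors from sigma^2 (J'J)^{-1}, with sigma^2 = SSE / (n - p)."""
    n, p = J.shape
    sigma2_hat = resid @ resid / (n - p)          # estimated error variance
    cov = sigma2_hat * np.linalg.inv(J.T @ J)     # approximate covariance matrix
    return np.sqrt(np.diag(cov))

# Made-up Jacobian (n = 3, p = 2) and residuals at the fitted parameters
J = np.array([[1.0, 0.0],
              [0.0, 1.0],
              [0.0, 0.0]])
resid = np.array([0.0, 0.0, 1.0])

se = nls_standard_errors(J, resid)   # SSE = 1, n - p = 1, (J'J)^{-1} = I
```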


Joint Confidence Region for Nonlinear Regression

Definition

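Suppose a Nonlinear Regression Model $$y_i = f(\mathbf{x}_i, \boldsymbol{\theta}) + \epsilon_i$$ The joint confidence region for $\boldsymbol{\theta}$ is obtained as $$(\boldsymbol{\theta} - \hat{\boldsymbol{\theta}})^\top J^\top J\, (\boldsymbol{\theta} - \hat{\boldsymbol{\theta}}) \le p\,\hat{\sigma}^2 F_{\alpha;\, p,\, n-p}$$ where $\hat{\sigma}^2 = \frac{SSE}{n-p}$, $p$ is the number of parameters, and $J$ is the Jacobian Matrix of $f$.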


Confidence Interval for Parameter of Nonlinear Regression

Definition

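Suppose a Nonlinear Regression Model $$y_i = f(\mathbf{x}_i, \boldsymbol{\theta}) + \epsilon_i$$ The confidence interval for $\theta_j$ is obtained as $$\hat{\theta}_j \pm t_{\alpha/2,\, n-p}\; se(\hat{\theta}_j)$$ where $se(\hat{\theta}_j)$ is the Standard Error for Parameter of Nonlinear Regression, and $p$ is the number of parameters.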

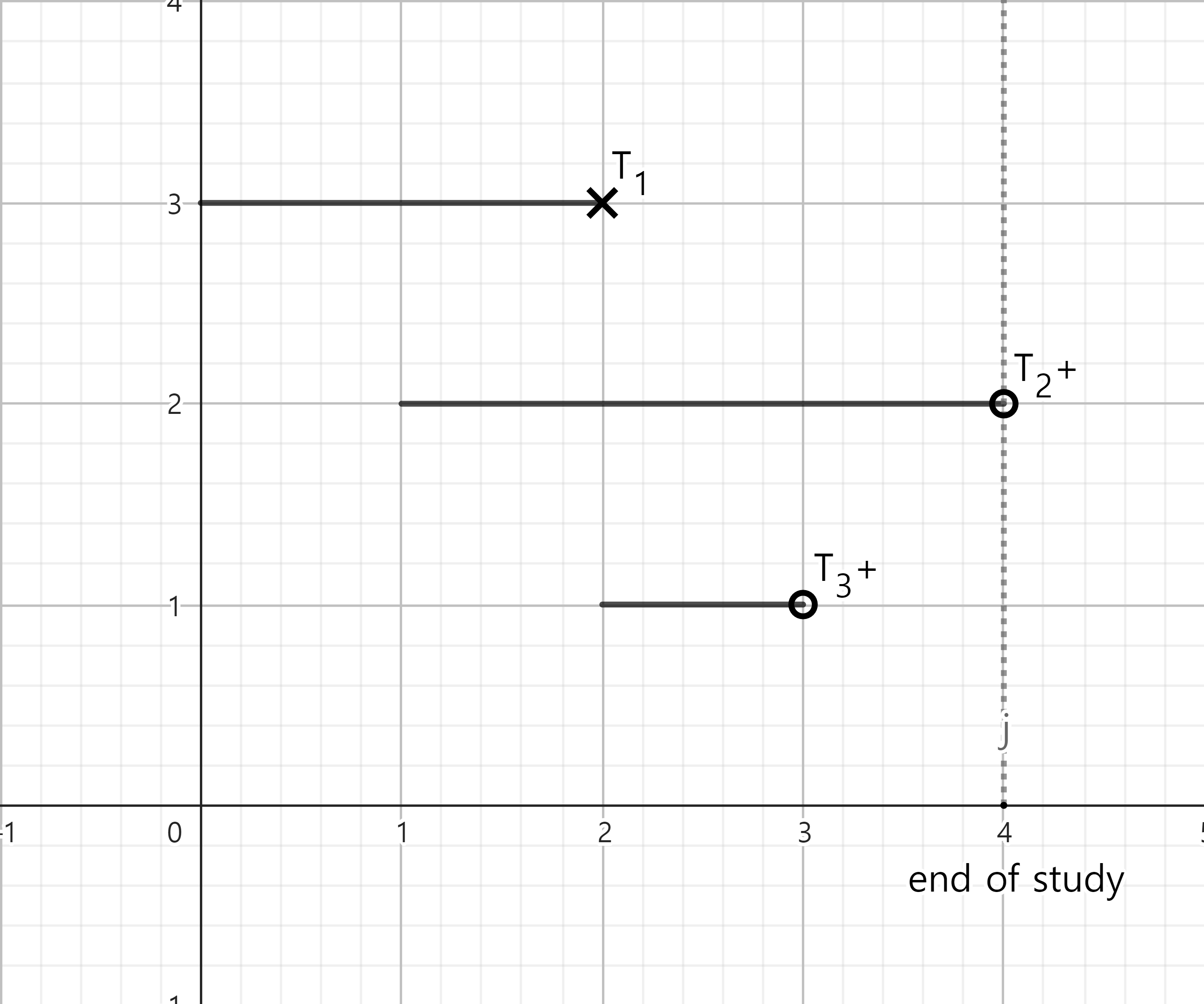
