Matrix Theory

Basic Theories

Matrix

Definition

Operations

Addition, Subtraction

Scalar Multiplication

Transpose

,

Matrix Multiplication

where ,

Vector-Matrix Multiplication

Link to original

Trace

Definition

The sum of elements on the main diagonal

Facts

^[Cyclic property]

Link to originalLink to originalThe Trace of the matrix is equal to the sum of the eigenvalues of the matrix.

Proof Since the matrix only has term on diagonal, and the calculation of cofactor deletes a row and a column, the coefficient of of is always . Also, since are the solution of the Characteristic Polynomial, the expression is factorized as and the coefficient of become a Therefore, and .

Determinant

Definition

Computation

matrix

matrix

Laplace Expansion

Definition

Let be a cofactor matrix of

Along the th column gives

Along the th row gives

Link to original

Facts

The volume of box^[parallelepiped] is expressed by a determinant

changes sign, when two rows are exchanged (permutated)

depends on linearly on the 1st row.

If two row or column vectors in are equal, then

Gauss Elimination does not change

If a matrix has zero row or column vectors, then

If a matrix is diagonal or triangular, then the determinant of is the product of diagonal elements where is the element of the matrix .

Link to originalThe Determinant of the matrix is equal to the product of the eigenvalues of the matrix.

where the dimensions of the matrices are and respectively.

Link to original

Idempotent Matrix

Definition

A matrix whose squared matrix is itself.

Facts

Eigenvalues of idempotent matrix is either or .

Let be a symmetric matrix. Then, has eigenvalues equal to and the rest zero .

Let be an idempotent matrix, then

Link to originalIf is idempotent, then also idempotent.

Inverse Matrix

Rank of Matrix

Definition

The rank of matrix is the number of linearly independent column vectors, or the number of non-zero pivots in Gauss Elimination

Facts

For the non-singular matrices and ,

Link to originalIf a matrix is Symmetric Matrix, then is the number of non-zero eigenvalues.

Inverse Matrix

Definition

satisfies for given matrix

Computation

2 x 2 matrix

where the matrix , and is a Determinant of the matrix

Using Cofactor

where the matrix is the cofactor matrix, which is formed by all the cofactors of a given matrix . Then, by the definition of inverse matrix,

Link to original

Sherman–Morrison Formula

Definition

Suppose is an invertible matrix an d . Then, is invertible . In this case,

Proof

Multiplying to the RHS gives

Since is a scalar, The numerator of the last term can be expressed as

So,

Examples

Updating Fitted Least Square Estimator

Sherman–Morrison formula can be used for updating fitted Least Square estimator

Let be the least square estimator of and the matrices with a new data be and Then,

Let for convenience Then, by Sherman-Morrison formula

So, where by expansion

So, can be obtained without additional inverse matrix calculation.

Facts

Sherman–Morrison formula is a special case of the Woodbury Formula

Link to original

Matrix Inversion Lemma

Definition

where the size of matrices are is , is , and is and should be invertible.

The inverse of a rank-k correction of some matrix can be computed by doing a rank-k correction to the inverse of the original matrix

Proof

Start by the matrix

The decomposition of the original matrix become Inverting both sides gives^[Diagonalizable Matrix]

The decomposition of the original matrix become Again inverting both sides,

The first-row first-column entry of RHS of (1) and (2) above gives the Woodbury formula

Link to original

Moore-Penrose Inverse

Definition

For a matrix , the Moore-Penrose inverse of is a matrix is satisfying the following conditions

Facts

The pseudoinverse is defined and unique for all matrices whose entries are real and complex numbers.

If the matrix is a square matrix, then is also a square matrix and

Link to original Footnotes

Partitioned Matrix

Block Matrix

Definition

A matrix that is interpreted as having been broken into sections called blocks or sub-matrices

Operations

Addition, Subtraction

Scalar Multiplication

Matrix Multiplication

Determinant

Let or be invertible matrices

Link to original

Eigenvalues and Eigenvectors

Eigendecomposition

Definition

where is a matrix and

If is a scalar multiple of non-zero vector , then is the eigenvalue and is the eigenvector.

Characteristic Polynomial

The values satisfying the characteristic polynomial, are the eigenvalues of the matrix

Eigenspace

The set of all eigenvectors of corresponding to the same eigenvalue, together with the zero vector.

The Kernel of the matrix

Eigenvector: non-zero vector in the eigenspace

Algebraic Multiplicity

Let be an eigenvalue of an matrix . The algebraic multiplicity of the eigenvalue is its multiplicity as a root of a Characteristic Polynomial, that is, the largest k such that

Geometric Multiplicity

Let be an eigenvalue of an matrix . The geometric multiplicity of the eigenvalue is the dimension of the Eigenspace associated with the eigenvalue.

Computation

- Find the solution^[eigenvalues] of the Characteristic Polynomial.

- Find the solution^[eigenvectors] of the Under-Constrained System using the found eigenvalue.

Facts

There exists at least one eigenvector corresponding to the eigenvalue

Eigenvectors corresponding to different eigenvalues are always linearly independent.

When is a normal or real Symmetric Matrix, the eigendecomposition is called Spectral Decomposition

The Trace of the matrix is equal to the sum of the eigenvalues of the matrix.

Proof Since the matrix only has term on diagonal, and the calculation of cofactor deletes a row and a column, the coefficient of of is always . Also, since are the solution of the Characteristic Polynomial, the expression is factorized as and the coefficient of become a Therefore, and .

The Determinant of the matrix is equal to the product of the eigenvalues of the matrix.

Not all matrices have linearly independent eigenvectors.

When holds,

- the eigenvalues of are and the eigenvectors of the matrix are the same as .

- the eigenvalues of is

- the eigenvalues of is

- the eigenvalues of is

- the eigenvalues of is

An eigenvalue’s Geometric Multiplicity cannot exceed its Algebraic Multiplicity

the matrix is diagonalizable the Geometric Multiplicity is equal to the Algebraic Multiplicity for all eigenvalues

Link to originalLet be a symmetric matrix. Then, has eigenvalues equal to and the rest zero .

Link to originalThe non-zero eigenvalues of are the same as those of .

Quadratic Forms and Positive Definite Matrix

Quadratic Form

Definition

where is a Symmetric Matrix

A mapping where is a Module on Commutative Ring that has the following properties.

Matrix Expressions

Facts

Let be a Random Vector and be a symmetric matrix of constants. If and , then the expectation of the quadratic form is

Let be a Random Vector and be a symmetric matrix of constants. If and , , and , where , then the variance of the quadratic form is where is the column vector of diagonal elements of .

If and ‘s are independent, then If and ‘s are independent, then

Let , , where is a Symmetric Matrix and , then the MGF of is where ‘s are non-zero eigenvalue of

Let , where is Positive-Definite Matrix, then

Let , , where is a Symmetric Matrix and , then where

Let , , where are symmetric matrices, then are independent if and only if

Let , where are quadratic forms in Random Sample from If and is non-negative, then

- are independent

Let , , where , where , then

Link to original

Positive-Definite Matrix

Definition

Matrix , in which is positive for every non-zero column vector is a positive-definite matrix

Facts

Let , then

- where is a matrix.

- The diagonal elements of are positive.

- For a Symmetric Matrix , for sufficiently small

where is a non-singular matrix.

all the leading minor determinants of are positive.

Link to original

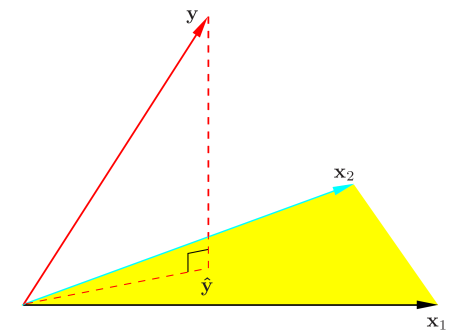

Projection and Decomposition of Matrix

Projection Matrix

Definition

For some vector , is the projection of onto

Facts

The projection matrix is symmetric Idempotent Matrix

Consider a Symmetric Matrix , then is idempotent with rank if and only if eigenvalues are and eigenvalues are .

The projection matrix is Positive Semi-Definite Matrix

Link to originalIf and are projection matrices, and is Positive Semi-Definite Matrix, then

- is a projection matrix.

QR Decomposition

Definition

Decomposition of matrix into a product of an orthonormal matrix and an upper triangular matrix .

Computation

Using the Gram Schmidt Orthonormalization

QR decomposition can be computed by Gram Schmidt Orthonormalization. Where is the matrix of orthonormal column vectors obtained by the orthonormalization and

Link to original

Cholesky Decomposition

Definition

Decomposition of a Positive-Definite Matrix into the product of lower triangular matrix and its Conjugate Transpose.

Computation

Let be Positive-Definite Matrix. Then, By setting the first-row first-column entry , can find other entries using substitution. By summarizing, ,

Link to original

Spectral Theorem

Definition

where is a Unitary Matrix, and

A matrix is a Hermitian Matrix if and only if is Unitary Diagonalizable Matrix.

Facts

Every Hermitian Matrix is diagonalizable, and has real-valued Eigenvalues and orthonormal eigenvector matrix.

For the Hermitian Matrix , the every eigenvalue is real.

Proof For the eigendecomposition , . Since is hermitian, and norm is always real. Therefore, every eigenvalue is real.

Link to originalFor the Hermitian Matrix , the eigenvectors from different eigenvalues are orthogonal.

Proof Let the eigendecomposition where . By the property of hermitian matrix, and So, . Since , . Therefore, are orthogonal.

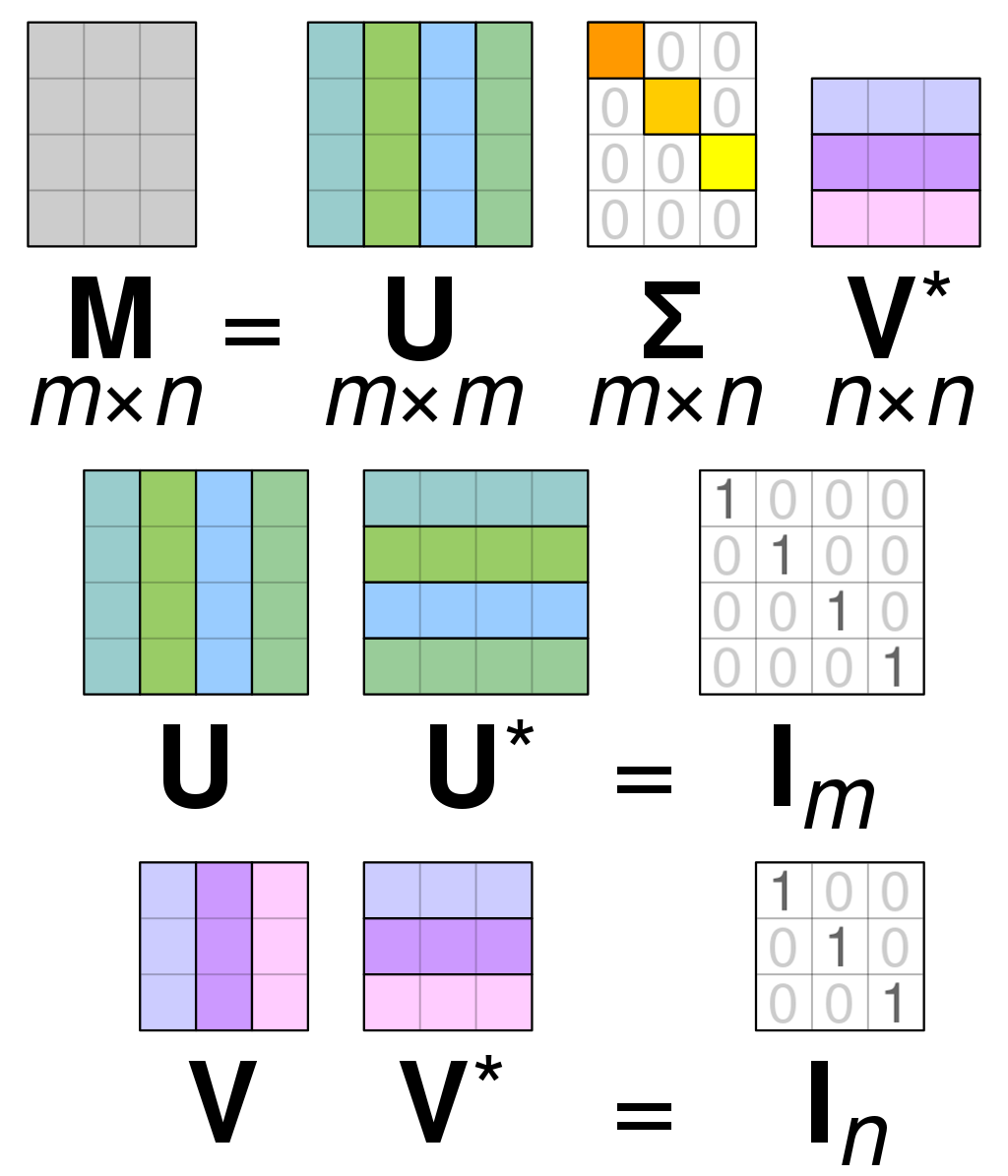

Singular Value Decomposition

Definition

An arbitrary matrix can be decomposed to .

For where and and is Diagonal Matrix

For where and and is Diagonal Matrix

Calculation

For matrix

is the matrix of orthonormal eigenvectors of is the matrix of orthonormal eigenvectors of is the Diagonal Matrix made of the square roots of the non-zero eigenvalues of and sorted in descending order.

If the eigendecomposition is , then the eigenvalues and the eigenvectors are orthonormal by Spectral Theorem. If the , then . Where are called the singular values.

Now, the Orthonormal Matrix is calculated using the singular values. Where is the Orthonormal Matrix of eigenvectors corresponding to the non-zero eigenvalues and is the Orthonormal Matrix of eigenvectors corresponding to zero eigenvalues where each , and is a rectangular Diagonal Matrix.

Since is an orthonormal matrix and is a Diagonal Matrix, . Now, the Orthonormal Matrix is calculated using the linear system and the null space of , Where is the Orthonormal Matrix of the vectors obtained from the system equation And is the Orthonormal Matrix of the vectors . which is corresponding to zero eigenvalues

The Orthonormal Matrix can also be formed by the eigenvectors of similarly to calculating of .

Facts

are the Unitary Matrix

Let be a real symmetric Positive Semi-Definite Matrix Then, the Eigendecomposition(Spectral Decomposition) of and the singular value decomposition of are equal.

where and are non-negative and the shape of the every matrix is

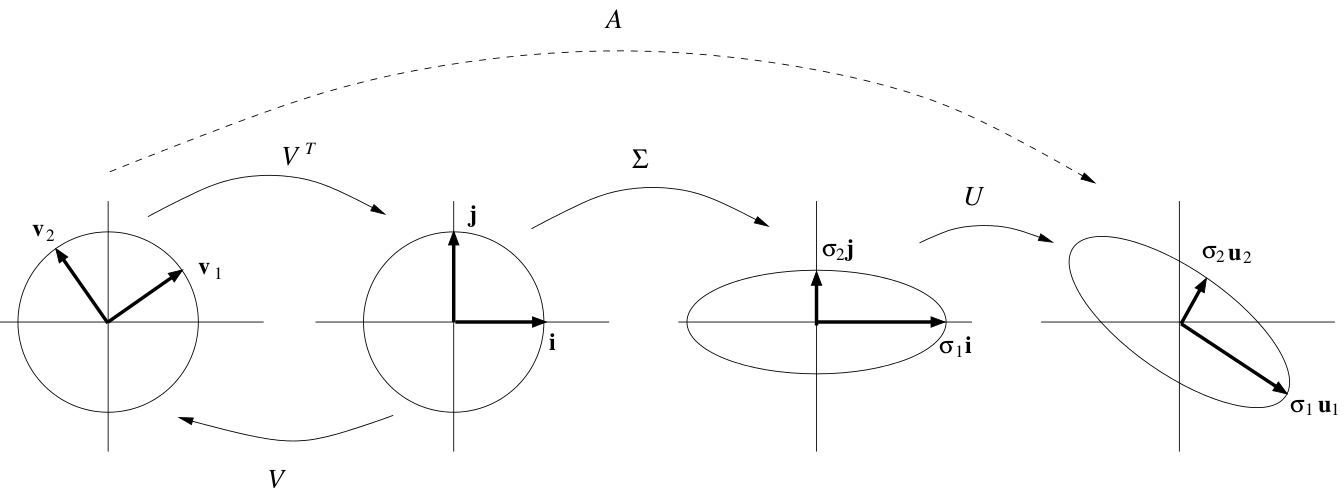

Visualization

every matrix can be decomposed as a

Link to original

- : rotation and reflection

- : scaling

- : rotation and reflection

Miscellanea in Matrix

Centering Matrix

Definition

where is matrix where all elements are

Summing Vector

Facts

Link to originalwhere is a sample variance of

Derivatives of Matrix

Definition

Differentiation by vector

where

Differentiation by Matrix

where

Calculation

Let be a vector function, be an -dimensional vector, then By the definition of derivative, we hold where

Examples

Let be -dimensional vectors, then

Let be an -dimensional vector and be an matrix, then

Let be an -dimensional vector and be an Symmetric Matrix, then the derivative of quadratic form is

Link to original

Hessian Matrix

Definition

A square matrix of second-order partial derivative of a scalar-valued function

Link to original

Vectorization

Definition

Let be an matrix, then

A linear transformation which converts the matrix into a vector.

Facts

Link to originalLet be matrices, then

Kronecker Product

Definition

Let be an matrix and be a matrix, then the Kronecker product is the block matrix

Facts

Link to originalLet be matrices, then

, where are square matrices Eigenvalues of is the product of the eigenvalue of and the eigenvalue of

Norms

Norm

Definition

A real-valued function with the following properties

- Positive definiteness:

- Absolute Homogeneity:

- Sub additivity or Triange inequality: where on a vector space

Vector

Real

Let be an -dimensional vector, then the norm of the is defined as

Complex

Let be an -dimensional complex vector, then the norm of the is defined as

Function

: norm of

Facts

Link to originala norm can be induced by a Inner product

Schatten Norm

Definition

Let be an matrix, then the Schatten norm of is defined as where is the -th singularvalue of

Facts

Link to original

Frobenius Norm

Definition

Let be an matrix, then the Frobenius norm of is defined as

Link to original

Matrix p-Norm

Definition

Let be an matrix, then the matrix p-norm of is defined as

Facts

Link to originalWhen , the norm is a Spectral Norm.

Spectral Norm

Definition

Let be an matrix, then the matrix p-norm of is defined as

Link to original

Nuclear Norm

Definition

Let be an matrix, then the nuclear norm of is defined as

Link to original

L-pq Norm

Definition

norm is an entry-wise matrix norm. where

Facts

When , the norm is the sum of the absolute values of every entry and is called a matrix norm.

Link to original

Rayleigh-Ritz Theorem

Definition

Let be an Hermitian Matrix with eigenvalues sorted in descending order , where , then

Link to original