Manifold Learning

Topological Space

Definition

Topological Space

A topological space is a set along with an additional structure called a Topology .

Link to original

Open Set

Definition

Consider a Topological Space . The elements of the Topology are called the open sets of

Definition in Metric Topology

Consider a Metric Space

Facts

Every point of is an interior point of .

Consider a Topological Space and a subset . Then, is open in if and only if for an arbitrary point in , there exists an open subset containing .

Link to originalis an open set if and only if it’s equal to its Interior .

Closed Set

Definition

Consider a Topological Space . A set is closed if

The complement of an Open Set is called a closed set.

Facts

- and are closed

Consider a Subspace Topology of a Topological Space and a subset . Then,

Consider a Subspace Topology of a Topological Space and a subset . Then,

Consider a Topological Space and a subset . Then, where is the set of all limit points of

Link to originalConsider a Topological Space and a subset . If the subset is equal to its Closure, it is closed.

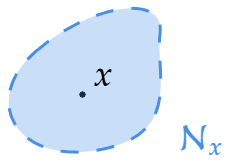

Neighborhood

Definition

Consider a subset of a Topological Space . A neighborhood of a point is a subset of including an open set containing .

Link to original

Embedding

Definition

Embedding is a function between two topological spaces has the following properties:

- is a Homeomorphism

Embedding preserves the structure of a topological structure.

Link to original

Homeomorphism

Definition

A function between two topological spaces is a homeomorphism if it satisfies the following conditions:

- is Bijective

- and its inverse function are continuous ()

- Equivalent:

Homeomorphic

Consider the two topological spaces . If there exists a homeomorphism between and , then is homeomorphic to

Link to original

Compactness

Definition

Consider a Topological Space . The space is compact if every open covering (a collection of open subsets of whose union is ) of has a finite subcovering.

Examples

Consider a Standard Topology on real numbers . Then, the subspace Topologies

Facts

Consider a Subspace Topology of a Topological Space . Then, is compact if and only if every covering of by open sets in has a finite subcollection covering .

A compact subspace of a Hausdorff Space is closed.

The image of a compact space under a continuous map is compact.

Consider a Bijective continuous function between two topological spaces. If is a compact space and is a Hausdorff Space, then is a Homeomorphism

Tychonoff's Theorem

Definition

The Product Topology of any collection of compact topological spaces is compact.

Link to originalConsider a Topological Space . is compact if and only if for every collection of closed sets in that have the Finite Intersection Property, its entire intersection is a non-empty set .

Every closed interval in Real Number is compact.

Heine-Borel Theorem

Definition

Consider a Standard Topology on a Euclidean Space and a subset Then, is closed and bounded if and only if is compact

Link to originalConsider a Topological Space . If is compact then is Limit Point Compact

Consider a metrizable space . Then, is compact if and only if is Limit Point Compact if and only if is Sequentially Compact

Link to originalEvery Compact metrizable space has a countable basis.

Link to originalA Compact Hausdorff Space is a normal space.

Consider a Hausdorff Space and disjoint Compact subsets . Then, there exists disjoint open sets satisfying and .

Every compact space is Countably Compact.

Link to originalLink to originalA Topological Space is Compact if has a basis such that every open covering of by elements of has a finite subcover.

Link to originalAlexander Subbasis Theorem

Definition

A Topological Space is Compact if and only if has a Subbasis such that every open covering of by elements of has a finite subcover.

Link to original

Heine-Borel Theorem

Definition

Consider a Standard Topology on a Euclidean Space and a subset Then, is closed and bounded if and only if is compact

Link to original

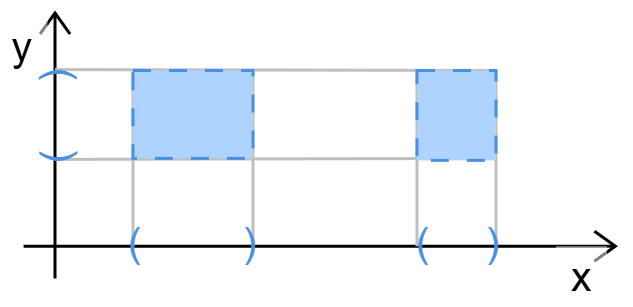

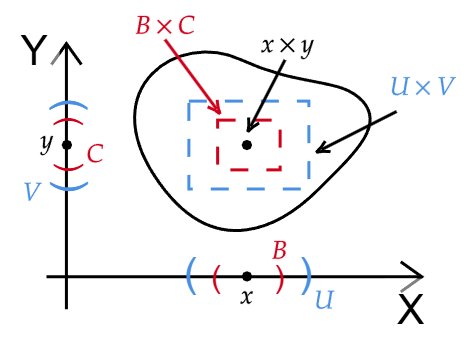

Product Topology

Definition

A product topology is the Cartesian product of a family of topological spaces

Let be an index set, and be a Topological Space. The product topology is defined as where is the -th coordinate (component) of the function .

Facts

Consider bases for the topologies on . Then, is a basis for the topology on .

The countably infinite product space of real numbers with the Product Topology is metrizable.

Consider a function between a Topological Space and a product space. Then, the function is continuous if and only if each Composite Function of the function and the Projection Map is continuous.

Link to originalThe Product Space of any collection of Hausdorff spaces is a Hausdorff space.

Link to originalThe Product Space of any collection of connected spaces is a connected space.

Link to originalThe Product Space of two separable spaces is a separable space.

Link to originalThe Product Space of two first-countable spaces is a first-countable space.

Link to originalThe Product Space of two second-countable spaces is a second-countable space.

Link to originalTychonoff's Theorem

Definition

The Product Topology of any collection of compact topological spaces is compact.

Link to original

Tychonoff's Theorem

Definition

The Product Topology of any collection of compact topological spaces is compact.

Link to original

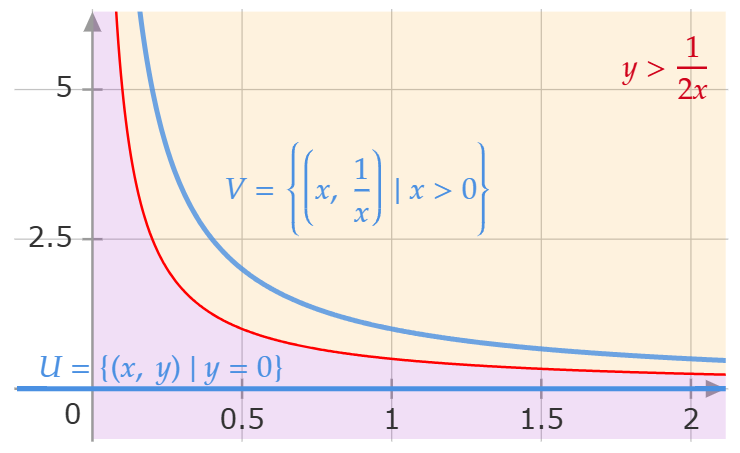

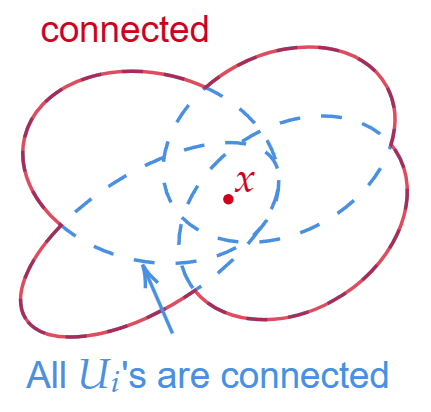

Connected Space

Definition

A Topological Space is connected if it can not be represented as the union of two disjoint, non-empty, open or closed subsets. where is a disjoint union.

Or, equivalently, if are the only open and closed subsets.

Examples

Topologist’s sine curve

Consider a Subspace Topology , where , of a Standard Topology on real plane. The Topological Space is connected, but not path connected.

Consider a set .

- The Topological Space with discrete topology is not connected.

- The Topological Space with trivial topology is connected and path connected by a path .

Consider a Subspace Topology of a Standard Topology on real number. The Topological Space is not connected by a separation

Consider a Subspace Topology , where , of a Standard Topology on real plane. The Topological Space is not connected by a separation .

Facts

Link to originalEvery path connected space is a Connected Space.

Consider a Subspace Topology of a Topological Space . Then, is a separation of if and only if . where is a disjoint union, is the set of all limit points of .

Consider a Subspace Topology of a Topological Space and a separation of . If is a connected subspace, then .

Consider a collection of connected subspaces of a Topological Space . If the subspaces have a point in common , then the union of the collection is connected.

Consider a collection of connected subspaces of a Topological Space , and a connected subspace . If , then is connected.

Consider a connected Subspace Topology of a Topological Space . For some Subspace Topology , if , then is connected.

Consider two topological spaces . If is connected and there exists a Continuous Function , then is a connected space.

Consider a continuous function between two topological spaces. If is connected, then is a connected subspace in .

Consider a Topological Space and a subspace . If is connected, then its Closure is also connected.

Link to originalThe Product Space of any collection of connected spaces is a connected space.

Topological Manifold

Definition

A Locally Euclidean second-countable Hausdorff Space Topological Space is a topological manifold.

A one-dimensional manifold is called a curve, and a two-dimensional manifold is called a surface.

Facts

The Product Space of an -dimensional manifold and an -dimensional manifold is an -dimensional manifold.

Link to originalConsider an -dimensional manifold without boundary and an -dimensional manifold with boundary . Then,

Second-Countable Space

Definition

A second-countable space is a Topological Space whose Topology has a countable basis.

Facts

Every second-countable space is a Separable Space.

-dimension Euclidean Space where , is a second-countable space.

Hilbert Space is a second-countable space.

The Product Space of two second-countable spaces is a second-countable space.

Link to originalLink to originalEvery Second-Countable Space is a Lindelof space.

Hausdorff Space

Definition

A Topological Space is Hausdorff space if where and are the neighbourhoods of and respectively.

Facts

Every finite point set in Hausdorff space is closed ( T1 Axiom).

Consider a Topological Space satisfying axiom. Then, where

Consider a Topological Space . Then, a Sequence of points of converges to at most one point of .

Every totally ordered set is a Hausdorff space in the Order Topology.

A Subspace Topology of a Hausdorff space is a Hausdorff space (Hereditary Property).

The Product Space of any collection of Hausdorff spaces is a Hausdorff space.

Link to originalLink to originalA Compact Hausdorff Space is a normal space.

Whitney Embedding Theorem

Definition

Any -dimensional smooth manifold can be embedded in

Link to original

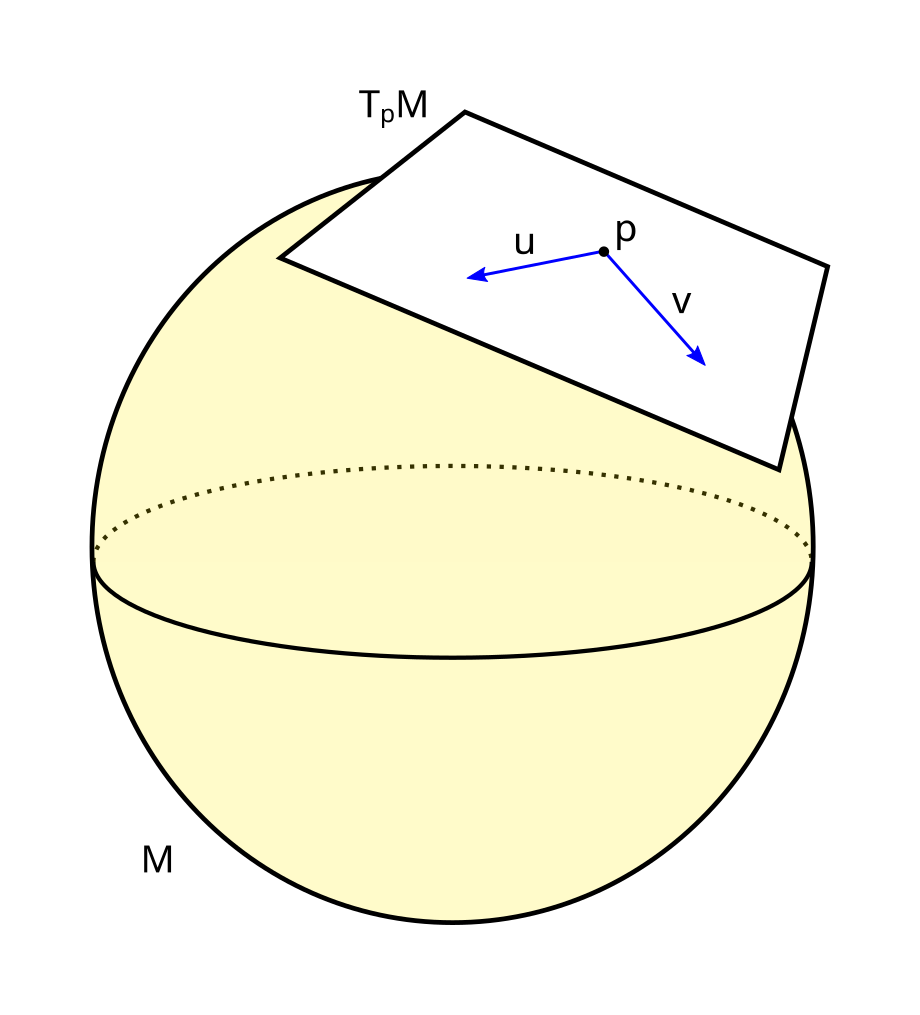

Riemannian Manifold

Definition

A Riemannian manifold is a smooth Topological Manifold together with a Riemannian metric. Many geometric notions such as distance, angles, length, volume, and curvature are defined on a Riemannian manifold.

Let be a Smooth Manifold. For each point , there is an associated vector space called Tangent Space of at . Each Tangent Space equipped with an Inner product defined by the basis of the tangent space. Where is a standard inner product of the ambient space (Euclidean inner product).

The collection of inner products is a Riemannian metric on , and the pair of the manifold and the Riemannian metric defines a Riemannian manifold.

Link to original

Atlas

Definition

Atlas

An atlas on a Topological Manifold is a collection of charts which covers .

Link to original

Chart

Definition

Let be a Topological Manifold. A chart for the manifold is a Homeomorphism , where is an open subset of the manifold, and is an open subset of the Euclidean Space.

Link to original

Differentiable Manifold

Definition

Let be a Topological Manifold. It is -times differentiable if the following condition holds: where is Differentiability Class.

Link to original

Smooth Manifold

Definition

A smooth manifold is an infinitely Differentiable Manifold ().

Facts

The Product Space of an -dimensional smooth manifold and an -dimensional smooth manifold is an -dimensional smooth manifold.

Link to originalConsider an -dimensional smooth manifold without boundary and an -dimensional smooth manifold with boundary . Then,

Diffeomorphism

Definition

Given two differentiable manifolds and , a differentiable map is a diffeomorphism if it is a Bijective and its inverse is differentiable as well.

If these functions are times continuously differentiable, is called -diffeomorphism

If there is a diffeomorphism between two manifolds and , we call these two manifolds are diffeomorphic and denote as

Link to original

Tangent Space

Definition

Suppose that is a Differentiable Manifold and that . Pick a coordinate Chart , where is an open subset of containing . The tangent space is the set of all tangent vectors at on the manifold .

The basis of the tangent plane is given by the partial derivatives

Link to original

Integration of a Function over a Manifold

Definition

Consider a scalar function and a Riemannian Manifold with a local Chart . The integral of over manifold can be calculated in a local coordinate space. where , , and is the absolute value of the Determinant of the inner product at

Link to original

Isometry

Definition

Let two Riemannian manifolds and , and a Diffeomorphism . Then is called an isometry if the following condition holds:

Link to original

Geodesic

Definition

A geodesic is a curve representing the shortest path between two points in a Riemannian Manifold.

Suppose a Riemannian Manifold . A differentiable curve in is defined as a smooth mapping from an open interval into . The distance of a smooth curve is given by Arc Length The distance between two points and on is defined as the Infimum of the length taken over all differentiable curves such that and . where is the set of all differentiable curves in that join up the points and .

Link to original

Laplace-Beltrami Operator

Definition

The Laplace–Beltrami operator is the Divergence of the Gradient. where , and is the absolute value of the Determinant of the inner product at

Calculations

Calculation of Gradient

Consider an -dimensional Riemannian Manifold with a local Chart , and a function .

By the definition of the Gradient, where is the inner product at , and and are the -th and -th element of the vector and gradient vector respectively.

By Chain Rule, The directional derivative of at a point in the direction of is given by where

By combining these two equations, we can obtain the following equality. Let be the inverse matrix of , then The gradient vector is where

Calculation of Divergence

Consider an -dimensional Riemannian Manifold with a local Chart , and a function .

By the Divergence Theorem, for a function with a compact support and a vector field , In a Euclidean Space, the integration of both sides are The integration can be performed over a coordinate Chart of the manifold.^[Integration of a Function over a Manifold] By the Integration by Parts,

\int_{\mathbb{R}^{n}} \tilde{f} \cdot \operatorname{div}V \sqrt{|g|} dx &= - \int_{\mathbb{R}^{n}} g(\nabla f, V) \sqrt{|g|} dx\\ &= - \int_{\mathbb{R}^{n}} \sum\limits_{i=1}^{n} V_{i} \frac{\partial\tilde{f}}{\partial x_{i}} \sqrt{|g|} dx\\ &= - \int_{\mathbb{R}^{n}} \tilde{f} \sum\limits_{i=1}^{n} \frac{\partial}{\partial x_{i}} (V_{i} \sqrt{|g|}) dx \end{aligned}$$ where $\tilde{f} := f \circ \varphi^{-1}$, $U = \varphi(\mathcal{M})$, $|g|$ is the absolute value of the [[Determinant]] of the inner product $g$ at $\varphi^{-1}(x)$, and $V_{i}$ is the $i$-th element of the vector $V$. Therefore, we have $$\operatorname{div}V = \frac{1}{\sqrt{|g|}} \sum\limits_{i=1}^{n} \frac{\partial}{\partial x_{i}} (V_{i} \sqrt{|g|})$$Link to original

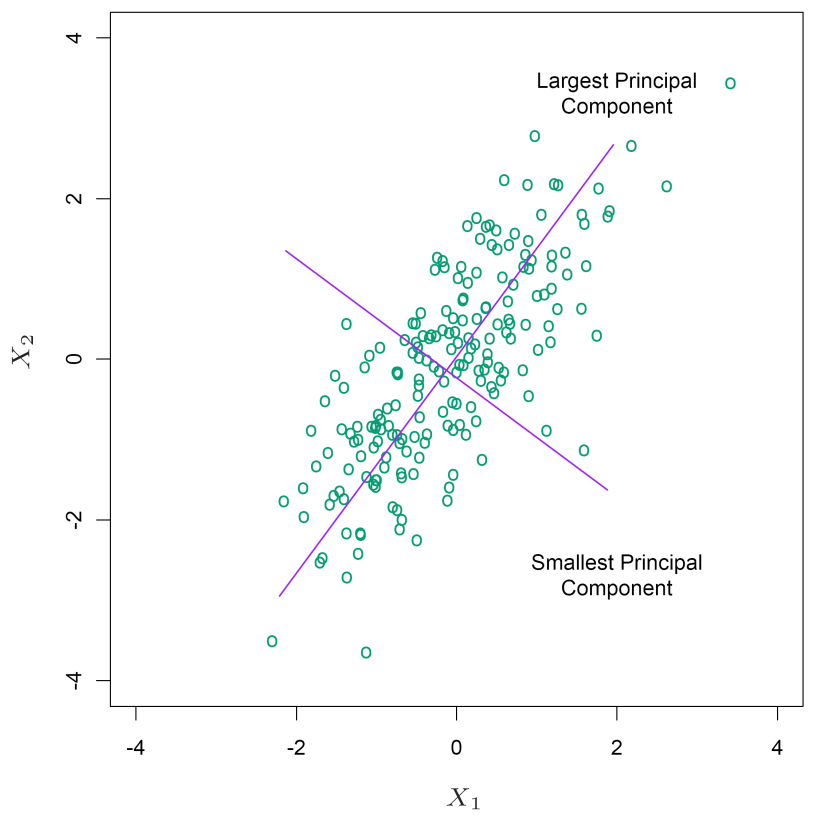

Linear Manifold Learning

Principal Component Analysis

Definition

PCA is a linear dimensionality reduction technique. The correlated variables are linearly transformed onto a new coordinate system such that the directions capturing the largest variance in the data.

Population Version

Given a random vector , we find a such that is maximized: Equivalently, by the Method of Lagrange Multipliers with , By differentiation, the is given by the eigen value problem Thus the maximizing the variance of is the eigenvector corresponding to the largest Eigenvalue.

Sample Version

Given a data matrix , by Singular Value Decomposition, A matrix can be factorized as . By algebra, , where we call the -th principal component.

Facts

Link to originalSince and

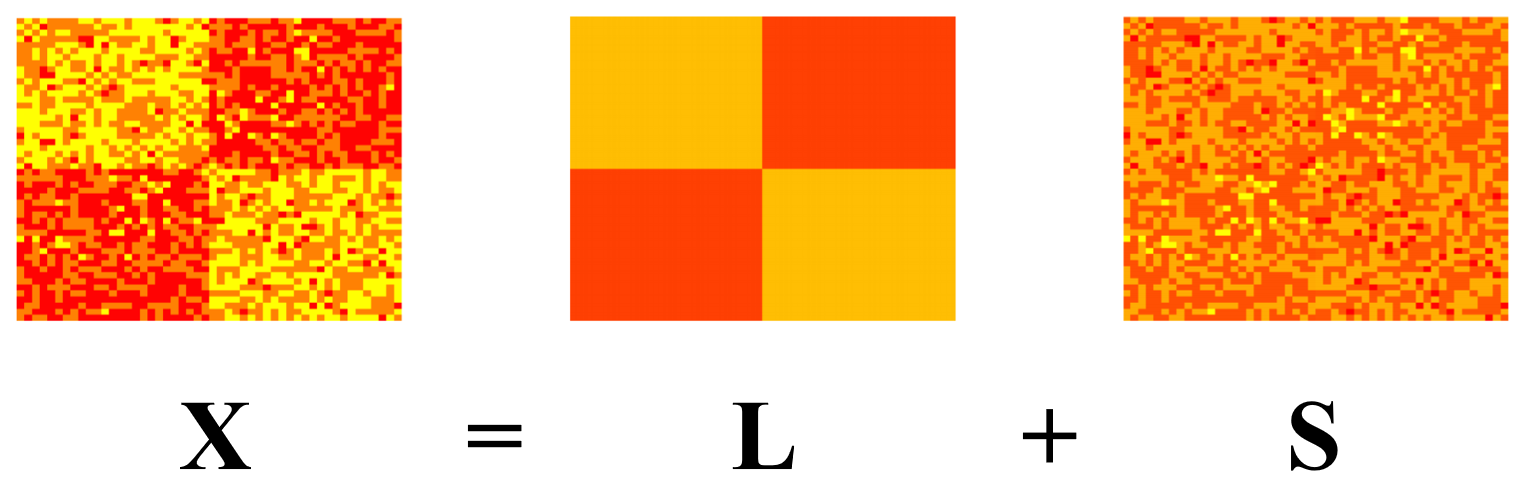

Robust Principal Component Analysis

Definition

PCA doesn’t work well when there are noises in the input data. The goal of Robust PCA is to find a matrix decomposition to a low rank matrix and a sparse matrix.

The optimization problem of Robust PCA is set up as where is a low-rank matrix, is a sparse matrix, and is the number of non-zero elements in the matrix.

However, the optimization problem is infeasible because it is neither continuous nor convex. So, we solve the relaxed problem where is the Nuclear Norm of the matrix, and is the matrix L1 norm.

Applications

Link to original

- Video Surveillance

- Face Recognition

- Latent Semantic Indexing

- Collaborative Filtering (Matrix Completion)

Nonlinear Manifold Learning

Kernel Principal Component Analysis

Definition

Consider an demeaned matrix By SVD , where are orthonormal matrices and is a Diagonal Matrix. Then, By PCA . For a linear kernel , . is an Orthonormal Matrix, The kernel principal components are given by solution of the optimization problem. where

Link to original

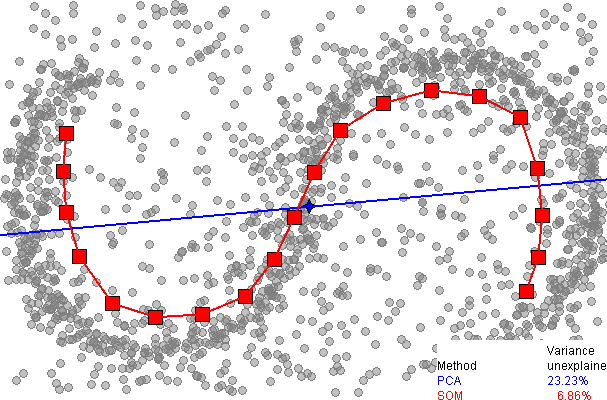

Self-Organizing Map

Definition

A self-organizing map (SOM) is a clustering method that produces a low-dimensional representation of a higher-dimensional data set while preserving the Topological Manifold structure of the data.

The algorithm fits a grid to high-dimensional data and assigns the data to the fitted nodes (prototypes) of the grid.

Link to original

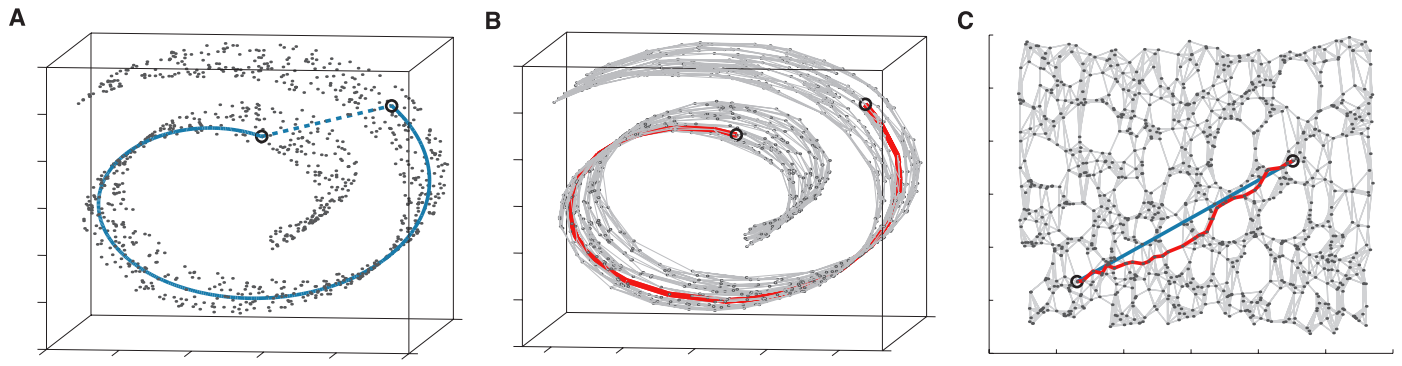

Isomap

Definition

Isomapis a non-linear dimensional reduction method that aims to preserve the intrinsic geometry of high-dimensional data in a lower-dimensional space. The main idea of isomap is to approximate the geodesic distances between data points on a manifold, rather than just using straight-line Euclidean distances.

Algorithm

Link to original

- Determine the neighbors of each point

- All points in some fixed radius

- k-nearest neighbors

- Construct a neighborhood distnace graph

- Compute the shortest path between two nodes with Dijkstra’s algorithm.

Locally Linear Embedding

Definition

Locally linear embedding (LLE) is a non-linear dimensional reduction method that aims to preserve the intrinsic geometry of high-dimensional data in a lower-dimensional space.

Algorithm

Link to original

Determine the k-nearest neighbors of each point

Compute a set of weights for each point that best approximates the point as a linear combination of its neighbors. where is a data point vector, is matrix, and is the set of the neighbors of

Find the low-dimensional embedding of points by solving Eigenvalue problem. Where each point is still described with the same linear combination of its neighbors. where is a vector has lower dimension than , and is the matrix obtained by the step 2.

In a matrix notation, the problem is written as where

By the Method of Lagrange Multipliers, the solution of the optimization problem is given as Therefore, consists of eigenvectors corresponding to the -smallest eigenvalues.

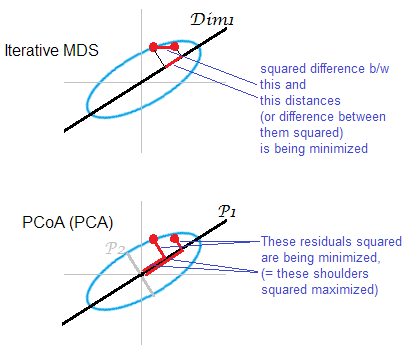

Multidimensional Scaling

Definition

Multidimensional Scaling (MDS) is a non-linear dimensional reduction method. It aims to represent high-dimensional data in a lower-dimensional space while preserving the pairwise distances

Algorithm

MDS

- Find a low-dimensional that minimizes the difference in the pairwise distance of points. where is a data point vector, is a distance function.

Local MDS

Link to original

- Build a set of nearby pairs where is the set of the k-nearest neighbors of

- Find a low-dimensional that minimizes the difference in the local pairwise distance of points. where is a data point vector, is a distance function, is a matrix with very large value, and is a small value weight often .

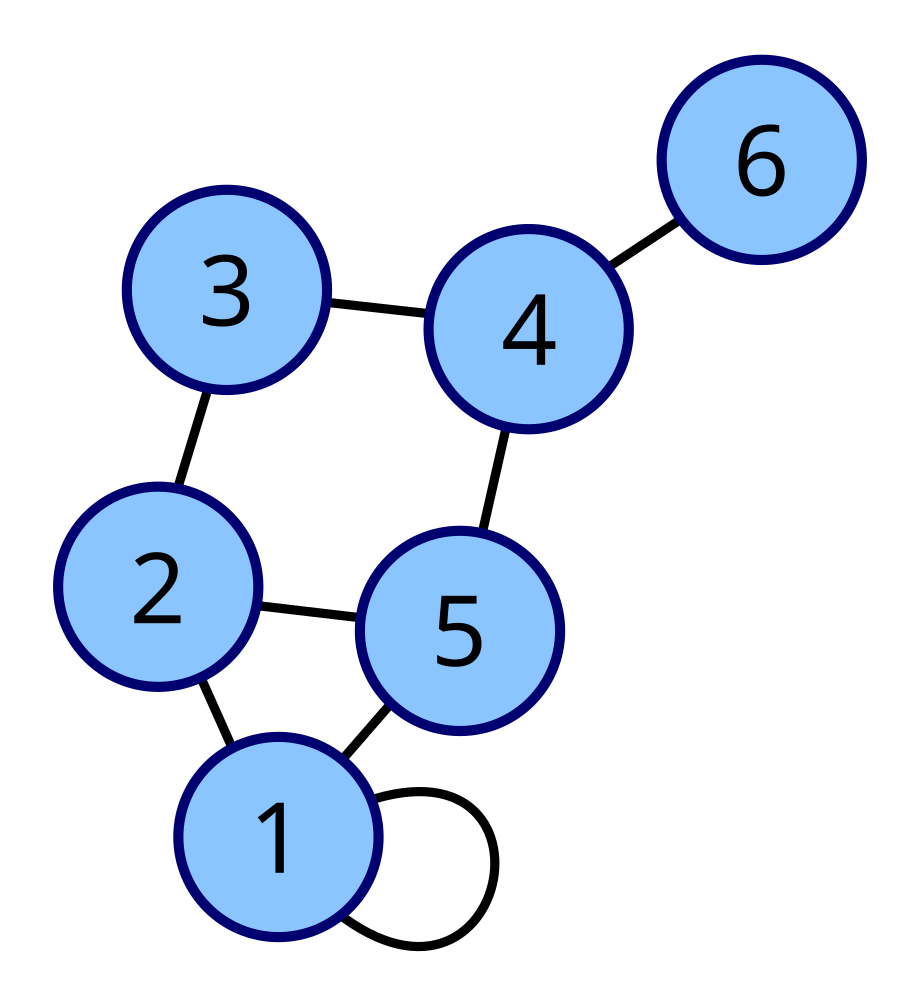

Adjacency Matrix

Definition

Consider a Graph with nodes .

Unweighted Adjacency Matrix

An unweighted adjacency matrix is matrix such that its element is one when there is edge from node to and zero when there is no edge.

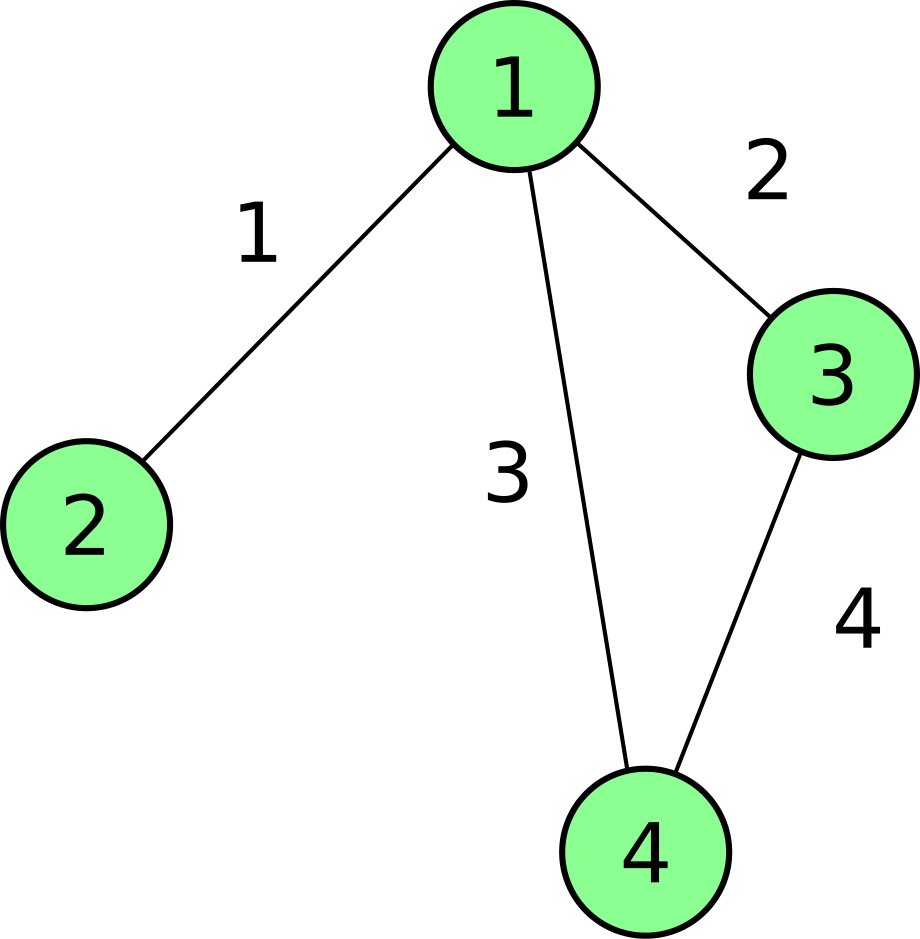

Weighted Adjacency Matrix

An unweighted adjacency matrix is matrix such that its element is the weight of the edge between and , and is zero when there is no edge.

Examples

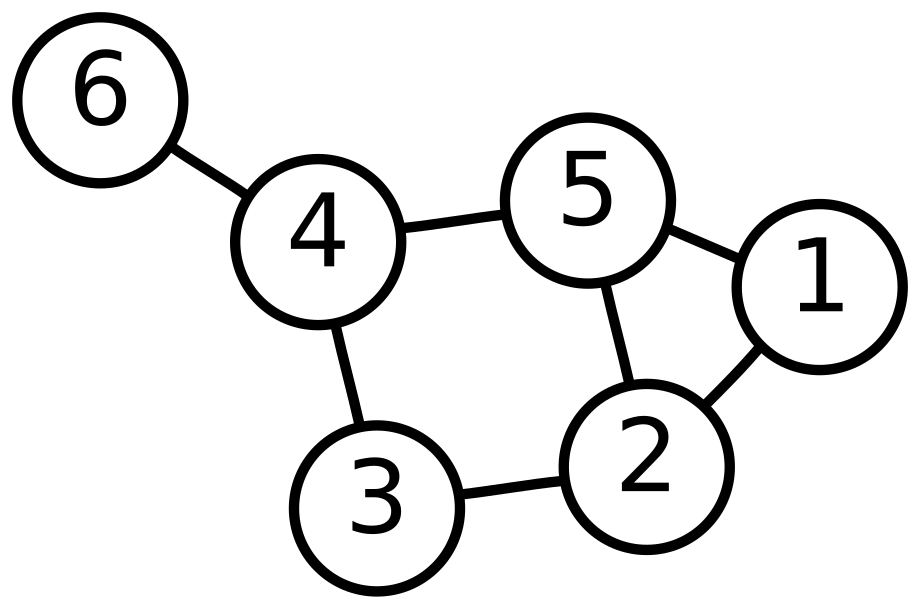

Given graph

Adjacency matrix

Facts

If a graph is undirected, then the adjacency matrix is Symmetric Matrix

Link to original

Degree Matrix

Definition

The degree matrix of an undirected Graph is a Diagonal Matrix representing the sum of the weight of edges connected to each node. It is used together with the Adjacency Matrix to construct the Laplacian Matrix of a graph.

Given a graph and the corresponding Adjacency Matrix . The degree matrix is defined as where ,

Examples

Given graph

Degree matrix

Link to original

Laplacian Matrix

Definition

The Laplacian matrix is a matrix representation of a Graph. where is the Degree Matrix, and is the Adjacency Matrix of the graph.

Random Walk Normalized Laplacian Matrix

Symmetrically Normalized Laplacian Matrix

Examples

Laplacian Matrix for Simple Graph

Adjacency Matrix and Degree Matrix

Laplacian matrix

Laplacian Matrix for Graph with Weighted Edges

Adjacency Matrix and Degree Matrix

Laplacian matrix

Facts

The number of zero-eigenvalues of the Laplacian matrix equals the number of connected clusters in the graph.

Analogousness to the Laplace-Beltrami Operator

The Laplacian matrix on a graph, can be ragarded as a discrete approximation to the Laplace-Beltrami Operator on a manifold.

The quadratic form of the Laplacian Matrix can be seen as a discretization of squared gradient on manifold.

where is a distance between two points and defined by the Adjacency Matrix.

Link to original

Laplacian Eigenmap

Definition

The Laplacian eigenmap is an embedding preserving local information optimally.

Algorithm

Consider a set of data points . Make an Adjacency Matrix with Gaussian Radial Basis Function Kernel where is a scale parameter.

Construct a Laplacian Matrix using the Adjacency Matrix. The following options are available.

- Unnormalized Laplacian Matrix

- Random Walk Normalized Laplacian Matrix

- Symmetrically Normalized Laplacian Matrix

Construct the matrix whose columns are eigenvectors corresponding to the -smallest eigenvalues of the Laplacian Matrix. It is the result of Laplacian eigenmap where , and is intentionally omitted because it corresponds to the trivial solution (constant vector).

Link to original

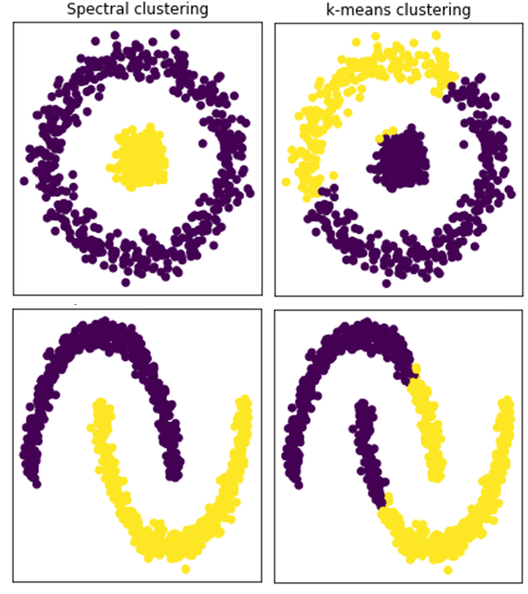

Spectral Clustering

Definition

Spectral clustering technique make use of spectrum of the similarity matrix of the data to perform dimensionality reduction before clustering.

Algorithms

Apply Laplacian Eigenmap to the given data .

Form an sub matrix using the first -columns of the embedded matrix , and normalize each row to norm .

Apply clustering algorithm (e.g. K-Means Clustering) to , where is the -th row of .

Mathematical Background

Consider a cluster assignment vector for each data point. Then, clustering observations is corresponding to estimating ‘s The entries of the Adjacency Matrix represents the similarity between points and .

Under the assumption that the close data points (large ) have a similar label (), the optimization problem is set up that minimizing the difference between assignments for similar points. where the constraint is imposed to avoid the trivial solution .

In a matrix notation, the problem is

When , is large and when , is small. So, works as the weight for each pair in the optimization.

The Lagrangian function is defined as And the solution of the problem is given by an Eigenvalue problem. The solution is an matrix of eigenvectors sorted in ascending by their corresponding eigenvalues.

Link to original

t-Distributed Stochastic Neighbor Embedding

Definition

The t-distributed stochastic neighbor embedding (t-SNE) is a statistical method for visualizing high-dimensional data. It models each high-dimensional object by a two- or three-dimensional point in such a way that similar objects are modeled by nearby points and dissimilar objects are modeled by distant points with high probability.

Algorithm

- Constructs a probability distribution over pairs of high-dimensional objects in such a way that similar objects are assigned a higher probability while dissimilar points are assigned a lower probability.

Define the conditional probability by Normal Distribution where acts as an adaptive bandwidth parameter for each point , and is indirectly determined by the given hyperparameter (perplexity).

And define the probability where is the number of data set

- Defines a similar probability distribution over the points in the low-dimensional map,

Define the similarities between two points in the low-dimensional map.

- Minimizes the Kullback-Leibler Divergence between the two distributions with respect to the locations of the points in the low-dimensional map.

Link to original

UMAP

Definition

Uniform manifold approximation and projection (UMAP) is a nonlinear dimensionality reduction algorithm similar to t-SNE. However, unlike to t-SNE, UMAP uses spectral embedding to initialize the low-dimensional graph, and uses a graph that only connected to each points’ nearest neighbors.

Algorithm

For each point in high-dimensional space, find its nearest neighbors.

Compute the distances between the nearest neighbors for each point.

Calculate the local connectivity parameter for each point using the equation where is the number of nearest neighbors, and is the minimum distance among the nearest neighbors

Calculate the edge weights to the nearest neighbors and symmetrize the graph

Initialize the low-dimensional representation using spectral embedding.

optimize the low-dimensional representation by minimizing both the attractive and the repulsive force using SGD.

The cost function is defined as where is the set of edges, and and are the weights of the edge of the high-dimensional and low-dimensional graph respectively.

Link to original