Euclidean Vector

Definition

Geometric object that has magnitude and direction

Elements of a Vector Space

Properties

Length

Vector

Real

Let be an -dimensional vector, then the norm of the is defined as

Complex

Let be an -dimensional complex vector, then the norm of the is defined as

Link to originalAddition and Subtraction

for

Distance

for

Scalar Multiplication

for

Inner Product

Vector

Real

for where is a Norm

Complex

for

Link to originalVector Product

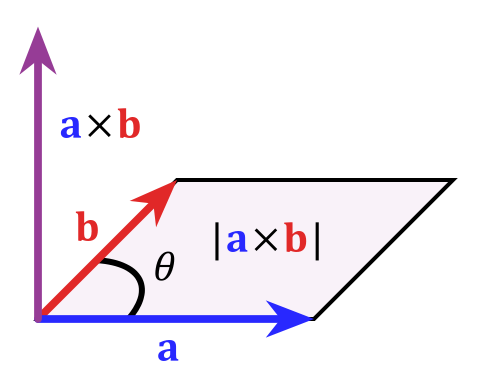

Link to originalCross Product

Definition

where the , the is the angle between and , and the is a unit vector perpendicular to the both and .

Facts

Link to original

Matrix

Definition

Operations

Addition, Subtraction

Scalar Multiplication

Transpose

,

Matrix Multiplication

where ,

Vector-Matrix Multiplication

Link to original

Gauss Elimination

Definition

An algorithm for solving systems of linear equations. Transform a matrix to an upper triangular matrix using the elementary row operations.

Elementary Row Operations

- Swapping two rows

- Multiplying a row by a non-zero scalar

- Adding a multiple of one row to another row

Examples

Link to original

LU Decomposition

Definiiton

where is the lower triangular matrix of Gauss Elimination^[History of G.E] and is the result upper triangular matrix of Gauss Elimination^[Result of G.E].

Link to original

Cofactor

Definition

where is the Minor.

Link to original

Adjugate Matrix

Definition

where is a Cofactor matrix

Facts

Link to original

Determinant

Definition

Computation

matrix

matrix

Laplace Expansion

Definition

Let be a cofactor matrix of

Along the th column gives

Along the th row gives

Link to original

Facts

The volume of box^[parallelepiped] is expressed by a determinant

changes sign, when two rows are exchanged (permutated)

depends on linearly on the 1st row.

If two row or column vectors in are equal, then

Gauss Elimination does not change

If a matrix has zero row or column vectors, then

If a matrix is diagonal or triangular, then the determinant of is the product of diagonal elements where is the element of the matrix .

Link to originalThe Determinant of the matrix is equal to the product of the eigenvalues of the matrix.

where the dimensions of the matrices are and respectively.

Link to original

Cramer's Rule

Definition

The solution of a Linear System Equation. It expresses the solution in terms of the determinants.

For the system of linear equations , the elements of the column vector , are given by where is the matrix formed by replacing -th column of by the column vector .

Link to original

Inverse Matrix

Definition

satisfies for given matrix

Computation

2 x 2 matrix

where the matrix , and is a Determinant of the matrix

Using Cofactor

where the matrix is the cofactor matrix, which is formed by all the cofactors of a given matrix . Then, by the definition of inverse matrix,

Link to original

Vector Space

Definition

or is a vector space over .

A Module whose set of scalar is a Field. is an Abelian Group scalar multiplication is a Monoid action of on the scalar multiplication is distributive with both vector and scalar addition

Vector

Where we call the element of vector space a Vector

Dimension

Dimension (Vector Space)

Definition

where is a Vector Space

The number of vectors in a basis for the Vector Space

Link to originalConditions for Vector Space

Let

- where is the origin.

(Linear Combination of Vectors)

Link to original

Linear Independence

Definition

Let be vectors or functions

Linearly independent

If ,

then is linearly independent.can’t be expressed using the others

Linearly dependent

If , not all zero, s.t. ,

then is linearly dependent.Facts

Basis(solutions of the ODE) = linearly independent solutions

Mutually orthogonal vectors are linearly independent

Check of linear independence of a set of vectors

Link to original

- make a matrix using the given column vectors

- apply Gauss Elimination to make an upper triangular or Echelon matrix

- check the number of non-zero pivots

Basis (Linear Algebra)

Definition

Basis

A linearly independent subset of Vector Space that spans

Basis Vector

The elements of a basis

Facts

Basis vectors are not unique for a Vector Space

Link to originalBasis vectors are changed by Gauss Elimination

Dimension (Vector Space)

Definition

where is a Vector Space

The number of vectors in a basis for the Vector Space

Link to original

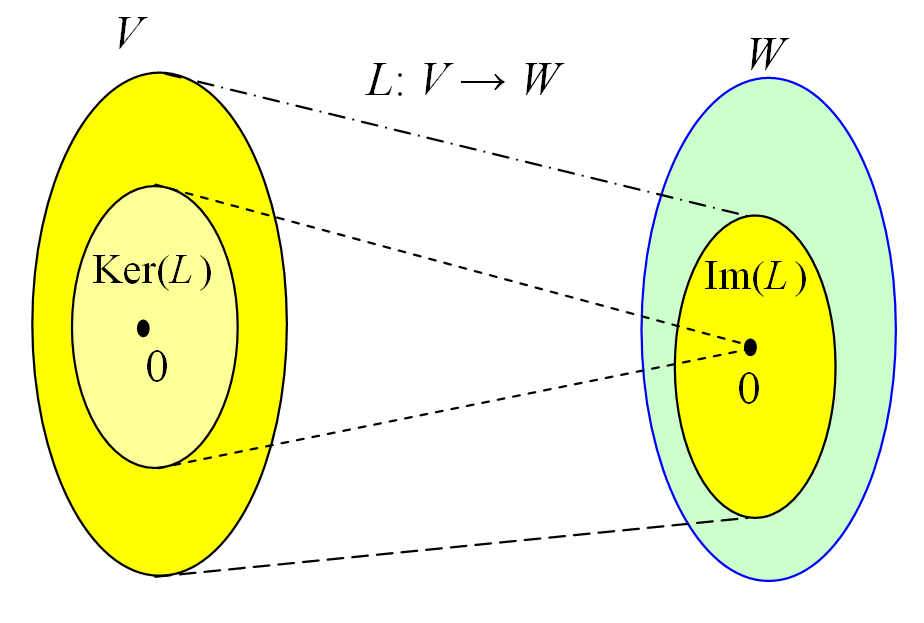

Linear Map

Definition

A mapping between two vector spaces that preserves Additivity on a vector addition and Homogeneity on a scalar multiplication on

Link to original

Fundamental Theorem of Linear Algebra

Definition

Let where is linear map and define a sum and scalar operation to the

Where , , , and is a set of matrices on

Let the functions

- where satisfies

And terms

- for

where , and is a column vector. and are the ordered basis of respectively

Then, both are Isomomorphism and the inverse maps of each other.

If , then s.t. Every Linear Map between finite-demensional vector spaces can be represented by a Matrix.

Proof

is a Linear Map

Proof of Additivity of

by the definition of . So, by the definition of

Proof of Homogeneity of

by the definition of . So, by the definition of

is a Isomomorphism

Proof of Monomorphism of

Let , then s.t. Since , by the definition of .

Proof of Epimorphism of

Let , and Then,

is a Linear Map

Proof of Additivity of

by the definition of the operation by the definition of matrix operation

Proof of Homogeneity of

is a Isomomorphism

Proof of Monomorphism of

Proof of Epimorphism of

Let Then, by the definition

are the inverse maps of each other.

Link to original

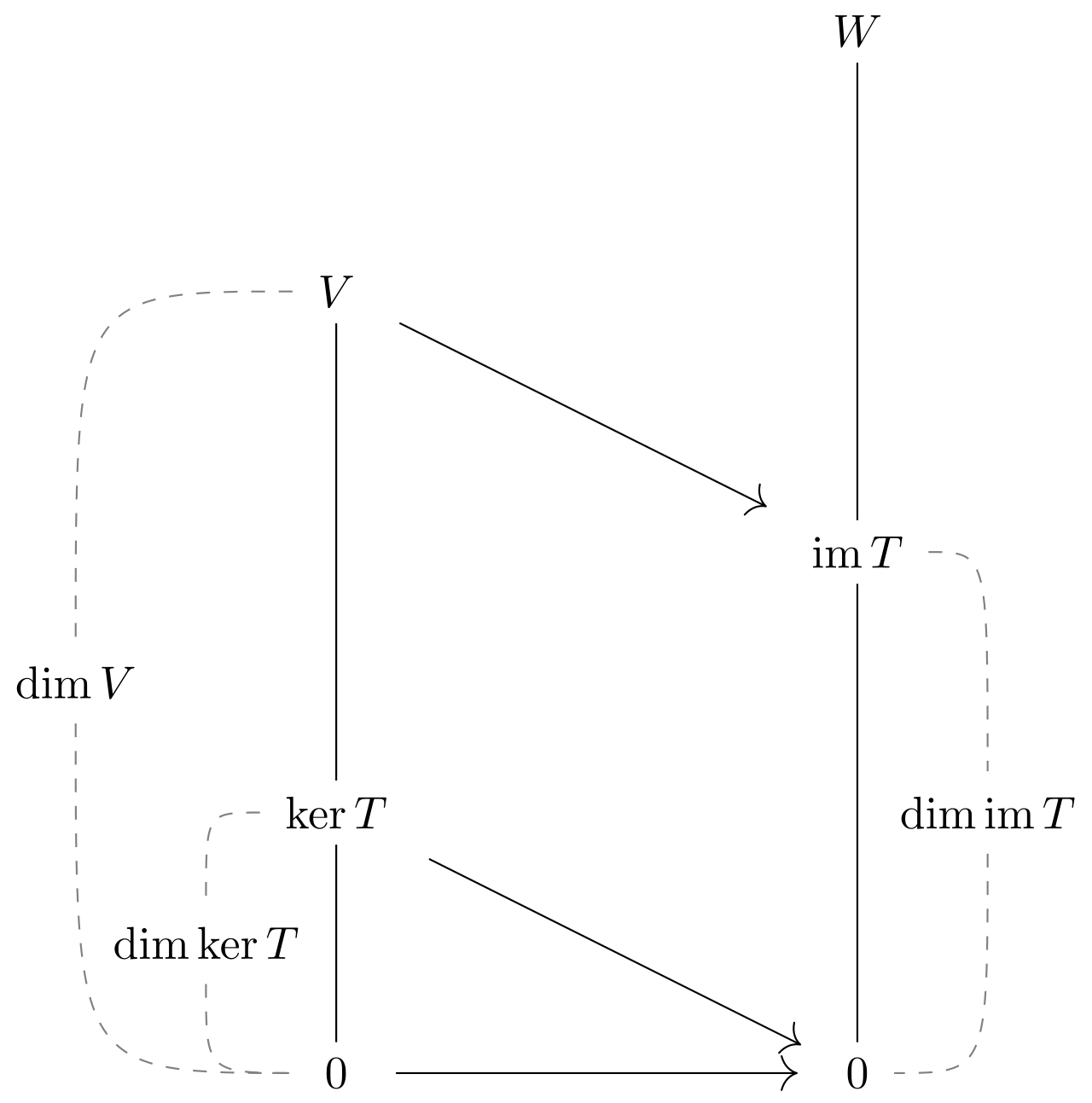

Rank–Nullity Theorem

Definition

Linear Transformation

where is a finite-dimensional Vector Space and is a Linear Map

The dimension of vector space is equal to the sum of the dimension of kernel of and the dimension of image of .

Proof

We want to show that is the basis of , or

Let be the basis of Vector Space , and where be the basis of the

Span

Since by the definition of

Linear Independence

Let We can transform the equation by the Linearity of Then,

The expression can be expressed using only the basis vectors. So, by subtracting LHS - RHS

Since is a basis of the vector space, is linearly independent.

Since satisfies Span and Linear Independence, is the basis of

Matrices

where

Proof

Let a matrix be the echelon form of the matrix , and Then, the number of pivots of is . Also the number of free variables of become

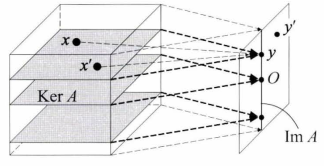

Visualization

Link to original

Pigeonhole Principle

Definition

Let be sets and , and . Then is Surjective is Injective is Bijective

Lemma

Let be a linear map, and suppose that . Then is Epimorphism is Monomorphism is Isomomorphism

Proof

Epimorphism Monomorphism

Let If is Monomorphism by the definition or Monomorphism by the Dimension Theorem So, by the

Monomorphism Epimorphism

by the Dimension Theorem and

How to prove this statement? Let V be a vector space and W \subset V be given, If dim V = dim W, then V = W

Link to original

Rank Theorem

Definition

The Column Rank and the Row Rank are always equal

Proof

Let a matrix be the echelon form of the matrix . Then, and by the property of determinant

Since the number of columns or rows which containing pivots are the column or row rank of .

$\therefore \text{col-rank}(\mathbf{M}) = \text{row-rank}(\mathbf{M})$$

Link to original

Null Space

Definition

The vector space of satisfying for the matrix

Facts

Link to originaland there exists infinitely many solutions, where

Row Space

Definition

The span of the column vectors of the transposed matrix

Facts

Link to originalRow Rank

Definition

A dimension of the Row Space

Link to original

Left Null Space

Definition

The vector space of satisfying for the matrix

Link to original

Column Space

Definition

where

The span of the column vectors of the matrix

Facts

is solvable is in the

non-singular matrix:

Column Rank

Definition

A dimension of the Column Space

Link to originalLink to original

Orthonormal Matrix

Definition

The matrix whose column and rows are orthonormal vectors.

Examples

Facts

Every orthonormal matrix is Unitary Matrix.

Link to originalFor a vector , which can be expressed as a linear combination of column vectors of the orthonormal matrix , we can easily find the coefficients of the linear combination using the Inner product.

Unitary Matrix

Definition

and

Facts

Given two complex vectors , multiplication by preserves their Inner product.

Given a complex vector , multiplication by preserves its Norm.

Every Eigenvalue of has unit length. Therefore,

Eigenvectors of are orthogonal

Proof For the Eigendecomposition where , . So, . Since only where , by the assumption .

Link to original

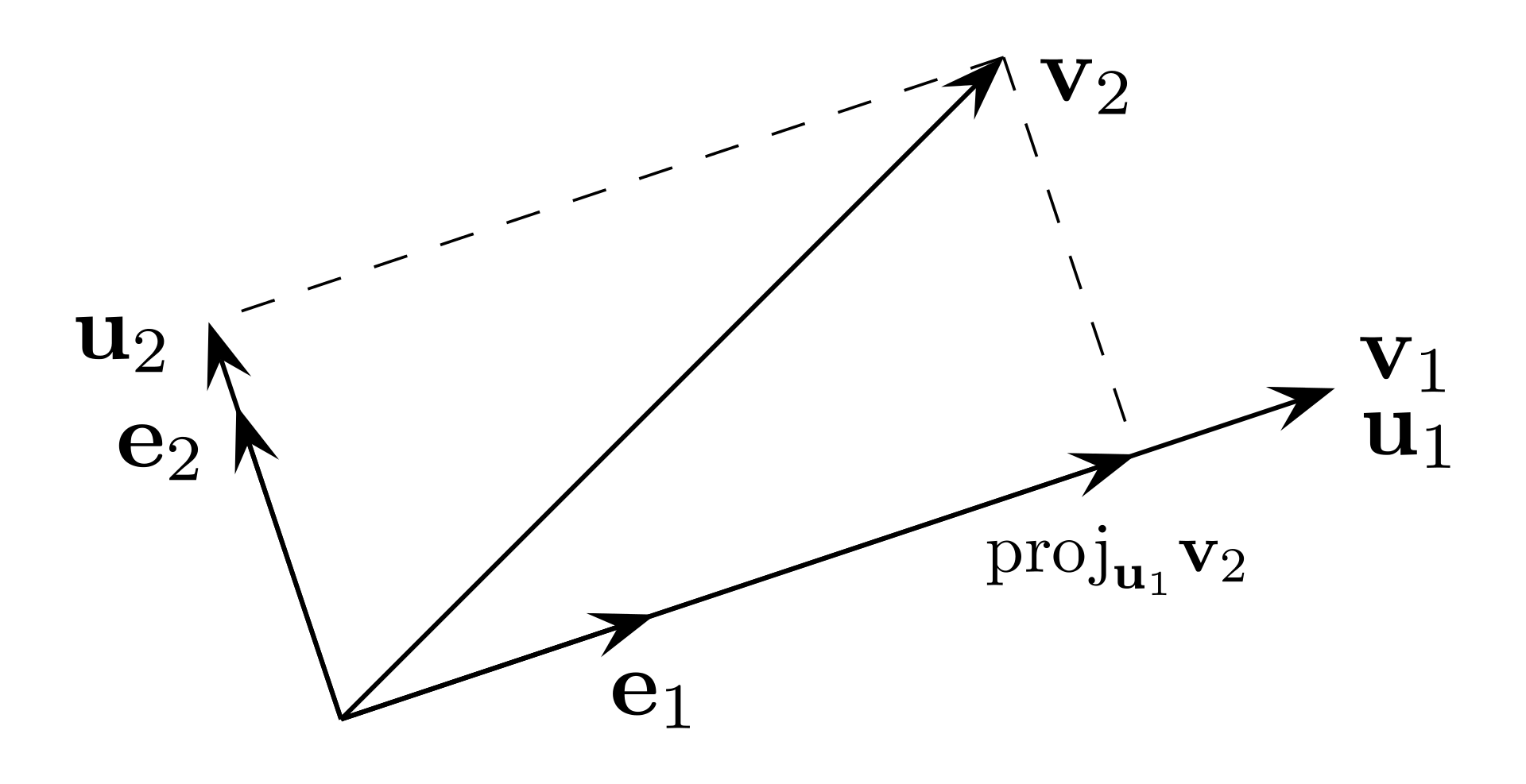

Gram Schmidt Orthonormalization

Definition

Method for orthogonalizing a set of vectors.

Computation

Given

- :

- :

- :

- : Where means the projection of the vector onto the vector space of .

Therefore, it can be expressed using

Link to original

- :

- :

- :

- :

QR Decomposition

Definition

Decomposition of matrix into a product of an orthonormal matrix and an upper triangular matrix .

Computation

Using the Gram Schmidt Orthonormalization

QR decomposition can be computed by Gram Schmidt Orthonormalization. Where is the matrix of orthonormal column vectors obtained by the orthonormalization and

Link to original

Symmetric Matrix

Definition

For real elements, A square matrix that is equal to its transpose.

Facts

Every real symmetric matrix is Hermitian Matrix

Link to original

Hermitian Matrix

Definition

A complex square matrix that is equal to its own Conjugate Transpose

Facts

The diagonal elements of the hermitian matrix should be real numbers.

Link to originalLet be hermitian matrix Then,

Proof

Definite Matrix

Kinds

Positive-Definite Matrix

Definition

Matrix , in which is positive for every non-zero column vector is a positive-definite matrix

Facts

Let , then

- where is a matrix.

- The diagonal elements of are positive.

- For a Symmetric Matrix , for sufficiently small

where is a non-singular matrix.

all the leading minor determinants of are positive.

Link to original

Positive Semi-Definite Matrix

Definition

Matrix , in which is positive or zero for every non-zero column vector is a positive semi-definite matrix

Facts

Link to originalLet , then

- where is matrix.

Negative-Definite Matrix

Definition

Matrix , in which is negative for every non-zero column vector is a negative-definite matrix

Link to originalFacts

Every positive (semi)-definite matrix is Hermitian Matrix

Positive (Semi)-definite and Symmetric Matrix

- is positive definite all eigenvalues of are real and positive.

- is positive semi definite all eigenvalues of are real and positive or zero.

where is Hermitian Matrix

Proof

A Hermitian Matrix is orthogonally diagonalizable as 1 Therefore,

A Hermitian Matrix is orthogonally diagonalizable as 1 where is one of the eigenvectors of

The can be replaced by the corresponding eigenvalue ^[By definition of Eigendecomposition]

Since matrix of eigenvectors is orthogonal1, for every ^[By definition of positive definite matrix] Therefore, holds. In other words, every eigenvalue is non-zero.

Link to original Footnotes

By Spectral Theorem ↩ ↩2 ↩3

Cholesky Decomposition

Definition

Decomposition of a Positive-Definite Matrix into the product of lower triangular matrix and its Conjugate Transpose.

Computation

Let be Positive-Definite Matrix. Then, By setting the first-row first-column entry , can find other entries using substitution. By summarizing, ,

Link to original

Eigendecomposition

Definition

where is a matrix and

If is a scalar multiple of non-zero vector , then is the eigenvalue and is the eigenvector.

Characteristic Polynomial

The values satisfying the characteristic polynomial, are the eigenvalues of the matrix

Eigenspace

The set of all eigenvectors of corresponding to the same eigenvalue, together with the zero vector.

The Kernel of the matrix

Eigenvector: non-zero vector in the eigenspace

Algebraic Multiplicity

Let be an eigenvalue of an matrix . The algebraic multiplicity of the eigenvalue is its multiplicity as a root of a Characteristic Polynomial, that is, the largest k such that

Geometric Multiplicity

Let be an eigenvalue of an matrix . The geometric multiplicity of the eigenvalue is the dimension of the Eigenspace associated with the eigenvalue.

Computation

- Find the solution^[eigenvalues] of the Characteristic Polynomial.

- Find the solution^[eigenvectors] of the Under-Constrained System using the found eigenvalue.

Facts

There exists at least one eigenvector corresponding to the eigenvalue

Eigenvectors corresponding to different eigenvalues are always linearly independent.

When is a normal or real Symmetric Matrix, the eigendecomposition is called Spectral Decomposition

The Trace of the matrix is equal to the sum of the eigenvalues of the matrix.

Proof Since the matrix only has term on diagonal, and the calculation of cofactor deletes a row and a column, the coefficient of of is always . Also, since are the solution of the Characteristic Polynomial, the expression is factorized as and the coefficient of become a Therefore, and .

The Determinant of the matrix is equal to the product of the eigenvalues of the matrix.

Not all matrices have linearly independent eigenvectors.

When holds,

- the eigenvalues of are and the eigenvectors of the matrix are the same as .

- the eigenvalues of is

- the eigenvalues of is

- the eigenvalues of is

- the eigenvalues of is

An eigenvalue’s Geometric Multiplicity cannot exceed its Algebraic Multiplicity

the matrix is diagonalizable the Geometric Multiplicity is equal to the Algebraic Multiplicity for all eigenvalues

Link to originalLet be a symmetric matrix. Then, has eigenvalues equal to and the rest zero .

Link to originalThe non-zero eigenvalues of are the same as those of .

Cayley–Hamilton theorem

Definition

Let the Characteristic Polynomial of be Then, for the similar mapping , is satisfied

Proof

Let Then, by the property of adjucate matrix

Since each element of is -order polynomial about So we can express

Then, by using the two above expansions

Therefore,

Examples

For

Link to original

Square System

Definition

The number of unknowns(n) = the number of equations(n) has unique or no solution.

Link to original

Under-Constrained System

Definition

where

The number of unknowns(n) > the number of equations(m) usually has infinitely many or no solutions.

Solution

Homogeneous Under-Constrained System

solving the homogeneous under-constrained linear system

- Obtain and identify pivot and free variables where is the reduced Echelon matrix.

- Find Special solution

- set a free variable = 1, and others = 0

- solve to find the pivot variables

Example

- Find the reduced Echelon matrix

- where are the pivot variables and are the free variables.

- Set a free variable and others and find the pivot variables

- Set a free variable and others and find the pivot variables

- Now, the solutions^[null space] are all linear combinations of special solution

Non-Homogeneous Under-Constrained System

solving the non-homogeneous under-constrained linear system

- Find the special solution for and find a particular solution from obtained by Gauss Elimination where is the reduced Echelon matrix

- Sum the special solution and particular solution

Example

Link to original

- Find the reduced Echelon matrix where is the and is the

- Find the special solution using the method of homogeneous equation

- Find the particular solution using

- Now, the solution of are the sum of all linear combination of special solution and a particular solution

Over-Constrained System

Definition

where

The number of unknowns (n) < the number of equations (m) no solution for equality

Solutions

Non-Homogeneous Over-Constrained System

solve the non-homogeneous over-constrained linear system

The error term of projection is orthogonal to the column space of , . Therefore, . Now, is the least square solution and become a projection matrix onto the column space of , .

Homogeneous Over-Constrained System

solve the homogeneous over-constrained linear system

Let the Eigendecomposition of be where is the eigenvalue and is the corresponding eigenvector. Then, we can change the expression into by multiplying to the both left side So, holds by the property of the real-valued gram matrix Since over-constrained system doesn’t have the solution for equality, . Therefore, the eigenvalue of over-constrained system is positive and real .

Since there is not exist the exact solution for the , the is the best solution for the over-constrained system. By the above expansion . So we can change the expression into Therefore, subject is the eigenvector with the minimum eigenvalue of .

Facts

is the linear combination of column vectors in . So . Also, since, . Thus, is the best solution. This is the projection of onto the subspace of .

If is in , then There exists a solution satisfying all equality

If is perpendicular to the every column vectors in , , then . In other words, become the Left Null Space of .

If is square() and invertible, then = , , and

Link to originalIf is non-invertable^[singular], then solve the Under-Constrained System

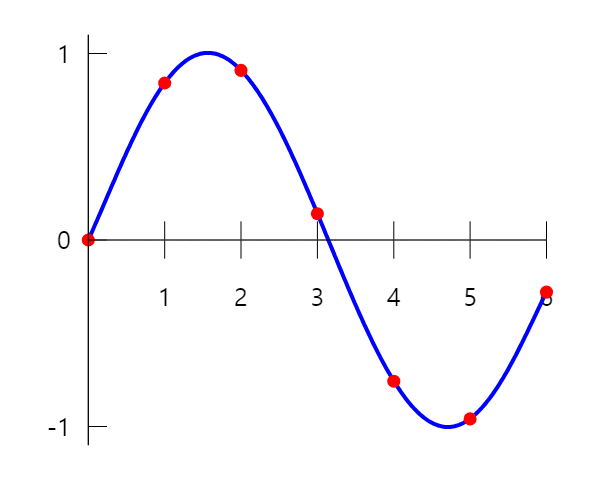

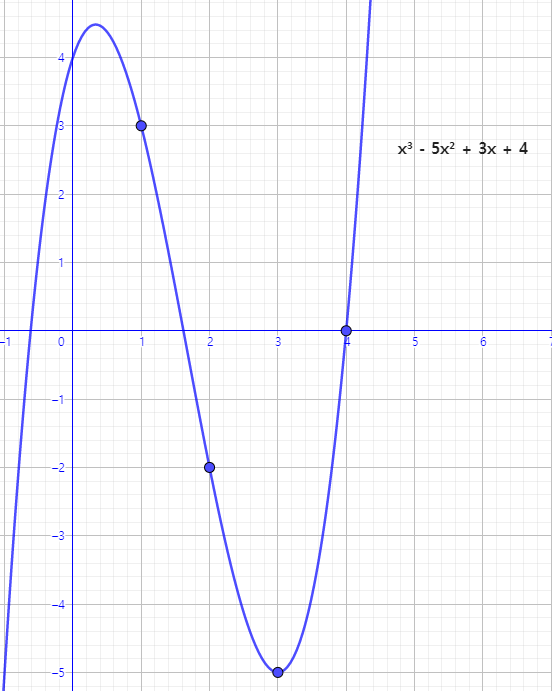

Polynomial Interpolation

Definition

The interpolation of a given bivariate data set by the polynomial of lowest possible degree that passes through the points of the dataset.

Examples

Find the cubic polynomial passes through the four points .

Set the system equation for the polynomial substitute the given points to the system equation and make the augmented matrix solve the system of equation using the gauss elimination Then, .

Facts

Interpolation theorem

Link to originalFor any bivariate data points , where no two are the same, there exists a unique polynomial of degree at most that interpolates these points.

Diagonalizable Matrix

Definition

For square matrix , There exist invertible matrix that satisfies where is Diagonal Matrix

Orthogonally Diagonalizable Matrix

For square matrix , There exist orthogonal matrix that satisfies where is Diagonal Matrix

Unitary Diagonalizable Matrix

For square matrix , There exist Unitary Matrix that satisfies where is Diagonal Matrix

Diagonalization

Let be a matrix of eigenvectors of and be a Diagonal Matrix which has eigenvalues of . Then, by formula of Eigendecomposition If is full rank matrix, then is invertible. So, holds.

Facts

is diagonalizable has linearly independent eigenvectors

The matrix is not unique.

The order of and in and should be the same.

Not all matrices are diagonalizable^[Since the property of eigen decomposition]

the matrix is diagonalizable If the Geometric Multiplicity is equal to the Algebraic Multiplicity for all eigenvalues

Link to originalDiagonalized matrix is similar to the original matrix.

Matrix Similarity

Definition

Two matrices and are similar if there exists

Similar matrices represent the same linear map under two different bases, with being the change of basis matrix.

Facts

Similarity invariant

Similar matrices share all properties of their shared underlying operator

Link to original

- Rank of Matrix

- Determinant

- Invertibility

- Nullity

- Characteristic Polynomial

- Eigenvalue

- Algebraic Multiplicity

- Geometric Multiplicity

- Trace

Spectral Theorem

Definition

where is a Unitary Matrix, and

A matrix is a Hermitian Matrix if and only if is Unitary Diagonalizable Matrix.

Facts

Every Hermitian Matrix is diagonalizable, and has real-valued Eigenvalues and orthonormal eigenvector matrix.

For the Hermitian Matrix , the every eigenvalue is real.

Proof For the eigendecomposition , . Since is hermitian, and norm is always real. Therefore, every eigenvalue is real.

Link to originalFor the Hermitian Matrix , the eigenvectors from different eigenvalues are orthogonal.

Proof Let the eigendecomposition where . By the property of hermitian matrix, and So, . Since , . Therefore, are orthogonal.

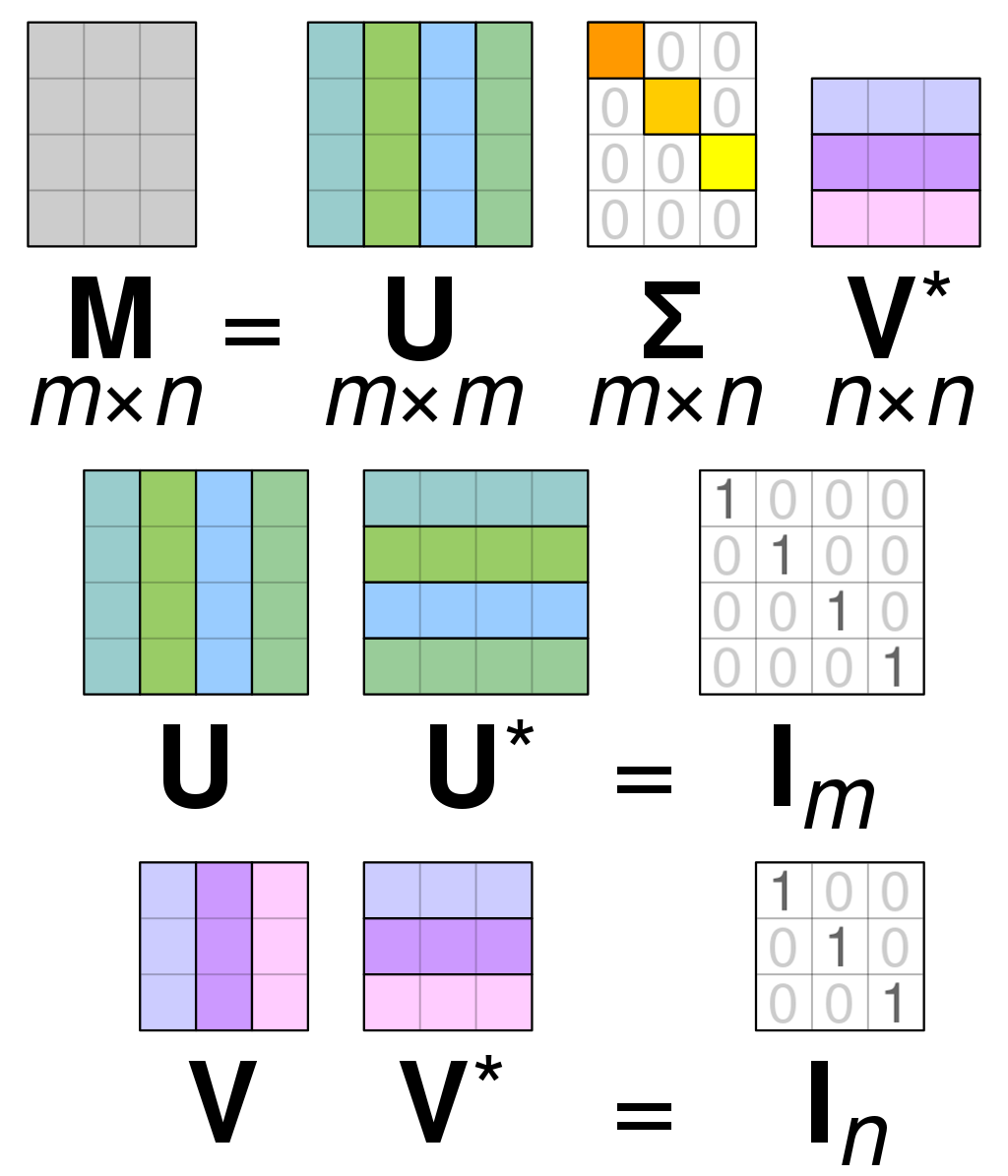

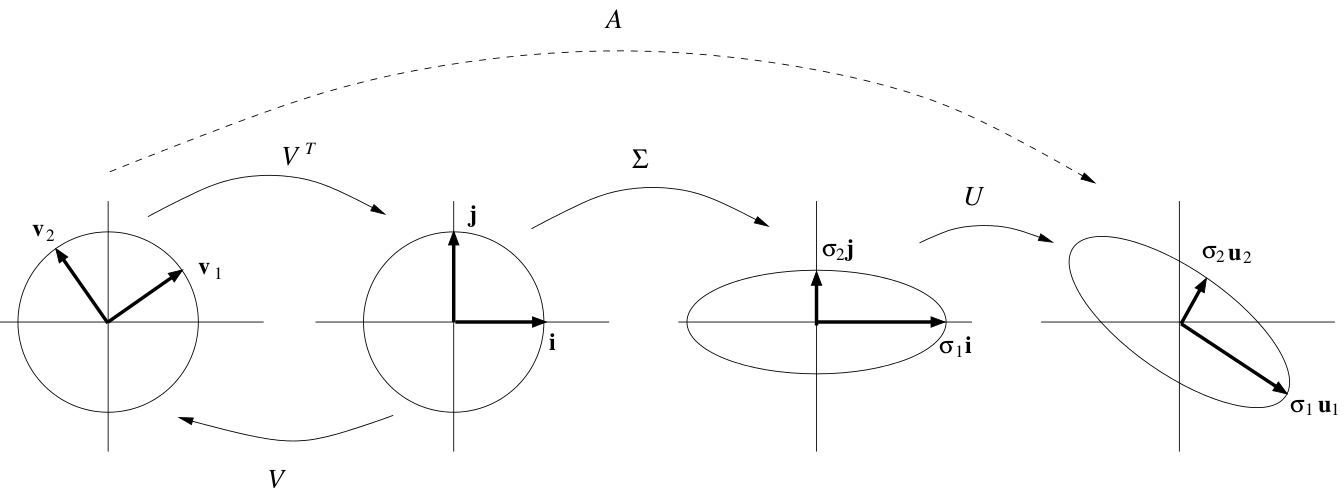

Singular Value Decomposition

Definition

An arbitrary matrix can be decomposed to .

For where and and is Diagonal Matrix

For where and and is Diagonal Matrix

Calculation

For matrix

is the matrix of orthonormal eigenvectors of is the matrix of orthonormal eigenvectors of is the Diagonal Matrix made of the square roots of the non-zero eigenvalues of and sorted in descending order.

If the eigendecomposition is , then the eigenvalues and the eigenvectors are orthonormal by Spectral Theorem. If the , then . Where are called the singular values.

Now, the Orthonormal Matrix is calculated using the singular values. Where is the Orthonormal Matrix of eigenvectors corresponding to the non-zero eigenvalues and is the Orthonormal Matrix of eigenvectors corresponding to zero eigenvalues where each , and is a rectangular Diagonal Matrix.

Since is an orthonormal matrix and is a Diagonal Matrix, . Now, the Orthonormal Matrix is calculated using the linear system and the null space of , Where is the Orthonormal Matrix of the vectors obtained from the system equation And is the Orthonormal Matrix of the vectors . which is corresponding to zero eigenvalues

The Orthonormal Matrix can also be formed by the eigenvectors of similarly to calculating of .

Facts

are the Unitary Matrix

Let be a real symmetric Positive Semi-Definite Matrix Then, the Eigendecomposition(Spectral Decomposition) of and the singular value decomposition of are equal.

where and are non-negative and the shape of the every matrix is

Visualization

every matrix can be decomposed as a

Link to original

- : rotation and reflection

- : scaling

- : rotation and reflection

Sherman–Morrison Formula

Definition

Suppose is an invertible matrix an d . Then, is invertible . In this case,

Proof

Multiplying to the RHS gives

Since is a scalar, The numerator of the last term can be expressed as

So,

Examples

Updating Fitted Least Square Estimator

Sherman–Morrison formula can be used for updating fitted Least Square estimator

Let be the least square estimator of and the matrices with a new data be and Then,

Let for convenience Then, by Sherman-Morrison formula

So, where by expansion

So, can be obtained without additional inverse matrix calculation.

Facts

Sherman–Morrison formula is a special case of the Woodbury Formula

Link to original

Matrix Inversion Lemma

Definition

where the size of matrices are is , is , and is and should be invertible.

The inverse of a rank-k correction of some matrix can be computed by doing a rank-k correction to the inverse of the original matrix

Proof

Start by the matrix

The decomposition of the original matrix become Inverting both sides gives^[Diagonalizable Matrix]

The decomposition of the original matrix become Again inverting both sides,

The first-row first-column entry of RHS of (1) and (2) above gives the Woodbury formula

Link to original