Convex Function

Definition

If is differentiable on , then

Link to original

- is convex

- is convex

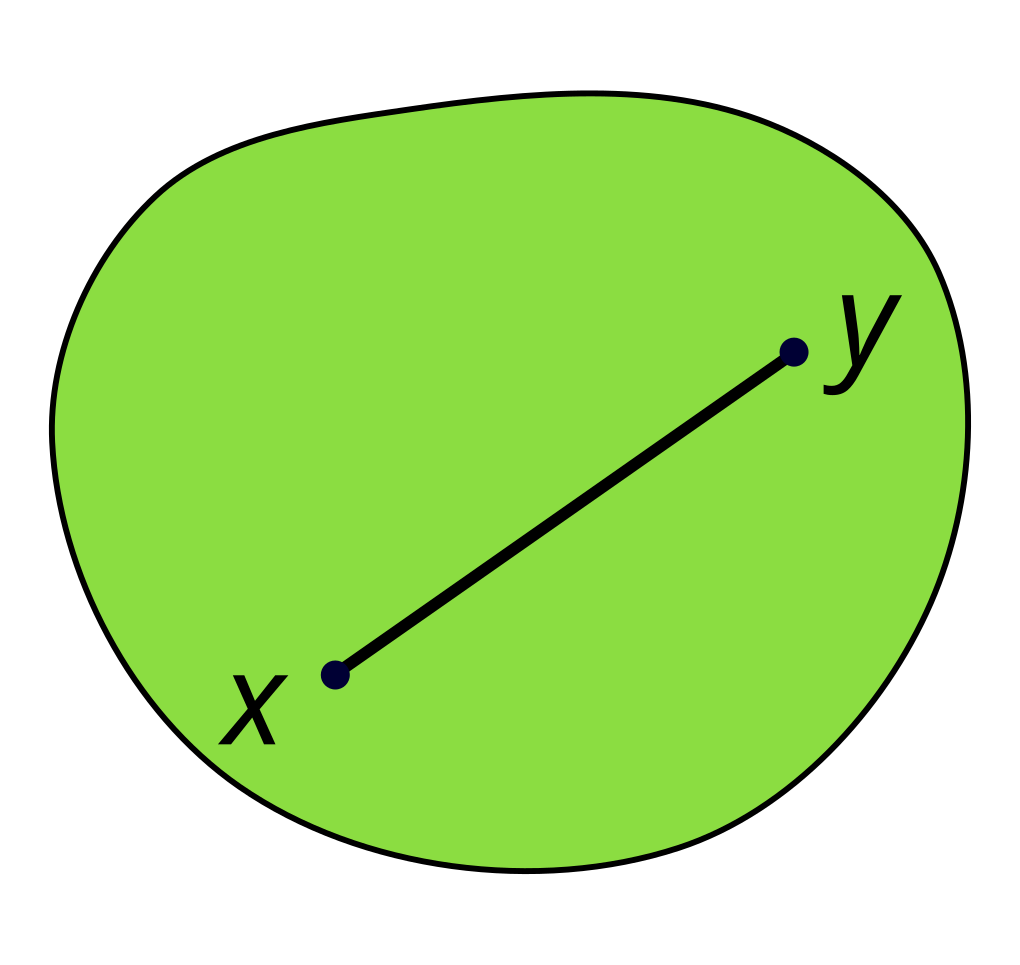

Convex Set

Definition

A set of points is convex if it contains every line segment between two points in the set.

Link to original

Feasible Region

Definition

The set of all possible points on an optimization problem that satisfy the problem’s constraints.

Link to original

Convex Optimization

Definition

subject to

An optimization problem in which the objective function is a Convex Function and the feasible set is a Convex Set.

Facts

Every local minimum is a global minimum

Link to originalThe convex feasible set condition is equivalent to the following conditions

- the inequality constraint function is a Convex Function

- the equality constraint function is an affine function

Gradient Descent

Definition

An iterative optimization algorithm for finding a local minimum of a differentiable function

where is a learning rate

Examples

Solution of a linear system

Solve with an MSE loss

The cost function is and its gradient is

Then, solution is

Link to original

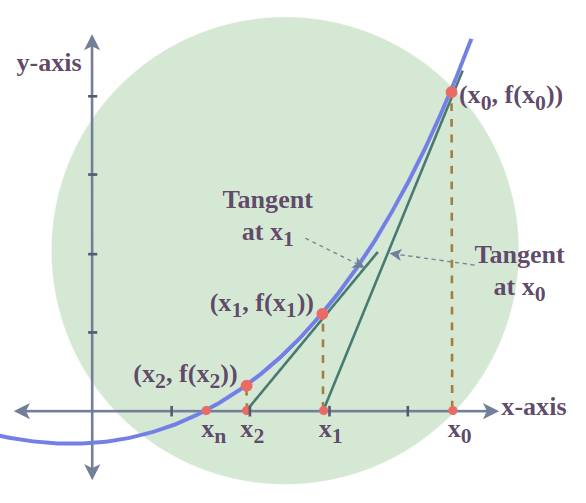

Newton's Method

Definition

An iterative algorithm for finding the roots of a differentiable function, which are solution to the equation

Algorithm

Find the next point such that the Taylor series of the given point is 0 Taylor first approximation: The point such that the Taylor series is 0:

multivariate version:

In convex optimization,

Find the minimum point^[its derivative is 0] of Taylor quadratic approximation. Taylor quadratic approximation: The derivative of the quadratic approximation: The minimum point of the quadratic approximation^[the point such that the derivative of the quadratic approximation is 0]: multivariate version:

Examples

Solution of a linear system

Solve with an MSE loss

The cost function is and its gradient and hessian are ,

Then, solution is If is invertible, is a Least Square solution.

Link to original

Quasi-Newton Method

Definition

Secant equation

Methods

- Broyden’s Method

- Symmetric Rank-One Method

- Broyden–Fletcher–Goldfarb–Shanno Algorithm

- Davidon–Fletcher–Powell Formula

- Broyden Family Method

Facts

Link to original

Broyden's Method

Definition

Solve using Newton’s Method with approximation of Jacobian Matrix

Algorithm

The essence of Broyden’s method is to approximate Jacobian Matrix of Newton’s Method using the average rate of change

Replace derivative or gradient with an approximation Then,

Define

Then,

We want to find satisfying the following expression by above approximation

To ensure stability of update, take a minimum modification In other words, solve the minimization problem using method of Lagrange multipliers subject to

Then, the solution is and is calculated by

Now updating formula become

Where can be easily calculated by Sherman–Morrison Formula

and

Link to original

Symmetric Rank-One Method

Symmetric Rank-One Method

The Quasi-Newton Method method with a symmetric condition

Algorithm

Define

Then,

To assure the symmetry of the approximation of hessian matrix, let the perturbation matrix be

Then,

Now we can express and

Since satisfying is unique(because is the scaling of ), we can get by multiplying to the both side

by substituting to original expression,

Therefore, Since the denominator is a scalar and

Link to original

Broyden–Fletcher–Goldfarb–Shanno Algorithm

Definition

The quasi-Newton method with symmetric and positive-definite condition Update inverse of hessian directly

Algorithm

Define

Then,

We want to find satisfying the following expression with the symmetric and positive-definite condition by the definition

So, Solve the minimization problem with the symmetric and positive-definite conditions using method of Lagrange multipliers subject to and where

Then, the solution is where by the definition

Therefore, updating formula is

And by the Sherman–Morrison Formula, and

Link to original

Davidon–Fletcher–Powell Formula

Definition

The quasi-Newton method with symmetric and positive-definite condition Update hessian and find inverse using Sherman–Morrison Formula

Algorithm

Define

Then,

We want to find satisfying the following expression with the symmetric and positive-definite condition by the definition

So, solve the minimization problem with the symmetric and positive-definite conditions using method of Lagrange multipliers subject to and where

Then, the solution is where by the definition Therefore, updating formula is

And by the Sherman–Morrison Formula, and

Link to original

Broyden Family Method

Definition

Broyden family update is obtained from combining the BFGS and the DFP method

Algorithm

where

Facts

Link to originalIf the of Broyden Family is a , then the update is equal to Symmetric Rank-One Method

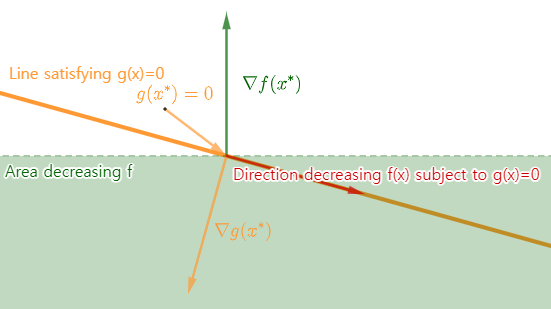

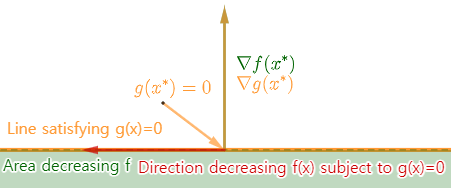

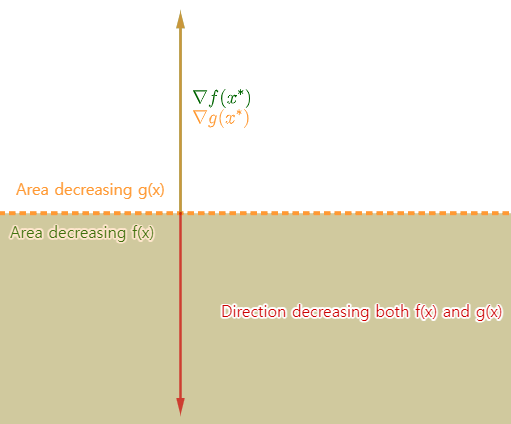

Method of Lagrange Multipliers

Definition

The method of Lagrange multipliers is a method for finding the local maxima and minima of a function subject to equality constraints

Solution

Given general problem

In order to find the maximum or minimum of a function subject to the equality constraint , find the stationary point of considered as a function of and the Lagrange multiplier . In other words, all partial derivatives should be zero. The solution of the constrained optimization problem is always a Saddle Point of the Lagrangian function .

Facts

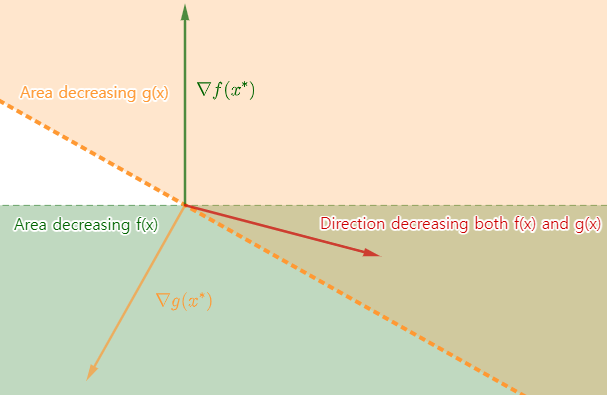

Link to originalThe gradient of constraint and the gradient of the function should be in same or directions.

Proof

If and don’t have same or directions, then there is the direction decreasing subject to

If and have the same or opposite direction, then there is no direction decreasing subject to

Infeasible Start Newton's Method

Definition

Algorithm

Solve the minimization problem with the equality condition subject to

Use a Newton’s Method to find the optimal point satisfying above conditions

The quadratic Taylor approximation of at the point is

The minimization problem is subject to

The Lagrangian function is where is a Lagrangian multiplier

The optimality condition is

In a matrix form,

we solve this linear equation to find the Newton direction

Link to original

Backtracking Line Search

Definition

The method to determine the amount to move along a given search direction (learning rate).

Algorithm

We want to find a learning rate minimizing the cost function

Exact line search

The optimal can be obtained by using grid search in the interval However, it causes substantial computational costs.

Backtracking line search

Identify a value of that provides a reasonable amount of improvement in the objective function, rather than to find the actual minimizing value

The backtracking line search starts with a large and iteratively shrinks it until the value is enough to provide a decrease in the objective function.

Calculate the gradient of objective function with respect to learning rate at the point

and define a parameter which modify the slope of

Now, the line is used to check whether the decrease in the objective function is enough

Start from large usually , and iteratively shrinks it by multiplying to until the value is lower than the line

If the condition is satisfied, then use as a learning rate

Link to original

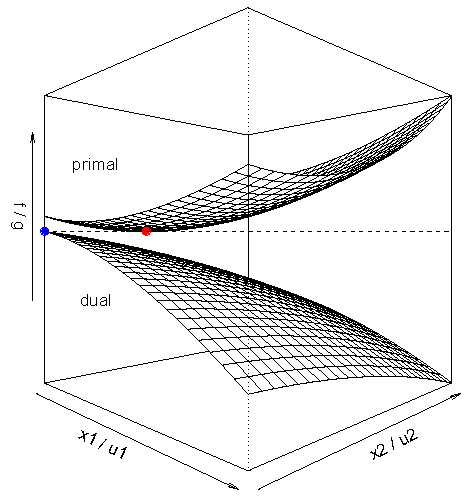

Duality

Definition

Optimization problems may be viewed from either the primal problem or the dual problem.

Properties

Consider the following optimization problem

The original problem is called a Primal problem subject to Let a be the domain of the problem and the be its feasible set

The Lagrangian function corresponding to the primal problem is called a Lagrangian primal function where the variable is called a Primal variable, and are called Dual variables

Since by the constraints, the Lagrangian primal function is always lower than or equal to the for all in the feasible set

Let a primal optimal value be the feasible optimal value of the primal problem

Let a Lagrange dual function be the minimum of the Lagrangian primal function for all in the domain

We say another optimization problem for the Lagrange dual function a Dual problem subject to

Since the Lagrange dual function minimize the Lagrangian primal function , it is always lower than or equal to the primal optimal value that minimizes that

Let a dual optimal value be the maximum of the dual problem

Then, by the above inequality, become the lower bound of This property is called a Weak Duality

and the difference between the dual optimal value and the primal optimal value is called a Duality Gap

If the dual optimal value and the primal optimal value are the same, then this property is called a Strong Duality

Solution for the Problem Satisfying Strong Duality

Find the Lagrange dual function by using the gradient condition find the dual optimal variables from the dual problem by using the gradient condition obtain the primal optimal variable using the dual optimal variables

Visual Explanation of Duality

Consider an optimization problem subject to

Let a set where

And let be the primal optimal solution

By definition of Lagrange dual function, where

Consider plane

Where the feasible region is of

The optimal value is given by the tangent horizontal line that indicates the minimum value when

Since , the line always has negative slope. So, for a given , the line provides the lower bound of the objective function

The figure shows the hyperplanes for different values We see that gives the best lower bound , also see that Strong Duality does not hold because

Optimality Property

If we have feasible solutions to the primal and dual problems such that their respective objective functions are equal, then these solutions are optimal to their respective problems

Proof Let a prime variable be and dual variables be satisfying

Since the primal optimal minimize , the value with the prime variable always greater than or equal to the

And by the assumption, the first term and the last term of the inequality become the same.

Then, by the equality , the dual variable are the dual optimal, and by the equality , the primal variable is the primal optimal And the two are the same.

Therefore, values are optimal.

Facts

If the primal is a minimization problem, then the dual is a maximization problem, and vice versa

The solution to the primal is an upper bound to the solution of the dual, and the solution of the dual is a lower bound to the solution of the primal, and vice versa

Link to original

For the minimization primal problem, the dual optimal is a lower bound of the primal optimal

Duality Gap

Definition

The difference between the primal and dual solutions

Link to original

Weak Duality

Definition

For a minimization problem, The solution to the primal problem is always greater than or equal to the solution to the dual problem

The Duality Gap of an optimization problem is always greater than or equal to zero

Link to original

Strong Duality

Definition

The primal optimal objective and the dual optimal objective are equal. The Duality Gap of an optimization problem is zero.

Facts

Link to original

If a strong duality holds, the dual optimal primal optimal

Slater's Condition

Definition

For a convex problem, there exists a point such that and

Link to original

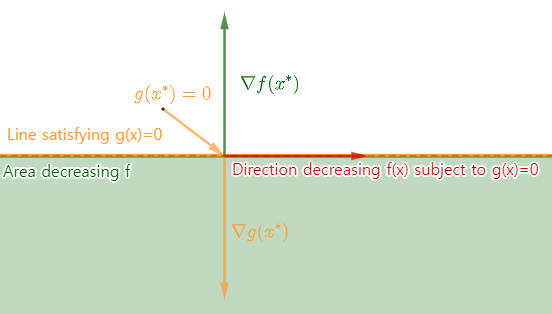

Karush-Kun-Tucker Conditions

Definition

By allowing inequality constraints, generalizes the method of Lagrange multiplier, which allows only equality constraints.

Given general problem subject to

The KKT conditions are

- Stationarity:

- Primal feasibility:

- Dual feasibility:

- Complementary slackness:

Facts

The gradient of inequality constraint and the gradient of the function should be in opposite directions.

If and don’t have opposite directions, then there is the area(direction) decreasing both and

For a problem with Strong Duality, are optimal satisfy the KKT conditions

For a convex optimization, satisfy the KKT condition Strong Duality holds

Proof Since are all convex function, the Lagrangian primal function is also convex

Let be the values satisfy KKT conditions. By the stationarity condition, So, is a local minimum of and by the convexity, it is also global minimum Therefore, ^[Lagrange dual function]

Also, by the complementary slackness and primal feasibility conditions, So, Therefore, the dual optimal value and the primal optimal value is the same. In other words, satisfy the Strong Duality condition

are optimal

Link to originalFor a problem with Slater’s Condition (ensure Strong Duality and convexity), and satisfy the KKT condition are optimal

Proof Let be the primal and dual solutions

Since are the dual optimal, the dual optimal value can be expressed as an expression in regard to where

Since is a feasible value, the with , is always greater than or equal to the with and by the constraints of Lagrangian primal function, and So,

In summary

Since Slater’s Condition ensures Strong Duality , the inequality become the equality Therefore, , so the complementary slackness condition is satisfied Also, , so the stationary condition is satisfied Finally, since are the primal and dual solutions, the constraints of Lagrangian primal and dual function, satisfy the primal and dual feasibility condition

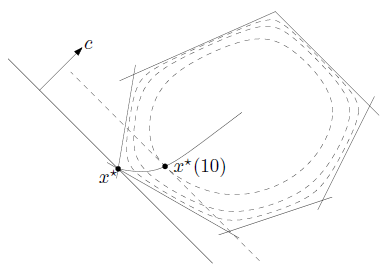

Barrier Method

Definition

An interior point optimization technique used to solve constrained optimization problems. It involves adding a barrier or penalty term to the objective function that penalizes solutions for being outside the feasible region.

Algorithm

Consider the optimization problem with an inequality constraint

subject to

The KKT Conditions of the problem are the following

- ^[Stationarity]

- ^[Primal feasibility]

- ^[Dual feasibility]

- ^[Complementary slackness]

Interior point methods solve the problem whose complementary slackness condition is relaxed where

the stationary condition become where is called a logarithmic barrier function

As large, the logarithmic barrier function functions like an indicator function.

Now, by the definition of log function, should be negative or zero, so the primal feasibility condition holds. Also, by the relaxed complementary slackness conditions, is positive or zero, so the dual feasibility conditions holds

Now the conditions have to be satisfied are the following

and the problem with inequality become the problem with equality subject to

It can be solved using Newton’s Method

Newton’s Method’s approximation becomes unstable if is too small and the current point is far from optimal. So, we initially choose large and iteratively shrink it.

Analytic Central Point of Feasible Region

If is very large, then the optimization problem become the following. subject to

Since is a monotonic increasing function, the problem can be transformed as subject to

So, it finds the analytic central point of feasible region (gravity of the feasible region)

Phase 1 method

In the iteration, we have to find the initial points satisfying constraints satisfying

To ensure the condition, transform the optimization problem only at the initial stage. subject to

Now the problem can be solved using Infeasible Start Newton’s Method

Link to original

Primal-Dual Interior-Point Method

Definition

An interior point optimization technique used to solve constrained optimization problems. Converging and accuracy are faster than Barrier Method

Algorithm

Consider the optimization problem with an inequality constraint

subject to

The KKT Conditions of the problem are the following

- ^[Stationarity]

- ^[Primal feasibility]

- ^[Dual feasibility]

- ^[Complementary slackness]

Interior point methods solve the problem whose complementary slackness condition is relaxed where

Instead of substituting in the stationary condition with like the Barrier Method, Primal-dual interior method directly solve the problem with Newton’s Method

Now, the conditions have to be satisfied are the following

and the problem with inequality become the problem with equality subject to

It can be solved using Newton’s Method

Link to original