Random Vector

Definition

Column vector whose components are random variables .

Link to original

Stochastic Process

Definition

where each is a Random Variable.

Stochastic process is a collection of random variables defined on a common probability space , and whose index represents time.

Notation

Stochastic Process whose Initial Distribution is a Delta Distribution

If a random variable has a positive probability only at and otherwise , then the distribution is expressed as . Also, the probability and expectation of are expressed as , and respectively.

The probability of given some initial distribution is expressed as following

- where is the initial state

- where is the initial distribution

where is a HMC defined on the state space

Averages

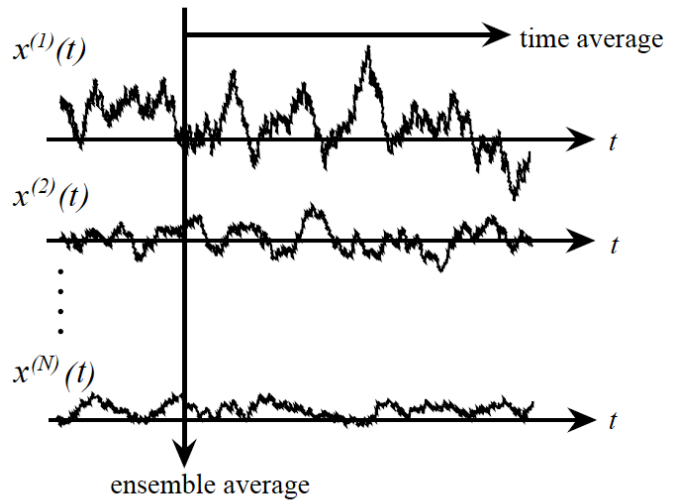

For a sequence of random variables

Ensemble Average

Time Average

Facts

If the random variables of a stochastic process satisfy i.i.d condition, then the ensemble average is equal to time average

Summary

The Distributions of Stochastic Process

Empirical distribution Stationary Distribution Limiting Distribution Used for Every stochastic process Every stochastic process Markov chain Expression Consideration of use single use every don’t consider Statistical distribution? O O X Analytic value? X O O Related to data? O X X Related to limit? O X O Related to LLN? O O X Description Averaged calculated by the data Theoretical expectation Theoretical convergence value : the empirical distribution of with consideration of single (use time average)

: the Stationary Distribution of with consideration of every : the Limiting Distribution of obtained by the limit of without consideration of . Used with Markov Chainwhere is a sequence of random variables

Link to original

Markov Property

Definition

Markov property refers to the memoryless property of a Stochastic Process.

Consider a Probability Space with a Filtration , and a Measurable Space . A Stochastic Process is said to possess the Markov property if Here, in the LHS represents all information up to time , whereas conditions only on the single value at time .

In the case where is a discrete set and , this can be reformulated as follows

Link to original

Markov Chain

Definition

A Markov chain is a Stochastic Process that satisfies Markov Property. It consists of a set of states and a Transition Probability Matrix

Summary

Conditions and Properties of Markov Chain (Finite States)

Example Finite IRR Aperiodicity ^[a unique Limiting Distribution] ^[a unique Stationary Distribution] ^[Ergodic Theorem] ^[Ergodicity] O X X X X X X Identity/Block matrix O X O X X X X Exchange matrix O O X X O O X Ergodic Markov Chain O O O O O O O flowchart LR mc[HMC] --> finite subgraph finiteness finite([finite]) end finite --> finite_mc finite_mc --> irreducible finite_mc --> reducible subgraph Irreducibility irreducible([irreducible]) reducible([reducible]) end irreducible --> irr_mc[Irreducible MC] reducible -->|divide|irr_mc irr_mc ---|holds|egt([Ergodic Theorem]) irr_mc --> aperiodic subgraph Aperiodicity aperiodic([aperiodic]) end aperiodic --> ergmc[Ergodic MC]Conditions and Properties of Markov Chain (Infinite States)

IRR Nature Aperiodicity ^[a unique Limiting Distribution] ^[a unique Stationary Distribution] ^[Ergodic Theorem] ^[Ergodicity] O Transient can’t define X X X X O Null recurrent no matter X X X X O Positive recurrent X X O O X O Positive recurrent O O O O O Link to originalflowchart LR mc[HMC] mc --> irreducible mc --> reducible subgraph Irreducibility irreducible([irreducible]) reducible([reducible]) end irr_mc[Irreducible MC] irreducible --> irr_mc reducible -->|divide|irr_mc irr_mc --> pr irr_mc --> nr irr_mc --> tr subgraph Recurrence pr([positive recurrent]) nr([null recurrent]) tr([transient]) end pr_mc[Recurrent MC] pr --> pr_mc pr_mc --> aperiodic egt([Ergodic Theorem]) pr_mc ---|holds|egt subgraph Aperiodicity aperiodic([aperiodic]) end aperiodic --> ergmc[Ergodic MC]

Homogeneous Markov Chain

Definiotion

Markov Chain that are the same for any ,

Link to original

Transition

Definition

where is the distribution of states and is Transition Matrix

Link to original

Transition Probability

Definition

where is HMC that has countable state space

The conditional probability of the Transition from state to

Facts

Link to original

Transition Probability Matrix

Definition

A transition probability matrix is a matrix whose -th element is . where each is a Transition Probability of HMC having countable state space

Facts

Since the number of elements of state space can be infinity, strictly speaking is not a matrix. However, several operations of matrix are satisfied for the

Link to originalThe sum of each row of a transition matrix is one

Invariant Measure

Definition

The Measure that satisfies the above

where is a Transition Matrix of the Markov Chain

Link to original

Stationary Distribution

Definition

Invariant Measure satisfying definition of distribution

distribution that satisfies where is a Distribution defined on Measurable Space and

distribution that satisfies where is a Transition Matrix of the Markov Chain

Facts

When is a stationary distribution of the finite stochastic process , it becomes a PMF of the random variable

For the finite states case, the stationary distribution can be obtained using Eigendecomposition. The stationary distribution is the unit eigenvector^[whose Norm is one] corresponding to the eigenvalue with value one.

Finite HMC has at least one Stationary Distribution

If we set the initial distribution of the stochastic process to a stationary distribution , the stochastic process has the same distributions for every (not independent)

Link to originalIf is a IRR HMC, then the has a Stationary Distribution has a unique Stationary Distribution Positive recurrent

Accessibility

Definition

, is accessible from

where is a HMC defined on the state space and the is a transition matrix of and is a -th element of

Link to original

Equivalence Relation

Definition

A binary Relation on set that satisfies the following conditions for all

Facts

Link to originalThe equivalence relation may be constructed from the Partition

Communication

Definition

: are accessible from each other

where is a state space

Facts

Link to originalCommunication is an Equivalence Relation

Communication Class

Definition

where The Equivalence Class of the state space by the Communication

Example

Divide Transition Matrix by Communication

where are Recurrent block matrix and is Transient block matrix

Facts

are pairwise coprime

are IRR

is PR, NR or TR

Link to originalTherefore the set of communication classes^[Quotient Set] form a Partition.Facts

Link to originalThe quotient set form a Partition

Irreducible Markov Chain

Definition

State space which has only one Communication Class. where is a HMC defined on the state space and the is a Transition Matrix of

where and is the probability of departing from and reaching after

Facts

If HMC has finite state space and is irreducible, then the unique Stationary Distribution of exists, and the probability of every state is positive.

Link to originalSince Communication is a Equivalence Class, the reducible Markov chain can be partitioned into the irreducible Markov chains.

Recurrence

Definition

Continuous Version

Let be a HMC defined on the state space , and be the return time for the state

Recurrent

Positive recurrent

Null recurrent

Transient

Incomplete Discrete Version

Let be a HMC defined on the state space , , and be the element of the transition matrix of

Recurrent

Transient

Notation

The first time to return to state

The probability of returning to from within a finite time.

Example

Let the be a HMC and be a transition matrix.

is an Irreducible Markov Chain. Whether recurrent or transient is determined by

- : transient

- with : positive recurrent

- with : null recurrent

Facts

If a HMC is Irreducible Markov Chain, then every state has the same nature. In other words, it has one among the following

- every state is transient

- every state is null recurrent

- every state is positive recurrent

Link to originalThe number of times that returning to oneself for a time approximates the inverse of .

Period of Markov Chain

Definition

Let be a HMC defined on the state space , and the set be the set of the time steps returning from the to oneself .

The period of is the G.C.D of every element of

Link to original

Aperiodic Markov Chain

Definition

Markov Chain whose period is one.

Facts

If there is a probability of returning to oneself after 1 period or there exist non-zero diagonal elements of the Transition Matrix, then the Markov always aperiodic

If HMC is IRR and recurrent, then every state has the same period.

Examples

Periodic Markov Chain with 3-period

flowchart LR 0 -->|1| 1 1 -->|1| 3 2 -->|1| 1 3 -->|1/3| 0 3 -->|2/3| 2Aperiodic Markov Chain with 1-period

Link to originalflowchart LR 0 -->|1| 1 1 -->|1/2| 0 1 -->|1/2| 2 2 -->|1| 3 3 -->|1| 1

Ergodic Theorem

Definition

A finite IRR HMC holds the following for an arbitrary function where is the unique Stationary Distribution, and is a Transition Matrix of

IRR PR HMC holds the following for a function that satisfies where is the unique Stationary Distribution, and is a Transition Matrix of

Facts

Approximate Stationary Distribution Using Ergodic Theorem (Finite)

If the Markov Chain has a unique Stationary Distribution, it can be approximated using the time average by the ergodic theorem.

Let function be , The stationary distribution can be approximated by using the ergodic theorem

Approximate Stationary Distribution Using Ergodic Theorem (Infinite)

where is the time average, and is the Stationary Distribution, of

Examples

Identity Matrix

Since the IRR condition is not satisfied, there is no unique Stationary Distribution. So the Ergodic theorem cannot be used.

Exchange Matrix

Link to original

Ergodic Markov Chain

Definition

The HMC that satisfies the following conditions is an ergodic Markov chain

- has finite states

- irreducible

- aperiodic

The HMC that satisfies the following conditions is an ergodic Markov chain

Link to original

Limiting Distribution

Definition

If exists and the every row of are the same, an arbitrary row vector of the is called the limiting distribution of the stochastic process .

where the is the transition matrix of the finite HMC

Facts

If a limiting distribution exists, it is unique.

If a finite HMC has a limiting distribution , then the limiting distribution satisfies the definition of Stationary Distribution

Ergodic Markov Chain has a unique limiting distribution

IRR TR HMC doesn’t have a limiting distribution because the converged Transition Matrix

Link to originalIRR NR HMC doesn’t have a limiting distribution because the converged Transition Matrix

Detailed Balance Condition

Definition

where is IRR HMC, is the Transition Matrix of the , and is a Distribution

The probability of the transition from the state to is equal to the probability from the to

Facts

Link to originalThe detailed balanced condition implies the stationarity of the distribution In other words, the distribution satisfying the detailed balanced condition is a Stationary Distribution.

Reversible Markov Chain

Definition

The Markov Chain satisfying Detailed Balance Condition

Link to original