Definition

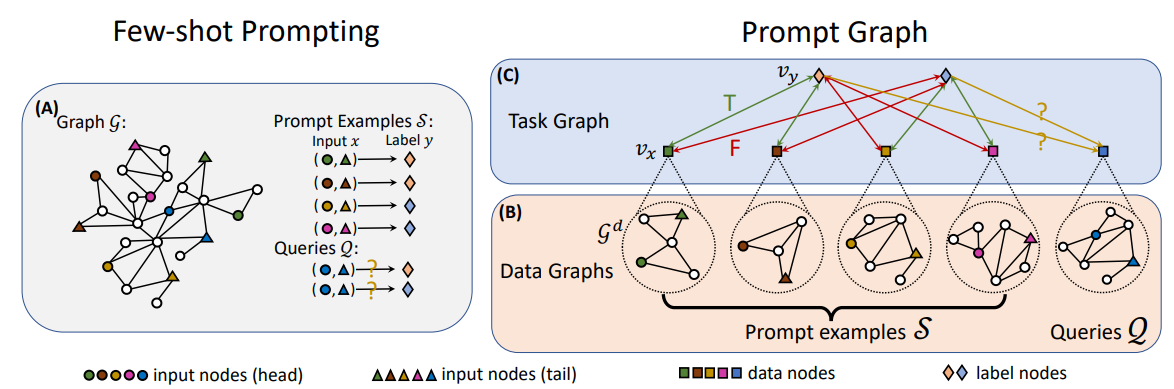

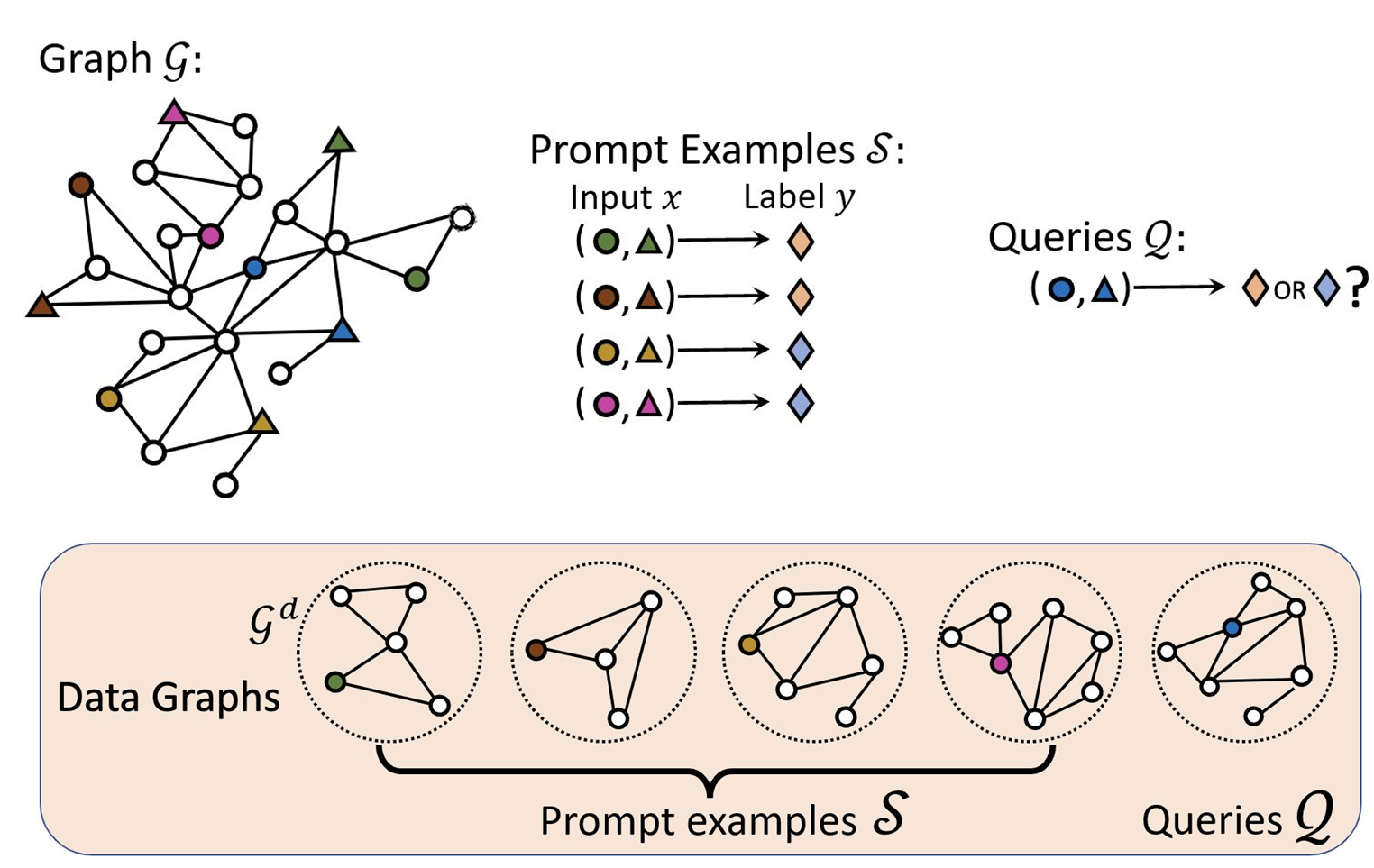

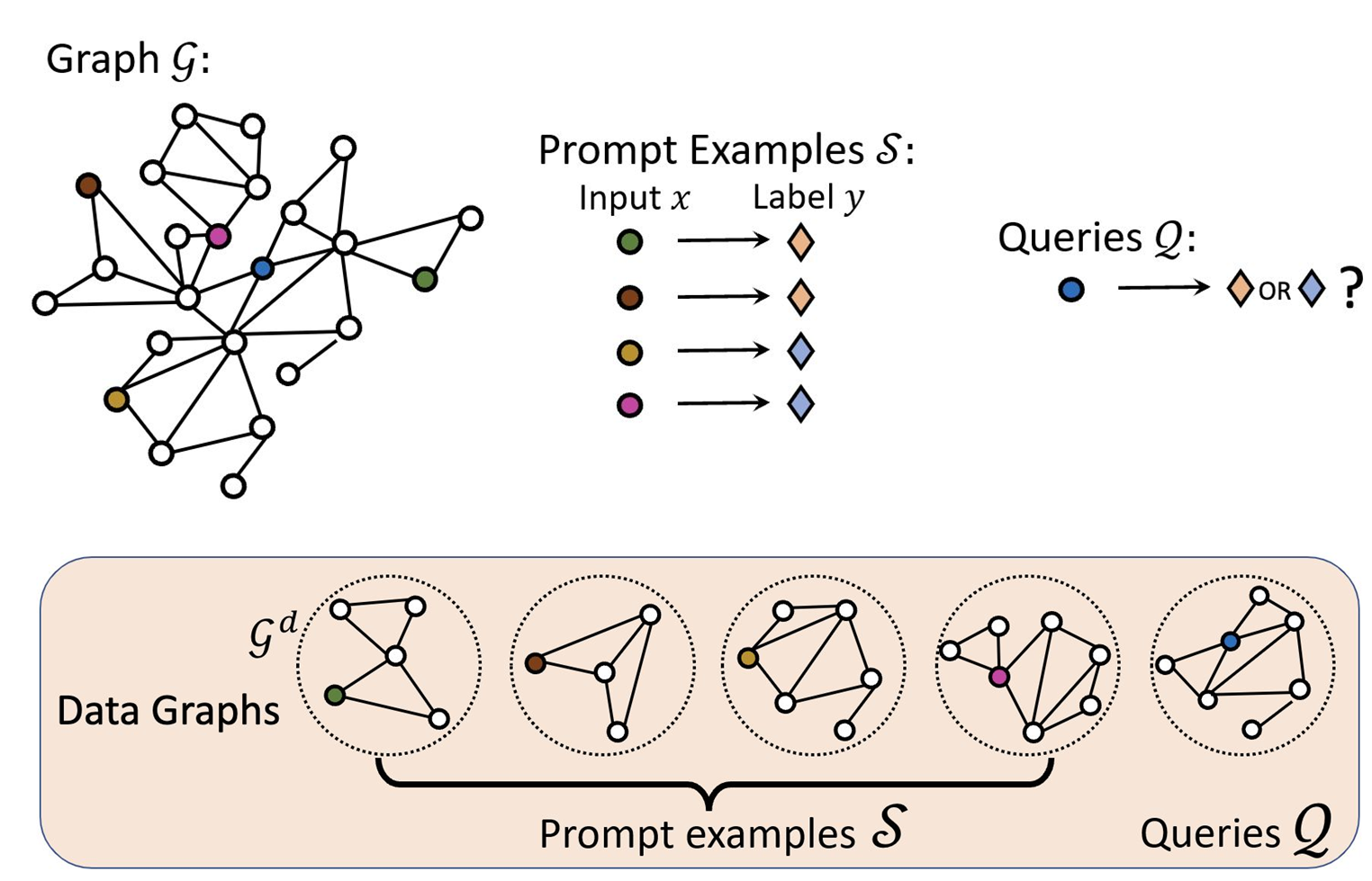

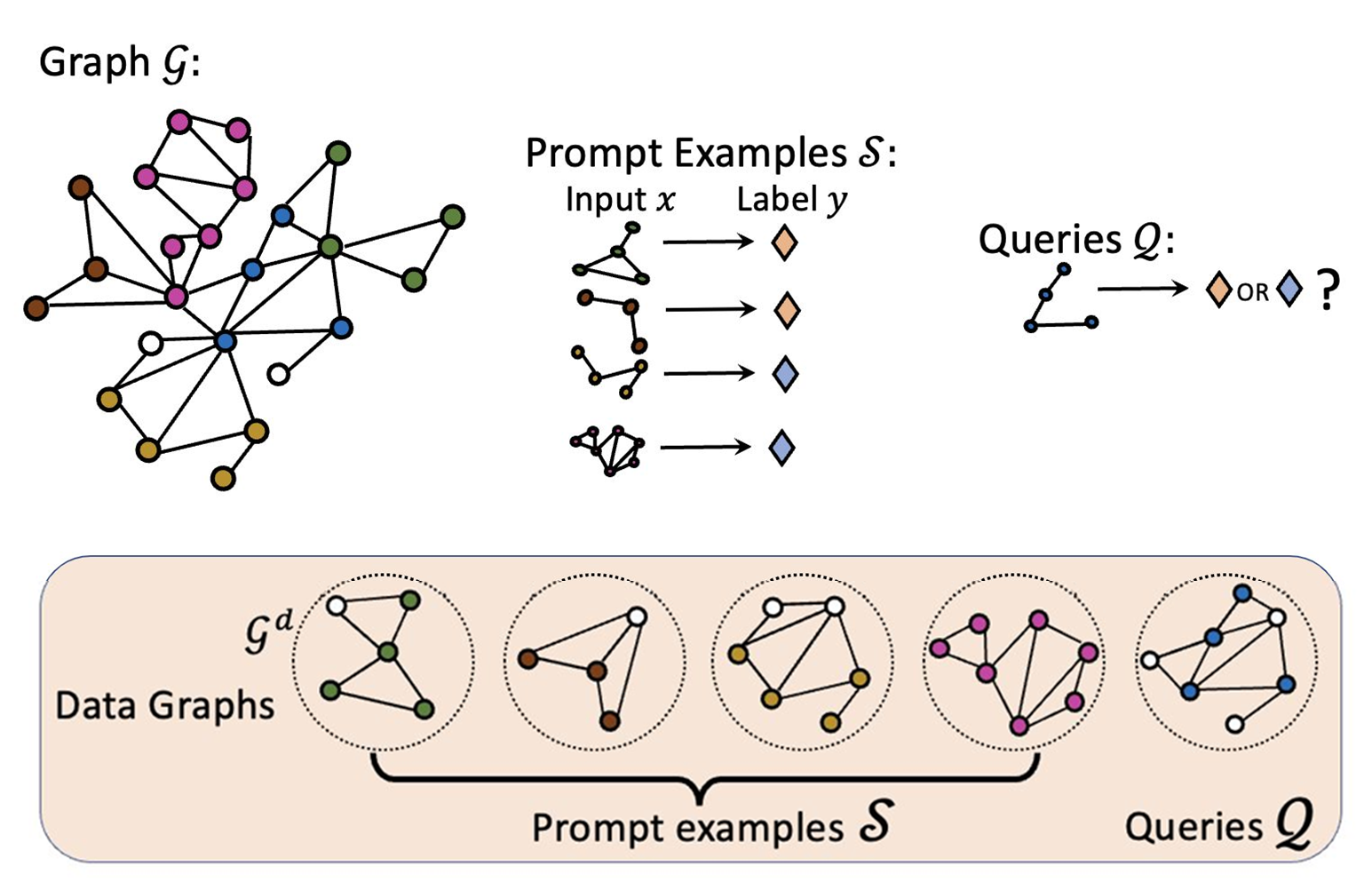

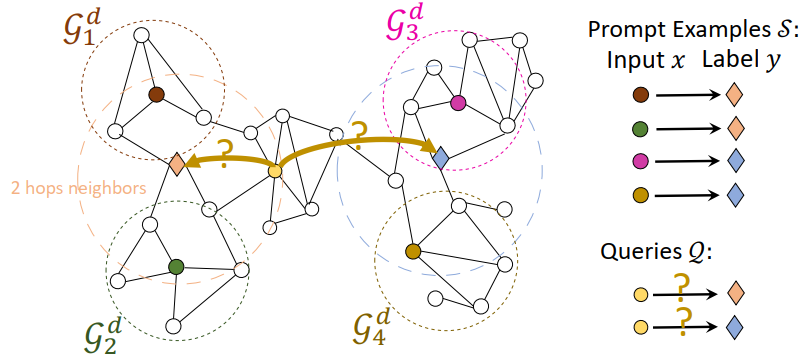

Pretraining Over Diverse In-Context Graph Systems (PRODIGY) is a pretraining framework that enables in-context learning over graphs. Its key idea is to formulate in-context learning over graphs with a novel prompt graph representation, analogous to the few-shot prompting widely used with LLMs.

Architecture

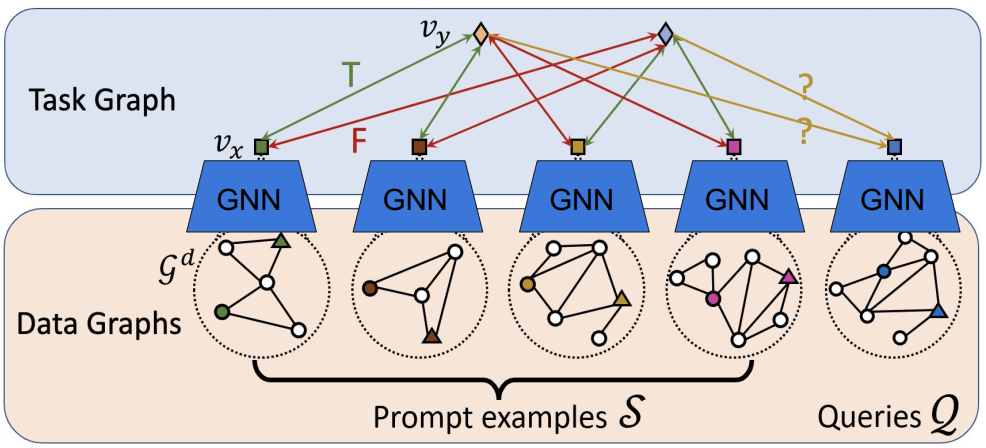

Prompt Graph

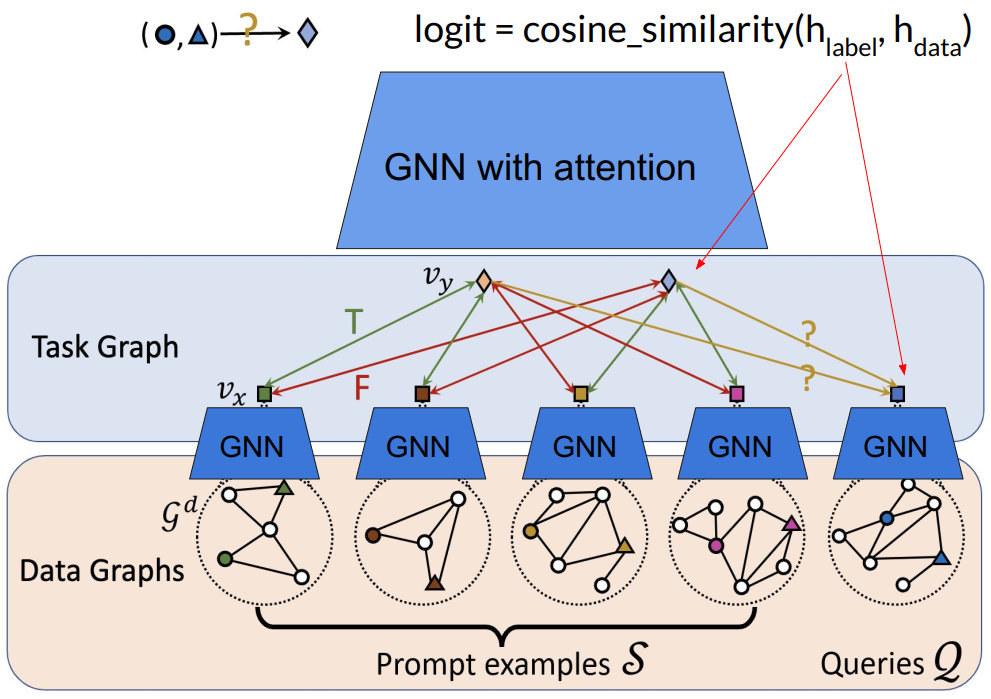

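- The data graphs of the input nodes/edges/subgraphs are contextualized by an embedding model such as GCN or GAT.

For node classification, the embedding of the data graph's root node is used as the data node embedding.

For link classification, the data node embedding is computed from the embeddings of the edge's two endpoint nodes:

$$G_i = W^\top \left( E_{v_1} \,\|\, E_{v_2} \,\|\, \max(E_i) \right) + b$$

where $E_{v_1}$ and $E_{v_2}$ are the embeddings of the edge's endpoints, $\max(E_i)$ is an element-wise max pooling over all node embeddings of the data graph, $\|$ denotes concatenation, and $W$ and $b$ are learnable parameters.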
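The two readouts can be illustrated with a minimal sketch in plain Python. It assumes the node embeddings have already been produced by the embedding model, and that the link readout concatenates the two endpoint embeddings with an element-wise max over the data graph before a linear layer. The function names `node_readout` and `link_readout` and the list-based weight layout are hypothetical, chosen only for illustration.

```python
def node_readout(node_embeddings, root):
    """Node classification: the root node's embedding is the data node embedding."""
    return list(node_embeddings[root])


def link_readout(node_embeddings, u, v, W, b):
    """Link classification: linear layer over [E_u || E_v || max-pool of all nodes].

    Assumption: W is a (3d x d_out) nested list and b has length d_out.
    """
    d = len(node_embeddings[u])
    # Element-wise max over every node embedding in the data graph.
    pooled = [max(e[j] for e in node_embeddings.values()) for j in range(d)]
    x = list(node_embeddings[u]) + list(node_embeddings[v]) + pooled
    # Compute W^T x + b.
    return [sum(W[i][k] * x[i] for i in range(len(x))) + b[k] for k in range(len(b))]


# Toy data graph with three nodes and 2-dimensional embeddings.
embeddings = {0: [1.0, 2.0], 1: [3.0, 0.0], 2: [0.0, 5.0]}
# Toy weights: output k sums the input coordinates whose index is congruent to k mod 2.
W = [[1.0 if i % 2 == k else 0.0 for k in range(2)] for i in range(6)]
b = [0.5, -0.5]

root_embedding = node_readout(embeddings, 0)
link_embedding = link_readout(embeddings, 0, 1, W, b)
```

In a real model `W` and `b` would be trained jointly with the embedding model; here they are fixed toy values so the arithmetic is easy to follow.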

- Link Prediction

- Node Classification

- Graph Classification

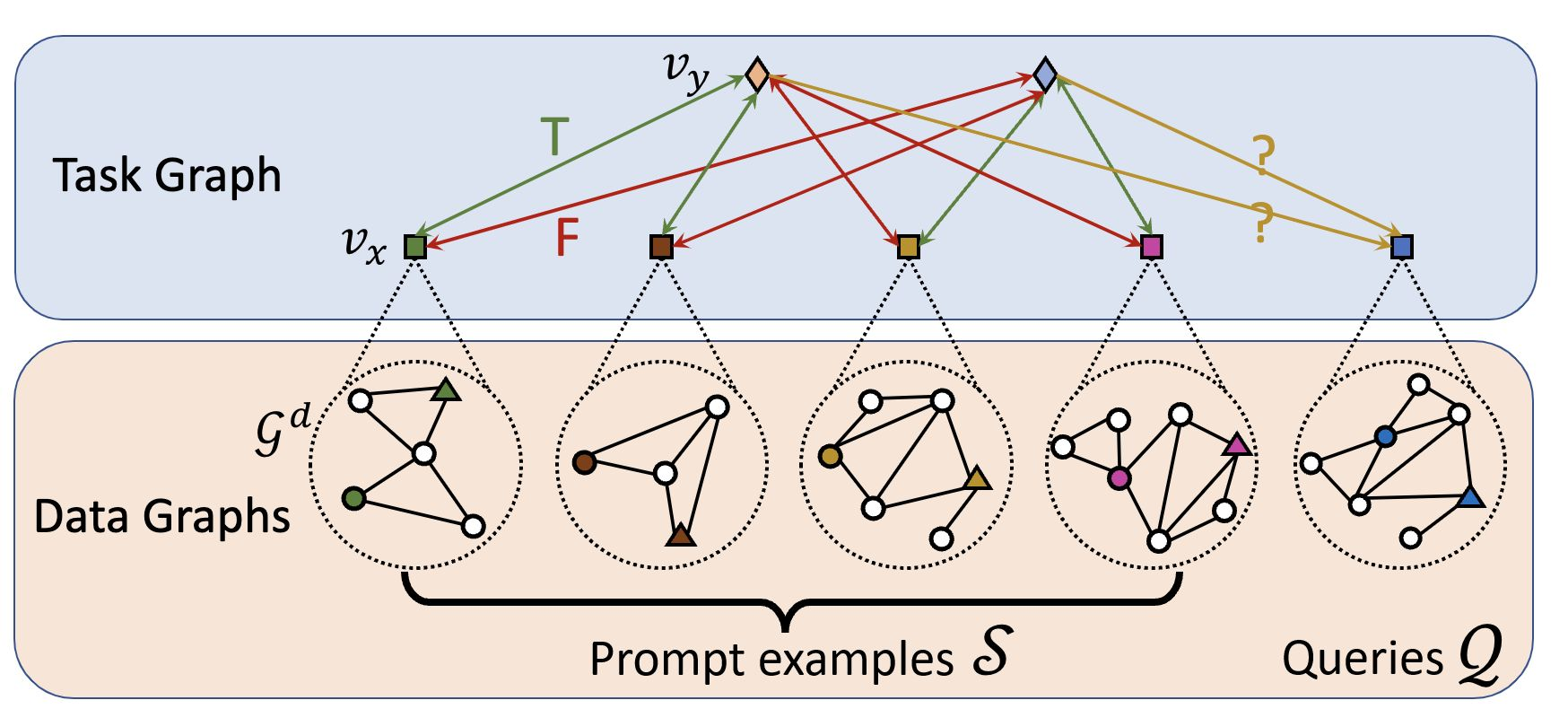

- The task graph is constructed from the contextualized data graphs (data nodes) and the labels (label nodes). Every data node is connected to every label node.

- The task graph is fed into another GAT to obtain updated representations of the data nodes and label nodes.

- Classification is performed by cosine similarity between the embedding of each query data node and the embeddings of the label nodes; the most similar label is predicted.
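The task-graph steps above can be sketched as follows. This is a simplified illustration, not the PRODIGY implementation: it skips the GAT update entirely and assumes the data node and label node embeddings are already available. The helper names `build_task_graph_edges`, `cosine`, and `classify` are hypothetical.

```python
import math


def build_task_graph_edges(num_data_nodes, num_labels):
    """Connect every data node to every label node (fully connected bipartite edges)."""
    return [(i, j) for i in range(num_data_nodes) for j in range(num_labels)]


def cosine(a, b):
    """Cosine similarity of two vectors; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def classify(query_embedding, label_embeddings):
    """Return the index of the most similar label node and all similarity scores."""
    scores = [cosine(query_embedding, label) for label in label_embeddings]
    best = max(range(len(scores)), key=scores.__getitem__)
    return best, scores


edges = build_task_graph_edges(num_data_nodes=3, num_labels=2)
predicted, scores = classify([1.0, 0.0], [[0.0, 1.0], [2.0, 0.0]])
```

Because cosine similarity ignores vector length, the query `[1.0, 0.0]` matches the second label `[2.0, 0.0]` exactly even though their magnitudes differ.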

Pretraining

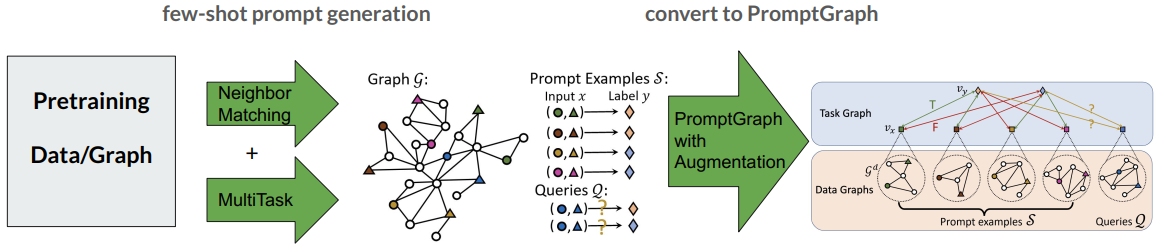

The PRODIGY model is pretrained with two objectives: a neighbor matching task and a multi-task objective over edge-, node-, and subgraph-level tasks.

Neighbor Matching

We sample multiple subgraphs from the pretraining graph as local neighborhoods, and we say a node belongs to a local neighborhood if it is in the sampled subgraph. The sampled subgraphs are used as the prompt/query data graphs.
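A toy version of a neighbor matching episode might look like the sketch below. It makes simplifying assumptions that are not stated in the text: each local neighborhood is a randomly chosen center node plus its direct neighbors, and a node's label is the index of the neighborhood it was drawn from. The function `neighbor_matching_task` and its parameters `num_ways` and `shots` are hypothetical names.

```python
import random


def neighbor_matching_task(adj, num_ways, shots, rng):
    """Build one toy neighbor-matching episode from an adjacency dict."""
    centers = rng.sample(sorted(adj), num_ways)
    # Assumption: a local neighborhood is a center node plus its direct neighbors.
    neighborhoods = [{c, *adj[c]} for c in centers]
    prompts, queries = [], []
    for label, hood in enumerate(neighborhoods):
        # Draw `shots` prompt examples and up to one query example per neighborhood.
        members = rng.sample(sorted(hood), min(shots + 1, len(hood)))
        prompts += [(node, label) for node in members[:shots]]
        queries += [(node, label) for node in members[shots:]]
    return neighborhoods, prompts, queries


# Ring graph with 6 nodes: every node has exactly two neighbors.
ring = {i: [(i - 1) % 6, (i + 1) % 6] for i in range(6)}
neighborhoods, prompts, queries = neighbor_matching_task(
    ring, num_ways=2, shots=2, rng=random.Random(0)
)
```

Each `(node, label)` pair says which sampled neighborhood the node belongs to, which is the classification target of the task. Overlapping neighborhoods are not handled in this sketch.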

Multitask

In the pretraining stage, each data graph of the prompts and queries is constructed by sampling the $k$-hop neighborhood of a randomly sampled node.
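Extracting a hop-limited neighborhood around a sampled node can be sketched with a breadth-first search. This minimal version keeps every node within the hop limit; any cap on neighborhood size or random subsampling used in practice is omitted, and the function name `k_hop_neighborhood` is hypothetical.

```python
from collections import deque


def k_hop_neighborhood(adj, root, k):
    """Return the nodes within k hops of `root` and the directed edges among them."""
    hops = {root: 0}
    queue = deque([root])
    while queue:
        node = queue.popleft()
        if hops[node] == k:
            continue  # do not expand past the hop limit
        for neighbor in adj.get(node, ()):
            if neighbor not in hops:
                hops[neighbor] = hops[node] + 1
                queue.append(neighbor)
    nodes = set(hops)
    edges = {(u, v) for u in nodes for v in adj.get(u, ()) if v in nodes}
    return nodes, edges


# Path graph 0 - 1 - 2 - 3 - 4, stored as an adjacency dict.
path = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2, 4], 4: [3]}
nodes, edges = k_hop_neighborhood(path, root=2, k=1)
```

The returned node and edge sets form the data graph whose root is the sampled node.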