Entropy

Definition

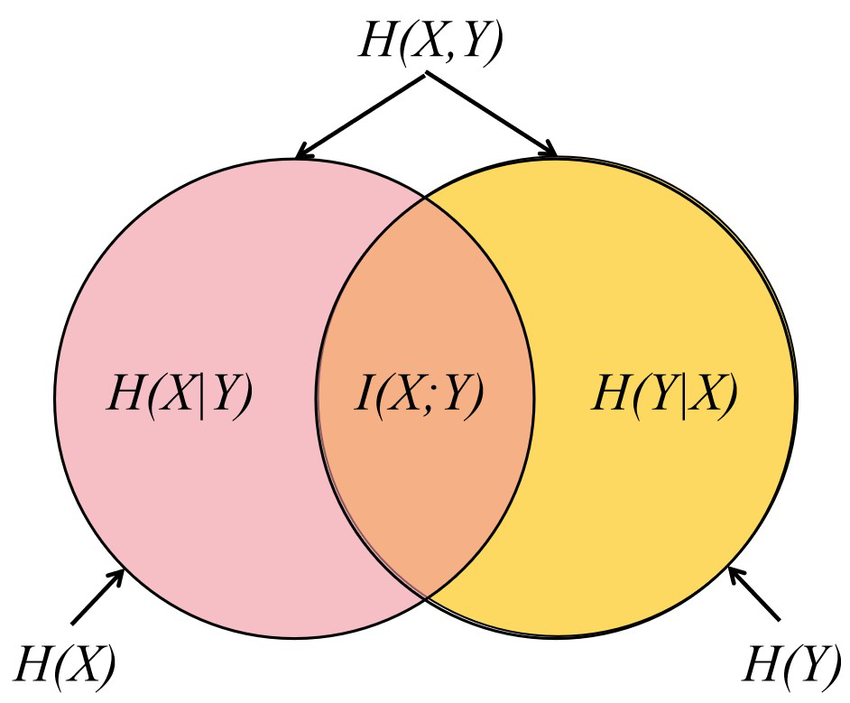

The entropy of a random variable quantifies the average uncertainty, or equivalently the average information, associated with the variable’s possible outcomes.
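
As a minimal sketch, assuming a discrete random variable given as a list of outcome probabilities, the usual Shannon formula H(X) = -Σ p(x) log p(x) can be computed as follows. The function name `entropy` and the `base` parameter are illustrative choices, not taken from the original note.

```python
import math


def entropy(probs, base=2.0):
    """Shannon entropy H(X) = -sum p(x) log p(x) of a discrete distribution.

    `probs` is assumed to list the probability of each outcome.
    """
    if abs(sum(probs) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    # Outcomes with p = 0 are skipped, following the convention 0 * log 0 = 0.
    return -sum(p * math.log(p, base) for p in probs if p > 0)


fair_coin = entropy([0.5, 0.5])    # 1 bit: maximal uncertainty for two outcomes
biased_coin = entropy([0.9, 0.1])  # about 0.47 bits: the outcome is easier to guess
certain = entropy([1.0])           # 0 bits: no uncertainty at all
```

With `base=2.0` the result is in bits; passing `base=math.e` would give nats instead.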


Joint Entropy

Definition

Joint entropy measures the uncertainty associated with a set of random variables considered together.
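
For two discrete variables this is commonly written H(X, Y) = -Σ p(x, y) log p(x, y), summed over all pairs of outcomes. The sketch below assumes the joint distribution is stored as a dictionary mapping `(x, y)` pairs to probabilities; that representation and the name `joint_entropy` are assumptions made for illustration.

```python
import math


def joint_entropy(joint, base=2.0):
    """Joint entropy H(X, Y) = -sum p(x, y) log p(x, y).

    `joint` is assumed to map (x, y) outcome pairs to probabilities.
    """
    # Pairs with zero probability contribute nothing to the sum.
    return -sum(p * math.log(p, base) for p in joint.values() if p > 0)


# Two independent fair coins: four equally likely pairs.
independent_coins = {(x, y): 0.25 for x in (0, 1) for y in (0, 1)}
# Two coins that always agree: only two pairs can occur.
identical_coins = {(0, 0): 0.5, (1, 1): 0.5}

h_independent = joint_entropy(independent_coins)  # 2 bits
h_identical = joint_entropy(identical_coins)      # 1 bit
```

The two coins that always agree carry only one bit between them, because knowing one coin fixes the other.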


Conditional Entropy

Definition

Conditional entropy quantifies the amount of information needed to describe the outcome of a random variable given that the value of another random variable is known.
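
Writing X for the known variable and Y for the one being described, a standard form is H(Y | X) = -Σ p(x, y) log( p(x, y) / p(x) ). The following sketch assumes a joint distribution stored as a dictionary of `(x, y)` pairs; the function name and data layout are hypothetical.

```python
import math
from collections import defaultdict


def conditional_entropy(joint, base=2.0):
    """Conditional entropy H(Y | X) = -sum p(x, y) log(p(x, y) / p(x)).

    `joint` is assumed to map (x, y) outcome pairs to probabilities.
    """
    # Marginal p(x), obtained by summing the joint distribution over y.
    p_x = defaultdict(float)
    for (x, _y), p in joint.items():
        p_x[x] += p
    return -sum(
        p * math.log(p / p_x[x], base)
        for (x, _y), p in joint.items()
        if p > 0
    )


independent_coins = {(x, y): 0.25 for x in (0, 1) for y in (0, 1)}
identical_coins = {(0, 0): 0.5, (1, 1): 0.5}

h_given_independent = conditional_entropy(independent_coins)  # 1 bit: X says nothing about Y
h_given_identical = conditional_entropy(identical_coins)      # 0 bits: X determines Y
```

The same quantity can also be obtained from the chain rule H(Y | X) = H(X, Y) - H(X).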


Mutual Information

Definition

The mutual information of two random variables quantifies the amount of information obtained about one variable by observing the other.
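
For discrete variables X and Y a standard form is I(X; Y) = Σ p(x, y) log( p(x, y) / (p(x) p(y)) ), which is zero exactly when the variables are independent. The sketch below again assumes a dictionary of `(x, y)` pairs; the name `mutual_information` and the example distributions are illustrative only.

```python
import math
from collections import defaultdict


def mutual_information(joint, base=2.0):
    """Mutual information I(X; Y) = sum p(x, y) log(p(x, y) / (p(x) p(y))).

    `joint` is assumed to map (x, y) outcome pairs to probabilities.
    """
    # Marginals p(x) and p(y), obtained by summing out the other variable.
    p_x = defaultdict(float)
    p_y = defaultdict(float)
    for (x, y), p in joint.items():
        p_x[x] += p
        p_y[y] += p
    return sum(
        p * math.log(p / (p_x[x] * p_y[y]), base)
        for (x, y), p in joint.items()
        if p > 0
    )


independent_coins = {(x, y): 0.25 for x in (0, 1) for y in (0, 1)}
identical_coins = {(0, 0): 0.5, (1, 1): 0.5}
# Coins that agree 80% of the time: partially informative about each other.
noisy_coins = {(0, 0): 0.4, (0, 1): 0.1, (1, 0): 0.1, (1, 1): 0.4}

mi_independent = mutual_information(independent_coins)  # 0 bits
mi_identical = mutual_information(identical_coins)      # 1 bit
mi_noisy = mutual_information(noisy_coins)              # strictly between 0 and 1 bit
```

The result agrees with the identity I(X; Y) = H(X) + H(Y) - H(X, Y), which ties this quantity back to the entropy and joint entropy.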
